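Today (September 17, 2024), we introduce NVLM 1.0, a family of frontier-class multimodal large language models (LLMs) that achieve state-of-the-art results on vision-language tasks, rivaling the leading proprietary models (e.g., GPT-4o) and open-access models (e.g., Llama 3-V 405B and InternVL 2). Notably, NVLM 1.0 shows improved text-only performance over its LLM backbone after multimodal training.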

In this repo, we are open-sourcing NVLM-1.0-D-72B (decoder-only architecture), including the decoder-only model weights and code, for the community.

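We train our model with legacy Megatron-LM and adapt the codebase to Huggingface for model hosting, reproducibility, and inference. We observe numerical differences between the Megatron and Huggingface codebases, which are within the expected range of variation. We provide results from both the Huggingface codebase and the Megatron codebase for reproducibility and comparison with other models.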

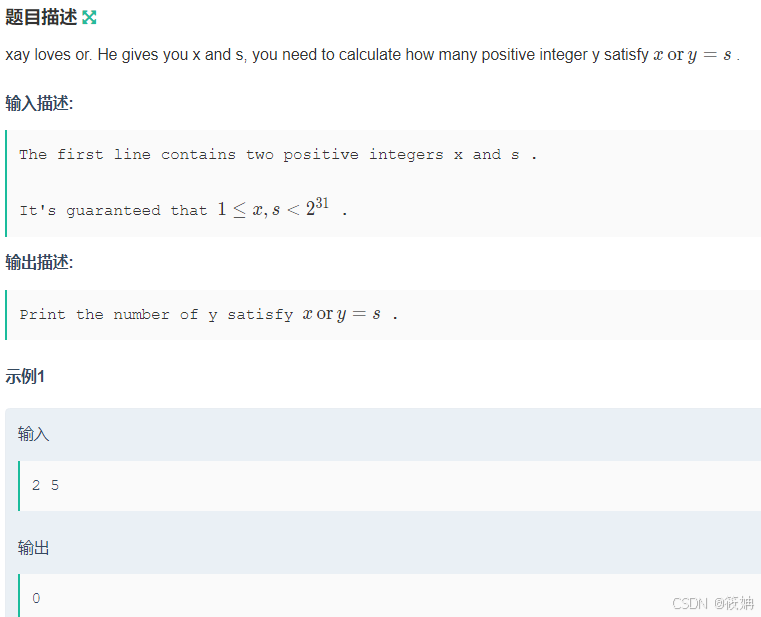

Vision-language Benchmarks

| Benchmark | MMMU (val / test) | MathVista | OCRBench | AI2D | ChartQA | DocVQA | TextVQA | RealWorldQA | VQAv2 |
|---|---|---|---|---|---|---|---|---|---|
| NVLM-D 1.0 72B (Huggingface) | 58.7 / 54.9 | 65.2 | 852 | 94.2 | 86.0 | 92.6 | 82.6 | 69.5 | 85.4 |
| NVLM-D 1.0 72B (Megatron) | 59.7 / 54.6 | 65.2 | 853 | 94.2 | 86.0 | 92.6 | 82.1 | 69.7 | 85.4 |
| Llama 3.2 90B | 60.3 / - | 57.3 | - | 92.3 | 85.5 | 90.1 | - | - | 78.1 |
| Llama 3-V 70B | 60.6 / - | - | - | 93.0 | 83.2 | 92.2 | 83.4 | - | 79.1 |
| Llama 3-V 405B | 64.5 / - | - | - | 94.1 | 85.8 | 92.6 | 84.8 | - | 80.2 |
| InternVL2-Llama3-76B | 55.2 / - | 65.5 | 839 | 94.8 | 88.4 | 94.1 | 84.4 | 72.2 | - |
| GPT-4V | 56.8 / 55.7 | 49.9 | 645 | 78.2 | 78.5 | 88.4 | 78.0 | 61.4 | 77.2 |
| GPT-4o | 69.1 / - | 63.8 | 736 | 94.2 | 85.7 | 92.8 | - | - | - |
| Claude 3.5 Sonnet | 68.3 / - | 67.7 | 788 | 94.7 | 90.8 | 95.2 | - | - | - |
| Gemini 1.5 Pro (Aug 2024) | 62.2 / - | 63.9 | 754 | 94.4 | 87.2 | 93.1 | 78.7 | 70.4 | 80.2 |
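
To make the Huggingface/Megatron gap concrete, the sketch below recomputes the per-benchmark difference between the two NVLM-D 1.0 72B rows of the table above. The scores are copied from the table; the list layout and the function name `score_gaps` are illustrative choices, not part of the released code.

```python
# Scores copied from the two NVLM-D 1.0 72B rows of the table above.
BENCHMARKS = ["MMMU (val)", "MMMU (test)", "MathVista", "OCRBench", "AI2D",
              "ChartQA", "DocVQA", "TextVQA", "RealWorldQA", "VQAv2"]
HUGGINGFACE = [58.7, 54.9, 65.2, 852, 94.2, 86.0, 92.6, 82.6, 69.5, 85.4]
MEGATRON = [59.7, 54.6, 65.2, 853, 94.2, 86.0, 92.6, 82.1, 69.7, 85.4]


def score_gaps(first, second):
    """Return {benchmark: first - second}, rounded to one decimal place."""
    return {name: round(a - b, 1) for name, a, b in zip(BENCHMARKS, first, second)}


if __name__ == "__main__":
    for name, gap in score_gaps(HUGGINGFACE, MEGATRON).items():
        print(f"{name:12s} {gap:+.1f}")
```

OCRBench is scored out of 1000, so its one-point gap is on a different scale from the percentage-based benchmarks, where the largest gap is one point on MMMU (val).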

Text-only Benchmarks

| Tasks | Backbone LLM | MMLU | GSM8K | MATH | HumanEval | Avg. Accuracy |
|---|---|---|---|---|---|---|
| Proprietary | | | | | | |
| GPT-4.0 | N/A | 88.7 | - | 76.6 | 90.2 | - |
| Gemini Pro 1.5 (Aug 2024) | N/A | 85.9 | 90.8 | 67.7 | 84.1 | 82.1 |
| Claude 3.5 Sonnet | N/A | 88.7 | 96.4 | 71.1 | 92.0 | 87.0 |
| Open LLM | | | | | | |
| (a) Nous-Hermes-2-Yi-34B | N/A | 75.5 | 78.6 | 21.8 | 43.3 | 54.8 |
| (b) Qwen-72B-Instruct | N/A | 82.3 | 91.1 | 59.7 | 86.0 | 79.8 |
| (c) Llama-3-70B-Instruct | N/A | 82.0 | 93.0 | 51.0 | 81.7 | 76.6 |
| (d) Llama-3.1-70B-Instruct | N/A | 83.6 | 95.1 | 68.0 | 80.5 | 81.8 |
| (e) Llama-3.1-405B-Instruct | N/A | 87.3 | 96.8 | 73.8 | 89.0 | 86.7 |
| Open Multimodal LLM | | | | | | |
| VILA-1.5 40B | (a) | 73.3 | 67.5 | 16.8 | 34.1 | 🥶 47.9 (-6.9) |
| LLaVA-OneVision 72B | (b) | 80.6 | 89.9 | 49.2 | 74.4 | 🥶 73.5 (-6.3) |
| InternVL-2-Llama3-76B | (c) | 78.5 | 87.1 | 42.5 | 71.3 | 🥶 69.9 (-6.7) |
| *Llama 3-V 70B | (d) | 83.6 | 95.1 | 68.0 | 80.5 | 🙂 81.8 (0) |
| *Llama 3-V 405B | (e) | 87.3 | 96.8 | 73.8 | 89.0 | 🙂 86.7 (0) |
| NVLM-D 1.0 72B (Megatron) | (b) | 82.0 | 92.9 | 73.1 | 88.4 | 🥳 84.1 (+4.3) |
| NVLM-D 1.0 72B (Huggingface) | (b) | 81.7 | 93.2 | 73.1 | 89.0 | 🥳 84.3 (+4.5) |
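
The Avg. Accuracy column and its parenthesized delta can be reproduced from the other columns. The sketch below assumes, based on the numbers themselves rather than any statement in the source, that the average is the unweighted mean of MMLU, GSM8K, MATH, and HumanEval, and that the delta is taken against the average of the backbone LLM named in the second column. The helper names are illustrative.

```python
# Text-only scores copied from the table above, in the order
# MMLU, GSM8K, MATH, HumanEval.
BACKBONE_B = [82.3, 91.1, 59.7, 86.0]           # row (b), reported average 79.8
NVLM_D_MEGATRON = [82.0, 92.9, 73.1, 88.4]      # reported 84.1 (+4.3)
NVLM_D_HUGGINGFACE = [81.7, 93.2, 73.1, 89.0]   # reported 84.3 (+4.5)


def mean(scores):
    """Unweighted mean of a list of benchmark scores (assumed averaging rule)."""
    return sum(scores) / len(scores)


def delta_vs_backbone(model_scores, backbone_scores):
    """Difference between the model's mean score and its backbone's mean score."""
    return mean(model_scores) - mean(backbone_scores)


if __name__ == "__main__":
    for label, scores in [("Megatron", NVLM_D_MEGATRON),
                          ("Huggingface", NVLM_D_HUGGINGFACE)]:
        avg = mean(scores)
        delta = delta_vs_backbone(scores, BACKBONE_B)
        print(f"{label}: avg {avg:.2f}, delta {delta:+.2f}")
```

The unrounded values land within about 0.05 of the reported figures, which is consistent with the table rounding to one decimal place.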

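When converting the Megatron checkpoint to Huggingface, we adapt the InternVL codebase to support model loading and multi-GPU inference in HF. When adapting the tokenizer to Huggingface, we also use the tokenizer from Qwen2.5-72B-Instruct, as it contains extra special tokens for vision tasks, e.g., <|vision_pad|>. We train NVLM-1.0-D-72B based on the Qwen2-72B-Instruct text-only model and the InternViT-6B-448px-V1-5 ViT model with our large-scale, high-quality multimodal dataset. For training code, please refer to Megatron-LM (coming soon).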

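Prepare the environment

We provide a docker build file in the Dockerfile for reproduction.

The docker image is based on nvcr.io/nvidia/pytorch:23.09-py3.

Note: We observe that different transformers versions, CUDA versions, and docker versions can lead to slight differences in benchmark numbers. We recommend using the Dockerfile above for precise reproduction.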
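
Because results vary with library and CUDA versions, it can help to record the environment alongside any benchmark numbers. The following is a minimal sketch using only the Python standard library; the function name `installed_versions` and the choice of packages to report are assumptions, not part of the released code.

```python
import platform
from importlib import metadata


def installed_versions(packages=("torch", "transformers", "torchvision", "pillow")):
    """Return {package: version or None} without importing the packages."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # not installed in this environment
    return versions


if __name__ == "__main__":
    print(f"python: {platform.python_version()}")
    for name, version in installed_versions().items():
        print(f"{name}: {version or 'not installed'}")
```

If PyTorch is installed, `torch.version.cuda` additionally reports the CUDA version it was built against.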

Model loading

    import torch
    from transformers import AutoModel

    path = "nvidia/NVLM-D-72B"
    model = AutoModel.from_pretrained(
        path,
        torch_dtype=torch.bfloat16,
        low_cpu_mem_usage=True,
        use_flash_attn=False,
        trust_remote_code=True,
    ).eval()

Multiple GPUs
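
To see how the 80 language-model layers end up distributed, the sketch below reproduces only the arithmetic of the `split_model` function used in this section, taking the GPU count as an argument instead of calling `torch.cuda.device_count()`. The function name `plan_layer_split` and the cap at `num_layers` are additions for illustration; the released helper does not apply that cap, so for some GPU counts it also emits a few layer indices past the last real layer.

```python
import math
from collections import Counter


def plan_layer_split(world_size, num_layers=80):
    """Map each layer index to a GPU, counting GPU 0 as half a GPU
    because it also hosts the ViT."""
    per_gpu = math.ceil(num_layers / (world_size - 0.5))
    budget = [per_gpu] * world_size
    budget[0] = math.ceil(per_gpu * 0.5)

    layer_to_gpu = {}
    layer = 0
    for gpu, count in enumerate(budget):
        for _ in range(count):
            if layer >= num_layers:  # illustrative cap, not in the original helper
                break
            layer_to_gpu[layer] = gpu
            layer += 1
    # The original helper pins the final layer to GPU 0,
    # alongside the final norm and the LM head.
    layer_to_gpu[num_layers - 1] = 0
    return layer_to_gpu


if __name__ == "__main__":
    for gpus in (2, 4, 8):
        counts = Counter(plan_layer_split(gpus).values())
        print(gpus, [counts[g] for g in range(gpus)])
```

With four GPUs, for example, this assigns 13 layers to GPU 0 (12 from its half-sized budget plus the pinned final layer) and 23, 23, and 21 layers to the remaining three.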

    import math

    import torch
    from transformers import AutoModel


    def split_model():
        device_map = {}
        world_size = torch.cuda.device_count()
        num_layers = 80
        # Since the first GPU will be used for ViT, treat it as half a GPU.
        num_layers_per_gpu = math.ceil(num_layers / (world_size - 0.5))
        num_layers_per_gpu = [num_layers_per_gpu] * world_size
        num_layers_per_gpu[0] = math.ceil(num_layers_per_gpu[0] * 0.5)
        layer_cnt = 0
        for i, num_layer in enumerate(num_layers_per_gpu):
            for j in range(num_layer):
                device_map[f'language_model.model.layers.{layer_cnt}'] = i
                layer_cnt += 1
        device_map['vision_model'] = 0
        device_map['mlp1'] = 0
        device_map['language_model.model.tok_embeddings'] = 0
        device_map['language_model.model.embed_tokens'] = 0
        device_map['language_model.output'] = 0
        device_map['language_model.model.norm'] = 0
        device_map['language_model.lm_head'] = 0
        device_map[f'language_model.model.layers.{num_layers - 1}'] = 0
        return device_map


    path = "nvidia/NVLM-D-72B"
    device_map = split_model()
    model = AutoModel.from_pretrained(
        path,
        torch_dtype=torch.bfloat16,
        low_cpu_mem_usage=True,
        use_flash_attn=False,
        trust_remote_code=True,
        device_map=device_map,
    ).eval()

Inference

    import math

    import torch
    import torchvision.transforms as T
    from PIL import Image
    from torchvision.transforms.functional import InterpolationMode
    from transformers import AutoModel, AutoTokenizer


    def split_model():
        device_map = {}
        world_size = torch.cuda.device_count()
        num_layers = 80
        # Since the first GPU will be used for ViT, treat it as half a GPU.
        num_layers_per_gpu = math.ceil(num_layers / (world_size - 0.5))
        num_layers_per_gpu = [num_layers_per_gpu] * world_size
        num_layers_per_gpu[0] = math.ceil(num_layers_per_gpu[0] * 0.5)
        layer_cnt = 0
        for i, num_layer in enumerate(num_layers_per_gpu):
            for j in range(num_layer):
                device_map[f'language_model.model.layers.{layer_cnt}'] = i
                layer_cnt += 1
        device_map['vision_model'] = 0
        device_map['mlp1'] = 0
        device_map['language_model.model.tok_embeddings'] = 0
        device_map['language_model.model.embed_tokens'] = 0
        device_map['language_model.output'] = 0
        device_map['language_model.model.norm'] = 0
        device_map['language_model.lm_head'] = 0
        device_map[f'language_model.model.layers.{num_layers - 1}'] = 0
        return device_map


    IMAGENET_MEAN = (0.485, 0.456, 0.406)
    IMAGENET_STD = (0.229, 0.224, 0.225)


    def build_transform(input_size):
        MEAN, STD = IMAGENET_MEAN, IMAGENET_STD
        transform = T.Compose([
            T.Lambda(lambda img: img.convert('RGB') if img.mode != 'RGB' else img),
            T.Resize((input_size, input_size), interpolation=InterpolationMode.BICUBIC),
            T.ToTensor(),
            T.Normalize(mean=MEAN, std=STD)
        ])
        return transform


    def find_closest_aspect_ratio(aspect_ratio, target_ratios, width, height, image_size):
        best_ratio_diff = float('inf')
        best_ratio = (1, 1)
        area = width * height
        for ratio in target_ratios:
            target_aspect_ratio = ratio[0] / ratio[1]
            ratio_diff = abs(aspect_ratio - target_aspect_ratio)
            if ratio_diff < best_ratio_diff:
                best_ratio_diff = ratio_diff
                best_ratio = ratio
            elif ratio_diff == best_ratio_diff:
                if area > 0.5 * image_size * image_size * ratio[0] * ratio[1]:
                    best_ratio = ratio
        return best_ratio


    def dynamic_preprocess(image, min_num=1, max_num=12, image_size=448, use_thumbnail=False):
        # calculate the existing image aspect ratio
        orig_width, orig_height = image.size
        aspect_ratio = orig_width / orig_height

        # enumerate the candidate tile grids (columns, rows)
        target_ratios = set(
            (i, j) for n in range(min_num, max_num + 1)
            for i in range(1, n + 1) for j in range(1, n + 1)
            if i * j <= max_num and i * j >= min_num)
        target_ratios = sorted(target_ratios, key=lambda x: x[0] * x[1])

        # find the closest aspect ratio to the target
        target_aspect_ratio = find_closest_aspect_ratio(
            aspect_ratio, target_ratios, orig_width, orig_height, image_size)

        # calculate the target width and height
        target_width = image_size * target_aspect_ratio[0]
        target_height = image_size * target_aspect_ratio[1]
        blocks = target_aspect_ratio[0] * target_aspect_ratio[1]

        # resize the image
        resized_img = image.resize((target_width, target_height))
        processed_images = []
        for i in range(blocks):
            box = (
                (i % (target_width // image_size)) * image_size,
                (i // (target_width // image_size)) * image_size,
                ((i % (target_width // image_size)) + 1) * image_size,
                ((i // (target_width // image_size)) + 1) * image_size
            )
            # split the image
            split_img = resized_img.crop(box)
            processed_images.append(split_img)
        assert len(processed_images) == blocks
        if use_thumbnail and len(processed_images) != 1:
            thumbnail_img = image.resize((image_size, image_size))
            processed_images.append(thumbnail_img)
        return processed_images


    def load_image(image_file, input_size=448, max_num=12):
        image = Image.open(image_file).convert('RGB')
        transform = build_transform(input_size=input_size)
        images = dynamic_preprocess(image, image_size=input_size, use_thumbnail=True, max_num=max_num)
        pixel_values = [transform(image) for image in images]
        pixel_values = torch.stack(pixel_values)
        return pixel_values


    path = "nvidia/NVLM-D-72B"
    device_map = split_model()
    model = AutoModel.from_pretrained(
        path,
        torch_dtype=torch.bfloat16,
        low_cpu_mem_usage=True,
        use_flash_attn=False,
        trust_remote_code=True,
        device_map=device_map,
    ).eval()

    print(model)

    tokenizer = AutoTokenizer.from_pretrained(path, trust_remote_code=True, use_fast=False)
    generation_config = dict(max_new_tokens=1024, do_sample=False)

    # pure-text conversation
    question = 'Hello, who are you?'
    response, history = model.chat(tokenizer, None, question, generation_config, history=None, return_history=True)
    print(f'User: {question}\nAssistant: {response}')

    # single-image single-round conversation
    pixel_values = load_image('path/to/your/example/image.jpg', max_num=6).to(torch.bfloat16)
    question = '<image>\nPlease describe the image shortly.'
    response = model.chat(tokenizer, pixel_values, question, generation_config)
    print(f'User: {question}\nAssistant: {response}')
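
The tiling performed by `dynamic_preprocess` above can be explored without loading an image. The sketch below repeats its grid-selection logic on plain width/height integers and reports how many 448x448 tiles would be produced; `choose_grid` and `count_tiles` are illustrative names, and the logic is a condensed restatement of the code above rather than a separate API.

```python
def choose_grid(width, height, min_num=1, max_num=12, image_size=448):
    """Pick the (columns, rows) grid whose aspect ratio is closest to the image's."""
    grids = sorted(
        {(i, j)
         for i in range(1, max_num + 1)
         for j in range(1, max_num + 1)
         if min_num <= i * j <= max_num},
        key=lambda g: g[0] * g[1],
    )
    aspect_ratio = width / height
    best, best_diff = (1, 1), float("inf")
    for cols, rows in grids:
        diff = abs(aspect_ratio - cols / rows)
        if diff < best_diff:
            best, best_diff = (cols, rows), diff
        elif diff == best_diff and width * height > 0.5 * image_size * image_size * cols * rows:
            # On a tie, prefer the larger grid when the image has enough pixels for it.
            best = (cols, rows)
    return best


def count_tiles(width, height, max_num=12, use_thumbnail=True):
    """Number of tiles produced, including the thumbnail added for multi-tile images."""
    cols, rows = choose_grid(width, height, max_num=max_num)
    tiles = cols * rows
    return tiles + 1 if use_thumbnail and tiles != 1 else tiles


if __name__ == "__main__":
    for size in [(448, 448), (896, 896), (1920, 1080)]:
        print(size, choose_grid(*size, max_num=6), count_tiles(*size, max_num=6))
```

Since `load_image` stacks one tensor per tile, the tile count is the leading dimension of `pixel_values`, so lowering `max_num` (as the single-image example does with `max_num=6`) bounds how many tiles an image is split into.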