Test environment

● Kubernetes 1.20.15

● default namespace

● Spark 3.1.2

● kubectl

Runtime architecture

Building the image

- Set JAVA_HOME

- Download the Spark binary package spark-3.1.2-bin-hadoop3.2.tgz and extract it

- Edit kubernetes/dockerfiles/spark/Dockerfile

ARG java_image_tag=11-jre-slim

FROM openjdk:${java_image_tag}

# Default is 185; change it to 0 here if root is required
ARG spark_uid=0

RUN set -ex && \
    sed -i 's/http:\/\/deb.\(.*\)/https:\/\/deb.\1/g' /etc/apt/sources.list && \
    apt-get update && \
    ln -s /lib /lib64 && \
    apt install -y bash tini libc6 libpam-modules krb5-user libnss3 procps && \
    mkdir -p /opt/spark && \
    mkdir -p /opt/spark/examples && \
    mkdir -p /opt/spark/work-dir && \
    touch /opt/spark/RELEASE && \
    rm /bin/sh && \
    ln -sv /bin/bash /bin/sh && \
    echo "auth required pam_wheel.so use_uid" >> /etc/pam.d/su && \
    chgrp root /etc/passwd && chmod ug+rw /etc/passwd && \
    rm -rf /var/cache/apt/*

# Third-party dependency JARs can be copied into the jars directory beforehand
COPY jars /opt/spark/jars
COPY bin /opt/spark/bin
COPY sbin /opt/spark/sbin
COPY kubernetes/dockerfiles/spark/entrypoint.sh /opt/
COPY kubernetes/dockerfiles/spark/decom.sh /opt/
COPY examples /opt/spark/examples
COPY kubernetes/tests /opt/spark/tests
COPY data /opt/spark/data

ENV SPARK_HOME /opt/spark

WORKDIR /opt/spark/work-dir
RUN chmod g+w /opt/spark/work-dir
RUN chmod a+x /opt/decom.sh

ENTRYPOINT [ "/opt/entrypoint.sh" ]

# Specify the User that the actual main process will run as
USER ${spark_uid}

- Build and push the image

./bin/docker-image-tool.sh -r harbor.gistack.cn/bigdata/spark-on-k8s -t 3.1.2 build
./bin/docker-image-tool.sh -r harbor.gistack.cn/bigdata/spark-on-k8s -t 3.1.2 push

# Build images for the other language bindings (-p for PySpark, -R for SparkR)
./bin/docker-image-tool.sh -r harbor.gistack.cn/bigdata/spark-on-k8s \
  -t 3.1.2-py -p ./kubernetes/dockerfiles/spark/bindings/python/Dockerfile build
./bin/docker-image-tool.sh -r harbor.gistack.cn/bigdata/spark-on-k8s \
  -t 3.1.2-R -R ./kubernetes/dockerfiles/spark/bindings/R/Dockerfile build

Launching a Spark job

- Create a service account (SA) for the Spark driver and grant it permissions

kubectl create serviceaccount spark

kubectl create clusterrolebinding spark-role --clusterrole=edit --serviceaccount=default:spark --namespace=default
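The two imperative commands above can also be expressed as a manifest. The following is a minimal sketch, assuming the same names used in this article (service account spark, binding spark-role, ClusterRole edit, namespace default). The helper name print_spark_rbac is hypothetical. It only prints YAML and does not touch the cluster.

```shell
# print_spark_rbac is a hypothetical helper, not part of Spark or kubectl.
# $1: namespace (default: default), $2: service account name (default: spark)
print_spark_rbac() {
  local namespace="${1:-default}"
  local account="${2:-spark}"
  cat <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ${account}
  namespace: ${namespace}
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: spark-role
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: edit
subjects:
  - kind: ServiceAccount
    name: ${account}
    namespace: ${namespace}
EOF
}

print_spark_rbac default spark
```

The output can be reviewed and then applied with `print_spark_rbac default spark | kubectl apply -f -`. Note that a ClusterRoleBinding grants the edit role in every namespace; if the driver only needs to create Pods in one namespace, a namespaced RoleBinding is likely sufficient.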

- Submit the bundled example job

./bin/spark-submit \
  --master k8s://https://192.168.190.123:6443 \
  --deploy-mode cluster \
  --name spark-pi \
  --class org.apache.spark.examples.SparkPi \
  --conf spark.executor.instances=5 \
  --conf spark.kubernetes.container.image=harbor.gistack.cn/bigdata/spark-on-k8s:3.1.2 \
  --conf spark.kubernetes.authenticate.driver.serviceAccountName=spark \
  local:///opt/spark/examples/jars/spark-examples_2.12-3.1.2.jar
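When the same job is submitted against different clusters or images, it can help to wrap the command in a small function. This is a sketch under stated assumptions: the function name submit_spark_pi and the environment variables SPARK_SUBMIT, K8S_MASTER, SPARK_IMAGE and EXECUTORS are hypothetical names introduced here, and their defaults are the values used above.

```shell
# submit_spark_pi is a hypothetical wrapper around the spark-submit call above.
# Setting SPARK_SUBMIT=echo prints the command line instead of running it.
submit_spark_pi() {
  "${SPARK_SUBMIT:-./bin/spark-submit}" \
    --master "k8s://${K8S_MASTER:-https://192.168.190.123:6443}" \
    --deploy-mode cluster \
    --name spark-pi \
    --class org.apache.spark.examples.SparkPi \
    --conf "spark.executor.instances=${EXECUTORS:-5}" \
    --conf "spark.kubernetes.container.image=${SPARK_IMAGE:-harbor.gistack.cn/bigdata/spark-on-k8s:3.1.2}" \
    --conf "spark.kubernetes.authenticate.driver.serviceAccountName=spark" \
    local:///opt/spark/examples/jars/spark-examples_2.12-3.1.2.jar
}

# Dry run: show what would be submitted with two executors.
SPARK_SUBMIT=echo EXECUTORS=2 submit_spark_pi
```

Calling `submit_spark_pi` with no overrides from the extracted Spark directory runs the same submission as the command above.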

- Client-side log output

[root@k8stest spark-3.1.2-bin-hadoop3.2]# ./bin/spark-submit \

> --master k8s://https://192.168.190.123:6443 \

> --deploy-mode cluster \

> --name spark-pi \

> --class org.apache.spark.examples.SparkPi \

> --conf spark.executor.instances=5 \

> --conf spark.kubernetes.container.image=harbor.gistack.cn/bigdata/spark-on-k8s:3.1.2 \

> --conf spark.kubernetes.authenticate.driver.serviceAccountName=spark \

> local:///opt/spark/examples/jars/spark-examples_2.12-3.1.2.jar

22/06/27 18:00:00 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

22/06/27 18:00:00 INFO SparkKubernetesClientFactory: Auto-configuring K8S client using current context from users K8S config file

22/06/27 18:00:01 INFO KerberosConfDriverFeatureStep: You have not specified a krb5.conf file locally or via a ConfigMap. Make sure that you have the krb5.conf locally on the driver image.

22/06/27 18:00:03 INFO LoggingPodStatusWatcherImpl: State changed, new state:
    pod name: spark-pi-02432381a49a8c05-driver
    namespace: default
    labels: spark-app-selector -> spark-a119352471db48d0a4a1083c79b48e0f, spark-role -> driver
    pod uid: bcd6ba46-a58e-42ad-9185-4355fbf78cdb
    creation time: 2022-06-27T10:00:02Z
    service account name: spark
    volumes: spark-local-dir-1, spark-conf-volume-driver, spark-token-g8sl4
    node name: vm190116
    start time: 2022-06-27T10:00:02Z
    phase: Pending
    container status:
        container name: spark-kubernetes-driver
        container image: harbor.gistack.cn/bigdata/spark-on-k8s:3.1.2
        container state: waiting
        pending reason: ContainerCreating

22/06/27 18:00:03 INFO LoggingPodStatusWatcherImpl: State changed, new state:
    pod name: spark-pi-02432381a49a8c05-driver
    namespace: default
    labels: spark-app-selector -> spark-a119352471db48d0a4a1083c79b48e0f, spark-role -> driver
    pod uid: bcd6ba46-a58e-42ad-9185-4355fbf78cdb
    creation time: 2022-06-27T10:00:02Z
    service account name: spark
    volumes: spark-local-dir-1, spark-conf-volume-driver, spark-token-g8sl4
    node name: vm190116
    start time: 2022-06-27T10:00:02Z
    phase: Pending
    container status:
        container name: spark-kubernetes-driver
        container image: harbor.gistack.cn/bigdata/spark-on-k8s:3.1.2
        container state: waiting
        pending reason: ContainerCreating

22/06/27 18:00:03 INFO LoggingPodStatusWatcherImpl: Waiting for application spark-pi with submission ID default:spark-pi-02432381a49a8c05-driver to finish...

22/06/27 18:00:04 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Pending)

22/06/27 18:00:05 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Pending)

22/06/27 18:00:05 INFO LoggingPodStatusWatcherImpl: State changed, new state:
    pod name: spark-pi-02432381a49a8c05-driver
    namespace: default
    labels: spark-app-selector -> spark-a119352471db48d0a4a1083c79b48e0f, spark-role -> driver
    pod uid: bcd6ba46-a58e-42ad-9185-4355fbf78cdb
    creation time: 2022-06-27T10:00:02Z
    service account name: spark
    volumes: spark-local-dir-1, spark-conf-volume-driver, spark-token-g8sl4
    node name: vm190116
    start time: 2022-06-27T10:00:02Z
    phase: Running
    container status:
        container name: spark-kubernetes-driver
        container image: harbor.gistack.cn/bigdata/spark-on-k8s:3.1.2
        container state: running
        container started at: 2022-06-27T10:00:04Z

22/06/27 18:00:06 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:07 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:08 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:09 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:10 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:11 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:12 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:13 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:14 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:15 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:16 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:17 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:18 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:19 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:20 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:21 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:22 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:23 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:24 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:25 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:26 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:27 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)

22/06/27 18:00:27 INFO LoggingPodStatusWatcherImpl: State changed, new state:
    pod name: spark-pi-02432381a49a8c05-driver
    namespace: default
    labels: spark-app-selector -> spark-a119352471db48d0a4a1083c79b48e0f, spark-role -> driver
    pod uid: bcd6ba46-a58e-42ad-9185-4355fbf78cdb
    creation time: 2022-06-27T10:00:02Z
    service account name: spark
    volumes: spark-local-dir-1, spark-conf-volume-driver, spark-token-g8sl4
    node name: vm190116
    start time: 2022-06-27T10:00:02Z
    phase: Succeeded
    container status:
        container name: spark-kubernetes-driver
        container image: harbor.gistack.cn/bigdata/spark-on-k8s:3.1.2
        container state: terminated
        container started at: 2022-06-27T10:00:04Z
        container finished at: 2022-06-27T10:00:26Z
        exit code: 0
        termination reason: Completed

22/06/27 18:00:27 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Succeeded)

22/06/27 18:00:27 INFO LoggingPodStatusWatcherImpl: Container final statuses:
    container name: spark-kubernetes-driver
    container image: harbor.gistack.cn/bigdata/spark-on-k8s:3.1.2
    container state: terminated
    container started at: 2022-06-27T10:00:04Z
    container finished at: 2022-06-27T10:00:26Z
    exit code: 0
    termination reason: Completed

22/06/27 18:00:27 INFO LoggingPodStatusWatcherImpl: Application spark-pi with submission ID default:spark-pi-02432381a49a8c05-driver finished

22/06/27 18:00:27 INFO ShutdownHookManager: Shutdown hook called

22/06/27 18:00:27 INFO ShutdownHookManager: Deleting directory /tmp/spark-db110761-ab56-46b8-a40a-d811d28ff296

[root@k8stest spark-3.1.2-bin-hadoop3.2]#
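In cluster mode the client mostly repeats "Application status ... (phase: ...)" lines until the driver Pod terminates, so the last such line tells whether the job succeeded. The following is a small sketch for scripts that need this result; the helper name final_phase and the file name submit.log are hypothetical, and the pattern assumes the log format shown above.

```shell
# final_phase is a hypothetical helper: it reads a spark-submit client log on
# stdin and prints the phase from the last "Application status" line.
final_phase() {
  sed -n 's/.*Application status for .* (phase: \([A-Za-z]*\)).*/\1/p' | tail -n 1
}

# Example with two lines in the format of the log above.
printf '%s\n' \
  '22/06/27 18:00:26 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Running)' \
  '22/06/27 18:00:27 INFO LoggingPodStatusWatcherImpl: Application status for spark-a119352471db48d0a4a1083c79b48e0f (phase: Succeeded)' \
  | final_phase
```

With the submission output saved to a file, for example by appending `2>&1 | tee submit.log` to the spark-submit command, `final_phase < submit.log` prints the final phase.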

- Status on Kubernetes:

After the run completes:

- Spark driver Pod log

++ id -u

+ myuid=0

++ id -g

+ mygid=0

+ set +e

++ getent passwd 0

+ uidentry=root:x:0:0:root:/root:/bin/bash

+ set -e

+ '[' -z root:x:0:0:root:/root:/bin/bash ']'

+ SPARK_CLASSPATH=':/opt/spark/jars/*'

+ env

+ sort -t_ -k4 -n

+ sed 's/[^=]*=\(.*\)/\1/g'

+ grep SPARK_JAVA_OPT_

+ readarray -t SPARK_EXECUTOR_JAVA_OPTS

+ '[' -n '' ']'

+ '[' -z ']'

+ '[' -z ']'

+ '[' -n '' ']'

+ '[' -z ']'

+ '[' -z x ']'

+ SPARK_CLASSPATH='/opt/spark/conf::/opt/spark/jars/*'

+ case "$1" in

+ shift 1

+ CMD=("$SPARK_HOME/bin/spark-submit" --conf "spark.driver.bindAddress=$SPARK_DRIVER_BIND_ADDRESS" --deploy-mode client "$@")

+ exec /usr/bin/tini -s -- /opt/spark/bin/spark-submit --conf spark.driver.bindAddress=10.42.5.96 --deploy-mode client --properties-file /opt/spark/conf/spark.properties --class org.apache.spark.examples.SparkPi local:///opt/spark/examples/jars/spark-examples_2.12-3.1.2.jar

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by org.apache.spark.unsafe.Platform (file:/opt/spark/jars/spark-unsafe_2.12-3.1.2.jar) to constructor java.nio.DirectByteBuffer(long,int)

WARNING: Please consider reporting this to the maintainers of org.apache.spark.unsafe.Platform

WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations

WARNING: All illegal access operations will be denied in a future release

22/06/27 10:00:08 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

22/06/27 10:00:08 INFO SparkContext: Running Spark version 3.1.2

22/06/27 10:00:08 INFO ResourceUtils: ==============================================================

22/06/27 10:00:08 INFO ResourceUtils: No custom resources configured for spark.driver.

22/06/27 10:00:08 INFO ResourceUtils: ==============================================================

22/06/27 10:00:08 INFO SparkContext: Submitted application: Spark Pi

22/06/27 10:00:08 INFO ResourceProfile: Default ResourceProfile created, executor resources: Map(cores -> name: cores, amount: 1, script: , vendor: , memory -> name: memory, amount: 1024, script: , vendor: , offHeap -> name: offHeap, amount: 0, script: , vendor: ), task resources: Map(cpus -> name: cpus, amount: 1.0)

22/06/27 10:00:08 INFO ResourceProfile: Limiting resource is cpus at 1 tasks per executor

22/06/27 10:00:08 INFO ResourceProfileManager: Added ResourceProfile id: 0

22/06/27 10:00:08 INFO SecurityManager: Changing view acls to: root

22/06/27 10:00:08 INFO SecurityManager: Changing modify acls to: root

22/06/27 10:00:08 INFO SecurityManager: Changing view acls groups to:

22/06/27 10:00:08 INFO SecurityManager: Changing modify acls groups to:

22/06/27 10:00:08 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

22/06/27 10:00:09 INFO Utils: Successfully started service 'sparkDriver' on port 7078.

22/06/27 10:00:09 INFO SparkEnv: Registering MapOutputTracker

22/06/27 10:00:09 INFO SparkEnv: Registering BlockManagerMaster

22/06/27 10:00:09 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

22/06/27 10:00:09 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

22/06/27 10:00:09 INFO SparkEnv: Registering BlockManagerMasterHeartbeat

22/06/27 10:00:09 INFO DiskBlockManager: Created local directory at /var/data/spark-da112425-86c7-49b2-a0ab-c6609af9ec9e/blockmgr-0d1c82f2-2e1f-48c7-8698-283c8812642f

22/06/27 10:00:09 INFO MemoryStore: MemoryStore started with capacity 413.9 MiB

22/06/27 10:00:09 INFO SparkEnv: Registering OutputCommitCoordinator

22/06/27 10:00:10 INFO Utils: Successfully started service 'SparkUI' on port 4040.

22/06/27 10:00:10 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://spark-pi-02432381a49a8c05-driver-svc.default.svc:4040

22/06/27 10:00:10 INFO SparkContext: Added JAR local:///opt/spark/examples/jars/spark-examples_2.12-3.1.2.jar at file:/opt/spark/examples/jars/spark-examples_2.12-3.1.2.jar with timestamp 1656324008416

22/06/27 10:00:10 WARN SparkContext: The jar local:///opt/spark/examples/jars/spark-examples_2.12-3.1.2.jar has been added already. Overwriting of added jars is not supported in the current version.

22/06/27 10:00:10 INFO SparkKubernetesClientFactory: Auto-configuring K8S client using current context from users K8S config file

22/06/27 10:00:13 INFO ExecutorPodsAllocator: Going to request 5 executors from Kubernetes for ResourceProfile Id: 0, target: 5 running: 0.

22/06/27 10:00:13 INFO BasicExecutorFeatureStep: Decommissioning not enabled, skipping shutdown script

22/06/27 10:00:13 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 7079.

22/06/27 10:00:13 INFO NettyBlockTransferService: Server created on spark-pi-02432381a49a8c05-driver-svc.default.svc:7079

22/06/27 10:00:13 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

22/06/27 10:00:13 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, spark-pi-02432381a49a8c05-driver-svc.default.svc, 7079, None)

22/06/27 10:00:13 INFO BlockManagerMasterEndpoint: Registering block manager spark-pi-02432381a49a8c05-driver-svc.default.svc:7079 with 413.9 MiB RAM, BlockManagerId(driver, spark-pi-02432381a49a8c05-driver-svc.default.svc, 7079, None)

22/06/27 10:00:13 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, spark-pi-02432381a49a8c05-driver-svc.default.svc, 7079, None)

22/06/27 10:00:13 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, spark-pi-02432381a49a8c05-driver-svc.default.svc, 7079, None)

22/06/27 10:00:13 INFO BasicExecutorFeatureStep: Decommissioning not enabled, skipping shutdown script

22/06/27 10:00:13 INFO BasicExecutorFeatureStep: Decommissioning not enabled, skipping shutdown script

22/06/27 10:00:13 INFO BasicExecutorFeatureStep: Decommissioning not enabled, skipping shutdown script

22/06/27 10:00:13 INFO BasicExecutorFeatureStep: Decommissioning not enabled, skipping shutdown script

22/06/27 10:00:20 INFO KubernetesClusterSchedulerBackend$KubernetesDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (10.42.5.97:54996) with ID 3, ResourceProfileId 0

22/06/27 10:00:20 INFO KubernetesClusterSchedulerBackend$KubernetesDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (10.42.5.99:60040) with ID 2, ResourceProfileId 0

22/06/27 10:00:20 INFO BlockManagerMasterEndpoint: Registering block manager 10.42.5.97:43211 with 413.9 MiB RAM, BlockManagerId(3, 10.42.5.97, 43211, None)

22/06/27 10:00:20 INFO BlockManagerMasterEndpoint: Registering block manager 10.42.5.99:33117 with 413.9 MiB RAM, BlockManagerId(2, 10.42.5.99, 33117, None)

22/06/27 10:00:21 INFO KubernetesClusterSchedulerBackend$KubernetesDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (10.42.5.98:38884) with ID 1, ResourceProfileId 0

22/06/27 10:00:21 INFO BlockManagerMasterEndpoint: Registering block manager 10.42.5.98:40293 with 413.9 MiB RAM, BlockManagerId(1, 10.42.5.98, 40293, None)

22/06/27 10:00:24 INFO KubernetesClusterSchedulerBackend$KubernetesDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (10.42.6.35:48062) with ID 4, ResourceProfileId 0

22/06/27 10:00:24 INFO KubernetesClusterSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.8

22/06/27 10:00:24 INFO BlockManagerMasterEndpoint: Registering block manager 10.42.6.35:35003 with 413.9 MiB RAM, BlockManagerId(4, 10.42.6.35, 35003, None)

22/06/27 10:00:24 INFO SparkContext: Starting job: reduce at SparkPi.scala:38

22/06/27 10:00:24 INFO DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 2 output partitions

22/06/27 10:00:24 INFO DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)

22/06/27 10:00:24 INFO DAGScheduler: Parents of final stage: List()

22/06/27 10:00:24 INFO DAGScheduler: Missing parents: List()

22/06/27 10:00:24 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents

22/06/27 10:00:25 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 3.1 KiB, free 413.9 MiB)

22/06/27 10:00:25 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1816.0 B, free 413.9 MiB)

22/06/27 10:00:25 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on spark-pi-02432381a49a8c05-driver-svc.default.svc:7079 (size: 1816.0 B, free: 413.9 MiB)

22/06/27 10:00:25 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1388

22/06/27 10:00:25 INFO DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34) (first 15 tasks are for partitions Vector(0, 1))

22/06/27 10:00:25 INFO TaskSchedulerImpl: Adding task set 0.0 with 2 tasks resource profile 0

22/06/27 10:00:25 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0) (10.42.5.98, executor 1, partition 0, PROCESS_LOCAL, 4597 bytes) taskResourceAssignments Map()

22/06/27 10:00:25 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1) (10.42.5.97, executor 3, partition 1, PROCESS_LOCAL, 4597 bytes) taskResourceAssignments Map()

22/06/27 10:00:25 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 10.42.5.98:40293 (size: 1816.0 B, free: 413.9 MiB)

22/06/27 10:00:25 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 10.42.5.97:43211 (size: 1816.0 B, free: 413.9 MiB)

22/06/27 10:00:26 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 848 ms on 10.42.5.97 (executor 3) (1/2)

22/06/27 10:00:26 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 905 ms on 10.42.5.98 (executor 1) (2/2)

22/06/27 10:00:26 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

22/06/27 10:00:26 INFO DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 1.165 s

22/06/27 10:00:26 INFO DAGScheduler: Job 0 is finished. Cancelling potential speculative or zombie tasks for this job

22/06/27 10:00:26 INFO TaskSchedulerImpl: Killing all running tasks in stage 0: Stage finished

22/06/27 10:00:26 INFO DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 1.368000 s

Pi is roughly 3.1293956469782347

22/06/27 10:00:26 INFO SparkUI: Stopped Spark web UI at http://spark-pi-02432381a49a8c05-driver-svc.default.svc:4040

22/06/27 10:00:26 INFO KubernetesClusterSchedulerBackend: Shutting down all executors

22/06/27 10:00:26 INFO KubernetesClusterSchedulerBackend$KubernetesDriverEndpoint: Asking each executor to shut down

22/06/27 10:00:26 WARN ExecutorPodsWatchSnapshotSource: Kubernetes client has been closed (this is expected if the application is shutting down.)

22/06/27 10:00:26 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

22/06/27 10:00:26 INFO MemoryStore: MemoryStore cleared

22/06/27 10:00:26 INFO BlockManager: BlockManager stopped

22/06/27 10:00:26 INFO BlockManagerMaster: BlockManagerMaster stopped

22/06/27 10:00:26 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

22/06/27 10:00:26 INFO SparkContext: Successfully stopped SparkContext

22/06/27 10:00:26 INFO ShutdownHookManager: Shutdown hook called

22/06/27 10:00:26 INFO ShutdownHookManager: Deleting directory /var/data/spark-da112425-86c7-49b2-a0ab-c6609af9ec9e/spark-562c9819-1859-46c6-9c08-4487303da431

22/06/27 10:00:26 INFO ShutdownHookManager: Deleting directory /tmp/spark-3e96bc2f-e078-4078-a24c-8f1fb9d75162

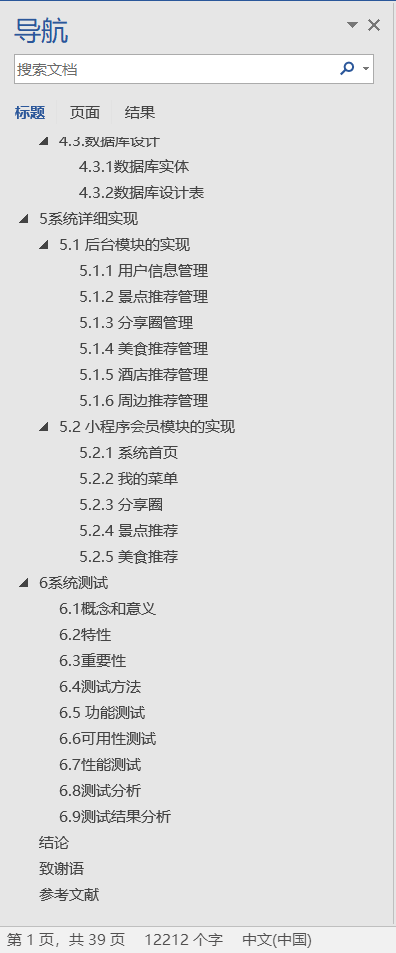

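Summary

- The client submits the Spark job to the Kubernetes API server;

- Kubernetes starts a Spark driver Pod, which plays the role of the Spark master;

- The Spark driver uses the service account to create Spark executor Pods that run the tasks;

- Both the Spark driver Pod and the Spark executor Pods are placed on nodes by the Kubernetes scheduler;

- When the job finishes, the Spark executor Pods are deleted automatically and the Spark driver Pod's status becomes Completed.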
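The lifecycle summarized above can be observed with kubectl by filtering on the spark-role label that appears in the client log. This is a minimal sketch: the helper names list_spark_pods and driver_logs and the KUBECTL override are hypothetical, and the executor label value is assumed to be executor.

```shell
# Hypothetical helpers. Set KUBECTL="echo kubectl" to print the commands
# instead of contacting a cluster.

# $1: spark-role label value (driver or executor), $2: namespace
list_spark_pods() {
  ${KUBECTL:-kubectl} get pods -n "${2:-default}" -l "spark-role=${1:-driver}"
}

# $1: driver Pod name, $2: namespace
driver_logs() {
  ${KUBECTL:-kubectl} logs -n "${2:-default}" "$1"
}

# Dry run showing the commands for this article's job.
KUBECTL="echo kubectl" list_spark_pods executor
KUBECTL="echo kubectl" driver_logs spark-pi-02432381a49a8c05-driver
```

While the job runs, `list_spark_pods executor` should list the executor Pods; after it finishes, only the driver Pod is expected to remain, and `driver_logs` returns the driver log that contains the "Pi is roughly" line.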