Tongyi Qianwen (Qwen) 2.5-7B-Instruct-AWQ is an AWQ-quantized model, but running it on the NPU fails with the error below, which is odd:

Exception:weight model.layers.0.self_attn.q_proj.weight does not exist

AssertionError: weight model.layers.0.self_attn.q_proj.weight does not exist

https://www.modelscope.cn/models/Qwen/Qwen2.5-7B-Instruct-AWQ/files

The cause is that AWQ is not supported here; Huawei has its own quantization method:

https://www.hiascend.com/document/detail/zh/mindie/10RC3/mindiellm/llmdev/mindie_llm0281.html

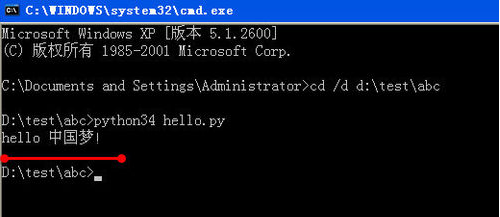
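Before loading a checkpoint on the NPU, it can help to check which quantization method its `config.json` declares. The following is a minimal sketch, not part of the Ascend tooling: the function name `quant_method_of` is hypothetical, and it only assumes the Hugging Face layout shown later in these notes, where the method sits under `quantization_config.quant_method`.

```python
import json


def quant_method_of(config: dict):
    """Return the quant_method declared in a parsed config.json, or None.

    Hypothetical helper: it only reads the `quantization_config` block,
    which may be absent or null in a non-quantized checkpoint.
    """
    quant_cfg = config.get("quantization_config") or {}
    return quant_cfg.get("quant_method")


def load_config(path: str) -> dict:
    """Parse a config.json file from disk."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)
```

For the checkpoint in these notes, `quant_method_of(load_config(".../Qwen2.5-7B-Instruct-AWQ/config.json"))` would be expected to return `"awq"`, which is the value that the loader rejects.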

Test conversion:

root@dev-8242526b-01f2-4a54-b89d-f6d9c57c692d-qjhpf:/usr/local/Ascend/llm_model# python examples/models/qwen/convert_quant_weights.py --model_path /home/apulis-dev/teamdata/Qwen2.5-7B-Instruct-AWQ --save_directory /home/apulis-dev/teamdata/Qwen2.5-7B --w_bit 8 --a_bit 8 --disable_level L5 --device_type npu --calib_file /usr/local/Ascend/llm_model/examples/convert/model_slim/boolq.jsonl

2024-11-08 11:12:59,068 [INFO] [pid: 776716] env.py-55: {'use_ascend': True, 'max_memory_gb': None, 'reserved_memory_gb': 3, 'skip_warmup': False, 'visible_devices': '0', 'use_host_chooser': True, 'bind_cpu': True}

[W compiler_depend.ts:623] Warning: expandable_segments currently defaults to false. You can enable this feature by `export PYTORCH_NPU_ALLOC_CONF = expandable_segments:True`. (function operator())

Traceback (most recent call last):

File "/usr/local/Ascend/llm_model/examples/models/qwen/convert_quant_weights.py", line 52, in <module>

quant_weight_generator = Quantifier(args.model_path, quant_config, anti_outlier_config, args.device_type)

File "/usr/local/Ascend/llm_model/examples/convert/model_slim/quantifier.py", line 79, in __init__

self.model = AutoModelForCausalLM.from_pretrained(

File "/usr/local/python3.10.2/lib/python3.10/site-packages/transformers/models/auto/auto_factory.py", line 561, in from_pretrained

return model_class.from_pretrained(

File "/usr/local/python3.10.2/lib/python3.10/site-packages/transformers/modeling_utils.py", line 3014, in from_pretrained

config.quantization_config = AutoHfQuantizer.merge_quantization_configs(

File "/usr/local/python3.10.2/lib/python3.10/site-packages/transformers/quantizers/auto.py", line 145, in merge_quantization_configs

quantization_config = AutoQuantizationConfig.from_dict(quantization_config)

File "/usr/local/python3.10.2/lib/python3.10/site-packages/transformers/quantizers/auto.py", line 75, in from_dict

return target_cls.from_dict(quantization_config_dict)

File "/usr/local/python3.10.2/lib/python3.10/site-packages/transformers/utils/quantization_config.py", line 90, in from_dict

config = cls(**config_dict)

File "/usr/local/python3.10.2/lib/python3.10/site-packages/transformers/utils/quantization_config.py", line 655, in __init__

self.post_init()

File "/usr/local/python3.10.2/lib/python3.10/site-packages/transformers/utils/quantization_config.py", line 662, in post_init

raise ValueError("AWQ is only available on GPU")

ValueError: AWQ is only available on GPU

[ERROR] 2024-11-08-11:13:06 (PID:776716, Device:0, RankID:-1) ERR99999 UNKNOWN application exception

root@dev-8242526b-01f2-4a54-b89d-f6d9c57c692d-qjhpf:/usr/local/Ascend/llm_model#

Original content of the configuration file:

"quantization_config": {

"bits": 4,

"group_size": 128,

"modules_to_not_convert": null,

"quant_method": "awq",

"version": "gemm",

"zero_point": true

},

Remove that block and replace it with: "quantize":"w8a8",

root@dev-8242526b-01f2-4a54-b89d-f6d9c57c692d-qjhpf:/home/apulis-dev/teamdata# more Qwen2.5-7B-Instruct-AWQ/config.json

{

"architectures": [

"Qwen2ForCausalLM"

],

"attention_dropout": 0.0,

"bos_token_id": 151643,

"eos_token_id": 151645,

"hidden_act": "silu",

"hidden_size": 3584,

"initializer_range": 0.02,

"intermediate_size": 18944,

"max_position_embeddings": 32768,

"max_window_layers": 28,

"model_type": "qwen2",

"num_attention_heads": 28,

"num_hidden_layers": 28,

"num_key_value_heads": 4,

"quantize":"w8a8",

"rms_norm_eps": 1e-06,

"rope_theta": 1000000.0,

"sliding_window": 131072,

"tie_word_embeddings": false,

"torch_dtype": "float16",

"transformers_version": "4.41.1",

"use_cache": true,

"use_sliding_window": false,

"vocab_size": 152064

}
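The manual edit above (dropping `quantization_config` and adding `"quantize": "w8a8"`) can also be scripted. This is only a sketch of that one edit; `to_w8a8_config` is a hypothetical name, and it changes the metadata only, not the tensors stored in the checkpoint.

```python
import copy
import json


def to_w8a8_config(config: dict) -> dict:
    """Return a patched copy of a parsed config.json.

    The AWQ `quantization_config` block is removed and `quantize` is set
    to "w8a8", mirroring the hand edit in these notes. The input dict is
    left untouched so the original can still be written to a backup.
    """
    patched = copy.deepcopy(config)
    patched.pop("quantization_config", None)
    patched["quantize"] = "w8a8"
    return patched


def rewrite_config_file(path: str) -> None:
    """Apply to_w8a8_config to the config.json at `path`, in place."""
    with open(path, encoding="utf-8") as fh:
        config = json.load(fh)
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(to_w8a8_config(config), fh, indent=2)
        fh.write("\n")
```

The new key is appended at the end of the file rather than in alphabetical position; JSON object order does not matter to the loader. Keeping a copy of the original `config.json` before calling `rewrite_config_file` makes the change easy to undo.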

root@dev-8242526b-01f2-4a54-b89d-f6d9c57c692d-qjhpf:/usr/local/Ascend/llm_model# python examples/models/qwen/convert_quant_weights.py --model_path /home/apulis-dev/teamdata/Qwen2.5-7B-Instruct-AWQ --save_directory /home/apulis-dev/teamdata/Qwen2.5-7B --w_bit 8 --a_bit 8 --disable_level L5 --device_type npu --calib_file /usr/local/Ascend/llm_model/examples/convert/model_slim/boolq.jsonl

2024-11-08 11:19:51,266 [INFO] [pid: 781496] env.py-55: {'use_ascend': True, 'max_memory_gb': None, 'reserved_memory_gb': 3, 'skip_warmup': False, 'visible_devices': '0', 'use_host_chooser': True, 'bind_cpu': True}

[W compiler_depend.ts:623] Warning: expandable_segments currently defaults to false. You can enable this feature by `export PYTORCH_NPU_ALLOC_CONF = expandable_segments:True`. (function operator())

Loading checkpoint shards: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 2/2 [00:02<00:00, 1.20s/it]

Some weights of the model checkpoint at /home/apulis-dev/teamdata/Qwen2.5-7B-Instruct-AWQ were not used when initializing Qwen2ForCausalLM: ['model.layers.0.mlp.down_proj.qweight', 'model.layers.0.mlp.down_proj.qzeros', 'model.layers.0.mlp.down_proj.scales', 'model.layers.0.mlp.gate_proj.qweight', 'model.layers.0.mlp.gate_proj.qzeros', 'model.layers.0.mlp.gate_proj.scales', 'model.layers.0.mlp.up_proj.qweight', 'model.layers.0.mlp.up_proj.qzeros', 'model.layers.0.mlp.up_proj.scales', 'model.layers.0.self_attn.k_proj.qweight', 'model.layers.0.self_attn.k_proj.qzeros', 'model.layers.0.self_attn.k_proj.scales', 'model.layers.0.self_attn.o_proj.qweight', 'model.layers.0.self_attn.o_proj.qzeros', 'model.layers.0.self_attn.o_proj.scales', 'model.layers.0.self_attn.q_proj.qweight', 'model.layers.0.self_attn.q_proj.qzeros', 'model.layers.0.self_attn.q_proj.scales', 'model.layers.0.self_attn.v_proj.qweight', 'model.layers.0.self_attn.v_proj.qzeros', 'model.layers.0.self_attn.v_proj.scales', 'model.layers.1.mlp.down_proj.qweight', 'model.layers.1.mlp.down_proj.qzeros', 'model.layers.1.mlp.down_proj.scales', 'model.layers.1.mlp.gate_proj.qweight', 'model.layers.1.mlp.gate_proj.qzeros', 'model.layers.1.mlp.gate_proj.scales', 'model.layers.1.mlp.up_proj.qweight', 'model.layers.1.mlp.up_proj.qzeros', 'model.layers.1.mlp.up_proj.scales', 'model.layers.1.self_attn.k_proj.qweight', 'model.layers.1.self_attn.k_proj.qzeros', 'model.layers.1.self_attn.k_proj.scales', 'model.layers.1.self_attn.o_proj.qweight', 'model.layers.1.self_attn.o_proj.qzeros', 'model.layers.1.self_attn.o_proj.scales', 'model.layers.1.self_attn.q_proj.qweight', 'model.layers.1.self_attn.q_proj.qzeros', 'model.layers.1.self_attn.q_proj.scales', 'model.layers.1.self_attn.v_proj.qweight', 'model.layers.1.self_attn.v_proj.qzeros', 'model.layers.1.self_attn.v_proj.scales', 'model.layers.10.mlp.down_proj.qweight', 'model.layers.10.mlp.down_proj.qzeros', 'model.layers.10.mlp.down_proj.scales', 
'model.layers.10.mlp.gate_proj.qweight', 'model.layers.10.mlp.gate_proj.qzeros', 'model.layers.10.mlp.gate_proj.scales', 'model.layers.10.mlp.up_proj.qweight', 'model.layers.10.mlp.up_proj.qzeros', 'model.layers.10.mlp.up_proj.scales', 'model.layers.10.self_attn.k_proj.qweight', 'model.layers.10.self_attn.k_proj.qzeros', 'model.layers.10.self_attn.k_proj.scales', 'model.layers.10.self_attn.o_proj.qweight', 'model.layers.10.self_attn.o_proj.qzeros', 'model.layers.10.self_attn.o_proj.scales', 'model.layers.10.self_attn.q_proj.qweight', 'model.layers.10.self_attn.q_proj.qzeros', 'model.layers.10.self_attn.q_proj.scales', 'model.layers.10.self_attn.v_proj.qweight', 'model.layers.10.self_attn.v_proj.qzeros', 'model.layers.10.self_attn.v_proj.scales', 'model.layers.11.mlp.down_proj.qweight', 'model.layers.11.mlp.down_proj.qzeros', 'model.layers.11.mlp.down_proj.scales', 'model.layers.11.mlp.gate_proj.qweight', 'model.layers.11.mlp.gate_proj.qzeros', 'model.layers.11.mlp.gate_proj.scales', 'model.layers.11.mlp.up_proj.qweight', 'model.layers.11.mlp.up_proj.qzeros', 'model.layers.11.mlp.up_proj.scales', 'model.layers.11.self_attn.k_proj.qweight', 'model.layers.11.self_attn.k_proj.qzeros', 'model.layers.11.self_attn.k_proj.scales', 'model.layers.11.self_attn.o_proj.qweight', 'model.layers.11.self_attn.o_proj.qzeros', 'model.layers.11.self_attn.o_proj.scales', 'model.layers.11.self_attn.q_proj.qweight', 'model.layers.11.self_attn.q_proj.qzeros', 'model.layers.11.self_attn.q_proj.scales', 'model.layers.11.self_attn.v_proj.qweight', 'model.layers.11.self_attn.v_proj.qzeros', 'model.layers.11.self_attn.v_proj.scales', 'model.layers.12.mlp.down_proj.qweight', 'model.layers.12.mlp.down_proj.qzeros', 'model.layers.12.mlp.down_proj.scales', 'model.layers.12.mlp.gate_proj.qweight', 'model.layers.12.mlp.gate_proj.qzeros', 'model.layers.12.mlp.gate_proj.scales', 'model.layers.12.mlp.up_proj.qweight', 'model.layers.12.mlp.up_proj.qzeros', 'model.layers.12.mlp.up_proj.scales', 
'model.layers.12.self_attn.k_proj.qweight', 'model.layers.12.self_attn.k_proj.qzeros', 'model.layers.12.self_attn.k_proj.scales', 'model.layers.12.self_attn.o_proj.qweight', 'model.layers.12.self_attn.o_proj.qzeros', 'model.layers.12.self_attn.o_proj.scales', 'model.layers.12.self_attn.q_proj.qweight', 'model.layers.12.self_attn.q_proj.qzeros', 'model.layers.12.self_attn.q_proj.scales', 'model.layers.12.self_attn.v_proj.qweight', 'model.layers.12.self_attn.v_proj.qzeros', 'model.layers.12.self_attn.v_proj.scales', 'model.layers.13.mlp.down_proj.qweight', 'model.layers.13.mlp.down_proj.qzeros', 'model.layers.13.mlp.down_proj.scales', 'model.layers.13.mlp.gate_proj.qweight', 'model.layers.13.mlp.gate_proj.qzeros', 'model.layers.13.mlp.gate_proj.scales', 'model.layers.13.mlp.up_proj.qweight', 'model.layers.13.mlp.up_proj.qzeros', 'model.layers.13.mlp.up_proj.scales', 'model.layers.13.self_attn.k_proj.qweight', 'model.layers.13.self_attn.k_proj.qzeros', 'model.layers.13.self_attn.k_proj.scales', 'model.layers.13.self_attn.o_proj.qweight', 'model.layers.13.self_attn.o_proj.qzeros', 'model.layers.13.self_attn.o_proj.scales', 'model.layers.13.self_attn.q_proj.qweight', 'model.layers.13.self_attn.q_proj.qzeros', 'model.layers.13.self_attn.q_proj.scales', 'model.layers.13.self_attn.v_proj.qweight', 'model.layers.13.self_attn.v_proj.qzeros', 'model.layers.13.self_attn.v_proj.scales', 'model.layers.14.mlp.down_proj.qweight', 'model.layers.14.mlp.down_proj.qzeros', 'model.layers.14.mlp.down_proj.scales', 'model.layers.14.mlp.gate_proj.qweight', 'model.layers.14.mlp.gate_proj.qzeros', 'model.layers.14.mlp.gate_proj.scales', 'model.layers.14.mlp.up_proj.qweight', 'model.layers.14.mlp.up_proj.qzeros', 'model.layers.14.mlp.up_proj.scales', 'model.layers.14.self_attn.k_proj.qweight', 'model.layers.14.self_attn.k_proj.qzeros', 'model.layers.14.self_attn.k_proj.scales', 'model.layers.14.self_attn.o_proj.qweight', 'model.layers.14.self_attn.o_proj.qzeros', 
'model.layers.14.self_attn.o_proj.scales', 'model.layers.14.self_attn.q_proj.qweight', 'model.layers.14.self_attn.q_proj.qzeros', 'model.layers.14.self_attn.q_proj.scales', 'model.layers.14.self_attn.v_proj.qweight', 'model.layers.14.self_attn.v_proj.qzeros', 'model.layers.14.self_attn.v_proj.scales', 'model.layers.15.mlp.down_proj.qweight', 'model.layers.15.mlp.down_proj.qzeros', 'model.layers.15.mlp.down_proj.scales', 'model.layers.15.mlp.gate_proj.qweight', 'model.layers.15.mlp.gate_proj.qzeros', 'model.layers.15.mlp.gate_proj.scales', 'model.layers.15.mlp.up_proj.qweight', 'model.layers.15.mlp.up_proj.qzeros', 'model.layers.15.mlp.up_proj.scales', 'model.layers.15.self_attn.k_proj.qweight', 'model.layers.15.self_attn.k_proj.qzeros', 'model.layers.15.self_attn.k_proj.scales', 'model.layers.15.self_attn.o_proj.qweight', 'model.layers.15.self_attn.o_proj.qzeros', 'model.layers.15.self_attn.o_proj.scales', 'model.layers.15.self_attn.q_proj.qweight', 'model.layers.15.self_attn.q_proj.qzeros', 'model.layers.15.self_attn.q_proj.scales', 'model.layers.15.self_attn.v_proj.qweight', 'model.layers.15.self_attn.v_proj.qzeros', 'model.layers.15.self_attn.v_proj.scales', 'model.layers.16.mlp.down_proj.qweight', 'model.layers.16.mlp.down_proj.qzeros', 'model.layers.16.mlp.down_proj.scales', 'model.layers.16.mlp.gate_proj.qweight', 'model.layers.16.mlp.gate_proj.qzeros', 'model.layers.16.mlp.gate_proj.scales', 'model.layers.16.mlp.up_proj.qweight', 'model.layers.16.mlp.up_proj.qzeros', 'model.layers.16.mlp.up_proj.scales', 'model.layers.16.self_attn.k_proj.qweight', 'model.layers.16.self_attn.k_proj.qzeros', 'model.layers.16.self_attn.k_proj.scales', 'model.layers.16.self_attn.o_proj.qweight', 'model.layers.16.self_attn.o_proj.qzeros', 'model.layers.16.self_attn.o_proj.scales', 'model.layers.16.self_attn.q_proj.qweight', 'model.layers.16.self_attn.q_proj.qzeros', 'model.layers.16.self_attn.q_proj.scales', 'model.layers.16.self_attn.v_proj.qweight', 
'model.layers.16.self_attn.v_proj.qzeros', 'model.layers.16.self_attn.v_proj.scales', 'model.layers.17.mlp.down_proj.qweight', 'model.layers.17.mlp.down_proj.qzeros', 'model.layers.17.mlp.down_proj.scales', 'model.layers.17.mlp.gate_proj.qweight', 'model.layers.17.mlp.gate_proj.qzeros', 'model.layers.17.mlp.gate_proj.scales', 'model.layers.17.mlp.up_proj.qweight', 'model.layers.17.mlp.up_proj.qzeros', 'model.layers.17.mlp.up_proj.scales', 'model.layers.17.self_attn.k_proj.qweight', 'model.layers.17.self_attn.k_proj.qzeros', 'model.layers.17.self_attn.k_proj.scales', 'model.layers.17.self_attn.o_proj.qweight', 'model.layers.17.self_attn.o_proj.qzeros', 'model.layers.17.self_attn.o_proj.scales', 'model.layers.17.self_attn.q_proj.qweight', 'model.layers.17.self_attn.q_proj.qzeros', 'model.layers.17.self_attn.q_proj.scales', 'model.layers.17.self_attn.v_proj.qweight', 'model.layers.17.self_attn.v_proj.qzeros', 'model.layers.17.self_attn.v_proj.scales', 'model.layers.18.mlp.down_proj.qweight', 'model.layers.18.mlp.down_proj.qzeros', 'model.layers.18.mlp.down_proj.scales', 'model.layers.18.mlp.gate_proj.qweight', 'model.layers.18.mlp.gate_proj.qzeros', 'model.layers.18.mlp.gate_proj.scales', 'model.layers.18.mlp.up_proj.qweight', 'model.layers.18.mlp.up_proj.qzeros', 'model.layers.18.mlp.up_proj.scales', 'model.layers.18.self_attn.k_proj.qweight', 'model.layers.18.self_attn.k_proj.qzeros', 'model.layers.18.self_attn.k_proj.scales', 'model.layers.18.self_attn.o_proj.qweight', 'model.layers.18.self_attn.o_proj.qzeros', 'model.layers.18.self_attn.o_proj.scales', 'model.layers.18.self_attn.q_proj.qweight', 'model.layers.18.self_attn.q_proj.qzeros', 'model.layers.18.self_attn.q_proj.scales', 'model.layers.18.self_attn.v_proj.qweight', 'model.layers.18.self_attn.v_proj.qzeros', 'model.layers.18.self_attn.v_proj.scales', 'model.layers.19.mlp.down_proj.qweight', 'model.layers.19.mlp.down_proj.qzeros', 'model.layers.19.mlp.down_proj.scales', 
'model.layers.19.mlp.gate_proj.qweight', 'model.layers.19.mlp.gate_proj.qzeros', 'model.layers.19.mlp.gate_proj.scales', 'model.layers.19.mlp.up_proj.qweight', 'model.layers.19.mlp.up_proj.qzeros', 'model.layers.19.mlp.up_proj.scales', 'model.layers.19.self_attn.k_proj.qweight', 'model.layers.19.self_attn.k_proj.qzeros', 'model.layers.19.self_attn.k_proj.scales', 'model.layers.19.self_attn.o_proj.qweight', 'model.layers.19.self_attn.o_proj.qzeros', 'model.layers.19.self_attn.o_proj.scales', 'model.layers.19.self_attn.q_proj.qweight', 'model.layers.19.self_attn.q_proj.qzeros', 'model.layers.19.self_attn.q_proj.scales', 'model.layers.19.self_attn.v_proj.qweight', 'model.layers.19.self_attn.v_proj.qzeros', 'model.layers.19.self_attn.v_proj.scales', 'model.layers.2.mlp.down_proj.qweight', 'model.layers.2.mlp.down_proj.qzeros', 'model.layers.2.mlp.down_proj.scales', 'model.layers.2.mlp.gate_proj.qweight', 'model.layers.2.mlp.gate_proj.qzeros', 'model.layers.2.mlp.gate_proj.scales', 'model.layers.2.mlp.up_proj.qweight', 'model.layers.2.mlp.up_proj.qzeros', 'model.layers.2.mlp.up_proj.scales', 'model.layers.2.self_attn.k_proj.qweight', 'model.layers.2.self_attn.k_proj.qzeros', 'model.layers.2.self_attn.k_proj.scales', 'model.layers.2.self_attn.o_proj.qweight', 'model.layers.2.self_attn.o_proj.qzeros', 'model.layers.2.self_attn.o_proj.scales', 'model.layers.2.self_attn.q_proj.qweight', 'model.layers.2.self_attn.q_proj.qzeros', 'model.layers.2.self_attn.q_proj.scales', 'model.layers.2.self_attn.v_proj.qweight', 'model.layers.2.self_attn.v_proj.qzeros', 'model.layers.2.self_attn.v_proj.scales', 'model.layers.20.mlp.down_proj.qweight', 'model.layers.20.mlp.down_proj.qzeros', 'model.layers.20.mlp.down_proj.scales', 'model.layers.20.mlp.gate_proj.qweight', 'model.layers.20.mlp.gate_proj.qzeros', 'model.layers.20.mlp.gate_proj.scales', 'model.layers.20.mlp.up_proj.qweight', 'model.layers.20.mlp.up_proj.qzeros', 'model.layers.20.mlp.up_proj.scales', 
'model.layers.20.self_attn.k_proj.qweight', 'model.layers.20.self_attn.k_proj.qzeros', 'model.layers.20.self_attn.k_proj.scales', 'model.layers.20.self_attn.o_proj.qweight', 'model.layers.20.self_attn.o_proj.qzeros', 'model.layers.20.self_attn.o_proj.scales', 'model.layers.20.self_attn.q_proj.qweight', 'model.layers.20.self_attn.q_proj.qzeros', 'model.layers.20.self_attn.q_proj.scales', 'model.layers.20.self_attn.v_proj.qweight', 'model.layers.20.self_attn.v_proj.qzeros', 'model.layers.20.self_attn.v_proj.scales', 'model.layers.21.mlp.down_proj.qweight', 'model.layers.21.mlp.down_proj.qzeros', 'model.layers.21.mlp.down_proj.scales', 'model.layers.21.mlp.gate_proj.qweight', 'model.layers.21.mlp.gate_proj.qzeros', 'model.layers.21.mlp.gate_proj.scales', 'model.layers.21.mlp.up_proj.qweight', 'model.layers.21.mlp.up_proj.qzeros', 'model.layers.21.mlp.up_proj.scales', 'model.layers.21.self_attn.k_proj.qweight', 'model.layers.21.self_attn.k_proj.qzeros', 'model.layers.21.self_attn.k_proj.scales', 'model.layers.21.self_attn.o_proj.qweight', 'model.layers.21.self_attn.o_proj.qzeros', 'model.layers.21.self_attn.o_proj.scales', 'model.layers.21.self_attn.q_proj.qweight', 'model.layers.21.self_attn.q_proj.qzeros', 'model.layers.21.self_attn.q_proj.scales', 'model.layers.21.self_attn.v_proj.qweight', 'model.layers.21.self_attn.v_proj.qzeros', 'model.layers.21.self_attn.v_proj.scales', 'model.layers.22.mlp.down_proj.qweight', 'model.layers.22.mlp.down_proj.qzeros', 'model.layers.22.mlp.down_proj.scales', 'model.layers.22.mlp.gate_proj.qweight', 'model.layers.22.mlp.gate_proj.qzeros', 'model.layers.22.mlp.gate_proj.scales', 'model.layers.22.mlp.up_proj.qweight', 'model.layers.22.mlp.up_proj.qzeros', 'model.layers.22.mlp.up_proj.scales', 'model.layers.22.self_attn.k_proj.qweight', 'model.layers.22.self_attn.k_proj.qzeros', 'model.layers.22.self_attn.k_proj.scales', 'model.layers.22.self_attn.o_proj.qweight', 'model.layers.22.self_attn.o_proj.qzeros', 
'model.layers.22.self_attn.o_proj.scales', 'model.layers.22.self_attn.q_proj.qweight', 'model.layers.22.self_attn.q_proj.qzeros', 'model.layers.22.self_attn.q_proj.scales', 'model.layers.22.self_attn.v_proj.qweight', 'model.layers.22.self_attn.v_proj.qzeros', 'model.layers.22.self_attn.v_proj.scales', 'model.layers.23.mlp.down_proj.qweight', 'model.layers.23.mlp.down_proj.qzeros', 'model.layers.23.mlp.down_proj.scales', 'model.layers.23.mlp.gate_proj.qweight', 'model.layers.23.mlp.gate_proj.qzeros', 'model.layers.23.mlp.gate_proj.scales', 'model.layers.23.mlp.up_proj.qweight', 'model.layers.23.mlp.up_proj.qzeros', 'model.layers.23.mlp.up_proj.scales', 'model.layers.23.self_attn.k_proj.qweight', 'model.layers.23.self_attn.k_proj.qzeros', 'model.layers.23.self_attn.k_proj.scales', 'model.layers.23.self_attn.o_proj.qweight', 'model.layers.23.self_attn.o_proj.qzeros', 'model.layers.23.self_attn.o_proj.scales', 'model.layers.23.self_attn.q_proj.qweight', 'model.layers.23.self_attn.q_proj.qzeros', 'model.layers.23.self_attn.q_proj.scales', 'model.layers.23.self_attn.v_proj.qweight', 'model.layers.23.self_attn.v_proj.qzeros', 'model.layers.23.self_attn.v_proj.scales', 'model.layers.24.mlp.down_proj.qweight', 'model.layers.24.mlp.down_proj.qzeros', 'model.layers.24.mlp.down_proj.scales', 'model.layers.24.mlp.gate_proj.qweight', 'model.layers.24.mlp.gate_proj.qzeros', 'model.layers.24.mlp.gate_proj.scales', 'model.layers.24.mlp.up_proj.qweight', 'model.layers.24.mlp.up_proj.qzeros', 'model.layers.24.mlp.up_proj.scales', 'model.layers.24.self_attn.k_proj.qweight', 'model.layers.24.self_attn.k_proj.qzeros', 'model.layers.24.self_attn.k_proj.scales', 'model.layers.24.self_attn.o_proj.qweight', 'model.layers.24.self_attn.o_proj.qzeros', 'model.layers.24.self_attn.o_proj.scales', 'model.layers.24.self_attn.q_proj.qweight', 'model.layers.24.self_attn.q_proj.qzeros', 'model.layers.24.self_attn.q_proj.scales', 'model.layers.24.self_attn.v_proj.qweight', 
'model.layers.24.self_attn.v_proj.qzeros', 'model.layers.24.self_attn.v_proj.scales', 'model.layers.25.mlp.down_proj.qweight', 'model.layers.25.mlp.down_proj.qzeros', 'model.layers.25.mlp.down_proj.scales', 'model.layers.25.mlp.gate_proj.qweight', 'model.layers.25.mlp.gate_proj.qzeros', 'model.layers.25.mlp.gate_proj.scales', 'model.layers.25.mlp.up_proj.qweight', 'model.layers.25.mlp.up_proj.qzeros', 'model.layers.25.mlp.up_proj.scales', 'model.layers.25.self_attn.k_proj.qweight', 'model.layers.25.self_attn.k_proj.qzeros', 'model.layers.25.self_attn.k_proj.scales', 'model.layers.25.self_attn.o_proj.qweight', 'model.layers.25.self_attn.o_proj.qzeros', 'model.layers.25.self_attn.o_proj.scales', 'model.layers.25.self_attn.q_proj.qweight', 'model.layers.25.self_attn.q_proj.qzeros', 'model.layers.25.self_attn.q_proj.scales', 'model.layers.25.self_attn.v_proj.qweight', 'model.layers.25.self_attn.v_proj.qzeros', 'model.layers.25.self_attn.v_proj.scales', 'model.layers.26.mlp.down_proj.qweight', 'model.layers.26.mlp.down_proj.qzeros', 'model.layers.26.mlp.down_proj.scales', 'model.layers.26.mlp.gate_proj.qweight', 'model.layers.26.mlp.gate_proj.qzeros', 'model.layers.26.mlp.gate_proj.scales', 'model.layers.26.mlp.up_proj.qweight', 'model.layers.26.mlp.up_proj.qzeros', 'model.layers.26.mlp.up_proj.scales', 'model.layers.26.self_attn.k_proj.qweight', 'model.layers.26.self_attn.k_proj.qzeros', 'model.layers.26.self_attn.k_proj.scales', 'model.layers.26.self_attn.o_proj.qweight', 'model.layers.26.self_attn.o_proj.qzeros', 'model.layers.26.self_attn.o_proj.scales', 'model.layers.26.self_attn.q_proj.qweight', 'model.layers.26.self_attn.q_proj.qzeros', 'model.layers.26.self_attn.q_proj.scales', 'model.layers.26.self_attn.v_proj.qweight', 'model.layers.26.self_attn.v_proj.qzeros', 'model.layers.26.self_attn.v_proj.scales', 'model.layers.27.mlp.down_proj.qweight', 'model.layers.27.mlp.down_proj.qzeros', 'model.layers.27.mlp.down_proj.scales', 
'model.layers.27.mlp.gate_proj.qweight', 'model.layers.27.mlp.gate_proj.qzeros', 'model.layers.27.mlp.gate_proj.scales', 'model.layers.27.mlp.up_proj.qweight', 'model.layers.27.mlp.up_proj.qzeros', 'model.layers.27.mlp.up_proj.scales', 'model.layers.27.self_attn.k_proj.qweight', 'model.layers.27.self_attn.k_proj.qzeros', 'model.layers.27.self_attn.k_proj.scales', 'model.layers.27.self_attn.o_proj.qweight', 'model.layers.27.self_attn.o_proj.qzeros', 'model.layers.27.self_attn.o_proj.scales', 'model.layers.27.self_attn.q_proj.qweight', 'model.layers.27.self_attn.q_proj.qzeros', 'model.layers.27.self_attn.q_proj.scales', 'model.layers.27.self_attn.v_proj.qweight', 'model.layers.27.self_attn.v_proj.qzeros', 'model.layers.27.self_attn.v_proj.scales', 'model.layers.3.mlp.down_proj.qweight', 'model.layers.3.mlp.down_proj.qzeros', 'model.layers.3.mlp.down_proj.scales', 'model.layers.3.mlp.gate_proj.qweight', 'model.layers.3.mlp.gate_proj.qzeros', 'model.layers.3.mlp.gate_proj.scales', 'model.layers.3.mlp.up_proj.qweight', 'model.layers.3.mlp.up_proj.qzeros', 'model.layers.3.mlp.up_proj.scales', 'model.layers.3.self_attn.k_proj.qweight', 'model.layers.3.self_attn.k_proj.qzeros', 'model.layers.3.self_attn.k_proj.scales', 'model.layers.3.self_attn.o_proj.qweight', 'model.layers.3.self_attn.o_proj.qzeros', 'model.layers.3.self_attn.o_proj.scales', 'model.layers.3.self_attn.q_proj.qweight', 'model.layers.3.self_attn.q_proj.qzeros', 'model.layers.3.self_attn.q_proj.scales', 'model.layers.3.self_attn.v_proj.qweight', 'model.layers.3.self_attn.v_proj.qzeros', 'model.layers.3.self_attn.v_proj.scales', 'model.layers.4.mlp.down_proj.qweight', 'model.layers.4.mlp.down_proj.qzeros', 'model.layers.4.mlp.down_proj.scales', 'model.layers.4.mlp.gate_proj.qweight', 'model.layers.4.mlp.gate_proj.qzeros', 'model.layers.4.mlp.gate_proj.scales', 'model.layers.4.mlp.up_proj.qweight', 'model.layers.4.mlp.up_proj.qzeros', 'model.layers.4.mlp.up_proj.scales', 
'model.layers.4.self_attn.k_proj.qweight', 'model.layers.4.self_attn.k_proj.qzeros', 'model.layers.4.self_attn.k_proj.scales', 'model.layers.4.self_attn.o_proj.qweight', 'model.layers.4.self_attn.o_proj.qzeros', 'model.layers.4.self_attn.o_proj.scales', 'model.layers.4.self_attn.q_proj.qweight', 'model.layers.4.self_attn.q_proj.qzeros', 'model.layers.4.self_attn.q_proj.scales', 'model.layers.4.self_attn.v_proj.qweight', 'model.layers.4.self_attn.v_proj.qzeros', 'model.layers.4.self_attn.v_proj.scales', 'model.layers.5.mlp.down_proj.qweight', 'model.layers.5.mlp.down_proj.qzeros', 'model.layers.5.mlp.down_proj.scales', 'model.layers.5.mlp.gate_proj.qweight', 'model.layers.5.mlp.gate_proj.qzeros', 'model.layers.5.mlp.gate_proj.scales', 'model.layers.5.mlp.up_proj.qweight', 'model.layers.5.mlp.up_proj.qzeros', 'model.layers.5.mlp.up_proj.scales', 'model.layers.5.self_attn.k_proj.qweight', 'model.layers.5.self_attn.k_proj.qzeros', 'model.layers.5.self_attn.k_proj.scales', 'model.layers.5.self_attn.o_proj.qweight', 'model.layers.5.self_attn.o_proj.qzeros', 'model.layers.5.self_attn.o_proj.scales', 'model.layers.5.self_attn.q_proj.qweight', 'model.layers.5.self_attn.q_proj.qzeros', 'model.layers.5.self_attn.q_proj.scales', 'model.layers.5.self_attn.v_proj.qweight', 'model.layers.5.self_attn.v_proj.qzeros', 'model.layers.5.self_attn.v_proj.scales', 'model.layers.6.mlp.down_proj.qweight', 'model.layers.6.mlp.down_proj.qzeros', 'model.layers.6.mlp.down_proj.scales', 'model.layers.6.mlp.gate_proj.qweight', 'model.layers.6.mlp.gate_proj.qzeros', 'model.layers.6.mlp.gate_proj.scales', 'model.layers.6.mlp.up_proj.qweight', 'model.layers.6.mlp.up_proj.qzeros', 'model.layers.6.mlp.up_proj.scales', 'model.layers.6.self_attn.k_proj.qweight', 'model.layers.6.self_attn.k_proj.qzeros', 'model.layers.6.self_attn.k_proj.scales', 'model.layers.6.self_attn.o_proj.qweight', 'model.layers.6.self_attn.o_proj.qzeros', 'model.layers.6.self_attn.o_proj.scales', 
'model.layers.6.self_attn.q_proj.qweight', 'model.layers.6.self_attn.q_proj.qzeros', 'model.layers.6.self_attn.q_proj.scales', 'model.layers.6.self_attn.v_proj.qweight', 'model.layers.6.self_attn.v_proj.qzeros', 'model.layers.6.self_attn.v_proj.scales', 'model.layers.7.mlp.down_proj.qweight', 'model.layers.7.mlp.down_proj.qzeros', 'model.layers.7.mlp.down_proj.scales', 'model.layers.7.mlp.gate_proj.qweight', 'model.layers.7.mlp.gate_proj.qzeros', 'model.layers.7.mlp.gate_proj.scales', 'model.layers.7.mlp.up_proj.qweight', 'model.layers.7.mlp.up_proj.qzeros', 'model.layers.7.mlp.up_proj.scales', 'model.layers.7.self_attn.k_proj.qweight', 'model.layers.7.self_attn.k_proj.qzeros', 'model.layers.7.self_attn.k_proj.scales', 'model.layers.7.self_attn.o_proj.qweight', 'model.layers.7.self_attn.o_proj.qzeros', 'model.layers.7.self_attn.o_proj.scales', 'model.layers.7.self_attn.q_proj.qweight', 'model.layers.7.self_attn.q_proj.qzeros', 'model.layers.7.self_attn.q_proj.scales', 'model.layers.7.self_attn.v_proj.qweight', 'model.layers.7.self_attn.v_proj.qzeros', 'model.layers.7.self_attn.v_proj.scales', 'model.layers.8.mlp.down_proj.qweight', 'model.layers.8.mlp.down_proj.qzeros', 'model.layers.8.mlp.down_proj.scales', 'model.layers.8.mlp.gate_proj.qweight', 'model.layers.8.mlp.gate_proj.qzeros', 'model.layers.8.mlp.gate_proj.scales', 'model.layers.8.mlp.up_proj.qweight', 'model.layers.8.mlp.up_proj.qzeros', 'model.layers.8.mlp.up_proj.scales', 'model.layers.8.self_attn.k_proj.qweight', 'model.layers.8.self_attn.k_proj.qzeros', 'model.layers.8.self_attn.k_proj.scales', 'model.layers.8.self_attn.o_proj.qweight', 'model.layers.8.self_attn.o_proj.qzeros', 'model.layers.8.self_attn.o_proj.scales', 'model.layers.8.self_attn.q_proj.qweight', 'model.layers.8.self_attn.q_proj.qzeros', 'model.layers.8.self_attn.q_proj.scales', 'model.layers.8.self_attn.v_proj.qweight', 'model.layers.8.self_attn.v_proj.qzeros', 'model.layers.8.self_attn.v_proj.scales', 
'model.layers.9.mlp.down_proj.qweight', 'model.layers.9.mlp.down_proj.qzeros', 'model.layers.9.mlp.down_proj.scales', 'model.layers.9.mlp.gate_proj.qweight', 'model.layers.9.mlp.gate_proj.qzeros', 'model.layers.9.mlp.gate_proj.scales', 'model.layers.9.mlp.up_proj.qweight', 'model.layers.9.mlp.up_proj.qzeros', 'model.layers.9.mlp.up_proj.scales', 'model.layers.9.self_attn.k_proj.qweight', 'model.layers.9.self_attn.k_proj.qzeros', 'model.layers.9.self_attn.k_proj.scales', 'model.layers.9.self_attn.o_proj.qweight', 'model.layers.9.self_attn.o_proj.qzeros', 'model.layers.9.self_attn.o_proj.scales', 'model.layers.9.self_attn.q_proj.qweight', 'model.layers.9.self_attn.q_proj.qzeros', 'model.layers.9.self_attn.q_proj.scales', 'model.layers.9.self_attn.v_proj.qweight', 'model.layers.9.self_attn.v_proj.qzeros', 'model.layers.9.self_attn.v_proj.scales']

- This IS expected if you are initializing Qwen2ForCausalLM from the checkpoint of a model trained on another task or with another architecture (e.g. initializing a BertForSequenceClassification model from a BertForPreTraining model).

- This IS NOT expected if you are initializing Qwen2ForCausalLM from the checkpoint of a model that you expect to be exactly identical (initializing a BertForSequenceClassification model from a BertForSequenceClassification model).
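Every unused tensor in the list above ends in `qweight`, `qzeros`, or `scales`, and the next message reports the matching `.weight` tensors as newly initialized. Read literally, that means the projection weights being calibrated did not come from the checkpoint, so it may be worth checking the tensor names before converting. The sketch below classifies key names by suffix; `summarize_keys` and the three bucket labels are hypothetical, and the suffix list is taken only from this log.

```python
from collections import Counter

# Suffixes of the packed tensors that appear in the log above.
AWQ_SUFFIXES = (".qweight", ".qzeros", ".scales")


def summarize_keys(keys):
    """Count checkpoint tensor names by kind.

    Buckets: 'awq_packed' for the packed suffixes above,
    'float_weight' for plain '.weight' tensors, 'other' for the rest
    (biases and similar).
    """
    counts = Counter()
    for key in keys:
        if key.endswith(AWQ_SUFFIXES):
            counts["awq_packed"] += 1
        elif key.endswith(".weight"):
            counts["float_weight"] += 1
        else:
            counts["other"] += 1
    return dict(counts)
```

For a sharded checkpoint, the names can usually be read from the `weight_map` keys of `model.safetensors.index.json`, assuming that file is present. A non-zero `awq_packed` count suggests that the original, non-quantized checkpoint is the safer input for the W8A8 conversion.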

Some weights of Qwen2ForCausalLM were not initialized from the model checkpoint at /home/apulis-dev/teamdata/Qwen2.5-7B-Instruct-AWQ and are newly initialized: ['model.layers.0.mlp.down_proj.weight', 'model.layers.0.mlp.gate_proj.weight', 'model.layers.0.mlp.up_proj.weight', 'model.layers.0.self_attn.k_proj.weight', 'model.layers.0.self_attn.o_proj.weight', 'model.layers.0.self_attn.q_proj.weight', 'model.layers.0.self_attn.v_proj.weight', 'model.layers.1.mlp.down_proj.weight', 'model.layers.1.mlp.gate_proj.weight', 'model.layers.1.mlp.up_proj.weight', 'model.layers.1.self_attn.k_proj.weight', 'model.layers.1.self_attn.o_proj.weight', 'model.layers.1.self_attn.q_proj.weight', 'model.layers.1.self_attn.v_proj.weight', 'model.layers.10.mlp.down_proj.weight', 'model.layers.10.mlp.gate_proj.weight', 'model.layers.10.mlp.up_proj.weight', 'model.layers.10.self_attn.k_proj.weight', 'model.layers.10.self_attn.o_proj.weight', 'model.layers.10.self_attn.q_proj.weight', 'model.layers.10.self_attn.v_proj.weight', 'model.layers.11.mlp.down_proj.weight', 'model.layers.11.mlp.gate_proj.weight', 'model.layers.11.mlp.up_proj.weight', 'model.layers.11.self_attn.k_proj.weight', 'model.layers.11.self_attn.o_proj.weight', 'model.layers.11.self_attn.q_proj.weight', 'model.layers.11.self_attn.v_proj.weight', 'model.layers.12.mlp.down_proj.weight', 'model.layers.12.mlp.gate_proj.weight', 'model.layers.12.mlp.up_proj.weight', 'model.layers.12.self_attn.k_proj.weight', 'model.layers.12.self_attn.o_proj.weight', 'model.layers.12.self_attn.q_proj.weight', 'model.layers.12.self_attn.v_proj.weight', 'model.layers.13.mlp.down_proj.weight', 'model.layers.13.mlp.gate_proj.weight', 'model.layers.13.mlp.up_proj.weight', 'model.layers.13.self_attn.k_proj.weight', 'model.layers.13.self_attn.o_proj.weight', 'model.layers.13.self_attn.q_proj.weight', 'model.layers.13.self_attn.v_proj.weight', 'model.layers.14.mlp.down_proj.weight', 'model.layers.14.mlp.gate_proj.weight', 
'model.layers.14.mlp.up_proj.weight', 'model.layers.14.self_attn.k_proj.weight', 'model.layers.14.self_attn.o_proj.weight', 'model.layers.14.self_attn.q_proj.weight', 'model.layers.14.self_attn.v_proj.weight', 'model.layers.15.mlp.down_proj.weight', 'model.layers.15.mlp.gate_proj.weight', 'model.layers.15.mlp.up_proj.weight', 'model.layers.15.self_attn.k_proj.weight', 'model.layers.15.self_attn.o_proj.weight', 'model.layers.15.self_attn.q_proj.weight', 'model.layers.15.self_attn.v_proj.weight', 'model.layers.16.mlp.down_proj.weight', 'model.layers.16.mlp.gate_proj.weight', 'model.layers.16.mlp.up_proj.weight', 'model.layers.16.self_attn.k_proj.weight', 'model.layers.16.self_attn.o_proj.weight', 'model.layers.16.self_attn.q_proj.weight', 'model.layers.16.self_attn.v_proj.weight', 'model.layers.17.mlp.down_proj.weight', 'model.layers.17.mlp.gate_proj.weight', 'model.layers.17.mlp.up_proj.weight', 'model.layers.17.self_attn.k_proj.weight', 'model.layers.17.self_attn.o_proj.weight', 'model.layers.17.self_attn.q_proj.weight', 'model.layers.17.self_attn.v_proj.weight', 'model.layers.18.mlp.down_proj.weight', 'model.layers.18.mlp.gate_proj.weight', 'model.layers.18.mlp.up_proj.weight', 'model.layers.18.self_attn.k_proj.weight', 'model.layers.18.self_attn.o_proj.weight', 'model.layers.18.self_attn.q_proj.weight', 'model.layers.18.self_attn.v_proj.weight', 'model.layers.19.mlp.down_proj.weight', 'model.layers.19.mlp.gate_proj.weight', 'model.layers.19.mlp.up_proj.weight', 'model.layers.19.self_attn.k_proj.weight', 'model.layers.19.self_attn.o_proj.weight', 'model.layers.19.self_attn.q_proj.weight', 'model.layers.19.self_attn.v_proj.weight', 'model.layers.2.mlp.down_proj.weight', 'model.layers.2.mlp.gate_proj.weight', 'model.layers.2.mlp.up_proj.weight', 'model.layers.2.self_attn.k_proj.weight', 'model.layers.2.self_attn.o_proj.weight', 'model.layers.2.self_attn.q_proj.weight', 'model.layers.2.self_attn.v_proj.weight', 'model.layers.20.mlp.down_proj.weight', 
'model.layers.20.mlp.gate_proj.weight', 'model.layers.20.mlp.up_proj.weight', 'model.layers.20.self_attn.k_proj.weight', 'model.layers.20.self_attn.o_proj.weight', 'model.layers.20.self_attn.q_proj.weight', 'model.layers.20.self_attn.v_proj.weight', 'model.layers.21.mlp.down_proj.weight', 'model.layers.21.mlp.gate_proj.weight', 'model.layers.21.mlp.up_proj.weight', 'model.layers.21.self_attn.k_proj.weight', 'model.layers.21.self_attn.o_proj.weight', 'model.layers.21.self_attn.q_proj.weight', 'model.layers.21.self_attn.v_proj.weight', 'model.layers.22.mlp.down_proj.weight', 'model.layers.22.mlp.gate_proj.weight', 'model.layers.22.mlp.up_proj.weight', 'model.layers.22.self_attn.k_proj.weight', 'model.layers.22.self_attn.o_proj.weight', 'model.layers.22.self_attn.q_proj.weight', 'model.layers.22.self_attn.v_proj.weight', 'model.layers.23.mlp.down_proj.weight', 'model.layers.23.mlp.gate_proj.weight', 'model.layers.23.mlp.up_proj.weight', 'model.layers.23.self_attn.k_proj.weight', 'model.layers.23.self_attn.o_proj.weight', 'model.layers.23.self_attn.q_proj.weight', 'model.layers.23.self_attn.v_proj.weight', 'model.layers.24.mlp.down_proj.weight', 'model.layers.24.mlp.gate_proj.weight', 'model.layers.24.mlp.up_proj.weight', 'model.layers.24.self_attn.k_proj.weight', 'model.layers.24.self_attn.o_proj.weight', 'model.layers.24.self_attn.q_proj.weight', 'model.layers.24.self_attn.v_proj.weight', 'model.layers.25.mlp.down_proj.weight', 'model.layers.25.mlp.gate_proj.weight', 'model.layers.25.mlp.up_proj.weight', 'model.layers.25.self_attn.k_proj.weight', 'model.layers.25.self_attn.o_proj.weight', 'model.layers.25.self_attn.q_proj.weight', 'model.layers.25.self_attn.v_proj.weight', 'model.layers.26.mlp.down_proj.weight', 'model.layers.26.mlp.gate_proj.weight', 'model.layers.26.mlp.up_proj.weight', 'model.layers.26.self_attn.k_proj.weight', 'model.layers.26.self_attn.o_proj.weight', 'model.layers.26.self_attn.q_proj.weight', 'model.layers.26.self_attn.v_proj.weight', 
'model.layers.27.mlp.down_proj.weight', 'model.layers.27.mlp.gate_proj.weight', 'model.layers.27.mlp.up_proj.weight', 'model.layers.27.self_attn.k_proj.weight', 'model.layers.27.self_attn.o_proj.weight', 'model.layers.27.self_attn.q_proj.weight', 'model.layers.27.self_attn.v_proj.weight', 'model.layers.3.mlp.down_proj.weight', 'model.layers.3.mlp.gate_proj.weight', 'model.layers.3.mlp.up_proj.weight', 'model.layers.3.self_attn.k_proj.weight', 'model.layers.3.self_attn.o_proj.weight', 'model.layers.3.self_attn.q_proj.weight', 'model.layers.3.self_attn.v_proj.weight', 'model.layers.4.mlp.down_proj.weight', 'model.layers.4.mlp.gate_proj.weight', 'model.layers.4.mlp.up_proj.weight', 'model.layers.4.self_attn.k_proj.weight', 'model.layers.4.self_attn.o_proj.weight', 'model.layers.4.self_attn.q_proj.weight', 'model.layers.4.self_attn.v_proj.weight', 'model.layers.5.mlp.down_proj.weight', 'model.layers.5.mlp.gate_proj.weight', 'model.layers.5.mlp.up_proj.weight', 'model.layers.5.self_attn.k_proj.weight', 'model.layers.5.self_attn.o_proj.weight', 'model.layers.5.self_attn.q_proj.weight', 'model.layers.5.self_attn.v_proj.weight', 'model.layers.6.mlp.down_proj.weight', 'model.layers.6.mlp.gate_proj.weight', 'model.layers.6.mlp.up_proj.weight', 'model.layers.6.self_attn.k_proj.weight', 'model.layers.6.self_attn.o_proj.weight', 'model.layers.6.self_attn.q_proj.weight', 'model.layers.6.self_attn.v_proj.weight', 'model.layers.7.mlp.down_proj.weight', 'model.layers.7.mlp.gate_proj.weight', 'model.layers.7.mlp.up_proj.weight', 'model.layers.7.self_attn.k_proj.weight', 'model.layers.7.self_attn.o_proj.weight', 'model.layers.7.self_attn.q_proj.weight', 'model.layers.7.self_attn.v_proj.weight', 'model.layers.8.mlp.down_proj.weight', 'model.layers.8.mlp.gate_proj.weight', 'model.layers.8.mlp.up_proj.weight', 'model.layers.8.self_attn.k_proj.weight', 'model.layers.8.self_attn.o_proj.weight', 'model.layers.8.self_attn.q_proj.weight', 'model.layers.8.self_attn.v_proj.weight', 
'model.layers.9.mlp.down_proj.weight', 'model.layers.9.mlp.gate_proj.weight', 'model.layers.9.mlp.up_proj.weight', 'model.layers.9.self_attn.k_proj.weight', 'model.layers.9.self_attn.o_proj.weight', 'model.layers.9.self_attn.q_proj.weight', 'model.layers.9.self_attn.v_proj.weight']

You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.
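
The "newly initialized" list above covers all 7 projections in each of the 28 layers, which suggests the AWQ checkpoint does not store plain `.weight` tensors under those names. If so, the calibration that follows may be running on randomly initialized projections rather than the real model. A minimal sketch of that check follows. It assumes AWQ-style checkpoints store packed tensors under `qweight` / `qzeros` / `scales` suffixes (not verified against this particular file). The helpers `missing_fp_weights` and `looks_awq_packed` and the key lists are hypothetical, made up for illustration:

```python
# Hypothetical helpers: given the tensor names found in a checkpoint, report
# which plain floating-point projection weights the model would fail to load.
PROJECTIONS = (
    "self_attn.q_proj", "self_attn.k_proj", "self_attn.v_proj",
    "self_attn.o_proj", "mlp.gate_proj", "mlp.up_proj", "mlp.down_proj",
)


def missing_fp_weights(checkpoint_keys, num_layers):
    """Return the expected `<proj>.weight` names that are absent from the checkpoint."""
    keys = set(checkpoint_keys)
    missing = []
    for layer in range(num_layers):
        for proj in PROJECTIONS:
            name = f"model.layers.{layer}.{proj}.weight"
            if name not in keys:
                missing.append(name)
    return missing


def looks_awq_packed(checkpoint_keys):
    """Assumption: AWQ-style checkpoints use these packed-tensor suffixes."""
    return any(k.endswith((".qweight", ".qzeros", ".scales")) for k in checkpoint_keys)


# Made-up key lists for a single layer, for illustration only.
awq_keys = [
    f"model.layers.0.{p}.{s}"
    for p in PROJECTIONS
    for s in ("qweight", "qzeros", "scales")
]
fp_keys = [f"model.layers.0.{p}.weight" for p in PROJECTIONS]

print(len(missing_fp_weights(awq_keys, 1)), looks_awq_packed(awq_keys))
print(len(missing_fp_weights(fp_keys, 1)), looks_awq_packed(fp_keys))
```

In practice the key list could be read from the checkpoint's `model.safetensors.index.json` (`weight_map`), if the checkpoint ships one.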

2024-11-08 11:34:06,314 - msmodelslim-logger - INFO - Automatic disable last layer name: lm_head

feature process: 100%|██████████| 5/5 [00:02<00:00, 2.48it/s]

('Warning: torch.save with "_use_new_zipfile_serialization = False" is not recommended for npu tensor, which may bring unexpected errors and hopefully set "_use_new_zipfile_serialization = True"', 'if it is necessary to use this, please convert the npu tensor to cpu tensor for saving')

2024-11-08 11:34:09,205 - msmodelslim-logger - INFO - The number of nn.Linear and nn.Conv2d is 197.

2024-11-08 11:34:09,206 - msmodelslim-logger - INFO - Automatic disabled layer names are:

model.layers.0.self_attn.k_proj

model.layers.0.self_attn.q_proj

model.layers.0.self_attn.v_proj

model.layers.26.self_attn.k_proj

model.layers.26.self_attn.q_proj

2024-11-08 11:34:09,206 - msmodelslim-logger - INFO - roll back:lm_head

model.layers.0.mlp.down_proj

model.layers.0.self_attn.k_proj

model.layers.0.self_attn.q_proj

model.layers.0.self_attn.v_proj

model.layers.1.mlp.down_proj

model.layers.10.mlp.down_proj

model.layers.11.mlp.down_proj

model.layers.12.mlp.down_proj

model.layers.13.mlp.down_proj

model.layers.14.mlp.down_proj

model.layers.15.mlp.down_proj

model.layers.16.mlp.down_proj

model.layers.17.mlp.down_proj

model.layers.18.mlp.down_proj

model.layers.19.mlp.down_proj

model.layers.2.mlp.down_proj

model.layers.20.mlp.down_proj

model.layers.21.mlp.down_proj

model.layers.22.mlp.down_proj

model.layers.23.mlp.down_proj

model.layers.24.mlp.down_proj

model.layers.25.mlp.down_proj

model.layers.26.mlp.down_proj

model.layers.26.self_attn.k_proj

model.layers.26.self_attn.q_proj

model.layers.27.mlp.down_proj

model.layers.3.mlp.down_proj

model.layers.4.mlp.down_proj

model.layers.5.mlp.down_proj

model.layers.6.mlp.down_proj

model.layers.7.mlp.down_proj

model.layers.8.mlp.down_proj

model.layers.9.mlp.down_proj

2024-11-08 11:34:09,569 - msmodelslim-logger - INFO - use min-max observer:model.layers.0.self_attn.o_proj.quant_input, range_parm:8.5546875

2024-11-08 11:34:09,570 - msmodelslim-logger - INFO - use min-max observer:model.layers.0.mlp.gate_proj.quant_input, range_parm:5.7109375

2024-11-08 11:34:09,570 - msmodelslim-logger - INFO - use min-max observer:model.layers.0.mlp.up_proj.quant_input, range_parm:5.7109375

2024-11-08 11:34:09,570 - msmodelslim-logger - INFO - use min-max observer:model.layers.1.self_attn.q_proj.quant_input, range_parm:11.125

2024-11-08 11:34:09,570 - msmodelslim-logger - INFO - use min-max observer:model.layers.1.self_attn.k_proj.quant_input, range_parm:11.125

2024-11-08 11:34:09,571 - msmodelslim-logger - INFO - use min-max observer:model.layers.1.self_attn.v_proj.quant_input, range_parm:11.125

2024-11-08 11:34:09,571 - msmodelslim-logger - INFO - use min-max observer:model.layers.1.self_attn.o_proj.quant_input, range_parm:5.3515625

2024-11-08 11:34:09,571 - msmodelslim-logger - INFO - use min-max observer:model.layers.1.mlp.gate_proj.quant_input, range_parm:7.20703125

2024-11-08 11:34:09,571 - msmodelslim-logger - INFO - use min-max observer:model.layers.1.mlp.up_proj.quant_input, range_parm:7.20703125

2024-11-08 11:34:09,572 - msmodelslim-logger - INFO - use min-max observer:model.layers.2.self_attn.q_proj.quant_input, range_parm:7.8046875

2024-11-08 11:34:09,572 - msmodelslim-logger - INFO - use min-max observer:model.layers.2.self_attn.k_proj.quant_input, range_parm:7.8046875

2024-11-08 11:34:09,572 - msmodelslim-logger - INFO - use min-max observer:model.layers.2.self_attn.v_proj.quant_input, range_parm:7.8046875

2024-11-08 11:34:09,572 - msmodelslim-logger - INFO - use min-max observer:model.layers.2.self_attn.o_proj.quant_input, range_parm:8.21875

2024-11-08 11:34:09,573 - msmodelslim-logger - INFO - use min-max observer:model.layers.2.mlp.gate_proj.quant_input, range_parm:7.140625

2024-11-08 11:34:09,573 - msmodelslim-logger - INFO - use min-max observer:model.layers.2.mlp.up_proj.quant_input, range_parm:7.140625

2024-11-08 11:34:09,573 - msmodelslim-logger - INFO - use min-max observer:model.layers.3.self_attn.q_proj.quant_input, range_parm:8.6484375

2024-11-08 11:34:09,573 - msmodelslim-logger - INFO - use min-max observer:model.layers.3.self_attn.k_proj.quant_input, range_parm:8.6484375

2024-11-08 11:34:09,573 - msmodelslim-logger - INFO - use min-max observer:model.layers.3.self_attn.v_proj.quant_input, range_parm:8.6484375

2024-11-08 11:34:09,574 - msmodelslim-logger - INFO - use min-max observer:model.layers.3.self_attn.o_proj.quant_input, range_parm:4.59375

2024-11-08 11:34:09,574 - msmodelslim-logger - INFO - use min-max observer:model.layers.3.mlp.gate_proj.quant_input, range_parm:12.3671875

2024-11-08 11:34:09,574 - msmodelslim-logger - INFO - use min-max observer:model.layers.3.mlp.up_proj.quant_input, range_parm:12.3671875

2024-11-08 11:34:09,574 - msmodelslim-logger - INFO - use min-max observer:model.layers.4.self_attn.q_proj.quant_input, range_parm:9.7734375

2024-11-08 11:34:09,575 - msmodelslim-logger - INFO - use min-max observer:model.layers.4.self_attn.k_proj.quant_input, range_parm:9.7734375

2024-11-08 11:34:09,575 - msmodelslim-logger - INFO - use min-max observer:model.layers.4.self_attn.v_proj.quant_input, range_parm:9.7734375

2024-11-08 11:34:09,575 - msmodelslim-logger - INFO - use min-max observer:model.layers.4.self_attn.o_proj.quant_input, range_parm:4.63671875

2024-11-08 11:34:09,575 - msmodelslim-logger - INFO - use min-max observer:model.layers.4.mlp.gate_proj.quant_input, range_parm:5.859375

2024-11-08 11:34:09,575 - msmodelslim-logger - INFO - use min-max observer:model.layers.4.mlp.up_proj.quant_input, range_parm:5.859375

2024-11-08 11:34:09,576 - msmodelslim-logger - INFO - use min-max observer:model.layers.5.self_attn.q_proj.quant_input, range_parm:7.984375

2024-11-08 11:34:09,576 - msmodelslim-logger - INFO - use min-max observer:model.layers.5.self_attn.k_proj.quant_input, range_parm:7.984375

2024-11-08 11:34:09,576 - msmodelslim-logger - INFO - use min-max observer:model.layers.5.self_attn.v_proj.quant_input, range_parm:7.984375

2024-11-08 11:34:09,576 - msmodelslim-logger - INFO - use min-max observer:model.layers.5.self_attn.o_proj.quant_input, range_parm:4.60546875

2024-11-08 11:34:09,577 - msmodelslim-logger - INFO - use min-max observer:model.layers.5.mlp.gate_proj.quant_input, range_parm:8.8671875

2024-11-08 11:34:09,577 - msmodelslim-logger - INFO - use min-max observer:model.layers.5.mlp.up_proj.quant_input, range_parm:8.8671875

2024-11-08 11:34:09,577 - msmodelslim-logger - INFO - use min-max observer:model.layers.6.self_attn.q_proj.quant_input, range_parm:9.1484375

2024-11-08 11:34:09,577 - msmodelslim-logger - INFO - use min-max observer:model.layers.6.self_attn.k_proj.quant_input, range_parm:9.1484375

2024-11-08 11:34:09,577 - msmodelslim-logger - INFO - use min-max observer:model.layers.6.self_attn.v_proj.quant_input, range_parm:9.1484375

2024-11-08 11:34:09,578 - msmodelslim-logger - INFO - use min-max observer:model.layers.6.self_attn.o_proj.quant_input, range_parm:4.4453125

2024-11-08 11:34:09,578 - msmodelslim-logger - INFO - use min-max observer:model.layers.6.mlp.gate_proj.quant_input, range_parm:6.97265625

2024-11-08 11:34:09,578 - msmodelslim-logger - INFO - use min-max observer:model.layers.6.mlp.up_proj.quant_input, range_parm:6.97265625

2024-11-08 11:34:09,578 - msmodelslim-logger - INFO - use min-max observer:model.layers.7.self_attn.q_proj.quant_input, range_parm:7.83203125

2024-11-08 11:34:09,579 - msmodelslim-logger - INFO - use min-max observer:model.layers.7.self_attn.k_proj.quant_input, range_parm:7.83203125

2024-11-08 11:34:09,579 - msmodelslim-logger - INFO - use min-max observer:model.layers.7.self_attn.v_proj.quant_input, range_parm:7.83203125

2024-11-08 11:34:09,579 - msmodelslim-logger - INFO - use min-max observer:model.layers.7.self_attn.o_proj.quant_input, range_parm:5.5390625

2024-11-08 11:34:09,579 - msmodelslim-logger - INFO - use min-max observer:model.layers.7.mlp.gate_proj.quant_input, range_parm:7.8984375

2024-11-08 11:34:09,580 - msmodelslim-logger - INFO - use min-max observer:model.layers.7.mlp.up_proj.quant_input, range_parm:7.8984375

2024-11-08 11:34:09,580 - msmodelslim-logger - INFO - use min-max observer:model.layers.8.self_attn.q_proj.quant_input, range_parm:8.71875

2024-11-08 11:34:09,580 - msmodelslim-logger - INFO - use min-max observer:model.layers.8.self_attn.k_proj.quant_input, range_parm:8.71875

2024-11-08 11:34:09,580 - msmodelslim-logger - INFO - use min-max observer:model.layers.8.self_attn.v_proj.quant_input, range_parm:8.71875

2024-11-08 11:34:09,580 - msmodelslim-logger - INFO - use min-max observer:model.layers.8.self_attn.o_proj.quant_input, range_parm:5.9140625

2024-11-08 11:34:09,581 - msmodelslim-logger - INFO - use min-max observer:model.layers.8.mlp.gate_proj.quant_input, range_parm:9.109375

2024-11-08 11:34:09,581 - msmodelslim-logger - INFO - use min-max observer:model.layers.8.mlp.up_proj.quant_input, range_parm:9.109375

2024-11-08 11:34:09,581 - msmodelslim-logger - INFO - use min-max observer:model.layers.9.self_attn.q_proj.quant_input, range_parm:6.45703125

2024-11-08 11:34:09,581 - msmodelslim-logger - INFO - use min-max observer:model.layers.9.self_attn.k_proj.quant_input, range_parm:6.45703125

2024-11-08 11:34:09,582 - msmodelslim-logger - INFO - use min-max observer:model.layers.9.self_attn.v_proj.quant_input, range_parm:6.45703125

2024-11-08 11:34:09,582 - msmodelslim-logger - INFO - use min-max observer:model.layers.9.self_attn.o_proj.quant_input, range_parm:4.76171875

2024-11-08 11:34:09,582 - msmodelslim-logger - INFO - use min-max observer:model.layers.9.mlp.gate_proj.quant_input, range_parm:6.05859375

2024-11-08 11:34:09,582 - msmodelslim-logger - INFO - use min-max observer:model.layers.9.mlp.up_proj.quant_input, range_parm:6.05859375

2024-11-08 11:34:09,582 - msmodelslim-logger - INFO - use min-max observer:model.layers.10.self_attn.q_proj.quant_input, range_parm:7.23046875

2024-11-08 11:34:09,583 - msmodelslim-logger - INFO - use min-max observer:model.layers.10.self_attn.k_proj.quant_input, range_parm:7.23046875

2024-11-08 11:34:09,583 - msmodelslim-logger - INFO - use min-max observer:model.layers.10.self_attn.v_proj.quant_input, range_parm:7.23046875

2024-11-08 11:34:09,583 - msmodelslim-logger - INFO - use min-max observer:model.layers.10.self_attn.o_proj.quant_input, range_parm:4.83203125

2024-11-08 11:34:09,583 - msmodelslim-logger - INFO - use min-max observer:model.layers.10.mlp.gate_proj.quant_input, range_parm:5.9921875

2024-11-08 11:34:09,584 - msmodelslim-logger - INFO - use min-max observer:model.layers.10.mlp.up_proj.quant_input, range_parm:5.9921875

2024-11-08 11:34:09,584 - msmodelslim-logger - INFO - use min-max observer:model.layers.11.self_attn.q_proj.quant_input, range_parm:9.0859375

2024-11-08 11:34:09,584 - msmodelslim-logger - INFO - use min-max observer:model.layers.11.self_attn.k_proj.quant_input, range_parm:9.0859375

2024-11-08 11:34:09,584 - msmodelslim-logger - INFO - use min-max observer:model.layers.11.self_attn.v_proj.quant_input, range_parm:9.0859375

2024-11-08 11:34:09,584 - msmodelslim-logger - INFO - use min-max observer:model.layers.11.self_attn.o_proj.quant_input, range_parm:4.83984375

2024-11-08 11:34:09,585 - msmodelslim-logger - INFO - use min-max observer:model.layers.11.mlp.gate_proj.quant_input, range_parm:6.1875

2024-11-08 11:34:09,585 - msmodelslim-logger - INFO - use min-max observer:model.layers.11.mlp.up_proj.quant_input, range_parm:6.1875

2024-11-08 11:34:09,585 - msmodelslim-logger - INFO - use min-max observer:model.layers.12.self_attn.q_proj.quant_input, range_parm:8.546875

2024-11-08 11:34:09,585 - msmodelslim-logger - INFO - use min-max observer:model.layers.12.self_attn.k_proj.quant_input, range_parm:8.546875

2024-11-08 11:34:09,586 - msmodelslim-logger - INFO - use min-max observer:model.layers.12.self_attn.v_proj.quant_input, range_parm:8.546875

2024-11-08 11:34:09,586 - msmodelslim-logger - INFO - use min-max observer:model.layers.12.self_attn.o_proj.quant_input, range_parm:5.30859375

2024-11-08 11:34:09,586 - msmodelslim-logger - INFO - use min-max observer:model.layers.12.mlp.gate_proj.quant_input, range_parm:6.6484375

2024-11-08 11:34:09,586 - msmodelslim-logger - INFO - use min-max observer:model.layers.12.mlp.up_proj.quant_input, range_parm:6.6484375

2024-11-08 11:34:09,586 - msmodelslim-logger - INFO - use min-max observer:model.layers.13.self_attn.q_proj.quant_input, range_parm:8.5390625

2024-11-08 11:34:09,587 - msmodelslim-logger - INFO - use min-max observer:model.layers.13.self_attn.k_proj.quant_input, range_parm:8.5390625

2024-11-08 11:34:09,587 - msmodelslim-logger - INFO - use min-max observer:model.layers.13.self_attn.v_proj.quant_input, range_parm:8.5390625

2024-11-08 11:34:09,587 - msmodelslim-logger - INFO - use min-max observer:model.layers.13.self_attn.o_proj.quant_input, range_parm:4.99609375

2024-11-08 11:34:09,587 - msmodelslim-logger - INFO - use min-max observer:model.layers.13.mlp.gate_proj.quant_input, range_parm:7.12890625

2024-11-08 11:34:09,588 - msmodelslim-logger - INFO - use min-max observer:model.layers.13.mlp.up_proj.quant_input, range_parm:7.12890625

2024-11-08 11:34:09,588 - msmodelslim-logger - INFO - use min-max observer:model.layers.14.self_attn.q_proj.quant_input, range_parm:8.2109375

2024-11-08 11:34:09,588 - msmodelslim-logger - INFO - use min-max observer:model.layers.14.self_attn.k_proj.quant_input, range_parm:8.2109375

2024-11-08 11:34:09,588 - msmodelslim-logger - INFO - use min-max observer:model.layers.14.self_attn.v_proj.quant_input, range_parm:8.2109375

2024-11-08 11:34:09,588 - msmodelslim-logger - INFO - use min-max observer:model.layers.14.self_attn.o_proj.quant_input, range_parm:5.5

2024-11-08 11:34:09,589 - msmodelslim-logger - INFO - use min-max observer:model.layers.14.mlp.gate_proj.quant_input, range_parm:6.6328125

2024-11-08 11:34:09,589 - msmodelslim-logger - INFO - use min-max observer:model.layers.14.mlp.up_proj.quant_input, range_parm:6.6328125

2024-11-08 11:34:09,589 - msmodelslim-logger - INFO - use min-max observer:model.layers.15.self_attn.q_proj.quant_input, range_parm:8.265625

2024-11-08 11:34:09,589 - msmodelslim-logger - INFO - use min-max observer:model.layers.15.self_attn.k_proj.quant_input, range_parm:8.265625

2024-11-08 11:34:09,590 - msmodelslim-logger - INFO - use min-max observer:model.layers.15.self_attn.v_proj.quant_input, range_parm:8.265625

2024-11-08 11:34:09,590 - msmodelslim-logger - INFO - use min-max observer:model.layers.15.self_attn.o_proj.quant_input, range_parm:4.41796875

2024-11-08 11:34:09,590 - msmodelslim-logger - INFO - use min-max observer:model.layers.15.mlp.gate_proj.quant_input, range_parm:6.67578125

2024-11-08 11:34:09,590 - msmodelslim-logger - INFO - use min-max observer:model.layers.15.mlp.up_proj.quant_input, range_parm:6.67578125

2024-11-08 11:34:09,590 - msmodelslim-logger - INFO - use min-max observer:model.layers.16.self_attn.q_proj.quant_input, range_parm:9.1171875

2024-11-08 11:34:09,591 - msmodelslim-logger - INFO - use min-max observer:model.layers.16.self_attn.k_proj.quant_input, range_parm:9.1171875

2024-11-08 11:34:09,591 - msmodelslim-logger - INFO - use min-max observer:model.layers.16.self_attn.v_proj.quant_input, range_parm:9.1171875

2024-11-08 11:34:09,591 - msmodelslim-logger - INFO - use min-max observer:model.layers.16.self_attn.o_proj.quant_input, range_parm:4.7890625

2024-11-08 11:34:09,591 - msmodelslim-logger - INFO - use min-max observer:model.layers.16.mlp.gate_proj.quant_input, range_parm:7.5703125

2024-11-08 11:34:09,592 - msmodelslim-logger - INFO - use min-max observer:model.layers.16.mlp.up_proj.quant_input, range_parm:7.5703125

2024-11-08 11:34:09,592 - msmodelslim-logger - INFO - use min-max observer:model.layers.17.self_attn.q_proj.quant_input, range_parm:8.0390625

2024-11-08 11:34:09,592 - msmodelslim-logger - INFO - use min-max observer:model.layers.17.self_attn.k_proj.quant_input, range_parm:8.0390625

2024-11-08 11:34:09,592 - msmodelslim-logger - INFO - use min-max observer:model.layers.17.self_attn.v_proj.quant_input, range_parm:8.0390625

2024-11-08 11:34:09,592 - msmodelslim-logger - INFO - use min-max observer:model.layers.17.self_attn.o_proj.quant_input, range_parm:4.171875

2024-11-08 11:34:09,593 - msmodelslim-logger - INFO - use min-max observer:model.layers.17.mlp.gate_proj.quant_input, range_parm:7.6796875

2024-11-08 11:34:09,593 - msmodelslim-logger - INFO - use min-max observer:model.layers.17.mlp.up_proj.quant_input, range_parm:7.6796875

2024-11-08 11:34:09,593 - msmodelslim-logger - INFO - use min-max observer:model.layers.18.self_attn.q_proj.quant_input, range_parm:8.921875

2024-11-08 11:34:09,594 - msmodelslim-logger - INFO - use min-max observer:model.layers.18.self_attn.k_proj.quant_input, range_parm:8.921875

2024-11-08 11:34:09,594 - msmodelslim-logger - INFO - use min-max observer:model.layers.18.self_attn.v_proj.quant_input, range_parm:8.921875

2024-11-08 11:34:09,594 - msmodelslim-logger - INFO - use min-max observer:model.layers.18.self_attn.o_proj.quant_input, range_parm:4.69921875

2024-11-08 11:34:09,594 - msmodelslim-logger - INFO - use min-max observer:model.layers.18.mlp.gate_proj.quant_input, range_parm:9.03125

2024-11-08 11:34:09,594 - msmodelslim-logger - INFO - use min-max observer:model.layers.18.mlp.up_proj.quant_input, range_parm:9.03125

2024-11-08 11:34:09,595 - msmodelslim-logger - INFO - use min-max observer:model.layers.19.self_attn.q_proj.quant_input, range_parm:7.77734375

2024-11-08 11:34:09,595 - msmodelslim-logger - INFO - use min-max observer:model.layers.19.self_attn.k_proj.quant_input, range_parm:7.77734375

2024-11-08 11:34:09,595 - msmodelslim-logger - INFO - use min-max observer:model.layers.19.self_attn.v_proj.quant_input, range_parm:7.77734375

2024-11-08 11:34:09,595 - msmodelslim-logger - INFO - use min-max observer:model.layers.19.self_attn.o_proj.quant_input, range_parm:5.05078125

2024-11-08 11:34:09,596 - msmodelslim-logger - INFO - use min-max observer:model.layers.19.mlp.gate_proj.quant_input, range_parm:9.1484375

2024-11-08 11:34:09,596 - msmodelslim-logger - INFO - use min-max observer:model.layers.19.mlp.up_proj.quant_input, range_parm:9.1484375

2024-11-08 11:34:09,596 - msmodelslim-logger - INFO - use min-max observer:model.layers.20.self_attn.q_proj.quant_input, range_parm:8.3125

2024-11-08 11:34:09,596 - msmodelslim-logger - INFO - use min-max observer:model.layers.20.self_attn.k_proj.quant_input, range_parm:8.3125

2024-11-08 11:34:09,596 - msmodelslim-logger - INFO - use min-max observer:model.layers.20.self_attn.v_proj.quant_input, range_parm:8.3125

2024-11-08 11:34:09,597 - msmodelslim-logger - INFO - use min-max observer:model.layers.20.self_attn.o_proj.quant_input, range_parm:5.09375

2024-11-08 11:34:09,597 - msmodelslim-logger - INFO - use min-max observer:model.layers.20.mlp.gate_proj.quant_input, range_parm:9.546875

2024-11-08 11:34:09,597 - msmodelslim-logger - INFO - use min-max observer:model.layers.20.mlp.up_proj.quant_input, range_parm:9.546875

2024-11-08 11:34:09,597 - msmodelslim-logger - INFO - use min-max observer:model.layers.21.self_attn.q_proj.quant_input, range_parm:8.34375

2024-11-08 11:34:09,598 - msmodelslim-logger - INFO - use min-max observer:model.layers.21.self_attn.k_proj.quant_input, range_parm:8.34375

2024-11-08 11:34:09,598 - msmodelslim-logger - INFO - use min-max observer:model.layers.21.self_attn.v_proj.quant_input, range_parm:8.34375

2024-11-08 11:34:09,598 - msmodelslim-logger - INFO - use min-max observer:model.layers.21.self_attn.o_proj.quant_input, range_parm:5.03125

2024-11-08 11:34:09,598 - msmodelslim-logger - INFO - use min-max observer:model.layers.21.mlp.gate_proj.quant_input, range_parm:9.5234375

2024-11-08 11:34:09,598 - msmodelslim-logger - INFO - use min-max observer:model.layers.21.mlp.up_proj.quant_input, range_parm:9.5234375

2024-11-08 11:34:09,599 - msmodelslim-logger - INFO - use min-max observer:model.layers.22.self_attn.q_proj.quant_input, range_parm:6.23046875

2024-11-08 11:34:09,599 - msmodelslim-logger - INFO - use min-max observer:model.layers.22.self_attn.k_proj.quant_input, range_parm:6.23046875

2024-11-08 11:34:09,599 - msmodelslim-logger - INFO - use min-max observer:model.layers.22.self_attn.v_proj.quant_input, range_parm:6.23046875

2024-11-08 11:34:09,599 - msmodelslim-logger - INFO - use min-max observer:model.layers.22.self_attn.o_proj.quant_input, range_parm:4.953125

2024-11-08 11:34:09,600 - msmodelslim-logger - INFO - use min-max observer:model.layers.22.mlp.gate_proj.quant_input, range_parm:8.0

2024-11-08 11:34:09,600 - msmodelslim-logger - INFO - use min-max observer:model.layers.22.mlp.up_proj.quant_input, range_parm:8.0

2024-11-08 11:34:09,600 - msmodelslim-logger - INFO - use min-max observer:model.layers.23.self_attn.q_proj.quant_input, range_parm:7.2109375

2024-11-08 11:34:09,600 - msmodelslim-logger - INFO - use min-max observer:model.layers.23.self_attn.k_proj.quant_input, range_parm:7.2109375

2024-11-08 11:34:09,600 - msmodelslim-logger - INFO - use min-max observer:model.layers.23.self_attn.v_proj.quant_input, range_parm:7.2109375

2024-11-08 11:34:09,601 - msmodelslim-logger - INFO - use min-max observer:model.layers.23.self_attn.o_proj.quant_input, range_parm:6.640625

2024-11-08 11:34:09,601 - msmodelslim-logger - INFO - use min-max observer:model.layers.23.mlp.gate_proj.quant_input, range_parm:6.37109375

2024-11-08 11:34:09,601 - msmodelslim-logger - INFO - use min-max observer:model.layers.23.mlp.up_proj.quant_input, range_parm:6.37109375

2024-11-08 11:34:09,601 - msmodelslim-logger - INFO - use min-max observer:model.layers.24.self_attn.q_proj.quant_input, range_parm:8.4921875

2024-11-08 11:34:09,602 - msmodelslim-logger - INFO - use min-max observer:model.layers.24.self_attn.k_proj.quant_input, range_parm:8.4921875

2024-11-08 11:34:09,602 - msmodelslim-logger - INFO - use min-max observer:model.layers.24.self_attn.v_proj.quant_input, range_parm:8.4921875

2024-11-08 11:34:09,602 - msmodelslim-logger - INFO - use min-max observer:model.layers.24.self_attn.o_proj.quant_input, range_parm:8.6953125

2024-11-08 11:34:09,602 - msmodelslim-logger - INFO - use min-max observer:model.layers.24.mlp.gate_proj.quant_input, range_parm:6.83984375

2024-11-08 11:34:09,602 - msmodelslim-logger - INFO - use min-max observer:model.layers.24.mlp.up_proj.quant_input, range_parm:6.83984375

2024-11-08 11:34:09,603 - msmodelslim-logger - INFO - use min-max observer:model.layers.25.self_attn.q_proj.quant_input, range_parm:6.421875

2024-11-08 11:34:09,603 - msmodelslim-logger - INFO - use min-max observer:model.layers.25.self_attn.k_proj.quant_input, range_parm:6.421875

2024-11-08 11:34:09,603 - msmodelslim-logger - INFO - use min-max observer:model.layers.25.self_attn.v_proj.quant_input, range_parm:6.421875

2024-11-08 11:34:09,603 - msmodelslim-logger - INFO - use min-max observer:model.layers.25.self_attn.o_proj.quant_input, range_parm:9.4609375

2024-11-08 11:34:09,604 - msmodelslim-logger - INFO - use min-max observer:model.layers.25.mlp.gate_proj.quant_input, range_parm:6.30078125

2024-11-08 11:34:09,604 - msmodelslim-logger - INFO - use min-max observer:model.layers.25.mlp.up_proj.quant_input, range_parm:6.30078125

2024-11-08 11:34:09,604 - msmodelslim-logger - INFO - use min-max observer:model.layers.26.self_attn.v_proj.quant_input, range_parm:13.421875

2024-11-08 11:34:09,604 - msmodelslim-logger - INFO - use min-max observer:model.layers.26.self_attn.o_proj.quant_input, range_parm:7.1796875

2024-11-08 11:34:09,605 - msmodelslim-logger - INFO - use min-max observer:model.layers.26.mlp.gate_proj.quant_input, range_parm:7.89453125

2024-11-08 11:34:09,605 - msmodelslim-logger - INFO - use min-max observer:model.layers.26.mlp.up_proj.quant_input, range_parm:7.89453125

2024-11-08 11:34:09,605 - msmodelslim-logger - INFO - use min-max observer:model.layers.27.self_attn.q_proj.quant_input, range_parm:13.2890625

2024-11-08 11:34:09,605 - msmodelslim-logger - INFO - use min-max observer:model.layers.27.self_attn.k_proj.quant_input, range_parm:13.2890625

2024-11-08 11:34:09,605 - msmodelslim-logger - INFO - use min-max observer:model.layers.27.self_attn.v_proj.quant_input, range_parm:13.2890625

2024-11-08 11:34:09,606 - msmodelslim-logger - INFO - use min-max observer:model.layers.27.self_attn.o_proj.quant_input, range_parm:5.80078125

2024-11-08 11:34:09,606 - msmodelslim-logger - INFO - use min-max observer:model.layers.27.mlp.gate_proj.quant_input, range_parm:6.515625

2024-11-08 11:34:09,606 - msmodelslim-logger - INFO - use min-max observer:model.layers.27.mlp.up_proj.quant_input, range_parm:6.515625

2024-11-08 11:34:09,606 - msmodelslim-logger - INFO - Wrap Quantizer success!
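
In the observer lines above, `q_proj`, `k_proj` and `v_proj` of a layer report the same `range_parm`, as do `gate_proj` and `up_proj`, which is consistent with those projections sharing one input tensor. A rough sketch of what a min-max observer does for 8-bit activations follows. It assumes symmetric quantization, and that `range_parm` is the largest absolute value seen on that input (msmodelslim's actual definition is not confirmed here). All function names are hypothetical:

```python
def minmax_scale(values, n_bits=8):
    """Symmetric min-max scale: map the largest |x| onto the signed int range."""
    qmax = 2 ** (n_bits - 1) - 1  # 127 for 8 bits
    abs_max = max(abs(v) for v in values)
    return abs_max / qmax


def quantize(values, scale, n_bits=8):
    """Round to the nearest integer step and clamp to [-qmax - 1, qmax]."""
    qmax = 2 ** (n_bits - 1) - 1
    return [max(-qmax - 1, min(qmax, round(v / scale))) for v in values]


def dequantize(qvalues, scale):
    """Map integer codes back to approximate float values."""
    return [q * scale for q in qvalues]


# Made-up activations; the abs-max is chosen to match one logged range_parm.
acts = [-8.5546875, 0.5, 2.25, 8.0]
scale = minmax_scale(acts)
q = quantize(acts, scale)
restored = dequantize(q, scale)
print(scale, q, restored)
```

With a pure min-max range, a single outlier stretches the scale for every other value, which is presumably why the tool also offers outlier suppression and per-layer rollback.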

2024-11-08 11:34:09,606 - msmodelslim-logger - INFO - Calibration start!

0%| | 0/50 [00:00<?, ?it/s]

2024-11-08 11:34:09,633 - msmodelslim-logger - INFO - layer:model.layers.0.self_attn.o_proj.quant_input, range: 8.5546875, Automatically set the drop rate:1.0

2024-11-08 11:34:09,658 - msmodelslim-logger - INFO - layer:model.layers.0.mlp.gate_proj.quant_input, range: 5.7109375, Automatically set the drop rate:1.0

2024-11-08 11:34:09,686 - msmodelslim-logger - INFO - layer:model.layers.0.mlp.up_proj.quant_input, range: 5.7109375, Automatically set the drop rate:1.0

2024-11-08 11:34:09,703 - msmodelslim-logger - INFO - layer:model.layers.1.self_attn.q_proj.quant_input, range: 11.125, Automatically set the drop rate:1.0

2024-11-08 11:34:09,707 - msmodelslim-logger - INFO - layer:model.layers.1.self_attn.k_proj.quant_input, range: 11.125, Automatically set the drop rate:1.0

2024-11-08 11:34:09,709 - msmodelslim-logger - INFO - layer:model.layers.1.self_attn.v_proj.quant_input, range: 11.125, Automatically set the drop rate:1.0

2024-11-08 11:34:09,717 - msmodelslim-logger - INFO - layer:model.layers.1.self_attn.o_proj.quant_input, range: 5.3515625, Automatically set the drop rate:1.0

2024-11-08 11:34:09,742 - msmodelslim-logger - INFO - layer:model.layers.1.mlp.gate_proj.quant_input, range: 7.20703125, Automatically set the drop rate:1.0

2024-11-08 11:34:09,770 - msmodelslim-logger - INFO - layer:model.layers.1.mlp.up_proj.quant_input, range: 7.20703125, Automatically set the drop rate:1.0

2024-11-08 11:34:09,784 - msmodelslim-logger - INFO - layer:model.layers.2.self_attn.q_proj.quant_input, range: 7.8046875, Automatically set the drop rate:1.0

2024-11-08 11:34:09,787 - msmodelslim-logger - INFO - layer:model.layers.2.self_attn.k_proj.quant_input, range: 7.8046875, Automatically set the drop rate:1.0

2024-11-08 11:34:09,790 - msmodelslim-logger - INFO - layer:model.layers.2.self_attn.v_proj.quant_input, range: 7.8046875, Automatically set the drop rate:1.0

2024-11-08 11:34:09,797 - msmodelslim-logger - INFO - layer:model.layers.2.self_attn.o_proj.quant_input, range: 8.21875, Automatically set the drop rate:1.0

2024-11-08 11:34:09,822 - msmodelslim-logger - INFO - layer:model.layers.2.mlp.gate_proj.quant_input, range: 7.140625, Automatically set the drop rate:1.0

2024-11-08 11:34:09,850 - msmodelslim-logger - INFO - layer:model.layers.2.mlp.up_proj.quant_input, range: 7.140625, Automatically set the drop rate:1.0

2024-11-08 11:34:09,864 - msmodelslim-logger - INFO - layer:model.layers.3.self_attn.q_proj.quant_input, range: 8.6484375, Automatically set the drop rate:1.0

2024-11-08 11:34:09,868 - msmodelslim-logger - INFO - layer:model.layers.3.self_attn.k_proj.quant_input, range: 8.6484375, Automatically set the drop rate:1.0

2024-11-08 11:34:09,870 - msmodelslim-logger - INFO - layer:model.layers.3.self_attn.v_proj.quant_input, range: 8.6484375, Automatically set the drop rate:1.0

2024-11-08 11:34:09,878 - msmodelslim-logger - INFO - layer:model.layers.3.self_attn.o_proj.quant_input, range: 4.59375, Automatically set the drop rate:1.0

2024-11-08 11:34:09,903 - msmodelslim-logger - INFO - layer:model.layers.3.mlp.gate_proj.quant_input, range: 12.3671875, Automatically set the drop rate:1.0

2024-11-08 11:34:09,931 - msmodelslim-logger - INFO - layer:model.layers.3.mlp.up_proj.quant_input, range: 12.3671875, Automatically set the drop rate:1.0

2024-11-08 11:34:09,949 - msmodelslim-logger - INFO - layer:model.layers.4.self_attn.q_proj.quant_input, range: 9.7734375, Automatically set the drop rate:1.0

2024-11-08 11:34:09,953 - msmodelslim-logger - INFO - layer:model.layers.4.self_attn.k_proj.quant_input, range: 9.7734375, Automatically set the drop rate:1.0

2024-11-08 11:34:09,956 - msmodelslim-logger - INFO - layer:model.layers.4.self_attn.v_proj.quant_input, range: 9.7734375, Automatically set the drop rate:1.0

2024-11-08 11:34:09,963 - msmodelslim-logger - INFO - layer:model.layers.4.self_attn.o_proj.quant_input, range: 4.63671875, Automatically set the drop rate:1.0

2024-11-08 11:34:09,988 - msmodelslim-logger - INFO - layer:model.layers.4.mlp.gate_proj.quant_input, range: 5.859375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,017 - msmodelslim-logger - INFO - layer:model.layers.4.mlp.up_proj.quant_input, range: 5.859375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,031 - msmodelslim-logger - INFO - layer:model.layers.5.self_attn.q_proj.quant_input, range: 7.984375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,034 - msmodelslim-logger - INFO - layer:model.layers.5.self_attn.k_proj.quant_input, range: 7.984375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,037 - msmodelslim-logger - INFO - layer:model.layers.5.self_attn.v_proj.quant_input, range: 7.984375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,044 - msmodelslim-logger - INFO - layer:model.layers.5.self_attn.o_proj.quant_input, range: 4.60546875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,070 - msmodelslim-logger - INFO - layer:model.layers.5.mlp.gate_proj.quant_input, range: 8.8671875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,098 - msmodelslim-logger - INFO - layer:model.layers.5.mlp.up_proj.quant_input, range: 8.8671875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,112 - msmodelslim-logger - INFO - layer:model.layers.6.self_attn.q_proj.quant_input, range: 9.1484375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,115 - msmodelslim-logger - INFO - layer:model.layers.6.self_attn.k_proj.quant_input, range: 9.1484375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,118 - msmodelslim-logger - INFO - layer:model.layers.6.self_attn.v_proj.quant_input, range: 9.1484375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,126 - msmodelslim-logger - INFO - layer:model.layers.6.self_attn.o_proj.quant_input, range: 4.4453125, Automatically set the drop rate:1.0

2024-11-08 11:34:10,151 - msmodelslim-logger - INFO - layer:model.layers.6.mlp.gate_proj.quant_input, range: 6.97265625, Automatically set the drop rate:1.0

2024-11-08 11:34:10,179 - msmodelslim-logger - INFO - layer:model.layers.6.mlp.up_proj.quant_input, range: 6.97265625, Automatically set the drop rate:1.0

2024-11-08 11:34:10,193 - msmodelslim-logger - INFO - layer:model.layers.7.self_attn.q_proj.quant_input, range: 7.83203125, Automatically set the drop rate:1.0

2024-11-08 11:34:10,197 - msmodelslim-logger - INFO - layer:model.layers.7.self_attn.k_proj.quant_input, range: 7.83203125, Automatically set the drop rate:1.0

2024-11-08 11:34:10,199 - msmodelslim-logger - INFO - layer:model.layers.7.self_attn.v_proj.quant_input, range: 7.83203125, Automatically set the drop rate:1.0

2024-11-08 11:34:10,207 - msmodelslim-logger - INFO - layer:model.layers.7.self_attn.o_proj.quant_input, range: 5.5390625, Automatically set the drop rate:1.0

2024-11-08 11:34:10,232 - msmodelslim-logger - INFO - layer:model.layers.7.mlp.gate_proj.quant_input, range: 7.8984375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,260 - msmodelslim-logger - INFO - layer:model.layers.7.mlp.up_proj.quant_input, range: 7.8984375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,274 - msmodelslim-logger - INFO - layer:model.layers.8.self_attn.q_proj.quant_input, range: 8.71875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,277 - msmodelslim-logger - INFO - layer:model.layers.8.self_attn.k_proj.quant_input, range: 8.71875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,280 - msmodelslim-logger - INFO - layer:model.layers.8.self_attn.v_proj.quant_input, range: 8.71875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,288 - msmodelslim-logger - INFO - layer:model.layers.8.self_attn.o_proj.quant_input, range: 5.9140625, Automatically set the drop rate:1.0

2024-11-08 11:34:10,313 - msmodelslim-logger - INFO - layer:model.layers.8.mlp.gate_proj.quant_input, range: 9.109375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,341 - msmodelslim-logger - INFO - layer:model.layers.8.mlp.up_proj.quant_input, range: 9.109375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,355 - msmodelslim-logger - INFO - layer:model.layers.9.self_attn.q_proj.quant_input, range: 6.45703125, Automatically set the drop rate:1.0

2024-11-08 11:34:10,359 - msmodelslim-logger - INFO - layer:model.layers.9.self_attn.k_proj.quant_input, range: 6.45703125, Automatically set the drop rate:1.0

2024-11-08 11:34:10,362 - msmodelslim-logger - INFO - layer:model.layers.9.self_attn.v_proj.quant_input, range: 6.45703125, Automatically set the drop rate:1.0

2024-11-08 11:34:10,370 - msmodelslim-logger - INFO - layer:model.layers.9.self_attn.o_proj.quant_input, range: 4.76171875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,396 - msmodelslim-logger - INFO - layer:model.layers.9.mlp.gate_proj.quant_input, range: 6.05859375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,424 - msmodelslim-logger - INFO - layer:model.layers.9.mlp.up_proj.quant_input, range: 6.05859375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,438 - msmodelslim-logger - INFO - layer:model.layers.10.self_attn.q_proj.quant_input, range: 7.23046875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,442 - msmodelslim-logger - INFO - layer:model.layers.10.self_attn.k_proj.quant_input, range: 7.23046875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,445 - msmodelslim-logger - INFO - layer:model.layers.10.self_attn.v_proj.quant_input, range: 7.23046875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,453 - msmodelslim-logger - INFO - layer:model.layers.10.self_attn.o_proj.quant_input, range: 4.83203125, Automatically set the drop rate:1.0

2024-11-08 11:34:10,478 - msmodelslim-logger - INFO - layer:model.layers.10.mlp.gate_proj.quant_input, range: 5.9921875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,507 - msmodelslim-logger - INFO - layer:model.layers.10.mlp.up_proj.quant_input, range: 5.9921875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,521 - msmodelslim-logger - INFO - layer:model.layers.11.self_attn.q_proj.quant_input, range: 9.0859375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,525 - msmodelslim-logger - INFO - layer:model.layers.11.self_attn.k_proj.quant_input, range: 9.0859375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,528 - msmodelslim-logger - INFO - layer:model.layers.11.self_attn.v_proj.quant_input, range: 9.0859375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,536 - msmodelslim-logger - INFO - layer:model.layers.11.self_attn.o_proj.quant_input, range: 4.83984375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,561 - msmodelslim-logger - INFO - layer:model.layers.11.mlp.gate_proj.quant_input, range: 6.1875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,590 - msmodelslim-logger - INFO - layer:model.layers.11.mlp.up_proj.quant_input, range: 6.1875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,604 - msmodelslim-logger - INFO - layer:model.layers.12.self_attn.q_proj.quant_input, range: 8.546875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,607 - msmodelslim-logger - INFO - layer:model.layers.12.self_attn.k_proj.quant_input, range: 8.546875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,610 - msmodelslim-logger - INFO - layer:model.layers.12.self_attn.v_proj.quant_input, range: 8.546875, Automatically set the drop rate:1.0

2024-11-08 11:34:10,619 - msmodelslim-logger - INFO - layer:model.layers.12.self_attn.o_proj.quant_input, range: 5.30859375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,644 - msmodelslim-logger - INFO - layer:model.layers.12.mlp.gate_proj.quant_input, range: 6.6484375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,672 - msmodelslim-logger - INFO - layer:model.layers.12.mlp.up_proj.quant_input, range: 6.6484375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,686 - msmodelslim-logger - INFO - layer:model.layers.13.self_attn.q_proj.quant_input, range: 8.5390625, Automatically set the drop rate:1.0

2024-11-08 11:34:10,690 - msmodelslim-logger - INFO - layer:model.layers.13.self_attn.k_proj.quant_input, range: 8.5390625, Automatically set the drop rate:1.0

2024-11-08 11:34:10,693 - msmodelslim-logger - INFO - layer:model.layers.13.self_attn.v_proj.quant_input, range: 8.5390625, Automatically set the drop rate:1.0

2024-11-08 11:34:10,701 - msmodelslim-logger - INFO - layer:model.layers.13.self_attn.o_proj.quant_input, range: 4.99609375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,727 - msmodelslim-logger - INFO - layer:model.layers.13.mlp.gate_proj.quant_input, range: 7.12890625, Automatically set the drop rate:1.0

2024-11-08 11:34:10,755 - msmodelslim-logger - INFO - layer:model.layers.13.mlp.up_proj.quant_input, range: 7.12890625, Automatically set the drop rate:1.0

2024-11-08 11:34:10,769 - msmodelslim-logger - INFO - layer:model.layers.14.self_attn.q_proj.quant_input, range: 8.2109375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,773 - msmodelslim-logger - INFO - layer:model.layers.14.self_attn.k_proj.quant_input, range: 8.2109375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,776 - msmodelslim-logger - INFO - layer:model.layers.14.self_attn.v_proj.quant_input, range: 8.2109375, Automatically set the drop rate:1.0

2024-11-08 11:34:10,784 - msmodelslim-logger - INFO - layer:model.layers.14.self_attn.o_proj.quant_input, range: 5.5, Automatically set the drop rate:1.0

2024-11-08 11:34:10,809 - msmodelslim-logger - INFO - layer:model.layers.14.mlp.gate_proj.quant_input, range: 6.6328125, Automatically set the drop rate:1.0

2024-11-08 11:34:10,838 - msmodelslim-logger - INFO - layer:model.layers.14.mlp.up_proj.quant_input, range: 6.6328125, Automatically set the drop rate:1.0

2024-11-08 11:34:10,852 - msmodelslim-logger - INFO - layer:model.layers.15.self_attn.q_proj.quant_input, range: 8.265625, Automatically set the drop rate:1.0

2024-11-08 11:34:10,855 - msmodelslim-logger - INFO - layer:model.layers.15.self_attn.k_proj.quant_input, range: 8.265625, Automatically set the drop rate:1.0

2024-11-08 11:34:10,858 - msmodelslim-logger - INFO - layer:model.layers.15.self_attn.v_proj.quant_input, range: 8.265625, Automatically set the drop rate:1.0

2024-11-08 11:34:10,866 - msmodelslim-logger - INFO - layer:model.layers.15.self_attn.o_proj.quant_input, range: 4.41796875, Automatically set the drop rate:1.0