Deploying an EFK Log Collection System on Kubernetes

Case Analysis

1. Node planning

Table 1 shows the node plan.

Table 1 Node plan

| IP | Hostname | Kubernetes version |
|---|---|---|
| 192.168.100.3 | master | v1.25.2 |
| 192.168.100.4 | node | v1.25.2 |

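2. Prerequisites

The Kubernetes environment is already installed. Upload the provided package efk-img.tar.gz to the /root directory on the master node and extract it.

3. Introduction to EFK

EFK stands for Elasticsearch, Fluentd, and Kibana. It is a widely used open-source stack for log aggregation and analysis on Kubernetes.

- Elasticsearch is a distributed, scalable search engine commonly used to sift through large volumes of log data. It is a NoSQL database built on Lucene, the search library from Apache. In this stack its main job is to store the logs shipped by Fluentd and to serve searches over them.

- Fluentd is a log shipper. It is an open-source log collection agent that supports many data sources and output formats, and it can forward logs to backends such as Stackdriver, CloudWatch, Elasticsearch, Splunk, and BigQuery. In short, it is a unified layer between the systems that produce log data and the systems that store it.

- Kibana is a UI tool for querying, data visualization, and dashboards. It provides a query interface that lets you browse log data in a web browser, build visualizations for event logs, and run targeted queries to filter information and detect problems. You can build virtually any kind of dashboard with Kibana. The Kibana Query Language (KQL) is used to query Elasticsearch data; here we use Kibana to query the data indexed in Elasticsearch.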

Case Implementation

1. Prepare the base environment

(1) Import the software package

[root@master ~]# nerdctl load -i efk-img.tar.gz

Check the cluster status:

[root@master ~]# kubectl cluster-info

Kubernetes control plane is running at https://apiserver.cluster.local:6443

CoreDNS is running at https://apiserver.cluster.local:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

2. Configure StorageClass dynamic provisioning

(1) Configure the NFS server

[root@master ~]# yum install -y nfs-utils rpcbind

Enable the NFS services at boot and start them now

[root@master ~]# systemctl enable nfs rpcbind --now

Create the shared directory for EFK

[root@master ~]# mkdir -p /root/data/

[root@master ~]# chmod -R 777 /root/data/

Edit the NFS exports file

[root@master ~]# vim /etc/exports

/root/data/ *(rw,sync,no_all_squash,no_root_squash)

Refresh the NFS export list and restart the NFS service

[root@master ~]# exportfs -r

[root@master ~]# systemctl restart nfs

Check the exported NFS shares

[root@master ~]# showmount -e

Export list for master:

/root/data *
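Before moving on, it can help to confirm that the export entry really carries the options the provisioner depends on. The following is only a sketch: `check_export_opts` is a hypothetical helper (not part of nfs-utils) that inspects a line in the `/etc/exports` format used above.

```shell
#!/bin/sh
# check_export_opts LINE OPTION...
# Succeeds only if every OPTION appears in the parenthesised option list of an
# /etc/exports-style LINE. Hypothetical helper for illustration only.
check_export_opts() {
    line=$1
    shift
    # Extract the text between the first "(" and the following ")".
    opts=$(printf '%s\n' "$line" | sed -n 's/^[^(]*(\([^)]*\)).*$/\1/p')
    [ -n "$opts" ] || return 1
    for want in "$@"; do
        # Wrap in commas so "all_squash" does not match "no_all_squash".
        case ",$opts," in
            *",$want,"*) ;;
            *) return 1 ;;
        esac
    done
    return 0
}

entry='/root/data/ *(rw,sync,no_all_squash,no_root_squash)'
if check_export_opts "$entry" rw no_root_squash; then
    echo "export options look right"
else
    echo "export options missing" >&2
fi
```

On the master node the same function could be fed the real entry, for example with `check_export_opts "$(grep '^/root/data' /etc/exports)" rw no_root_squash`.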

(2) Create the ServiceAccount and configure RBAC

Create a directory to hold the EFK configuration files

[root@master ~]# mkdir efk

[root@master ~]# cd efk/

Write the RBAC manifest

[root@master efk]# vim rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: leader-locking-nfs-client-provisioner
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["pods", "namespaces"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: run-nfs-client-provisioner
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nfs-client-provisioner-runner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default

Apply the RBAC manifest

[root@master efk]# kubectl apply -f rbac.yaml
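One way to check that the bindings took effect is to ask the API server what the ServiceAccount is allowed to do. The sketch below assumes `kubectl auth can-i` with impersonation (`--as`) is permitted for the current user; `sa_user` is a hypothetical helper that only builds the user name Kubernetes gives a ServiceAccount.

```shell
#!/bin/sh
# sa_user NAMESPACE NAME
# Prints the user name that Kubernetes assigns to a ServiceAccount.
sa_user() {
    printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

who=$(sa_user default nfs-client-provisioner)
echo "checking permissions for $who"

# Query the cluster only when kubectl is installed; "no" answers and
# connection errors are tolerated so the script always finishes.
if command -v kubectl >/dev/null 2>&1; then
    kubectl --request-timeout=5s auth can-i create persistentvolumes --as "$who" || true
    kubectl --request-timeout=5s auth can-i update endpoints --as "$who" -n default || true
fi
```

If the RBAC objects were applied as written, both queries would be expected to answer `yes`.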

(3) Configure the NFS provisioner

Import the image archive

[root@master ~]# nerdctl -n k8s.io load -i nfs-subdir-external-provisioner-v4.0.2.tar

Write the NFS provisioner manifest

[root@master efk]# vim nfs-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner        # Deployment name
  namespace: default                  # deployed in the default namespace
spec:
  replicas: 1                         # one replica
  selector:
    matchLabels:
      app: nfs-client-provisioner     # select Pods carrying this label
  template:
    metadata:
      labels:
        app: nfs-client-provisioner   # label applied to the Pod
    spec:
      serviceAccountName: nfs-client-provisioner   # run as the nfs-client-provisioner ServiceAccount
      containers:
        - name: nfs-client-provisioner
          image: registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2   # NFS provisioner image
          imagePullPolicy: IfNotPresent
          env:
            - name: PROVISIONER_NAME   # provisioner name, referenced by the StorageClass
              value: nfs.client.com
            - name: NFS_SERVER         # NFS server address
              value: 192.168.100.3     # replace with the actual NFS server IP
            - name: NFS_PATH           # NFS shared directory
              value: /root/data/       # replace with the actual shared directory
          volumeMounts:
            - name: nfs-client-root           # volume name
              mountPath: /persistentvolumes   # mount path inside the container
      volumes:
        - name: nfs-client-root        # NFS volume definition
          nfs:
            server: 192.168.100.3      # replace with the actual NFS server IP
            path: /root/data/          # replace with the actual shared directory

Apply the NFS provisioner manifest

[root@master efk]# kubectl apply -f nfs-deployment.yaml

Check the Pod status

[root@master efk]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-76fdd7948b-2n9wv 1/1 Running 0 2s

(4) Configure the StorageClass for dynamic provisioning

[root@master efk]# vim storageclass.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage   # StorageClass name
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"   # mark this as the default StorageClass
provisioner: nfs.client.com   # must match PROVISIONER_NAME of the NFS provisioner
parameters:
  archiveOnDelete: "false"    # when a PVC is deleted, its directory on the NFS share is deleted rather than archived

Apply the StorageClass manifest

[root@master efk]# kubectl apply -f storageclass.yaml
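Dynamic provisioning can be exercised with a throwaway claim before Elasticsearch depends on it. This is a minimal sketch: the claim name `test-claim`, the file name `test-claim.yaml`, and the `1Mi` size are illustrative assumptions and not part of the original case.

```shell
#!/bin/sh
# Write a small test claim against the managed-nfs-storage StorageClass.
# Name, file name and size are illustrative assumptions.
cat > test-claim.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim
spec:
  storageClassName: managed-nfs-storage
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
EOF

# Apply it only when kubectl is available; tolerate an unreachable cluster.
if command -v kubectl >/dev/null 2>&1; then
    kubectl apply -f test-claim.yaml && kubectl get pvc test-claim \
        || echo "cluster not reachable" >&2
fi
```

If the provisioner is working, the claim should reach the `Bound` state and a new subdirectory should appear under /root/data/ on the NFS server. The claim can then be removed with `kubectl delete -f test-claim.yaml`.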

3. Configure EFK

(1) Configure Elasticsearch

[root@master efk]# vim elasticsearch.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es-cluster
spec:
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      serviceAccountName: nfs-client-provisioner   # use the NFS ServiceAccount for volume provisioning
      initContainers:
        - name: fix-permissions
          image: busybox
          imagePullPolicy: IfNotPresent
          command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
          securityContext:
            privileged: true
          volumeMounts:
            - name: elasticsearch-data
              mountPath: /usr/share/elasticsearch/data
        - name: increase-vm-max-map
          image: busybox
          imagePullPolicy: IfNotPresent
          command: ["sysctl", "-w", "vm.max_map_count=262144"]
          securityContext:
            privileged: true
        - name: increase-fd-ulimit
          image: busybox
          imagePullPolicy: IfNotPresent
          command: ["sh", "-c", "ulimit -n 65536"]
          securityContext:
            privileged: true
      containers:
        - name: elasticsearch
          image: docker.elastic.co/elasticsearch/elasticsearch:7.2.0
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          ports:
            - containerPort: 9200
              name: rest
              protocol: TCP
            - containerPort: 9300
              name: inter-node
              protocol: TCP
          volumeMounts:
            - name: elasticsearch-data
              mountPath: /usr/share/elasticsearch/data
          env:
            - name: cluster.name
              value: k8s-logs
            - name: node.name
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: discovery.seed_hosts
              value: "es-cluster-0.elasticsearch,es-cluster-1.elasticsearch,es-cluster-2.elasticsearch"
            - name: cluster.initial_master_nodes
              value: "es-cluster-0,es-cluster-1,es-cluster-2"
            - name: ES_JAVA_OPTS
              value: "-Xms512m -Xmx512m"
  volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data
        labels:
          app: elasticsearch
        annotations:
          volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"   # StorageClass name; uses the NFS-backed storage
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 100Gi

Apply the Elasticsearch StatefulSet manifest

[root@master efk]# kubectl apply -f elasticsearch.yaml

Check the Pod status

[root@master efk]# kubectl get pod

NAME READY STATUS RESTARTS AGE

es-cluster-0 1/1 Running 0 17s

es-cluster-1 1/1 Running 0 13s

es-cluster-2 1/1 Running 0 7s

nfs-client-provisioner-79865487f9-tnssn 1/1 Running 0 5m29s
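Rather than reading the table by eye, the readiness check can be scripted. The sketch below assumes the default column layout of `kubectl get pod` (NAME, READY, STATUS, ...); `count_ready` is a hypothetical helper name.

```shell
#!/bin/sh
# count_ready PREFIX
# Reads `kubectl get pod` table output on stdin and prints how many Pods whose
# name starts with PREFIX are Running with all of their containers ready.
count_ready() {
    awk -v p="$1" '
        index($1, p) == 1 && $3 == "Running" {
            split($2, r, "/")          # READY column, e.g. "1/1"
            if (r[1] == r[2]) n++
        }
        END { print n + 0 }
    '
}

# Sample input copied from the output shown above.
sample='NAME READY STATUS RESTARTS AGE
es-cluster-0 1/1 Running 0 17s
es-cluster-1 1/1 Running 0 13s
es-cluster-2 1/1 Running 0 7s
nfs-client-provisioner-79865487f9-tnssn 1/1 Running 0 5m29s'
printf '%s\n' "$sample" | count_ready es-cluster-
```

Against a live cluster the same function would be used as `kubectl get pod | count_ready es-cluster-`. The built-in `kubectl rollout status statefulset/es-cluster` is an alternative that blocks until the rollout finishes.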

Write the Service manifest

[root@master efk]# vim elasticsearch-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: elasticsearch
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
    - port: 9200
      name: rest
    - port: 9300
      name: inter-node

Apply the Service manifest

[root@master efk]# kubectl apply -f elasticsearch-svc.yaml

List the Services

[root@master efk]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

elasticsearch ClusterIP None <none> 9200/TCP,9300/TCP 3s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 7d19h

Verify the Elasticsearch deployment. Start port forwarding:

[root@master efk]# kubectl port-forward es-cluster-0 9200:9200

Open a new terminal window so that we can query the REST API:

[root@master efk]# curl -XGET 'localhost:9200/_cluster/health?pretty'

{
  "cluster_name": "k8s-logs",               // the cluster is named "k8s-logs"
  "status": "green",                        // "green" means all primary and replica shards are allocated
  "timed_out": false,                       // the query did not time out
  "number_of_nodes": 3,                     // the cluster has 3 nodes
  "number_of_data_nodes": 3,                // all 3 nodes are data nodes, that is, they store data
  "active_primary_shards": 1,               // 1 active primary shard
  "active_shards": 2,                       // 2 active shards in total (primaries plus replicas)
  "relocating_shards": 0,                   // no shards are being relocated
  "initializing_shards": 0,                 // no shards are initializing
  "unassigned_shards": 0,                   // no unassigned shards; every shard is allocated to a node
  "delayed_unassigned_shards": 0,           // no delayed unassigned shards
  "number_of_pending_tasks": 0,             // no pending tasks
  "number_of_in_flight_fetch": 0,           // no fetch requests in flight
  "task_max_waiting_in_queue_millis": 0,    // no task is waiting in the queue
  "active_shards_percent_as_number": 100.0  // 100% of shards are active
}
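The health check above can be turned into a pass/fail step. This is a rough sketch that pulls the `status` field out with `sed` instead of a real JSON parser, so it assumes the field appears as plain `"status": "<value>"` text; `health_status` and `is_healthy` are hypothetical helper names.

```shell
#!/bin/sh
# health_status
# Reads a _cluster/health JSON document on stdin and prints the "status" value.
health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# is_healthy
# Succeeds for green or yellow, fails for red or unparsable input.
is_healthy() {
    case "$(health_status)" in
        green|yellow) return 0 ;;
        *) return 1 ;;
    esac
}

# Shortened sample document for illustration.
sample='{"cluster_name":"k8s-logs","status":"green","number_of_nodes":3}'
printf '%s\n' "$sample" | health_status
```

With the port-forward still running, it could be combined with the query above as `curl -s 'localhost:9200/_cluster/health?pretty' | is_healthy && echo ok`.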

Test DNS resolution of the headless Service

Create a test Pod

[root@master efk]# vim pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nslookup
spec:
  containers:
    - name: data
      image: busybox
      imagePullPolicy: IfNotPresent
      args:
        - /bin/sh
        - -c
        - sleep 36000

Apply the Pod manifest

[root@master efk]# kubectl apply -f pod.yaml

Test name resolution

[root@master efk]# kubectl exec -it nslookup -- sh

/ # nslookup es-cluster-0.elasticsearch.default.svc.cluster.local

Server: 10.96.0.10

Address: 10.96.0.10:53

Name: es-cluster-0.elasticsearch.default.svc.cluster.local

Address: 10.244.0.18

/ # nslookup elasticsearch.default.svc.cluster.local

Server: 10.96.0.10

Address: 10.96.0.10:53

Name: elasticsearch.default.svc.cluster.local

Address: 10.244.0.18

Name: elasticsearch.default.svc.cluster.local

Address: 10.244.0.20

Name: elasticsearch.default.svc.cluster.local

Address: 10.244.0.21
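The per-Pod names resolved here follow the pattern `<statefulset>-<ordinal>.<service>`, which is also what `discovery.seed_hosts` in elasticsearch.yaml lists by hand. As an illustration, the list can be generated for any replica count; `sts_hosts` is a hypothetical helper name.

```shell
#!/bin/sh
# sts_hosts STATEFULSET SERVICE REPLICAS
# Prints the comma-separated per-Pod DNS names that a headless Service gives
# the Pods of a StatefulSet, in the short form used by discovery.seed_hosts.
sts_hosts() {
    i=0
    out=
    while [ "$i" -lt "$3" ]; do
        # Prepend a comma only when the list already has an entry.
        out="${out:+$out,}$1-$i.$2"
        i=$((i + 1))
    done
    printf '%s\n' "$out"
}

sts_hosts es-cluster elasticsearch 3
# prints: es-cluster-0.elasticsearch,es-cluster-1.elasticsearch,es-cluster-2.elasticsearch
```

If the StatefulSet is scaled, the generated value shows what `discovery.seed_hosts` would need to contain for the new replica count.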

(2) Configure Kibana

[root@master efk]# vim kibana.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  labels:
    app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
        - name: kibana
          image: docker.elastic.co/kibana/kibana:7.2.0
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 5601
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          env:
            - name: ELASTICSEARCH_HOSTS   # Kibana 7.x reads ELASTICSEARCH_HOSTS; ELASTICSEARCH_URL was the 6.x name
              value: http://elasticsearch.default.svc.cluster.local:9200

Apply the Kibana manifest

[root@master efk]# kubectl apply -f kibana.yaml

Check the Pod status

[root@master efk]# kubectl get pod

NAME READY STATUS RESTARTS AGE

es-cluster-0 1/1 Running 0 3m30s

es-cluster-1 1/1 Running 0 3m26s

es-cluster-2 1/1 Running 0 3m20s

kibana-774758dd6c-r5c9s 1/1 Running 0 3s

nfs-client-provisioner-79865487f9-tnssn 1/1 Running 0 8m42s

Write the Service manifest

[root@master efk]# vim kibana-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: kibana
  labels:
    app: kibana
spec:
  type: LoadBalancer
  ports:
    - protocol: TCP
      port: 80
      targetPort: 5601
  selector:
    app: kibana

Apply the Service manifest

[root@master efk]# kubectl apply -f kibana-svc.yaml

List the Services

[root@master efk]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

elasticsearch ClusterIP None <none> 9200/TCP,9300/TCP 8m

kibana LoadBalancer 10.99.16.75 <pending> 80:32361/TCP 32s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 7d19h

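Verify the Kibana deployment. Start port forwarding (replace the Pod name with the Kibana Pod name that kubectl get pod reports in your environment):

[root@master efk]# kubectl port-forward kibana-786f7f49d-4vlqk 5601:5601

Open a new terminal window and request the Kibana page: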

[root@master efk]# curl http://localhost:5601/app/kibana

(3) Configure Fluentd

Create the RBAC manifest

[root@master efk]# vim fluentd-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd
  labels:
    app: fluentd
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentd
  labels:
    app: fluentd
rules:
  - apiGroups: [""]
    resources: ["pods", "namespaces"]
    verbs: ["get", "list", "watch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd
roleRef:
  kind: ClusterRole
  name: fluentd
  apiGroup: rbac.authorization.k8s.io
subjects:
  - kind: ServiceAccount
    name: fluentd
    namespace: default


Apply the Fluentd RBAC manifest

[root@master efk]# kubectl apply -f fluentd-rbac.yaml

Create the ConfigMap manifest

[root@master efk]# vim fluentd-config.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: fluentd-config
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
data:
  kubernetes.conf: |-
    # AUTOMATICALLY GENERATED
    # DO NOT EDIT THIS FILE DIRECTLY, USE /templates/conf/kubernetes.conf.erb
    <match fluent.**>
      @type elasticsearch
      request_timeout 2147483648
      include_tag_key true
      host "#{ENV['FLUENT_ELASTICSEARCH_HOST']}"
      port "#{ENV['FLUENT_ELASTICSEARCH_PORT']}"
      path "#{ENV['FLUENT_ELASTICSEARCH_PATH']}"
      scheme "#{ENV['FLUENT_ELASTICSEARCH_SCHEME'] || 'http'}"
      ssl_verify "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERIFY'] || 'true'}"
      ssl_version "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERSION'] || 'TLSv1'}"
      reload_connections "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_CONNECTIONS'] || 'false'}"
      reconnect_on_error "#{ENV['FLUENT_ELASTICSEARCH_RECONNECT_ON_ERROR'] || 'true'}"
      reload_on_failure "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_ON_FAILURE'] || 'true'}"
      log_es_400_reason "#{ENV['FLUENT_ELASTICSEARCH_LOG_ES_400_REASON'] || 'false'}"
      logstash_prefix "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_PREFIX'] || 'logstash'}"
      logstash_format "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_FORMAT'] || 'true'}"
      index_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_INDEX_NAME'] || 'logstash'}"
      type_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_TYPE_NAME'] || 'fluentd'}"
      enable_ilm true
      #ilm_policy_id watch-history-ilm-policy
      #ilm_policy_overwrite false
      #rollover_index true
      #ilm_policy {}
      template_name delete-after-7days
      template_file /fluentd/etc/index_template.json
      #customize_template {"<<index_prefix>>": "fluentd"}
      <buffer>
        @type file
        path /var/log/fluentd-buffer
        flush_thread_count "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_THREAD_COUNT'] || '8'}"
        flush_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_INTERVAL'] || '5s'}"
        chunk_limit_size "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_CHUNK_LIMIT_SIZE'] || '4M'}"
        queue_limit_length "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_QUEUE_LIMIT_LENGTH'] || '32'}"
        retry_max_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_RETRY_MAX_INTERVAL'] || '30'}"
        retry_forever true
      </buffer>
    </match>
    <source>
      @type tail
      tag kubernetes.*
      path /var/log/containers/*.log
      pos_file /var/log/kube-containers.log.pos
      read_from_head false
      <parse>
        @type multi_format
        <pattern>
          format json
          time_format %Y-%m-%dT%H:%M:%S.%NZ
        </pattern>
        <pattern>
          format regexp
          time_format %Y-%m-%dT%H:%M:%S.%N%:z
          expression /^(?<time>.+)\b(?<stream>stdout|stderr)\b(?<log>.*)$/
        </pattern>
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_minion
      path /var/log/salt/minion
      pos_file /var/log/fluentd-salt.pos
      tag salt
      read_from_head false
      <parse>
        @type regexp
        expression /^(?<time>[^ ]* [^ ,]*)[^\[]*\[[^\]]*\]\[(?<severity>[^ \]]*) *\] (?<message>.*)$/
        time_format %Y-%m-%d %H:%M:%S
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_startupscript
      path /var/log/startupscript.log
      pos_file /var/log/fluentd-startupscript.log.pos
      tag startupscript
      read_from_head false
      <parse>
        @type syslog
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_docker
      path /var/log/docker.log
      pos_file /var/log/fluentd-docker.log.pos
      tag docker
      read_from_head false
      <parse>
        @type regexp
        expression /^time="(?<time>[^)]*)" level=(?<severity>[^ ]*) msg="(?<message>[^"]*)"( err="(?<error>[^"]*)")?( statusCode=(?<status_code>\d+))?/
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_etcd
      path /var/log/etcd.log
      pos_file /var/log/fluentd-etcd.log.pos
      tag etcd
      read_from_head false
      <parse>
        @type none
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_kubelet
      multiline_flush_interval 5s
      path /var/log/kubelet.log
      pos_file /var/log/fluentd-kubelet.log.pos
      tag kubelet
      read_from_head false
      <parse>
        @type kubernetes
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_kube_proxy
      multiline_flush_interval 5s
      path /var/log/kube-proxy.log
      pos_file /var/log/fluentd-kube-proxy.log.pos
      tag kube-proxy
      read_from_head false
      <parse>
        @type kubernetes
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_kube_apiserver
      multiline_flush_interval 5s
      path /var/log/kube-apiserver.log
      pos_file /var/log/fluentd-kube-apiserver.log.pos
      tag kube-apiserver
      read_from_head false
      <parse>
        @type kubernetes
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_kube_controller_manager
      multiline_flush_interval 5s
      path /var/log/kube-controller-manager.log
      pos_file /var/log/fluentd-kube-controller-manager.log.pos
      tag kube-controller-manager
      read_from_head false
      <parse>
        @type kubernetes
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_kube_scheduler
      multiline_flush_interval 5s
      path /var/log/kube-scheduler.log
      pos_file /var/log/fluentd-kube-scheduler.log.pos
      tag kube-scheduler
      read_from_head false
      <parse>
        @type kubernetes
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_rescheduler
      multiline_flush_interval 5s
      path /var/log/rescheduler.log
      pos_file /var/log/fluentd-rescheduler.log.pos
      tag rescheduler
      read_from_head false
      <parse>
        @type kubernetes
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_glbc
      multiline_flush_interval 5s
      path /var/log/glbc.log
      pos_file /var/log/fluentd-glbc.log.pos
      tag glbc
      read_from_head false
      <parse>
        @type kubernetes
      </parse>
    </source>
    <source>
      @type tail
      @id in_tail_cluster_autoscaler
      multiline_flush_interval 5s
      path /var/log/cluster-autoscaler.log
      pos_file /var/log/fluentd-cluster-autoscaler.log.pos
      tag cluster-autoscaler
      read_from_head false
      <parse>
        @type kubernetes
      </parse>
    </source>
    # Example:
    # 2017-02-09T00:15:57.992775796Z AUDIT: id="90c73c7c-97d6-4b65-9461-f94606ff825f" ip="104.132.1.72" method="GET" user="kubecfg" as="<self>" asgroups="<lookup>" namespace="default" uri="/api/v1/namespaces/default/pods"
    # 2017-02-09T00:15:57.993528822Z AUDIT: id="90c73c7c-97d6-4b65-9461-f94606ff825f" response="200"
    <source>
      @type tail
      @id in_tail_kube_apiserver_audit
      multiline_flush_interval 5s
      path /var/log/kubernetes/kube-apiserver-audit.log
      pos_file /var/log/kube-apiserver-audit.log.pos
      tag kube-apiserver-audit
      read_from_head false
      <parse>
        @type multiline
        format_firstline /^\S+\s+AUDIT:/
        # Fields must be explicitly captured by name to be parsed into the record.
        # Fields may not always be present, and order may change, so this just looks
        # for a list of key="\"quoted\" value" pairs separated by spaces.
        # Unknown fields are ignored.
        # Note: We can't separate query/response lines as format1/format2 because
        # they don't always come one after the other for a given query.
        format1 /^(?<time>\S+) AUDIT:(?: (?:id="(?<id>(?:[^"\\]|\\.)*)"|ip="(?<ip>(?:[^"\\]|\\.)*)"|method="(?<method>(?:[^"\\]|\\.)*)"|user="(?<user>(?:[^"\\]|\\.)*)"|groups="(?<groups>(?:[^"\\]|\\.)*)"|as="(?<as>(?:[^"\\]|\\.)*)"|asgroups="(?<asgroups>(?:[^"\\]|\\.)*)"|namespace="(?<namespace>(?:[^"\\]|\\.)*)"|uri="(?<uri>(?:[^"\\]|\\.)*)"|response="(?<response>(?:[^"\\]|\\.)*)"|\w+="(?:[^"\\]|\\.)*"))*/
        time_format %Y-%m-%dT%T.%L%Z
      </parse>
    </source>
    <filter kubernetes.**>
      @type kubernetes_metadata
      @id filter_kube_metadata
    </filter>
  index_template.json: |-
    {
      "index_patterns": [
        "logstash-*"
      ],
      "settings": {
        "index": {
          "lifecycle": {
            "name": "watch-history-ilm-policy",
            "rollover_alias": ""
          }
        }
      }
    }

Apply the ConfigMap manifest

[root@master efk]# kubectl apply -f fluentd-config.yaml
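The container-log source in kubernetes.conf tries a JSON pattern first and then a regexp pattern that splits each line into `time`, `stream`, and `log`. On a containerd node such as this one, the log lines are plain text, so the regexp pattern is the one that applies. The sketch below imitates that split with `awk`. It is a simplified stand-in rather than the Ruby regex itself, it assumes single spaces between fields, and the sample line is made up for illustration; `split_cri_line` is a hypothetical helper name.

```shell
#!/bin/sh
# split_cri_line
# Reads containerd (CRI) log lines on stdin and prints the time, stream and
# log fields of each line on separate lines. Assumes single-space separators.
split_cri_line() {
    awk '{
        ts = $1
        stream = $2
        # Everything after the second space is the log payload; the CRI tag
        # (F or P) stays at the front of it.
        payload = substr($0, length($1) + length($2) + 3)
        printf "time=%s\nstream=%s\nlog=%s\n", ts, stream, payload
    }'
}

# Made-up sample line in the CRI log format.
line='2024-05-01T08:00:00.123456789+08:00 stdout F 0: Hello, are you collecting my data?'
printf '%s\n' "$line" | split_cri_line
```

On a node, a real line from one of the files under /var/log/containers/ could be piped through the same function to see roughly which fields Fluentd would extract.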

Create the Fluentd DaemonSet

[root@master efk]# vim fluentd.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: default
  labels:
    app: fluentd
spec:
  selector:
    matchLabels:
      app: fluentd
  template:
    metadata:
      labels:
        app: fluentd
    spec:
      serviceAccount: fluentd
      serviceAccountName: fluentd
      tolerations:
        - operator: Exists
      containers:
        - name: fluentd
          image: fluent/fluentd-kubernetes-daemonset:v1.4.2-debian-elasticsearch-1.1
          env:
            - name: FLUENT_ELASTICSEARCH_HOST
              value: "elasticsearch.default.svc.cluster.local"
            - name: FLUENT_ELASTICSEARCH_PORT
              value: "9200"
            - name: FLUENT_ELASTICSEARCH_SCHEME
              value: "http"
            - name: FLUENTD_SYSTEMD_CONF
              value: disable
            - name: FLUENT_ELASTICSEARCH_SED_DISABLE
              value: "true"
          resources:
            limits:
              cpu: 300m
              memory: 512Mi
            requests:
              cpu: 50m
              memory: 100Mi
          volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
            - name: config
              mountPath: /fluentd/etc/kubernetes.conf
              subPath: kubernetes.conf
            - name: index-template
              mountPath: /fluentd/etc/index_template.json
              subPath: index_template.json
      terminationGracePeriodSeconds: 30
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
        - name: varlibdockercontainers
          hostPath:
            path: /var/lib/docker/containers
        - name: config
          configMap:
            name: fluentd-config
            items:
              - key: kubernetes.conf
                path: kubernetes.conf
        - name: index-template
          configMap:
            name: fluentd-config
            items:
              - key: index_template.json
                path: index_template.json

Apply the Fluentd manifest

[root@master efk]# kubectl apply -f fluentd.yaml

Check the Pod status

[root@master efk]# kubectl get pod

NAME READY STATUS RESTARTS AGE

es-cluster-0 1/1 Running 0 6h31m

es-cluster-1 1/1 Running 0 6h31m

es-cluster-2 1/1 Running 0 6h31m

fluentd-5dkzc 1/1 Running 0 17m

fluentd-k6h54 1/1 Running 0 17m

kibana-786f7f49d-x6qt8 1/1 Running 0 6h31m

nfs-client-provisioner-79865487f9-c28ql 1/1 Running 0 7h12m

(4) Log in to the Kibana console

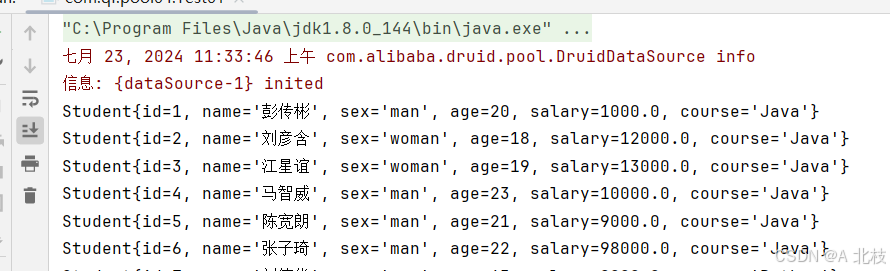

(5) Simulate a data source

[root@master efk]# vim data.yaml

apiVersion: v1
kind: Pod
metadata:
  name: data-logs
spec:
  containers:
    - name: counter
      image: busybox
      imagePullPolicy: IfNotPresent
      args:
        - /bin/sh
        - -c
        - 'i=0; while true; do echo "$i: Hello, are you collecting my data? $(date)"; i=$((i+1)); sleep 5; done'

Apply the data Pod manifest

[root@master efk]# kubectl apply -f data.yaml

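(6) Create an index pattern

Configure the index pattern (for example logstash-*, which matches the logstash prefix used in the Fluentd configuration)

Configure the timestamp field used to filter data

View the data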
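Outside Kibana, the collected logs can also be looked for directly in Elasticsearch. The sketch below assumes Fluentd writes daily indices named `<prefix>-YYYY.MM.DD` in UTC (the usual result of `logstash_format true` with the default `logstash` prefix) and that the Elasticsearch port-forward on localhost:9200 is still running. `logstash_index` is a hypothetical helper name.

```shell
#!/bin/sh
# logstash_index [PREFIX]
# Prints the daily index name expected for today (UTC), assuming the
# <prefix>-YYYY.MM.DD naming produced by logstash_format.
logstash_index() {
    printf '%s-%s\n' "${1:-logstash}" "$(date -u +%Y.%m.%d)"
}

echo "expecting index: $(logstash_index)"

# List matching indices only when curl is present; an unreachable
# Elasticsearch is reported but does not abort the script.
if command -v curl >/dev/null 2>&1; then
    curl -s --max-time 5 'localhost:9200/_cat/indices/logstash-*?v' \
        || echo "Elasticsearch not reachable on localhost:9200" >&2
fi
```

If the expected index appears in the listing with a growing document count, Fluentd is shipping logs, including the lines printed by the data-logs Pod.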