Scraping the recommended poems from Gushiwen (古诗文网)

- 思路分析

- 完整代码

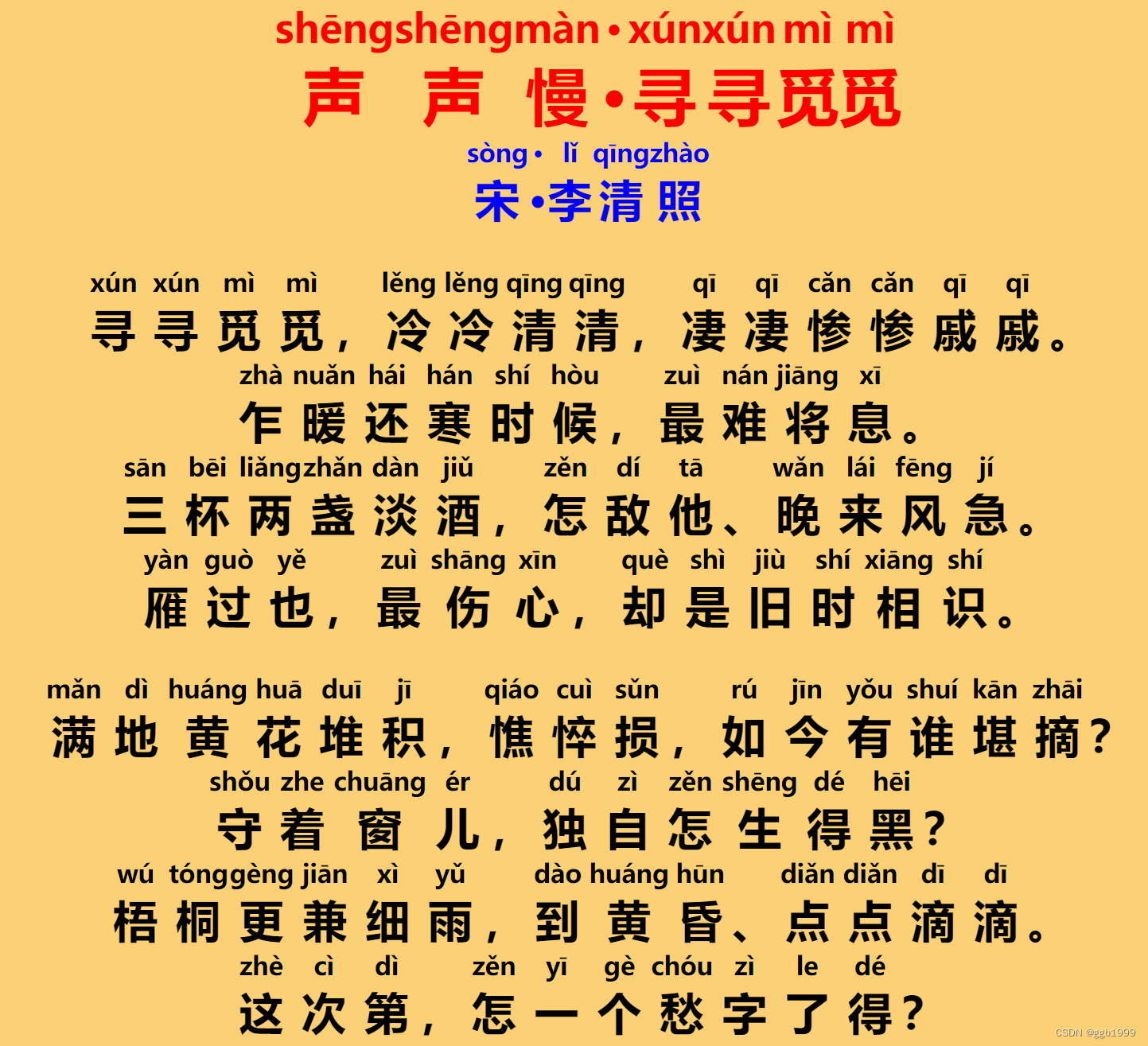

- 结果展示

Approach

The main goal of this exercise is to practice using regular expressions to extract data from a web page.
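Two regex details matter most for this kind of extraction: non-greedy quantifiers (`.*?`) and the `re.DOTALL` flag. The sketch below runs three patterns over a small hypothetical HTML fragment (made up for illustration, not copied from the real site) to show how each one behaves:

```python
import re

# Hypothetical HTML fragment with two titles; the second <b> spans a line break.
html = '<div class="cont"><b>Title A</b></div>\n<div class="cont"><b>Title\nB</b></div>'

# Greedy .* runs on to the LAST </b>, so both titles collapse into one bogus match.
greedy = re.findall(r'<b>(.*)</b>', html, re.DOTALL)

# Non-greedy .*? stops at the FIRST </b> after each <b>, giving one match per title.
lazy = re.findall(r'<b>(.*?)</b>', html, re.DOTALL)

# Without re.DOTALL, '.' does not match '\n', so the multi-line title is missed.
no_dotall = re.findall(r'<b>(.*?)</b>', html)

print(greedy)
print(lazy)
print(no_dotall)
```

This is why every pattern in the full script uses `.*?` together with `re.DOTALL`: the poem blocks span several lines, and each field should stop at its own closing tag.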

The site's recommended poems span 10 pages in total, and the page is selected through the URL. For example, the following URL points to page 1:

https://www.gushiwen.org/default.aspx?page=1

To fetch any other page, just set `page` to the corresponding page number.
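As a minimal sketch of this pagination, assuming the same 10-page count and URL template used in the full script, all page URLs can be generated up front:

```python
# URL template with a %d placeholder for the page number (same as the full script).
BASE_URL = 'https://www.gushiwen.org/default.aspx?page=%d'
END_INDEX = 10  # assumed total number of recommended pages

# range() excludes its upper bound, so END_INDEX + 1 is needed to include page 10.
page_urls = [BASE_URL % page for page in range(1, END_INDEX + 1)]

print(page_urls[0])   # https://www.gushiwen.org/default.aspx?page=1
print(page_urls[-1])  # https://www.gushiwen.org/default.aspx?page=10
```

An alternative is to let requests build the query string through the `params` argument, e.g. `requests.get('https://www.gushiwen.org/default.aspx', params={'page': 1})`.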

The page data is extracted with regular expressions. Since only a small amount of data is scraped, I simply save it to a CSV file.
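The sketch below strings both steps together on a hypothetical, simplified HTML snippet (the real page markup may differ). It reuses the regular expressions from the full script, but swaps pandas for the standard-library `csv` module so it runs without extra dependencies; `parse`, `save_csv`, and `SAMPLE_HTML` are illustrative names, not part of the original script.

```python
import csv
import os
import re
import tempfile

# Hypothetical, simplified markup in the spirit of the script's patterns.
SAMPLE_HTML = '''
<div class="cont"><b>静夜思</b>
<p class="source"><a href="#">唐代</a><a href="#">李白</a></p>
<div class="contson" id="c1">床前明月光，<br />疑是地上霜。</div>
</div>
'''


def parse(text):
    """Extract poems using the same regular expressions as the full script."""
    titles = re.findall(r'<div class="cont">.*?<b>(.*?)</b>', text, re.DOTALL)
    dynasties = re.findall(r'<p class="source">.*?<a.*?>(.*?)</a>', text, re.DOTALL)
    # The author is the text of the second <a> inside <p class="source">.
    authors = re.findall(r'<p class="source">.*?<a.*?>.*?<a.*?>(.*?)</a>', text, re.DOTALL)
    contents = re.findall(r'<div class="contson".*?>(.*?)</div>', text, re.DOTALL)
    # Strip leftover inline tags such as <br /> from the poem body.
    contents = [re.sub(r'<.*?>', '', c).strip() for c in contents]
    return [
        {'title': t, 'author': a, 'dynasty': d, 'content': c}
        for t, d, a, c in zip(titles, dynasties, authors, contents)
    ]


def save_csv(rows, path):
    # 'utf-8-sig' prepends a BOM, commonly used so Excel recognizes the file as
    # UTF-8; it is the same codec as encoding='utf_8_sig' in the pandas version.
    with open(path, 'w', newline='', encoding='utf-8-sig') as f:
        writer = csv.DictWriter(f, fieldnames=['title', 'author', 'dynasty', 'content'])
        writer.writeheader()
        writer.writerows(rows)


rows = parse(SAMPLE_HTML)
out_path = os.path.join(tempfile.mkdtemp(), 'poem.csv')
save_csv(rows, out_path)
print(rows)
```

Note that `zip` silently stops at the shortest list, so if one pattern misses a field on some poem, the remaining rows can end up misaligned; comparing the four list lengths is a cheap sanity check.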

Complete code

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
# @Time: 2020/2/3 13:04
# @Author: Martin
# @File: ancient_poetry.py
# @Software: PyCharm

import os
import re

import pandas as pd
import requests


def main():
    end_index = 10  # the recommended poems span 10 pages
    data = []
    url = 'https://www.gushiwen.org/default.aspx?page=%d'
    for i in range(1, end_index + 1):
        data += parse_page(url % i)
    save(data)


def parse_page(url):
    headers = {
        'Referer': 'https://www.gushiwen.org/default.aspx?page=1',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36',
    }
    r = requests.get(url, headers=headers)
    r.raise_for_status()  # stop on HTTP errors instead of parsing an error page
    text = r.text
    # re.DOTALL lets '.' match newlines, since each poem block spans several lines
    titles = re.findall(r'<div class="cont">.*?<b>(.*?)</b>', text, re.DOTALL)
    dynasties = re.findall(r'<p class="source">.*?<a.*?>(.*?)</a>', text, re.DOTALL)
    # the author is the text of the second <a> inside <p class="source">
    authors = re.findall(r'<p class="source">.*?<a.*?>.*?<a.*?>(.*?)</a>', text, re.DOTALL)
    contents = re.findall(r'<div class="contson".*?>(.*?)</div>', text, re.DOTALL)
    # strip leftover inline tags such as <br /> from the poem body
    poem_contents = [re.sub(r'<.*?>', '', content).strip() for content in contents]
    poem_list = []
    for title, dynasty, author, content in zip(titles, dynasties, authors, poem_contents):
        poem_list.append({
            'title': title,
            'author': author,
            'dynasty': dynasty,
            'content': content,
        })
    print(poem_list)
    return poem_list


def save(data):
    # to_csv does not create missing directories, so create ./result first
    os.makedirs('./result', exist_ok=True)
    # utf_8_sig writes a BOM so the Chinese text displays correctly in Excel
    pd.DataFrame(data).to_csv('./result/poem.csv', index=False, encoding='utf_8_sig')


if __name__ == '__main__':
    main()
```

Results