CV - Computer Vision | ML - Machine Learning | RL - Reinforcement Learning | NLP - Natural Language Processing

Subjects: cs.CV, cs.AI, cs.LG, cs.IR

1. Graph Signal Sampling for Inductive One-Bit Matrix Completion: a Closed-form Solution (ICLR 2023)

Authors: Chao Chen, Haoyu Geng, Gang Zeng, Zhaobing Han, Hua Chai, Xiaokang Yang, Junchi Yan

Paper link: https://arxiv.org/abs/2302.03933v1

Code: https://github.com/cchao0116/GSIMC-ICLR2023

Abstract:

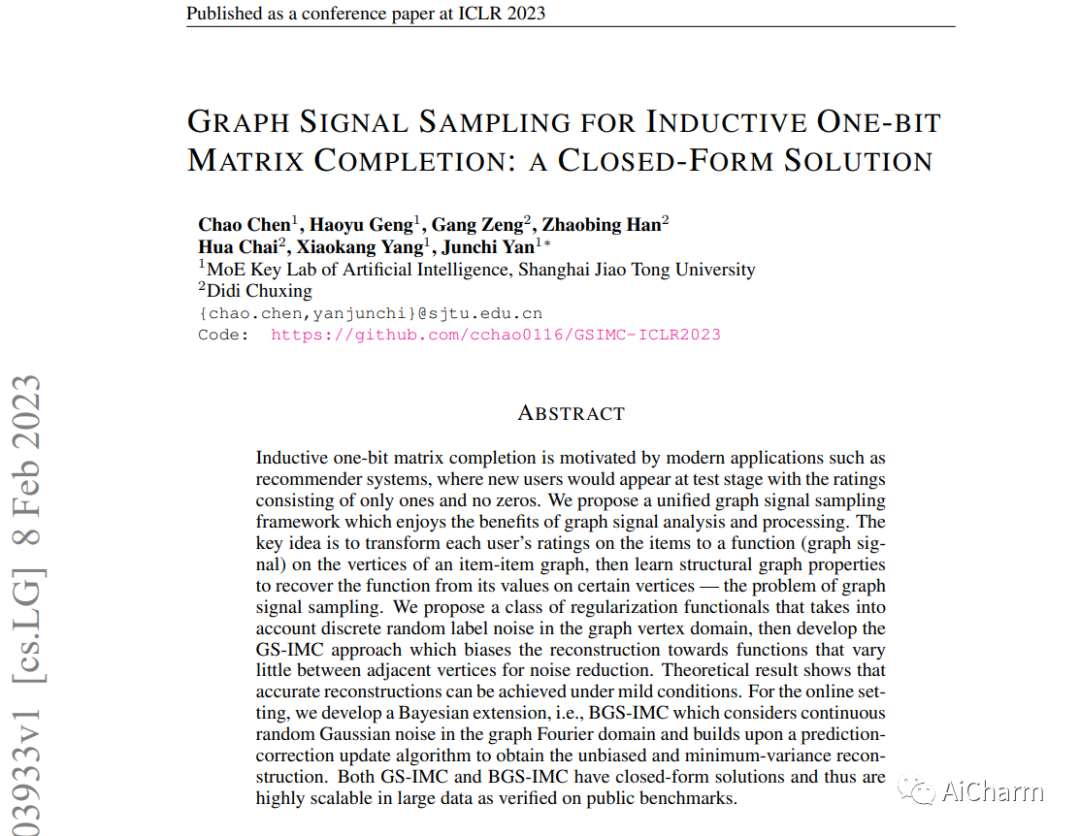

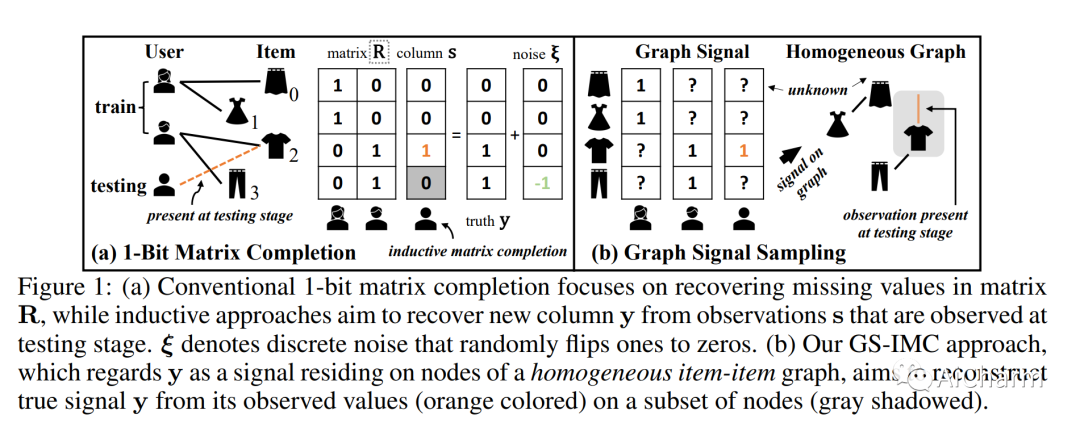

Inductive one-bit matrix completion is motivated by modern applications such as recommender systems, where new users appear at the test stage with ratings consisting of only ones and no zeros. We propose a unified graph signal sampling framework which enjoys the benefits of graph signal analysis and processing. The key idea is to transform each user's ratings on the items into a function (signal) on the vertices of an item-item graph, then learn structural graph properties to recover the function from its values on certain vertices, which is the problem of graph signal sampling. We propose a class of regularization functionals that takes into account discrete random label noise in the graph vertex domain, then develop the GS-IMC approach, which biases the reconstruction towards functions that vary little between adjacent vertices for noise reduction. Theoretical results show that accurate reconstructions can be achieved under mild conditions. For the online setting, we develop a Bayesian extension, BGS-IMC, which considers continuous random Gaussian noise in the graph Fourier domain and builds upon a prediction-correction update algorithm to obtain an unbiased, minimum-variance reconstruction. Both GS-IMC and BGS-IMC have closed-form solutions and are thus highly scalable on large datasets. Experiments show that our methods achieve state-of-the-art performance on public benchmarks.
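To make the idea of "a closed-form reconstruction that favours signals varying little between adjacent vertices" concrete, here is a minimal sketch. It is not the authors' GS-IMC regularizer: it assumes the plain Laplacian (Tikhonov) penalty, minimizing ||f - y||² + γ·fᵀLf, whose solution is f = (I + γL)⁻¹y. The function name `reconstruct_graph_signal`, the parameter `gamma`, and the toy item-item graph are all illustrative assumptions.

```python
import numpy as np


def reconstruct_graph_signal(adjacency, observed, gamma=1.0):
    """Closed-form Laplacian-regularized reconstruction (generic sketch).

    Solves min_f ||f - y||^2 + gamma * f^T L f, i.e. f = (I + gamma * L)^{-1} y,
    where L = D - A is the combinatorial graph Laplacian.
    NOTE: a textbook smoothness prior, assumed here for illustration only.
    """
    adjacency = np.asarray(adjacency, dtype=float)
    observed = np.asarray(observed, dtype=float)
    laplacian = np.diag(adjacency.sum(axis=1)) - adjacency
    system = np.eye(adjacency.shape[0]) + gamma * laplacian
    # Solve the linear system instead of forming an explicit inverse.
    return np.linalg.solve(system, observed)


# Hypothetical item-item graph: items 0-1-2 form a chain, item 3 is isolated.
adjacency = np.array([
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
])
# One-bit ratings of a new user: only item 0 was observed (a single "1").
ratings = np.array([1.0, 0.0, 0.0, 0.0])

scores = reconstruct_graph_signal(adjacency, ratings, gamma=1.0)
print(scores)
```

In this toy case the score mass spreads from the observed item along the chain and decays with graph distance, while the isolated item stays at zero, so unseen items are ranked by their proximity to what the user has interacted with.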

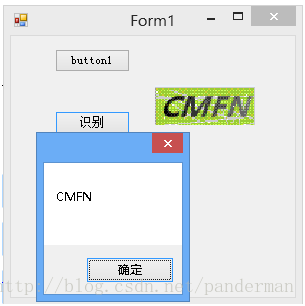

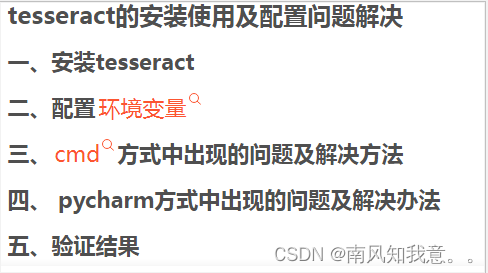

2. Geometric Perception based Efficient Text Recognition

Authors: P. N. Deelaka, D. R. Jayakodi, D. Y. Silva

Paper link: https://arxiv.org/abs/2302.03873v1

Code: https://github.com/ACRA-FL/GeoTRNet

Abstract:

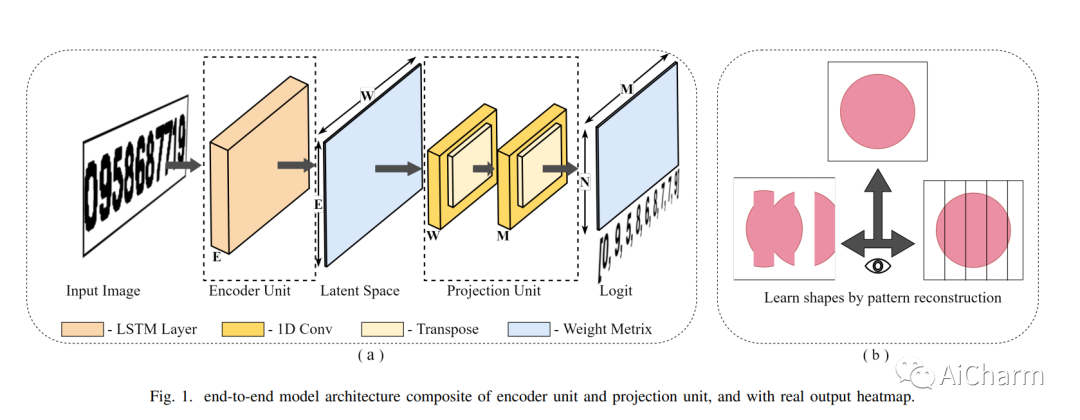

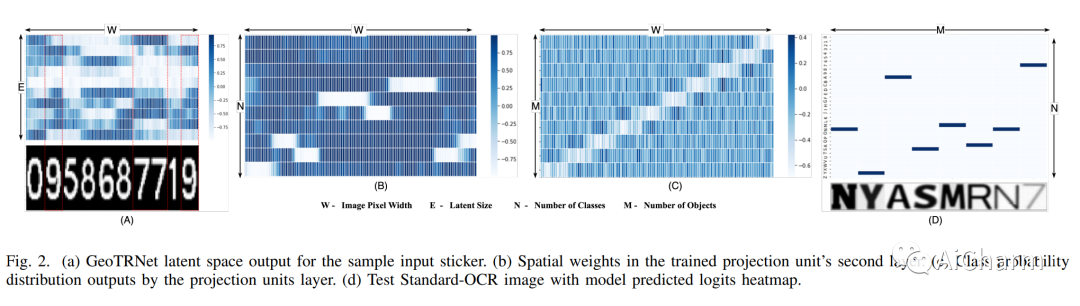

Every Scene Text Recognition (STR) task consists of text localization and text recognition as the prominent sub-tasks. However, in real-world applications with fixed camera positions, such as equipment monitor reading, image-based data entry, and printed document data extraction, the underlying data tends to be regular scene text. Hence, in these tasks, generic, bulky models come with significant disadvantages compared to customized, efficient models in terms of model deployability, data privacy, and model reliability. Therefore, this paper introduces the underlying concepts, theory, implementation, and experimental results for developing models that are highly specialized for the task itself, achieving not only SOTA performance but also minimal model weights, shorter inference time, and high model reliability. We introduce a novel deep learning architecture (GeoTRNet), trained to identify digits in a regular scene image using only the geometrical features present, mimicking human perception in text recognition.

3. Neural Artistic Style Transfer with Conditional Adversaria

Authors: P. N. Deelaka

Paper link: https://arxiv.org/abs/2302.03875v1

Code: https://github.com/nipdep/STGAN

Abstract:

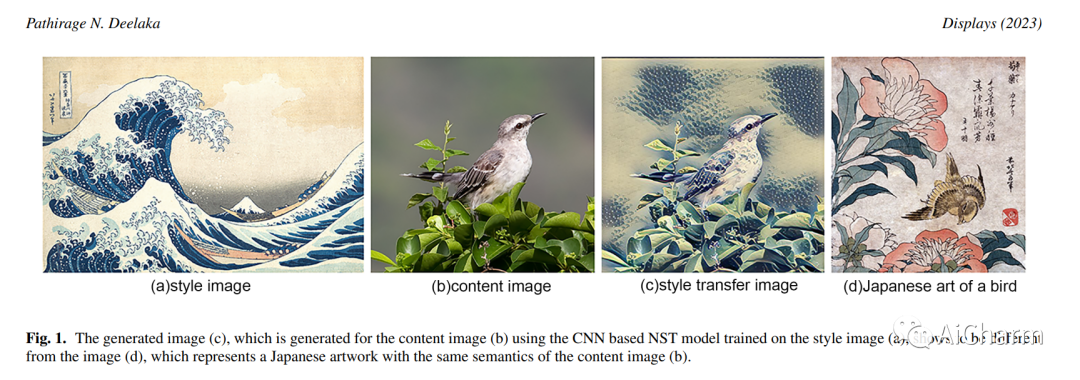

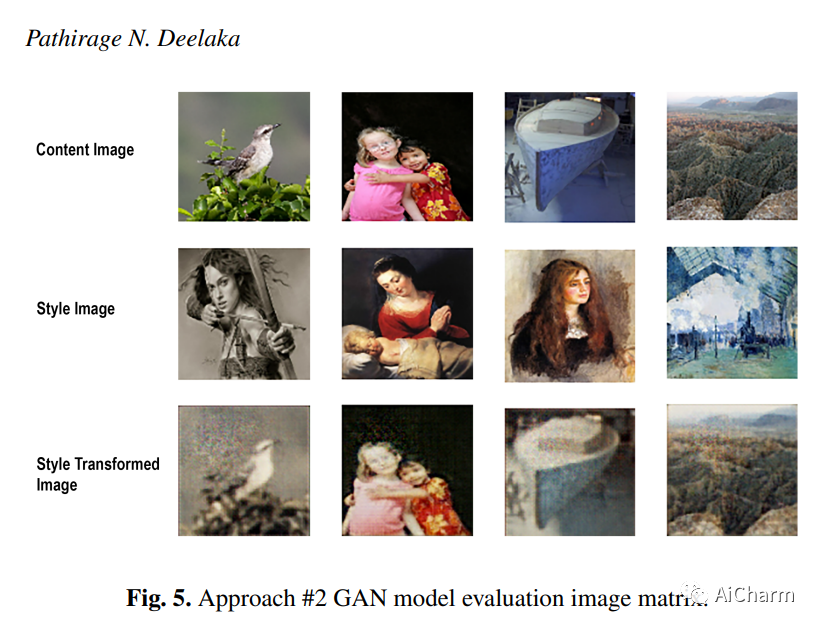

A neural artistic style transformation (NST) model can modify the appearance of a simple image by adding the style of a famous image. Even though the transformed images do not look precisely like artworks by the artist of the respective style images, the generated images are appealing. Generally, a trained NST model specialises in one style, and a single image represents that style. However, generating an image under a new style is a tedious process that includes full model training. In this paper, we present two methods that step toward a style-image-independent neural style transfer model. In other words, the trained model can generate semantically accurate images for any content and style image input pair. Our novel contribution is a unidirectional GAN model that ensures cycle consistency through the model architecture. Furthermore, this leads to a much smaller model size and efficient training and validation phases.
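For readers unfamiliar with the term, cycle consistency asks that mapping an image to the stylised domain and back recovers the original. The sketch below shows the usual loss-based form of that check (mean absolute error between `x` and `backward(forward(x))`), as commonly used with bidirectional GANs. It is only an illustration of the concept: the abstract states that the proposed model enforces this property through its architecture rather than through such a penalty, and the names `cycle_consistency_loss`, `stylise`, `exact_inverse`, and `poor_inverse` are hypothetical stand-ins for real generator networks.

```python
import numpy as np


def cycle_consistency_loss(x, forward, backward):
    """Mean absolute error between x and backward(forward(x))."""
    x = np.asarray(x, dtype=float)
    return float(np.mean(np.abs(backward(forward(x)) - x)))


# Toy stand-ins for generators (assumed for illustration, not real networks).
def stylise(img):
    return 2.0 * img + 1.0


def exact_inverse(img):
    return (img - 1.0) / 2.0


def poor_inverse(img):
    # Forgets to undo the scaling, so the cycle does not close.
    return img - 1.0


image = np.array([[0.0, 0.5], [1.0, 0.25]])

loss_exact = cycle_consistency_loss(image, stylise, exact_inverse)
loss_poor = cycle_consistency_loss(image, stylise, poor_inverse)
print(loss_exact, loss_poor)
```

A loss of zero means the round trip reproduces the input exactly; a larger value signals that content is lost or distorted somewhere in the cycle.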