Table of Contents

- Preface

- I. How Do We Generate Cartoons?

- II. White-box Cartoon Representations

  - 1. Network Architecture

  - 2. Code

- Appendix

Preface


I. How Do We Generate Cartoons?

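Suppose you want to change your QQ avatar to a cartoon version of your own photo. How would you go about it? Take this image of you as an example (size: 256×256; if your image has a different size or is not square, resize it first, for example with OpenCV's `resize` function, to 256×256):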

```python
import cv2
output = cv2.resize(input, (256, 256), interpolation=cv2.INTER_AREA)  # input: an image loaded with cv2.imread
```

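The traditional approach before GANs combines edge detection, a bilateral filter, and downsampling, which produces the image below. As you can see, it is very noisy, and some key lines are missing.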

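A GAN learns continuously from image pairs. For example, given input image 1 and an existing cartoon B, the GAN extracts key features from image 1 and repeatedly generates an image C, stopping once the difference between C and B is small. With many such image pairs, we obtain a model: feed in an image, and it generates the corresponding cartoon. The results of the GAN I used this time (White-box Cartoon) are shown below. They are passable; there is still a sizable gap compared with what big companies achieve, but the structure is simple and easy to learn from.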
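The "keep generating C until it is close to B" loop described above can be sketched in PyTorch. This is a deliberately simplified assumption: a tiny hypothetical generator trained with a plain L1 loss on a single synthetic pair, with no discriminator. It shows only the pair-wise "shrink the difference" part, not a full GAN and not the White-box training recipe.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical tiny generator: image in, image out, values in [-1, 1].
generator = nn.Sequential(
    nn.Conv2d(3, 16, 3, 1, 1), nn.ReLU(),
    nn.Conv2d(16, 3, 3, 1, 1), nn.Tanh())

photo = torch.rand(1, 3, 32, 32) * 2 - 1    # "image 1" (random stand-in)
cartoon = torch.tanh(3 * photo)             # "cartoon B" (synthetic higher-contrast stand-in)

optimizer = torch.optim.Adam(generator.parameters(), lr=1e-2)
l1 = nn.L1Loss()
threshold = 0.05                            # assumed "difference is small enough" level
history = []

for step in range(300):
    fake = generator(photo)                 # "image C"
    loss = l1(fake, cartoon)                # how far C is from B
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    history.append(loss.item())
    if loss.item() < threshold:             # stop once C is close enough to B
        break

print(f"steps: {len(history)}, first loss: {history[0]:.3f}, last loss: {history[-1]:.3f}")
```

A real GAN adds a discriminator whose judgement replaces (or complements) the direct C-versus-B distance, which is what lets methods like White-box Cartoon train without exact pairs.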

II. White-box Cartoon Representations

1. Network Architecture

This is a CVPR 2020 paper; the paper and GitHub links are below:

Paper

GitHub

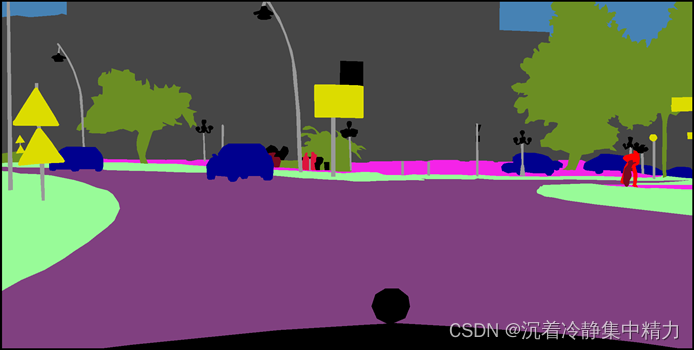

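The highlight of this paper is that it decomposes a cartoon image into three representations (surface, structure, and texture) and learns each of them separately.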
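As an illustration of the texture representation: the paper strips color and brightness so that only high-frequency texture remains, using a randomly weighted grayscale conversion ("random color shift"). The NumPy sketch below assumes each weight is drawn uniformly around the standard luma coefficients (0.299, 0.587, 0.114) and normalized to sum to 1; `random_color_shift` and the `spread` value are my own assumptions, so check the official repository for the exact ranges.

```python
import numpy as np


def random_color_shift(img_rgb, rng, spread=0.1):
    """Convert an HxWx3 RGB float image to one channel with randomly jittered weights.

    Assumption: each weight is drawn uniformly from [base - spread, base + spread]
    around the standard luma coefficients, then the weights are normalized.
    """
    base = np.array([0.299, 0.587, 0.114])
    weights = rng.uniform(base - spread, base + spread)
    gray = img_rgb @ weights / weights.sum()        # weighted sum over the channel axis
    return gray[..., None]                          # keep a channel dimension: HxWx1


rng = np.random.default_rng(0)
img = rng.uniform(-1, 1, size=(64, 64, 3))          # image scaled to [-1, 1]
texture = random_color_shift(img, rng)
print(texture.shape)  # (64, 64, 1)
```

Because the weights change on every call, the model cannot rely on any particular color balance and has to match texture instead.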

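As the figure shows, the structure loss is computed between the generated image and the input image, while the texture and surface losses are computed against existing cartoon images. This idea is genuinely insightful and could be borrowed in other fields: give each aspect of the problem its own targeted objective and solve the parts one at a time.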
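The way these losses are routed can be summarized in a small PyTorch sketch. Everything here is a hypothetical simplification: `surface_rep`, `texture_rep` and `structure_rep` are toy stand-ins (a box blur, a fixed grayscale conversion, and a coarse block average instead of the paper's guided filter, random color shift and superpixels), and plain L1 distances replace the paper's discriminators and VGG-feature losses. It only shows which pair of images each term compares, following the description above.

```python
import torch
import torch.nn.functional as F


def surface_rep(x):
    """Toy stand-in for the guided-filter surface: a 5x5 box blur."""
    return F.avg_pool2d(x, 5, stride=1, padding=2, count_include_pad=False)


def texture_rep(x):
    """Toy stand-in for random color shift: a fixed grayscale conversion."""
    w = torch.tensor([0.299, 0.587, 0.114]).view(1, 3, 1, 1)
    return (x * w).sum(dim=1, keepdim=True)


def structure_rep(x, block=8):
    """Toy stand-in for superpixel coloring: average over coarse blocks, then upsample."""
    coarse = F.avg_pool2d(x, block)
    return F.interpolate(coarse, scale_factor=block, mode="nearest")


def routed_losses(generated, photo, cartoon, weights=(1.0, 1.0, 1.0)):
    """Return (total, parts); the weights are hypothetical, not the paper's values."""
    parts = {
        "surface": F.l1_loss(surface_rep(generated), surface_rep(cartoon)),     # vs cartoon
        "texture": F.l1_loss(texture_rep(generated), texture_rep(cartoon)),     # vs cartoon
        "structure": F.l1_loss(structure_rep(generated), structure_rep(photo)),  # vs input
    }
    total = sum(w * v for w, v in zip(weights, parts.values()))
    return total, parts


torch.manual_seed(0)
photo = torch.rand(1, 3, 32, 32) * 2 - 1
cartoon = torch.rand(1, 3, 32, 32) * 2 - 1
total, parts = routed_losses(photo.clone(), photo, cartoon)
print({k: round(v.item(), 4) for k, v in parts.items()})  # structure term is 0 here
```

Feeding the input photo itself as the "generated" image zeroes the structure term but not the others, which makes the routing easy to see.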

2. Code

The code below is adapted from the GitHub repository; input images should be at most 720×720.
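Besides the 720×720 upper limit, the generator's four stride-2 `DownBlock`s and four ×2 `UpBlock`s impose a shape constraint: each decoder output is added to an encoder feature map, so the two must have the same size. The pure-Python sketch below traces one side length through that path; `skip_sizes_match` is a hypothetical helper written for illustration, not part of the original code.

```python
def skip_sizes_match(n, depth=4):
    """Check whether side length n survives the generator's down/up path.

    Each DownBlock starts with Conv2d(kernel=3, stride=2, padding=1).
    Each UpBlock doubles the size, and its output is added to the matching encoder map.
    """
    encoder = []
    size = n
    for _ in range(depth):
        size = (size + 2 * 1 - 3) // 2 + 1   # Conv2d output size with k=3, s=2, p=1
        encoder.append(size)
    # up1 (from down4) must match down3, up2 must match down2, up3 must match down1.
    for deeper, shallower in zip(encoder[:0:-1], encoder[-2::-1]):
        if deeper * 2 != shallower:
            return False
    return True


for side in (256, 720, 712):
    print(side, skip_sizes_match(side))
# 256 True
# 720 True
# 712 False
```

Any multiple of 16 always passes (720 = 16 × 45), while a size such as 712 fails at the first skip connection with a shape-mismatch error.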

The modified code is as follows:

```python
import cv2
import numpy as np
import torch
import torch.nn as nn


class ResBlock(nn.Module):
    """Residual block: two 3x3 convs with BatchNorm plus an identity shortcut."""

    def __init__(self, num_channel):
        super(ResBlock, self).__init__()
        self.conv_layer = nn.Sequential(
            nn.Conv2d(num_channel, num_channel, 3, 1, 1),
            nn.BatchNorm2d(num_channel),
            nn.ReLU(inplace=True),
            nn.Conv2d(num_channel, num_channel, 3, 1, 1),
            nn.BatchNorm2d(num_channel))
        self.activation = nn.ReLU(inplace=True)

    def forward(self, inputs):
        output = self.conv_layer(inputs)
        return self.activation(output + inputs)


class DownBlock(nn.Module):
    """Halves the spatial size with a stride-2 conv, then refines with a 3x3 conv."""

    def __init__(self, in_channel, out_channel):
        super(DownBlock, self).__init__()
        self.conv_layer = nn.Sequential(
            nn.Conv2d(in_channel, out_channel, 3, 2, 1),
            nn.BatchNorm2d(out_channel),
            nn.ReLU(inplace=True),
            nn.Conv2d(out_channel, out_channel, 3, 1, 1),
            nn.BatchNorm2d(out_channel),
            nn.ReLU(inplace=True))

    def forward(self, inputs):
        return self.conv_layer(inputs)


class UpBlock(nn.Module):
    """Doubles the spatial size; the last block outputs 3 channels through tanh."""

    def __init__(self, in_channel, out_channel, is_last=False):
        super(UpBlock, self).__init__()
        self.is_last = is_last
        self.conv_layer = nn.Sequential(
            nn.Conv2d(in_channel, in_channel, 3, 1, 1),
            nn.BatchNorm2d(in_channel),
            nn.ReLU(inplace=True),
            nn.Upsample(scale_factor=2),
            nn.Conv2d(in_channel, out_channel, 3, 1, 1))
        self.act = nn.Sequential(
            nn.BatchNorm2d(out_channel),
            nn.ReLU(inplace=True))
        self.last_act = nn.Tanh()

    def forward(self, inputs):
        output = self.conv_layer(inputs)
        return self.last_act(output) if self.is_last else self.act(output)


class SimpleGenerator(nn.Module):
    """U-Net-style encoder/decoder; decoder inputs are summed with encoder features."""

    def __init__(self, num_channel=32, num_blocks=4):
        super(SimpleGenerator, self).__init__()
        self.down1 = DownBlock(3, num_channel)
        self.down2 = DownBlock(num_channel, num_channel * 2)
        self.down3 = DownBlock(num_channel * 2, num_channel * 3)
        self.down4 = DownBlock(num_channel * 3, num_channel * 4)
        # Build independent blocks: `[ResBlock(...)] * num_blocks` would repeat
        # one module (with shared weights) num_blocks times.
        res_blocks = [ResBlock(num_channel * 4) for _ in range(num_blocks)]
        self.res_blocks = nn.Sequential(*res_blocks)
        self.up1 = UpBlock(num_channel * 4, num_channel * 3)
        self.up2 = UpBlock(num_channel * 3, num_channel * 2)
        self.up3 = UpBlock(num_channel * 2, num_channel)
        self.up4 = UpBlock(num_channel, 3, is_last=True)

    def forward(self, inputs):
        down1 = self.down1(inputs)
        down2 = self.down2(down1)
        down3 = self.down3(down2)
        down4 = self.down4(down3)
        down4 = self.res_blocks(down4)
        up1 = self.up1(down4)
        up2 = self.up2(up1 + down3)
        up3 = self.up3(up2 + down2)
        up4 = self.up4(up3 + down1)
        return up4


# Load the pretrained weights on CPU; eval() makes BatchNorm use its running statistics.
weight = torch.load('weight.pth', map_location='cpu')
model = SimpleGenerator()
model.load_state_dict(weight)
model.eval()

img = cv2.imread('input.jpg')                          # HxWx3, uint8, BGR
if img is None:
    raise FileNotFoundError('input.jpg')
image = img / 127.5 - 1                                # [0, 255] -> [-1, 1]
image = image.transpose(2, 0, 1)                       # HWC -> CHW
image = torch.from_numpy(image).float().unsqueeze(0)   # add a batch dimension

with torch.no_grad():                                  # inference only, no gradients
    output = model(image)

output = output.squeeze(0).numpy().transpose(1, 2, 0)  # CHW -> HWC
output = (output + 1) * 127.5                          # [-1, 1] -> [0, 255]
output = np.clip(output, 0, 255).astype(np.uint8)
cv2.imwrite('output.jpg', output)
```

Model link

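Appendix

If you are interested in applications like this, here is also a CVPR 2021 paper: Encoding in Style: a StyleGAN Encoder for Image-to-Image Translation

GitHub

Its capabilities are shown in the figures below (note that some of them only work well on images similar to the training datasets).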
