文章目录

- 一:安装calico:

- 1.1:weget安装Colico网络通信插件:

- 1.2:修改calico.yaml网卡相关配置:

- 1.2.1:查看本机ip 网卡相关信息:

- 1.2.2:修改calico.yaml网卡interface相关信息

- 1.3:kubectl apply -f calico.yaml 生成calico pod 对象:

- 1.3.1:异常日志抛出:

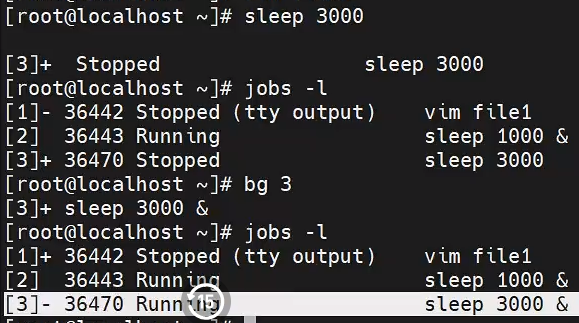

- 1.3.2:场景一:执行K8S admin config配置文件替换相关操作:

- 1.3.2:场景二:执行K8S admin config配置文件替换相关操作:

- 二:安装完成calico pod:解决没用正常运行问题:

- 2.1:查看calico pod 运行状态:

- 2.2:查看init:error calico pod 异常节点信息:执行 kubectl describe pod podcode

- 2.3:可以试试重新下载coredns image 和 执行docker tag coredns相关命令:

- 2.4:再次查看coredns和calico pods启动信息:

- 2.4.1:执行命令kubectl get pod -A.查看coredns和calico pods启动信息:

- 2.5:查看异常calico-node pod 日志:

- 2.5.1:master:命令: kubectl logs -f calico-node-cwpt8 -n kube-system:

- 2.5.2:master:查看异常日志

- 2.5.3:master:telnet 异常信息ip:port 地址加端口:

- 2.5.3.1:安装telnet插件:

- 2.5.3.2:telnet 异常信息ip:port 地址加端口: telnet 192.168.56.102 10250

- 2.5.3.3:开放路由不通的机器端口:10250

- 2.5.3.4:成功: telnet 192.168.56.102 10250

- 2.6:master:再次查看异常calico-node pod 日志:还是不行

- 2.7:master:查看coredns 异常日志:显示和从机器网络有关

- 2.8:cluster:查看coredns 异常日志:显示和从机器网络有关

- 2.8.1:cluster:查看异常日志:journalctl -f -u kubelet:

- 2.8.1.1重点:cni相关配置找不到:"Unable to update cni config" err="no networks found in /etc/cni/net.d"

- 2.8.2:master:查看/etc/cni/net.d配置信息:

- 2.8.3:拷贝到cluster从master:/etc/cni/net.d配置信息

- 2.9:重启kubelet查看各nodes节点状态

- 三:后续问题:

1: Install Calico

1.1: Download the Calico network plugin manifest with wget

Run: wget --no-check-certificate https://projectcalico.docs.tigera.io/archive/v3.25/manifests/calico.yaml

[root@vboxnode3ccccccttttttchenyang kubernetes]# wget --no-check-certificate https://projectcalico.docs.tigera.io/archive/v3.25/manifests/calico.yaml

--2023-05-03 02:23:02-- https://projectcalico.docs.tigera.io/archive/v3.25/manifests/calico.yaml

Resolving projectcalico.docs.tigera.io (projectcalico.docs.tigera.io)... 13.228.199.255, 18.139.194.139, 2406:da18:880:3800::c8, ...

Connecting to projectcalico.docs.tigera.io (projectcalico.docs.tigera.io)|13.228.199.255|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 238089 (233K) [text/yaml]

Saving to: ‘calico.yaml’

100%[=====================================================================================>] 238,089 392KB/s in 0.6s

2023-05-03 02:23:03 (392 KB/s) - ‘calico.yaml’ saved [238089/238089]

1.2:修改calico.yaml网卡相关配置:

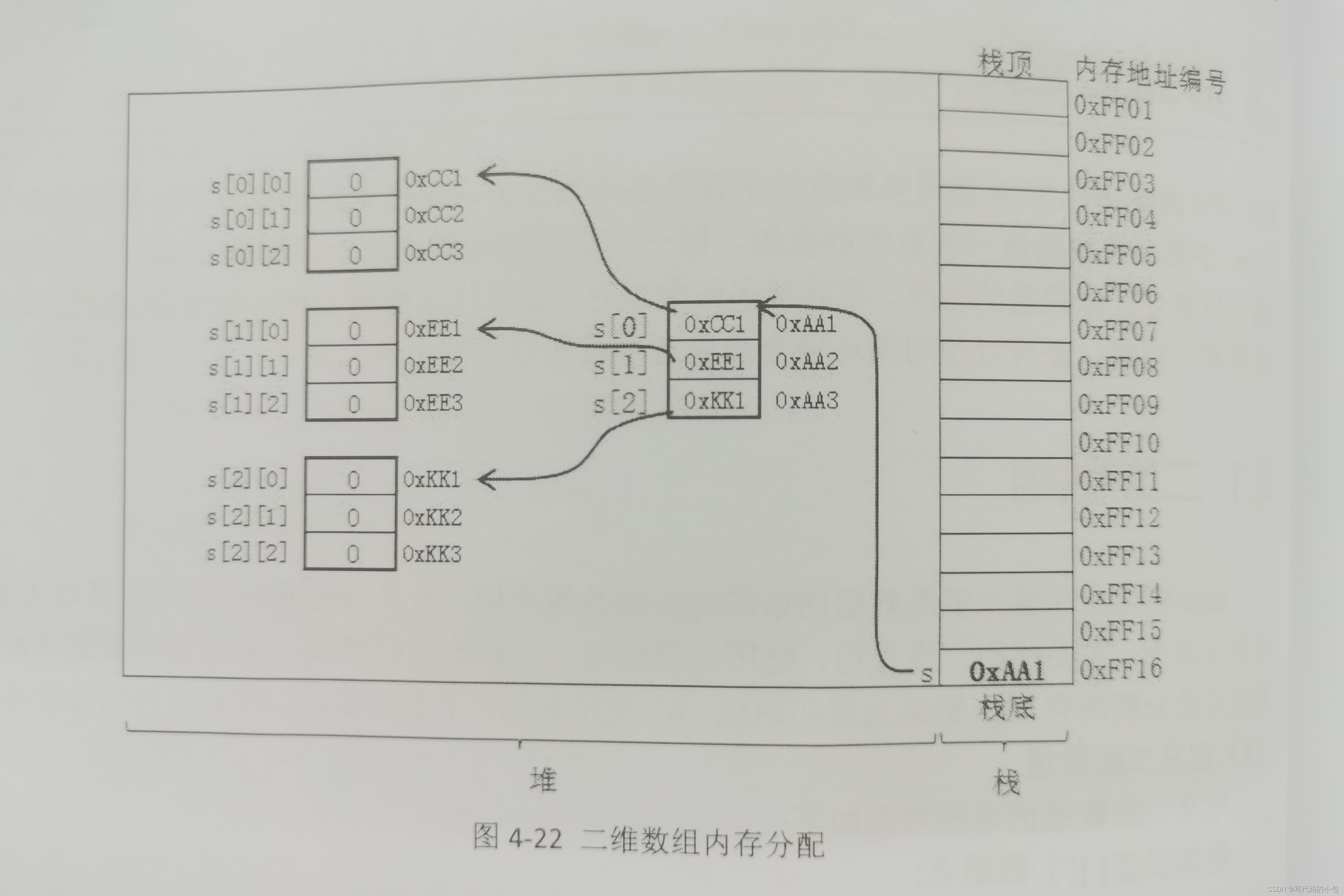

1.2.1:查看本机ip 网卡相关信息:

[root@vboxnode3ccccccttttttchenyang ~]# ip addr

    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 08:00:27:84:1b:f1 brd ff:ff:ff:ff:ff:ff
        inet 192.168.56.103/24 brd 192.168.56.255 scope global noprefixroute dynamic enp0s3
           valid_lft 409sec preferred_lft 409sec
        inet6 fe80::2f24:1558:442c:89f0/64 scope link tentative noprefixroute dadfailed
           valid_lft forever preferred_lft forever
        inet6 fe80::643c:80ac:6748:61cd/64 scope link noprefixroute
           valid_lft forever preferred_lft forever
    3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 08:00:27:61:45:2b brd ff:ff:ff:ff:ff:ff
        inet 10.0.3.15/24 brd 10.0.3.255 scope global noprefixroute dynamic enp0s8
           valid_lft 85662sec preferred_lft 85662sec
        inet6 fe80::62a5:e7dc:430f:3cf6/64 scope link noprefixroute
           valid_lft forever preferred_lft forever
    4: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:b3:3c:9f:26 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
           valid_lft forever preferred_lft forever
        inet6 fe80::42:b3ff:fe3c:9f26/64 scope link
           valid_lft forever preferred_lft forever
    6: vethb3a646a@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
        link/ether 7e:67:28:1f:c9:1c brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet6 fe80::7c67:28ff:fe1f:c91c/64 scope link
           valid_lft forever preferred_lft forever
    8: veth87a3698@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
        link/ether de:5c:0b:87:e1:9c brd ff:ff:ff:ff:ff:ff link-netnsid 1
        inet6 fe80::dc5c:bff:fe87:e19c/64 scope link
           valid_lft forever preferred_lft forever

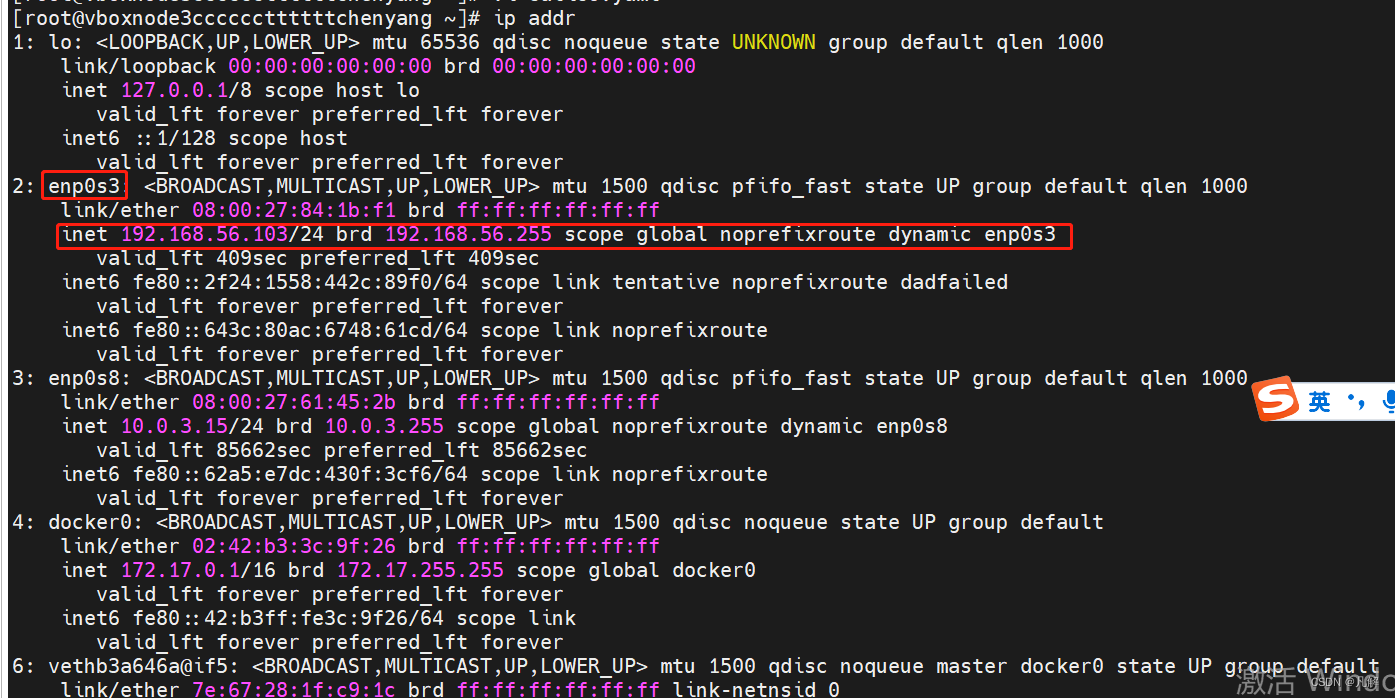

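1.2.2: Set the interface in calico.yaml

Add IP_AUTODETECTION_METHOD next to CLUSTER_TYPE in the calico-node container's environment, pointing at the interface that carries the node IP (enp0s3, 192.168.56.103):

    # Cluster type to identify the deployment type
    - name: CLUSTER_TYPE
      value: "k8s,bgp"
    - name: IP_AUTODETECTION_METHOD
      value: "interface=enp0s3"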
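The interface name for IP_AUTODETECTION_METHOD can also be looked up from the node IP rather than read off the ip addr listing by eye. A minimal sketch, assuming the one-line-per-address format printed by `ip -o -4 addr show`; the function name iface_for_ip is made up for this example:

```shell
# Print the interface that carries a given IPv4 address.
# stdin: output of `ip -o -4 addr show` (one address per line)
# $1:    the IPv4 address to look for, without the prefix length
# iface_for_ip is a hypothetical helper name, not part of any tool.
iface_for_ip() {
  awk -v ip="$1" '{ split($4, a, "/"); if (a[1] == ip) print $2 }'
}

# Usage on the node itself:
#   ip -o -4 addr show | iface_for_ip 192.168.56.103
```

With the addresses listed in 1.2.1, looking up 192.168.56.103 gives enp0s3, the value used in interface=enp0s3.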

1.3:kubectl apply -f calico.yaml 生成calico pod 对象:

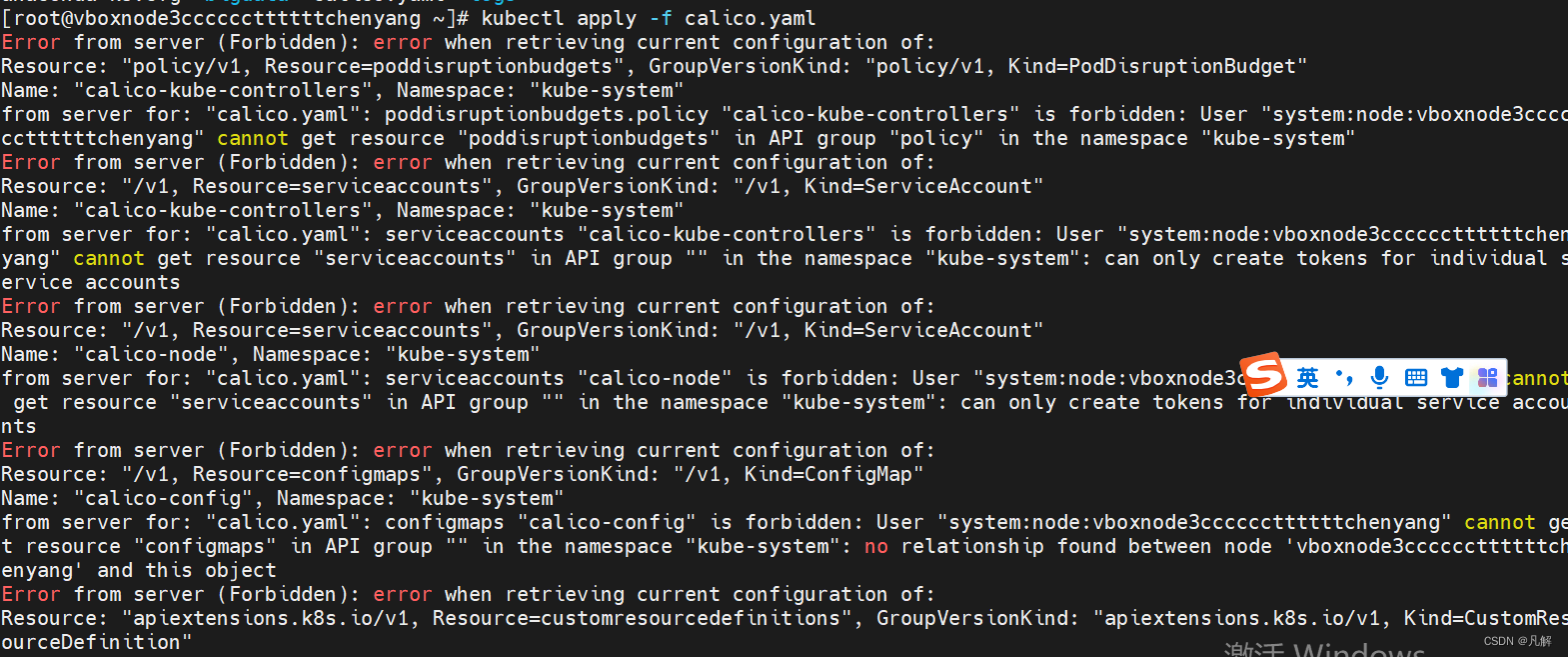

1.3.1:异常日志抛出:

[root@vboxnode3ccccccttttttchenyang ~]# kubectl apply -f calico.yaml

Error from server (Forbidden): error when retrieving current configuration of:

Resource: "policy/v1, Resource=poddisruptionbudgets", GroupVersionKind: "policy/v1, Kind=PodDisruptionBudget"

Name: "calico-kube-controllers", Namespace: "kube-system"

from server for: "calico.yaml": poddisruptionbudgets.policy "calico-kube-controllers" is forbidden: User "system:node:vboxnode3ccccccttttttchenyang" cannot get resource "poddisruptionbudgets" in API group "policy" in the namespace "kube-system"

Error from server (Forbidden): error when retrieving current configuration of:

Resource: "/v1, Resource=serviceaccounts", GroupVersionKind: "/v1, Kind=ServiceAccount"

Name: "calico-kube-controllers", Namespace: "kube-system"

from server for: "calico.yaml": serviceaccounts "calico-kube-controllers" is forbidden: User "system:node:vboxnode3ccccccttttttchenyang" cannot get resource "serviceaccounts" in API group "" in the namespace "kube-system": can only create tokens for individual service accounts

Error from server (Forbidden): error when retrieving current configuration of:

Resource: "/v1, Resource=serviceaccounts", GroupVersionKind: "/v1, Kind=ServiceAccount"

Name: "calico-node", Namespace: "kube-system"

from server for: "calico.yaml": serviceaccounts "calico-node" is forbidden: User "system:node:vboxnode3ccccccttttttchenyang" cannot get resource "serviceaccounts" in API group "" in the namespace "kube-system": can only create tokens for individual service accounts

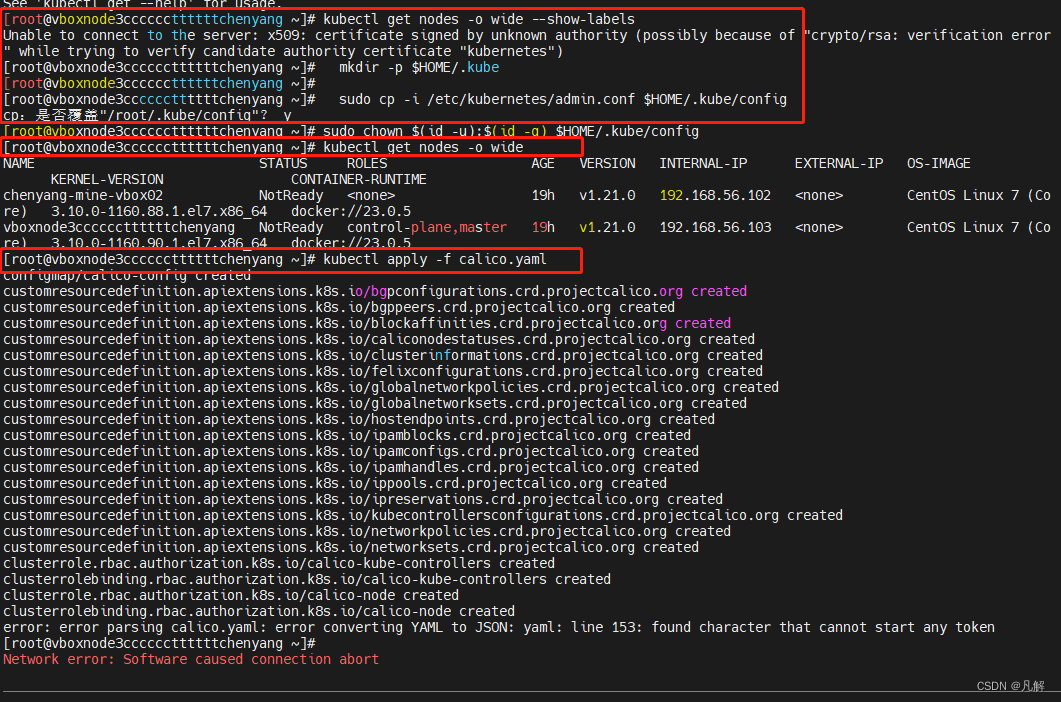
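Every Forbidden message above names the identity the API server saw. Here it is system:node:vboxnode3ccccccttttttchenyang, a node (kubelet) identity rather than an administrator, which suggests kubectl was reading a node kubeconfig at that point. A small sketch that pulls the user, verb and resource out of such messages; forbidden_summary is a hypothetical helper name:

```shell
# Reduce kubectl "Forbidden" messages to "<user> <verb> <resource>".
# stdin: kubectl error output; lines without the pattern are dropped.
# forbidden_summary is a hypothetical helper name.
forbidden_summary() {
  sed -n 's/.*User "\([^"]*\)" cannot \([a-z]*\) resource "\([^"]*\)".*/\1 \2 \3/p'
}

# Usage:
#   kubectl apply -f calico.yaml 2>&1 | forbidden_summary | sort -u
# A single permission can then be checked with the current credentials:
#   kubectl auth can-i get serviceaccounts -n kube-system
```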

1.3.2: Scenario 1: replace the kubeconfig with the K8S admin config

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config
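These three commands can be wrapped so that an existing config is kept as a backup instead of being overwritten at the cp -i prompt. This is a sketch only: install_kubeconfig and the .bak suffix are choices made for this example, and mode 600 is stricter than what the original commands set.

```shell
# Copy an admin kubeconfig into place, keeping any previous file as <dst>.bak.
# $1: source kubeconfig, $2: destination path
# install_kubeconfig is a hypothetical helper name.
install_kubeconfig() {
  src="$1"
  dst="$2"
  if [ ! -r "$src" ]; then
    echo "cannot read $src" >&2
    return 1
  fi
  mkdir -p "$(dirname "$dst")" || return 1
  if [ -f "$dst" ]; then
    cp -p "$dst" "$dst.bak" || return 1   # keep the old config
  fi
  cp "$src" "$dst" || return 1
  chmod 600 "$dst"                        # readable by the owner only
}

# Usage (as root on the control-plane node):
#   install_kubeconfig /etc/kubernetes/admin.conf "$HOME/.kube/config"
```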

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get nodes -o wide --show-labels

Unable to connect to the server: x509: certificate signed by unknown authority (possibly because of "crypto/rsa: verification error " while trying to verify candidate authority certificate "kubernetes")

[root@vboxnode3ccccccttttttchenyang ~]# mkdir -p $HOME/.kube

[root@vboxnode3ccccccttttttchenyang ~]#

[root@vboxnode3ccccccttttttchenyang ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

cp: overwrite ‘/root/.kube/config’? y

[root@vboxnode3ccccccttttttchenyang ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

chenyang-mine-vbox02 NotReady <none> 19h v1.21.0 192.168.56.102 <none> CentOS Linux 7 (Core) 3.10.0-1160.88.1.el7.x86_64 docker://23.0.5

vboxnode3ccccccttttttchenyang NotReady control-plane,master 19h v1.21.0 192.168.56.103 <none> CentOS Linux 7 (Core) 3.10.0-1160.90.1.el7.x86_64 docker://23.0.5

[root@vboxnode3ccccccttttttchenyang ~]# kubectl apply -f calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

error: error parsing calico.yaml: error converting YAML to JSON: yaml: line 153: found character that cannot start any token

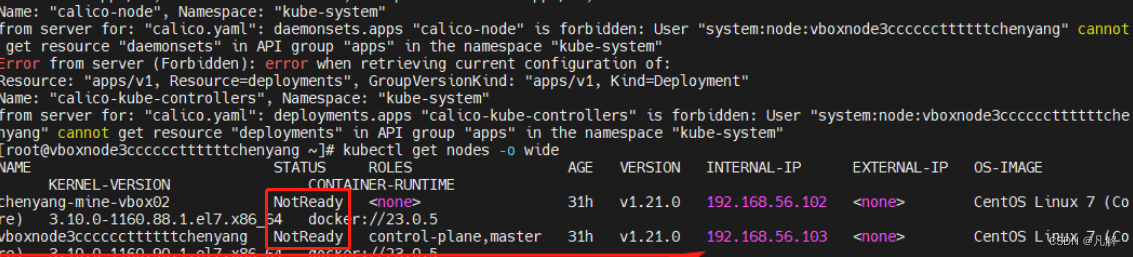

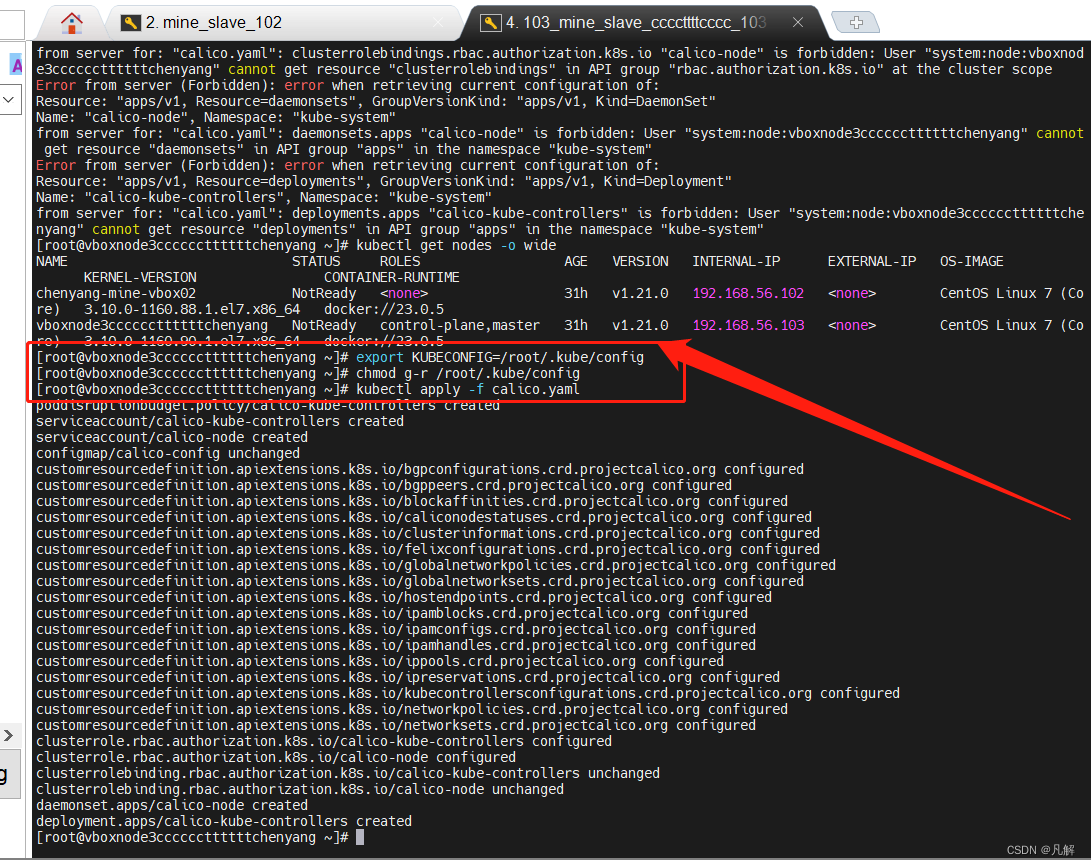
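The parser error at line 153 stops the apply partway through. The article does not say what was on that line. One common trigger for "found character that cannot start any token" is a tab used for indentation, which YAML does not allow. Assuming that possibility, a quick check could look like this (find_yaml_tabs is a hypothetical name):

```shell
# List every line of a file that contains a tab character, as "line:content".
# Exit status is non-zero when no tab is found (grep's convention).
# find_yaml_tabs is a hypothetical helper name.
find_yaml_tabs() {
  tab=$(printf '\t')
  grep -n "$tab" "$1"
}

# Usage:
#   find_yaml_tabs calico.yaml
#   sed -n '153l' calico.yaml   # print line 153 with non-printing characters made visible
```

If the check finds nothing, the offending character on the reported line is something else and needs to be inspected directly.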

1.3.3: Scenario 2: replace the kubeconfig with the K8S admin config

Run the following commands:

export KUBECONFIG=/root/.kube/config

chmod g-r /root/.kube/config

kubectl apply -f calico.yaml

[root@vboxnode3ccccccttttttchenyang ~]# export KUBECONFIG=/root/.kube/config

[root@vboxnode3ccccccttttttchenyang ~]# chmod g-r /root/.kube/config

[root@vboxnode3ccccccttttttchenyang ~]# kubectl apply -f calico.yaml

from server for: "calico.yaml": clusterrolebindings.rbac.authorization.k8s.io "calico-node" is forbidden: User "system:node:vboxnode3ccccccttttttchenyang" cannot get resource "clusterrolebindings" in API group "rbac.authorization.k8s.io" at the cluster scope

Error from server (Forbidden): error when retrieving current configuration of:

Resource: "apps/v1, Resource=daemonsets", GroupVersionKind: "apps/v1, Kind=DaemonSet"

Name: "calico-node", Namespace: "kube-system"

from server for: "calico.yaml": daemonsets.apps "calico-node" is forbidden: User "system:node:vboxnode3ccccccttttttchenyang" cannot get resource "daemonsets" in API group "apps" in the namespace "kube-system"

Error from server (Forbidden): error when retrieving current configuration of:

Resource: "apps/v1, Resource=deployments", GroupVersionKind: "apps/v1, Kind=Deployment"

Name: "calico-kube-controllers", Namespace: "kube-system"

from server for: "calico.yaml": deployments.apps "calico-kube-controllers" is forbidden: User "system:node:vboxnode3ccccccttttttchenyang" cannot get resource "deployments" in API group "apps" in the namespace "kube-system"

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

chenyang-mine-vbox02 NotReady <none> 31h v1.21.0 192.168.56.102 <none> CentOS Linux 7 (Core) 3.10.0-1160.88.1.el7.x86_64 docker://23.0.5

vboxnode3ccccccttttttchenyang NotReady control-plane,master 31h v1.21.0 192.168.56.103 <none> CentOS Linux 7 (Core) 3.10.0-1160.90.1.el7.x86_64 docker://23.0.5

[root@vboxnode3ccccccttttttchenyang ~]# export KUBECONFIG=/root/.kube/config

[root@vboxnode3ccccccttttttchenyang ~]# chmod g-r /root/.kube/config

[root@vboxnode3ccccccttttttchenyang ~]# kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

configmap/calico-config unchanged

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org configured

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers configured

clusterrole.rbac.authorization.k8s.io/calico-node configured

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-node unchanged

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

[root@vboxnode3ccccccttttttchenyang ~]#

2: After installing Calico: fixing pods that are not running normally

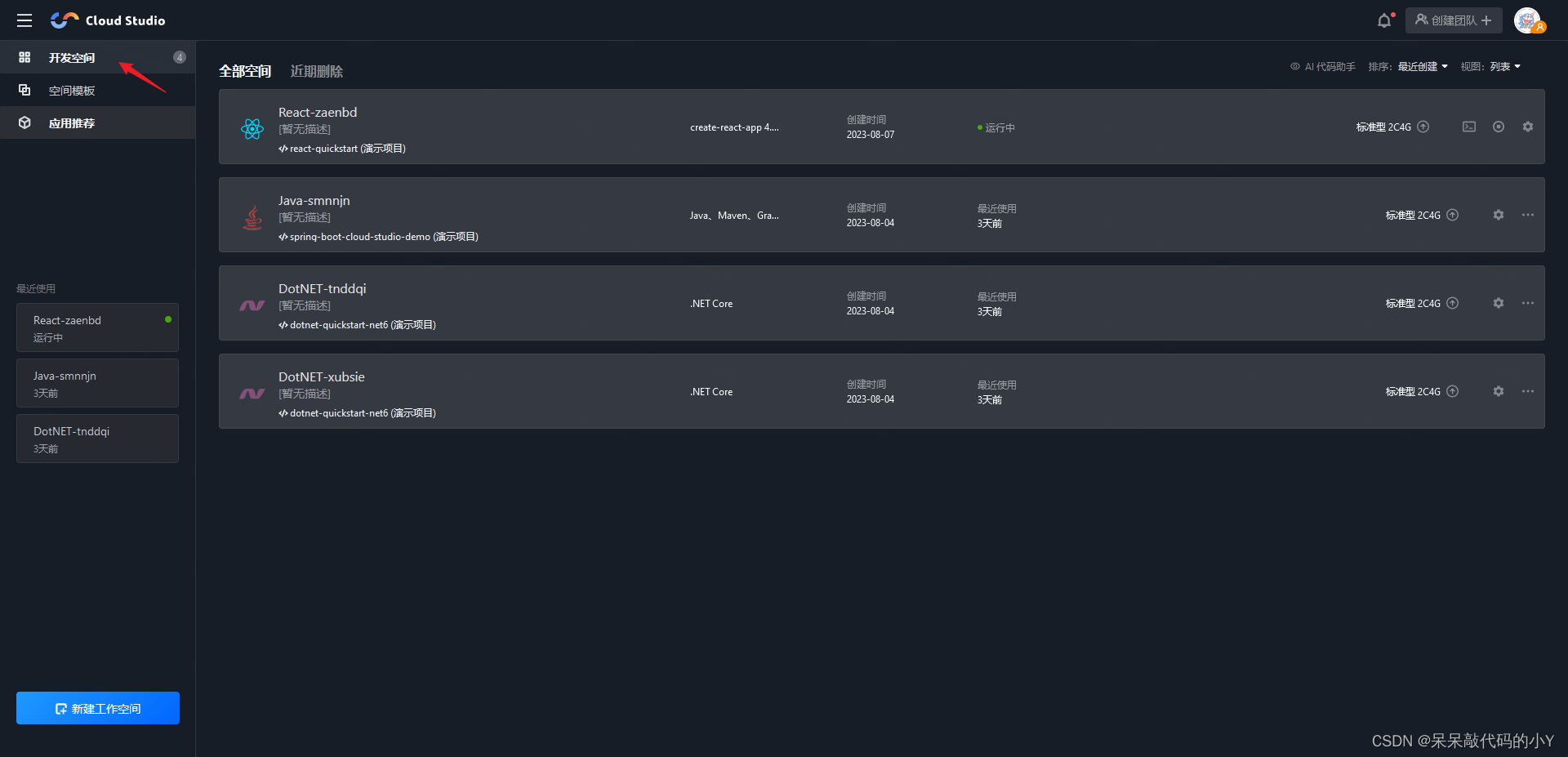

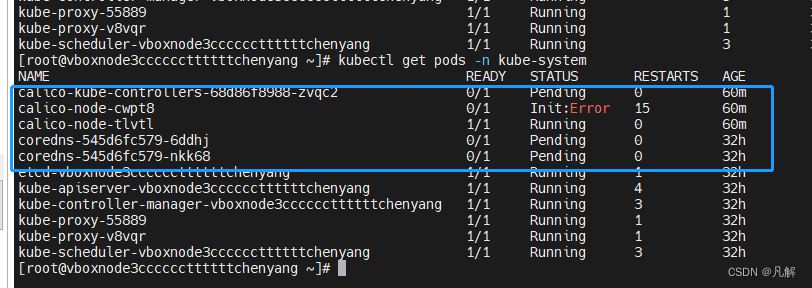

2.1: Check the status of the Calico pods

List all namespaces:

kubectl get ns -o wide

List all pods in the kube-system namespace:

kubectl get pods -n kube-system

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get ns -o wide

NAME STATUS AGE

default Active 31h

kube-node-lease Active 31h

kube-public Active 31h

kube-system Active 31h

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-68d86f8988-zvqc2 0/1 Pending 0 30m

calico-node-cwpt8 0/1 Init:CrashLoopBackOff 9 30m

calico-node-tlvtl 1/1 Running 0 30m

coredns-545d6fc579-6ddhj 0/1 Pending 0 31h

coredns-545d6fc579-nkk68 0/1 Pending 0 31h

etcd-vboxnode3ccccccttttttchenyang 1/1 Running 1 31h

kube-apiserver-vboxnode3ccccccttttttchenyang 1/1 Running 4 31h

kube-controller-manager-vboxnode3ccccccttttttchenyang 1/1 Running 3 31h

kube-proxy-55889 1/1 Running 1 31h

kube-proxy-v8vqr 1/1 Running 1 31h

kube-scheduler-vboxnode3ccccccttttttchenyang 1/1 Running 3 31h

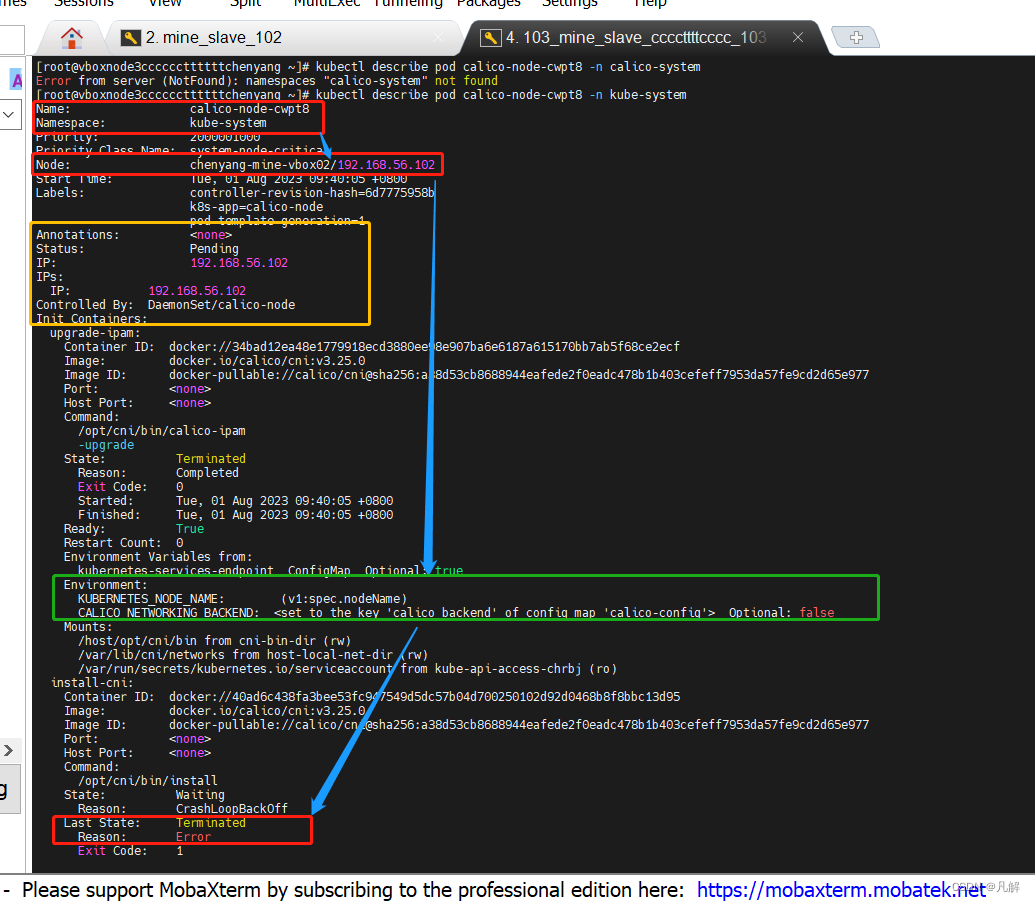
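With more pods, the ones that need attention are easier to spot when the healthy rows are filtered out. A small sketch that works on the table printed by kubectl get pods -n kube-system (the variant without the NAMESPACE column); unhealthy_pods is a hypothetical name, and the rule "READY counts differ or STATUS is not Running" is a simplification that would also flag Completed pods:

```shell
# Print "<name> <status>" for every pod whose READY counts differ
# or whose STATUS is not Running.
# stdin: output of `kubectl get pods -n <namespace>` (header on line 1)
# unhealthy_pods is a hypothetical helper name.
unhealthy_pods() {
  awk 'NR > 1 {
    split($2, ready, "/")
    if (ready[1] != ready[2] || $3 != "Running") print $1, $3
  }'
}

# Usage:
#   kubectl get pods -n kube-system | unhealthy_pods
```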

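2.2: Inspect the failing Calico pod (Init error) with kubectl describe pod <pod-name>

Command: kubectl describe pod calico-node-cwpt8 -n kube-system (the first attempt with -n calico-system fails because that namespace does not exist in this install)

Key parts of the output: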

[root@vboxnode3ccccccttttttchenyang ~]# kubectl describe pod calico-node-cwpt8 -n calico-system

Error from server (NotFound): namespaces "calico-system" not found

[root@vboxnode3ccccccttttttchenyang ~]# kubectl describe pod calico-node-cwpt8 -n kube-system

Name: calico-node-cwpt8

Namespace: kube-system

Priority: 2000001000

Priority Class Name: system-node-critical

Node: chenyang-mine-vbox02/192.168.56.102

Start Time: Tue, 01 Aug 2023 09:40:05 +0800

    Labels: controller-revision-hash=6d7775958b
            k8s-app=calico-node
            pod-template-generation=1

Annotations: <none>

Status: Pending

IP: 192.168.56.102

    IPs:
      IP: 192.168.56.102

Controlled By: DaemonSet/calico-node

    Init Containers:
      upgrade-ipam:
        Container ID: docker://34bad12ea48e1779918ecd3880ee98e907ba6e6187a615170bb7ab5f68ce2ecf
        Image: docker.io/calico/cni:v3.25.0
        Image ID: docker-pullable://calico/cni@sha256:a38d53cb8688944eafede2f0eadc478b1b403cefeff7953da57fe9cd2d65e977
        Port: <none>
        Host Port: <none>
        Command:
          /opt/cni/bin/calico-ipam-upgrade
        State: Terminated
          Reason: Completed
          Exit Code: 0
          Started: Tue, 01 Aug 2023 09:40:05 +0800
          Finished: Tue, 01 Aug 2023 09:40:05 +0800
        Ready: True
        Restart Count: 0
        Environment Variables from:
          kubernetes-services-endpoint ConfigMap Optional: true
        Environment:
          KUBERNETES_NODE_NAME: (v1:spec.nodeName)
          CALICO_NETWORKING_BACKEND: <set to the key 'calico_backend' of config map 'calico-config'> Optional: false
        Mounts:
          /host/opt/cni/bin from cni-bin-dir (rw)
          /var/lib/cni/networks from host-local-net-dir (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-chrbj (ro)
      install-cni:
        Container ID: docker://40ad6c438fa3bee53fc947549d5dc57b04d700250102d92d0468b8f8bbc13d95
        Image: docker.io/calico/cni:v3.25.0
        Image ID: docker-pullable://calico/cni@sha256:a38d53cb8688944eafede2f0eadc478b1b403cefeff7953da57fe9cd2d65e977
        Port: <none>
        Host Port: <none>
        Command:
          /opt/cni/bin/install
        State: Waiting
          Reason: CrashLoopBackOff
        Last State: Terminated
          Reason: Error
          Exit Code: 1
          Started: Tue, 01 Aug 2023 11:18:31 +0800
          Finished: Tue, 01 Aug 2023 11:19:02 +0800
        Ready: False
        Restart Count: 22
        Environment Variables from:
          kubernetes-services-endpoint ConfigMap Optional: true
        Environment:
          CNI_CONF_NAME: 10-calico.conflist
          CNI_NETWORK_CONFIG: <set to the key 'cni_network_config' of config map 'calico-config'> Optional: false
          KUBERNETES_NODE_NAME: (v1:spec.nodeName)
          CNI_MTU: <set to the key 'veth_mtu' of config map 'calico-config'> Optional: false
          SLEEP: false

2.3: Optionally try pulling the coredns image again and re-running the docker tag commands for coredns

2.4: Check the coredns and Calico pod status again

2.4.1: Run kubectl get pod -A to check the coredns and Calico pod status

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-68d86f8988-zvqc2 1/1 Running 1 24h

kube-system calico-node-cwpt8 0/1 Init:CrashLoopBackOff 9 24h

kube-system calico-node-tlvtl 1/1 Running 1 24h

kube-system coredns-545d6fc579-nggnz 0/1 Pending 0 22h

kube-system coredns-545d6fc579-rbd8c 0/1 Pending 0 22h

kube-system etcd-vboxnode3ccccccttttttchenyang 1/1 Running 2 2d7h

kube-system kube-apiserver-vboxnode3ccccccttttttchenyang 1/1 Running 5 2d7h

kube-system kube-controller-manager-vboxnode3ccccccttttttchenyang 1/1 Running 6 2d7h

kube-system kube-proxy-55889 1/1 Running 2 2d7h

kube-system kube-proxy-v8vqr 1/1 Running 2 2d7h

kube-system kube-scheduler-vboxnode3ccccccttttttchenyang 1/1 Running 6 2d7h

2.5: View the logs of the failing calico-node pod

2.5.1: master: command: kubectl logs -f calico-node-cwpt8 -n kube-system

[root@vboxnode3ccccccttttttchenyang ~]# kubectl logs -f calico-node-cwpt8 -n kube-system

2.5.2: master: the error output

[root@vboxnode3ccccccttttttchenyang ~]# kubectl logs -f calico-node-cwpt8 -n kube-system

Error from server: Get "https://192.168.56.102:10250/containerLogs/kube-system/calico-node-cwpt8/calico-node?follow=true": dial tcp 192.168.56.102:10250: connect: no route to host

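2.5.3: master: telnet to the IP address and port from the error message

2.5.3.1: Install telnet

Note: the telnet client command is provided by the telnet package (yum install telnet). The telnet-server package, which the transcript installs, provides the server daemon.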

[root@vboxnode3ccccccttttttchenyang ~]# telnet 192.168.56.102:10250

-bash: telnet: command not found

[root@vboxnode3ccccccttttttchenyang ~]# rpm -q telnet

package telnet is not installed

[root@vboxnode3ccccccttttttchenyang ~]# rpm -q telnet-server

package telnet-server is not installed

[root@vboxnode3ccccccttttttchenyang ~]# yum list telnet*

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: ftp.sjtu.edu.cn

* extras: ftp.sjtu.edu.cn

* updates: mirrors.bfsu.edu.cn

Available Packages

telnet.x86_64 1:0.17-66.el7 updates

telnet-server.x86_64 1:0.17-66.el7 updates

[root@vboxnode3ccccccttttttchenyang ~]# yum install telnet-server

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: ftp.sjtu.edu.cn

* extras: ftp.sjtu.edu.cn

* updates: mirrors.bfsu.edu.cn

base | 3.6 kB 00:00:00

docker-ce-stable | 3.5 kB 00:00:00

extras | 2.9 kB 00:00:00

kubernetes | 1.4 kB 00:00:00

updates | 2.9 kB 00:00:00

docker-ce-stable/7/x86_64/primary_db | 116 kB 00:00:01

Resolving Dependencies

--> Running transaction check

---> Package telnet-server.x86_64 1:0.17-66.el7 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

===========================================================================================================================

Package Arch Version Repository Size

===========================================================================================================================

Installing:

telnet-server x86_64 1:0.17-66.el7 updates 41 k

Transaction Summary

===========================================================================================================================

Install 1 Package

Total download size: 41 k

Installed size: 55 k

Is this ok [y/d/N]: y

Downloading packages:

telnet-server-0.17-66.el7.x86_64.rpm | 41 kB 00:00:00

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : 1:telnet-server-0.17-66.el7.x86_64 1/1

Verifying : 1:telnet-server-0.17-66.el7.x86_64 1/1

Installed:

telnet-server.x86_64 1:0.17-66.el7

Complete!

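2.5.3.2: telnet to the IP address and port from the error: telnet 192.168.56.102 10250

telnet takes the host and the port as two separate arguments, so the first attempt below, written as host:port, is treated as a hostname and fails to resolve.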

[root@vboxnode3ccccccttttttchenyang ~]# telnet 192.168.56.102:10250

telnet: 192.168.56.102:10250: Name or service not known

192.168.56.102:10250: Unknown host

[root@vboxnode3ccccccttttttchenyang ~]# telnet 192.168.56.102 10250

Trying 192.168.56.102...

telnet: connect to address 192.168.56.102: No route to host

[root@vboxnode3ccccccttttttchenyang ~]#

2.5.3.3: Open port 10250 on the unreachable machine

Run these on the worker node (chenyang-mine-vbox02):

systemctl status firewalld

If firewalld is not running: systemctl start firewalld.service

firewall-cmd --permanent --zone=public --add-port=10250/tcp

firewall-cmd --reload

firewall-cmd --permanent --zone=public --list-port

[root@chenyang-mine-vbox02 ~]# firewall-cmd --permanent --zone=public --list-port

3306/tcp 8848/tcp 6443/tcp 8080/tcp 8083/tcp 8086/tcp 9200/tcp 9300/tcp 10250/tcp

[root@chenyang-mine-vbox02 ~]#
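Reading a long port list by eye is error-prone, so the check can be scripted. A minimal sketch that tests whether one port/protocol entry appears in the space-separated list printed by firewall-cmd; port_listed is a hypothetical name. Note that with --permanent the command reports the saved configuration, while without it the running configuration is shown:

```shell
# Succeed if the given "port/proto" entry appears in a firewall-cmd port list.
# stdin: space-separated list such as "6443/tcp 10250/tcp"
# $1:    entry to look for, e.g. 10250/tcp
# port_listed is a hypothetical helper name.
port_listed() {
  tr -s ' ' '\n' | grep -qxF "$1"
}

# Usage on the worker node:
#   firewall-cmd --zone=public --list-ports | port_listed 10250/tcp && echo "10250/tcp is open"
```

The match is on whole entries, so 250/tcp does not match 10250/tcp.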

2.5.3.4: Success: telnet 192.168.56.102 10250

[root@vboxnode3ccccccttttttchenyang ~]# telnet 192.168.56.102 10250

Trying 192.168.56.102...

Connected to 192.168.56.102.

Escape character is '^]'.

2.6: master: check the failing calico-node pod logs again: still failing

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-68d86f8988-gxqbk 1/1 Running 0 46m

kube-system calico-node-rdwd7 0/1 Init:CrashLoopBackOff 12 46m

kube-system calico-node-xnhjc 1/1 Running 0 46m

kube-system coredns-545d6fc579-dmjsp 0/1 Running 0 48m

kube-system coredns-545d6fc579-pklfv 0/1 Running 0 48m

kube-system etcd-vboxnode3ccccccttttttchenyang 1/1 Running 0 48m

kube-system kube-apiserver-vboxnode3ccccccttttttchenyang 1/1 Running 0 48m

kube-system kube-controller-manager-vboxnode3ccccccttttttchenyang 1/1 Running 0 48m

kube-system kube-proxy-w5gls 1/1 Running 0 48m

kube-system kube-proxy-xt4gw 1/1 Running 0 47m

kube-system kube-scheduler-vboxnode3ccccccttttttchenyang 1/1 Running 0 48m

[root@vboxnode3ccccccttttttchenyang ~]# kubectl logs calico-node-rdwd7 -f --tail=50 -n kube-system

Error from server (BadRequest): container "calico-node" in pod "calico-node-rdwd7" is waiting to start: PodInitializing

[root@vboxnode3ccccccttttttchenyang ~]#
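The BadRequest reply only says that the main calico-node container has not started yet. The step that is actually failing is an init container (install-cni in the describe output of 2.2), and kubectl logs accepts -c to select a specific container, init containers included. A sketch for listing the init containers that are not ready; not_ready_init_containers is a hypothetical name, and the jsonpath query in the comment is one assumed way of producing its input:

```shell
# Print the names of init containers whose ready flag is false.
# stdin: lines of "<container-name> <true|false>"
# not_ready_init_containers is a hypothetical helper name.
not_ready_init_containers() {
  awk '$2 == "false" { print $1 }'
}

# Usage:
#   kubectl get pod calico-node-rdwd7 -n kube-system \
#     -o jsonpath='{range .status.initContainerStatuses[*]}{.name}{" "}{.ready}{"\n"}{end}' \
#     | not_ready_init_containers
# Then read that container's log:
#   kubectl logs calico-node-rdwd7 -n kube-system -c install-cni
```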

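2.7: master: check the coredns error logs: they point to the worker node's network

Key point: the author's reading is that the master node cannot look up the network resources of the worker node. Taken literally, though, the message below is an authorization (RBAC) denial rather than a connectivity failure: the coredns service account is not allowed to list endpointslices.

User "system:serviceaccount:kube-system:coredns" cannot list resource "endpointslices" in API group "discovery.k8s.io" at the cluster scope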

[root@vboxnode3ccccccttttttchenyang ~]# kubectl logs coredns-545d6fc579-dmjsp -f --tail=50 -n kube-system

E0805 18:36:01.342345 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.22.2/tools/cache/reflector.go:167: Failed to watch *v1.EndpointSlice: failed to list *v1.EndpointSlice: endpointslices.discovery.k8s.io is forbidden: User "system:serviceaccount:kube-system:coredns" cannot list resource "endpointslices" in API group "discovery.k8s.io" at the cluster scope

[INFO] plugin/ready: Still waiting on: "kubernetes"

E0805 18:36:02.676563 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.22.2/tools/cache/reflector.go:167: Failed to watch *v1.EndpointSlice: failed to list *v1.EndpointSlice: endpointslices.discovery.k8s.io is forbidden: User "system:serviceaccount:kube-system:coredns" cannot list resource "endpointslices" in API group "discovery.k8s.io" at the cluster scope

E0805 18:36:04.964823 1 reflector.go:138] pkg/mod/k8s.io/client-go@v0.22.2/tools/cache/reflector.go:167: Failed to watch *v1.EndpointSlice: failed to list *v1.EndpointSlice: endpointslices.discovery.k8s.io is forbidden: User "system:serviceaccount:kube-system:coredns" cannot list resource "endpointslices" in API group "discovery.k8s.io" at the cluster scope

[WARNING] plugin/kubernetes: starting server with unsynced Kubernetes API

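2.8: worker node: check the error logs: they point to the worker node's network

2.8.1: worker node: view the kubelet logs: journalctl -f -u kubelet

2.8.1.1: Key point: the CNI configuration cannot be found: "Unable to update cni config" err="no networks found in /etc/cni/net.d"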

[root@chenyang-mine-vbox02 ~]# cd /etc/cni/net.d/

[root@chenyang-mine-vbox02 net.d]# ls

[root@chenyang-mine-vbox02 net.d]#

[root@chenyang-mine-vbox02 ~]# journalctl -f -u kubelet

-- Logs begin at Sun 2023-08-06 02:16:53 CST. --

Aug 06 02:43:22 chenyang-mine-vbox02 kubelet[6109]: I0806 02:43:22.030642 6109 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"

Aug 06 02:43:23 chenyang-mine-vbox02 kubelet[6109]: E0806 02:43:23.138847 6109 pod_workers.go:190] "Error syncing pod, skipping" err="failed to \"StartContainer\" for \"install-cni\" with CrashLoopBackOff: \"back-off 2m40s restarting failed container=install-cni pod=calico-node-rdwd7_kube-system(940cdb9e-c99b-46d3-a1f5-92ee1f175299)\"" pod="kube-system/calico-node-rdwd7" podUID=940cdb9e-c99b-46d3-a1f5-92ee1f175299

Aug 06 02:43:23 chenyang-mine-vbox02 kubelet[6109]: E0806 02:43:23.215196 6109 kubelet.go:2218] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"

Aug 06 02:43:27 chenyang-mine-vbox02 kubelet[6109]: I0806 02:43:27.032036 6109 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"
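The kubelet message means the directory holds no network configuration file. A small check that can be run on each node to compare them, assuming the loader only considers files ending in .conf, .conflist or .json (an assumption about the CNI config loader, not something stated in this article); has_cni_config is a hypothetical name:

```shell
# Succeed if the directory contains at least one CNI network config file.
# $1: directory to inspect (defaults to /etc/cni/net.d)
# has_cni_config is a hypothetical helper name.
has_cni_config() {
  dir="${1:-/etc/cni/net.d}"
  for f in "$dir"/*.conf "$dir"/*.conflist "$dir"/*.json; do
    if [ -f "$f" ]; then
      return 0
    fi
  done
  return 1
}

# Usage:
#   has_cni_config && echo "CNI config present" || echo "no CNI config in /etc/cni/net.d"
```

A file such as calico-kubeconfig does not count on its own, since it carries credentials rather than a network definition.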

2.8.2: master: list the configuration in /etc/cni/net.d

[root@vboxnode3ccccccttttttchenyang ~]# cd /etc/cni/net.d/

[root@vboxnode3ccccccttttttchenyang net.d]# ls

10-calico.conflist calico-kubeconfig

[root@vboxnode3ccccccttttttchenyang net.d]#

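2.8.3: Copy the /etc/cni/net.d configuration from the master to the worker node

The two files are created empty on the worker node with touch, then filled in with vi using the contents of the master's files.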

[root@chenyang-mine-vbox02 net.d]# touch calico-kubeconfig

[root@chenyang-mine-vbox02 net.d]# touch 10-calico.conflist

[root@chenyang-mine-vbox02 net.d]# vi 10-calico.conflist

[root@chenyang-mine-vbox02 net.d]# vi calico-kubeconfig

[root@chenyang-mine-vbox02 net.d]# ls

10-calico.conflist calico-kubeconfig

[root@chenyang-mine-vbox02 net.d]#

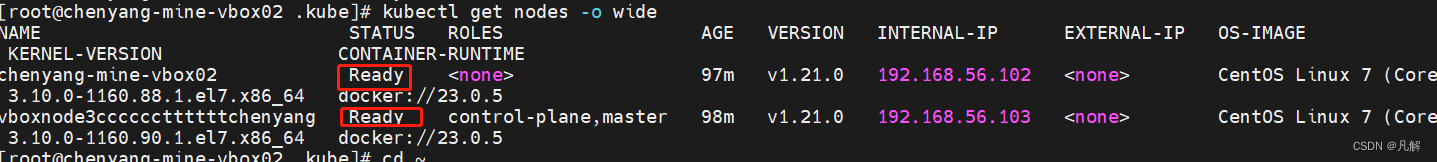

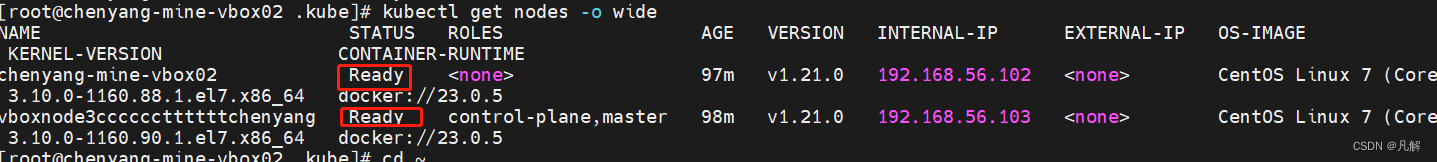

2.9: Restart kubelet and check the status of the nodes

systemctl restart kubelet

kubectl get nodes -o wide

[root@chenyang-mine-vbox02 .kube]# systemctl restart kubelet

[root@chenyang-mine-vbox02 .kube]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

chenyang-mine-vbox02 Ready <none> 97m v1.21.0 192.168.56.102 <none> CentOS Linux 7 (Core) 3.10.0-1160.88.1.el7.x86_64 docker://23.0.5

vboxnode3ccccccttttttchenyang Ready control-plane,master 98m v1.21.0 192.168.56.103 <none> CentOS Linux 7 (Core) 3.10.0-1160.90.1.el7.x86_64 docker://23.0.5

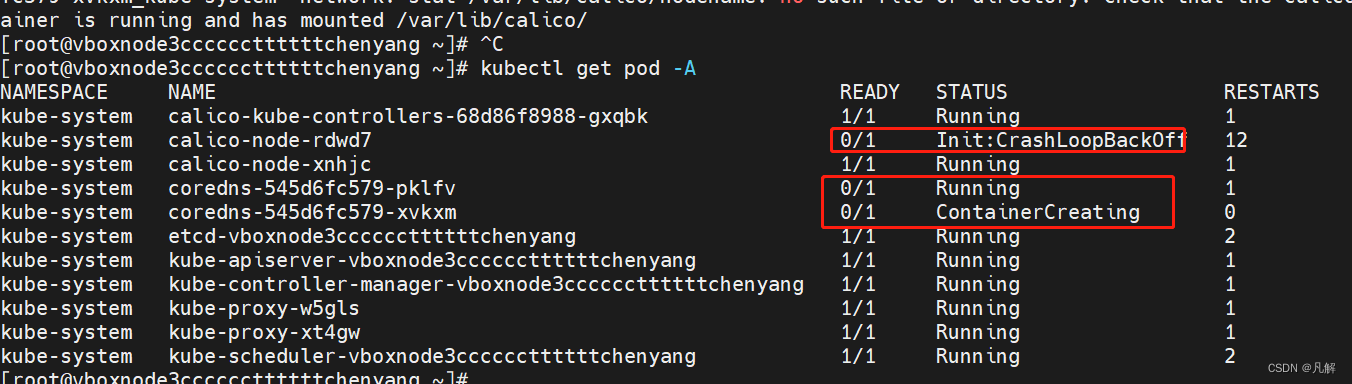

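3: Remaining issues

The nodes have gone from NotReady to Ready, but coredns and one calico-node pod (calico-node-rdwd7) are still not Ready. I will follow up on this later.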

[root@vboxnode3ccccccttttttchenyang ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-68d86f8988-gxqbk 1/1 Running 1 111m

kube-system calico-node-rdwd7 0/1 Init:CrashLoopBackOff 12 111m

kube-system calico-node-xnhjc 1/1 Running 1 111m

kube-system coredns-545d6fc579-pklfv 0/1 Running 1 113m

kube-system coredns-545d6fc579-xvkxm 0/1 ContainerCreating 0 13m

kube-system etcd-vboxnode3ccccccttttttchenyang 1/1 Running 2 114m

kube-system kube-apiserver-vboxnode3ccccccttttttchenyang 1/1 Running 1 114m

kube-system kube-controller-manager-vboxnode3ccccccttttttchenyang 1/1 Running 1 114m

kube-system kube-proxy-w5gls 1/1 Running 1 113m

kube-system kube-proxy-xt4gw 1/1 Running 1 113m

kube-system kube-scheduler-vboxnode3ccccccttttttchenyang 1/1 Running 2 114m

[root@vboxnode3ccccccttttttchenyang ~]#