Download link for the image dataset used in the code: https://download.csdn.net/download/m0_37567738/88235543?spm=1001.2014.3001.5501

Code:

import time

import torch
import torch.nn as nn
import torch.nn.functional as F
import torchvision
from torch.utils.data import DataLoader
from torchvision import transforms

# from utils import load_data, get_accur, train
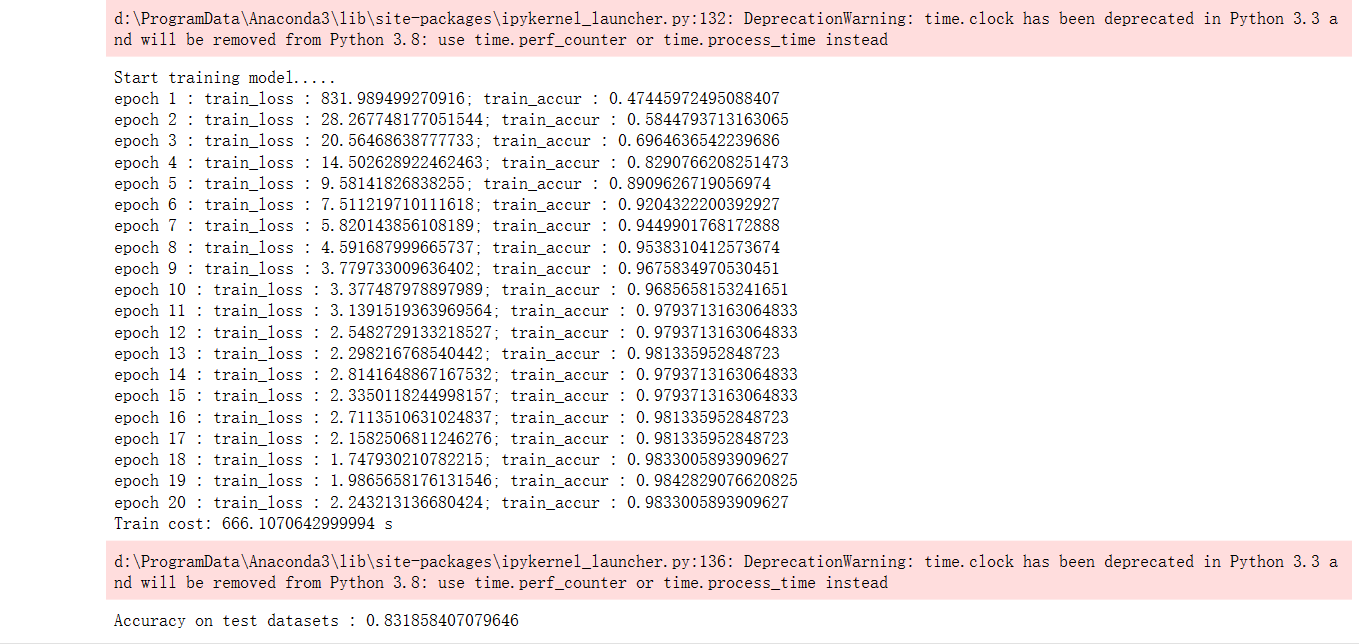

import torch.optim as optim
import numpy as np


def load_data(path, batch_size):
    """Build an ImageFolder dataset and a shuffling DataLoader for `path`."""
    datasets = torchvision.datasets.ImageFolder(
        root=path, transform=transforms.Compose([transforms.ToTensor()])
    )
    dataloader = DataLoader(datasets, batch_size=batch_size, shuffle=True)
    return datasets, dataloader


def get_accur(preds, labels):
    """Return the number of correct predictions in a batch."""
    preds = preds.argmax(dim=1)
    return torch.sum(preds == labels).item()


def train(model, epochs, learning_rate, dataloader, criterion, testdataloader):
    optimizer = optim.Adam(model.parameters(), lr=learning_rate)
    train_loss_list = []
    test_loss_list = []
    train_accur_list = []
    test_accur_list = []
    train_len = len(dataloader.dataset)
    test_len = len(testdataloader.dataset)
    for i in range(epochs):
        train_loss = 0.0
        train_accur = 0
        test_loss = 0.0
        test_accur = 0

        model.train()
        for batch in dataloader:
            imgs, labels = batch
            preds = model(imgs)
            optimizer.zero_grad()
            loss = criterion(preds, labels)
            loss.backward()
            optimizer.step()
            train_loss += loss.item()
            train_accur += get_accur(preds, labels)
        train_loss_list.append(train_loss)
        train_accur_list.append(train_accur / train_len)

        # Evaluate with BatchNorm in inference mode and without tracking gradients
        model.eval()
        with torch.no_grad():
            for batch in testdataloader:
                imgs, labels = batch
                preds = model(imgs)
                loss = criterion(preds, labels)
                test_loss += loss.item()
                test_accur += get_accur(preds, labels)
        test_loss_list.append(test_loss)
        test_accur_list.append(test_accur / test_len)
        print("epoch {} : train_loss : {}; train_accur : {}".format(
            i + 1, train_loss, train_accur / train_len))
    return (np.array(train_accur_list), np.array(train_loss_list),
            np.array(test_accur_list), np.array(test_loss_list))


class ResidualBlock(nn.Module):
    def __init__(self, inchannel, outchannel, stride=1):
        super().__init__()
        # With stride=1 the spatial size is unchanged; only the channel count changes
        self.left = nn.Sequential(
            nn.Conv2d(inchannel, outchannel, kernel_size=3, stride=stride, padding=1, bias=False),
            nn.BatchNorm2d(outchannel),
            nn.ReLU(inplace=True),
            nn.Conv2d(outchannel, outchannel, kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(outchannel),
        )
        self.shortcut = nn.Sequential()
        # Note: the shortcut convolves the input X, using a 1x1 convolution to match the output shape
        if inchannel != outchannel or stride != 1:
            self.shortcut = nn.Sequential(
                nn.Conv2d(inchannel, outchannel, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(outchannel),
            )

    def forward(self, X):
        h = self.left(X)
        # Add first, then activate
        h += self.shortcut(X)
        out = F.relu(h)
        return out


class ResidualNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.residual_block = nn.Sequential(
            ResidualBlock(3, 32),
            ResidualBlock(32, 64),
            ResidualBlock(64, 32),
            ResidualBlock(32, 3),
        )
        self.fc1 = nn.Linear(3 * 64 * 64, 1024)
        self.fc2 = nn.Linear(1024, 3)

    def forward(self, X):
        h = self.residual_block(X)
        h = h.view(-1, 3 * 64 * 64)
        h = self.fc1(h)
        out = self.fc2(h)
        return out


if __name__ == "__main__":
    train_path = "./cnn/train/"
    test_path = "./cnn/test/"
    _, train_dataloader = load_data(train_path, 32)
    _, test_dataloader = load_data(test_path, 32)
    model = ResidualNet()
    critic = nn.CrossEntropyLoss()
    epoch = 20
    lr = 0.01
    # time.clock() was removed in Python 3.8; time.perf_counter() replaces it
    start = time.perf_counter()
    print("Start training model.....")
    train_accur_list, train_loss_list, test_accur_list, test_loss_list = train(
        model, epoch, lr, train_dataloader, critic, test_dataloader)
    end = time.perf_counter()
    print("Train cost: {} s".format(end - start))

    test_accur = 0
    model.eval()
    with torch.no_grad():
        for batch in test_dataloader:
            imgs, labels = batch
            preds = model(imgs)
            test_accur += get_accur(preds, labels)
    print("Accuracy on test datasets : {}".format(test_accur / len(test_dataloader.dataset)))

Execution results:

![[Machine Learning] Feature Engineering: Principal Component Analysis](https://img-blog.csdnimg.cn/img_convert/29d4c948ca9d9f2770c6e926a210d0b2.png)
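
The shapes used in `ResidualNet` can be checked by hand without running the model. The sketch below is not part of the original code: the helper names `conv2d_out_size` and `residual_block_out_size` are hypothetical, and the 64×64 input size is an assumption inferred from `nn.Linear(3 * 64 * 64, 1024)`. It applies the standard `nn.Conv2d` output-size formula (for dilation 1) to both paths of the residual block, to show why the main path and the shortcut can be added and why the flattened feature vector has `3 * 64 * 64` elements.

```python
def conv2d_out_size(size, kernel_size, stride=1, padding=0):
    """Output height/width of a Conv2d layer with dilation=1."""
    return (size + 2 * padding - kernel_size) // stride + 1


def residual_block_out_size(size, stride=1):
    """Spatial size after one residual block; checks that both paths agree."""
    # Main path: 3x3 conv (given stride, padding 1), then 3x3 conv (stride 1, padding 1)
    main = conv2d_out_size(size, kernel_size=3, stride=stride, padding=1)
    main = conv2d_out_size(main, kernel_size=3, stride=1, padding=1)
    # Shortcut path: 1x1 conv with the same stride and no padding
    shortcut = conv2d_out_size(size, kernel_size=1, stride=stride, padding=0)
    assert main == shortcut, "main path and shortcut must have the same size to be added"
    return main


# Assumed input: 3-channel 64x64 images, passed through four stride-1 blocks
size = 64
for _ in range(4):
    size = residual_block_out_size(size, stride=1)

out_channels = 3  # the last block is ResidualBlock(32, 3)
flattened = out_channels * size * size
print(size, flattened)
```

With stride 1 every block preserves the spatial size, so only the channel count changes from block to block. If the images in the dataset are not 64×64, the `view(-1, 3 * 64 * 64)` call in `ResidualNet.forward` would need to be adjusted accordingly.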