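Contents

Reading data with pandas

Checking for abnormal values

Extracting specific columns

Extracting DataFrame data as NumPy arrays

Data splitting

Random forest regression

GBDT regression

Feature importance visualization

Output:

Drawing a 3D scatter plot

Importing custom packages and auto-syncing Jupyter Notebook when the .py file changes

Removing duplicate values in a DataFrame column and dropping the corresponding rows

LASSO regression

Plotting the regression error

Output:

AdaBoost regression

LightGBM regression

XGBoost

Plotting learning curves

Output:

Plotting the distribution of DataFrame data

Output:

SVM classification

Optimizing the SVM with Bayesian optimization

Output:

Follow-up:

Plotting the ROC curve

Output:

PCA dimensionality reduction

Visualizing the PCA reduction

Output:

Finding extrema

Output explanation: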

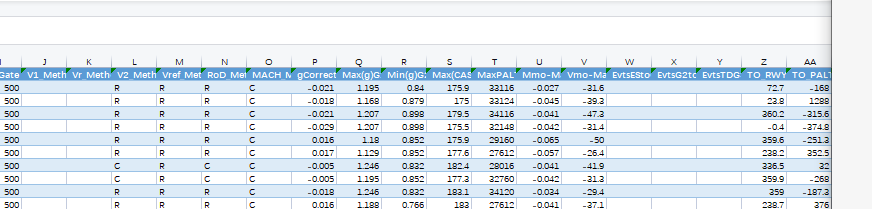

Reading data with pandas

import numpy as np

import pandas as pd

import random

Molecular_Descriptor = pd.read_excel('Molecular_Descriptor.xlsx',header=0)

Molecular_Descriptor.head()

Checking for abnormal values
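np.isnan and np.isinf only accept numeric data. A DataFrame that also contains a text column can therefore raise a TypeError. This post skips the first column with iloc[:, 1:], presumably because that column is not a numeric descriptor. The sketch below is minimal and uses a toy DataFrame with a hypothetical SMILES string column. It restricts the check to numeric columns and counts NaN and ±Inf values per column:

```python
import numpy as np
import pandas as pd

def count_bad_values(df: pd.DataFrame) -> pd.DataFrame:
    """Count NaN and +/-Inf entries in every numeric column of df."""
    numeric = df.select_dtypes(include=[np.number])  # skip text columns
    return pd.DataFrame({
        "n_nan": numeric.isna().sum(),       # NaN only (Inf is not NaN)
        "n_inf": np.isinf(numeric).sum(),    # +Inf and -Inf
    })

# Toy data: a hypothetical string column plus two numeric descriptors
toy = pd.DataFrame({
    "SMILES": ["CCO", "c1ccccc1", "CC(=O)O"],
    "desc_a": [1.0, np.nan, 3.0],
    "desc_b": [0.5, np.inf, -np.inf],
})
report = count_bad_values(toy)
print(report)
```

A column with non-zero counts needs cleaning (for example dropping or imputing) before the models below are fitted.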

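# Check for NaN and Inf values (np.isnan/np.isinf only work on numeric columns, so the first column is skipped)

descriptors = Molecular_Descriptor.iloc[:,1:]

print(Molecular_Descriptor.isnull().any())

print(np.isnan(descriptors).any())

print(np.isfinite(descriptors).all())

print(np.isinf(descriptors).any())

Extracting specific columns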

Molecular_Descriptor.iloc[:,1:]

Extracting DataFrame data as NumPy arrays

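# .values returns the data in a DataFrame as a NumPy array

X = Molecular_Descriptor.iloc[:,1:].values

# ERα_activity: a DataFrame holding the target activity, assumed to be loaded the same way as Molecular_Descriptor; its third column is the target

Y = ERα_activity.iloc[:,2].values

Data splitting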
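When test_size is a float, scikit-learn rounds the test-set size up (ceil) and gives the remaining rows to training. The sketch below uses dummy arrays to show how an 80/20 split comes out. The row count of 1974 is the one noted in the PCA section; the 5 features are arbitrary:

```python
import math
import numpy as np
from sklearn.model_selection import train_test_split

n_samples, n_features = 1974, 5          # 1974 rows as in the PCA section; 5 dummy features
X_demo = np.zeros((n_samples, n_features))
y_demo = np.arange(n_samples)            # unique labels make it easy to check the split

X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, test_size=0.2, random_state=0)

expected_test = math.ceil(0.2 * n_samples)  # 394.8 rounds up to 395
print(len(X_tr), len(X_te), expected_test)
```

Fixing random_state makes the split reproducible, so every model in this post is evaluated on the same test rows.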

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X,Y,test_size=0.2,random_state=0)

# Print the number of samples in the original data, the training set, and the test set

print("The length of original data X is:", X.shape[0])

print("The length of train Data is:", X_train.shape[0])

print("The length of test Data is:", X_test.shape[0])

Random forest regression
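The sketch below runs the same fit, predict, and evaluate workflow on synthetic data from make_regression, which stands in for the molecular descriptors. It is self-contained. RMSE is the square root of MSE, so it is expressed in the same units as the target:

```python
import numpy as np
from sklearn import metrics
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split

# Synthetic regression data standing in for the descriptor matrix
X_syn, y_syn = make_regression(n_samples=300, n_features=10, n_informative=3,
                               noise=5.0, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X_syn, y_syn, test_size=0.2, random_state=0)

rf = RandomForestRegressor(n_estimators=100, random_state=0)
rf.fit(X_tr, y_tr)
pred = rf.predict(X_te)

mae = metrics.mean_absolute_error(y_te, pred)
mse = metrics.mean_squared_error(y_te, pred)
rmse = np.sqrt(mse)  # same units as y
print(f"MAE={mae:.3f}  MSE={mse:.3f}  RMSE={rmse:.3f}")
# Impurity-based importances are normalized to sum to 1
print(rf.feature_importances_.round(3))
```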

# Import the random forest model

from sklearn.ensemble import RandomForestRegressor

# Import the sklearn metrics module

from sklearn import metrics

# Define the regressor

RFregressor = RandomForestRegressor(n_estimators=200, random_state=0)

# Train the model

RFregressor.fit(X_train, y_train)

# Predict on the test set

y_pred = RFregressor.predict(X_test)

# Print the regression evaluation metrics

print('Mean Absolute Error:', metrics.mean_absolute_error(y_test, y_pred))

print('Mean Squared Error:', metrics.mean_squared_error(y_test, y_pred))

print('Root Mean Squared Error:',np.sqrt(metrics.mean_squared_error(y_test, y_pred)))

# Get the feature importances

print(RFregressor.feature_importances_)

GBDT regression
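GradientBoostingRegressor adds trees one at a time. Its staged_predict method yields the ensemble's prediction after each stage. The sketch below is minimal and uses synthetic data, not the molecular dataset. It tracks how test MSE changes with the number of trees, which can help choose n_estimators. In practice this choice should be made on a separate validation split rather than the test set:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

X_syn, y_syn = make_regression(n_samples=300, n_features=10, noise=10.0, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X_syn, y_syn, test_size=0.2, random_state=0)

gbr = GradientBoostingRegressor(n_estimators=200, random_state=0)
gbr.fit(X_tr, y_tr)

# Test MSE after 1, 2, ..., n_estimators trees
test_mse = np.array([mean_squared_error(y_te, p) for p in gbr.staged_predict(X_te)])
best_n = int(test_mse.argmin()) + 1
print("best number of trees on this split:", best_n, "MSE:", test_mse.min())
```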

from sklearn.ensemble import GradientBoostingRegressor

gbdt = GradientBoostingRegressor(random_state=0)

gbdt.fit(X_train, y_train)

y_pred = gbdt.predict(X_test)

print('Mean Absolute Error:', metrics.mean_absolute_error(y_test, y_pred))

print('Mean Squared Error:', metrics.mean_squared_error(y_test, y_pred))

print('Root Mean Squared Error:',np.sqrt(metrics.mean_squared_error(y_test, y_pred)))

Feature importance visualization
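The ranking step inside plot_feature_importance can also be written with a pandas Series, which keeps names and scores aligned. The sketch below uses made-up descriptor names and importance values and picks the top k with Series.nlargest:

```python
import numpy as np
import pandas as pd

def top_k_importance(feature_names, importances, k=20):
    """Return the k largest importances as a Series indexed by feature name."""
    s = pd.Series(np.asarray(importances, dtype=float), index=list(feature_names))
    return s.nlargest(k)

# Hypothetical descriptor names and importance scores
names = ["desc_a", "desc_b", "desc_c", "desc_d"]
scores = [0.10, 0.45, 0.05, 0.40]
top2 = top_k_importance(names, scores, k=2)
print(top2)
```

With a fitted model the call would look like top_k_importance(Molecular_Descriptor.columns[1:], gbdt.feature_importances_).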

import matplotlib.pyplot as plt

plt.rcParams["font.sans-serif"] = ["SimHei"] # Use SimHei so that Chinese labels display correctly

plt.rcParams["axes.unicode_minus"] = False # Prevent the minus sign "-" from being displayed as a box

plt.rcParams['savefig.dpi'] = 150 # Resolution of saved images

plt.rcParams['figure.dpi'] = 150 # Display resolution

def plot_feature_importance(dataset, model_bst):
    '''
    dataset : the dataset (DataFrame); its first column is not a feature
    model_bst : the trained model (must expose feature_importances_)
    '''
    list_feature_name = list(dataset.columns[1:])
    list_feature_importance = list(model_bst.feature_importances_)
    dataframe_feature_importance = pd.DataFrame({'feature_name': list_feature_name, 'importance': list_feature_importance})
    # Keep the 20 most important features
    dataframe_feature_importance20 = dataframe_feature_importance.sort_values(by='importance', ascending=False)[:20]
    print(dataframe_feature_importance20)
    x = range(len(dataframe_feature_importance20['feature_name']))
    plt.xticks(x, dataframe_feature_importance20['feature_name'], rotation=90, fontsize=8)
    plt.plot(x, dataframe_feature_importance20['importance'])
    plt.xlabel("Molecular descriptor")
    plt.ylabel("Importance")
    plt.title('Feature importance')
    plt.grid()
    # Save the figure
    # plt.savefig('feature_importance.png')
    plt.show()
    return dataframe_feature_importance20['feature_name']

if __name__ == '__main__':
    # Pass in the dataset DataFrame and the model whose feature importances are evaluated
    gbdt_name = plot_feature_importance(Molecular_Descriptor,gbdt)

Output:

Drawing a 3D scatter plot

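# z: the index of each of the 729 descriptors, so every point represents one feature

z = list(range(0,729))

plt.rcParams['savefig.dpi'] = 150 # Resolution of saved images

plt.rcParams['figure.dpi'] = 150 # Display resolution

plt.rcParams["font.sans-serif"] = ["SimHei"] # Use SimHei so that Chinese labels display correctly

plt.rcParams["axes.unicode_minus"] = False # Prevent the minus sign "-" from being displayed as a box

from mpl_toolkits.mplot3d import Axes3D

# x: random forest importances, y: GBDT importances

x = RFregressor.feature_importances_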

y = gbdt.feature_importances_

fig = plt.figure()

plt.subplots_adjust(right=0.8)

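ax = fig.add_subplot(111, projection='3d') # Create a 3D axes

ax.scatter(x,y,z,c='b',s=5,alpha=1)

# Set the tick label size for the x and y axes

plt.xticks(fontsize=7)

plt.yticks(fontsize=7)

# Set the tick label size for the x, y, and z axes at once

plt.tick_params(labelsize=7)

# Set the x, y, and z axis labels

plt.xlabel("RF importance",fontsize=8)

plt.ylabel("GBDT importance",fontsize=8)

ax.set_zlabel('Feature index',fontsize=8)

plt.savefig('3d_scatter.png')

Importing custom packages and auto-syncing Jupyter Notebook when the .py file changes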
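%autoreload 2 tells IPython to reload imported modules before executing each cell. Outside IPython, importlib.reload is a rough manual equivalent. The sketch below writes a throwaway module with the hypothetical name my_helpers to a temporary folder. It then edits the file and reloads the module so the change is picked up:

```python
import importlib
import sys
import tempfile
from pathlib import Path

def demo_reload():
    """Import a module, edit its source file, reload it, and return both results."""
    old_flag = sys.dont_write_bytecode
    sys.dont_write_bytecode = True  # no .pyc cache, so the edited source is always recompiled
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "my_helpers.py"
        path.write_text("def greet():\n    return 'v1'\n")
        sys.path.insert(0, tmp)
        try:
            importlib.invalidate_caches()  # let the import system see the new file
            my_helpers = importlib.import_module("my_helpers")
            before = my_helpers.greet()
            # Simulate editing the .py file
            path.write_text("def greet():\n    return 'v2, edited'\n")
            my_helpers = importlib.reload(my_helpers)  # what %autoreload does for you
            after = my_helpers.greet()
        finally:
            sys.path.remove(tmp)
            sys.modules.pop("my_helpers", None)
            sys.dont_write_bytecode = old_flag
    return before, after

print(demo_reload())
```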

%load_ext autoreload

%autoreload 2

Removing duplicate values in a DataFrame column and dropping the corresponding rows
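drop_duplicates with subset compares only the listed columns, and keep='first' keeps the first row for each value. The sketch below uses a toy frame in which one feature name appears twice. The names are borrowed from the descriptor list later in this post and the importance values are made up:

```python
import pandas as pd

df = pd.DataFrame({
    "feature_name": ["gmin", "hmax", "gmin", "fragC"],
    "importance": [0.30, 0.20, 0.25, 0.10],
})
# Keep the first row for each feature_name; later duplicates are dropped
dedup = df.drop_duplicates(subset=["feature_name"], keep="first")
print(dedup)
```

Sorting by importance in descending order first and then using keep='first' would keep the highest-scoring copy of each duplicate.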

# Keep the first row for each feature_name and drop the later duplicates

dataframe_feature_importance = dataframe_feature_importance.drop_duplicates(subset=['feature_name'], keep='first', inplace=False)

LASSO regression
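The L1 penalty in LASSO drives some coefficients exactly to zero, so a fitted model also acts as a feature selector. The sketch below is minimal and assumes synthetic data with 20 features, only 3 of which are informative. It reports the alpha_ chosen by cross-validation and the features that keep non-zero coefficients:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LassoCV

X_syn, y_syn = make_regression(n_samples=200, n_features=20, n_informative=3,
                               noise=1.0, random_state=0)
lasso = LassoCV(cv=5, random_state=0).fit(X_syn, y_syn)

selected = np.flatnonzero(lasso.coef_)  # indices of non-zero coefficients
print("alpha chosen by CV:", lasso.alpha_)
print("non-zero coefficients:", selected)
```

Because the penalty depends on the scale of each feature, descriptors are often standardized (for example with StandardScaler) before LASSO is fitted.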

from sklearn import linear_model

# LassoCV chooses the regularization strength alpha by cross-validation

model = linear_model.LassoCV()

model.fit(X_train, y_train)

y_predict = model.predict(X_test)

print('Mean Absolute Error:', metrics.mean_absolute_error(y_test, y_predict))

print('Mean Squared Error:', metrics.mean_squared_error(y_test, y_predict))

print('Root Mean Squared Error:',np.sqrt(metrics.mean_squared_error(y_test, y_predict)))

Plotting the regression error
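For regressors, model.score(X_test, y_test) is the coefficient of determination R² = 1 − SS_res/SS_tot, not an accuracy. This is the value printed and drawn on the plot in the code that follows. The sketch below uses made-up numbers to compute R² by hand and checks the result against sklearn.metrics.r2_score:

```python
import numpy as np
from sklearn.metrics import r2_score

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)         # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # total sum of squares
    return 1.0 - ss_res / ss_tot

y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.5, 7.0, 8.0]
print(r_squared(y_true, y_pred), r2_score(y_true, y_pred))
```

Here SS_tot = 20 and SS_res = 1.5, so R² = 0.925. A model that always predicts the mean scores 0, and a worse model can score below 0.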

# One x position per test sample

x_t = np.arange(len(y_test))

plt.plot(x_t, y_test, marker='.', label="origin data")

# plt.xticks([])

plt.plot(x_t, y_predict, 'r-', marker='.', label="predict", lw=1)

plt.xlabel('Sample index')

plt.ylabel('Prediction result')

# plt.figure(figsize=(10,100))

plt.legend(labels=['test','predict'],loc='best')

# plt.xticks([])

# R^2 of the model on the test set

score = model.score(X_test,y_test)

print(score)

# The text position (140, 3) is in data coordinates and should be adjusted to the data

plt.text(140, 3, 'score=%.4f' % score, fontdict={'size': 15, 'color': 'red'})

plt.savefig('Lasso.png')

Output:

AdaBoost regression

from sklearn.ensemble import AdaBoostRegressor

from sklearn.tree import DecisionTreeRegressor

# Boosted regression trees of depth 3

clf = AdaBoostRegressor(DecisionTreeRegressor(max_depth=3),n_estimators=5000, random_state=123)

clf.fit(X_train,y_train)

y_predict = clf.predict(X_test)

print('Mean Absolute Error:', metrics.mean_absolute_error(y_test, y_predict))

print('Mean Squared Error:', metrics.mean_squared_error(y_test, y_predict))

print('Root Mean Squared Error:',np.sqrt(metrics.mean_squared_error(y_test, y_predict)))

LightGBM regression

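import lightgbm as lgb

clf = lgb.LGBMRegressor(

boosting_type='gbdt',

random_state=2019,

objective='regression')

# Train the model and log the L2 (MSE) metric on the test set every 50 rounds
# (LightGBM 4.x removed the verbose argument of fit(); logging goes through callbacks)

clf.fit(X_train, y_train, eval_set=[(X_test, y_test)], eval_metric='l2', callbacks=[lgb.log_evaluation(period=50)])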

y_predict = clf.predict(X_test)

print('Mean Absolute Error:', metrics.mean_absolute_error(y_test, y_predict))

print('Mean Squared Error:', metrics.mean_squared_error(y_test, y_predict))

print('Root Mean Squared Error:',np.sqrt(metrics.mean_squared_error(y_test, y_predict)))

XGBoost

import xgboost as xgb

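# verbosity replaces the deprecated silent argument; objective='reg:gamma' requires strictly positive targets

clf = xgb.XGBRegressor(max_depth=5, learning_rate=0.1, n_estimators=5000, verbosity=1, objective='reg:gamma')

# Train the model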

clf.fit(X=X_train, y=y_train)

y_predict = clf.predict(X_test)

print('Mean Absolute Error:', metrics.mean_absolute_error(y_test, y_predict))

print('Mean Squared Error:', metrics.mean_squared_error(y_test, y_predict))

print('Root Mean Squared Error:',np.sqrt(metrics.mean_squared_error(y_test, y_predict)))

Plotting learning curves
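learning_curve returns one row of scores per training-set size and one column per CV split. That is why plot_learning_curve averages over axis=1. The sketch below is quick and uses Ridge on synthetic data, chosen only because it is fast. It uses the same ShuffleSplit settings as the main code and checks those shapes:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import ShuffleSplit, learning_curve

X_syn, y_syn = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)
cv = ShuffleSplit(n_splits=10, test_size=0.2, random_state=0)  # 160 train / 40 validation rows

sizes, train_scores, val_scores = learning_curve(
    Ridge(), X_syn, y_syn, cv=cv, train_sizes=np.linspace(0.1, 1.0, 5))

print(sizes)                     # absolute training-set sizes
print(train_scores.shape)        # (n_sizes, n_splits)
print(val_scores.mean(axis=1))   # mean R^2 per training-set size
```

A persistent gap between the training and validation curves suggests overfitting. Two curves that converge at a low score suggest underfitting.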

from sklearn.model_selection import learning_curve

from sklearn.model_selection import ShuffleSplit

def plot_learning_curve(estimator, title, X, y, ylim=None, cv=None, n_jobs=1, train_sizes=np.linspace(.1, 1.0, 5)):
    plt.figure()
    plt.title(title)
    if ylim is not None:
        plt.ylim(*ylim)
    plt.xlabel("Training examples")
    plt.ylabel("Score")
    # Scores per training-set size (rows) and per CV split (columns)
    train_sizes, train_scores, test_scores = learning_curve(estimator, X, y, cv=cv, n_jobs=n_jobs, train_sizes=train_sizes)
    train_scores_mean = np.mean(train_scores, axis=1)
    train_scores_std = np.std(train_scores, axis=1)
    test_scores_mean = np.mean(test_scores, axis=1)
    test_scores_std = np.std(test_scores, axis=1)
    plt.grid()
    # Shaded bands: mean +/- one standard deviation across CV splits
    plt.fill_between(train_sizes, train_scores_mean - train_scores_std, train_scores_mean + train_scores_std, alpha=0.1, color="r")
    plt.fill_between(train_sizes, test_scores_mean - test_scores_std, test_scores_mean + test_scores_std, alpha=0.1, color="g")
    plt.plot(train_sizes, train_scores_mean, 'o-', color="r", label="Training score")
    plt.plot(train_sizes, test_scores_mean, 'o-', color="g", label="Cross-validation score")
    plt.legend(loc="best")
    return plt

if __name__ == '__main__':
    title = "Learning Curves"
    # Cross-validation with 10 random splits to get smoother mean test and train
    # score curves, each time with 20% of the data randomly selected as the validation set.
    cv = ShuffleSplit(n_splits=10, test_size=0.2, random_state=0)
    estimator = lgb.LGBMRegressor(learning_rate=0.001, max_depth=-1, n_estimators=10000, boosting_type='gbdt', random_state=2019, objective='regression')
    # Arguments: model, plot title, features, target, CV splitter
    # X, Y: the feature matrix and target prepared earlier
    p = plot_learning_curve(estimator, title, X, Y, cv=cv, n_jobs=4)
    p.savefig('LearnCurves.png')

Output:

Plotting the distribution of DataFrame data
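The normalization step in this section maps each column to [0, 1] with (t - min)/(max - min). The sketch below uses a toy frame with hypothetical column names. It shows the result and one pitfall: a constant column has max equal to min, so it becomes all NaN (0/0):

```python
import numpy as np
import pandas as pd

def min_max(df: pd.DataFrame) -> pd.DataFrame:
    """Scale each column to [0, 1]; constant columns become NaN (0/0)."""
    return (df - df.min()) / (df.max() - df.min())

toy = pd.DataFrame({"a": [1.0, 3.0, 5.0],
                    "b": [10.0, 0.0, 5.0],
                    "const": [2.0, 2.0, 2.0]})
scaled = min_max(toy)
print(scaled)
```

Constant descriptors carry no information for the plot and can be dropped beforehand.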

# Descriptor columns to plot

name = ['gmin', 'MDEC-22', 'minaaN', 'maxHBint10', 'minHBint10', 'maxdO','C2SP1', 'BCUTw-1h', 'BCUTp-1l', 'MDEN-33', 'VC-4', 'nAtomLAC','SHBint10', 'minHBint4', 'C2SP2', 'MDEC-24', 'hmax', 'SHBint9','fragC', 'LipinskiFailures']

# Extract the specified columns

t = Molecular_Descriptor[name]

# Min-max normalization: scale every column to [0, 1]

t = (t-t.min())/(t.max()-t.min())

t.plot(alpha=0.8)

# Stretch the x-axis horizontally

N=100

plt.legend(loc=2, bbox_to_anchor=(1.05,1.0),borderaxespad= 0)

# Change the x-axis spacing

plt.gca().margins(x=0)

plt.gcf().canvas.draw()

tl = plt.gca().get_xticklabels()

# maxsize = max([t.get_window_extent().width for t in tl])

maxsize = 30

m = 0.2 # margin in inches

s = maxsize / plt.gcf().dpi * N + 2 * m

margin = m / plt.gcf().get_size_inches()[0]

plt.gcf().subplots_adjust(left=margin, right=1. - margin)

plt.gcf().set_size_inches(s, plt.gcf().get_size_inches()[1])

# Tidy up the layout

plt.tight_layout()

plt.savefig("data_distribution.png")

Output:

SVM classification
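With pos_label=1, recall is TP/(TP+FN) and precision is TP/(TP+FP), both computed for class 1. F1 is their harmonic mean. The sketch below uses made-up 0/1 labels, derives all three metrics from the confusion matrix, and checks them against sklearn.metrics:

```python
import numpy as np
from sklearn import metrics

y_true = np.array([1, 1, 1, 1, 0, 0, 0, 0, 0, 0])
y_hat  = np.array([1, 1, 0, 0, 1, 0, 0, 0, 0, 0])

# For labels [0, 1], ravel() returns tn, fp, fn, tp
tn, fp, fn, tp = metrics.confusion_matrix(y_true, y_hat, labels=[0, 1]).ravel()
recall = tp / (tp + fn)
precision = tp / (tp + fp)
f1 = 2 * precision * recall / (precision + recall)
print(tp, fp, fn, tn, recall, precision, f1)
```

Here recall is 2/4 = 0.5 and precision is 2/3, so F1 = 4/7.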

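from sklearn.svm import SVC

from sklearn import metrics

# Define the SVM classifier (in this section X_train/y_train hold binary 0/1 class labels)

clf = SVC()

# Train the model

clf.fit(X_train,y_train)

# Predict on the test set

y_pred = clf.predict(X_test)

# Evaluate the model

print('Accuracy=%.4f'%metrics.accuracy_score(y_test,y_pred))

print('Recall=%.4f'%metrics.recall_score(y_test, y_pred, pos_label=1))

print('Precision=%.4f'%metrics.precision_score(y_test, y_pred, pos_label=1) )

# Binary F1 for class 1, consistent with recall and precision above (pos_label is ignored when average='weighted')

print('F1=%.4f'%metrics.f1_score(y_test, y_pred, pos_label=1) )

Optimizing the SVM with Bayesian optimization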
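The function maximized in this section is the mean 4-fold cross-validated ROC AUC of an SVC, with C and gamma searched on a log10 scale. The sketch below shows that objective without the bayes_opt dependency, using synthetic data from make_classification. It swaps in plain random search over the same exponent ranges as pbounds. This is not Bayesian optimization, which picks each new point from a surrogate model of the previous results:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC

X_syn, y_syn = make_classification(n_samples=200, n_features=10, random_state=0)

def svc_auc(exp_c, exp_gamma):
    """Mean 4-fold ROC AUC of an SVC with C=10**exp_c and gamma=10**exp_gamma."""
    model = SVC(C=10 ** exp_c, gamma=10 ** exp_gamma)
    return cross_val_score(model, X_syn, y_syn, scoring="roc_auc", cv=4).mean()

rng = np.random.default_rng(1234)
bounds = {"expC": (-3, 4), "expGamma": (-4, -1)}  # same ranges as pbounds
best = None
for _ in range(15):
    # Sample exponents uniformly, i.e. C and gamma log-uniformly
    params = {k: rng.uniform(lo, hi) for k, (lo, hi) in bounds.items()}
    score = svc_auc(params["expC"], params["expGamma"])
    if best is None or score > best[0]:
        best = (score, params)
print("best mean AUC:", round(best[0], 4), "at", best[1])
```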

from sklearn.model_selection import cross_val_score

from sklearn.svm import SVC

from bayes_opt import BayesianOptimization

def svc_cv(C, gamma, X_train, y_train):
    """SVC cross validation.

    This function will instantiate a SVC classifier with parameters C and
    gamma. Combined with data and targets this will in turn be used to perform
    cross validation. The result of cross validation is returned.

    Our goal is to find combinations of C and gamma that maximize the roc_auc
    metric.
    """
    # Set up the classifier
    estimator = SVC(C=C, gamma=gamma, random_state=2)
    # Cross-validation
    cval = cross_val_score(estimator, X_train, y_train, scoring='roc_auc', cv=4)
    return cval.mean()

def optimize_svc(X_train, y_train):
    """Apply Bayesian Optimization to SVC parameters."""
    def svc_crossval(expC, expGamma):
        """Wrapper of SVC cross validation.

        Notice how we transform between regular and log scale. While this
        is not technically necessary, it greatly improves the performance
        of the optimizer.
        """
        C = 10 ** expC
        gamma = 10 ** expGamma
        return svc_cv(C=C, gamma=gamma, X_train=X_train, y_train=y_train)

    optimizer = BayesianOptimization(
        f=svc_crossval,
        # Set the hyperparameter search ranges
        pbounds={"expC": (-3, 4), "expGamma": (-4, -1)},
        random_state=1234,
        verbose=2
    )
    optimizer.maximize(n_iter=20)
    print("Final result:", optimizer.max)

if __name__ == '__main__':
    # Start the hyperparameter search
    optimize_svc(X_train, y_train)

Output:

| iter | target | expC | expGamma |

-------------------------------------------------

| 1 | 0.8239 | -2.042 | -2.134 |

| 2 | 0.8973 | -0.8114 | -1.644 |

| 3 | 0.8791 | 0.8999 | -3.182 |

| 4 | 0.8635 | -1.618 | -1.594 |

| 5 | 0.9104 | 1.791 | -1.372 |

| 6 | 0.9213 | 1.099 | -1.502 |

| 7 | 0.9165 | 0.2084 | -1.0 |

| 8 | 0.8727 | 2.0 | -4.0 |

| 9 | 0.9117 | 1.131 | -1.0 |

| 10 | 0.9241 | 0.3228 | -1.88 |

| 11 | 0.9346 | 2.0 | -2.322 |

| 12 | 0.9335 | 1.429 | -2.239 |

| 13 | 0.7927 | -3.0 | -4.0 |

| 14 | 0.927 | 2.0 | -2.715 |

| 15 | 0.9354 | 1.742 | -2.249 |

=================================================

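Final result: {'target': 0.9353828944247531, 'params': {'expC': 1.7417094883510253, 'expGamma': -2.248984327197053}}

Here iter is the iteration number and target is the score the model achieved, that is, the mean cross-validated ROC AUC (higher is better). expC and expGamma are the parameters tuned by Bayesian optimization: the base-10 exponents of C and gamma.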

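Follow-up:

How do you use the result? Retrain the classifier with the hyperparameters found, 'params': {'expC': 1.7417094883510253, 'expGamma': -2.248984327197053}. Because these are exponents, set C = 10**expC and gamma = 10**expGamma: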

clf = SVC(C=10**1.74,gamma=10**(-2.248))

clf.fit(X_train,y_train)

y_pred = clf.predict(X_test)

Plotting the ROC curve
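roc_curve needs a continuous score for each sample, not hard 0/1 predictions. For an SVC that score comes from decision_function. With hard labels there is only one real threshold, so the "curve" has just three points. The sketch below uses made-up labels and scores to illustrate the difference:

```python
import numpy as np
from sklearn.metrics import auc, roc_auc_score, roc_curve

y_true = np.array([0, 0, 0, 0, 1, 1, 1, 1])
scores = np.array([0.1, 0.4, 0.35, 0.8, 0.7, 0.45, 0.9, 0.65])  # hypothetical classifier scores
hard = (scores >= 0.5).astype(int)                              # thresholded 0/1 predictions

fpr_h, tpr_h, _ = roc_curve(y_true, hard, pos_label=1)
fpr_s, tpr_s, _ = roc_curve(y_true, scores, pos_label=1)
print("points from hard labels:", len(fpr_h))
print("points from scores:", len(fpr_s))
print("AUC from scores:", auc(fpr_s, tpr_s))
```

The AUC from scores equals the fraction of (positive, negative) pairs that are ranked correctly: 13 of 16 here.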

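import matplotlib.pyplot as plt

from sklearn.metrics import roc_curve, auc

plt.rcParams['savefig.dpi'] = 150 # Resolution of saved images

plt.rcParams['figure.dpi'] = 150 # Display resolution

# Pass the true labels and a continuous score (the SVM decision function); hard 0/1 predictions would give only a 3-point curve

y_score = clf.decision_function(X_test)

fpr, tpr, thresholds = roc_curve(y_test, y_score, pos_label=1)

for i, value in enumerate(thresholds):
    print("%f %f %f" % (fpr[i], tpr[i], value))

roc_auc = auc(fpr, tpr)

plt.plot(fpr, tpr, 'r--', label='ROC (area = {0:.2f})'.format(roc_auc), lw=2)

plt.xlim([-0.05, 1.05]) # Set the x/y limits slightly beyond [0, 1] so the curve does not touch the edges and the whole plot is easier to see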

plt.ylim([-0.05, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate') # Chinese labels also work, but a font that supports them must be configured

plt.title('ROC Curve')

plt.legend(loc="lower right")

plt.savefig('Caco-2_classification_ROC.png')

plt.show()

print(roc_auc)

Output:

PCA dimensionality reduction
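Instead of fixing n_components=50, the explained variance can guide the choice. The sketch below assumes synthetic data in which 50 features are generated from 3 hidden factors plus small noise. A float n_components asks PCA to keep just enough components to explain at least that share of the variance:

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)
# Synthetic data: 50 features driven by 3 latent factors plus small noise
latent = rng.normal(size=(500, 3))
X_syn = latent @ rng.normal(size=(3, 50)) + 0.05 * rng.normal(size=(500, 50))

# A float in (0, 1) keeps the fewest components explaining more than that share of variance
pca95 = PCA(n_components=0.95, svd_solver="full").fit(X_syn)
print("components kept:", pca95.n_components_)
print("cumulative explained variance:", pca95.explained_variance_ratio_.sum())
```

On the real descriptors, plotting np.cumsum(PCA().fit(X).explained_variance_ratio_) is a common way to see how many components are worth keeping.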

from sklearn.decomposition import PCA

# Define the PCA transformer; n_components is the number of dimensions to reduce to

pca = PCA(n_components=50)

# X.shape = (1974,729)

# Transform the data: (1974,729) -> (1974,50)

new_X = pca.fit_transform(X)

# new_X.shape = (1974,50)

Visualizing the PCA reduction

# plt.rcParams['savefig.dpi'] = 150 # Resolution of saved images

# plt.rcParams['figure.dpi'] = 150 # Display resolution

plt.rcParams["font.sans-serif"] = ["SimHei"] # Use SimHei so that Chinese labels display correctly

plt.rcParams["axes.unicode_minus"] = False # Prevent the minus sign "-" from being displayed as a box

from mpl_toolkits.mplot3d import Axes3D

# Reduce to 3 dimensions

pca = PCA(n_components=3)

pca_test = pca.fit_transform(X_test)

pca_test.shape

fig = plt.figure()

plt.subplots_adjust(right=0.8)

ax = fig.add_subplot(111, projection='3d') # Create a 3D axes

# Split the test samples by predicted class (0 or 1)

label0 = pca_test[y_pred==0]

label1 = pca_test[y_pred==1]

# Plot each class in its own color

ax.scatter(label0[:,0],label0[:,1],label0[:,2],label=0,alpha=0.8)

ax.scatter(label1[:,0],label1[:,1],label1[:,2],label=1,alpha=0.8)

plt.legend()

plt.savefig('Caco2_classification_3D.png')

Output:

Finding extrema
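Simple box constraints like l_x_min <= x <= l_x_max can be passed to minimize through its bounds argument instead of as eight separate 'ineq' constraints. The sketch below assumes hypothetical coefficients and an intercept for a four-input linear model. For a linear objective the maximum lies at a corner of the box: each variable goes to its upper bound if its coefficient is positive and to its lower bound if it is negative:

```python
import numpy as np
from scipy.optimize import minimize

# Hypothetical linear model y = coef . x + intercept (stand-ins for a fitted model's coefficients)
coef = np.array([2.0, -1.0, 0.5, 3.0])
intercept = 10.0

# Same variable ranges as l_x_min / l_x_max in the code of this section
l_x_min = [0, 1, 2, 3]
l_x_max = [4, 5, 6, 7]
bounds = list(zip(l_x_min, l_x_max))  # [(0, 4), (1, 5), (2, 6), (3, 7)]

def neg_objective(x):
    # minimize() only minimizes, so negate the function to find its maximum
    return -(coef @ x + intercept)

x0 = (np.array(l_x_min) + np.array(l_x_max)) / 2.0  # start in the middle of the box
res = minimize(neg_objective, x0, method="SLSQP", bounds=bounds)
print(res.success, res.x, -res.fun)
```

Here the maximum is at x = [4, 1, 6, 7] with value 41. For a purely linear objective, scipy.optimize.linprog is an alternative.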

# coding=utf-8

from scipy.optimize import minimize

import numpy as np

# Set the variable ranges (constraints)

l_x_min = [0,1,2,3]

l_x_max = [4,5,6,7]

# coef (length 4) and intercept: coefficients and intercept of a fitted linear model with four inputs, defined elsewhere

def fun():
    # minimize can only find minima; to find a maximum, put a minus sign in front of the function. This example finds a maximum.
    v = lambda x: -1*(coef[0]*x[0]+coef[1]*x[1]+coef[2]*x[2]+coef[3]*x[3]+intercept)
    return v

def con():
    # Constraints come in two types, 'eq' and 'ineq':
    # 'eq' means the function result equals 0; 'ineq' means the expression is >= 0.
    # {'type': 'ineq', 'fun': lambda x: x[0] - l_x_min[0]} means x[0] - l_x_min[0] >= 0
    cons = ({'type': 'ineq', 'fun': lambda x: x[0] - l_x_min[0]},
            {'type': 'ineq', 'fun': lambda x: -x[0] + l_x_max[0]},
            {'type': 'ineq', 'fun': lambda x: x[1] - l_x_min[1]},
            {'type': 'ineq', 'fun': lambda x: -x[1] + l_x_max[1]},
            {'type': 'ineq', 'fun': lambda x: x[2] - l_x_min[2]},
            {'type': 'ineq', 'fun': lambda x: -x[2] + l_x_max[2]},
            {'type': 'ineq', 'fun': lambda x: x[3] - l_x_min[3]},
            {'type': 'ineq', 'fun': lambda x: -x[3] + l_x_max[3]})
    return cons

if __name__ == "__main__":
    # Build the constraints
    cons = con()
    # Set the initial guess
    x0 = np.random.rand(4)
    res = minimize(fun(), x0, method='SLSQP', constraints=cons)
    print(res.fun)
    print(res.success)
    print(res.x)

Output explanation:

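# Example:

[output]:

-1114.4862509294192 # The function was negated at the start, so multiply by -1 again: the maximum is 1114.4862509294192

True # the extremum was found successfully

[-1.90754988e-10 6.36254335e+00 -1.25920646e-10 1.90480000e-01] # the x values at which this extremum is attained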