文章目录

- 前言

- util实验sleep

- util实验pingpong

- util实验primes

- xv6初始化代码分析

- syscall实验trace

- syscall实验sysinfo

- xv6内存学习笔记

- pgtbl实验Print a page table

- pgtbl实验A kernel page table per process

- xv6 trap学习

- trap实验Backtrace

- trap实验Alarm

- lazy实验Lazy allocation

- xv6中断和设备驱动学习

- net驱动实验

- xv6进程调度学习

- Multithreading实验Uthread: switching between threads

- Multithreading实验Using threads

- Multithreading实验Barrier

- xv6文件系统学习

- fs实验Large files

前言

记录做实验时对xv6 2020操作系统的观察和理解,环境搭建Ubuntu20.04,交叉编译工具链直接apt安装就行,自己编译反而会因为选择的qemu和编译工具版本不匹配导致无法启动xv6。

代码仓库网址:https://github.com/cmyhhhh/xv6-labs-2020/

util实验sleep

在Makefile的UPROGS里加入$U/_sleep\,用于后续清理编译后的文件。

系统调用接口xv6实验里已经申明好,user/user.h文件定义了系统调用和工具接口,usys.pl用于生成usys.S文件,usys.S文件用于调用os内部实现的系统调用,例如在用户态调用sleep就执行ecall SYS_sleep。

在RISC-V架构中,ecall(Environment Call)指令用于实现从低特权级代码(如用户模式)向高特权级代码(如内核模式)的调用。当ecall指令执行时,会设置scause寄存器(记录异常原因)和sepc寄存器(保存发生异常时的指令地址)。操作系统的异常处理程序会根据a7寄存器的值来确定具体的系统调用类型,并执行相应的系统调用,跳转到stvec寄存器(保存S模式下的trap处理程序的地址)指向的地址,从而进入异常处理程序。a0-a5 寄存器依次用来表示 Syscall 编程接口中定义的参数,a0 寄存器存放 Syscall 的返回值。

调用的sys_sleep函数在kernel/sysproc.c中,sys_sleep会调用os实现的sleep函数:

uint64

sys_sleep(void)

{int n;uint ticks0;if(argint(0, &n) < 0) // 从trapframe中获取参数return -1;acquire(&tickslock); // 获取ticks锁ticks0 = ticks;while(ticks - ticks0 < n){ // 当ticks的差值小于指定睡眠ticks数时一直睡眠if(myproc()->killed){ // 调用sleep的进程被kill,则直接终止release(&tickslock);return -1;}sleep(&ticks, &tickslock); // 调用os内部实现的sleep函数}release(&tickslock);return 0;

}

os实现的sleep函数在kernel/proc.c中,参数chan是ticks,表明是在ticks上进行休眠,睡眠一个tick后被唤醒:

// Atomically release lock and sleep on chan.

// Reacquires lock when awakened.

void

sleep(void *chan, struct spinlock *lk)

{struct proc *p = myproc();// Must acquire p->lock in order to// change p->state and then call sched.// Once we hold p->lock, we can be// guaranteed that we won't miss any wakeup// (wakeup locks p->lock),// so it's okay to release lk.if(lk != &p->lock){ //DOC: sleeplock0acquire(&p->lock); //DOC: sleeplock1release(lk);}// Go to sleep.p->chan = chan;p->state = SLEEPING;sched();// Tidy up.p->chan = 0;// Reacquire original lock.if(lk != &p->lock){release(&p->lock);acquire(lk);}

}

因此实现的sleep 1是睡眠一个tick:

#include "kernel/types.h"

#include "kernel/stat.h"

#include "user/user.h"int

main(int argc, char *argv[])

{if(argc != 2){fprintf(2, "usage: sleep [ticks]\n");exit(1);}int time = atoi(argv[1]);sleep(time);exit(0);

}util实验pingpong

需要用到pipe,sys_pipe实现如下:

uint64

sys_pipe(void)

{uint64 fdarray; // user pointer to array of two integersstruct file *rf, *wf;int fd0, fd1;struct proc *p = myproc();if(argaddr(0, &fdarray) < 0)return -1;// pipealloc函数会分配两个file结构体,分别用于管道的读端和写端if(pipealloc(&rf, &wf) < 0) return -1;fd0 = -1;// 为管道的读端和写端分配文件描述符if((fd0 = fdalloc(rf)) < 0 || (fd1 = fdalloc(wf)) < 0){ if(fd0 >= 0)p->ofile[fd0] = 0;fileclose(rf);fileclose(wf);return -1;}// 将文件描述符复制到用户空间if(copyout(p->pagetable, fdarray, (char*)&fd0, sizeof(fd0)) < 0 ||copyout(p->pagetable, fdarray+sizeof(fd0), (char *)&fd1, sizeof(fd1)) < 0){p->ofile[fd0] = 0;p->ofile[fd1] = 0;fileclose(rf);fileclose(wf);return -1;}return 0;

}

sys_fork代码如下,创建一个新进程,并把父进程的一些信息赋值给子进程,将子进程的parent指针指向父进程:

uint64

sys_fork(void)

{return fork();

}// Create a new process, copying the parent.

// Sets up child kernel stack to return as if from fork() system call.

int

fork(void)

{int i, pid;struct proc *np;struct proc *p = myproc();// Allocate process.if((np = allocproc()) == 0){return -1;}// Copy user memory from parent to child.if(uvmcopy(p->pagetable, np->pagetable, p->sz) < 0){freeproc(np);release(&np->lock);return -1;}np->sz = p->sz;np->parent = p;// copy saved user registers.*(np->trapframe) = *(p->trapframe);// Cause fork to return 0 in the child.np->trapframe->a0 = 0;// increment reference counts on open file descriptors.for(i = 0; i < NOFILE; i++)if(p->ofile[i])np->ofile[i] = filedup(p->ofile[i]);np->cwd = idup(p->cwd);safestrcpy(np->name, p->name, sizeof(p->name));pid = np->pid;np->state = RUNNABLE;release(&np->lock);return pid;

}

最终代码如下:

#include "kernel/types.h"

#include "kernel/stat.h"

#include "user/user.h"int

main(int argc, char *argv[])

{int pong_fd[2], ping_fd[2];char buf[4];if(pipe(pong_fd) < 0 || pipe(ping_fd) < 0){fprintf(2, "pipe error\n");exit(1);}if(fork() == 0){read(pong_fd[0], buf, 4);printf("%d: received %s\n", getpid(), buf);write(ping_fd[1], "ping", 4);}else {write(pong_fd[1], "pong", 4);// wait(0);read(ping_fd[0], buf, 4);printf("%d: received %s\n", getpid(), buf);}exit(0);

}util实验primes

主要是要及时关闭不用的pipe,在写完之后关闭fd[1],读完之后关闭fd[0],代码如下:

#include "kernel/types.h"

#include "kernel/stat.h"

#include "user/user.h"void prime(int fd[2]){int num, prime_num;read(fd[0],&prime_num,sizeof(prime_num));printf("prime %d\n", prime_num);int buf[36],cnt=0;while(read(fd[0],&num,sizeof(num))){if(num%prime_num!=0){buf[cnt++]=num;}}close(fd[0]);if(cnt==0){return;}int fd_next[2];pipe(fd_next);for(int i=0;i<cnt;i++){write(fd_next[1],&buf[i],sizeof(buf[i]));}close(fd_next[1]);if(fork()==0){prime(fd_next);}wait(0);close(fd_next[0]);return;

}int

main(int argc, char *argv[])

{int fd[2];pipe(fd);for(int i=2;i<=35;i++){write(fd[1],&i,sizeof(i));}close(fd[1]);prime(fd);exit(0);

}xv6初始化代码分析

bootloader将kernel加载到内存中,从entry.S文件开始执行,entry.S文件设置sp指针并调用start()进行初始化。

// entry.S needs one stack per CPU.

__attribute__ ((aligned (16))) char stack0[4096 * NCPU];// entry.S jumps here in machine mode on stack0.

void

start()

{// set M Previous Privilege mode to Supervisor, for mret.unsigned long x = r_mstatus();x &= ~MSTATUS_MPP_MASK;x |= MSTATUS_MPP_S;w_mstatus(x);// set M Exception Program Counter to main, for mret.// requires gcc -mcmodel=medanyw_mepc((uint64)main);// disable paging for now.w_satp(0);// delegate all interrupts and exceptions to supervisor mode.w_medeleg(0xffff);w_mideleg(0xffff);w_sie(r_sie() | SIE_SEIE | SIE_STIE | SIE_SSIE);// configure Physical Memory Protection to give supervisor mode// access to all of physical memory.w_pmpaddr0(0x3fffffffffffffull);w_pmpcfg0(0xf);// ask for clock interrupts.timerinit();// keep each CPU's hartid in its tp register, for cpuid().int id = r_mhartid();w_tp(id);// switch to supervisor mode and jump to main().asm volatile("mret");

}

mstatus寄存器如下,WPRI是保留位。MPP是机器模式保留特权状态位,MPP为b00时,表示处理器进入异常服务程序前处于U模式;MPP为b11时,表示处理器进入异常服务程序前处于M模式;MPP为b01时,表示处理器进入异常服务程序前处于S模式。:

MEPC(Machine Exception Program Counter)用于存储程序从异常服务程序退出时要返回的程序计数器值(即 PC 值),即返回地址设置为 main 函数的地址。

satp(Supervisor Address Translation and Protection)寄存器用于管理地址转换和保护机制。

medeleg(Machine exception delegation register)将特定的异常(exceptions)从机器模式(M-mode)委托(delegate)给更低特权级别的模式,通常是 supervisor mode(S-mode)。同理,mideleg(Machine Interrupt delegation register)是将特定中断进行委托。

SIE(Supervisor Interrupt Enable)寄存器用于控制和查询处于Supervisor Mode(S-mode)下的中断使能状态,包括软件中断(SSIE)、定时器中断(STIE)和外部中断(SEIE)。SIP(Supervisor Interrupt Pending)寄存器记录了哪些中断是待处理的,而SIE寄存器决定哪些中断是被使能的。

每一个 PMP 项定义了一个访问控制区域。一个 PMP 项包括一个 8 位控制寄存器和一个地址寄存器,仅可在 M 态下访问,处理器支持最多 64 个 PMP 项。PMP 访问控制区域的大小是可设置的,最小可支持 4 字节大小的区域。pmpaddr寄存器是物理内存保护(Physical Memory Protection, PMP)单元的一部分,用于定义物理内存区域的访问权限。在 RV64 中,寄存器存储 56 位物理地址的第 2 至第 55 位。pmpcfg(Physical Memory Protection Configuration)寄存器用于配置物理内存区域的访问权限和匹配模式。结合pmpcfg0配置寄存器,pmpaddr0寄存器可以定义一个物理内存区域的起始地址。这个区域的大小和对齐方式由pmpcfg0中的地址匹配模式(例如NAPOT、NA4、TOR)决定。具体计算细节参考这个网址。

MIE(Machine Interrupt Enable)寄存器用于管理和控制机器模式(M-mode)下的中断使能状态。

riscv提供两个 64 位的 M 模式寄存器 mtime 和 mtimecmp,并通过 MMIO 方式映射到地址空间。mtime 需要以固定的频率递增,并在发生溢出时回绕。当 mtime 大于或等于 mtimecmp 时,由核内中断控制器 (CLINT, Core-Local Interrupt Controller) 产生 timer 中断。中断的使能由 mie 寄存器中的 MTIE 和 STIE 位控制,mip 中的 MPIE 和 SPIE 则指示了 timer 中断是否处于 pending。RISC-V 还定义了一个 64 位非特权 CSR 寄存器 time,time 计数器是前面提到的 mtime 的只读映射。

在增加虚拟化扩展以后,特权模式会发生一定变化。相应地,timer 支持也进行了如下扩展:htimedelta 和 htimedeltah 是 Hypervisor 扩展里的 CSR,在 VS/VU 模式下读取 time 结果是真正的 host 中的 time 加上 htimedelta。同样的,对于 RV32 htimedelta 保存了低 32 位,高 32 位保存在 htimedeltah。

由于 mtimecmp 只能在 M 模式下访问,对于 S/HS 模式下的内核和 VU/VS 模式下的虚拟机需要通过 SBI 才能访问,会造成较大的中断延迟和性能开销。为了解决这一问题,RISC-V 新增了 sstc 拓展支持。sstc 扩展为 HS 模式和 VS 模式分别新增了 stimecmp 和 vstimecmp 寄存器,当 t i m e > = s t i m e c m p time >= stimecmp time>=stimecmp (HS)或 t i m e + h t i m e d e l t a > = v s t i m e c m p time+htimedelta >= vstimecmp time+htimedelta>=vstimecmp (VS)时会产生 timer 中断,不再需要通过 SBI 陷入其他模式。资料来源。

mcounteren寄存器是机器模式(M-mode)下的计数器使能寄存器,用于控制硬件性能监视器(Hardware Performance Monitor, HPM)计数器在机器模式下的可用性。mcounteren寄存器允许机器模式决定哪些性能计数器可以被更低特权级别的模式(如S模式或U模式)访问。

mhartid寄存器是用于标识当前硬件线程(Hardware Thread,简称hart)。每个RISC-V核心可以有一个或多个硬件线程,mhartid寄存器提供了一个唯一的标识符来区分它们。每个核心可以独立执行指令流,相当于多个处理器工作在一起。每个核心通常被视为一个硬件线程。比如 双核处理器(有两个独立的核心,通常有两个硬件线程)。

main函数如下,console初始化uart接口作为命令行输出口:

#include "types.h"

#include "param.h"

#include "memlayout.h"

#include "riscv.h"

#include "defs.h"volatile static int started = 0;// start() jumps here in supervisor mode on all CPUs.

void

main()

{if(cpuid() == 0){consoleinit();printfinit();printf("\n");printf("xv6 kernel is booting\n");printf("\n");kinit(); // physical page allocatorkvminit(); // create kernel page tablekvminithart(); // turn on pagingprocinit(); // process tabletrapinit(); // trap vectorstrapinithart(); // install kernel trap vectorplicinit(); // set up interrupt controllerplicinithart(); // ask PLIC for device interruptsbinit(); // buffer cacheiinit(); // inode tablefileinit(); // file tablevirtio_disk_init(); // emulated hard diskuserinit(); // first user process__sync_synchronize();started = 1;} else {while(started == 0);__sync_synchronize();printf("hart %d starting\n", cpuid());kvminithart(); // turn on pagingtrapinithart(); // install kernel trap vectorplicinithart(); // ask PLIC for device interrupts}scheduler();

}

syscall实验trace

关于使用系统调用的过程在sleep实验中已分析过,需要定义trace的系统调用号、函数定义以及系统调用入口。在proc结构体中加上mask变量,用于指示哪些系统调用需要被trace输出,fork中子进程还需要继承mask变量。在syscall.c中修改的代码段如下:

static char *syscall_names[] = {"", "fork", "exit", "wait", "pipe", "read", "kill", "exec", "fstat", "chdir", "dup", "getpid", "sbrk", "sleep", "uptime", "open", "write", "mknod", "unlink", "link", "mkdir", "close", "trace"};void

syscall(void)

{int num;struct proc *p = myproc();num = p->trapframe->a7;if(num > 0 && num < NELEM(syscalls) && syscalls[num]) {p->trapframe->a0 = syscalls[num]();if(1<<num & p->mask){printf("%d: syscall %s -> %d\n", p->pid, syscall_names[num], p->trapframe->a0);}} else {printf("%d %s: unknown sys call %d\n",p->pid, p->name, num);p->trapframe->a0 = -1;}

}

sys_trace函数实现如下:

uint64

sys_trace(void)

{int mask;if(argint(0, &mask)<0){return -1;}myproc()->mask = mask;return 0;

}

syscall实验sysinfo

sysinfo需要获取当前的进程个数和空闲内存统计,即sysinfo结构体:

struct sysinfo {uint64 freemem; // amount of free memory (bytes)uint64 nproc; // number of process

};

进程的定义在proc.h中,需要遍历os中所有的proc,判断state是否为UNUSED:

enum procstate { UNUSED, SLEEPING, RUNNABLE, RUNNING, ZOMBIE };// Per-process state

struct proc {struct spinlock lock;// p->lock must be held when using these:enum procstate state; // Process statestruct proc *parent; // Parent processvoid *chan; // If non-zero, sleeping on chanint killed; // If non-zero, have been killedint xstate; // Exit status to be returned to parent's waitint pid; // Process ID// these are private to the process, so p->lock need not be held.uint64 kstack; // Virtual address of kernel stackuint64 sz; // Size of process memory (bytes)pagetable_t pagetable; // User page tablestruct trapframe *trapframe; // data page for trampoline.Sstruct context context; // swtch() here to run processstruct file *ofile[NOFILE]; // Open filesstruct inode *cwd; // Current directorychar name[16]; // Process name (debugging)int mask; // mask for trace

};

参考proc.c中allocproc分配进程的函数:

// Look in the process table for an UNUSED proc.

// If found, initialize state required to run in the kernel,

// and return with p->lock held.

// If there are no free procs, or a memory allocation fails, return 0.

static struct proc*

allocproc(void)

{struct proc *p;for(p = proc; p < &proc[NPROC]; p++) {acquire(&p->lock);if(p->state == UNUSED) {goto found;} else {release(&p->lock);}}return 0;found:p->pid = allocpid();// Allocate a trapframe page.if((p->trapframe = (struct trapframe *)kalloc()) == 0){release(&p->lock);return 0;}// An empty user page table.p->pagetable = proc_pagetable(p);if(p->pagetable == 0){freeproc(p);release(&p->lock);return 0;}// Set up new context to start executing at forkret,// which returns to user space.memset(&p->context, 0, sizeof(p->context));p->context.ra = (uint64)forkret;p->context.sp = p->kstack + PGSIZE;return p;

}

在proc.c构造一个用于统计当前正在使用的proc数量的函数:

/*** 计算当前系统中处于使用状态的进程数量。* * 遍历进程数组,对每个进程结构体进行检查。* 使用互斥锁确保在检查进程状态时不会被其他线程中断。* * @return uint64 返回系统中处于使用状态的进程数量。*/

uint64

proc_num(void){struct proc *p;uint64 num = 0;for(p = proc; p < &proc[NPROC]; p++) {acquire(&p->lock);if(p->state != UNUSED) {num++;}release(&p->lock);}return num;

}

关于内存分配的结构体和函数在kalloc.c中,kinit初始化锁和内存,freerange调用kfree按PGSIZE(4096)为单位对内存进行初始化,kfree将全置为1,:

extern char end[]; // first address after kernel.// defined by kernel.ld.struct run {struct run *next;

};struct {struct spinlock lock;struct run *freelist;

} kmem;void

kinit()

{initlock(&kmem.lock, "kmem");freerange(end, (void*)PHYSTOP);

}void

freerange(void *pa_start, void *pa_end)

{char *p;p = (char*)PGROUNDUP((uint64)pa_start);for(; p + PGSIZE <= (char*)pa_end; p += PGSIZE)kfree(p);

}// Free the page of physical memory pointed at by v,

// which normally should have been returned by a

// call to kalloc(). (The exception is when

// initializing the allocator; see kinit above.)

void

kfree(void *pa)

{struct run *r;if(((uint64)pa % PGSIZE) != 0 || (char*)pa < end || (uint64)pa >= PHYSTOP)panic("kfree");// Fill with junk to catch dangling refs.memset(pa, 1, PGSIZE);r = (struct run*)pa;acquire(&kmem.lock);r->next = kmem.freelist;kmem.freelist = r;release(&kmem.lock);

}

所以获取空闲内存就需要遍历freelist,在kalloc.c新增函数:

/*** 计算当前系统中的空闲内存总量。* * 该函数通过遍历内存空闲链表来计算空闲内存的数量,并返回总的空闲内存大小。* 它首先获取内存管理锁,以防止在遍历过程中内存分配或释放导致链表变化。* 遍历结束后释放锁,并返回计算得到的空闲内存总量。* * @return 返回当前系统的空闲内存总量,单位是字节。*/

uint64

freemem(void){struct run *r;uint64 freemem_num = 0;acquire(&kmem.lock);r = kmem.freelist;while(r){freemem_num++;r = r->next;}release(&kmem.lock);return freemem_num*PGSIZE;

}

还需要在defs.h加上上述两个新增函数的声明。

获取了进程数和空闲内存后,还需将sysinfo结构体交给用户空间,因为用户态内存空间和内核态是隔离的。需要用到copyout函数将长度为len的数据从内核空间src复制到用户空间dstva,PGROUNDDOWN获取dstva的页起始地址,walkaddr查找虚拟地址对应的物理地址,memmove用于从内核空间的src地址复制n个字节到用户空间的pa0 + (dstva - va0)地址:

#define PGROUNDDOWN(a) (((a)) & ~(PGSIZE-1))// Copy from kernel to user.

// Copy len bytes from src to virtual address dstva in a given page table.

// Return 0 on success, -1 on error.

int

copyout(pagetable_t pagetable, uint64 dstva, char *src, uint64 len)

{uint64 n, va0, pa0;while(len > 0){va0 = PGROUNDDOWN(dstva);pa0 = walkaddr(pagetable, va0);if(pa0 == 0)return -1;n = PGSIZE - (dstva - va0);if(n > len)n = len;memmove((void *)(pa0 + (dstva - va0)), src, n);len -= n;src += n;dstva = va0 + PGSIZE;}return 0;

}

walkaddr检查当前虚址是否超出最大内存(Sv39),调用walk去获取虚址对应的PTE(页表项),PTE_V表示PTE是否有效,PTE_U表示是否用户态可用,最后将PTE转成物理地址:

// one beyond the highest possible virtual address.

// MAXVA is actually one bit less than the max allowed by

// Sv39, to avoid having to sign-extend virtual addresses

// that have the high bit set.

#define MAXVA (1L << (9 + 9 + 9 + 12 - 1))// Look up a virtual address, return the physical address,

// or 0 if not mapped.

// Can only be used to look up user pages.

uint64

walkaddr(pagetable_t pagetable, uint64 va)

{pte_t *pte;uint64 pa;if(va >= MAXVA)return 0;pte = walk(pagetable, va, 0);if(pte == 0)return 0;if((*pte & PTE_V) == 0)return 0;if((*pte & PTE_U) == 0)return 0;pa = PTE2PA(*pte);return pa;

}// Return the address of the PTE in page table pagetable

// that corresponds to virtual address va. If alloc!=0,

// create any required page-table pages.

//

// The risc-v Sv39 scheme has three levels of page-table

// pages. A page-table page contains 512 64-bit PTEs.

// A 64-bit virtual address is split into five fields:

// 39..63 -- must be zero.

// 30..38 -- 9 bits of level-2 index.

// 21..29 -- 9 bits of level-1 index.

// 12..20 -- 9 bits of level-0 index.

// 0..11 -- 12 bits of byte offset within the page.

pte_t *

walk(pagetable_t pagetable, uint64 va, int alloc)

{if(va >= MAXVA)panic("walk");for(int level = 2; level > 0; level--) {pte_t *pte = &pagetable[PX(level, va)];if(*pte & PTE_V) {pagetable = (pagetable_t)PTE2PA(*pte);} else {if(!alloc || (pagetable = (pde_t*)kalloc()) == 0)return 0;memset(pagetable, 0, PGSIZE);*pte = PA2PTE(pagetable) | PTE_V;}}return &pagetable[PX(0, va)];

}

在 Sv39 虚拟内存布局中,有效物理内存地址为 56 位,虚拟内存地址为 64 位,但仅低 39 位有效,共分为三层页表。

寻址过程如下图所示,内核将根页表页的物理地址写入 satp 寄存器中,每个 CPU都有自己的 satp 寄存器。每次都使用VPN到列表中找到每层页表的PPN,最后将(PPN>>10(PTE的flags有10位))<<12 + offset获得物理地址。:

在完成上述的学习后,编写出的sys_sysinfo如下:

uint64

sys_sysinfo(void)

{struct sysinfo info;uint64 addr;if(argaddr(0, &addr) < 0)return -1;info.nproc = proc_num();info.freemem = freemem();struct proc* p = myproc();if(copyout(p->pagetable, addr, (char*)&info, sizeof(info)) < 0){return -1;}return 0;

}

xv6内存学习笔记

关于内存初始化和Sv39寻址方式在上个实验中已经记录,下图显示了虚拟地址和物理地址的布局和映射关系。

main 调用 kvminit 来创建内核的页表。这个调用发生在 xv6 在 RISC V 启用分页之前,所以地址直接指向物理内存。 Kvminit首先分配一页物理内存来存放根页表页。然后调用kvmmap 将内核所需要的硬件资源映射到物理地址。kvmmap调用walk来分配内核内存空间给各级页表。

// Initialize the one kernel_pagetable

void

kvminit(void)

{kernel_pagetable = kvmmake();

}// Make a direct-map page table for the kernel.

pagetable_t

kvmmake(void)

{pagetable_t kpgtbl;kpgtbl = (pagetable_t) kalloc();memset(kpgtbl, 0, PGSIZE);// uart registerskvmmap(kpgtbl, UART0, UART0, PGSIZE, PTE_R | PTE_W);// virtio mmio disk interfacekvmmap(kpgtbl, VIRTIO0, VIRTIO0, PGSIZE, PTE_R | PTE_W);// PLICkvmmap(kpgtbl, PLIC, PLIC, 0x4000000, PTE_R | PTE_W);// map kernel text executable and read-only.kvmmap(kpgtbl, KERNBASE, KERNBASE, (uint64)etext-KERNBASE, PTE_R | PTE_X);// map kernel data and the physical RAM we'll make use of.kvmmap(kpgtbl, (uint64)etext, (uint64)etext, PHYSTOP-(uint64)etext, PTE_R | PTE_W);// map the trampoline for trap entry/exit to// the highest virtual address in the kernel.kvmmap(kpgtbl, TRAMPOLINE, (uint64)trampoline, PGSIZE, PTE_R | PTE_X);// allocate and map a kernel stack for each process.proc_mapstacks(kpgtbl);return kpgtbl;

}// add a mapping to the kernel page table.

// only used when booting.

// does not flush TLB or enable paging.

void

kvmmap(pagetable_t kpgtbl, uint64 va, uint64 pa, uint64 sz, int perm)

{if(mappages(kpgtbl, va, sz, pa, perm) != 0)panic("kvmmap");

}// Create PTEs for virtual addresses starting at va that refer to

// physical addresses starting at pa.

// va and size MUST be page-aligned.

// Returns 0 on success, -1 if walk() couldn't

// allocate a needed page-table page.

int

mappages(pagetable_t pagetable, uint64 va, uint64 size, uint64 pa, int perm)

{uint64 a, last;pte_t *pte;if((va % PGSIZE) != 0)panic("mappages: va not aligned");if((size % PGSIZE) != 0)panic("mappages: size not aligned");if(size == 0)panic("mappages: size");a = va;last = va + size - PGSIZE;for(;;){if((pte = walk(pagetable, a, 1)) == 0)return -1;if(*pte & PTE_V)panic("mappages: remap");*pte = PA2PTE(pa) | perm | PTE_V;if(a == last)break;a += PGSIZE;pa += PGSIZE;}return 0;

}

main调用 kvminithart来映射内核页表。它将根页表页的物理地址写入寄存器 satp 中。在这之后, CPU 将使用内核页表翻译地址。由于内核使用唯一映射,所以指令的虚拟地址将映射到正确的物理内存地址。

// Switch h/w page table register to the kernel's page table,

// and enable paging.

void

kvminithart()

{// wait for any previous writes to the page table memory to finish.sfence_vma();w_satp(MAKE_SATP(kernel_pagetable));// flush stale entries from the TLB.sfence_vma();

}

satp寄存器如下图所示,mode为8时设置成Sv39:

procinit为每个进程分配一个内核栈 :

// initialize the proc table.

void

procinit(void)

{struct proc *p;initlock(&pid_lock, "nextpid");initlock(&wait_lock, "wait_lock");for(p = proc; p < &proc[NPROC]; p++) {initlock(&p->lock, "proc");p->state = UNUSED;p->kstack = KSTACK((int) (p - proc));}

}

用户态空间用malloc来分配堆内存空间,最终使用sbrk系统调用,sbrk由growproc实现,growproc 调用 uvmalloc 或 uvmdealloc ,取决于 n 是正数还是负数。uvmdealloc 调用 uvmunmap,使用 walk 来查找 PTE,使用 kfree 来释放它们所引用的物理内存。

// Grow or shrink user memory by n bytes.

// Return 0 on success, -1 on failure.

int

growproc(int n)

{uint64 sz;struct proc *p = myproc();sz = p->sz;if(n > 0){if((sz = uvmalloc(p->pagetable, sz, sz + n, PTE_W)) == 0) {return -1;}} else if(n < 0){sz = uvmdealloc(p->pagetable, sz, sz + n);}p->sz = sz;return 0;

}// Allocate PTEs and physical memory to grow process from oldsz to

// newsz, which need not be page aligned. Returns new size or 0 on error.

uint64

uvmalloc(pagetable_t pagetable, uint64 oldsz, uint64 newsz, int xperm)

{char *mem;uint64 a;if(newsz < oldsz)return oldsz;oldsz = PGROUNDUP(oldsz);for(a = oldsz; a < newsz; a += PGSIZE){mem = kalloc();if(mem == 0){uvmdealloc(pagetable, a, oldsz);return 0;}memset(mem, 0, PGSIZE);if(mappages(pagetable, a, PGSIZE, (uint64)mem, PTE_R|PTE_U|xperm) != 0){kfree(mem);uvmdealloc(pagetable, a, oldsz);return 0;}}return newsz;

}// Deallocate user pages to bring the process size from oldsz to

// newsz. oldsz and newsz need not be page-aligned, nor does newsz

// need to be less than oldsz. oldsz can be larger than the actual

// process size. Returns the new process size.

uint64

uvmdealloc(pagetable_t pagetable, uint64 oldsz, uint64 newsz)

{if(newsz >= oldsz)return oldsz;if(PGROUNDUP(newsz) < PGROUNDUP(oldsz)){int npages = (PGROUNDUP(oldsz) - PGROUNDUP(newsz)) / PGSIZE;uvmunmap(pagetable, PGROUNDUP(newsz), npages, 1);}return newsz;

}// Remove npages of mappings starting from va. va must be

// page-aligned. The mappings must exist.

// Optionally free the physical memory.

void

uvmunmap(pagetable_t pagetable, uint64 va, uint64 npages, int do_free)

{uint64 a;pte_t *pte;if((va % PGSIZE) != 0)panic("uvmunmap: not aligned");for(a = va; a < va + npages*PGSIZE; a += PGSIZE){if((pte = walk(pagetable, a, 0)) == 0)panic("uvmunmap: walk");if((*pte & PTE_V) == 0)panic("uvmunmap: not mapped");if(PTE_FLAGS(*pte) == PTE_V)panic("uvmunmap: not a leaf");if(do_free){uint64 pa = PTE2PA(*pte);kfree((void*)pa);}*pte = 0;}

}

pgtbl实验Print a page table

仿照freewalk函数改写就行:

void

walk_vmprint(pagetable_t pagetable, int level){for(int i = 0; i < 512; i++){pte_t pte = pagetable[i];if(pte & PTE_V){uint64 child = PTE2PA(pte);for(int j = 0; j <= level; j++){if(j == 0) printf("..");else printf(" ..");}printf("%d: pte %p pa %p\n", i, pte, child);if((pte & (PTE_R|PTE_W|PTE_X)) == 0){walk_vmprint((pagetable_t)child, level+1);}} }return;

}void

vmprint(pagetable_t pagetable){printf("page table %p\n",pagetable);walk_vmprint(pagetable, 0);return;

}

pgtbl实验A kernel page table per process

之前的进程共享同一个内核页表,这个实验需要为每个进程各自维护一个页表。

在struct proc中新增kpagetable字段用于存储进程的内核页表:

根据kvminit(生成唯一的内核页表),逻辑不变,但需要在创建新进程时为每个进程页表分别进行映射,而不是只为唯一内核页表进行映射。主要改变proc_kvmmap函数:

// 初始化进程的内核页表。

pagetable_t

proc_kvminit()

{pagetable_t kpagetable = (pagetable_t) kalloc();memset(kpagetable, 0, PGSIZE);// uart registersproc_kvmmap(kpagetable, UART0, UART0, PGSIZE, PTE_R | PTE_W);// virtio mmio disk interfaceproc_kvmmap(kpagetable, VIRTIO0, VIRTIO0, PGSIZE, PTE_R | PTE_W);// CLINTproc_kvmmap(kpagetable, CLINT, CLINT, 0x10000, PTE_R | PTE_W);// PLICproc_kvmmap(kpagetable, PLIC, PLIC, 0x400000, PTE_R | PTE_W);// map kernel text executable and read-only.proc_kvmmap(kpagetable, KERNBASE, KERNBASE, (uint64)etext-KERNBASE, PTE_R | PTE_X);// map kernel data and the physical RAM we'll make use of.proc_kvmmap(kpagetable, (uint64)etext, (uint64)etext, PHYSTOP-(uint64)etext, PTE_R | PTE_W);// map the trampoline for trap entry/exit to// the highest virtual address in the kernel.proc_kvmmap(kpagetable, TRAMPOLINE, (uint64)trampoline, PGSIZE, PTE_R | PTE_X);return kpagetable;

}// 在进程内核页表中进行映射。

void

proc_kvmmap(pagetable_t kpagetable, uint64 va, uint64 pa, uint64 sz, int perm)

{if(mappages(kpagetable, va, sz, pa, perm) != 0)panic("proc_kvmmap");

}

实验之前的内核栈是在procinit中为每个进程创建,在进程创建前就已经映射好。但实验要求内核栈要放入进程的内核页表中,因此需要把这步放到allocproc中,在创建进程时进行映射。进程的内核页表同理。

在scheduler调度时,需要重新加载进程对应的内核页表,同时刷新TLB。在没有进程时,切换到内核所属的页表。

释放进程时,也要把进程所属的内核页面释放,参考proc_freepagetable释放用户页表函数,把内核栈取消映射并释放内存,其余在初始化进程内核页表的地址空间取消映射但不能释放空间,最后调用uvmfree函数取消叶子结点外的页表映射并释放对应内存,uvmfree要求叶子结点的映射全部被移除,否则报错。由于在内核中除了内核栈使用了进程的内核页表,其余地方并没有申请多余内存,因此可以使用uvmfree(pagetable, 0),否则也需要像用户页表那样新增一个proc变量,来记录分配的地址。

// free a proc structure and the data hanging from it,

// including user pages.

// p->lock must be held.

static void

freeproc(struct proc *p)

{if(p->trapframe)kfree((void*)p->trapframe);p->trapframe = 0;if(p->pagetable)proc_freepagetable(p->pagetable, p->sz);if(p->kpagetable)proc_freekpagetable(p->kpagetable, p->kstack);p->pagetable = 0;p->sz = 0;p->pid = 0;p->parent = 0;p->name[0] = 0;p->chan = 0;p->killed = 0;p->xstate = 0;p->state = UNUSED;

}#define DIV_UP(a, b) (((a) + ((b) - 1)) / (b))

void

proc_freekpagetable(pagetable_t pagetable, uint64 kstack)

{if(kstack){uvmunmap(pagetable, kstack, 1, 1);}extern char etext[]; // kernel.ld sets this to end of kernel code.extern char trampoline[]; // trampoline.Suvmunmap(pagetable, UART0, 1, 0);uvmunmap(pagetable, VIRTIO0, 1, 0);uvmunmap(pagetable, CLINT, DIV_UP(0x10000, PGSIZE), 0);uvmunmap(pagetable, PLIC, DIV_UP(0x400000, PGSIZE), 0);uvmunmap(pagetable, KERNBASE, DIV_UP(((uint64)etext-KERNBASE), PGSIZE), 0);uvmunmap(pagetable, (uint64)etext, DIV_UP((PHYSTOP-(uint64)etext), PGSIZE), 0);uvmunmap(pagetable, TRAMPOLINE, 1, 0);uvmfree(pagetable, 0);

}

在完成实验手册的步骤后,编译会出错,提示kvmpa中使用了内核的页表(非进程),因此需要改成进程的内核页表。

// translate a kernel virtual address to

// a physical address. only needed for

// addresses on the stack.

// assumes va is page aligned.

uint64

kvmpa(uint64 va)

{uint64 off = va % PGSIZE;pte_t *pte;uint64 pa;struct proc *p = myproc();pte = walk(p->kpagetable, va, 0);if(pte == 0)panic("kvmpa");if((*pte & PTE_V) == 0)panic("kvmpa");pa = PTE2PA(*pte);return pa+off;

}

xv6 trap学习

trap分为系统调用、异常和中断三类。

关于一部分系统调用的xv6代码分析在sleep实验中。在内核和用户态页表中,都有trampoline页,包括uservec和userret作为系统调用的入口和出口。

riscv硬件自动进行的操作如下:

- 如果该 trap 是设备中断,且 sstatus SIE 位为 0 ,则不要执行以下任何操作。

- 通过清除 SIE 来禁用中断。

- 复制 pc 到 sepc。

- 将当前模式 用户或监督者 保存在 sstatus 的 SPP位。

- 在 scause 设置该次 trap 的原因。

- 将模式转换为监督者。

- 将 stvec 复制到 pc 。

- 执行新的 pc。

uservec保存相关寄存器后,跳转到usertrap函数。usertrap根据trap类型进行处理,usertrap 会把用户 pc 加 4 ,因为 RISC V 在执行系统调用时,会留下指向 ecall 指令的程序指针,需要加 4清除ecall。在退出时,usertrap 检查进程是否已经被杀死或应该让出 CPU (如果这个 trap 是一个定时器中断)。

内核和用户态内存交互代码分析见 sysinfo实验部分。

写时复制( copy on write cow)fork是指父或子进程在store时才分配内存,通过页面故障异常(pagepage fault exception)实现。COW fork中的基本设计是父进程和子进程最初共享所有的物理页面,但将它们映射设置为只读。因此,当子进程或父进程执行 store 指令时,RISC V CPU 会引发一个页面故障异常。作为对这个异常的响应,内核会拷贝一份包含故障地址的页。然后将一个副本的读写映射在子进程地址空间,另一个副本的读/写映射在父进程地址空间。更新页表后,内核在引起故障的指令处恢复故障处理。因为内核已经更新了相关的 PTE ,允许写入,所以现在故障指令将正常执行。

懒分配(lazy allocation)是指先在页表进行映射,但不分配物理空间,等使用(即发生页面故障)才实际分配物理空间。

磁盘上分页 (paging from disk) 是指内存超过实际可用的物理RAM,可以将其写入磁盘中。标记为无效。如果一个应用程序读取或写入一个被换出到磁盘的页, CPU 将遇到一个页面故障。内核就可以检查故障地址。如果该地址属于磁盘上的页面,内核就会分配一个物理内存的页面,从磁盘上读取页面到该内存,更新 PTE 为有效并引用该内存,然后恢复应用程序。为了给该页腾出空间,内核可能要交换另一个页。

trap实验Backtrace

r_fp读取s0寄存器,即栈底寄存器,作为函数返回值:

// 读取栈底的值

static inline uint64

r_fp()

{uint64 x;asm volatile("mv %0, s0" : "=r" (x) );return x;

}

返回地址位于栈底指针的固定偏移量(-8),前一个函数栈底指针位于当前栈底指针的固定偏移量(-16),一直追溯前一个栈底指针就能完成backtrace函数。

Xv6在页面对齐地址为每个进程的栈分配一个页面,可以使用PGROUNDDOWN(fp)和PGROUNDUP(fp)计算进程的栈页面的顶部和底部地址,栈地址要位于这个页面之中:

void

backtrace(void)

{uint64 fp = r_fp();uint64 page_top = PGROUNDUP(fp);uint64 page_bottom = PGROUNDDOWN(fp);while(fp >= page_bottom && fp < page_top){printf("%p\n", *((uint64*)(fp-8))); // 将uint64(栈地址)转成指针类型进行访问fp = *((uint64*)(fp-16)); // 访问前一个函数的栈地址}

}

trap实验Alarm

先申明好sigalarm和sigreturn定义,在proc.h的struct proc里加入相关变量:

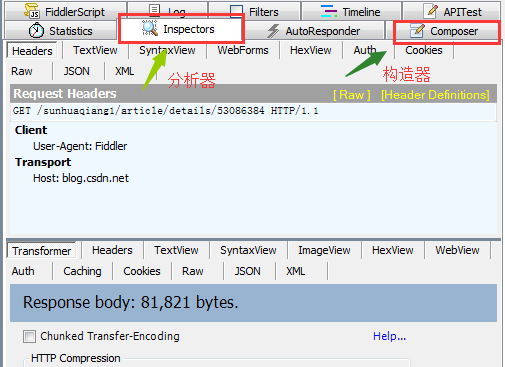

usertrap函数中通过scause(Supervisor Cause Register)寄存器判断导致S态的原因,当scause==8时表明是从ecall进入内核:

而which_dev从devintr()获取当前中断来自哪个设备,第一个if处理supervisor external interrupt,外部中断通常由外部设备或硬件事件触发,例如I/O设备、传感器等,中断信号会被传递到平台级中断控制器(PLIC),然后由PLIC将中断信号传递给相应的HART(硬件抽象线程);else if处理Supervisor Software Interrupt,软件中断通常由软件指令触发,例如通过SBI(Supervisor Binary Interface)调用,软件中断可以用于在不同的核心之间发送IPI(Inter-Processor Interrupt),用于同步或通信。SIP寄存器在前面已介绍过:

// check if it's an external interrupt or software interrupt,

// and handle it.

// returns 2 if timer interrupt,

// 1 if other device,

// 0 if not recognized.

int

devintr()

{uint64 scause = r_scause();if((scause & 0x8000000000000000L) &&(scause & 0xff) == 9){// this is a supervisor external interrupt, via PLIC.// irq indicates which device interrupted.int irq = plic_claim();if(irq == UART0_IRQ){uartintr();} else if(irq == VIRTIO0_IRQ){virtio_disk_intr();} else if(irq){printf("unexpected interrupt irq=%d\n", irq);}// the PLIC allows each device to raise at most one// interrupt at a time; tell the PLIC the device is// now allowed to interrupt again.if(irq)plic_complete(irq);return 1;} else if(scause == 0x8000000000000001L){// software interrupt from a machine-mode timer interrupt,// forwarded by timervec in kernelvec.S.if(cpuid() == 0){clockintr();}// acknowledge the software interrupt by clearing// the SSIP bit in sip.w_sip(r_sip() & ~2);return 2;} else {return 0;}

}

因此在which_dev == 2时处理alarm。但由于handler函数在用户态,页表与内核页表是隔离的,需要返回用户态才能执行。而p->trapframe->epc控制从内核态切换到用户态执行指令的地址,将epc设置成handler函数地址返回到用户态就会执行handler函数。在usertrap里具体代码如下:

sysproc里新增系统调用实现如下:

uint64

sys_sigalarm(void){int interval;uint64 handler;if(argint(0, &interval) < 0)return -1;if(argaddr(1, &handler) < 0)return -1;if(interval<0)return -1;struct proc* p = myproc();p->alarm_interval = interval;p->alarm_handler = handler;p->alarm_ticks = 0;return 0;

}

uint64

sys_sigreturn(void){return 0;

}但只是修改epc会导致无法返回原函数,并且trapframe里的寄存器都会被handler改变,所以需要保存原函数的上下文,使得sigreturn能够从handler里恢复。在struct proc里需要新增一个变量来报错trapframe,由于alarm不能重复进入,不需要考虑多trapframe问题,并在allocproc中奖trapframe_bak初始化为0:

在之前没有考虑不可重复进入alarm(在alarm里调用alarm)的情况,在usertrap里处理alarm时将记录已过去tick的变量alarm_ticks执行完alarm赋值为0,这会导致不断重复执行handler无法退出。为了不重复执行alarm,usertrap里alarm_ticks不归零,这样只能执行一次alarm。trapframe_bak利用了trapframe所在页的剩余空间进行存储:

能够再次执行alarm的时候是在handler里调用sigreturn退出之后,因此在sigreturn里将alarm_ticks归零就能防止递归执行alarm。此外,sigreturn还承担恢复原进程的任务,需要将trapframe恢复。在函数返回时,a0寄存器里会存放函数返回值,这会导致trapframe里的a0不能被恢复,所以将trapframe->a0作为返回值:

uint64

sys_sigreturn(void){struct proc* p = myproc();if(p->trapframe_bak == 0)return -1;*(p->trapframe) = *(p->trapframe_bak);p->trapframe_bak = 0;p->alarm_ticks = 0;return p->trapframe->a0;

}

lazy实验Lazy allocation

lazy分配是指在实际使用时才真正分配物理内存空间,原来的sys_sbrk是申请时就分配内存,需要修改成只增加堆的大小:

在usertrap中,当发生page fault时实际分配内存空间。需要注意的是,一定要在va外面加上PGROUNDDOWN,否则mappages里面会映射2个页面。scause的13(Store/AMO page fault)和15(load page fault)分别对应写和读错误。stval寄存器用于存储与异常相关的信息,在发生页错误(page fault)时,stval寄存器会保存导致页错误的虚拟地址。当触发硬件断点,或者发生指令获取、加载或存储访问或页面错误异常,或者发生指令获取或AMO地址不对齐异常时,将使用错误地址写入stval。对于其他异常,stval被设置为零,但将来的标准可能会为其他异常重新定义stval的设置:

sbrk申请的内存空间可能未被使用,但uvmunmap取消映射和释放内存时会连带着这部分多余内存地址,因此uvmunmap会报错,需要进行修改。但不能将if((*pte & PTE_V) == 0)直接注释,否则下面执行kfree会报错:

xv6中断和设备驱动学习

控制台驱动 (console.c) 是驱动结构的一个简单说明。控制台驱动通过连接到 RISC V 上的 UART 串行端口硬件,接受输入的字符。与驱动交互的UART 硬件是由 QEMU 仿真的 16550 芯片。UART硬件在软件看来是一组 内存映射 的控制寄存器。UART的内存映射地址从 0x10000000 开始,即 UART0。这里有一些 UART控制寄存器,每个寄存器的宽度是一个字节。

void

consoleinit(void)

{initlock(&cons.lock, "cons");uartinit();// connect read and write system calls// to consoleread and consolewrite.devsw[CONSOLE].read = consoleread;devsw[CONSOLE].write = consolewrite;

}

uartinit()来初始化uart0接口作为console的读写硬件,:

#define Reg(reg) ((volatile unsigned char *)(UART0 + (reg)))

#define WriteReg(reg, v) (*(Reg(reg)) = (v))void

uartinit(void)

{// disable interrupts.// 0: 关闭receiver ready register中断WriteReg(IER, 0x00);// special mode to set baud rate.// 进入设置波特率的模式WriteReg(LCR, LCR_BAUD_LATCH);// LSB for baud rate of 38.4K.WriteReg(0, 0x03);// MSB for baud rate of 38.4K.WriteReg(1, 0x00);// leave set-baud mode,// and set word length to 8 bits, no parity.// 离开设置波特率的模式,设置传输字长为8bitWriteReg(LCR, LCR_EIGHT_BITS);// reset and enable FIFOs.// FCR_FIFO_ENABLE使能输入输出两个FIFO// FCR_FIFO_CLEAR清空两个FIFO并将其计数逻辑设置为0 // FIFO是UART内部的WriteReg(FCR, FCR_FIFO_ENABLE | FCR_FIFO_CLEAR);// enable transmit and receive interrupts.// 使能输入输出中断WriteReg(IER, IER_TX_ENABLE | IER_RX_ENABLE);// 初始化uart读写缓冲区的锁initlock(&uart_tx_lock, "uart");

}

在xv6中使用devsw作为驱动对外载体,数组号作为设备号。在UNIX系统中,驱动设备号分为主和从两部分,主设备号用来确定设备要使用的驱动程序大类,从设备号是大类里的细分:

// map major device number to device functions.

struct devsw {int (*read)(int, uint64, int);int (*write)(int, uint64, int);

};

在user/init.c中,使用mknod(“console”, CONSOLE, 0)注册console,CONSOLE为主设备号,0为从设备号:

if(open("console", O_RDWR) < 0){mknod("console", CONSOLE, 0);open("console", O_RDWR);}

用户态的printf最后会使用write系统调用,对console进行读写,即调用devsw中的write函数指针,指向consolewrite:

void

printf(const char *fmt, ...)

{va_list ap;va_start(ap, fmt);vprintf(1, fmt, ap); // 最终调用putc,console的fd(设备号)为1

}static void

putc(int fd, char c)

{write(fd, &c, 1);

}

consolewrite逐字符将用户态地址src的内容复制到c中,再调用uartputc向uart0中写数据,直到n个字符都加入到输出缓冲区中,user_src表示是用户态页表,需要转换成物理地址,若为0都是在内核态页表:

//

// user write()s to the console go here.

//

int

consolewrite(int user_src, uint64 src, int n)

{int i;for(i = 0; i < n; i++){char c;if(either_copyin(&c, user_src, src+i, 1) == -1)break;uartputc(c);}return i;

}// Copy from either a user address, or kernel address,

// depending on usr_src.

// Returns 0 on success, -1 on error.

int

either_copyin(void *dst, int user_src, uint64 src, uint64 len)

{struct proc *p = myproc();if(user_src){return copyin(p->pagetable, dst, src, len);} else {memmove(dst, (char*)src, len);return 0;}

}

uartputc将一个字符放入输出缓冲区,如果缓冲区满了就睡眠,但真正发送数据是在uartstart()函数里:

// add a character to the output buffer and tell the

// UART to start sending if it isn't already.

// blocks if the output buffer is full.

// because it may block, it can't be called

// from interrupts; it's only suitable for use

// by write().

void

uartputc(int c)

{acquire(&uart_tx_lock);if(panicked){for(;;);}while(uart_tx_w == uart_tx_r + UART_TX_BUF_SIZE){// buffer is full.// wait for uartstart() to open up space in the buffer.sleep(&uart_tx_r, &uart_tx_lock);}uart_tx_buf[uart_tx_w % UART_TX_BUF_SIZE] = c;uart_tx_w += 1;uartstart();release(&uart_tx_lock);

}

uartstart先判断写缓冲区是否为空,空则不需要发送数据直接返回。然后通过LSR寄存器判断uart是否空闲,若不空闲页直接返回。当发送缓冲区中有字符并且UART正处于空闲状态时,就从缓冲区取出一个字符,唤醒在art_tx_r地址上进行睡眠等待的锁,将其改成RUNNABLE状态进入调度队列,和uartputc中的sleep对应。因为uartstart还在uartintr中被调用,即中断部分,可以唤醒被阻塞的uartputc。

// if the UART is idle, and a character is waiting

// in the transmit buffer, send it.

// caller must hold uart_tx_lock.

// called from both the top- and bottom-half.

void

uartstart()

{while(1){if(uart_tx_w == uart_tx_r){// transmit buffer is empty.ReadReg(ISR);return;}if((ReadReg(LSR) & LSR_TX_IDLE) == 0){// the UART transmit holding register is full,// so we cannot give it another byte.// it will interrupt when it's ready for a new byte.return;}int c = uart_tx_buf[uart_tx_r % UART_TX_BUF_SIZE];uart_tx_r += 1;// maybe uartputc() is waiting for space in the buffer.wakeup(&uart_tx_r);WriteReg(THR, c);}

}// Wake up all processes sleeping on chan.

// Must be called without any p->lock.

void

wakeup(void *chan)

{struct proc *p;for(p = proc; p < &proc[NPROC]; p++) {if(p != myproc()){acquire(&p->lock);if(p->state == SLEEPING && p->chan == chan) {p->state = RUNNABLE;}release(&p->lock);}}

}

consoleread用于读取console内容,读入指定的n个字符后才会结束,当console缓冲区中无字符时会保持休眠。当读到C(‘D’)(Ctrl+D)时,即End Of File组合键,若已读取到部分数据会回退读指针保留Ctrl+D在缓冲区中,这样下次中断读取就直接结束但本次数据会被成功读取。将读取到的数据送回给用户态。读取到换行符,即回车键,结束读取,返回从缓冲区读取字符数。

//

// user read()s from the console go here.

// copy (up to) a whole input line to dst.

// user_dist indicates whether dst is a user

// or kernel address.

//

int

consoleread(int user_dst, uint64 dst, int n)

{uint target;int c;char cbuf;target = n;acquire(&cons.lock);while(n > 0){// wait until interrupt handler has put some// input into cons.buffer.while(cons.r == cons.w){if(killed(myproc())){release(&cons.lock);return -1;}sleep(&cons.r, &cons.lock);}c = cons.buf[cons.r++ % INPUT_BUF_SIZE];if(c == C('D')){ // end-of-fileif(n < target){// Save ^D for next time, to make sure// caller gets a 0-byte result.cons.r--;}break;}// copy the input byte to the user-space buffer.cbuf = c;if(either_copyout(user_dst, dst, &cbuf, 1) == -1)break;dst++;--n;if(c == '\n'){// a whole line has arrived, return to// the user-level read().break;}}release(&cons.lock);return target - n;

}

CLINT(Core Local Interruptor)和PLIC(Platform-Level Interrupt Controller)是RISC-V架构中用于处理中断的两个重要组件。CLINT是每个处理器核心的本地中断控制器,主要用于处理软件中断和定时器中断;PLIC是平台级别的中断控制器,用于处理来自外部设备的中断,它管理多个中断源,并将中断分配给不同的处理器核心。

riscv对外部设备中断响应过程如下,参考博客https://blog.csdn.net/zzy980511/article/details/130642258:

- 外部设备发起一个中断给PLIC中的Gateways,Gateways负责将中断信号进行转换并转发给PLIC核心,这个过程中会涉及Priority、Threshold等等一系列的判断(详见上面的电路),最终会体现在PLIC核心的EIP位上。

- EIP位一旦被置1,会直接导致多个核心上的sip.SEIP位被置1。进而导致多个打开此中断的CPU核心开始竞争claim这个中断,没有竞争过其他核心时会得到中断ID为0,进而不响应,这个过程叫做claim操作。

- 响应最早的核心成功开始服务这个中断,并在服务完成之后将中断ID号再次写入claim/completion寄存器,这个过程叫做complete操作。

- 这个complete操作会被转发到Gateways,进而再次开启对这种中断的转发。

上面了解了驱动的注册和使用,还需要了解uart中断是如何触发的。qemu提供的virt虚拟硬件环境,给出了这些外部设备的物理位置。

// Physical memory layout// qemu -machine virt is set up like this,

// based on qemu's hw/riscv/virt.c:

//

// 00001000 -- boot ROM, provided by qemu

// 02000000 -- CLINT

// 0C000000 -- PLIC

// 10000000 -- uart0

// 10001000 -- virtio disk

// 80000000 -- boot ROM jumps here in machine mode

// -kernel loads the kernel here

// unused RAM after 80000000.// the kernel uses physical memory thus:

// 80000000 -- entry.S, then kernel text and data

// end -- start of kernel page allocation area

// PHYSTOP -- end RAM used by the kernel// qemu puts UART registers here in physical memory.

#define UART0 0x10000000L

#define UART0_IRQ 10// virtio mmio interface

#define VIRTIO0 0x10001000

#define VIRTIO0_IRQ 1// qemu puts platform-level interrupt controller (PLIC) here.

#define PLIC 0x0c000000L

#define PLIC_PRIORITY (PLIC + 0x0)

#define PLIC_PENDING (PLIC + 0x1000)

#define PLIC_SENABLE(hart) (PLIC + 0x2080 + (hart)*0x100)

#define PLIC_SPRIORITY(hart) (PLIC + 0x201000 + (hart)*0x2000)

#define PLIC_SCLAIM(hart) (PLIC + 0x201004 + (hart)*0x2000)

plicinit和plicinithart用来初始化外部设备中断,plicinit给不同的外部中断赋予优先级,每个IRQ的优先级寄存器通常占用4个字节(32位),因此使用UART0_IRQ*4来计算偏移量。外部中断设备有0-7一共8个优先级,数字越大优先级越高,其中0表示“无需响应的中断”,所以优先级设置为0表示屏蔽此中断。两个中断优先级一样,同时发生时优先响应中断ID号较小的。中断ID号在qemu里已经被定义好。

void

plicinit(void)

{// set desired IRQ priorities non-zero (otherwise disabled).*(uint32*)(PLIC + UART0_IRQ*4) = 1;*(uint32*)(PLIC + VIRTIO0_IRQ*4) = 1;

}

plicinithart初始化每个核心的中断使能和阈值,PLIC不会响应小于等于中断阈值的优先级的中断,为了让所有中断都被响应,这里将阈值设置为0,所以所有中断都会被响应。

void

plicinithart(void)

{int hart = cpuid();// set enable bits for this hart's S-mode// for the uart and virtio disk.*(uint32*)PLIC_SENABLE(hart) = (1 << UART0_IRQ) | (1 << VIRTIO0_IRQ);// set this hart's S-mode priority threshold to 0.*(uint32*)PLIC_SPRIORITY(hart) = 0;

}

在sh里,调用getcmd读取控制台输入,即标准输入0,最多读100字节,再调用gets去调用系统调用read,read调用consoleread。consoleread遇到cons.r == cons.w就会睡眠,等待consoleintr唤醒。使用键盘会触发uartintr一直读取键盘输入,uartintr调用consoleintr将字符存入cons.buf缓冲区中,直到遇到换行符、ctrl+D或缓冲区满,就唤醒consoleread,在唤醒前一直使用的cons.e,这样不会触发consoleread。唤醒consoleread后,会读cons.buf,直到遇到换行符、ctrl+D或者buf满,调用runcmd去执行命令。这里console的缓冲区有128,但sh的缓冲区只有100。

int

main(void)

{static char buf[100];int fd;// Ensure that three file descriptors are open.while((fd = open("console", O_RDWR)) >= 0){if(fd >= 3){close(fd);break;}}// Read and run input commands.while(getcmd(buf, sizeof(buf)) >= 0){if(buf[0] == 'c' && buf[1] == 'd' && buf[2] == ' '){// Chdir must be called by the parent, not the child.buf[strlen(buf)-1] = 0; // chop \nif(chdir(buf+3) < 0)fprintf(2, "cannot cd %s\n", buf+3);continue;}if(fork1() == 0)runcmd(parsecmd(buf));wait(0);}exit(0);

}int

getcmd(char *buf, int nbuf)

{write(2, "$ ", 2);memset(buf, 0, nbuf);gets(buf, nbuf);if(buf[0] == 0) // EOFreturn -1;return 0;

}//

// the console input interrupt handler.

// uartintr() calls this for input character.

// do erase/kill processing, append to cons.buf,

// wake up consoleread() if a whole line has arrived.

//

void

consoleintr(int c)

{acquire(&cons.lock);switch(c){case C('P'): // Print process list.procdump();break;case C('U'): // Kill line.while(cons.e != cons.w &&cons.buf[(cons.e-1) % INPUT_BUF_SIZE] != '\n'){cons.e--;consputc(BACKSPACE);}break;case C('H'): // Backspacecase '\x7f': // Delete keyif(cons.e != cons.w){cons.e--;consputc(BACKSPACE);}break;default:if(c != 0 && cons.e-cons.r < INPUT_BUF_SIZE){c = (c == '\r') ? '\n' : c;// echo back to the user.consputc(c);// store for consumption by consoleread().cons.buf[cons.e++ % INPUT_BUF_SIZE] = c;if(c == '\n' || c == C('D') || cons.e-cons.r == INPUT_BUF_SIZE){// wake up consoleread() if a whole line (or end-of-file)// has arrived.cons.w = cons.e;wakeup(&cons.r);}}break;}release(&cons.lock);

}net驱动实验

qemu模拟了E1000网卡,Makefile文件中加上了该设备,先在qemu里加入一个网络后端(-netdev),把host上的UDP端口映射到虚拟机的2000端口,并将网络流量保存到packets.pcap文件。再添加e1000到这个后端上。

ifeq ($(LAB),net)

QEMUOPTS += -netdev user,id=net0,hostfwd=udp::$(FWDPORT)-:2000 -object filter-dump,id=net0,netdev=net0,file=packets.pcap

QEMUOPTS += -device e1000,netdev=net0,bus=pcie.0

endif

e1000寄存器如下:

kernel/main.c的main中调用pci_init()进行简单的PCI-Express 初始化,e1000_regs定义了 e1000 网卡寄存器的地址为 0x40000000,ecam是PCI 配置空间地址,QEMU 的虚拟机将 PCIe 配置空间映射到这个地址。遍历总线0上的设备,找到e1000网卡。设置e1000网卡的命令和状态寄存器,启用 I/O 访问、内存访问和主设备功能。根据 PCI 规范,基地址寄存器(BAR)设置成全1是一个探测信号,在读回时返回一个值,返回值的低位为0的部分表示设备所需的地址空间大小。最后,调用e1000_init初始化网卡:

//

// simple PCI-Express initialization, only

// works for qemu and its e1000 card.

//#include "types.h"

#include "param.h"

#include "memlayout.h"

#include "riscv.h"

#include "spinlock.h"

#include "proc.h"

#include "defs.h"void

pci_init()

{// we'll place the e1000 registers at this address.// vm.c maps this range.uint64 e1000_regs = 0x40000000L;// qemu -machine virt puts PCIe config space here.// vm.c maps this range.uint32 *ecam = (uint32 *) 0x30000000L;// look at each possible PCI device on bus 0.for(int dev = 0; dev < 32; dev++){int bus = 0;int func = 0;int offset = 0;uint32 off = (bus << 16) | (dev << 11) | (func << 8) | (offset);volatile uint32 *base = ecam + off;uint32 id = base[0];// 100e:8086 is an e1000if(id == 0x100e8086){// command and status register.// bit 0 : I/O access enable// bit 1 : memory access enable// bit 2 : enable masteringbase[1] = 7;__sync_synchronize();for(int i = 0; i < 6; i++){uint32 old = base[4+i];// writing all 1's to the BAR causes it to be// replaced with its size.base[4+i] = 0xffffffff;__sync_synchronize();base[4+i] = old;}// tell the e1000 to reveal its registers at// physical address 0x40000000.base[4+0] = e1000_regs;e1000_init((uint32*)e1000_regs);}}

}e1000_init先重置网卡设备以便进行初始化。接着初始化发送缓冲区,给每个发送描述符状态置成可用。static struct tx_desc tx_ring[TX_RING_SIZE]用于发送数据,tx_ring是环结构,如下图所示,循环缓冲区中最多可以有64K个描述符。e1000中有一对寄存器维护这个环状队列,即E1000_TDH和E1000_TDT首尾指针,首指针指示正在发送的描述符,由网卡硬件维护并更新该指针,而发送尾指针则指向第一个软件可以写入的描述符的位置,由软件维护。队列在内存的地址和长度寄存器为E1000_TDBAL和E1000_TDLEN。tx_mbufs用于存放需发送的数据。rx_ring同理。接着,配置mac地址、多播地址表、传输控制寄存器、接收控制寄存器、传输间隔寄存器、中断相关寄存器。

// called by pci_init().

// xregs is the memory address at which the

// e1000's registers are mapped.

void

e1000_init(uint32 *xregs)

{int i;initlock(&e1000_lock, "e1000");regs = xregs;// Reset the deviceregs[E1000_IMS] = 0; // disable interruptsregs[E1000_CTL] |= E1000_CTL_RST;regs[E1000_IMS] = 0; // redisable interrupts__sync_synchronize();// [E1000 14.5] Transmit initializationmemset(tx_ring, 0, sizeof(tx_ring));for (i = 0; i < TX_RING_SIZE; i++) {tx_ring[i].status = E1000_TXD_STAT_DD;tx_mbufs[i] = 0;}regs[E1000_TDBAL] = (uint64) tx_ring;if(sizeof(tx_ring) % 128 != 0)panic("e1000");regs[E1000_TDLEN] = sizeof(tx_ring);regs[E1000_TDH] = regs[E1000_TDT] = 0;// [E1000 14.4] Receive initializationmemset(rx_ring, 0, sizeof(rx_ring));for (i = 0; i < RX_RING_SIZE; i++) {rx_mbufs[i] = mbufalloc(0);if (!rx_mbufs[i])panic("e1000");rx_ring[i].addr = (uint64) rx_mbufs[i]->head;}regs[E1000_RDBAL] = (uint64) rx_ring;if(sizeof(rx_ring) % 128 != 0)panic("e1000");regs[E1000_RDH] = 0;regs[E1000_RDT] = RX_RING_SIZE - 1;regs[E1000_RDLEN] = sizeof(rx_ring);// filter by qemu's MAC address, 52:54:00:12:34:56regs[E1000_RA] = 0x12005452;regs[E1000_RA+1] = 0x5634 | (1<<31);// multicast tablefor (int i = 0; i < 4096/32; i++)regs[E1000_MTA + i] = 0;// transmitter control bits.regs[E1000_TCTL] = E1000_TCTL_EN | // enableE1000_TCTL_PSP | // pad short packets(0x10 << E1000_TCTL_CT_SHIFT) | // collision stuff(0x40 << E1000_TCTL_COLD_SHIFT);regs[E1000_TIPG] = 10 | (8<<10) | (6<<20); // inter-pkt gap// receiver control bits.regs[E1000_RCTL] = E1000_RCTL_EN | // enable receiverE1000_RCTL_BAM | // enable broadcastE1000_RCTL_SZ_2048 | // 2048-byte rx buffersE1000_RCTL_SECRC; // strip CRC// ask e1000 for receive interrupts.regs[E1000_RDTR] = 0; // interrupt after every received packet (no timer)regs[E1000_RADV] = 0; // interrupt after every packet (no timer)regs[E1000_IMS] = (1 << 7); // RXDW -- Receiver Descriptor Write Back

}

tx_desc对应RDESC寄存器

// [E1000 3.2.3]

struct rx_desc

{uint64 addr; /* Address of the descriptor's data buffer */uint16 length; /* Length of data DMAed into data buffer */uint16 csum; /* Packet checksum */uint8 status; /* Descriptor status */uint8 errors; /* Descriptor Errors */uint16 special;

};

tx_desc对应TDESC寄存器,

// [E1000 3.3.3]

struct tx_desc

{uint64 addr;uint16 length;uint8 cso;uint8 cmd;uint8 status;uint8 css;uint16 special;

};

在了解完初始化和相关数据结构后,开始写e1000_transmit和e1000_recv代码。

e1000_transmit读取regs[E1000_TDT]获取到tx_ring队尾索引,检查当前发送描述符的status是否可发送(E1000_TXD_STAT_DD,该标志位会在描述符的数据被处理完成后设置),若不可发送表明发送队列已满(不是通过首尾指针相等判断)。释放发送缓冲区,更新发送描述符状态,将cmd设置为E1000_TXD_CMD_EOP(数据包结束)和E1000_TXD_CMD_RS(报告状态信息,表明发送描述符的status有效),将数据帧缓冲区 m 记录到缓冲区队列 mbuf 中用于之后的释放, 当后续尾指针又指向该位置时再将其释放。最后更新尾指针。

int

e1000_transmit(struct mbuf *m)

{//// Your code here.//// the mbuf contains an ethernet frame; program it into// the TX descriptor ring so that the e1000 sends it. Stash// a pointer so that it can be freed after sending.//acquire(&e1000_lock);uint32 index = regs[E1000_TDT];if((tx_ring[index].status & E1000_TXD_STAT_DD) == 0){printf("the E1000 hasn't finished the previous transmission request\n");release(&e1000_lock);return -1;} if(tx_mbufs[index]){mbuffree(tx_mbufs[index]);}tx_ring[index].addr = (uint64)m->head;tx_ring[index].length = (uint16)m->len;tx_ring[index].cmd = E1000_TXD_CMD_EOP | E1000_TXD_CMD_RS;tx_mbufs[index] = m;regs[E1000_TDT] = (index + 1) % TX_RING_SIZE;release(&e1000_lock);return 0;

}

e1000_recv与e1000_transmit类似,但尾指针指向队列末尾,首尾指针相等说明队列已满,而且这个节点是无法使用的,所以这个队列实际上只能接收15个包。因此,若首尾指针初始化相同就会一直卡死。但要从首指针处取出接收到的报文,就需要把尾指针+1。使用net_rx向上传递过程中会释放缓冲区内存,需要给缓冲区分配新内存用于继续接收数据。由于实验指导中说明到达的数据包会超过队列大小,这里使用循环接收网络报文。这里需要注意的是不能再net_rx之前加锁,net_rx里要获取锁,若加上锁到导致同一线程重复获取锁,这样就会导致死锁。

static void

e1000_recv(void)

{//// Your code here.//// Check for packets that have arrived from the e1000// Create and deliver an mbuf for each packet (using net_rx()).//// acquire(&e1000_lock);uint32 index = (regs[E1000_RDT]+1) % RX_RING_SIZE;while(rx_ring[index].status & E1000_RXD_STAT_DD){rx_mbufs[index]->len = rx_ring[index].length;net_rx(rx_mbufs[index]);rx_mbufs[index] = mbufalloc(0);if(rx_mbufs[index] == 0){panic("mbufalloc fail");}rx_ring[index].addr = (uint64)rx_mbufs[index]->head;rx_ring[index].status = 0;index = (index + 1) % RX_RING_SIZE;}regs[E1000_RDT] = (index - 1) % RX_RING_SIZE;// release(&e1000_lock);

}

作为client,xv6发送报文和linux类似,先调用connect获取内核中的socket,通过socket发送报文,sockwrite发送到udp,封装udp头,接着发送到ip,封装ip头,最后通过网卡发送。读取报文也通过socket获取缓冲区信息,从中读取数据。

xv6进程调度学习

内核中的进程分为内核进程和用户进程,xv6内核的用户进程切换过程如下图所示,内核进程切换也在这个过程中。进程可以主动调用调度函数进行切换,也可以通过时钟中断被动被切换。

xv6中进程在CPU上切换依靠sched()和scheduler()函数。在调用sched()函数前,需要保证获取到当前进程的进程锁,即p->lock,进程锁保护了上下文切换的状态。xv6中使用acquire()函数试图获取一个自旋锁,在acquire里会首先禁用中断,以防止在获取到锁后被中断(例如时钟中断等)打断,导致其他需要该锁的进程无法获取到锁进而发生死锁。所以获取到进程锁能够保证上下文切换的原子性。此外,该锁的主要目的是保证进程不会被其他CPU运行,导致两个CPU运行在同一个栈上。第二个if判断保证没有嵌套的关中断,关中断的数量表明存在原子执行的程序的数量,不能在这些程序未执行完就切换进程:

// Switch to scheduler. Must hold only p->lock

// and have changed proc->state. Saves and restores

// intena because intena is a property of this

// kernel thread, not this CPU. It should

// be proc->intena and proc->noff, but that would

// break in the few places where a lock is held but

// there's no process.

void

sched(void)

{int intena;struct proc *p = myproc();if(!holding(&p->lock))panic("sched p->lock");if(mycpu()->noff != 1)panic("sched locks");if(p->state == RUNNING)panic("sched running");if(intr_get())panic("sched interruptible");intena = mycpu()->intena;swtch(&p->context, &mycpu()->context);mycpu()->intena = intena;

}

之后调用swtch函数进行上下文切换,保存和恢复寄存器组。只保存callee-saved寄存器,caller-saved寄存器由调用的C代码保存在堆栈上(如果需要)。callee-saved寄存器,也称为被调用者保存寄存器,是指在函数调用过程中,被调用的函数(callee)有责任保存和恢复其值的寄存器。这些寄存器通常用于存储函数的长期状态,如函数中的局部变量、循环计数器等:

# Context switch

#

# void swtch(struct context *old, struct context *new);

#

# Save current registers in old. Load from new. .globl swtch

swtch:sd ra, 0(a0)sd sp, 8(a0)sd s0, 16(a0)sd s1, 24(a0)sd s2, 32(a0)sd s3, 40(a0)sd s4, 48(a0)sd s5, 56(a0)sd s6, 64(a0)sd s7, 72(a0)sd s8, 80(a0)sd s9, 88(a0)sd s10, 96(a0)sd s11, 104(a0)ld ra, 0(a1)ld sp, 8(a1)ld s0, 16(a1)ld s1, 24(a1)ld s2, 32(a1)ld s3, 40(a1)ld s4, 48(a1)ld s5, 56(a1)ld s6, 64(a1)ld s7, 72(a1)ld s8, 80(a1)ld s9, 88(a1)ld s10, 96(a1)ld s11, 104(a1)ret

swtch会将scheduler()函数的上下文以协程的方式切换到当前进程,并从scheduler函数的swtch的下一行开始执行,因为scheduler上次将该进程切换走的时候保存的ra地址是swtch的ra返回地址。进程的锁会在scheduler里被释放,等待下次进程调度唤醒。

// Per-CPU process scheduler.

// Each CPU calls scheduler() after setting itself up.

// Scheduler never returns. It loops, doing:

// - choose a process to run.

// - swtch to start running that process.

// - eventually that process transfers control

// via swtch back to the scheduler.

void

scheduler(void)

{struct proc *p;struct cpu *c = mycpu();c->proc = 0;for(;;){// The most recent process to run may have had interrupts// turned off; enable them to avoid a deadlock if all// processes are waiting.intr_on();int found = 0;for(p = proc; p < &proc[NPROC]; p++) {acquire(&p->lock);if(p->state == RUNNABLE) {// Switch to chosen process. It is the process's job// to release its lock and then reacquire it// before jumping back to us.p->state = RUNNING;c->proc = p;swtch(&c->context, &p->context);// Process is done running for now.// It should have changed its p->state before coming back.c->proc = 0;found = 1;}release(&p->lock);}if(found == 0) {// nothing to run; stop running on this core until an interrupt.intr_on();asm volatile("wfi");}}

}

Multithreading实验Uthread: switching between threads

这个实验并不会申请多个线程,而是在当前进程上进行任务切换,从定义上来说更接近于协程。而且linux上没有单独线程的概念,线程在后台也是一个进程,只是一些资源(如内存等)来自父进程。所以uthread.c中只有一个进程。切换上下文和xv6中切换上下文的函数一样,直接将其复制到thread_switch里,在struct thread里加上struct context context,context定义和xv6内核里一样;:

struct thread {char stack[STACK_SIZE]; /* the thread's stack */int state; /* FREE, RUNNING, RUNNABLE */struct context context; /* the thread's saved context */

};.text/** save the old thread's registers,* restore the new thread's registers.*/.globl thread_switch

thread_switch:/* YOUR CODE HERE */sd ra, 0(a0)sd sp, 8(a0)sd s0, 16(a0)sd s1, 24(a0)sd s2, 32(a0)sd s3, 40(a0)sd s4, 48(a0)sd s5, 56(a0)sd s6, 64(a0)sd s7, 72(a0)sd s8, 80(a0)sd s9, 88(a0)sd s10, 96(a0)sd s11, 104(a0)ld ra, 0(a1)ld sp, 8(a1)ld s0, 16(a1)ld s1, 24(a1)ld s2, 32(a1)ld s3, 40(a1)ld s4, 48(a1)ld s5, 56(a1)ld s6, 64(a1)ld s7, 72(a1)ld s8, 80(a1)ld s9, 88(a1)ld s10, 96(a1)ld s11, 104(a1)ret /* return to ra */在任务切换thread_schedule时调用thread_switch((uint64)&t->context, (uint64)&next_thread->context)。任务切换通过切换callee寄存器、sp栈指针和ra函数返回地址,在执行完thread_switch后跳转到对应任务。thread_create里也和xv6内核初始化类似。

void

thread_create(void (*func)())

{struct thread *t;for (t = all_thread; t < all_thread + MAX_THREAD; t++) {if (t->state == FREE) break;}t->state = RUNNABLE;// YOUR CODE HEREmemset(&t->context, 0, sizeof(t->context));t->context.sp = (uint64)t->stack + STACK_SIZE;t->context.ra = (uint64)func;

}

Multithreading实验Using threads

多线程插入同一链表,需要用到linux上的pthread库。主要是在插入过程中加锁,保证插入的安全性,不会丢失插入的节点。因为多个线程同时完成了key的查找,下一步就是插入链表,若同时进行插入就会导致多个线程一起把链表头替换了,有多个节点next为链表中第二个节点,但只能有一个节点作为head。所以需要保证插入时的原子性。

static

void put(int key, int value)

{int i = key % NBUCKET;// is the key already present?struct entry *e = 0;for (e = table[i]; e != 0; e = e->next) {if (e->key == key)break;}pthread_mutex_lock(&put_lock);if(e){// update the existing key.e->value = value;} else {// the new is new.insert(key, value, &table[i], table[i]);}pthread_mutex_unlock(&put_lock);

}

但如上插入仍然存在问题,可能会导致插入多个相同key,因为多个线程在查询同一个key不存在,在插入部分就会全都插入。所以需要在还可以再进入临界区时再坚持与线程数相同的节点,若存在就变成覆盖。实验中并不要求不存在相同的key,所以上述代码能通过测试。此外,若将只锁insert,也能通过测试,因为写和读是分开的,不然会造成读取数据出错。

Multithreading实验Barrier

同步多线程时,需要对flag(即 bstate.nthread)加锁,不然可能造成flag++被吞掉,多个线程同时对flag++时可能只执行了其中一个,从而导致死锁。pthread_cond_wait在被唤醒时会重新获取锁,所以需要在其后unlock。

static void

barrier()

{// YOUR CODE HERE//// Block until all threads have called barrier() and// then increment bstate.round.//pthread_mutex_lock(&bstate.barrier_mutex);bstate.nthread++;if(bstate.nthread == nthread){bstate.round++;bstate.nthread = 0;pthread_mutex_unlock(&bstate.barrier_mutex);pthread_cond_broadcast(&bstate.barrier_cond);} else {pthread_cond_wait(&bstate.barrier_cond, &bstate.barrier_mutex);pthread_mutex_unlock(&bstate.barrier_mutex);}return;

}

xv6文件系统学习

xv6文件系统分为7层,如图所示。磁盘层在virtio磁盘上读写块,virtio分为前端和后端,内核使用前端驱动接口,qemu提供后端以实现对应的前端驱动接口,后端与硬件接触。

一个物理磁盘可以分区成多个逻辑磁盘,盘块是磁盘存储中用于数据存储和管理的基本单位,它是磁盘上的一段连续存储空间,大小通常为扇区大小的整数倍。扇区是磁盘的最小物理存储单元,常见的扇区大小为512字节或4096字节。xv6对磁盘划分如下图所示,第0块为引导区,用于引导操作系统;第 1 块称为 superblock,包含了文件系统的元数据;从块 2 开始存放着日志;日志之后是 inodes ,每个块会包含多个inode ;在这些块之后是位图块,bitmap 记录哪些数据块在使用;其余的块是数据块。

xv6的mkfs.c用于完成上述的磁盘划分,inode用于索引硬盘盘块,每个inode有12个直接寻址和1个间接寻址的盘块用于存储,xv6里这些地址为盘块的序号:

- 先创建fs.img空文件,nmeta是boot、super、log、inodes、bit map这些特殊块的数量,其余为数据块。

- 填充super,即sb,将初始化好的super块写入fs.img。xint这些是改变字节序,即存储的大小端。wsect(i, zeroes)将fs.img的FSSIZE*1024空间填充为0,即为每个大小为1024的盘块初始化。

- 初始化文件系统的根inode,根inode是目录文件类型,即第一个文件夹,ialloc会从编号1开始依次分配inode,并将其写入到inodes盘块,iappend函数在指定inode后追加内容。将.和…(当前目录和上级目录地址)追加到根inode。

- 根据命令行中的参数,即Makefile文件中fs.img标签的执行命令(贴在下方),为每个文件创建inode放入对应盘块,并创建dirent(用于标记所属文件夹类型的inode,即在文件夹中添加该文件)将其放到根文件夹里。

- 最后更新根文件夹大小,并在bitmap部分更新已使用的盘块,每个bit位表示一个盘块。至此,文件系统创建完成。

qemu中指定了kernel的起始地址,因此并没有初始化boot部分。

mkfs/mkfs fs.img README user/_cat user/_echo user/_forktest user/_grep user/_init user/_kill user/_ln user/_ls user/_mkdir user/_rm user/_sh user/_stressfs user/_usertests user/_grind user/_wc user/_zombie

nmeta 46 (boot, super, log blocks 30 inode blocks 13, bitmap blocks 1) blocks 1954 total 2000

balloc: first 768 blocks have been allocated

balloc: write bitmap block at sector 45

int

main(int argc, char *argv[])

{int i, cc, fd;uint rootino, inum, off;struct dirent de;char buf[BSIZE];struct dinode din;static_assert(sizeof(int) == 4, "Integers must be 4 bytes!");if(argc < 2){fprintf(stderr, "Usage: mkfs fs.img files...\n");exit(1);}assert((BSIZE % sizeof(struct dinode)) == 0);assert((BSIZE % sizeof(struct dirent)) == 0);fsfd = open(argv[1], O_RDWR|O_CREAT|O_TRUNC, 0666);if(fsfd < 0)die(argv[1]);// 1 fs block = 1 disk sectornmeta = 2 + nlog + ninodeblocks + nbitmap;nblocks = FSSIZE - nmeta;sb.magic = FSMAGIC;sb.size = xint(FSSIZE);sb.nblocks = xint(nblocks);sb.ninodes = xint(NINODES);sb.nlog = xint(nlog);sb.logstart = xint(2);sb.inodestart = xint(2+nlog);sb.bmapstart = xint(2+nlog+ninodeblocks);printf("nmeta %d (boot, super, log blocks %u inode blocks %u, bitmap blocks %u) blocks %d total %d\n",nmeta, nlog, ninodeblocks, nbitmap, nblocks, FSSIZE);freeblock = nmeta; // the first free block that we can allocatefor(i = 0; i < FSSIZE; i++)wsect(i, zeroes);memset(buf, 0, sizeof(buf));memmove(buf, &sb, sizeof(sb));wsect(1, buf);rootino = ialloc(T_DIR);assert(rootino == ROOTINO);bzero(&de, sizeof(de));de.inum = xshort(rootino);strcpy(de.name, ".");iappend(rootino, &de, sizeof(de));bzero(&de, sizeof(de));de.inum = xshort(rootino);strcpy(de.name, "..");iappend(rootino, &de, sizeof(de));for(i = 2; i < argc; i++){// get rid of "user/"char *shortname;if(strncmp(argv[i], "user/", 5) == 0)shortname = argv[i] + 5;elseshortname = argv[i];assert(index(shortname, '/') == 0);if((fd = open(argv[i], 0)) < 0)die(argv[i]);// Skip leading _ in name when writing to file system.// The binaries are named _rm, _cat, etc. to keep the// build operating system from trying to execute them// in place of system binaries like rm and cat.if(shortname[0] == '_')shortname += 1;assert(strlen(shortname) <= DIRSIZ);inum = ialloc(T_FILE);bzero(&de, sizeof(de));de.inum = xshort(inum);strncpy(de.name, shortname, DIRSIZ);iappend(rootino, &de, sizeof(de));while((cc = read(fd, buf, sizeof(buf))) > 0)iappend(inum, buf, cc);close(fd);}// fix size of root inode dirrinode(rootino, &din);off = xint(din.size);off = ((off/BSIZE) + 1) * BSIZE;din.size = xint(off);winode(rootino, &din);balloc(freeblock);exit(0);

}

由于访问内存和磁盘的速度差距很大,buffer cache层用于缓存磁盘内容以加速读写文件,并保证内存和硬盘内容一致。此外,缓存还要解决多线程同步访问。xv86里buffer cache使用LRU机制,即最近使用最少,来回收buffer。

bio.c文件中用binit()函数初始化bcache,bcache是一个由buf[NBUF]组成的双端链表,但head只作为哨兵不实际用作buf。

struct {struct spinlock lock;struct buf buf[NBUF];// Linked list of all buffers, through prev/next.// Sorted by how recently the buffer was used.// head.next is most recent, head.prev is least.struct buf head;

} bcache;void

binit(void)

{struct buf *b;initlock(&bcache.lock, "bcache");// Create linked list of buffersbcache.head.prev = &bcache.head;bcache.head.next = &bcache.head;for(b = bcache.buf; b < bcache.buf+NBUF; b++){b->next = bcache.head.next;b->prev = &bcache.head;initsleeplock(&b->lock, "buffer");bcache.head.next->prev = b;bcache.head.next = b;}

}

bget函数获取一个设备 dev 上的指定块号 blockno 的缓冲区。先遍历是否已存在buf缓存了该盘块,若没有则按照LRU机制选择空闲buf,从head前向搜索第一个空闲buf。因为brelse释放结点时,会把结点从原来位置摘出来,放到head的下一个,所以越往后空闲时间越久。

// Look through buffer cache for block on device dev.

// If not found, allocate a buffer.

// In either case, return locked buffer.

static struct buf*

bget(uint dev, uint blockno)

{struct buf *b;acquire(&bcache.lock);// Is the block already cached?for(b = bcache.head.next; b != &bcache.head; b = b->next){if(b->dev == dev && b->blockno == blockno){b->refcnt++;release(&bcache.lock);acquiresleep(&b->lock);return b;}}// Not cached.// Recycle the least recently used (LRU) unused buffer.for(b = bcache.head.prev; b != &bcache.head; b = b->prev){if(b->refcnt == 0) {b->dev = dev;b->blockno = blockno;b->valid = 0;b->refcnt = 1;release(&bcache.lock);acquiresleep(&b->lock);return b;}}panic("bget: no buffers");

}// Release a locked buffer.

// Move to the head of the most-recently-used list.

void

brelse(struct buf *b)

{if(!holdingsleep(&b->lock))panic("brelse");releasesleep(&b->lock);acquire(&bcache.lock);b->refcnt--;if (b->refcnt == 0) {// no one is waiting for it.b->next->prev = b->prev;b->prev->next = b->next;b->next = bcache.head.next;b->prev = &bcache.head;bcache.head.next->prev = b;bcache.head.next = b;}release(&bcache.lock);

}

bread和bwrite函数用于读写硬盘内容,virtio_disk_rw函数向qemu指定的virtio的mmio地址写入信息(属于virtio前端),qemu后端注册ioport_write/read函数监听PCI配置空间的改变,获取前端的通知消息。qemu维护的virtqueue队列从数据共享区vring中获取数据,再将数据封装成virtioreq,最后把请求发送至硬件层:

// Return a locked buf with the contents of the indicated block.

struct buf*

bread(uint dev, uint blockno)

{struct buf *b;b = bget(dev, blockno);if(!b->valid) {virtio_disk_rw(b, 0);b->valid = 1;}return b;

}// Write b's contents to disk. Must be locked.

void

bwrite(struct buf *b)

{if(!holdingsleep(&b->lock))panic("bwrite");virtio_disk_rw(b, 1);

}

xv6中disk驱动如下,由于xv6的virtio部分只有disk,xv6把disk驱动和virtio部分结合在一起。其中一些数据结构的定义来自于qemu或virtio手册里。

virtq_desc用于存储 DMA 描述符,每个描述符包含了一个磁盘操作的详细信息,如数据缓冲区的地址、长度等;virtq_avail用于存储可用描述符的环形队列,驱动程序在这个队列中写入描述符的编号,表示这些描述符是驱动程序希望设备处理的;virtq_used用于存储已处理描述符的环形队列,设备在这个队列中写入已经完成处理的描述符的编号;virtio_blk_req用于存储磁盘命令头。

static struct disk {// a set (not a ring) of DMA descriptors, with which the// driver tells the device where to read and write individual// disk operations. there are NUM descriptors.// most commands consist of a "chain" (a linked list) of a couple of// these descriptors.struct virtq_desc *desc;// a ring in which the driver writes descriptor numbers// that the driver would like the device to process. it only// includes the head descriptor of each chain. the ring has// NUM elements.struct virtq_avail *avail;// a ring in which the device writes descriptor numbers that// the device has finished processing (just the head of each chain).// there are NUM used ring entries.struct virtq_used *used;// our own book-keeping.char free[NUM]; // is a descriptor free?uint16 used_idx; // we've looked this far in used[2..NUM].// track info about in-flight operations,// for use when completion interrupt arrives.// indexed by first descriptor index of chain.struct {struct buf *b;char status;} info[NUM];// disk command headers.// one-for-one with descriptors, for convenience.struct virtio_blk_req ops[NUM];struct spinlock vdisk_lock;} disk;

xv6通过log层解决崩溃恢复问题,保证写入的一致性。kernel可能会因为各种原因发生崩溃,例如断点、kernel崩溃等,写入过程被中断会造成各种问题。

xv6不直接写磁盘上的文件系统数据结构,而是将写入的数据记录到磁盘上的log层。一旦记录完了全部数据,就会在磁盘上写一个特殊的提交记录,表明该日志包含了一个完整的操作。然后,再将日志中的写入数据写到磁盘的对应位置,执行完成后,将日志消除。若系统崩溃重启,文件系统会尝试恢复。若日志被标记为包含一个完整的操作,则对日志数据进行写入,否则将忽略并清除该日志。

log.c的initlog函数对log进行初始化,从superblock中获取log层元数据,相关元数据在制作文件系统时被写入。commiting标志日志是否在进行提交操作,outstanding标志当前等待写日志的文件系统相关的系统调用个数。日志系统可以将多个系统调用的写操作累积到一个事务中,一次提交可能涉及多个完整系统调用的写入,将几个事务一起提交的方法被称为组提交 group commit:

// Contents of the header block, used for both the on-disk header block

// and to keep track in memory of logged block# before commit.

struct logheader {int n;int block[LOGSIZE];

};struct log {struct spinlock lock;int start;int size;int outstanding; // how many FS sys calls are executing.int committing; // in commit(), please wait.int dev;struct logheader lh;

};

struct log log;void

initlog(int dev, struct superblock *sb)

{if (sizeof(struct logheader) >= BSIZE)panic("initlog: too big logheader");initlock(&log.lock, "log");log.start = sb->logstart;log.size = sb->nlog;log.dev = dev;recover_from_log();

}

xv6使用begin_op和end_op标记事务的始终。begin_op会一直等到日志系统没有 commiting ,并且有足够的日志空间来容纳这次调用的写。log.outstanding 统计当前系统调用的数量,可以通过log.outstanding 乘以 MAXOPBLOCKS 来计算已使用的日志空间:

// called at the start of each FS system call.

void

begin_op(void)

{acquire(&log.lock);while(1){if(log.committing){sleep(&log, &log.lock);} else if(log.lh.n + (log.outstanding+1)*MAXOPBLOCKS > LOGSIZE){// this op might exhaust log space; wait for commit.sleep(&log, &log.lock);} else {log.outstanding += 1;release(&log.lock);break;}}

}

在begin_op后,使用log_write将需要被写入的buf添加到日志中。若多进程都要对同一盘块进行写入,只进行一次写入块(log.lh.block)的标记,被称为吸收(absorption)。最后使用bpin增加buffer.refcnt,防止该buffer被释放重用:

// Caller has modified b->data and is done with the buffer.

// Record the block number and pin in the cache by increasing refcnt.

// commit()/write_log() will do the disk write.

//

// log_write() replaces bwrite(); a typical use is:

// bp = bread(...)

// modify bp->data[]

// log_write(bp)

// brelse(bp)

void

log_write(struct buf *b)

{int i;acquire(&log.lock);if (log.lh.n >= LOGSIZE || log.lh.n >= log.size - 1)panic("too big a transaction");if (log.outstanding < 1)panic("log_write outside of trans");for (i = 0; i < log.lh.n; i++) {if (log.lh.block[i] == b->blockno) // log absorptionbreak;}log.lh.block[i] = b->blockno;if (i == log.lh.n) { // Add new block to log?bpin(b);log.lh.n++;}release(&log.lock);

}

之后调用end_op函数标记事务的结束,首先递减 log.outstanding(正在执行的与文件系统相关的系统调用数量)。如果计数为零,则通过调用commit() 来提交当前事务:

// called at the end of each FS system call.

// commits if this was the last outstanding operation.

void

end_op(void)

{int do_commit = 0;acquire(&log.lock);log.outstanding -= 1;if(log.committing)panic("log.committing");if(log.outstanding == 0){do_commit = 1;log.committing = 1;} else {// begin_op() may be waiting for log space,// and decrementing log.outstanding has decreased// the amount of reserved space.wakeup(&log);}release(&log.lock);if(do_commit){// call commit w/o holding locks, since not allowed// to sleep with locks.commit();acquire(&log.lock);log.committing = 0;wakeup(&log);release(&log.lock);}

}

commit函数分为四个阶段,write_log将事务中修改的buf从 buffer cache 复制到磁盘上的日志盘块中;write_head将logheader 块(包括需要写入的盘块号和数量)写到磁盘上,表明已提交,写完日志后的崩溃,会导致在重启后重新执行日志 ;install_trans从日志中读取每个块,并将其写到文件系统中对应位置;最后,修改日志块计数为0,write_head也只将硬盘日志的日志块计数改为0,表明日志块没有盘块需要写入硬盘:

static void

commit()

{if (log.lh.n > 0) {write_log(); // Write modified blocks from cache to logwrite_head(); // Write header to disk -- the real commitinstall_trans(0); // Now install writes to home locationslog.lh.n = 0;write_head(); // Erase the transaction from the log}

}

若系统崩溃了,在initlog里调用recover_from_log从日志盘块恢复:

static void

recover_from_log(void)

{read_head();install_trans(1); // if committed, copy from log to disklog.lh.n = 0;write_head(); // clear the log

}

inode用于索引各种文件,如目录、文件、设备(linux视为特殊文件)等。

ialloc函数用于分配空闲inode,遍历所有的inode条目(硬盘中的inode盘块),调用iget获取itable的空位(内存中的inode)。若inode在itable已存在,iget会返回这个inode位置:

// Allocate an inode on device dev.

// Mark it as allocated by giving it type type.

// Returns an unlocked but allocated and referenced inode,

// or NULL if there is no free inode.

struct inode*

ialloc(uint dev, short type)

{int inum;struct buf *bp;struct dinode *dip;for(inum = 1; inum < sb.ninodes; inum++){bp = bread(dev, IBLOCK(inum, sb));dip = (struct dinode*)bp->data + inum%IPB;if(dip->type == 0){ // a free inodememset(dip, 0, sizeof(*dip));dip->type = type;log_write(bp); // mark it allocated on the diskbrelse(bp);return iget(dev, inum);}brelse(bp);}printf("ialloc: no inodes\n");return 0;

}// Find the inode with number inum on device dev

// and return the in-memory copy. Does not lock

// the inode and does not read it from disk.

static struct inode*

iget(uint dev, uint inum)

{struct inode *ip, *empty;acquire(&itable.lock);// Is the inode already in the table?empty = 0;for(ip = &itable.inode[0]; ip < &itable.inode[NINODE]; ip++){if(ip->ref > 0 && ip->dev == dev && ip->inum == inum){ip->ref++;release(&itable.lock);return ip;}if(empty == 0 && ip->ref == 0) // Remember empty slot.empty = ip;}// Recycle an inode entry.if(empty == 0)panic("iget: no inodes");ip = empty;ip->dev = dev;ip->inum = inum;ip->ref = 1;ip->valid = 0;release(&itable.lock);return ip;

}

在读写inode的元数据或内容之前,代码必须使用 ilock 锁定。ilock使用sleeplock,若sleeplock已被获取则直接sleep。最后检查当前inode是否从硬盘读取:

// Lock the given inode.

// Reads the inode from disk if necessary.

void

ilock(struct inode *ip)

{struct buf *bp;struct dinode *dip;if(ip == 0 || ip->ref < 1)panic("ilock");acquiresleep(&ip->lock);if(ip->valid == 0){bp = bread(ip->dev, IBLOCK(ip->inum, sb));dip = (struct dinode*)bp->data + ip->inum%IPB;ip->type = dip->type;ip->major = dip->major;ip->minor = dip->minor;ip->nlink = dip->nlink;ip->size = dip->size;memmove(ip->addrs, dip->addrs, sizeof(ip->addrs));brelse(bp);ip->valid = 1;if(ip->type == 0)panic("ilock: no type");}

}

与iget相反的是iput,通过递减引用次数释放指向 inode 的指针。如果iput 发现没有指针指向该 inode ,并且没有任何目录项链接该 inode (不在任何目录中出现),那么该 inode 和它的数据块必须被释放:

// Drop a reference to an in-memory inode.

// If that was the last reference, the inode table entry can

// be recycled.

// If that was the last reference and the inode has no links

// to it, free the inode (and its content) on disk.

// All calls to iput() must be inside a transaction in

// case it has to free the inode.

void

iput(struct inode *ip)

{acquire(&itable.lock);if(ip->ref == 1 && ip->valid && ip->nlink == 0){// inode has no links and no other references: truncate and free.// ip->ref == 1 means no other process can have ip locked,// so this acquiresleep() won't block (or deadlock).acquiresleep(&ip->lock);release(&itable.lock);itrunc(ip);ip->type = 0;iupdate(ip);ip->valid = 0;releasesleep(&ip->lock);acquire(&itable.lock);}ip->ref--;release(&itable.lock);

}

iput调用itrunc通过遍历inode直接或间接指向的盘块,一一释放,最后调用iupdate将清除后的inode写回inode盘块,关于iput可能发生死锁和崩溃分析请看手册。若log中记录的nlink为0,但在最后一个ref的iput崩溃,log就不会记录清空inode使用的盘块。fs中会出现不在文件夹内(nlink上被删除),但bitmap中对应的盘块还未删除的情况,这样会导致硬盘资源耗尽,xv6并没有处理这种情况:

// Truncate inode (discard contents).

// Caller must hold ip->lock.

void

itrunc(struct inode *ip)

{int i, j;struct buf *bp;uint *a;for(i = 0; i < NDIRECT; i++){if(ip->addrs[i]){bfree(ip->dev, ip->addrs[i]);ip->addrs[i] = 0;}}if(ip->addrs[NDIRECT]){bp = bread(ip->dev, ip->addrs[NDIRECT]);a = (uint*)bp->data;for(j = 0; j < NINDIRECT; j++){if(a[j])bfree(ip->dev, a[j]);}brelse(bp);bfree(ip->dev, ip->addrs[NDIRECT]);ip->addrs[NDIRECT] = 0;}ip->size = 0;iupdate(ip);

}// Copy a modified in-memory inode to disk.

// Must be called after every change to an ip->xxx field

// that lives on disk.

// Caller must hold ip->lock.

void

iupdate(struct inode *ip)

{struct buf *bp;struct dinode *dip;bp = bread(ip->dev, IBLOCK(ip->inum, sb));dip = (struct dinode*)bp->data + ip->inum%IPB;dip->type = ip->type;dip->major = ip->major;dip->minor = ip->minor;dip->nlink = ip->nlink;dip->size = ip->size;memmove(dip->addrs, ip->addrs, sizeof(ip->addrs));log_write(bp);brelse(bp);

}

由于inode分直接和间接盘块,因此xv6封装了bmap(struct inode *ip, uint bn)去简化获取盘块号,bn为inode的第几个数据块。若没有第 bn 个的数据块, bmap 就会分配一个:

// Return the disk block address of the nth block in inode ip.

// If there is no such block, bmap allocates one.

// returns 0 if out of disk space.

static uint

bmap(struct inode *ip, uint bn)

{uint addr, *a;struct buf *bp;if(bn < NDIRECT){if((addr = ip->addrs[bn]) == 0){addr = balloc(ip->dev);if(addr == 0)return 0;ip->addrs[bn] = addr;}return addr;}bn -= NDIRECT;if(bn < NINDIRECT){// Load indirect block, allocating if necessary.if((addr = ip->addrs[NDIRECT]) == 0){addr = balloc(ip->dev);if(addr == 0)return 0;ip->addrs[NDIRECT] = addr;}bp = bread(ip->dev, addr);a = (uint*)bp->data;if((addr = a[bn]) == 0){addr = balloc(ip->dev);if(addr){a[bn] = addr;log_write(bp);}}brelse(bp);return addr;}panic("bmap: out of range");

}

而readi和writei则进一步封装,只需要给出偏移即可读写,循环读取inode盘块并将内容copy到指定地址:

// Read data from inode.

// Caller must hold ip->lock.

// If user_dst==1, then dst is a user virtual address;

// otherwise, dst is a kernel address.

int

readi(struct inode *ip, int user_dst, uint64 dst, uint off, uint n)

{uint tot, m;struct buf *bp;if(off > ip->size || off + n < off)return 0;if(off + n > ip->size)n = ip->size - off;for(tot=0; tot<n; tot+=m, off+=m, dst+=m){uint addr = bmap(ip, off/BSIZE);if(addr == 0)break;bp = bread(ip->dev, addr);m = min(n - tot, BSIZE - off%BSIZE);if(either_copyout(user_dst, dst, bp->data + (off % BSIZE), m) == -1) {brelse(bp);tot = -1;break;}brelse(bp);}return tot;

}

stati函数可以获取inode信息,Linux的是stat系统调用能够用来判断文件是否存在和文件类型:

// Copy stat information from inode.

// Caller must hold ip->lock.

void

stati(struct inode *ip, struct stat *st)

{st->dev = ip->dev;st->ino = ip->inum;st->type = ip->type;st->nlink = ip->nlink;st->size = ip->size;

}

目录是inode类型的一种,每个条目是一个结构体dirent,它包含一个名称和一个 inode 号:

struct dirent {ushort inum;char name[DIRSIZ];

};

dirlookup函数在一个目录中搜索一个带有给定名称的条目,如果找到返回一个指向相应 inode 的指针,并将 *poff 设置为目录中条目的字节偏移量, 以便调用者使用:

// Look for a directory entry in a directory.

// If found, set *poff to byte offset of entry.

struct inode*

dirlookup(struct inode *dp, char *name, uint *poff)

{uint off, inum;struct dirent de;if(dp->type != T_DIR)panic("dirlookup not DIR");for(off = 0; off < dp->size; off += sizeof(de)){if(readi(dp, 0, (uint64)&de, off, sizeof(de)) != sizeof(de))panic("dirlookup read");if(de.inum == 0)continue;if(namecmp(name, de.name) == 0){// entry matches path elementif(poff)*poff = off;inum = de.inum;return iget(dp->dev, inum);}}return 0;

}

dirlink函数会在当前目录dp中创建一个新的目录项,若存在同名目录则返回-1。循环找空闲条目写入:

// Write a new directory entry (name, inum) into the directory dp.

// Returns 0 on success, -1 on failure (e.g. out of disk blocks).

int

dirlink(struct inode *dp, char *name, uint inum)

{int off;struct dirent de;struct inode *ip;// Check that name is not present.if((ip = dirlookup(dp, name, 0)) != 0){iput(ip);return -1;}// Look for an empty dirent.for(off = 0; off < dp->size; off += sizeof(de)){if(readi(dp, 0, (uint64)&de, off, sizeof(de)) != sizeof(de))panic("dirlink read");if(de.inum == 0)break;}strncpy(de.name, name, DIRSIZ);de.inum = inum;if(writei(dp, 0, (uint64)&de, off, sizeof(de)) != sizeof(de))return -1;return 0;

}

namei解析路径并返回相应的 inode,nameiparent返回父目录,这俩都是通过namex实现:

struct inode*

namei(char *path)

{char name[DIRSIZ];return namex(path, 0, name);

}struct inode*

nameiparent(char *path, char *name)

{return namex(path, 1, name);

}

namex函数首先确定路径解析从哪里开始。如果路径以斜线开头,则从根目录开始解析 ;否则,从当前目录开始解析。然后使用 skipelem 遍历路径中的每个元素(/a/b/c -> a, b, c顺序遍历获取文件夹名称),返回值是去掉当前路径元素后剩余的路径部分,且去除前导斜杠。在该文件夹下继续搜索下一个文件夹或搜索到文件。namx并不能搜索绝对路径:

// Look up and return the inode for a path name.

// If parent != 0, return the inode for the parent and copy the final

// path element into name, which must have room for DIRSIZ bytes.

// Must be called inside a transaction since it calls iput().

static struct inode*

namex(char *path, int nameiparent, char *name)

{struct inode *ip, *next;if(*path == '/')ip = iget(ROOTDEV, ROOTINO);elseip = idup(myproc()->cwd);while((path = skipelem(path, name)) != 0){ilock(ip);if(ip->type != T_DIR){iunlockput(ip);return 0;}if(nameiparent && *path == '\0'){// Stop one level early.iunlock(ip);return ip;}if((next = dirlookup(ip, name, 0)) == 0){iunlockput(ip);return 0;}iunlockput(ip);ip = next;}if(nameiparent){iput(ip);return 0;}return ip;

}

xv6和linux一样,大部分资源以文件形式表示,例如pipe、inode、设备等,文件描述符层就是实现这种统一性的一层:

struct file {enum { FD_NONE, FD_PIPE, FD_INODE, FD_DEVICE } type;int ref; // reference countchar readable;char writable;struct pipe *pipe; // FD_PIPEstruct inode *ip; // FD_INODE and FD_DEVICEuint off; // FD_INODEshort major; // FD_DEVICE

};

系统中所有打开的文件都保存在一个全局文件表中,即 ftable。文件表的功能有分配文件 filealloc(找ftable的空闲file) 、创建重复引用 filedup(ref++)、释放引用 fileclose(ref–和ref==0时释放资源)、读写数据 fileeread 和 filewrite(根据file类型执行对应读写操作)、读取file信息filestat(读取inode信息)。

fs实验Large files

实验要求将inode索引的NDIRECT(12)+NINDIRECT(1)=13个盘块地址改成NDIRECT(11)+NINDIRECT(1)+NINDIRECT2(1,2级间接盘块)=13。从原来能表示12+1×256=268个盘块,变成11+1×256+1×256×256=65803个盘块。

先改变fs.h和file里inode相关定义:

通过全局搜索使用NINDIRECT的函数,发现有三个bmap(用于将逻辑块映射到硬件盘块)、itrunc(清空inode索引的硬盘空间)和iappend(在制作文件系统里,用于将数据追加到inode末尾)。

bmap里添加映射2级间接索引硬盘部分,先找到2级间接索引的第1级索引硬盘,再去第1级索引硬盘里找第2级索引硬盘,这里需要注意的是,每次bread完都需要brelse释放,否则会耗尽buffer cache:

// Return the disk block address of the nth block in inode ip.

// If there is no such block, bmap allocates one.

static uint

bmap(struct inode *ip, uint bn)

{uint addr, *a;struct buf *bp;if(bn < NDIRECT){if((addr = ip->addrs[bn]) == 0)ip->addrs[bn] = addr = balloc(ip->dev);return addr;}bn -= NDIRECT;if(bn < NINDIRECT){// Load indirect block, allocating if necessary.if((addr = ip->addrs[NDIRECT]) == 0)ip->addrs[NDIRECT] = addr = balloc(ip->dev);bp = bread(ip->dev, addr);a = (uint*)bp->data;if((addr = a[bn]) == 0){a[bn] = addr = balloc(ip->dev);log_write(bp);}brelse(bp);return addr;}bn -= NINDIRECT;if(bn < NINDIRECT2){if((addr = ip->addrs[NDIRECT+1]) == 0){ip->addrs[NDIRECT+1] = addr = balloc(ip->dev);}bp = bread(ip->dev, addr);a = (uint*)bp->data;if((addr = a[bn/NINDIRECT]) == 0){a[bn/NINDIRECT] = addr = balloc(ip->dev);log_write(bp);}brelse(bp);bp = bread(ip->dev, addr);a = (uint*)bp->data;if((addr = a[bn%NINDIRECT]) == 0){a[bn%NINDIRECT] = addr = balloc(ip->dev);log_write(bp);}brelse(bp);return addr;}panic("bmap: out of range");

}

itrunc函数同上:

// Truncate inode (discard contents).

// Caller must hold ip->lock.

void

itrunc(struct inode *ip)

{int i, j, k;struct buf *bp, *bp2;uint *a, *b;for(i = 0; i < NDIRECT; i++){if(ip->addrs[i]){bfree(ip->dev, ip->addrs[i]);ip->addrs[i] = 0;}}if(ip->addrs[NDIRECT]){bp = bread(ip->dev, ip->addrs[NDIRECT]);a = (uint*)bp->data;for(j = 0; j < NINDIRECT; j++){if(a[j])bfree(ip->dev, a[j]);}brelse(bp);bfree(ip->dev, ip->addrs[NDIRECT]);ip->addrs[NDIRECT] = 0;}if(ip->addrs[NDIRECT+1]){bp = bread(ip->dev, ip->addrs[NDIRECT+1]);a = (uint*)bp->data;for(j = 0; j < NINDIRECT; j++){if(a[j]){bp2 = bread(ip->dev, a[j]);b = (uint*)bp2->data;for(k = 0; k < NINDIRECT; k++){if(b[k]){bfree(ip->dev, b[k]);}}brelse(bp2);bfree(ip->dev, a[j]);}}brelse(bp);bfree(ip->dev, ip->addrs[NDIRECT+1]);ip->addrs[NDIRECT+1] = 0;}ip->size = 0;iupdate(ip);

}