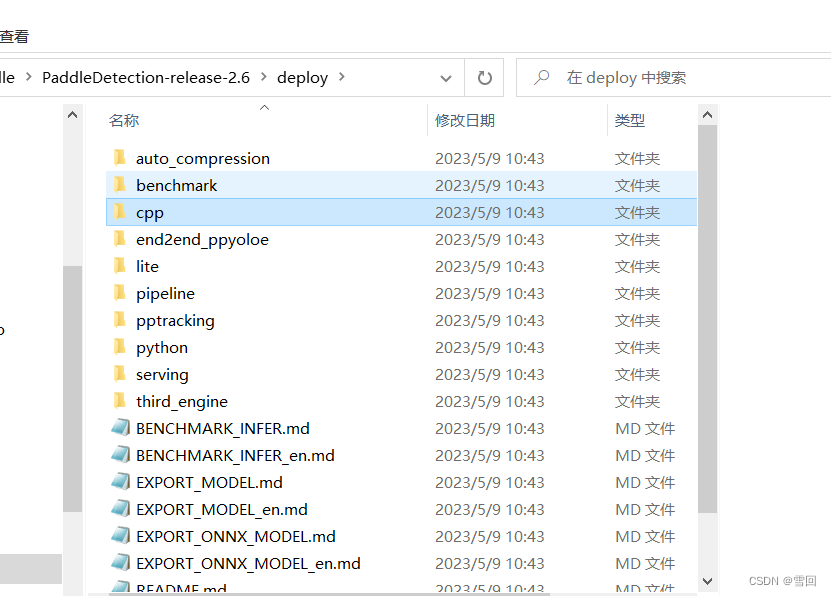

I. Download the source code

Official repository:

https://gitee.com/paddlepaddle/PaddleDetection

Baidu Netdisk mirrors:

paddledetection

Link: https://pan.baidu.com/s/1g0z5SYQNDR1pwe9iAtvR3A?pwd=ktl6

Extraction code: ktl6

paddleocr

Link: https://pan.baidu.com/s/1QcLbGJD7NB9UVPbUAulCuA?pwd=o68i

Extraction code: o68i

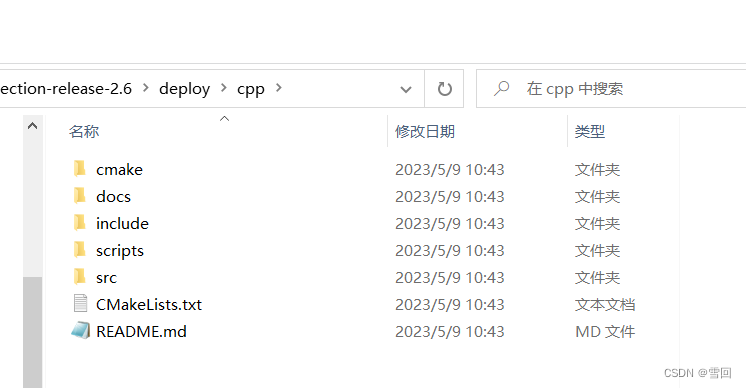

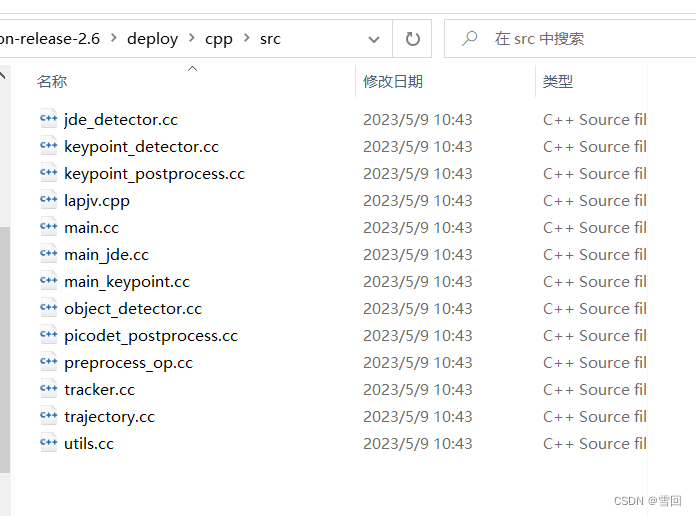

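II. Locate the C++ deployment code

For consistency, I renamed every file in the src folder to use the .cpp extension.

III. Modify CMakeLists.txt

The original CMake file is quite verbose, so I rewrote it as a simpler version.

When porting it to another machine, you only need to change the header paths and the library paths.

1. Before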

cmake_minimum_required(VERSION 3.0)
project(PaddleObjectDetector CXX C)

option(WITH_MKL "Compile demo with MKL/OpenBlas support,defaultuseMKL." ON)
option(WITH_GPU "Compile demo with GPU/CPU, default use CPU." ON)
option(WITH_TENSORRT "Compile demo with TensorRT." OFF)
option(WITH_KEYPOINT "Whether to Compile KeyPoint detector" OFF)
option(WITH_MOT "Whether to Compile MOT detector" OFF)

SET(PADDLE_DIR "" CACHE PATH "Location of libraries")
SET(PADDLE_LIB_NAME "" CACHE STRING "libpaddle_inference")
SET(OPENCV_DIR "" CACHE PATH "Location of libraries")
SET(CUDA_LIB "" CACHE PATH "Location of libraries")
SET(CUDNN_LIB "" CACHE PATH "Location of libraries")
SET(TENSORRT_INC_DIR "" CACHE PATH "Compile demo with TensorRT")
SET(TENSORRT_LIB_DIR "" CACHE PATH "Compile demo with TensorRT")

include(cmake/yaml-cpp.cmake)

include_directories("${CMAKE_SOURCE_DIR}/")
include_directories("${CMAKE_CURRENT_BINARY_DIR}/ext/yaml-cpp/src/ext-yaml-cpp/include")
link_directories("${CMAKE_CURRENT_BINARY_DIR}/ext/yaml-cpp/lib")

if (WITH_KEYPOINT)
  set(SRCS src/main_keypoint.cc src/preprocess_op.cc src/object_detector.cc src/picodet_postprocess.cc src/utils.cc src/keypoint_detector.cc src/keypoint_postprocess.cc)
elseif (WITH_MOT)
  set(SRCS src/main_jde.cc src/preprocess_op.cc src/object_detector.cc src/jde_detector.cc src/tracker.cc src/trajectory.cc src/lapjv.cpp src/picodet_postprocess.cc src/utils.cc)
else ()
  set(SRCS src/main.cc src/preprocess_op.cc src/object_detector.cc src/picodet_postprocess.cc src/utils.cc)
endif()

macro(safe_set_static_flag)
  foreach(flag_var
      CMAKE_CXX_FLAGS CMAKE_CXX_FLAGS_DEBUG CMAKE_CXX_FLAGS_RELEASE
      CMAKE_CXX_FLAGS_MINSIZEREL CMAKE_CXX_FLAGS_RELWITHDEBINFO)
    if(${flag_var} MATCHES "/MD")
      string(REGEX REPLACE "/MD" "/MT" ${flag_var} "${${flag_var}}")
    endif(${flag_var} MATCHES "/MD")
  endforeach(flag_var)
endmacro()

if (WITH_MKL)
  ADD_DEFINITIONS(-DUSE_MKL)
endif()

if (NOT DEFINED PADDLE_DIR OR ${PADDLE_DIR} STREQUAL "")
  message(FATAL_ERROR "please set PADDLE_DIR with -DPADDLE_DIR=/path/paddle_influence_dir")
endif()

message("PADDLE_DIR IS:" ${PADDLE_DIR})

if (NOT DEFINED OPENCV_DIR OR ${OPENCV_DIR} STREQUAL "")
  message(FATAL_ERROR "please set OPENCV_DIR with -DOPENCV_DIR=/path/opencv")
endif()

include_directories("${CMAKE_SOURCE_DIR}/")
include_directories("${PADDLE_DIR}/")
include_directories("${PADDLE_DIR}/third_party/install/protobuf/include")
include_directories("${PADDLE_DIR}/third_party/install/glog/include")
include_directories("${PADDLE_DIR}/third_party/install/gflags/include")
include_directories("${PADDLE_DIR}/third_party/install/xxhash/include")
if (EXISTS "${PADDLE_DIR}/third_party/install/snappy/include")
  include_directories("${PADDLE_DIR}/third_party/install/snappy/include")
endif()
if(EXISTS "${PADDLE_DIR}/third_party/install/snappystream/include")
  include_directories("${PADDLE_DIR}/third_party/install/snappystream/include")
endif()
include_directories("${PADDLE_DIR}/third_party/boost")
include_directories("${PADDLE_DIR}/third_party/eigen3")

if (EXISTS "${PADDLE_DIR}/third_party/install/snappy/lib")
  link_directories("${PADDLE_DIR}/third_party/install/snappy/lib")
endif()
if(EXISTS "${PADDLE_DIR}/third_party/install/snappystream/lib")
  link_directories("${PADDLE_DIR}/third_party/install/snappystream/lib")
endif()

link_directories("${PADDLE_DIR}/third_party/install/protobuf/lib")
link_directories("${PADDLE_DIR}/third_party/install/glog/lib")
link_directories("${PADDLE_DIR}/third_party/install/gflags/lib")
link_directories("${PADDLE_DIR}/third_party/install/xxhash/lib")
link_directories("${PADDLE_DIR}/third_party/install/paddle2onnx/lib")
link_directories("${PADDLE_DIR}/third_party/install/onnxruntime/lib")
link_directories("${PADDLE_DIR}/paddle/lib/")
link_directories("${CMAKE_CURRENT_BINARY_DIR}")

if (WIN32)
  include_directories("${PADDLE_DIR}/paddle/fluid/inference")
  include_directories("${PADDLE_DIR}/paddle/include")
  link_directories("${PADDLE_DIR}/paddle/fluid/inference")
  find_package(OpenCV REQUIRED PATHS ${OPENCV_DIR}/build/ NO_DEFAULT_PATH)
else ()
  find_package(OpenCV REQUIRED PATHS ${OPENCV_DIR}/share/OpenCV NO_DEFAULT_PATH)
  include_directories("${PADDLE_DIR}/paddle/include")
  link_directories("${PADDLE_DIR}/paddle/lib")
endif ()
include_directories(${OpenCV_INCLUDE_DIRS})

if (WIN32)
  add_definitions("/DGOOGLE_GLOG_DLL_DECL=")
  set(CMAKE_C_FLAGS_DEBUG "${CMAKE_C_FLAGS_DEBUG} /bigobj /MTd")
  set(CMAKE_C_FLAGS_RELEASE "${CMAKE_C_FLAGS_RELEASE} /bigobj /MT")
  set(CMAKE_CXX_FLAGS_DEBUG "${CMAKE_CXX_FLAGS_DEBUG} /bigobj /MTd")
  set(CMAKE_CXX_FLAGS_RELEASE "${CMAKE_CXX_FLAGS_RELEASE} /bigobj /MT")
else()
  set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -g -o2 -fopenmp -std=c++11")
  set(CMAKE_STATIC_LIBRARY_PREFIX "")
endif()

# TODO let users define cuda lib path

if (WITH_GPU)
  if (NOT DEFINED CUDA_LIB OR ${CUDA_LIB} STREQUAL "")
    message(FATAL_ERROR "please set CUDA_LIB with -DCUDA_LIB=/path/cuda-8.0/lib64")
  endif()
  if (NOT WIN32)
    if (NOT DEFINED CUDNN_LIB)
      message(FATAL_ERROR "please set CUDNN_LIB with -DCUDNN_LIB=/path/cudnn_v7.4/cuda/lib64")
    endif()
  endif(NOT WIN32)
endif()

if (NOT WIN32)
  if (WITH_TENSORRT AND WITH_GPU)
    include_directories("${TENSORRT_INC_DIR}/")
    link_directories("${TENSORRT_LIB_DIR}/")
  endif()
endif(NOT WIN32)

if (NOT WIN32)
  set(NGRAPH_PATH "${PADDLE_DIR}/third_party/install/ngraph")
  if(EXISTS ${NGRAPH_PATH})
    include(GNUInstallDirs)
    include_directories("${NGRAPH_PATH}/include")
    link_directories("${NGRAPH_PATH}/${CMAKE_INSTALL_LIBDIR}")
    set(NGRAPH_LIB ${NGRAPH_PATH}/${CMAKE_INSTALL_LIBDIR}/libngraph${CMAKE_SHARED_LIBRARY_SUFFIX})
  endif()
endif()

if(WITH_MKL)
  include_directories("${PADDLE_DIR}/third_party/install/mklml/include")
  if (WIN32)
    set(MATH_LIB ${PADDLE_DIR}/third_party/install/mklml/lib/mklml.lib
                 ${PADDLE_DIR}/third_party/install/mklml/lib/libiomp5md.lib)
  else ()
    set(MATH_LIB ${PADDLE_DIR}/third_party/install/mklml/lib/libmklml_intel${CMAKE_SHARED_LIBRARY_SUFFIX}
                 ${PADDLE_DIR}/third_party/install/mklml/lib/libiomp5${CMAKE_SHARED_LIBRARY_SUFFIX})
    execute_process(COMMAND cp -r ${PADDLE_DIR}/third_party/install/mklml/lib/libmklml_intel${CMAKE_SHARED_LIBRARY_SUFFIX} /usr/lib)
  endif ()
  set(MKLDNN_PATH "${PADDLE_DIR}/third_party/install/mkldnn")
  if(EXISTS ${MKLDNN_PATH})
    include_directories("${MKLDNN_PATH}/include")
    if (WIN32)
      set(MKLDNN_LIB ${MKLDNN_PATH}/lib/mkldnn.lib)
    else ()
      set(MKLDNN_LIB ${MKLDNN_PATH}/lib/libmkldnn.so.0)
    endif ()
  endif()
else()
  set(MATH_LIB ${PADDLE_DIR}/third_party/install/openblas/lib/libopenblas${CMAKE_STATIC_LIBRARY_SUFFIX})
endif()

if (WIN32)
  if(EXISTS "${PADDLE_DIR}/paddle/fluid/inference/${PADDLE_LIB_NAME}${CMAKE_STATIC_LIBRARY_SUFFIX}")
    set(DEPS
        ${PADDLE_DIR}/paddle/fluid/inference/${PADDLE_LIB_NAME}${CMAKE_STATIC_LIBRARY_SUFFIX})
  else()
    set(DEPS
        ${PADDLE_DIR}/paddle/lib/${PADDLE_LIB_NAME}${CMAKE_STATIC_LIBRARY_SUFFIX})
  endif()
endif()

if (WIN32)
  set(DEPS ${PADDLE_DIR}/paddle/lib/${PADDLE_LIB_NAME}${CMAKE_STATIC_LIBRARY_SUFFIX})
else()
  set(DEPS ${PADDLE_DIR}/paddle/lib/${PADDLE_LIB_NAME}${CMAKE_SHARED_LIBRARY_SUFFIX})
endif()

message("PADDLE_LIB_NAME:" ${PADDLE_LIB_NAME})
message("DEPS:" $DEPS)

if (NOT WIN32)
  set(DEPS ${DEPS}
      ${MATH_LIB} ${MKLDNN_LIB}
      glog gflags protobuf z xxhash yaml-cpp)
  if(EXISTS "${PADDLE_DIR}/third_party/install/snappystream/lib")
    set(DEPS ${DEPS} snappystream)
  endif()
  if (EXISTS "${PADDLE_DIR}/third_party/install/snappy/lib")
    set(DEPS ${DEPS} snappy)
  endif()
else()
  set(DEPS ${DEPS}
      ${MATH_LIB} ${MKLDNN_LIB}
      glog gflags_static libprotobuf xxhash libyaml-cppmt)
  set(DEPS ${DEPS} libcmt shlwapi)
  if (EXISTS "${PADDLE_DIR}/third_party/install/snappy/lib")
    set(DEPS ${DEPS} snappy)
  endif()
  if(EXISTS "${PADDLE_DIR}/third_party/install/snappystream/lib")
    set(DEPS ${DEPS} snappystream)
  endif()
endif(NOT WIN32)

if(WITH_GPU)
  if(NOT WIN32)
    if (WITH_TENSORRT)
      set(DEPS ${DEPS} ${TENSORRT_LIB_DIR}/libnvinfer${CMAKE_SHARED_LIBRARY_SUFFIX})
      set(DEPS ${DEPS} ${TENSORRT_LIB_DIR}/libnvinfer_plugin${CMAKE_SHARED_LIBRARY_SUFFIX})
    endif()
    set(DEPS ${DEPS} ${CUDA_LIB}/libcudart${CMAKE_SHARED_LIBRARY_SUFFIX})
    set(DEPS ${DEPS} ${CUDNN_LIB}/libcudnn${CMAKE_SHARED_LIBRARY_SUFFIX})
  else()
    set(DEPS ${DEPS} ${CUDA_LIB}/cudart${CMAKE_STATIC_LIBRARY_SUFFIX} )
    set(DEPS ${DEPS} ${CUDA_LIB}/cublas${CMAKE_STATIC_LIBRARY_SUFFIX} )
    set(DEPS ${DEPS} ${CUDNN_LIB}/cudnn${CMAKE_STATIC_LIBRARY_SUFFIX})
  endif()
endif()

if (NOT WIN32)
  set(EXTERNAL_LIB "-ldl -lrt -lgomp -lz -lm -lpthread")
  set(DEPS ${DEPS} ${EXTERNAL_LIB})
endif()

set(DEPS ${DEPS} ${OpenCV_LIBS})

add_executable(main ${SRCS})
ADD_DEPENDENCIES(main ext-yaml-cpp)
message("DEPS:" $DEPS)
target_link_libraries(main ${DEPS})

if (WIN32 AND WITH_MKL)
  add_custom_command(TARGET main POST_BUILD
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/mklml.dll ./mklml.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/libiomp5md.dll ./libiomp5md.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mkldnn/lib/mkldnn.dll ./mkldnn.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/mklml.dll ./release/mklml.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/libiomp5md.dll ./release/libiomp5md.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mkldnn/lib/mkldnn.dll ./release/mkldnn.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/paddle/lib/${PADDLE_LIB_NAME}.dll ./release/${PADDLE_LIB_NAME}.dll
  )
endif()

if (WIN32 AND NOT WITH_MKL)
  add_custom_command(TARGET main POST_BUILD
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/openblas/lib/openblas.dll ./openblas.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/openblas/lib/openblas.dll ./release/openblas.dll
  )
endif()

if (WIN32)
  add_custom_command(TARGET main POST_BUILD
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/onnxruntime/lib/onnxruntime.dll ./onnxruntime.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/paddle2onnx/lib/paddle2onnx.dll ./paddle2onnx.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/onnxruntime/lib/onnxruntime.dll ./release/onnxruntime.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/paddle2onnx/lib/paddle2onnx.dll ./release/paddle2onnx.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/paddle/lib/${PADDLE_LIB_NAME}.dll ./release/${PADDLE_LIB_NAME}.dll
  )
endif()

2. After

cmake_minimum_required(VERSION 3.5)

set(CMAKE_BUILD_TYPE "Release") # option: Debug / Release
if (CMAKE_BUILD_TYPE MATCHES "Debug" OR CMAKE_BUILD_TYPE MATCHES "None")
  message(STATUS "CMAKE_BUILD_TYPE is Debug")
elseif (CMAKE_BUILD_TYPE MATCHES "Release")
  message(STATUS "CMAKE_BUILD_TYPE is Release")
endif()

# Set the C++ standard
set(CMAKE_CXX_STANDARD 20)

project(DsYolo)
set(CMAKE_WINDOWS_EXPORT_ALL_SYMBOLS ON)

option(WITH_MKL "Compile demo with MKL/OpenBlas support, default use MKL." ON)
option(WITH_GPU "Compile demo with GPU/CPU, default use CPU." ON)
option(WITH_STATIC_LIB "Compile demo with static/shared library, default use static." ON)
option(WITH_TENSORRT "Compile demo with TensorRT." ON)

macro(safe_set_static_flag)
  foreach(flag_var
      CMAKE_CXX_FLAGS CMAKE_CXX_FLAGS_DEBUG CMAKE_CXX_FLAGS_RELEASE
      CMAKE_CXX_FLAGS_MINSIZEREL CMAKE_CXX_FLAGS_RELWITHDEBINFO)
    if(${flag_var} MATCHES "/MD")
      string(REGEX REPLACE "/MD" "/MT" ${flag_var} "${${flag_var}}")
    endif(${flag_var} MATCHES "/MD")
  endforeach(flag_var)
endmacro()

if (WITH_MKL)
  ADD_DEFINITIONS(-DUSE_MKL)
endif()

if (MSVC)
  add_definitions(-w)
  #add_definitions(-W0)
endif()

if (WIN32)
  add_definitions("/DGOOGLE_GLOG_DLL_DECL=")
  set(CMAKE_C_FLAGS /source-charset:utf-8)
  add_definitions(-D_CRT_SECURE_NO_WARNINGS)
  add_definitions(-D_CRT_NONSTDC_NO_DEPRECATE)
  if(WITH_MKL)
    set(FLAG_OPENMP "/openmp")
  endif()
  set(CMAKE_C_FLAGS_DEBUG "${CMAKE_C_FLAGS_DEBUG} /bigobj /MTd ${FLAG_OPENMP}")
  set(CMAKE_C_FLAGS_RELEASE "${CMAKE_C_FLAGS_RELEASE} /bigobj /MT ${FLAG_OPENMP}")
  set(CMAKE_CXX_FLAGS_DEBUG "${CMAKE_CXX_FLAGS_DEBUG} /bigobj /MTd ${FLAG_OPENMP}")
  set(CMAKE_CXX_FLAGS_RELEASE "${CMAKE_CXX_FLAGS_RELEASE} /bigobj /MT ${FLAG_OPENMP}")
  if (WITH_STATIC_LIB)
    safe_set_static_flag()
    add_definitions(-DSTATIC_LIB)
  endif()
  message("cmake c debug flags " ${CMAKE_C_FLAGS_DEBUG})
  message("cmake c release flags " ${CMAKE_C_FLAGS_RELEASE})
  message("cmake cxx debug flags " ${CMAKE_CXX_FLAGS_DEBUG})
  message("cmake cxx release flags " ${CMAKE_CXX_FLAGS_RELEASE})

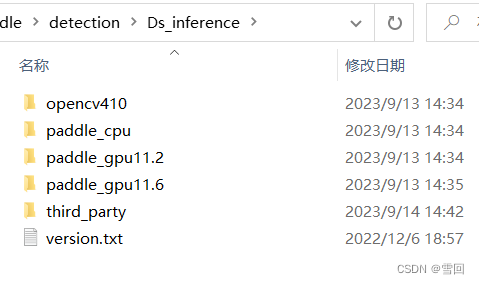

endif()

# Header files

include_directories(${PROJECT_SOURCE_DIR}/include)

#include_directories(/home/nvidia/paddleOCR/PaddleOCR-release-2.6/deploy/cpp_infer/include)

#include_directories(/home/nvidia/paddleOCR/PaddleOCR-release-2.6/deploy/cpp_infer)

#include_directories(/usr/include)

include_directories(./)

include_directories(./include)

include_directories(./Ds_inference/opencv410/include/opencv4)

include_directories(./Ds_inference/opencv410/include/opencv4/opencv2)

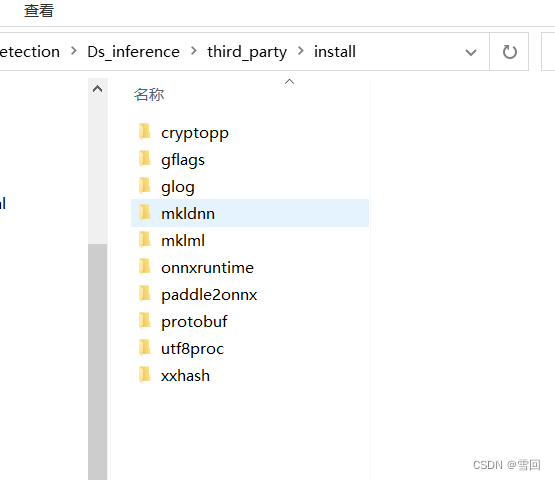

include_directories(./Ds_inference/third_party/install/mklml/include)

include_directories(./Ds_inference/third_party/install/mkldnn/include)

include_directories(./Ds_inference/third_party/install/glog/include)

include_directories(./Ds_inference/third_party/AutoLog-main)

include_directories(./Ds_inference/third_party/install/gflags/include)

include_directories(./Ds_inference/third_party/install/protobuf/include)

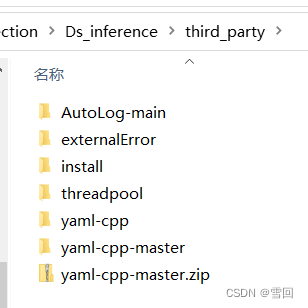

include_directories(./Ds_inference/third_party/threadpool)

include_directories(./Ds_inference/third_party/yaml-cpp)

include_directories(./Ds_inference/paddle_gpu11.6/include)

# Library files

#link_directories(/usr/lib)

link_directories(./Ds_inference/third_party/install/mklml/lib)

link_directories(./Ds_inference/third_party/install/mkldnn/lib)

link_directories(./Ds_inference/third_party/install/glog/lib)

link_directories(./Ds_inference/opencv410/lib)

link_directories(./Ds_inference/third_party/install/protobuf/lib)

link_directories(./Ds_inference/third_party/install/gflags/lib)

link_directories(./Ds_inference/paddle_gpu11.6/lib)

link_directories(./Ds_inference/third_party/yaml-cpp/lib)

aux_source_directory (src SRC_LIST)
add_executable (yoloV5 ${SRC_LIST})

set(LIBRARY_OUTPUT_PATH ${PROJECT_SOURCE_DIR}/bin)
set(output)

target_link_libraries(yoloV5
  opencv_world410
  #opencv_highgui opencv_core opencv_imgproc opencv_imgcodecs opencv_calib3d opencv_features2d opencv_videoio
  paddle_inference mklml libiomp5md mkldnn glog gflags_static libprotobuf libcmt shlwapi yaml-cpp
)

# Note: test this
set (EXECUTABLE_OUTPUT_PATH ${PROJECT_SOURCE_DIR}/bin)

IV. (Windows) Create a folder for the libraries needed to build the executable

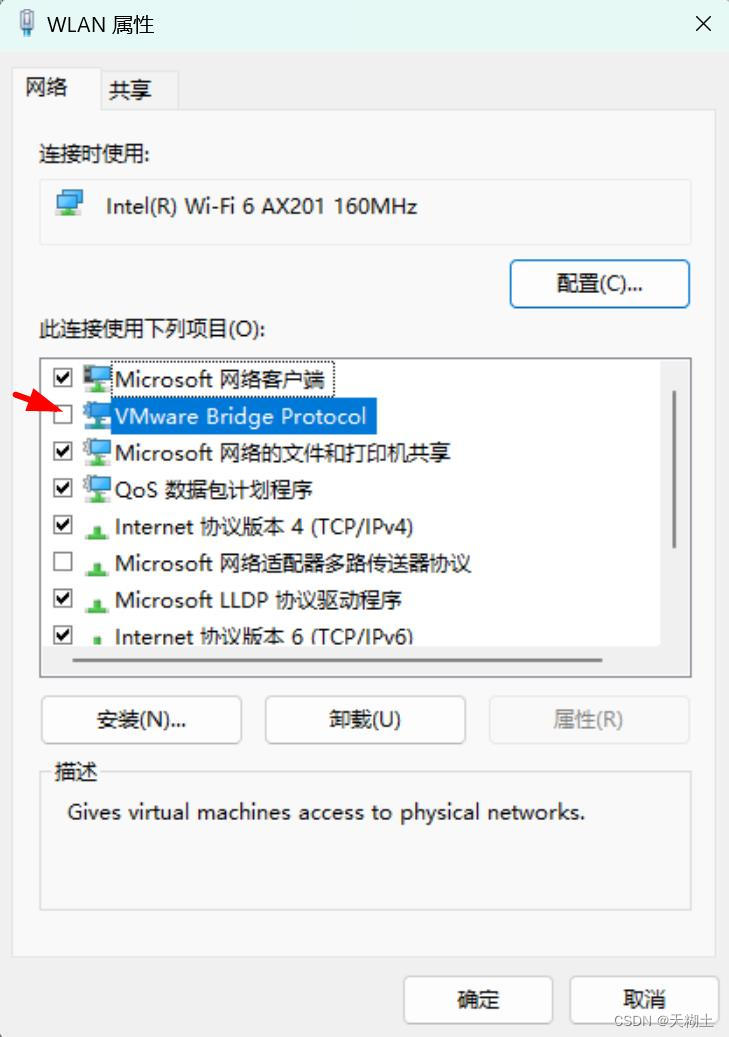

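To use the GPU, set up the CUDA environment variables beforehand. Once that is done, CMakeLists.txt does not need any extra lines to reference CUDA. For the setup steps, see http://t.csdn.cn/mgIMD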

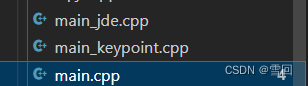

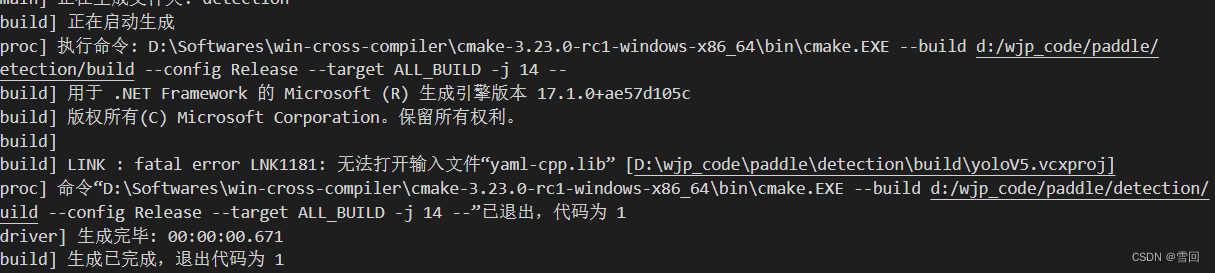

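V. Bugs encountered while compiling

1. xxx already defined in obj

Only one of the three main-related source files (main.cpp, main_keypoint.cpp, main_jde.cpp) can be compiled into the executable at a time. Decide which one you need and comment out all the code in the others. I used main.cpp. In general, this kind of error goes away once the duplicate definitions are commented out.

2. error C7555: … requires at least '/std:c++latest' when building on Win10

This means the C++ standard version is too low.

Find the line in CMakeLists.txt that sets the standard. I had previously written 17 there; changing it to 20 fixed the error.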

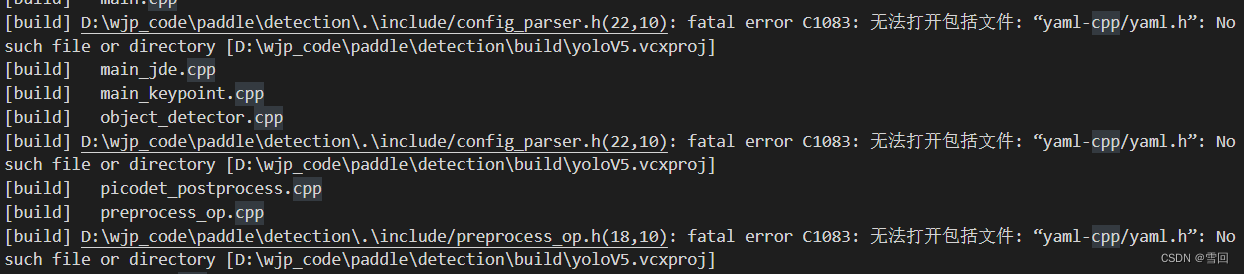
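To confirm which standard the compiler is really using after the change, a small check like the one below can help. It is only a sketch: `active_cpp_standard` and `print_cpp_standard` are hypothetical helper names, not part of PaddleDetection. On MSVC the `__cplusplus` macro stays at 199711L unless `/Zc:__cplusplus` is passed, so the sketch prefers `_MSVC_LANG` when it is defined.

```cpp
#include <iostream>

// Returns the language-standard value the compiler is actually using.
// MSVC keeps __cplusplus at 199711L unless /Zc:__cplusplus is passed,
// so _MSVC_LANG is preferred when it exists.
constexpr long active_cpp_standard() {
#if defined(_MSVC_LANG)
    return _MSVC_LANG;
#else
    return __cplusplus;
#endif
}

// Hypothetical helper: prints the value so it can be compared with the
// CMAKE_CXX_STANDARD setting (201703L means C++17, 202002L means C++20).
inline void print_cpp_standard() {
    std::cout << "C++ standard in use: " << active_cpp_standard() << std::endl;
}
```

Calling `print_cpp_standard()` at the top of `main` shows whether the CMake setting reached the compiler.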

3. fatal error C1083: Cannot open include file: "yaml-cpp/yaml.h": No such file or directory

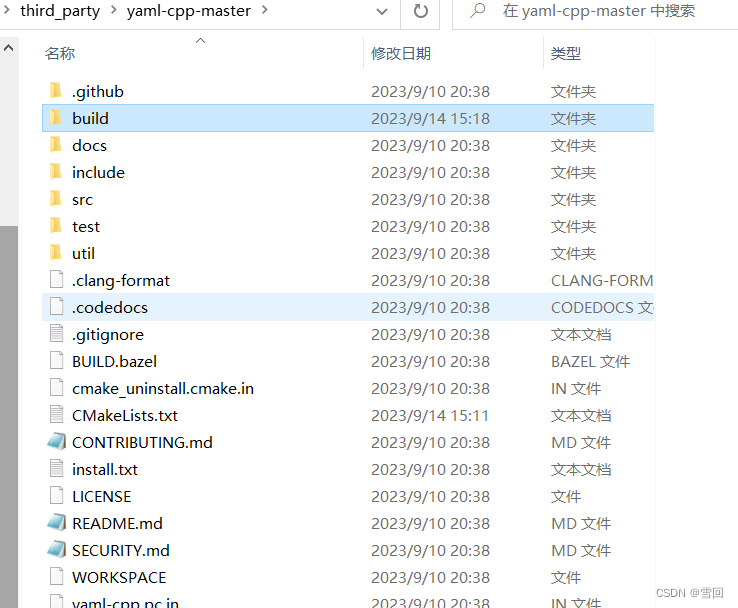

The original CMakeLists.txt actually pulls in include(cmake/yaml-cpp.cmake) to build yaml-cpp:

find_package(Git REQUIRED)
include(ExternalProject)
message("${CMAKE_BUILD_TYPE}")

ExternalProject_Add(
  ext-yaml-cpp
  URL https://bj.bcebos.com/paddlex/deploy/deps/yaml-cpp.zip
  URL_MD5 9542d6de397d1fbd649ed468cb5850e6
  CMAKE_ARGS
  -DYAML_CPP_BUILD_TESTS=OFF
  -DYAML_CPP_BUILD_TOOLS=OFF
  -DYAML_CPP_INSTALL=OFF
  -DYAML_CPP_BUILD_CONTRIB=OFF
  -DMSVC_SHARED_RT=OFF
  -DBUILD_SHARED_LIBS=OFF
  -DCMAKE_BUILD_TYPE=${CMAKE_BUILD_TYPE}
  -DCMAKE_CXX_FLAGS=${CMAKE_CXX_FLAGS}
  -DCMAKE_CXX_FLAGS_DEBUG=${CMAKE_CXX_FLAGS_DEBUG}
  -DCMAKE_CXX_FLAGS_RELEASE=${CMAKE_CXX_FLAGS_RELEASE}
  -DCMAKE_LIBRARY_OUTPUT_DIRECTORY=${CMAKE_BINARY_DIR}/ext/yaml-cpp/lib
  -DCMAKE_ARCHIVE_OUTPUT_DIRECTORY=${CMAKE_BINARY_DIR}/ext/yaml-cpp/lib
  PREFIX "${CMAKE_BINARY_DIR}/ext/yaml-cpp"
  # Disable install step
  INSTALL_COMMAND ""
  LOG_DOWNLOAD ON
  LOG_BUILD 1
)

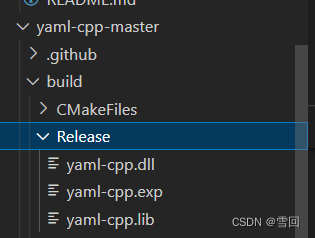

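I still preferred to build it myself, because in my experience anything that has to download source code remotely during the build tends to run into one problem or another.

Download the source:

https://github.com/jbeder/yaml-cpp

At first I assumed only the headers were missing, so I added the include path to CMakeLists.txt. After that: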

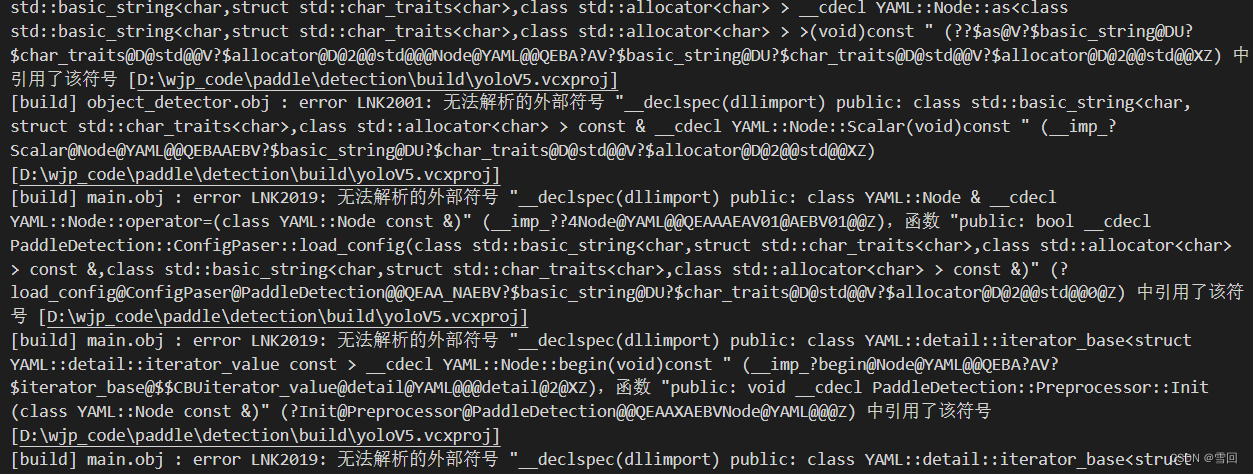

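An "unresolved external symbol" error appeared, which most likely means a library is missing, so I went on to build the yaml-cpp library.

This was a routine step: running cmake … produced a .lib file without trouble, but when I tried to use it the linker reported that it could not open the lib.

I regenerated the project to look for the cause.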

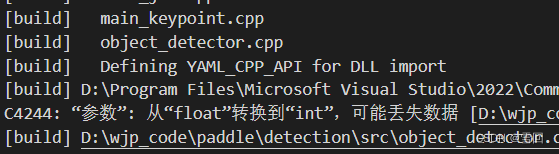

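I noticed the message "Defining YAML_CPP_API for DLL import", searched for that phrase, and found https://blog.csdn.net/m0_37833142/article/details/115180471.

The fix is to pass one extra option to cmake, after which the library could be used normally. I still need to study why this works.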

cmake -DYAML_BUILD_SHARED_LIBS=ON ..
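As background for the "DLL import" message, libraries on Windows commonly switch their API macro between `__declspec(dllexport)`, `__declspec(dllimport)`, and nothing, depending on how they are built and consumed. The sketch below shows that general pattern with hypothetical names (`DEMO_API`, `DEMO_DLL`, `DEMO_EXPORTS`, `demo_version`); it is not copied from yaml-cpp, and I am not claiming it is the exact cause of the error above. If the consumer compiles in "import" mode while the library was built as a static .lib (or the other way round), unresolved externals are a typical symptom.

```cpp
#include <string>

// Hypothetical macro names, modelled on the usual Windows pattern.
#if defined(_WIN32) && defined(DEMO_DLL)
  #if defined(DEMO_EXPORTS)
    #define DEMO_API __declspec(dllexport)  // compiling the DLL itself
  #else
    #define DEMO_API __declspec(dllimport)  // compiling code that uses the DLL
  #endif
#else
  #define DEMO_API                          // static library or non-Windows build
#endif

// Declaration as it would appear in the library's public header.
DEMO_API std::string demo_version();

// Definition, included here only to keep the sketch self-contained.
std::string demo_version() { return "demo-0.1"; }
```

The point of the sketch is that the library and the code using it have to agree on shared versus static; the cmake option above changes which kind of library gets built.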

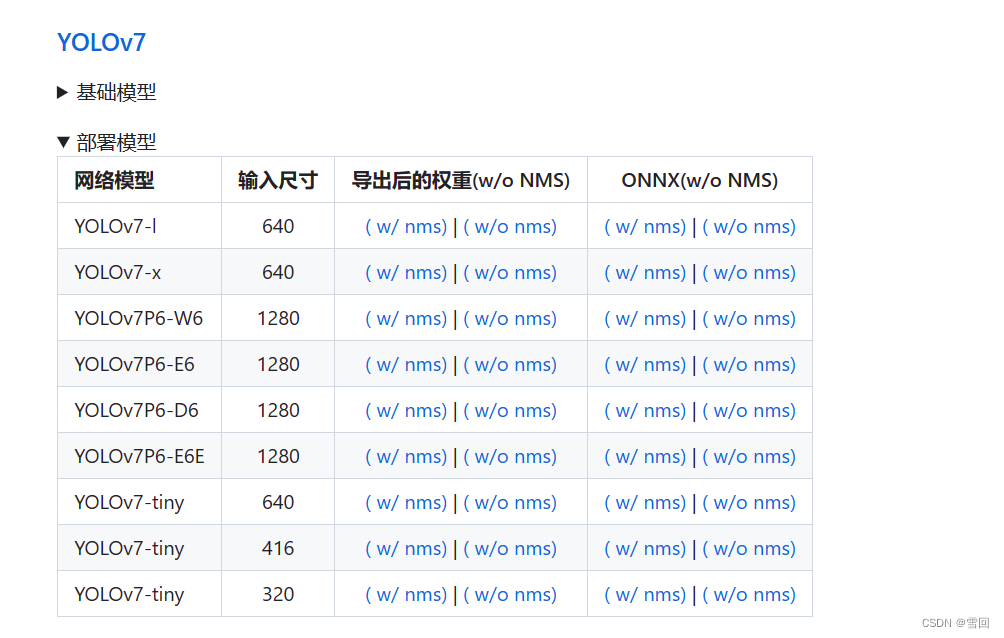

VI. Download a model and test

Download page:

https://github.com/PaddlePaddle/PaddleDetection/blob/release/2.5/docs/feature_models/PaddleYOLO_MODEL.md

It lists the whole YOLO family of models.

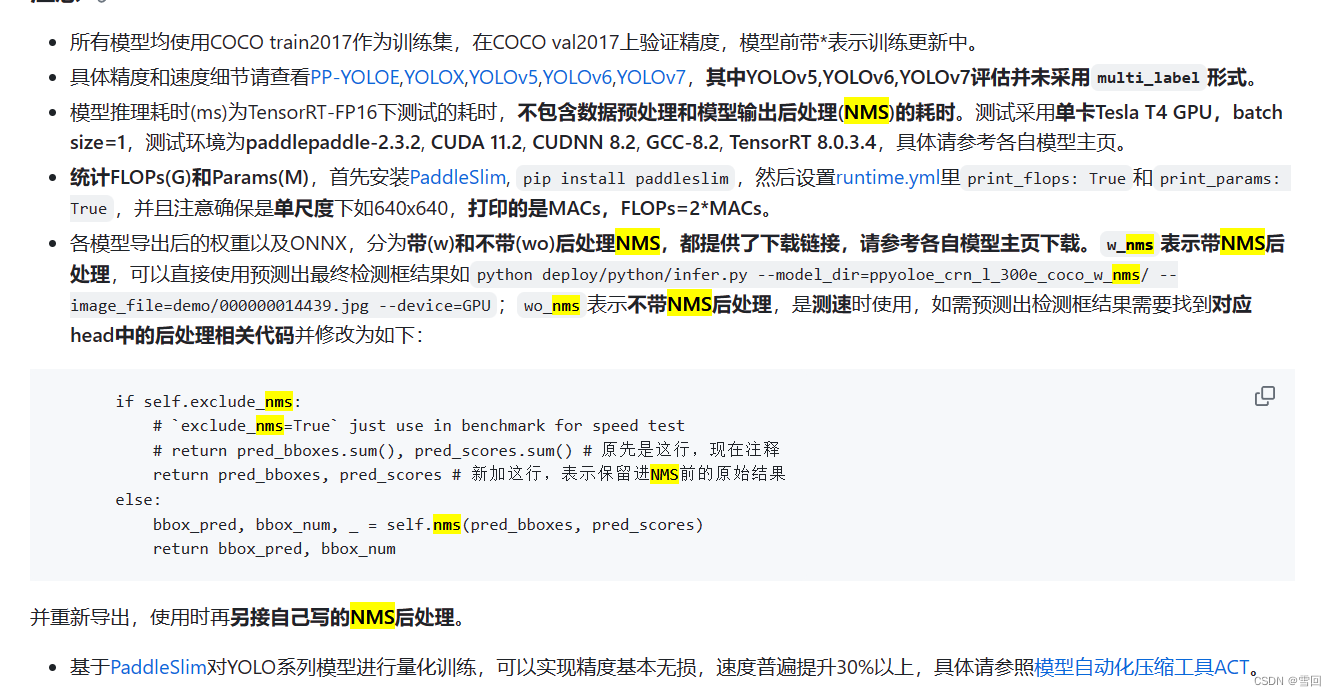

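Pick a model from the deployment models section and download it. The "exported weights" on the left are models in Paddle's own format; "onnx" on the right means ONNX-format models. w_nms means NMS post-processing is included, so the model can be used directly to predict the final detection boxes, for example:

python deploy/python/infer.py --model_dir=ppyoloe_crn_l_300e_coco_w_nms/ --image_file=demo/000000014439.jpg --device=GPU

wo_nms means NMS post-processing is not included; those models are meant for speed benchmarking.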

六.改源码

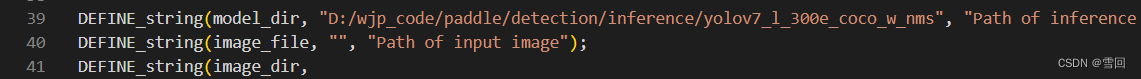

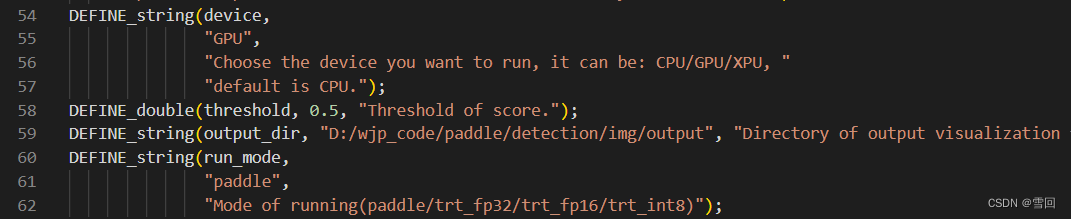

改main.cpp中的这些参数,改成自己装图片和模型的地址

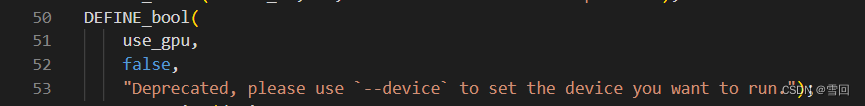

注意这个参数的值不要改,它的备注显示这个参数已经被废弃,写成true会停止运行

七.添加dll到exe目录

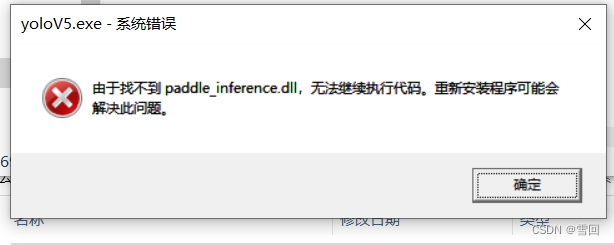

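If the required DLLs are not in the same directory as the exe, it fails immediately with: "The code execution cannot proceed because paddle_inference.dll was not found. Reinstalling the program may fix this problem." This is easy to fix: add whichever DLL is reported missing.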

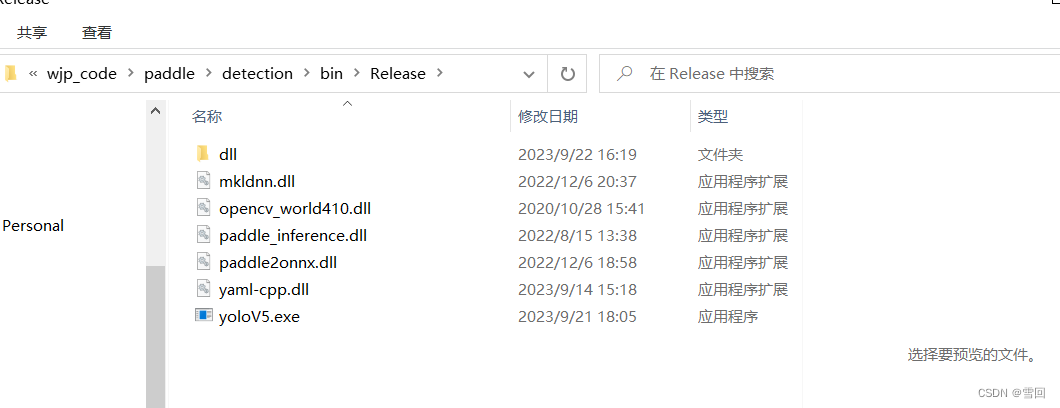

This is what the directory looks like after adding the DLLs named in the prompts.

Then run it again.

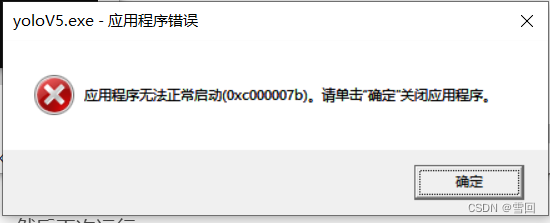

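This time the error is: "The application was unable to start correctly (0xc000007b). Click OK to close the application." This one is harder to deal with because it does not say what is missing, but in my case the cause was still missing libraries.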

if (WIN32 AND WITH_MKL)
  add_custom_command(TARGET main POST_BUILD
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/mklml.dll ./mklml.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/libiomp5md.dll ./libiomp5md.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mkldnn/lib/mkldnn.dll ./mkldnn.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/mklml.dll ./release/mklml.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mklml/lib/libiomp5md.dll ./release/libiomp5md.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/mkldnn/lib/mkldnn.dll ./release/mkldnn.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/paddle/lib/${PADDLE_LIB_NAME}.dll ./release/${PADDLE_LIB_NAME}.dll
  )
endif()

if (WIN32 AND NOT WITH_MKL)
  add_custom_command(TARGET main POST_BUILD
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/openblas/lib/openblas.dll ./openblas.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/openblas/lib/openblas.dll ./release/openblas.dll
  )
endif()

if (WIN32)
  add_custom_command(TARGET main POST_BUILD
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/onnxruntime/lib/onnxruntime.dll ./onnxruntime.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/paddle2onnx/lib/paddle2onnx.dll ./paddle2onnx.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/onnxruntime/lib/onnxruntime.dll ./release/onnxruntime.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/third_party/install/paddle2onnx/lib/paddle2onnx.dll ./release/paddle2onnx.dll
    COMMAND ${CMAKE_COMMAND} -E copy_if_different ${PADDLE_DIR}/paddle/lib/${PADDLE_LIB_NAME}.dll ./release/${PADDLE_LIB_NAME}.dll
  )
endif()

The answer turned out to be in the original CMakeLists.txt, in the commands quoted above: these are all the DLLs the executable needs.

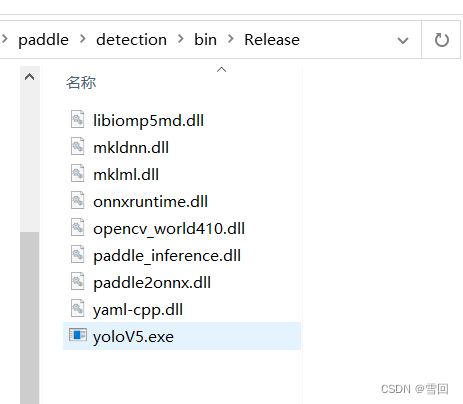

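If you would rather not read the original file, there is a very simple alternative.

Look through the third-party libraries this project depends on, collect every DLL file, and put them all in the same folder as the exe.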

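Bug log

I downloaded the wrong thing when fetching the model and ended up with only a single pdparams file. Annoyingly, nothing reports an error in this case: the program simply runs to the end and stops without raising any exception. Looking at object_detector.cpp, I saw only function implementations and no class definition, which puzzled me and left me unsure how to narrow the problem down. Later I found that the class is defined in the header file, and from there I traced the problem to my model.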

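A tip for debug printing

Define the macro below near the top of the file, then write Dlog(whatever you want to print) wherever you need output. This saves typing cout and endl every time.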

#define Dlog(x) std::cout<<x<<std::endl

A tip for tracking down bugs

What to do when the exe exits immediately after starting, with no output at all:

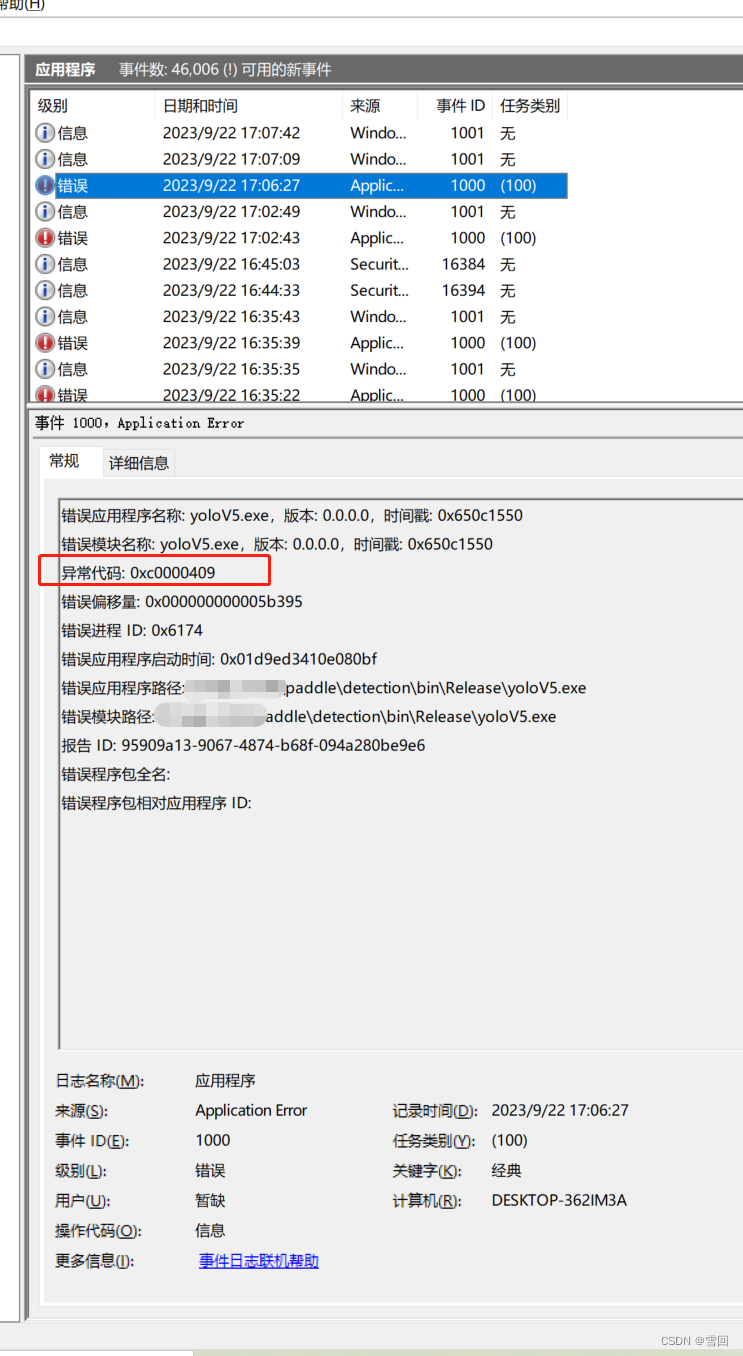

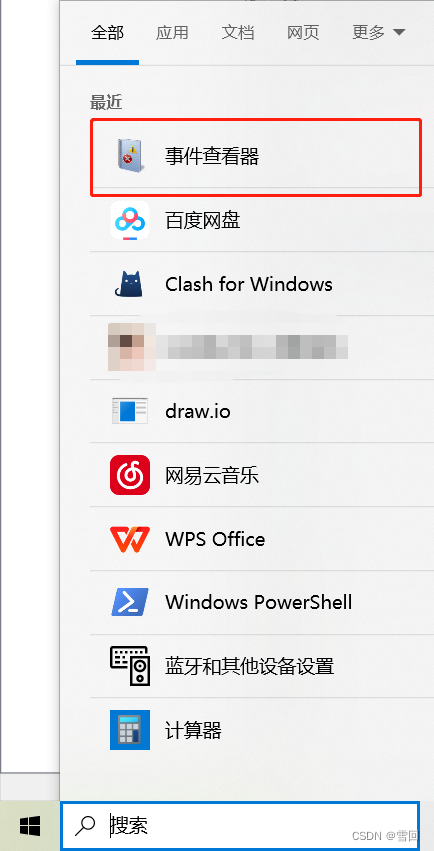

Type "Event Viewer" into the Windows search bar and open it.

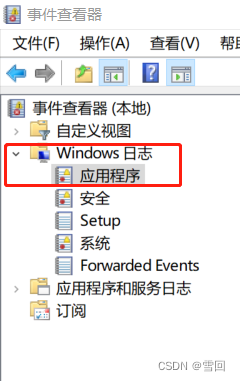

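Find the error entry.

Open it to see the error details. Searching for the exception code with a search engine usually gives a rough idea of what kind of problem it is. That gives you a direction to work in, instead of having no idea where things went wrong.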
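A few exception codes come up often enough to keep in a small lookup table. The sketch below maps some well-known Windows NTSTATUS values to their names; `describe_exit_code` is a hypothetical helper, and the "typical cause" hints are rules of thumb rather than a definitive diagnosis.

```cpp
#include <cstdint>
#include <string>

// Maps a few common Windows exception codes to their NTSTATUS names plus
// a hint about the usual cause. Unknown codes get a generic suggestion.
std::string describe_exit_code(std::uint32_t code) {
    switch (code) {
        case 0xC0000005u:
            return "STATUS_ACCESS_VIOLATION: invalid memory access";
        case 0xC000007Bu:
            return "STATUS_INVALID_IMAGE_FORMAT: often a 32-bit/64-bit "
                   "DLL mismatch or a damaged DLL";
        case 0xC0000135u:
            return "STATUS_DLL_NOT_FOUND: a required DLL is missing";
        case 0xC0000139u:
            return "STATUS_ENTRYPOINT_NOT_FOUND: a DLL lacks an expected "
                   "function, usually a version mismatch";
        default:
            return "unknown code: search for the hexadecimal value";
    }
}
```

For example, `describe_exit_code(0xC000007Bu)` covers the 0xc000007b startup error seen earlier in this post.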