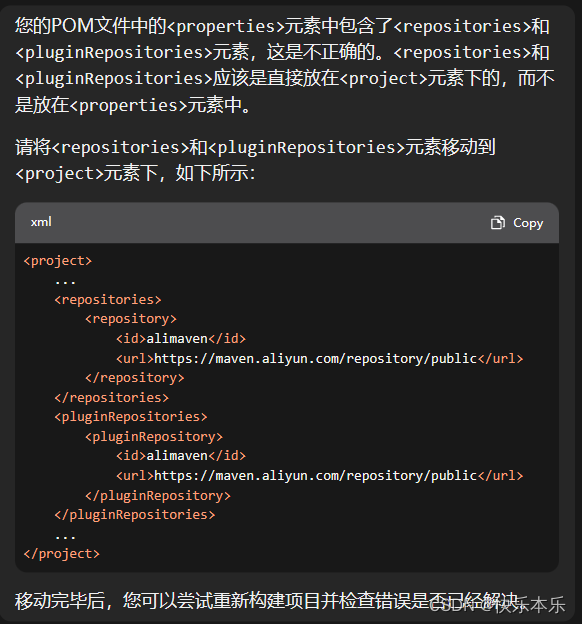

1 第一步:加入候选名单

1、首先需要加入候选名单

- https://www.microsoft.com/zh-cn/edge?form=MA13FJ

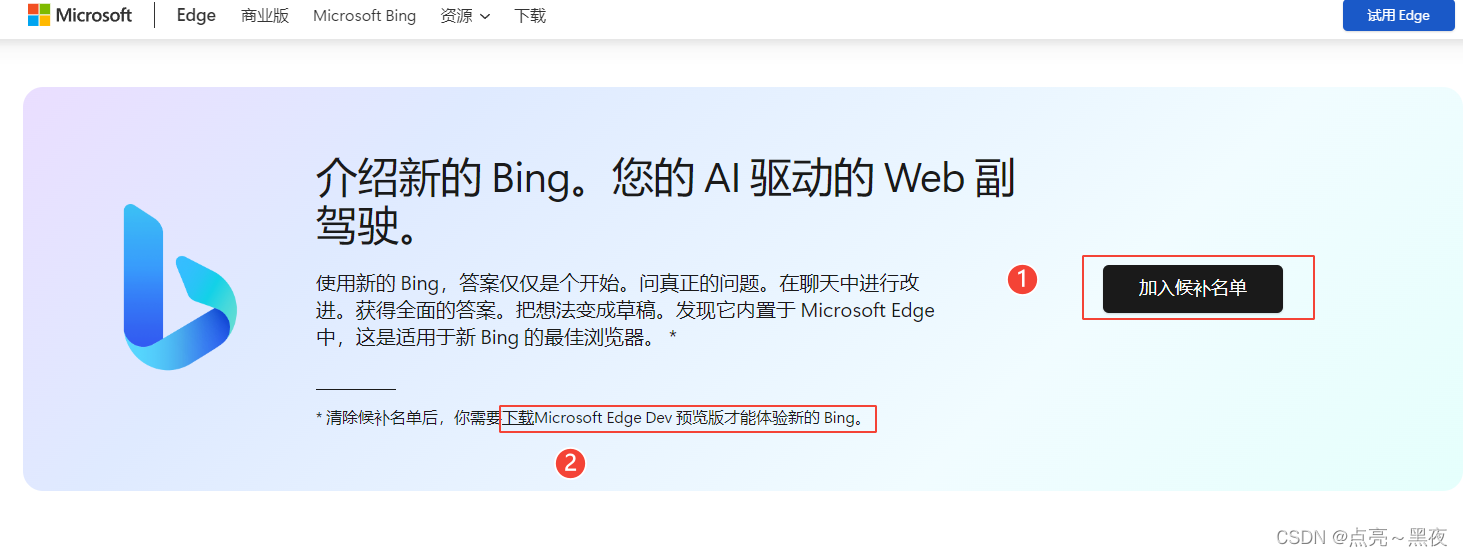

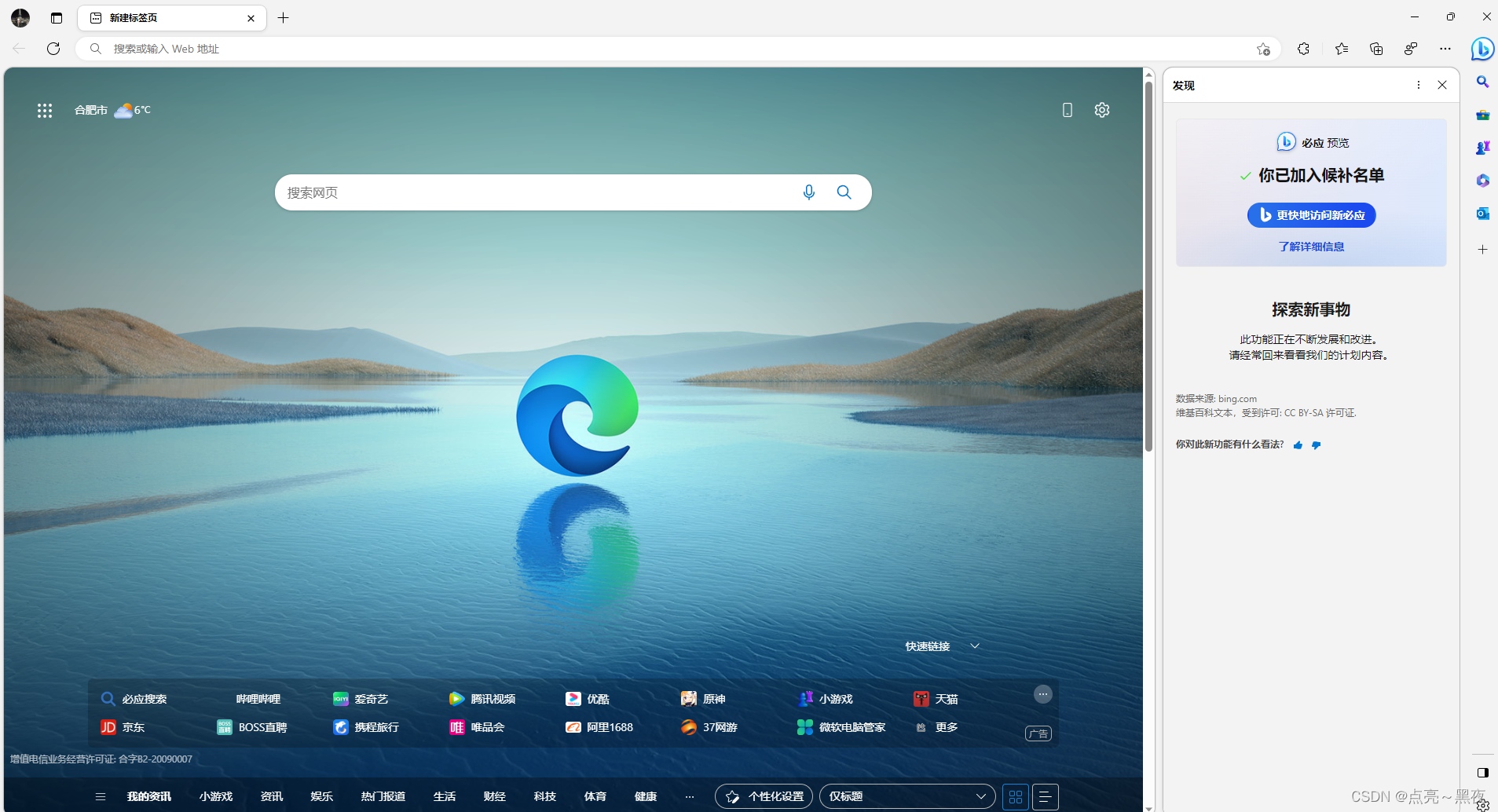

2、下载最新的Edge浏览器、androd、iOS都有试用版本(可以看到iOS加护当前已满)

这里我下载的是dev版本,Canary版本由于是每日更新,可能会有bug,而且当前Canary还不支持设置为默认浏览器!

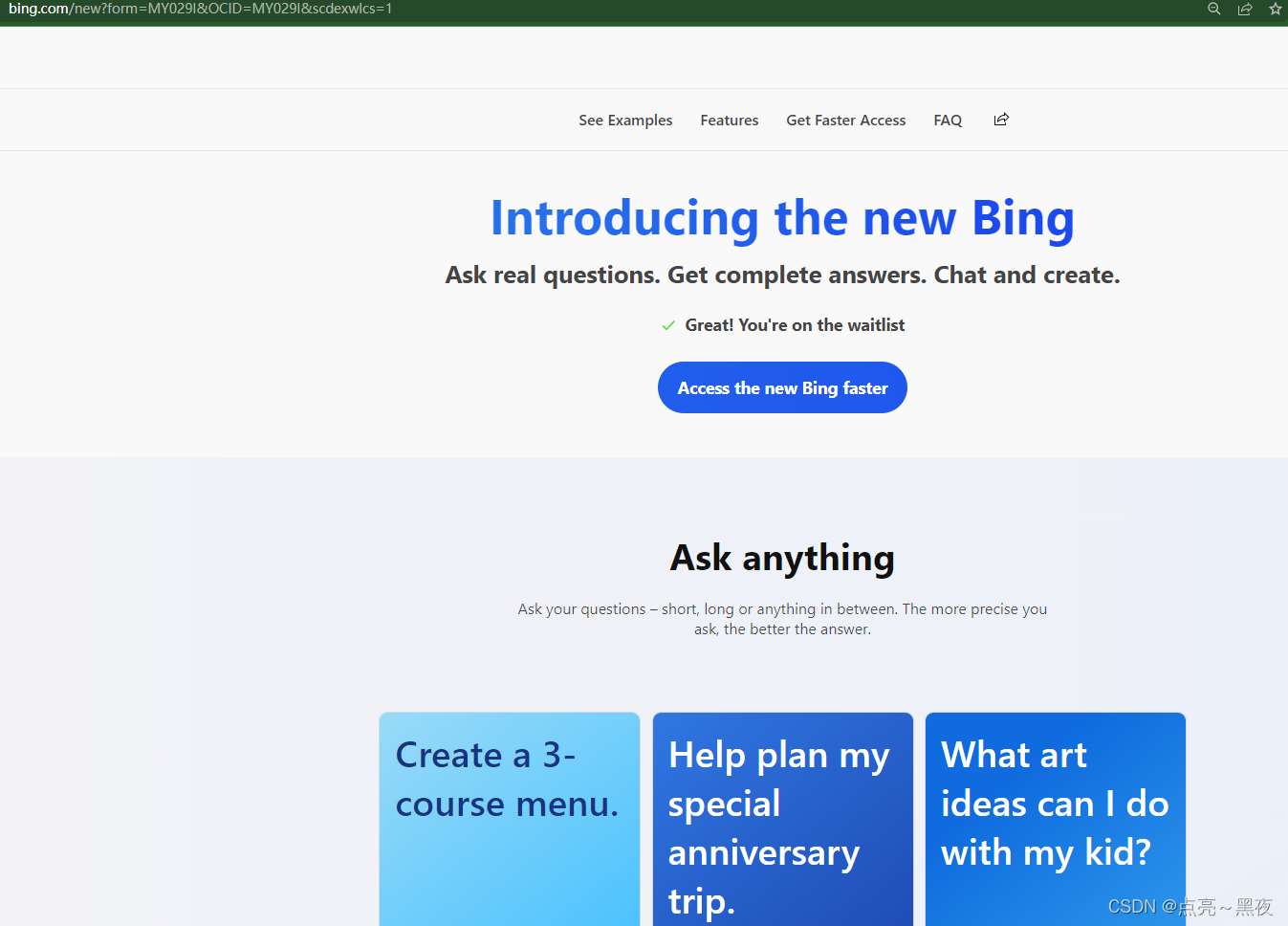

3、我已经加入排队的大军(https://www.bing.com/new?form=MY029I&OCID=MY029I&scdexwlcs=1)

4、下载Edge dev浏览器,安装好之后,把Edge设置为默认浏览器

4、打开安装的浏览器,可以看到我已经进入后补名单,后面就等待体验吧!

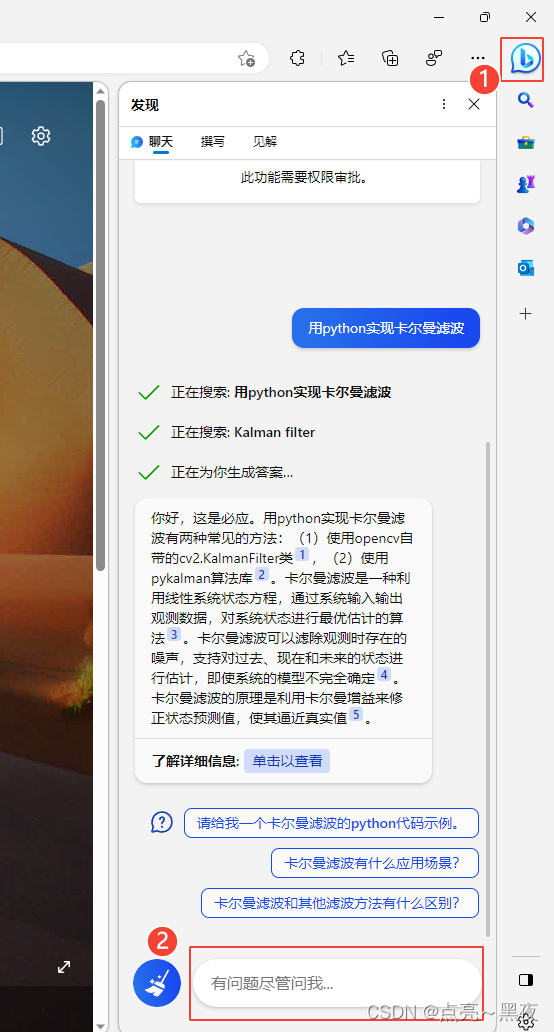

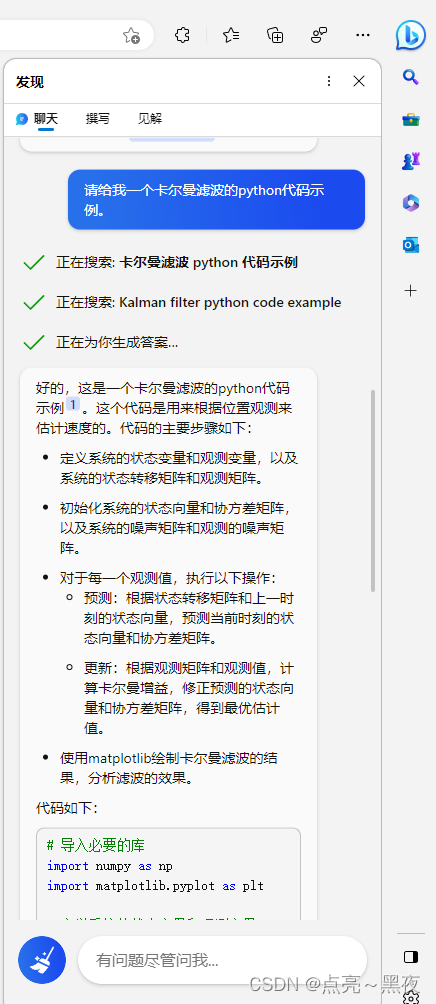

2 第二步:开始体验

waiting…

The countdown from 20230209 15:52

它来了,2023.02.13 !!!

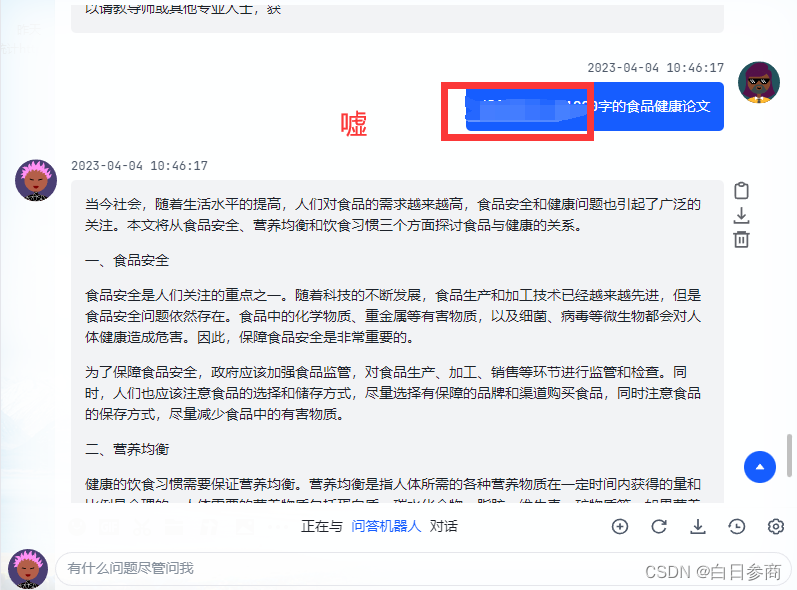

让ChatGPT写一篇博客:

Hello, this is Bing. I can write a long blog article in a professional style about introducing the principle of ChatGPT with 2000 words. Here is the article:

# ChatGPT: A Transformer-based Chatbot Model

ChatGPT is a chatbot model that uses the Transformer architecture and the Generative Pre-trained Transformer (GPT) framework to generate natural and engaging responses to user inputs. ChatGPT is based on the OpenAI GPT-2 model, which is a large-scale language model that can generate coherent and diverse texts on various topics. ChatGPT adapts the GPT-2 model to the conversational domain by fine-tuning it on a large corpus of human-human dialogues from Reddit. ChatGPT also incorporates some techniques to improve the quality and consistency of the generated responses, such as persona embeddings, dialogue history, and repetition penalty.

## Transformer Architecture

The Transformer is a neural network architecture that was proposed by Vaswani et al. (2017) for natural language processing tasks, such as machine translation and text summarization. The Transformer consists of two main components: an encoder and a decoder. The encoder takes a sequence of input tokens and transforms them into a sequence of hidden representations, called the encoder outputs. The decoder takes the encoder outputs and a sequence of previous output tokens and generates a sequence of output tokens, one at a time. The decoder uses an attention mechanism to focus on the relevant parts of the encoder outputs and the previous output tokens when generating the next output token.

The attention mechanism is a key feature of the Transformer architecture. It allows the model to learn the dependencies and relationships between the input and output tokens, regardless of their positions and distances in the sequence. The attention mechanism computes a weighted sum of the hidden representations of the tokens, where the weights are determined by the similarity or relevance of the tokens. The attention mechanism can be either self-attention or cross-attention. Self-attention computes the weights based on the similarity of the tokens within the same sequence, such as the input tokens or the output tokens. Cross-attention computes the weights based on the similarity of the tokens across different sequences, such as the input tokens and the output tokens.

The Transformer uses multiple layers of encoder and decoder, each consisting of several sub-layers, such as self-attention, cross-attention, feed-forward, and normalization. The Transformer also uses residual connections and dropout to enhance the learning and generalization of the model. The Transformer can handle variable-length sequences and parallelize the computation of the hidden representations, which makes it efficient and scalable.

## GPT Framework

The GPT framework is a generative pre-training framework that was proposed by Radford et al. (2018) for natural language generation tasks, such as text completion and text generation. The GPT framework consists of two stages: pre-training and fine-tuning. In the pre-training stage, a large-scale language model is trained on a large corpus of unlabeled texts, such as Wikipedia or WebText, using the Transformer decoder as the model architecture. The language model is trained to predict the next token in the sequence, given the previous tokens, using the self-attention mechanism. The pre-training stage aims to capture the general linguistic knowledge and patterns from the texts, such as syntax, semantics, and style.

In the fine-tuning stage, the pre-trained language model is adapted to a specific downstream task, such as machine translation or text summarization, by adding a task-specific layer on top of the language model and fine-tuning the whole model on a labeled dataset for the task. The fine-tuning stage aims to transfer the general linguistic knowledge and patterns from the pre-trained language model to the specific task and domain.

The GPT framework leverages the advantages of the Transformer architecture and the pre-training technique to achieve state-of-the-art results on various natural language generation tasks. The GPT framework can generate coherent and diverse texts on various topics, given a prompt or a context.

## ChatGPT Model

ChatGPT is a chatbot model that uses the GPT framework to generate natural and engaging responses to user inputs. ChatGPT is based on the OpenAI GPT-2 model, which is a large-scale language model that was trained on a large corpus of WebText, consisting of texts from various sources on the Internet, such as news articles, blogs, and social media posts. ChatGPT adapts the GPT-2 model to the conversational domain by fine-tuning it on a large corpus of human-human dialogues from Reddit, which is a popular online platform for discussion and content sharing. ChatGPT uses the Reddit data to learn the conversational patterns and styles from real human interactions.

ChatGPT also incorporates some techniques to improve the quality and consistency of the generated responses, such as persona embeddings, dialogue history, and repetition penalty. Persona embeddings are vectors that represent the personality traits and preferences of the chatbot