The problem

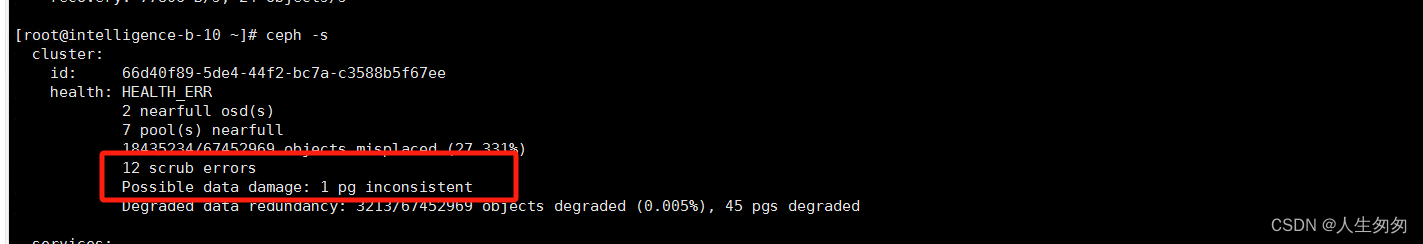

1. The cluster reported the following errors:

OSD_SCRUB_ERRORS 12 scrub errors

PG_DAMAGED Possible data damage: 1 pg inconsistent

pg 6.d is active+remapped+inconsistent+backfill_wait, acting [5,7,4]

2. View the details


# ceph health detail

HEALTH_ERR 12 scrub errors; Possible data damage: 1 pg inconsistent

OSD_SCRUB_ERRORS 12 scrub errors

PG_DAMAGED Possible data damage: 1 pg inconsistent

pg 6.d is active+remapped+inconsistent+backfill_wait, acting [5,7,4]
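When more than one PG is affected, it can help to pull the PG IDs out of the health report instead of reading them by eye. The following is a minimal sketch, not part of the original procedure: the function name `inconsistent_pgs` is hypothetical, and it assumes the detail lines have the form `pg <pgid> is <state>, acting [...]` as in the output above.

```shell
#!/usr/bin/env bash
# Print the ID of every PG that a health report lists as inconsistent.
# The report is read from stdin, so the function can be tried without a cluster.
inconsistent_pgs() {
    # Detail lines look like:
    #   pg 6.d is active+remapped+inconsistent+backfill_wait, acting [5,7,4]
    # Field 2 is the PG ID and field 4 is the state string.
    awk '$1 == "pg" && $3 == "is" && $4 ~ /inconsistent/ { print $2 }'
}

# Usage on a live cluster (not executed here):
#   ceph health detail | inconsistent_pgs
```

For the report shown above this prints `6.d`.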

3. Initial remediation

A PG enters the inconsistent state when a scrub detects that one or more objects differ between replicas.

The usual fix:

1. Find the PG ID

ceph pg dump | grep inconsistent

2. Repair the affected PG with repair

ceph pg repair <pgid>

Done.
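The two steps above can be combined into a small helper. This is only a sketch under stated assumptions: the function names are hypothetical, and it assumes that the PG ID is the first column of each row printed by `ceph pg dump`.

```shell
#!/usr/bin/env bash
# List the PG IDs whose row in `ceph pg dump` mentions "inconsistent".
# Assumption: the PG ID (for example "6.d") is the first column of the row.
find_inconsistent_pgs() {
    ceph pg dump 2>/dev/null |
        awk '$1 ~ /^[0-9]+\.[0-9a-f]+$/ && /inconsistent/ { print $1 }'
}

# Ask Ceph to repair every PG returned by find_inconsistent_pgs.
repair_inconsistent_pgs() {
    local pgid
    for pgid in $(find_inconsistent_pgs); do
        echo "repairing pg ${pgid}"
        ceph pg repair "${pgid}"
    done
}

# Usage on a live cluster (not executed here):
#   repair_inconsistent_pgs
```

Before repairing, `rados list-inconsistent-obj <pgid> --format=json-pretty` can show which objects the scrub flagged, which may help decide whether an automatic repair is appropriate.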

However, after watching the cluster for a while, this did not resolve the problem.

4. My procedure

Identify the OSDs that serve the affected PG, then carry out the repair on the host where the OSD runs.

[root@intelligence-b-10 ~]# ceph osd find 5

{"osd": 5,"ip": "10.21.230.91:6802/2032","crush_location": {"host": "intelligence-b-12","root": "default"}

}

[root@intelligence-b-10 ~]# ceph osd find 7

{"osd": 7,"ip": "10.21.230.92:6801/2059","crush_location": {"host": "intelligence-b-13","root": "default"}

}

[root@intelligence-b-10 ~]# ceph osd find 4

{"osd": 4,"ip": "10.21.230.90:6800/2084","crush_location": {"host": "intelligence-b-11","root": "default"}

}

This shows that OSD 5, the first OSD in the acting set [5,7,4], is on host intelligence-b-12.
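The host lookup for the whole acting set can also be scripted. The sketch below is an illustration rather than the author's method: the function names are hypothetical, and the host is pulled out with a plain `sed` match on the `"host"` key to avoid extra dependencies (a JSON tool would be more robust).

```shell
#!/usr/bin/env bash
# Extract the CRUSH host name from the JSON printed by `ceph osd find <id>`.
# Reads the JSON on stdin; works for compact and pretty-printed output alike,
# as long as the "host" key and its value sit on the same line.
osd_host_from_json() {
    sed -n 's/.*"host": *"\([^"]*\)".*/\1/p'
}

# Print "osd.<id> <host>" for each OSD ID passed as an argument.
osd_hosts() {
    local id
    for id in "$@"; do
        printf 'osd.%s %s\n' "${id}" "$(ceph osd find "${id}" | osd_host_from_json)"
    done
}

# Usage on a live cluster (not executed here):
#   osd_hosts 5 7 4
```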

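Then log in to the host of the OSD to be repaired (here intelligence-b-12, which hosts OSD 5) and carry out the repair.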

1. Stop the OSD

systemctl stop ceph-osd@5.service

2. Flush the journal

ceph-osd -i 5 --flush-journal

3. Start the OSD

systemctl start ceph-osd@5.service

4. Repair (usually not needed)

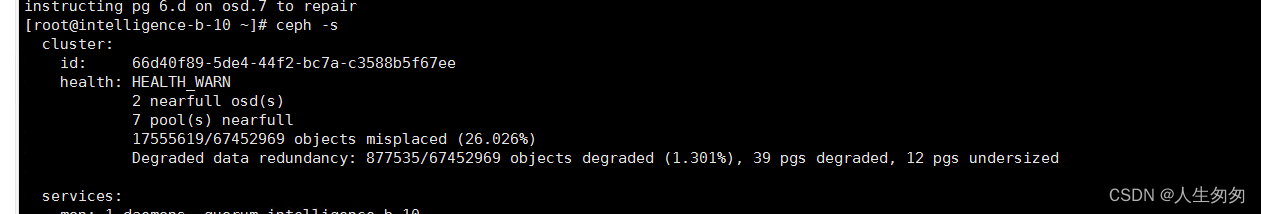

ceph pg repair 6.d

5. Check the Ceph status

ceph -s
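The stop, flush, and start steps can be wrapped in one function so that a failed step does not silently run into the next one. This is a rough sketch with assumptions beyond the original post: the function name is hypothetical, and setting the `noout` flag while the OSD is down is an added precaution, not something the procedure above does. Note that `--flush-journal` applies to FileStore OSDs, which keep a separate journal.

```shell
#!/usr/bin/env bash
# Stop an OSD, flush its FileStore journal, and start it again.
# Run as root on the host that carries the OSD.
flush_and_restart_osd() {
    local id="$1"
    # Assumption (not in the original steps): set noout so the cluster
    # does not start rebalancing while the OSD is briefly down.
    ceph osd set noout || return 1
    # Each step runs only if the previous one succeeded. If the flush
    # fails, the OSD is left stopped so the failure can be inspected.
    systemctl stop "ceph-osd@${id}.service" &&
        ceph-osd -i "${id}" --flush-journal &&
        systemctl start "ceph-osd@${id}.service"
    local rc=$?
    ceph osd unset noout
    return "${rc}"
}

# Usage on the OSD host (not executed here):
#   flush_and_restart_osd 5 && ceph -s
```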
