Building a Simple Neural Network Model

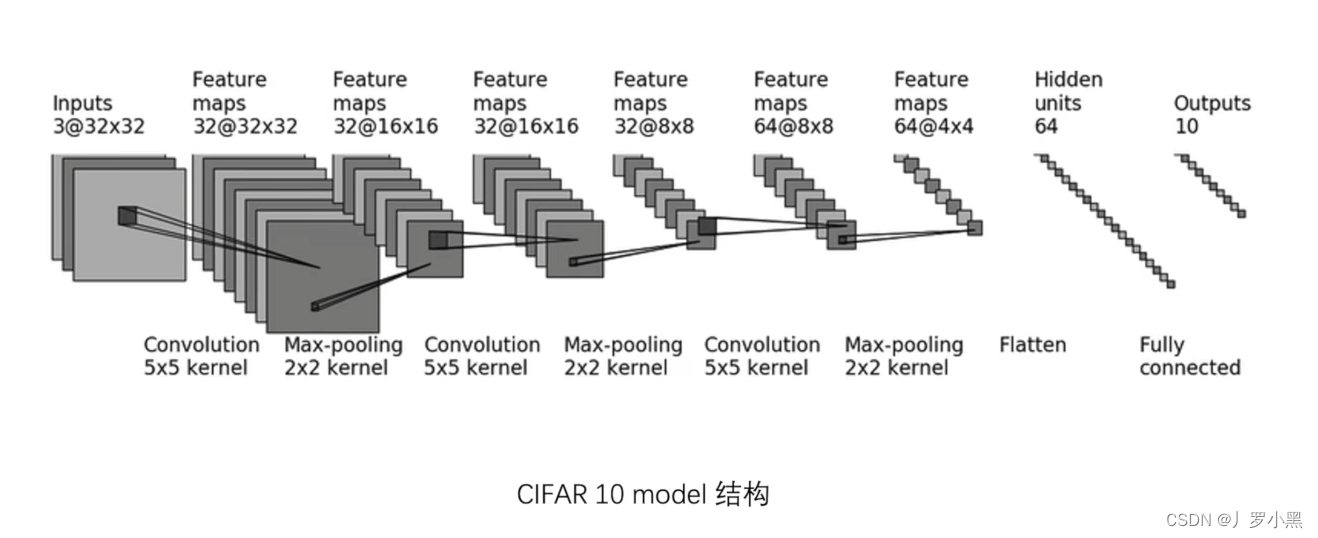

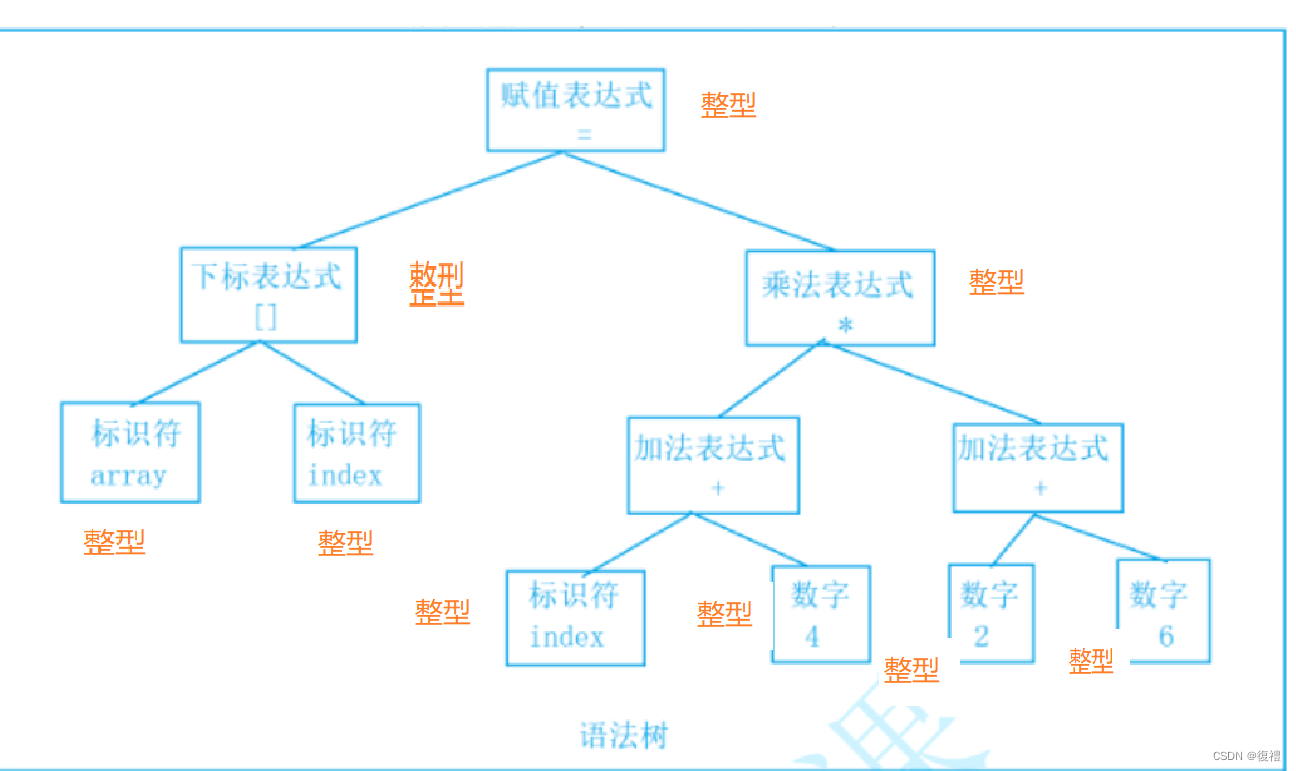

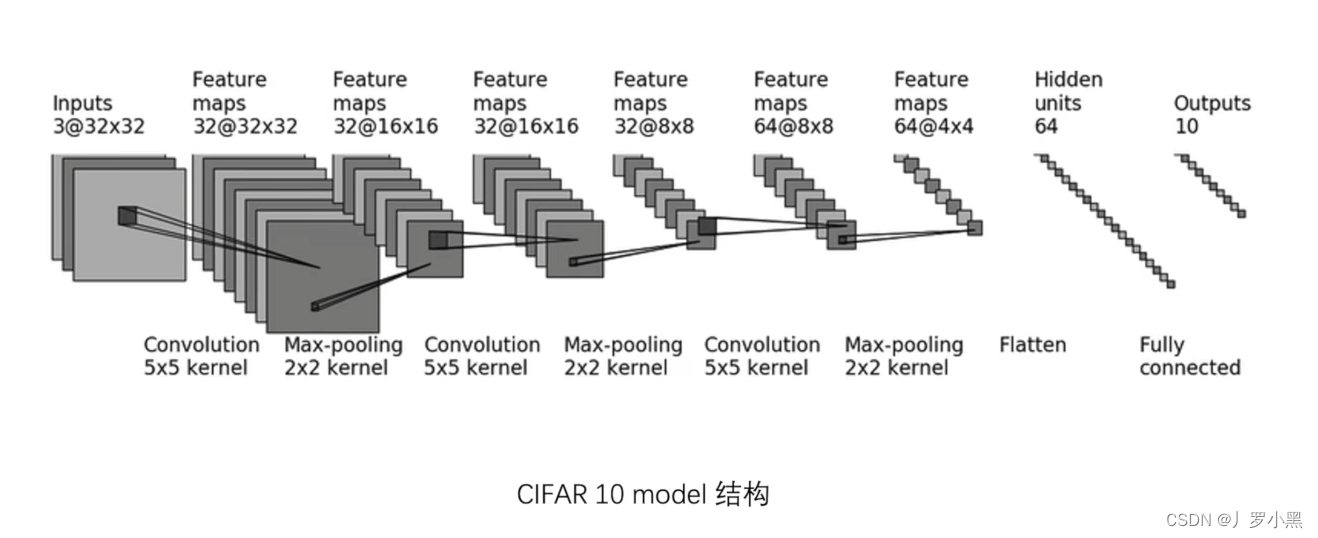

- The structure of the neural network model for the CIFAR-10 dataset is shown in the figure below:

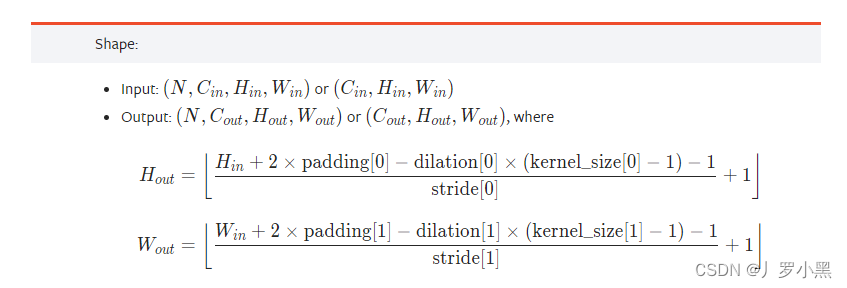

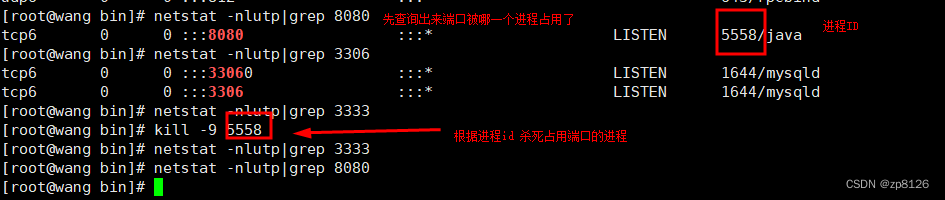

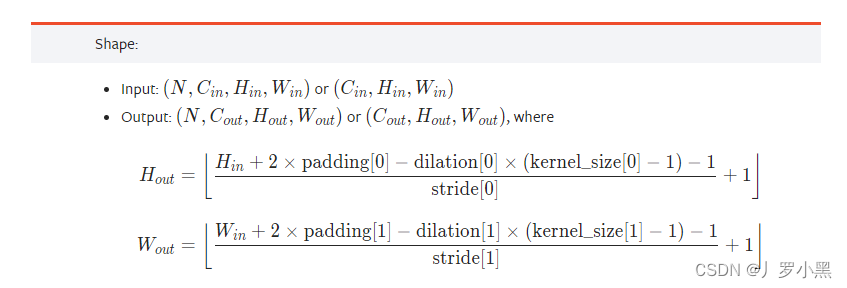

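- The figure above does not give concrete padding and stride values, so we have to derive them by hand using the following formula:

- Note: a large stride forces an unreasonably large padding, so start the derivation from stride = 1. Unless a layer is explicitly described as a dilated (atrous) convolution, dilation takes its default value of 1.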

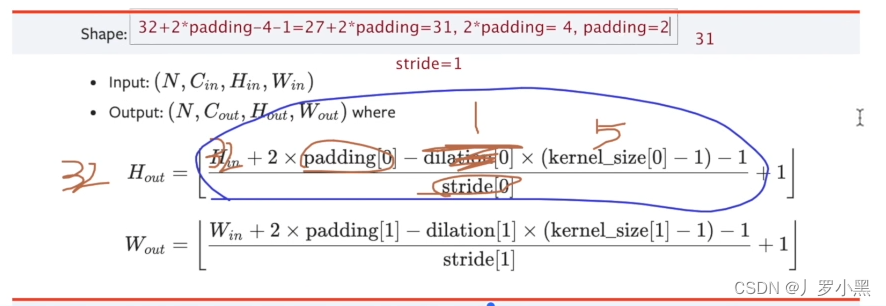

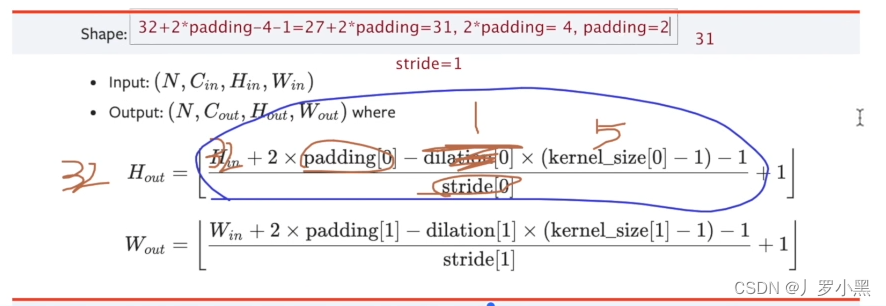
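Below is a minimal sketch of the derivation procedure. It assumes that the formula in the figures is the output-size formula from the PyTorch `nn.Conv2d` documentation. Following the note above, it tries stride = 1, 2, ... in order and solves for padding. `conv2d_out_size` and `find_stride_padding` are hypothetical helper names, not PyTorch functions.

```python
def conv2d_out_size(size_in, kernel_size, stride=1, padding=0, dilation=1):
    # Output height (or width) of nn.Conv2d, per the shape formula in the PyTorch docs
    return (size_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def find_stride_padding(size_in, size_out, kernel_size, dilation=1, max_stride=4):
    # Hypothetical helper: try stride = 1, 2, ... and return the first (stride, padding)
    # pair for which the formula holds exactly (padding is a non-negative integer)
    for stride in range(1, max_stride + 1):
        twice_pad = (size_out - 1) * stride - size_in + dilation * (kernel_size - 1) + 1
        if twice_pad >= 0 and twice_pad % 2 == 0:
            padding = twice_pad // 2
            # Sanity check against the forward formula
            assert conv2d_out_size(size_in, kernel_size, stride, padding, dilation) == size_out
            return stride, padding
    return None


# First conv layer of the CIFAR-10 model: 32x32 in, 32x32 out, kernel size 5
print(find_stride_padding(32, 32, 5))  # (1, 2)
```

If the stride were forced to 3, the same 32 → 32 mapping would need padding 33 (`conv2d_out_size(32, 5, 3, 33) == 32`). This illustrates why large strides are ruled out.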

- For the first convolutional layer, the padding and stride work out as follows:
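The following worked equation assumes the `nn.Conv2d` output-size formula from the PyTorch documentation. It substitutes the first layer's values: a 32×32 input and a 32×32 output, kernel size 5, dilation 1, and stride 1 tried first. Because dividing by a stride of 1 is exact, the floor can be dropped:

```latex
\begin{aligned}
H_{out} &= \left\lfloor \frac{H_{in} + 2\,\text{padding} - \text{dilation}\,(\text{kernel\_size} - 1) - 1}{\text{stride}} + 1 \right\rfloor \\
32 &= \frac{32 + 2p - 1\cdot(5 - 1) - 1}{1} + 1 = 28 + 2p \\
\Rightarrow\; p &= 2
\end{aligned}
```

The second and third convolutional layers also keep their input size (16×16 and 8×8) with a 5×5 kernel. The same derivation therefore gives stride 1 and padding 2 for them as well.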

- The network model code is as follows:

```python
import torch
from torch import nn


class Tudui(nn.Module):
    def __init__(self):
        super().__init__()
        # Conv2d(in, out, kernel_size=5, stride=1, padding=2) keeps H and W unchanged;
        # each MaxPool2d(2, 2) halves them: 32 -> 16 -> 8 -> 4
        self.conv1 = nn.Conv2d(3, 32, 5, 1, 2)
        self.maxpool1 = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(32, 32, 5, 1, 2)
        self.maxpool2 = nn.MaxPool2d(2, 2)
        self.conv3 = nn.Conv2d(32, 64, 5, 1, 2)
        self.maxpool3 = nn.MaxPool2d(2, 2)
        self.flatten = nn.Flatten()
        self.linear1 = nn.Linear(1024, 64)  # 64 channels * 4 * 4 = 1024 features
        self.linear2 = nn.Linear(64, 10)    # 10 CIFAR-10 classes

    def forward(self, input):
        x = self.conv1(input)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.linear1(x)
        output = self.linear2(x)
        return output


tudui = Tudui()
input = torch.ones([64, 3, 32, 32])  # dummy batch of 64 RGB 32x32 images
print(tudui)
output = tudui(input)
print(output.shape)  # torch.Size([64, 10])
```
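The `1024` in `nn.Linear(1024, 64)` comes from tracing the feature-map shape through the network. The sketch below does this in plain Python. It uses the same shape formula as the documented `nn.Conv2d` / `nn.MaxPool2d` formulas, assuming pooling uses padding 0, dilation 1 and `ceil_mode=False`. The helper names are hypothetical.

```python
def conv2d_out_size(size_in, kernel_size, stride=1, padding=0, dilation=1):
    # nn.Conv2d output size for one spatial dimension
    return (size_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def maxpool2d_out_size(size_in, kernel_size, stride):
    # nn.MaxPool2d output size with padding=0, dilation=1, ceil_mode=False
    return (size_in - kernel_size) // stride + 1


# (out_channels, kernel_size, stride, padding) for conv1..conv3 of Tudui
conv_specs = [(32, 5, 1, 2), (32, 5, 1, 2), (64, 5, 1, 2)]

channels, size = 3, 32  # a CIFAR-10 image is 3 x 32 x 32
for out_ch, k, s, p in conv_specs:
    size = conv2d_out_size(size, k, s, p)  # convolution keeps the spatial size
    channels = out_ch
    size = maxpool2d_out_size(size, 2, 2)  # pooling halves it
    print(f"after conv + pool: {channels} x {size} x {size}")

flat_features = channels * size * size
print(flat_features)  # 1024
```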

- We can use `nn.Sequential` to combine the layers into one module and simplify the code:

```python
import torch
from torch import nn


class Tudui(nn.Module):
    def __init__(self):
        super().__init__()
        # nn.Sequential calls its layers in the order they are listed
        self.module1 = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, 2),
            nn.MaxPool2d(2, 2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2, 2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2, 2),
            nn.Flatten(),
            nn.Linear(1024, 64),
            nn.Linear(64, 10),
        )

    def forward(self, input):
        output = self.module1(input)
        return output


tudui = Tudui()
input = torch.ones([64, 3, 32, 32])
print(tudui)
output = tudui(input)
print(output.shape)  # torch.Size([64, 10])
```
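`nn.Sequential` is iterable, so its layers can also be applied one at a time. The following sketch shows that this loop produces the same result as calling the container directly. It also prints the shape after every layer, which is a convenient way to check values such as the `1024` input size of the first linear layer.

```python
import torch
from torch import nn

module1 = nn.Sequential(
    nn.Conv2d(3, 32, 5, 1, 2), nn.MaxPool2d(2, 2),
    nn.Conv2d(32, 32, 5, 1, 2), nn.MaxPool2d(2, 2),
    nn.Conv2d(32, 64, 5, 1, 2), nn.MaxPool2d(2, 2),
    nn.Flatten(), nn.Linear(1024, 64), nn.Linear(64, 10),
)

x = torch.ones([64, 3, 32, 32])
out_seq = module1(x)  # calling the container runs every layer in order

# Applying the layers one by one in registration order
h = x
for layer in module1:
    h = layer(h)
    print(f"{layer.__class__.__name__:>10}: {tuple(h.shape)}")

print(torch.allclose(h, out_seq))  # True
```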

- We can also use TensorBoard to visualize the model. The code is as follows:

```python
import torch
from torch import nn
from torch.utils.tensorboard import SummaryWriter


class Tudui(nn.Module):
    def __init__(self):
        super().__init__()
        self.module1 = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, 2),
            nn.MaxPool2d(2, 2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2, 2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2, 2),
            nn.Flatten(),
            nn.Linear(1024, 64),
            nn.Linear(64, 10),
        )

    def forward(self, input):
        output = self.module1(input)
        return output


writer = SummaryWriter('logs_seq')  # event files are written to ./logs_seq
tudui = Tudui()
input = torch.ones([64, 3, 32, 32])
print(tudui)
output = tudui(input)
print(output.shape)
writer.add_graph(tudui, input)  # trace the model on a sample input and log its graph
writer.close()
```

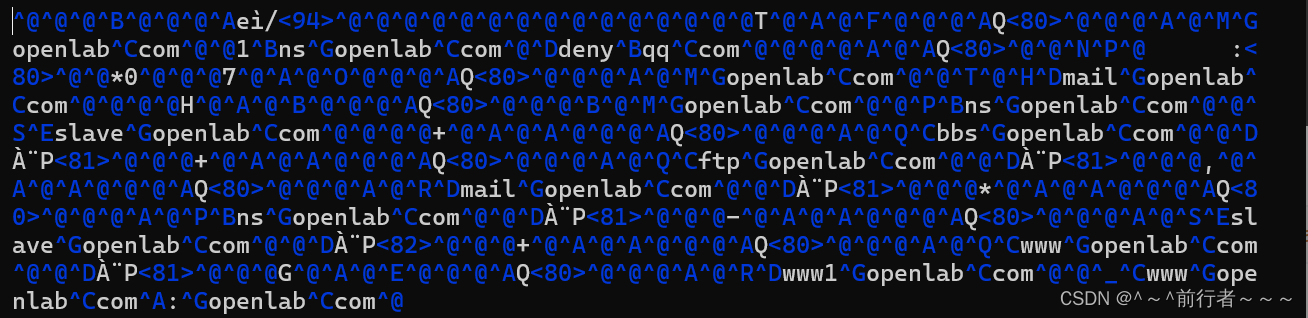

- The result is shown below: