1. Overview

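In a machine vision project, acquiring suitable images is the first and most important step, because it directly determines how well the downstream image-processing algorithms and the final result perform. Two questions therefore come first in development: how each industrial camera delivers its images, and how to convert them into the format the image-processing library expects.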

2. Image acquisition methods for industrial cameras

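Camera selection is not covered here, because the hardware has to be chosen to suit each project or machine. For the acquisition itself there are two routes: developing against the manufacturer's SDK, or using Halcon's Image Acquisition Assistant directly.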

2.1 Acquiring images with the camera's own SDK

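Manufacturers recommend this approach, and it is also the most common one, for two reasons. First, each manufacturer knows its own products best, its SDK is generally stable, and technical support is available. Second, developing against the SDK gives access to more of the camera's and the image's raw parameters, which makes the project more flexible. Larger brands usually ship more detailed SDKs and demo programs, with more complete wrapper functions for their cameras. In my own projects I have mostly used Dalsa and Basler; among Chinese brands, mainly Hikvision (海康), Jinghang (京航) and Fangcheng (方诚). The following sections briefly introduce image acquisition with Dalsa and Basler area-scan cameras. (The development environment is Qt 5.)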

2.1.1 Acquiring images from a Dalsa camera

Initialize the camera:

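```cpp
void CaptureThread::initCamera()
{
    // "Nano-M2420_1" is the Sapera server name of the camera; adjust it for your device
    m_AcqDevice = new SapAcqDevice(SapLocation("Nano-M2420_1", 0), false);
    if (!m_AcqDevice->Create())
    {
        qDebug() << "Failed to connect to the camera";
        return;   // do not create buffers or the transfer without a device
    }
    qDebug() << "Camera connected";

    // 3 buffers plus a trash buffer that absorbs frames when processing falls behind
    m_Buffers = new SapBufferWithTrash(3, m_AcqDevice);
    m_AcqDevice->GetFeatureValue("Width", &Img_Width);
    m_AcqDevice->GetFeatureValue("Height", &Img_Height);
    // qDebug() << "Width:" << Img_Width << "Height:" << Img_Height;

    // Transfer from the device into the buffers; XferCallback is called for every frame
    m_Xfer = new SapAcqDeviceToBuf(m_AcqDevice, m_Buffers, XferCallback, this);

    // CreateObjects() (not shown) is expected to call Create() on m_Buffers and m_Xfer
    if (!CreateObjects()) { return; }
}
```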
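`SapBufferWithTrash(3, ...)` allocates three buffers plus a trash buffer. The plain C++ sketch below is a simplified, hypothetical model of that idea, not Sapera's implementation; the class `TrashRing` is invented for illustration. In this model, frames go round-robin into the buffers. When the next buffer has not yet been cleared by the processing side, the frame is diverted to the trash, which is the situation `pInfo->IsTrash()` reports in the callback.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical model of a "ring of buffers with a trash buffer".
// It only tracks which slots are occupied; no pixel data is stored.
class TrashRing {
public:
    explicit TrashRing(std::size_t count) : full_(count, false) { assert(count > 0); }

    // Acquisition side: called once per incoming frame.
    // Returns the slot index that received the frame, or -1 if it went to the trash.
    int write_frame() {
        if (full_[next_]) {   // processing has not cleared this slot yet
            ++trashed_;
            return -1;        // frame is diverted to the trash buffer
        }
        full_[next_] = true;
        const int written = static_cast<int>(next_);
        next_ = (next_ + 1) % full_.size();
        return written;
    }

    // Processing side: marks a slot as free again
    // (the role of m_Buffers->Clear(index) in the Dalsa callback).
    void clear(std::size_t index) { full_[index] = false; }

    std::size_t trashed() const { return trashed_; }

private:
    std::vector<bool> full_;
    std::size_t next_ = 0;
    std::size_t trashed_ = 0;
};
```

In this model, a processing loop that is slower than the frame rate makes the trash count grow, while acquisition itself keeps running.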

The transfer callback receives the image data each time the camera writes a frame into a buffer:

```cpp
void CaptureThread::XferCallback(SapXferCallbackInfo *pInfo)
{
    CaptureThread *pDlg = (CaptureThread *) pInfo->GetContext();
    if (pInfo->IsTrash())
    {
        qDebug() << "IsTrash";   // the frame went to the trash buffer (processing too slow)
        return;
    }

    // qDebug() << "Camera wrote data into the buffer";
    HObject hv_Current_Image;
    BYTE *pData;
    pDlg->m_Buffers->GetAddress((void **)&pData);
    // Mono8 data: GenImage1 copies the pixels into a new HALCON image
    GenImage1(&hv_Current_Image, "byte", pDlg->Img_Width, pDlg->Img_Height, (Hlong)pData);
    // For an interleaved RGB camera, use instead:
    // GenImageInterleaved(&hv_Current_Image, (Hlong)pData, "rgb", pDlg->Img_Width, pDlg->Img_Height,
    //                     -1, "byte", 0, 0, 0, 0, -1, 0);

    // Dispatch according to the image-processing mode
    if (pDlg->TestImg_WorkType == 0)        // process one frame, then switch to display only
    {
        emit pDlg->startImgProcess(hv_Current_Image);
        pDlg->TestImg_WorkType = -1;
    }
    else if (pDlg->TestImg_WorkType == 1)   // process every frame
    {
        emit pDlg->startImgProcess(hv_Current_Image);
    }
    else                                    // display only
    {
        emit pDlg->canShowImg(hv_Current_Image);
    }

    pDlg->m_Buffers->ReleaseAddress((void *)pData);
    pDlg->m_Buffers->Clear(pDlg->m_Buffers->GetIndex());   // mark the buffer as free again
}
```
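The `TestImg_WorkType` branches in the callback form a small state machine. The sketch below expresses the same dispatch with a scoped enum. It assumes the three meanings inferred from the code above: 0 processes one frame, 1 processes every frame, and any other value means display only. `WorkMode` and `dispatch_frame` are hypothetical names, and the returned strings stand in for the `startImgProcess` and `canShowImg` signals.

```cpp
#include <cassert>
#include <string>

// Hypothetical names for the values of TestImg_WorkType used in XferCallback.
enum class WorkMode { ProcessOnce = 0, ProcessContinuous = 1, DisplayOnly = -1 };

// Decides where one incoming frame goes and updates the mode,
// mirroring the if/else chain in the callback.
std::string dispatch_frame(WorkMode &mode) {
    switch (mode) {
    case WorkMode::ProcessOnce:
        mode = WorkMode::DisplayOnly;   // one-shot: fall back to display afterwards
        return "process";
    case WorkMode::ProcessContinuous:
        return "process";
    default:
        return "display";
    }
}
```

In the real program the GUI thread writes `TestImg_WorkType`, and the acquisition callback reads and resets it. Declaring it as `std::atomic<int>`, or guarding it with a mutex, would avoid a data race between the two threads.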

Finally, acquisition is started and stopped through the Dalsa transfer object:

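```cpp
void CaptureThread::OnGrab()
{
    m_Xfer->Grab();    // continuous acquisition
}

void CaptureThread::OnSnap()
{
    m_Xfer->Snap();    // acquire a single frame
}

void CaptureThread::OnFreeze()
{
    SnapSignal = true;
    m_Xfer->Freeze();  // stop continuous acquisition after the current frame
    if (!m_Xfer->Wait(5000))   // wait up to 5 s for the transfer to finish
        qDebug() << "Grab could not stop properly";
}
```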

2.1.2 Acquiring images from a Basler camera

First, initialize the camera:

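```cpp
void CBaslerCameraControl::initCamera()
{
    PylonInitialize();   // initialize the pylon runtime
    try
    {
        // Register this object as image event handler, appended to any existing handlers.
        // Cleanup_None: the camera does not own this object and must not delete it.
        m_basler.RegisterImageEventHandler(this, RegistrationMode_Append, Cleanup_None);
        // Alternative: register a configuration handler, replacing all existing ones
        // m_basler.RegisterConfiguration(this, RegistrationMode_ReplaceAll, Cleanup_None);
        m_basler.Attach(CTlFactory::GetInstance().CreateFirstDevice(), Cleanup_Delete);
        qDebug() << "Using device" << m_basler.GetDeviceInfo().GetModelName().c_str();
        m_basler.Open();   // open the camera
    }
    catch (const Pylon::GenericException &e)
    {
        qDebug() << "camera open failed:" << e.GetDescription();
        return;
    }
    if (!m_basler.IsOpen() || m_basler.IsGrabbing())
    {
        qDebug() << "camera open failed";
        return;
    }
}
```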

Next, call the library functions to acquire images:

```cpp
// Callback for continuous acquisition (called from pylon's grab thread)
void CBaslerCameraControl::OnImageGrabbed(CInstantCamera &camera, const CGrabResultPtr &grabResult)
{
    m_mutexLock.lock();
    if (grabResult->GrabSucceeded())
    {
        m_ptrGrabResult = grabResult;
        // Convert the captured image and pass it on
        HObject hv_CurrentImg;
        CopyImgToHObject(m_ptrGrabResult, hv_CurrentImg);
        emit canShowImg(hv_CurrentImg);
        qDebug() << "Captureok";
    }
    m_mutexLock.unlock();
}

void CBaslerCameraControl::StartAcquire()
{
    if (!m_basler.IsGrabbing())
    {
        GrabOnLine_Signal = true;
        // Keep only the newest image; pylon runs the grab loop in its own thread
        // and delivers every image to OnImageGrabbed
        m_basler.StartGrabbing(GrabStrategy_LatestImageOnly, GrabLoop_ProvidedByInstantCamera);
    }
}

void CBaslerCameraControl::StartSnap()
{
    if (m_basler.IsGrabbing())
        return;   // continuous acquisition is already running
    try
    {
        m_basler.StartGrabbing(1);   // grab exactly one image
        CBaslerUniversalGrabResultPtr ptrGrabResult;
        m_basler.RetrieveResult(5000, ptrGrabResult, TimeoutHandling_ThrowException);   // 5 s timeout
        if (ptrGrabResult->GrabSucceeded())
        {
            qDebug() << "snapok";
            HObject hv_CurrentImg;
            CopyImgToHObject(ptrGrabResult, hv_CurrentImg);
            emit canShowImg(hv_CurrentImg);
        }
    }
    catch (const Pylon::GenericException &e)
    {
        qDebug() << "snap failed:" << e.GetDescription();
    }
}

void CBaslerCameraControl::CloseCamera()
{
    if (m_basler.IsOpen())
    {
        m_ptrGrabResult.Release();   // return the buffer before the device goes away
        m_basler.Close();
        m_basler.DestroyDevice();    // detaches and destroys the attached pylon device
    }
}

void CBaslerCameraControl::deleteAll()
{
    // Stop acquisition
    if (m_isOpenAcquire) { StopAcquire(); }
    try
    {
        // Close the camera
        CloseCamera();
        m_basler.DeregisterImageEventHandler(this);
        // Shut down the pylon runtime
        qDebug() << "CBaslerCameraControl deleteAll: PylonTerminate";
        PylonTerminate();
    }
    catch (const Pylon::GenericException &e)
    {
        qDebug() << e.what();
    }
}
```
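With `GrabStrategy_LatestImageOnly` and the mutex in `OnImageGrabbed`, the consumer always sees the newest frame, and older frames that were never consumed are discarded. The hypothetical `LatestFrame` class below models that hand-off in standard C++17; it is not part of pylon. The grab thread calls `publish()`, and the display or processing thread calls `take()`.

```cpp
#include <cassert>
#include <cstdint>
#include <mutex>
#include <optional>
#include <utility>
#include <vector>

// Hypothetical "latest image only" mailbox shared by two threads.
class LatestFrame {
public:
    using Frame = std::vector<std::uint8_t>;

    // Producer side: overwrite whatever frame is still waiting.
    void publish(Frame frame) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (pending_) ++dropped_;   // the previous frame was never consumed
        pending_ = std::move(frame);
    }

    // Consumer side: take the newest frame, or std::nullopt if none is waiting.
    std::optional<Frame> take() {
        std::lock_guard<std::mutex> lock(mutex_);
        std::optional<Frame> out = std::move(pending_);
        pending_.reset();
        return out;
    }

    int dropped() const {
        std::lock_guard<std::mutex> lock(mutex_);
        return dropped_;
    }

private:
    mutable std::mutex mutex_;
    std::optional<Frame> pending_;
    int dropped_ = 0;
};
```

The lock is held only while a frame is moved in or out, so the slow work (conversion, display) happens outside the critical section.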

2.1.3 Summary

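Most camera SDKs work in broadly the same way: through a camera handle you either register an acquisition callback or read the image buffer. In the programs above, the SDK delivers a raw image buffer, and its data is then converted into an HObject or a Mat (details in Section 3). Grabbing or snapping through the vendor SDK is efficient and stable. Once you understand the SDK's sample and demo programs, you can get an acquisition test running quickly. The raw BYTE image data can also be drawn directly from C++ or wrapped in a Qt QImage for display. The drawback is the time needed to convert BYTE data into an HObject or Mat, which can hurt real-time display, so display and processing efficiency have to be planned for. This method is recommended for readers with some programming background.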

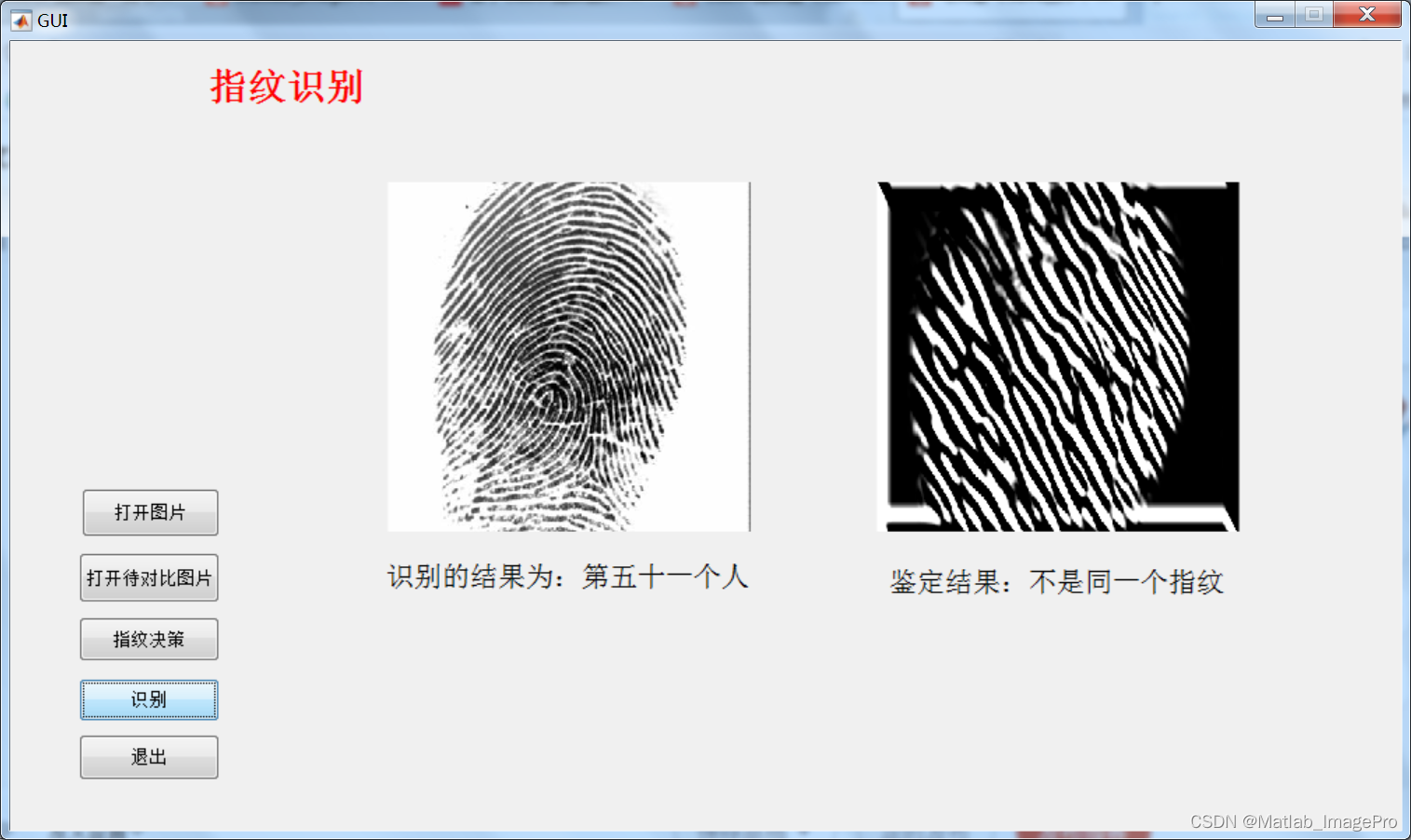

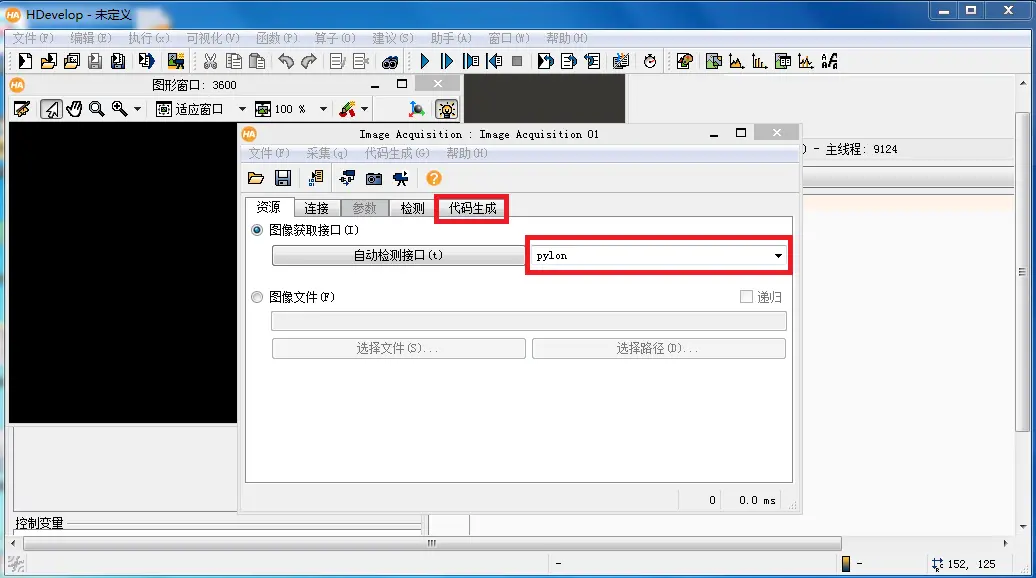

2.2 Acquiring images with Halcon's Image Acquisition Assistant

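1. Use Basler's IP Configurator tool to set the PC's IP address and make sure the PC and the camera are connected. Then use Basler's pylon Viewer to check that images can be acquired.

2. Open Halcon (HDevelop) and, under the Assistants menu, choose "Open New Image Acquisition".

3. Auto-detect the interfaces and select the matching acquisition interface (for example "pylon"). Then generate the code on the "Code Generation" tab.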

2.3 Summary of the acquisition methods

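The Halcon acquisition assistant suits beginners because it needs little programming. It is also handy early in a project for designing the solution and evaluating image processing. The first method (the vendor SDK) is more widely applicable and can meet the development needs of different projects.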

3. Converting between image formats (BYTE, HObject, Mat and QImage)

A project typically uses several image-processing libraries and display environments, so images have to be converted between their formats.

3.1 BYTE to HObject

This is the most common case: image data acquired through the camera SDK is converted into Halcon's format for subsequent image processing.

```cpp
void CBaslerCameraControl::CopyImgToHObject(CGrabResultPtr pInBuffer, HObject &hv_Image)
{
    // Assumes a Mono8 image without row padding
    HBYTE *pData = (HBYTE *)pInBuffer->GetBuffer();
    int nHeight = pInBuffer->GetHeight();
    int nWidth = pInBuffer->GetWidth();
    GenImage1(&hv_Image, "byte", nWidth, nHeight, (Hlong)pData);

    // The same conversion in the Dalsa callback, in two variants:
    // HObject hv_Current_Image;
    //
    // // Conversion method one: copy the data first
    // int size = pDlg->Img_Width * pDlg->Img_Height * sizeof(BYTE);
    // BYTE *dataBuf = new BYTE[size];
    // pDlg->m_Buffers->Read(0, pDlg->Img_Width * pDlg->Img_Height, dataBuf);
    // GenImage1(&hv_Current_Image, "byte", pDlg->Img_Width, pDlg->Img_Height, (Hlong)dataBuf);
    // delete[] dataBuf;   // GenImage1 keeps its own copy of the pixels
    //
    // // Conversion method two: pass the data pointer directly
    // BYTE *pData;
    // pDlg->m_Buffers->GetAddress((void **)&pData);
    // GenImage1(&hv_Current_Image, "byte", pDlg->Img_Width, pDlg->Img_Height, (Hlong)pData);
    // // GenImageInterleaved(&hv_Current_Image, (Hlong)pData, "rgb", pDlg->Img_Width, pDlg->Img_Height, -1, "byte", 0, 0, 0, 0, -1, 0);
    // pDlg->m_Buffers->ReleaseAddress((void *)pData);
}
```

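The commented-out code shows two variants. Method one copies the data into a separately allocated buffer before calling GenImage1. Method two passes the SDK's buffer pointer straight to GenImage1. GenImage1 copies the pixels into its own storage in either case, so method two saves one full-frame copy. It is faster and is the recommended variant.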
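The following plain C++ sketch makes the copy count concrete. `gen_image1_like` is a hypothetical stand-in for `GenImage1`: like the real operator, it allocates its own storage and copies the pixels. A global counter records each full-frame copy.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical owned image, standing in for a HALCON HObject.
struct OwnedImage {
    int width;
    int height;
    std::vector<std::uint8_t> pixels;
};

static int g_copies = 0;   // counts full-frame copies, for illustration only

// Stand-in for GenImage1: always copies the source pixels.
OwnedImage gen_image1_like(const std::uint8_t *data, int width, int height) {
    OwnedImage img{width, height, std::vector<std::uint8_t>(data, data + width * height)};
    ++g_copies;
    return img;
}

// Method one: copy the SDK buffer into a private array, then build the image.
OwnedImage via_copy(const std::uint8_t *sdkBuffer, int width, int height) {
    std::vector<std::uint8_t> dataBuf(sdkBuffer, sdkBuffer + width * height);
    ++g_copies;
    return gen_image1_like(dataBuf.data(), width, height);
}

// Method two: hand the SDK buffer pointer straight to the image constructor.
OwnedImage via_pointer(const std::uint8_t *sdkBuffer, int width, int height) {
    return gen_image1_like(sdkBuffer, width, height);
}
```

Both methods produce identical pixels. Method one simply moves every frame through memory twice.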

3.2 BYTE to Mat and QImage

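```cpp
// Produces both an OpenCV Mat and a Qt QImage from one pylon grab result.
// m_bitmapImage is assumed to be a CPylonImage member of the class.
void CBaslerCameraControl::CopyImgToMat(const CGrabResultPtr &pInBuffer, cv::Mat &Mat_Img, QImage &QImg)
{
    const int nWidth = static_cast<int>(pInBuffer->GetWidth());
    const int nHeight = static_cast<int>(pInBuffer->GetHeight());

    if (pInBuffer->GetPixelType() == PixelType_Mono8)
    {
        // Monochrome image: wrap the SDK buffer, then copy so the results stay
        // valid after the grab result is released
        uint8_t *pImageBuffer = static_cast<uint8_t *>(pInBuffer->GetBuffer());
        Mat_Img = cv::Mat(nHeight, nWidth, CV_8UC1, pImageBuffer).clone();
        // Format_Grayscale8 (Qt 5.5+) needs no colour table, unlike Format_Indexed8
        QImg = QImage(pImageBuffer, nWidth, nHeight, nWidth, QImage::Format_Grayscale8).copy();
    }
    else
    {
        // Colour image: let pylon convert the raw (e.g. Bayer) data to packed BGR
        CImageFormatConverter formatConverter;
        formatConverter.OutputPixelFormat = PixelType_BGR8packed;
        formatConverter.Convert(m_bitmapImage, pInBuffer);
        uint8_t *pBgr = static_cast<uint8_t *>(m_bitmapImage.GetBuffer());
        // pylon image -> OpenCV image (OpenCV also stores colour as B, G, R)
        Mat_Img = cv::Mat(nHeight, nWidth, CV_8UC3, pBgr).clone();
        // Format_RGB888 expects R, G, B, so swap red and blue; rows assumed unpadded
        QImg = QImage(pBgr, nWidth, nHeight, 3 * nWidth, QImage::Format_RGB888).rgbSwapped();
    }
}
```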
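Two details in the colour branch are easy to get wrong. OpenCV's `CV_8UC3` data is in B, G, R order, while `QImage::Format_RGB888` expects R, G, B. The number of bytes per source row may also be larger than `3 * width`. The plain C++ sketch below swaps the channels with an explicit source stride; `bgr_to_rgb` is a hypothetical helper, and in Qt, `rgbSwapped()` does the same job.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts packed BGR8 rows (srcStride bytes apart) into a tightly packed
// RGB8 buffer, i.e. bytesPerLine = 3 * width for QImage::Format_RGB888.
std::vector<std::uint8_t> bgr_to_rgb(const std::uint8_t *src, int width, int height,
                                     std::size_t srcStride) {
    assert(srcStride >= static_cast<std::size_t>(width) * 3);
    std::vector<std::uint8_t> dst(static_cast<std::size_t>(width) * height * 3);
    for (int y = 0; y < height; ++y) {
        const std::uint8_t *row = src + y * srcStride;   // honour row padding
        std::uint8_t *out = dst.data() + static_cast<std::size_t>(y) * width * 3;
        for (int x = 0; x < width; ++x) {
            out[3 * x + 0] = row[3 * x + 2];   // R
            out[3 * x + 1] = row[3 * x + 1];   // G
            out[3 * x + 2] = row[3 * x + 0];   // B
        }
    }
    return dst;
}
```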

3.3 Converting between HObject and Mat

```cpp
#include <cstring>
#include <vector>
#include <opencv2/opencv.hpp>
#include "HalconCpp.h"

using namespace cv;
using namespace HalconCpp;   // HALCON C++ interface (HObject, HTuple, HImage)

// HObject -> Mat
Mat HObject2Mat(HObject Hobj)
{
    HTuple htCh;
    HString cType;
    cv::Mat Image;
    ConvertImageType(Hobj, &Hobj, "byte");
    CountChannels(Hobj, &htCh);
    Hlong wid = 0;
    Hlong hgt = 0;
    if (htCh[0].I() == 1)
    {
        HImage hImg(Hobj);
        void *ptr = hImg.GetImagePointer1(&cType, &wid, &hgt);
        int W = (int)wid;
        int H = (int)hgt;
        Image.create(H, W, CV_8UC1);
        unsigned char *pdata = static_cast<unsigned char *>(ptr);
        memcpy(Image.data, pdata, W * H);
    }
    else if (htCh[0].I() == 3)
    {
        void *Rptr;
        void *Gptr;
        void *Bptr;
        HImage hImg(Hobj);
        hImg.GetImagePointer3(&Rptr, &Gptr, &Bptr, &cType, &wid, &hgt);
        int W = (int)wid;
        int H = (int)hgt;
        std::vector<cv::Mat> VecM(3);
        VecM[0].create(H, W, CV_8UC1);
        VecM[1].create(H, W, CV_8UC1);
        VecM[2].create(H, W, CV_8UC1);
        // OpenCV stores colour images in B, G, R order
        memcpy(VecM[2].data, Rptr, W * H);
        memcpy(VecM[1].data, Gptr, W * H);
        memcpy(VecM[0].data, Bptr, W * H);
        cv::merge(VecM, Image);
    }
    return Image;
}

// Mat -> HObject
HObject Mat2Hobject(Mat &image)
{
    HObject Hobj;
    int hgt = image.rows;
    int wid = image.cols;
    if (image.type() == CV_8UC3)
    {
        std::vector<Mat> imgchannel;
        split(image, imgchannel);   // B, G, R planes
        Mat imgB = imgchannel[0];
        Mat imgG = imgchannel[1];
        Mat imgR = imgchannel[2];
        std::vector<uchar> dataR(hgt * wid), dataG(hgt * wid), dataB(hgt * wid);
        // Copy row by row so that rows with padding (step > cols) are handled
        for (int i = 0; i < hgt; i++)
        {
            memcpy(dataR.data() + wid * i, imgR.data + imgR.step * i, wid);
            memcpy(dataG.data() + wid * i, imgG.data + imgG.step * i, wid);
            memcpy(dataB.data() + wid * i, imgB.data + imgB.step * i, wid);
        }
        // GenImage3 copies the data, so the vectors can be released afterwards
        GenImage3(&Hobj, "byte", wid, hgt, (Hlong)dataR.data(), (Hlong)dataG.data(), (Hlong)dataB.data());
    }
    else if (image.type() == CV_8UC1)
    {
        std::vector<uchar> data(hgt * wid);
        for (int i = 0; i < hgt; i++)
            memcpy(data.data() + wid * i, image.data + image.step * i, wid);
        GenImage1(&Hobj, "byte", wid, hgt, (Hlong)data.data());
    }
    return Hobj;
}
```
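`Mat2Hobject` copies row by row because a `cv::Mat` row can be longer than its visible width: `step` exceeds `cols * channels` for ROIs. `GenImage1` and `GenImage3`, by contrast, expect tightly packed planes. The plain C++ sketch below performs the channel split and the stride handling in one pass. `Planes` and `split_bgr` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Three tightly packed planes, each width * height bytes (the GenImage3 layout).
struct Planes {
    std::vector<std::uint8_t> r, g, b;
};

// Splits interleaved BGR rows that start `step` bytes apart into R, G, B planes.
Planes split_bgr(const std::uint8_t *data, int width, int height, std::size_t step) {
    assert(step >= static_cast<std::size_t>(width) * 3);
    const std::size_t n = static_cast<std::size_t>(width) * height;
    Planes p{std::vector<std::uint8_t>(n), std::vector<std::uint8_t>(n),
             std::vector<std::uint8_t>(n)};
    for (int y = 0; y < height; ++y) {
        const std::uint8_t *row = data + y * step;   // skip any row padding
        for (int x = 0; x < width; ++x) {
            const std::size_t i = static_cast<std::size_t>(y) * width + x;
            p.b[i] = row[3 * x + 0];
            p.g[i] = row[3 * x + 1];
            p.r[i] = row[3 * x + 2];
        }
    }
    return p;
}
```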

3.4 HObject to QImage

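```cpp
void rgb3_to_interleaved(HObject ho_ImageRGB, HObject *ho_ImageInterleaved);   // defined below

void HObjectToQImage(HObject hv_image, QImage &qimage)
{
    HTuple hChannels, htype, hpointer;
    HTuple width = 0;
    HTuple height = 0;
    ConvertImageType(hv_image, &hv_image, "byte");   // convert to byte (8-bit) pixels
    CountChannels(hv_image, &hChannels);             // number of channels
    if (hChannels[0].I() == 1)                       // single-channel image
    {
        GetImagePointer1(hv_image, &hpointer, &htype, &width, &height);
        unsigned char *ptr = (unsigned char *)hpointer[0].L();
        int w = width[0].I();
        int h = height[0].I();
        // bytesPerLine = w because HALCON rows are not padded to 4 bytes;
        // copy() because the HALCON image may be freed when this function returns
        qimage = QImage(ptr, w, h, w, QImage::Format_Grayscale8).copy();
    }
    else if (hChannels[0].I() == 3)                  // three-channel image
    {
        HObject ho_ImageInterleaved;
        rgb3_to_interleaved(hv_image, &ho_ImageInterleaved);   // R, G, B planes -> RGBRGB... rows
        GetImagePointer1(ho_ImageInterleaved, &hpointer, &htype, &width, &height);
        unsigned char *ptr3 = (unsigned char *)hpointer[0].L();
        int w = width[0].I();   // 3 * image width
        int h = height[0].I();
        qimage = QImage(ptr3, w / 3, h, w, QImage::Format_RGB888).copy();
    }
}
```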
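The explicit `bytesPerLine` argument matters because the `QImage` constructor without it assumes every scanline is 32-bit aligned. HALCON rows, by contrast, are exactly `width` (or `3 * width`) bytes long. The plain C++ sketch below computes the aligned stride with the same rounding rule and, as an alternative, pads a packed buffer to that stride. The function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Bytes per scanline when every line must start on a 32-bit boundary.
std::size_t aligned_bytes_per_line(int width, int bitsPerPixel) {
    return ((static_cast<std::size_t>(width) * bitsPerPixel + 31) / 32) * 4;
}

// Copies tightly packed rows into a zero-padded buffer with aligned scanlines.
std::vector<std::uint8_t> pad_rows(const std::uint8_t *packed, int width, int height,
                                   int bytesPerPixel) {
    const std::size_t rowBytes = static_cast<std::size_t>(width) * bytesPerPixel;
    const std::size_t stride = aligned_bytes_per_line(width, bytesPerPixel * 8);
    std::vector<std::uint8_t> out(stride * height, 0);
    for (int y = 0; y < height; ++y)
        std::memcpy(out.data() + y * stride, packed + y * rowBytes, rowBytes);
    return out;
}
```

Passing `bytesPerLine` explicitly, as in `HObjectToQImage`, avoids this padding copy altogether. The padded layout is only needed when a constructor without `bytesPerLine` is used.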

```cpp
void rgb3_to_interleaved(HObject ho_ImageRGB, HObject *ho_ImageInterleaved)
{
    // Local iconic variables
    HObject ho_ImageAffineTrans, ho_ImageRed, ho_ImageGreen;
    HObject ho_ImageBlue, ho_RegionGrid, ho_RegionMoved, ho_RegionClipped;

    // Local control variables
    HTuple hv_PointerRed, hv_PointerGreen, hv_PointerBlue;
    HTuple hv_Type, hv_Width, hv_Height, hv_HomMat2DIdentity;
    HTuple hv_HomMat2DScale;

    GetImagePointer3(ho_ImageRGB, &hv_PointerRed, &hv_PointerGreen, &hv_PointerBlue,
                     &hv_Type, &hv_Width, &hv_Height);
    GenImageConst(&(*ho_ImageInterleaved), "byte", hv_Width * 3, hv_Height);
    //
    HomMat2dIdentity(&hv_HomMat2DIdentity);
    HomMat2dScale(hv_HomMat2DIdentity, 1, 3, 0, 0, &hv_HomMat2DScale);
    AffineTransImageSize(ho_ImageRGB, &ho_ImageAffineTrans, hv_HomMat2DScale, "constant",
                         hv_Width * 3, hv_Height);
    //
    Decompose3(ho_ImageAffineTrans, &ho_ImageRed, &ho_ImageGreen, &ho_ImageBlue);
    GenGridRegion(&ho_RegionGrid, 2 * hv_Height, 3, "lines", hv_Width * 3, hv_Height + 1);
    MoveRegion(ho_RegionGrid, &ho_RegionMoved, -1, 0);
    ClipRegion(ho_RegionMoved, &ho_RegionClipped, 0, 0, hv_Height - 1, (3 * hv_Width) - 1);
    ReduceDomain(ho_ImageRed, ho_RegionClipped, &ho_ImageRed);
    MoveRegion(ho_RegionGrid, &ho_RegionMoved, -1, 1);
    ClipRegion(ho_RegionMoved, &ho_RegionClipped, 0, 0, hv_Height - 1, (3 * hv_Width) - 1);
    ReduceDomain(ho_ImageGreen, ho_RegionClipped, &ho_ImageGreen);
    MoveRegion(ho_RegionGrid, &ho_RegionMoved, -1, 2);
    ClipRegion(ho_RegionMoved, &ho_RegionClipped, 0, 0, hv_Height - 1, (3 * hv_Width) - 1);
    ReduceDomain(ho_ImageBlue, ho_RegionClipped, &ho_ImageBlue);
    OverpaintGray((*ho_ImageInterleaved), ho_ImageRed);
    OverpaintGray((*ho_ImageInterleaved), ho_ImageGreen);
    OverpaintGray((*ho_ImageInterleaved), ho_ImageBlue);
    return;
}
```
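`rgb3_to_interleaved` builds the interleaved image with HALCON operators: it stretches the image by a factor of 3, keeps every third column of each channel, and overpaints the results. The result is an ordinary planar-to-interleaved copy. The plain C++ equivalent below is for illustration only; `interleave` is a hypothetical helper, and `bgrOrder` switches to OpenCV's channel order.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Packs three planar channels (as returned by GetImagePointer3) into one
// buffer with width * 3 bytes per row: R,G,B by default, B,G,R if bgrOrder.
std::vector<std::uint8_t> interleave(const std::uint8_t *r, const std::uint8_t *g,
                                     const std::uint8_t *b, int width, int height,
                                     bool bgrOrder = false) {
    const std::size_t n = static_cast<std::size_t>(width) * height;
    std::vector<std::uint8_t> out(n * 3);
    const std::uint8_t *first = bgrOrder ? b : r;
    const std::uint8_t *last = bgrOrder ? r : b;
    for (std::size_t i = 0; i < n; ++i) {
        out[3 * i + 0] = first[i];
        out[3 * i + 1] = g[i];
        out[3 * i + 2] = last[i];
    }
    return out;
}
```

The default R, G, B output matches what `QImage::Format_RGB888` expects. With `bgrOrder = true` the layout matches `CV_8UC3`.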
