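Contents

- Requirements analysis

- Code

- Environment setup

- Writing the Mapper class

- Writing the Reducer class

- Writing the Driver class

- Running locally

- Notes

- Results

- Submitting to the cluster for testing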

Requirements analysis

Given a text file, count and output the total number of occurrences of each word.

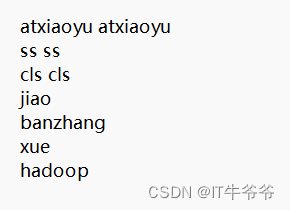

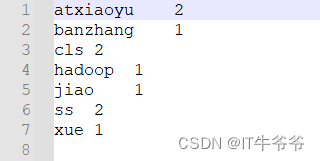

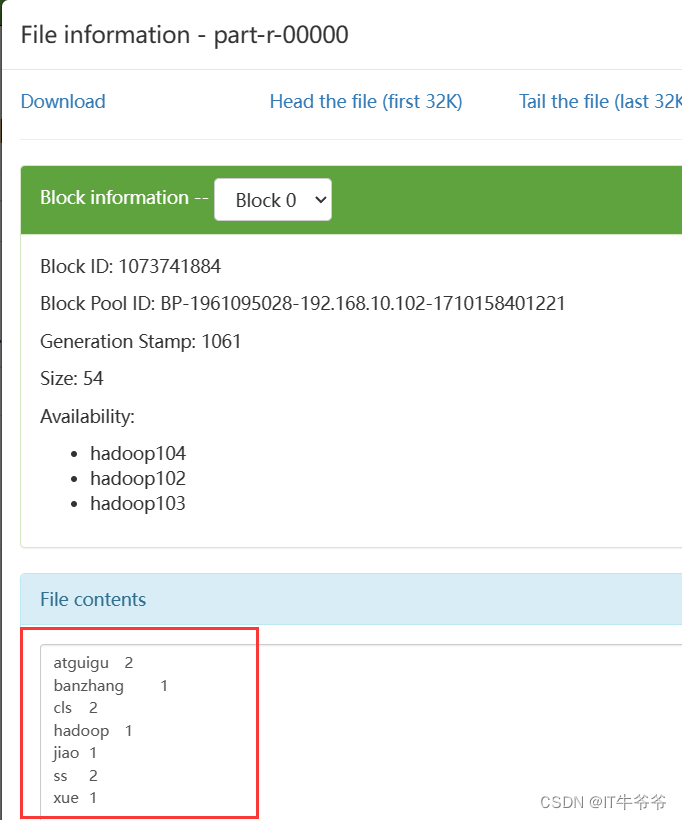

Expected output:

atxiaoyu 2

banzhang 1

cls 2

hadoop 1

jiao 1

ss 2

xue 1

Implementation: following the MapReduce programming conventions, write a Mapper, a Reducer, and a Driver.
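To make the division of labor concrete before bringing in Hadoop, here is a minimal plain-Java sketch of the same idea: a map step that emits (word, 1) pairs, and a reduce step that groups the pairs by word and sums them. The class name `LocalWordCountSketch` and the input lines are hypothetical (only the first line is taken from the Mapper comments later in this article); this is not the Hadoop API, just an illustration of the logic.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class LocalWordCountSketch {

    // "Map" step: emit a (word, 1) pair for every space-separated token in a line.
    public static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : line.split(" ")) {
            pairs.add(new AbstractMap.SimpleEntry<>(word, 1));
        }
        return pairs;
    }

    // "Shuffle" and "reduce" steps: group the pairs by word and sum their values.
    // A TreeMap keeps the keys sorted, similar to the sorted keys a reducer receives.
    public static Map<String, Integer> reduce(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            counts.merge(pair.getKey(), pair.getValue(), Integer::sum);
        }
        return counts;
    }

    // Run the map step on every line, then reduce all emitted pairs.
    public static Map<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> allPairs = new ArrayList<>();
        for (String line : lines) {
            allPairs.addAll(map(line));
        }
        return reduce(allPairs);
    }

    public static void main(String[] args) {
        // Hypothetical input, chosen to be consistent with the expected output above.
        List<String> lines = Arrays.asList(
                "atxiaoyu atxiaoyu", "ss ss", "cls cls",
                "jiao", "banzhang", "xue", "hadoop");
        wordCount(lines).forEach((word, count) -> System.out.println(word + "\t" + count));
    }
}
```

In the real job, Hadoop performs the grouping between the two steps, so the Mapper and Reducer only need to implement the per-line and per-word logic.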

Code

Environment setup

(1) Create a Maven project named MapReduceDemo.

(2) Add the following dependencies to pom.xml:

    <dependencies>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>3.1.3</version>
        </dependency>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.12</version>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
            <version>1.7.30</version>
        </dependency>
    </dependencies>

(3) In the project's src/main/resources directory, create a file named "log4j.properties" with the following content:

log4j.rootLogger=INFO, stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n

log4j.appender.logfile=org.apache.log4j.FileAppender

log4j.appender.logfile.File=target/spring.log

log4j.appender.logfile.layout=org.apache.log4j.PatternLayout

log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n

(4) Create the package com.atxiaoyu.mapreduce.wordcount

Writing the Mapper class

Code:

    package com.atxiaoyu.mapreduce.wordcount;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

        // Reused for every record to avoid allocating new objects per word
        private Text outK = new Text();
        private IntWritable outV = new IntWritable(1);

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // 1. Get one line, e.g. "atxiaoyu atxiaoyu"
            String line = value.toString();

            // 2. Split it into words: "atxiaoyu", "atxiaoyu"
            String[] words = line.split(" ");

            // 3. Emit (word, 1) for each word
            for (String word : words) {
                outK.set(word);
                context.write(outK, outV);
            }
        }
    }

Writing the Reducer class

    package com.atxiaoyu.mapreduce.wordcount;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        private IntWritable outV = new IntWritable();

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
            int sum = 0;

            // Input looks like: atxiaoyu, (1, 1)
            // Sum the values for this key
            for (IntWritable value : values) {
                sum = sum + value.get();
            }
            outV.set(sum);

            // Emit (word, total)
            context.write(key, outV);
        }
    }

Writing the Driver class

    package com.atxiaoyu.mapreduce.wordcount;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;

    public class WordCountDriver {

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();

            // 1. Get the job
            Job job = Job.getInstance(conf);

            // 2. Set the jar by the Driver class
            job.setJarByClass(WordCountDriver.class);

            // 3. Associate the mapper and reducer
            job.setMapperClass(WordCountMapper.class);
            job.setReducerClass(WordCountReducer.class);

            // 4. Set the key/value types of the map output
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);

            // 5. Set the key/value types of the final output
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // 6. Set the input and output paths
            FileInputFormat.setInputPaths(job, new Path("D:\\input"));
            FileOutputFormat.setOutputPath(job, new Path("D:\\output"));

            // 7. Submit the job and wait for it to finish
            boolean result = job.waitForCompletion(true);
            System.exit(result ? 0 : 1);
        }
    }

Running locally

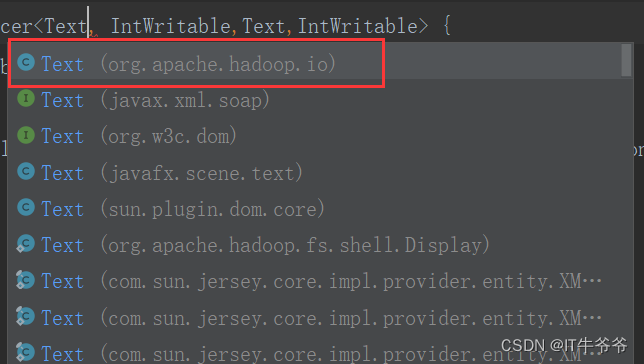

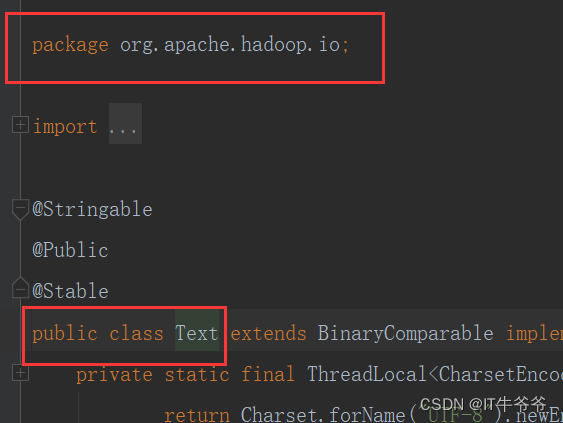

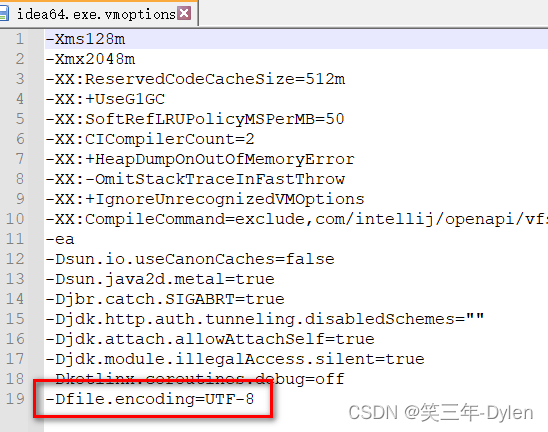

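Notes

Be careful to import the right classes. Many classes are involved here, and several share the same simple name. I imported the wrong one and spent a long time tracking down the resulting bug. With Text, for example, do not press Enter as soon as you have typed Text; check that the IDE is importing the correct class (here, org.apache.hadoop.io.Text).

After importing, you can also open the class definition to confirm that it is the right one.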
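A second point that may be worth checking is the Mapper's `line.split(" ")` call. Splitting on a single space produces an empty string between two consecutive spaces, and that empty string would be counted as a word. The sketch below, using the hypothetical class `SplitSketch`, compares this with splitting on runs of whitespace (`\\s+`); whether the alternative is needed depends on the input, so treat it as an optional variation rather than a required fix.

```java
public class SplitSketch {

    // Same tokenization as the Mapper in this article: split on one space character.
    public static String[] splitOnSingleSpace(String line) {
        return line.split(" ");
    }

    // Alternative (an assumption, not from the original code):
    // trim the line, then split on runs of whitespace.
    public static String[] splitOnWhitespace(String line) {
        String trimmed = line.trim();
        // An empty line yields no tokens instead of one empty token.
        return trimmed.isEmpty() ? new String[0] : trimmed.split("\\s+");
    }

    public static void main(String[] args) {
        String line = "ss  cls"; // note the two spaces between the words

        // Three tokens: "ss", "" and "cls"
        System.out.println(splitOnSingleSpace(line).length);

        // Two tokens: "ss" and "cls"
        System.out.println(splitOnWhitespace(line).length);
    }
}
```

If the input is known to use exactly one space between words, as in this article's example, the original `split(" ")` gives the same result.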

Results

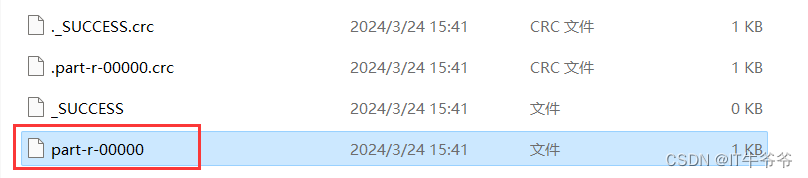

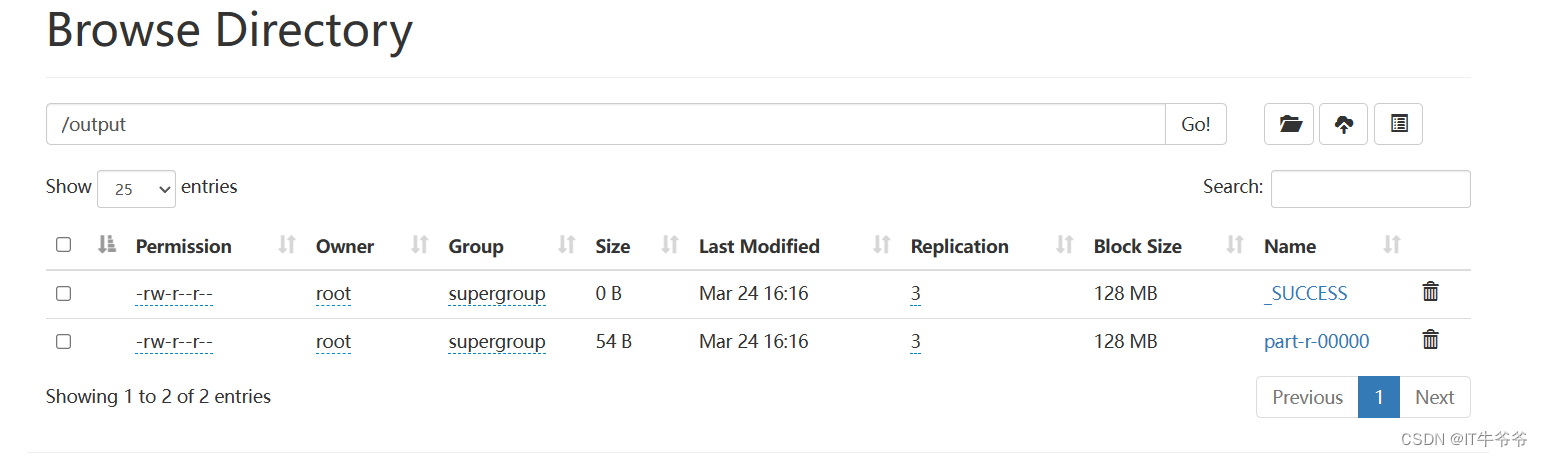

Go to the output directory and open this file:

Its content is the result of the word count.

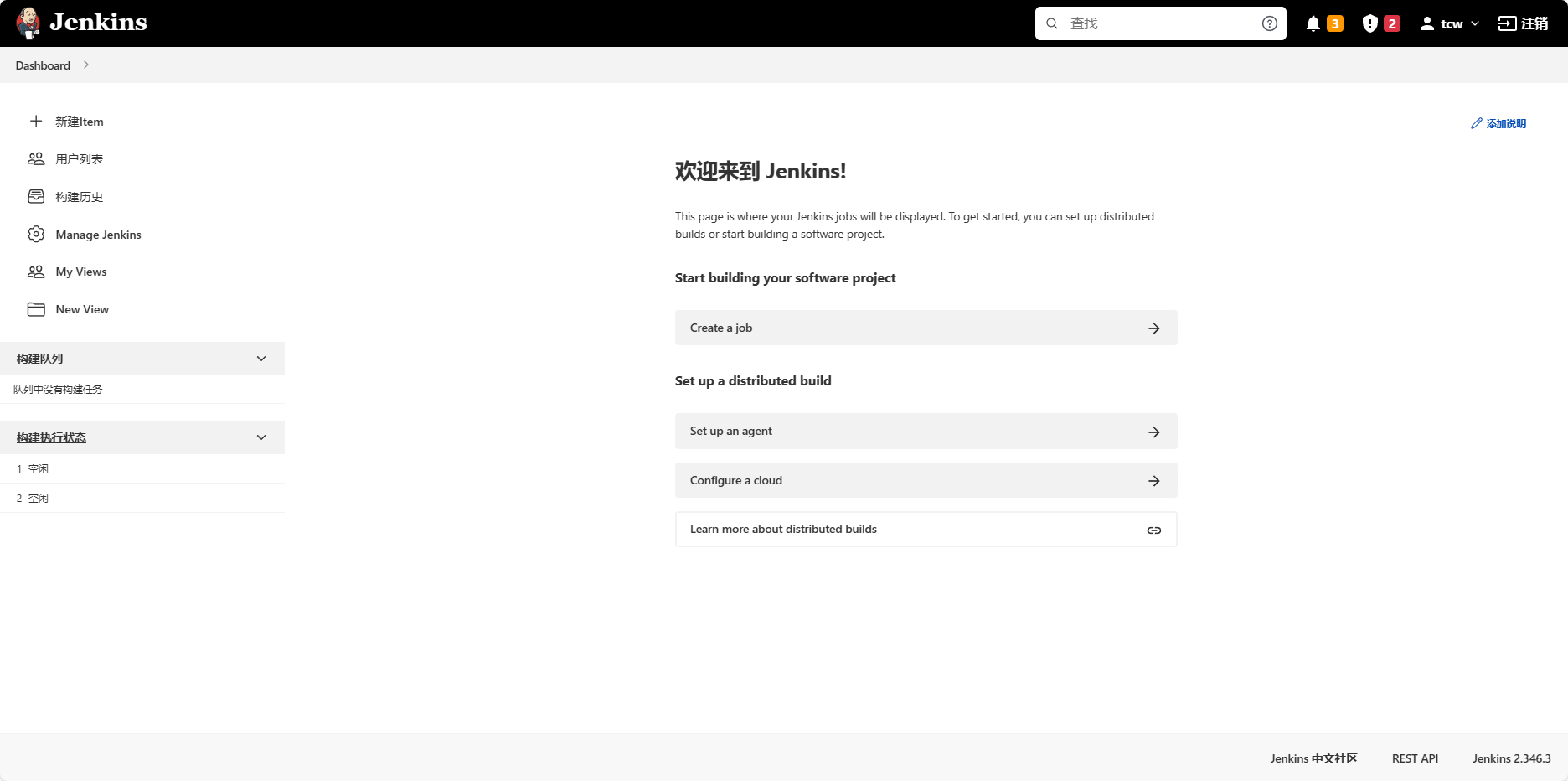

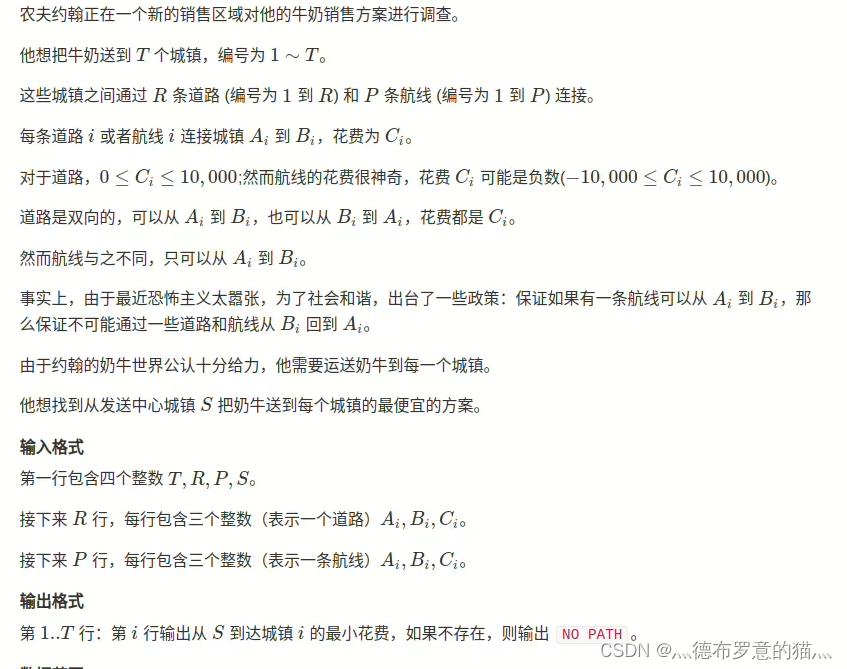

Submitting to the cluster for testing

To build the jar with Maven, add the following build plugins to pom.xml:

    <build>
        <plugins>
            <plugin>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.6.1</version>
                <configuration>
                    <source>1.8</source>
                    <target>1.8</target>
                </configuration>
            </plugin>
            <plugin>
                <artifactId>maven-assembly-plugin</artifactId>
                <configuration>
                    <descriptorRefs>
                        <descriptorRef>jar-with-dependencies</descriptorRef>
                    </descriptorRefs>
                </configuration>
                <executions>
                    <execution>
                        <id>make-assembly</id>
                        <phase>package</phase>
                        <goals>
                            <goal>single</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>

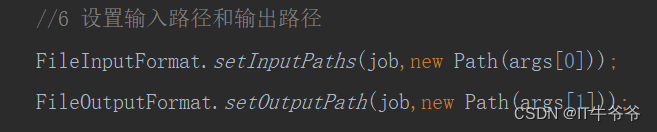

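First, modify the two path arguments in the Driver class so that they are read from args[0] and args[1] at run time instead of being hard-coded:

Only these two places need to change.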
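As a sketch of that change: the two path lines in the Driver take their values from the command-line arguments, as shown in the comments below. The stand-alone class `DriverArgsSketch` and its `parsePaths` helper are hypothetical and do not depend on Hadoop; the length check is an added safeguard that the original Driver does not have.

```java
public class DriverArgsSketch {

    // Returns {inputPath, outputPath} from the command line,
    // or fails early with a usage message if either one is missing.
    public static String[] parsePaths(String[] args) {
        if (args.length < 2) {
            throw new IllegalArgumentException(
                    "Usage: WordCountDriver <input path> <output path>");
        }
        return new String[] {args[0], args[1]};
    }

    public static void main(String[] args) {
        String[] paths = parsePaths(args);

        // In WordCountDriver, step 6 would then read:
        //   FileInputFormat.setInputPaths(job, new Path(args[0]));
        //   FileOutputFormat.setOutputPath(job, new Path(args[1]));
        System.out.println("input  = " + paths[0]);
        System.out.println("output = " + paths[1]);
    }
}
```

Without such a check, running the job with fewer than two arguments would fail with an ArrayIndexOutOfBoundsException when args[0] or args[1] is accessed.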

Then package the project and rename the resulting jar to wc:

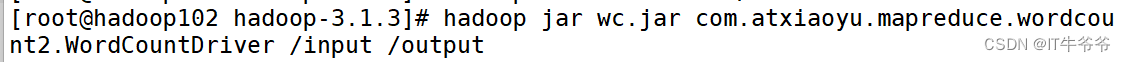

Start the Hadoop cluster, copy the jar to /opt/module/hadoop-3.1.3 on the cluster, and run the WordCount program:

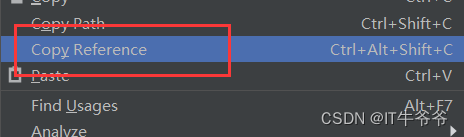

(The argument after wc.jar is the fully qualified name of the WordCountDriver class. To get it, right-click the class and choose Copy Reference.)

The job runs successfully.
