Spark Setup Process

Spark Cluster Setup - CSDN Blog

1. First, download and extract the Hive tarball that matches your system. I used version 3.1.2.
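A minimal sketch of this step, assuming the tarball comes from the Apache archive (https://archive.apache.org/dist/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz) and that Hive should end up at /usr/local/hive so it matches HIVE_HOME in the next step. The helper names hive_tarball_url and install_hive are hypothetical; the functions only define behavior and change nothing until you call them.

```shell
# Hypothetical helpers, not part of Hive itself.

# Print the Apache archive URL for a given Hive version (e.g. 3.1.2).
hive_tarball_url() {
    local version="$1"
    echo "https://archive.apache.org/dist/hive/hive-${version}/apache-hive-${version}-bin.tar.gz"
}

# Extract a downloaded tarball into PARENT_DIR and point PARENT_DIR/hive at it.
# Usage: install_hive TARBALL PARENT_DIR
install_hive() {
    local tarball="$1" parent="$2" top
    mkdir -p "$parent" || return 1
    tar -xzf "$tarball" -C "$parent" || return 1
    # Top-level directory inside the archive, e.g. apache-hive-3.1.2-bin
    top=$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)
    # Relative symlink so HIVE_HOME can stay version-independent
    ln -sfn "$top" "$parent/hive"
}
```

For example, wget "$(hive_tarball_url 3.1.2)" followed by install_hive apache-hive-3.1.2-bin.tar.gz /usr/local (run as a user that can write to /usr/local) leaves Hive reachable at /usr/local/hive.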

2. Configure the environment variables

vim ~/.bashrc

export HIVE_HOME=/usr/local/hive

export PATH=$PATH:$HIVE_HOME/bin
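If this step is scripted, appending the two export lines blindly duplicates them every time the script runs. A minimal sketch of an idempotent version, assuming the same ~/.bashrc and /usr/local/hive as above; add_hive_env is a hypothetical helper name.

```shell
# Hypothetical helper: append the HIVE_HOME/PATH exports to an rc file
# only if they are not already there, so re-running it is harmless.
# Usage: add_hive_env RC_FILE HIVE_DIR
add_hive_env() {
    local rc="$1" dir="$2"
    touch "$rc" || return 1
    # -x matches whole lines, -F treats the pattern as a literal string
    if ! grep -qxF "export HIVE_HOME=$dir" "$rc"; then
        echo "export HIVE_HOME=$dir" >> "$rc"
    fi
    # Single quotes keep $PATH and $HIVE_HOME unexpanded in the rc file
    if ! grep -qxF 'export PATH=$PATH:$HIVE_HOME/bin' "$rc"; then
        echo 'export PATH=$PATH:$HIVE_HOME/bin' >> "$rc"
    fi
}
```

After add_hive_env ~/.bashrc /usr/local/hive and sourcing the file, command -v hive should point into /usr/local/hive/bin.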

source ~/.bashrc

3. Edit hive-site.xml

cd $HIVE_HOME/conf

vim hive-site.xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value>
    <description>JDBC connect string for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
    <description>username to use against metastore database</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>hive</value>
    <description>password to use against metastore database</description>
  </property>

</configuration>

4. Start Hive
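Before starting Hive, it can be worth confirming that hive-site.xml actually contains the four metastore connection properties set in step 3. A minimal sketch, assuming the file lives at $HIVE_HOME/conf/hive-site.xml; check_hive_site is a hypothetical helper that only searches for the property names and does not validate the XML itself.

```shell
# Hypothetical helper: report which metastore connection properties are
# missing from a hive-site.xml. Plain text search, not an XML parser.
# Usage: check_hive_site "$HIVE_HOME/conf/hive-site.xml"
check_hive_site() {
    local file="$1" missing=0 prop
    for prop in javax.jdo.option.ConnectionURL \
                javax.jdo.option.ConnectionDriverName \
                javax.jdo.option.ConnectionUserName \
                javax.jdo.option.ConnectionPassword; do
        if ! grep -qF "<name>$prop</name>" "$file"; then
            echo "missing: $prop" >&2
            missing=1
        fi
    done
    # Non-zero exit status if anything was missing
    return "$missing"
}
```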

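Running hive fails with an error caused by a Guava version conflict: Hive's lib directory contains guava-19.0.jar, while Hadoop uses the newer guava-27.0-jre.jar.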
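To confirm the conflict before deleting anything, list the Guava jars on each side. A minimal sketch, assuming Hadoop's copy sits under $HADOOP_HOME/share/hadoop/common/lib (the usual Hadoop 3 layout, but check your own install); list_guava is a hypothetical helper.

```shell
# Hypothetical helper: print every guava-*.jar found directly in the given directories.
# Usage: list_guava "$HIVE_HOME/lib" "$HADOOP_HOME/share/hadoop/common/lib"
list_guava() {
    local dir
    for dir in "$@"; do
        # -maxdepth 1: only jars directly inside each lib directory
        find "$dir" -maxdepth 1 -name 'guava-*.jar'
    done
}
```

Two different version numbers in the output (for example 19.0 on the Hive side and 27.0-jre on the Hadoop side) point to the conflict described above.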

Solution:

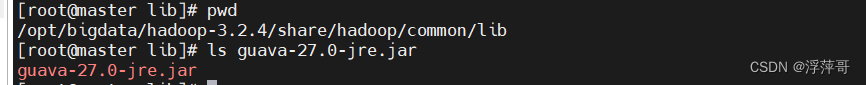

## Delete the Guava jar in Hive's lib directory

rm -f $HIVE_HOME/lib/guava-19.0.jar

## Copy Hadoop's Guava jar into Hive

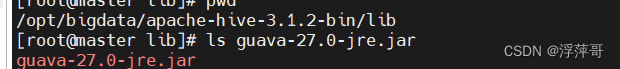

cp guava-27.0-jre.jar $HIVE_HOME/lib/
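The delete and copy steps can be combined so that Hive's old jar is removed only once a replacement has been found. A minimal sketch, assuming the Hadoop lib directory is $HADOOP_HOME/share/hadoop/common/lib; swap_guava is a hypothetical helper, and it simply takes the first Guava jar it finds on the Hadoop side.

```shell
# Hypothetical helper: replace Hive's Guava jar(s) with Hadoop's.
# Usage: swap_guava "$HIVE_HOME/lib" "$HADOOP_HOME/share/hadoop/common/lib"
swap_guava() {
    local hive_lib="$1" hadoop_lib="$2" jar
    # First Guava jar in Hadoop's lib directory (empty if none)
    jar=$(find "$hadoop_lib" -maxdepth 1 -name 'guava-*.jar' | head -n 1)
    if [ -z "$jar" ]; then
        echo "no guava jar in $hadoop_lib" >&2
        return 1
    fi
    # Only delete Hive's copy once we know there is a replacement
    rm -f "$hive_lib"/guava-*.jar
    cp "$jar" "$hive_lib"/
}
```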

Start Hive again:

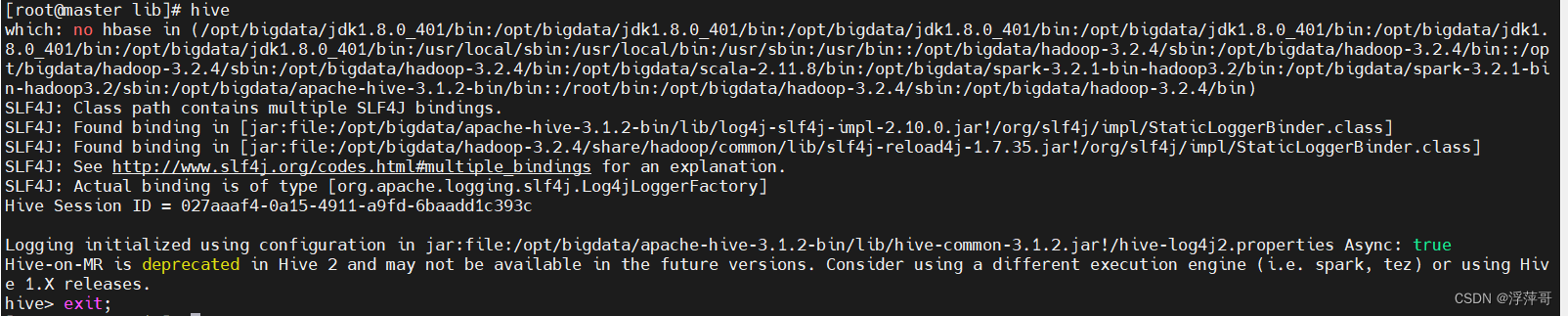

hive

Hive now starts successfully.
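To check the installation non-interactively (for example from a setup script), one option is the Hive CLI's -e flag, which runs a single statement and exits. A minimal sketch; hive_smoke_test and the HIVE_BIN override variable are hypothetical, and the function assumes hive is on PATH unless HIVE_BIN says otherwise.

```shell
# Hypothetical smoke test: run one HiveQL statement and report the result.
# Usage: hive_smoke_test   (or HIVE_BIN=/usr/local/hive/bin/hive hive_smoke_test)
hive_smoke_test() {
    local bin="${HIVE_BIN:-hive}"
    if "$bin" -e 'show databases;' >/dev/null 2>&1; then
        echo "hive OK"
    else
        echo "hive failed; rerun the command without redirection to see the error" >&2
        return 1
    fi
}
```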