I. RTMP overview and the librtmp library:

RTMP (Real-Time Messaging Protocol) is an application-layer protocol proposed by Adobe. It handles the multiplexing and packetizing of multimedia transport streams.

Overview:

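- RTMP is an application-layer protocol that (usually) runs over TCP for reliable delivery;

- After the TCP handshake completes, RTMP performs its own handshake (C0/C1/C2 from the client, S0/S1/S2 from the server) and then establishes the RTMP connection;

- Control messages such as Set Chunk Size and Window Acknowledgement Size (SetChunkSize, SetACKWindowSize) are exchanged over the connection. The createStream command then creates a stream, which carries the actual audio/video data and the commands that control its transfer;

- When sending, RTMP formats data into Messages and then splits each Message into Chunks. A chunk may hold a complete Message or only part of one. Every chunk carries a chunk stream ID, and the first chunk of a message also carries the message length, type ID and message stream ID; the receiver uses these fields to reassemble the chunks into Messages.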
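To make the Message/Chunk relationship concrete, here is a simplified sketch of how one message could be split into chunks. It assumes a chunk stream ID between 2 and 63 (so the basic header is one byte), a timestamp below 0xFFFFFF (no extended timestamp), a full type-0 header on the first chunk, header-less type-3 chunks for the rest, and the protocol's default chunk size of 128 bytes. `chunkMessage` is a hypothetical helper for illustration only; librtmp performs this split internally when it sends a packet.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <utility>
#include <vector>

// Split one RTMP message into chunks (simplified sketch, hypothetical helper).
// Assumes csid in [2, 63] (1-byte basic header) and timestamp < 0xFFFFFF.
std::vector<std::vector<uint8_t>> chunkMessage(uint32_t csid, uint32_t timestamp,
                                               uint8_t typeId, uint32_t streamId,
                                               const std::vector<uint8_t>& payload,
                                               size_t chunkSize = 128) {
    if (csid < 2 || csid > 63 || timestamp >= 0xFFFFFF || chunkSize == 0 ||
        payload.size() > 0xFFFFFF) {
        throw std::invalid_argument("parameters not supported by this sketch");
    }
    const uint32_t length = static_cast<uint32_t>(payload.size());
    std::vector<std::vector<uint8_t>> chunks;
    size_t offset = 0;
    do {
        std::vector<uint8_t> chunk;
        if (chunks.empty()) {
            // fmt 0: basic header followed by the full 11-byte message header
            chunk.push_back(static_cast<uint8_t>(csid));             // fmt bits = 00
            chunk.push_back(static_cast<uint8_t>(timestamp >> 16));  // timestamp, big-endian
            chunk.push_back(static_cast<uint8_t>(timestamp >> 8));
            chunk.push_back(static_cast<uint8_t>(timestamp));
            chunk.push_back(static_cast<uint8_t>(length >> 16));     // message length, big-endian
            chunk.push_back(static_cast<uint8_t>(length >> 8));
            chunk.push_back(static_cast<uint8_t>(length));
            chunk.push_back(typeId);                                 // e.g. 8 = audio, 9 = video
            for (int i = 0; i < 4; ++i) {                            // message stream ID, little-endian
                chunk.push_back(static_cast<uint8_t>(streamId >> (8 * i)));
            }
        } else {
            // fmt 3: basic header only, continuing the same message
            chunk.push_back(static_cast<uint8_t>(0xC0 | csid));
        }
        const size_t n = std::min(chunkSize, payload.size() - offset);
        const auto first = payload.begin() + static_cast<std::ptrdiff_t>(offset);
        chunk.insert(chunk.end(), first, first + static_cast<std::ptrdiff_t>(n));
        offset += n;
        chunks.push_back(std::move(chunk));
    } while (offset < payload.size());
    return chunks;
}
```

With a 300-byte video message (type ID 9), the result is three chunks carrying 128, 128 and 44 bytes of payload; only the first one carries the 11-byte message header.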

Lei Xiaohua's source-code analysis of RTMP and librtmp.

librtmp:

The RTMPDump documentation (on an external site) describes RTMP.

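Key functions in librtmp:

- RTMP_Alloc(): allocates a session handle;

- RTMP_Init(): initializes the RTMP session;

- RTMP_SetupURL(): sets the URL;

- RTMP_Connect(): establishes the network connection;

- RTMP_ConnectStream(): establishes the RTMP stream session;

- RTMP_Read(): reads from the RTMP stream; a return value of 0 bytes means the stream is complete;

- RTMP_Write(): publishes a stream. To publish, the client calls RTMP_EnableWrite() before RTMP_Connect(), then uses RTMP_Write() once the session is established;

- RTMP_SendPacket(): sends an already-built RTMPPacket; this is what the pusher in this article uses instead of RTMP_Write();

- RTMP_Pause(): pauses and resumes playback of a stream;

- RTMP_Seek(): moves the playback position of the stream;

- RTMP_Close(): closes the stream;

- RTMP_Free(): frees the session handle;

- RTMPPacket_Alloc(): allocates the body buffer of an RTMPPacket;

- RTMPPacket: the struct that represents one RTMP packet;

- RTMPPacket_Free(): frees the body buffer of an RTMPPacket;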

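II. Overview of the x264 library:

Key functions in x264:

- x264_param_default(): fills every parameter with its default value;

- x264_param_default_preset(): sets the defaults for a given preset and tune. Internally it calls x264_param_apply_preset() and x264_param_apply_tune(), where the exact parameter differences between the presets and tunes can be found;

- x264_param_apply_profile(): applies the restrictions of the given profile (for example "baseline", "main" or "high") to the parameters;

- x264_encoder_open(): opens the encoder and initializes the variables libx264 needs for encoding; it must be released with x264_encoder_close();

- x264_picture_alloc(): allocates the data buffers of a picture; they must be released with x264_picture_clean();

- x264_encoder_encode(): encodes one YUV frame into H.264 NAL units.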
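x264_encoder_encode() returns an array of x264_nal_t. With x264's default Annex B output, each p_payload begins with a start code, which can be four bytes (00 00 00 01) or three bytes (00 00 01), followed by the NAL header whose low five bits are the NAL unit type (7 = SPS, 8 = PPS, 5 = IDR slice). The sketch below shows how such a payload could be inspected. `parseAnnexBNal` and `NalView` are hypothetical names, and x264 already exposes the type as `x264_nal_t::i_type`, so in practice the useful part is locating the end of the start code.

```cpp
#include <cstddef>
#include <cstdint>

// H.264 NAL unit types relevant to RTMP packaging.
enum NalType : int { kNalSlice = 1, kNalIdr = 5, kNalSei = 6, kNalSps = 7, kNalPps = 8 };

// View of one NAL unit after its Annex B start code (hypothetical helper type).
struct NalView {
    const uint8_t* data;  // first byte after the start code (the NAL header)
    size_t size;          // number of bytes after the start code
    int type;             // nal_unit_type, or -1 if no valid start code was found
};

// Skip a 4-byte or 3-byte Annex B start code and read the NAL unit type.
NalView parseAnnexBNal(const uint8_t* payload, size_t len) {
    size_t sc = 0;
    if (len >= 4 && payload[0] == 0 && payload[1] == 0 && payload[2] == 0 && payload[3] == 1) {
        sc = 4;
    } else if (len >= 3 && payload[0] == 0 && payload[1] == 0 && payload[2] == 1) {
        sc = 3;
    } else {
        return {payload, len, -1};
    }
    if (sc == len) {
        return {payload + sc, 0, -1};  // start code with no NAL header
    }
    return {payload + sc, len - sc, payload[sc] & 0x1F};
}
```

Checking the start-code length this way is more defensive than always skipping 4 bytes, which only holds when x264 emits the long start code.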

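Lei Xiaohua's source-code analysis of x264.

III. Overview of the FAAC library:

FAAC is an MPEG-4 and MPEG-2 AAC encoder. Its features: good portability, fast encoding, support for the LC/Main/LTP object types, DRM (Digital Radio Mondiale) support through DREAM, and a small code size compared with FFmpeg's AAC transcoding.

Key functions in libfaac:

- faacEncOpen(): opens the encoder and reports the number of input samples per frame and the maximum output size;

- faacEncGetCurrentConfiguration(): gets the current configuration;

- faacEncSetConfiguration(): applies a configuration;

- faacEncGetDecoderSpecificInfo(): gets the decoder-specific info (the AudioSpecificConfig a player needs to decode the stream);

- faacEncEncode(): encodes one frame and returns the number of encoded bytes.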
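faacEncGetDecoderSpecificInfo() returns the AudioSpecificConfig, which a player needs before it can decode raw AAC frames. Over RTMP it is usually sent once as an AAC sequence header: an audio body of `0xAF 0x00` followed by the config bytes, while each encoded frame is sent as `0xAF 0x01` followed by the raw AAC data. For AAC-LC the config is normally two bytes: a 5-bit object type, a 4-bit sampling-frequency index, a 4-bit channel configuration and three zero bits. The sketch below builds these bytes by hand only to show the layout; all function names are hypothetical, and in the real pusher the bytes returned by faac should be used as-is.

```cpp
#include <array>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Sampling-frequency index table from the MPEG-4 audio specification.
int samplingFrequencyIndex(int sampleRate) {
    static const int kRates[] = {96000, 88200, 64000, 48000, 44100, 32000, 24000,
                                 22050, 16000, 12000, 11025, 8000, 7350};
    for (int i = 0; i < 13; ++i) {
        if (kRates[i] == sampleRate) return i;
    }
    throw std::invalid_argument("unsupported sample rate");
}

// 2-byte AudioSpecificConfig: 5 bits object type | 4 bits frequency index |
// 4 bits channel configuration | 3 zero bits. objectType 2 = AAC LC.
std::array<uint8_t, 2> audioSpecificConfig(int objectType, int sampleRate, int channels) {
    if (channels < 1 || channels > 7) throw std::invalid_argument("unsupported channel count");
    const int freqIdx = samplingFrequencyIndex(sampleRate);
    const uint16_t bits =
        static_cast<uint16_t>((objectType << 11) | (freqIdx << 7) | (channels << 3));
    return {static_cast<uint8_t>(bits >> 8), static_cast<uint8_t>(bits & 0xFF)};
}

// First byte of an RTMP/FLV audio body: AAC (10), 44 kHz (3), 16-bit (1),
// stereo (1) or mono (0), matching the 0xAF / 0xAE values used in encodeData().
uint8_t aacAudioTagHeader(int channels) {
    return static_cast<uint8_t>((10 << 4) | (3 << 2) | (1 << 1) | (channels == 1 ? 0 : 1));
}

// Body of the AAC sequence header: tag header byte, AACPacketType 0, then the config.
std::vector<uint8_t> aacSequenceHeaderBody(int sampleRate, int channels) {
    const auto asc = audioSpecificConfig(2, sampleRate, channels);
    return {aacAudioTagHeader(channels), 0x00, asc[0], asc[1]};
}
```

For 44.1 kHz stereo AAC-LC this yields `0x12 0x10`. For AAC the rate and channel bits of the first body byte are nominal: the FLV format tells players to take the real sample rate and channel count from the AudioSpecificConfig.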

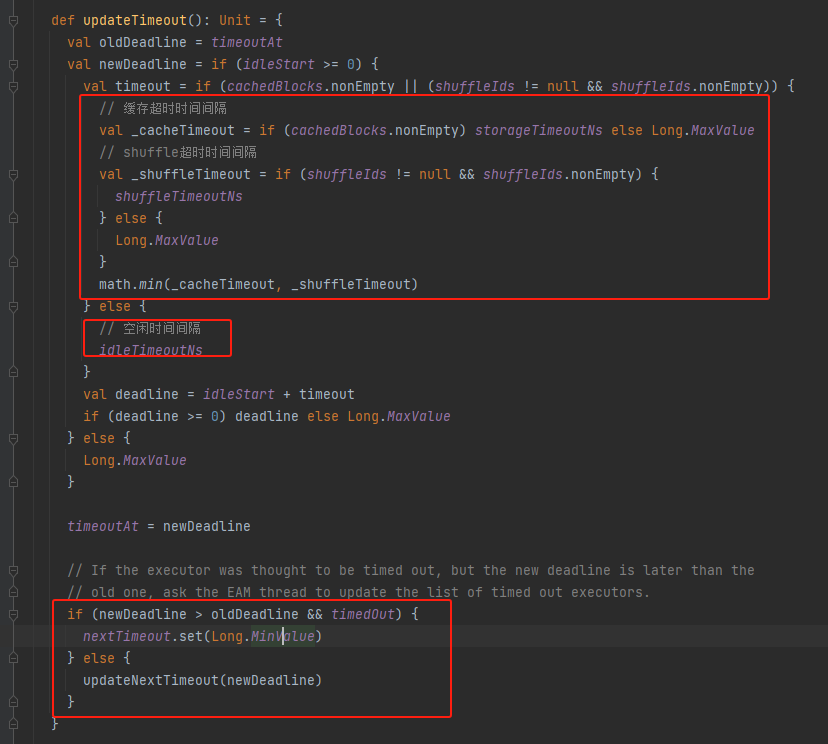

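IV. Code architecture and overview:

The Android layer captures video (YUV) and audio (PCM) data and passes it to the C++ layer, where libx264 encodes the YUV into H.264 and libfaac encodes the PCM into AAC. The H.264/AAC data is then wrapped into RTMPPacket structures, which are returned through a callback and pushed into the PacketQueue. A worker thread pops them and sends each one with RTMP_SendPacket().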
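The sendSpsPps() and sendFrame() helpers that VideoStreamPacket calls are not shown in this article. A common way to lay out the corresponding RTMP video bodies follows the FLV video tag format: the SPS and PPS are sent once as a sequence header containing an AVCDecoderConfigurationRecord, and every other NAL unit is sent with a 4-byte length prefix in place of its Annex B start code. The sketch below builds only the body bytes under those assumptions. `avcSequenceHeaderBody`, `avcNaluBody` and `putBE` are hypothetical names, the composition time is fixed at 0 (which assumes no B-frames), and wrapping a body in an RTMPPacket would work the same way as in AudioStreamPacket::encodeData().

```cpp
#include <cstdint>
#include <stdexcept>
#include <vector>

// Append the low `bytes` bytes of v in big-endian order.
static void putBE(std::vector<uint8_t>& out, uint32_t v, int bytes) {
    for (int i = bytes - 1; i >= 0; --i) {
        out.push_back(static_cast<uint8_t>(v >> (8 * i)));
    }
}

// Video body carrying an AVCDecoderConfigurationRecord.
// sps and pps are raw NAL units WITHOUT the Annex B start code.
std::vector<uint8_t> avcSequenceHeaderBody(const std::vector<uint8_t>& sps,
                                           const std::vector<uint8_t>& pps) {
    if (sps.size() < 4 || pps.empty()) throw std::invalid_argument("invalid SPS/PPS");
    std::vector<uint8_t> body;
    body.push_back(0x17);    // frame type 1 (key frame) | codec ID 7 (AVC)
    body.push_back(0x00);    // AVCPacketType 0: sequence header
    putBE(body, 0, 3);       // composition time offset
    body.push_back(0x01);    // configurationVersion
    body.push_back(sps[1]);  // AVCProfileIndication
    body.push_back(sps[2]);  // profile_compatibility
    body.push_back(sps[3]);  // AVCLevelIndication
    body.push_back(0xFF);    // reserved bits | lengthSizeMinusOne = 3 (4-byte NALU lengths)
    body.push_back(0xE1);    // reserved bits | numOfSequenceParameterSets = 1
    putBE(body, static_cast<uint32_t>(sps.size()), 2);
    body.insert(body.end(), sps.begin(), sps.end());
    body.push_back(0x01);    // numOfPictureParameterSets
    putBE(body, static_cast<uint32_t>(pps.size()), 2);
    body.insert(body.end(), pps.begin(), pps.end());
    return body;
}

// Video body carrying one NAL unit whose start code has already been stripped.
std::vector<uint8_t> avcNaluBody(const std::vector<uint8_t>& nal, bool keyFrame) {
    std::vector<uint8_t> body;
    body.push_back(static_cast<uint8_t>(keyFrame ? 0x17 : 0x27));  // key / inter frame | AVC
    body.push_back(0x01);                                           // AVCPacketType 1: NALU
    putBE(body, 0, 3);                                              // composition time offset
    putBE(body, static_cast<uint32_t>(nal.size()), 4);              // NALU length prefix
    body.insert(body.end(), nal.begin(), nal.end());
    return body;
}
```

The sequence header body is 16 bytes plus the SPS and PPS lengths, and a frame body is 9 bytes plus the NAL length; these are the sizes to pass to RTMPPacket_Alloc().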

V. Implementation details:

1. Getting the YUV video data from CameraX in LiveManger.java:

```java
private void updateVideoCodecInfo(int degree) {
    camera2Helper.updatePreviewDegree(degree);
    if (mRtmpLivePusher != null) {
        int width = previewSize.getWidth();
        int height = previewSize.getHeight();
        // In portrait orientation the encoded frame is rotated, so swap width and height
        if (degree == 90 || degree == 270) {
            int temp = width;
            width = height;
            height = temp;
        }
        mRtmpLivePusher.setVideoCodecInfo(width, height, videoFrameRate, videoBitRate);
    }
}

@Override
public void onPreviewFrame(byte[] yuvData) {
    // Log.e(TAG, "onPreviewFrame:" + yuvData.length);
    if (yuvData != null && isLiving && mRtmpLivePusher != null) {
        mRtmpLivePusher.pushVideoData(yuvData);
    }
}
```

2. Configuring libx264 and encoding YUV into H.264 in VideoStreamPacket.cpp:

```cpp
void VideoStreamPacket::encodeVideo(int8_t *data) {
    // callbackStatusMsg("VideoStreamPacket encodeVideo", 0);
    lock_guard<mutex> lock(m_mutex);
    if (!pic_in) return;

    // Split the I420 (YUV420 planar) frame into its Y, U and V planes
    int offset = 0;
    memcpy(pic_in->img.plane[0], data, (size_t) m_frameLen);              // Y
    offset += m_frameLen;
    memcpy(pic_in->img.plane[1], data + offset, (size_t) m_frameLen / 4); // U
    offset += m_frameLen / 4;
    memcpy(pic_in->img.plane[2], data + offset, (size_t) m_frameLen / 4); // V

    // Encode the YUV picture into H.264 NAL units
    x264_nal_t *pp_nal;
    int pi_nal;
    x264_picture_t pic_out;
    if (x264_encoder_encode(videoCodec, &pp_nal, &pi_nal, pic_in, &pic_out) < 0) {
        return;  // encoding failed
    }

    int sps_len = 0, pps_len = 0;
    uint8_t sps[100] = {0};
    uint8_t pps[100] = {0};
    // Wrap the H.264 NAL units into RTMP packets
    for (int i = 0; i < pi_nal; ++i) {
        x264_nal_t nal = pp_nal[i];
        if (nal.i_type == NAL_SPS) {
            // Skip the 4-byte Annex B start code (00 00 00 01)
            sps_len = nal.i_payload - 4;
            memcpy(sps, nal.p_payload + 4, static_cast<size_t>(sps_len));
        } else if (nal.i_type == NAL_PPS) {
            pps_len = nal.i_payload - 4;
            memcpy(pps, nal.p_payload + 4, static_cast<size_t>(pps_len));
            // Assumes x264 outputs the SPS before the PPS in the same batch
            sendSpsPps(sps, pps, sps_len, pps_len);
        } else {
            sendFrame(nal.i_type, nal.p_payload, nal.i_payload);
        }
    }
}
```

3. Getting the PCM data from AudioRecord in LiveManger.java:

```java
private void initAudio() {
    if (ActivityCompat.checkSelfPermission(mContext, Manifest.permission.RECORD_AUDIO)
            != PackageManager.PERMISSION_GRANTED) {
        // TODO: request the missing permission with ActivityCompat#requestPermissions
        // and handle the result in onRequestPermissionsResult(int, String[], int[]).
        return;
    }
    // Open the native FAAC encoder first, so that getInputSamplesFromNative()
    // returns the encoder's real input size.
    // Note: AudioRecord expects an AudioFormat.CHANNEL_IN_* constant, while the native
    // encoder expects a channel count (1 or 2); this assumes the JNI layer converts it.
    mRtmpLivePusher.setAudioCodecInfo(sampleRate, channelConfig);
    // Assumes the native side returns a size in bytes (16-bit PCM = 2 bytes per sample)
    inputSamples = mRtmpLivePusher.getInputSamplesFromNative();
    int minBufferSize = AudioRecord.getMinBufferSize(sampleRate, channelConfig, audioFormat) * 2;
    int bufferSizeInBytes = Math.max(minBufferSize, inputSamples);
    mAudioRecord = new AudioRecord(MediaRecorder.AudioSource.MIC, sampleRate,
            channelConfig, audioFormat, bufferSizeInBytes);
}

class AudioTask implements Runnable {
    @Override
    public void run() {
        mAudioRecord.startRecording();
        // One buffer holds exactly one encoder input frame
        byte[] bytes = new byte[inputSamples];
        while (isLiving) {
            int len = mAudioRecord.read(bytes, 0, bytes.length);
            if (len > 0) {
                // The whole buffer is pushed, so a short read would carry stale samples
                mRtmpLivePusher.pushAudioData(bytes);
            }
        }
        mAudioRecord.stop();
    }
}
```

4. Configuring libfaac and encoding PCM into AAC in AudioStreamPacket.cpp:

```cpp
int AudioStreamPacket::setAudioEncInfo(int samplesInHZ, int channels) {
    callbackStatusMsg("AudioStreamPacket setAudioEncInfo", 0);
    m_channels = channels;
    // Open the FAAC encoder; it reports the input samples per frame and the max output size
    m_audioCodec = faacEncOpen(static_cast<unsigned long>(samplesInHZ),
                               static_cast<unsigned int>(channels),
                               &m_inputSamples,
                               &m_maxOutputBytes);
    if (!m_audioCodec) {
        return 0;  // same failure value as faacEncSetConfiguration()
    }
    m_buffer = new u_char[m_maxOutputBytes];
    // Set the encoder parameters
    faacEncConfigurationPtr config = faacEncGetCurrentConfiguration(m_audioCodec);
    config->mpegVersion = MPEG4;             // MPEG-4 AAC (also required when recording MP4 files)
    config->aacObjectType = LOW;             // AAC-LC
    config->inputFormat = FAAC_INPUT_16BIT;  // 16-bit PCM input
    config->outputFormat = 0;                // raw AAC without ADTS headers, as RTMP expects
    return faacEncSetConfiguration(m_audioCodec, config);
}

void AudioStreamPacket::encodeData(int8_t *data) {
    // callbackStatusMsg("AudioStreamPacket encodeData", 0);
    // Encode one frame; returns the number of encoded bytes
    int byteLen = faacEncEncode(m_audioCodec, reinterpret_cast<int32_t *>(data),
                                static_cast<unsigned int>(m_inputSamples),
                                m_buffer,
                                static_cast<unsigned int>(m_maxOutputBytes));
    if (byteLen > 0) {
        int bodySize = 2 + byteLen;
        auto *packet = new RTMPPacket();
        RTMPPacket_Alloc(packet, bodySize);
        // 0xAF: AAC, 44 kHz, 16-bit, stereo; 0xAE for mono
        packet->m_body[0] = 0xAF;
        if (m_channels == 1) {
            packet->m_body[0] = 0xAE;
        }
        packet->m_body[1] = 0x01;  // AACPacketType 1: raw AAC frame
        memcpy(&packet->m_body[2], m_buffer, static_cast<size_t>(byteLen));
        packet->m_hasAbsTimestamp = 0;
        packet->m_nBodySize = bodySize;
        packet->m_packetType = RTMP_PACKET_TYPE_AUDIO;
        packet->m_nChannel = 0x11;
        packet->m_headerType = RTMP_PACKET_SIZE_LARGE;
        if (mContext != nullptr && mAudioCallback != nullptr) {
            mAudioCallback(mContext, packet);
        } else {
            // Nobody takes ownership of the packet, so free it here
            RTMPPacket_Free(packet);
            delete packet;
        }
    }
}
```

5. RtmpPusherManger.cpp receives the RTMPPacket callbacks from AudioStreamPacket.cpp and VideoStreamPacket.cpp and passes the packets to RtmpInit.cpp, which pushes them into the PacketQueue:

```cpp
void RtmpPusherManger::initVideoPacket() {
    RtmpStatusMessage(this, "initVideoPacket", 0);
    if (videoStreamPacket == nullptr) {
        videoStreamPacket = new VideoStreamPacket();
    }
    videoStreamPacket->setRtmpStatusCallback(this, RtmpStatusMessage);
    videoStreamPacket->setVideoCallback(this, callbackRtmpPacket);
}

void RtmpPusherManger::initAudioPacket() {
    RtmpStatusMessage(this, "initAudioPacket", 0);
    if (audioStreamPacket == nullptr) {
        audioStreamPacket = new AudioStreamPacket();
    }
    audioStreamPacket->setRtmpStatusCallback(this, RtmpStatusMessage);
    audioStreamPacket->setAudioCallback(this, callbackRtmpPacket);
}

// Static callback: forwards packets from both encoders to this manager instance
void RtmpPusherManger::callbackRtmpPacket(void *context, RTMPPacket *packet) {
    if (context != nullptr && packet != nullptr) {
        RtmpPusherManger *pFmpegManger = static_cast<RtmpPusherManger *>(context);
        pFmpegManger->addRtmpPacket(packet);
    }
}

void RtmpPusherManger::addRtmpPacket(RTMPPacket *packet) {
    if (rtmpInit == nullptr) {
        // No RTMP session to take the packet, so free it instead of leaking it
        RTMPPacket_Free(packet);
        delete packet;
        return;
    }
    rtmpInit->addRtmpPacket(packet);
}
```

6. RtmpInit.cpp receives the RTMPPackets added by RtmpPusherManger.cpp and pushes them into the PacketQueue. A worker thread initializes the RTMP connection and, once that succeeds, keeps popping RTMPPackets from the PacketQueue and sends each one with RTMP_SendPacket().

```cpp
void RtmpInit::addRtmpPacket(RTMPPacket *packet) {
    // callbackStatusMsg("Rtmp addRtmpPacket", 0);
    // Timestamp in milliseconds, relative to the start of the push
    packet->m_nTimeStamp = RTMP_GetTime() - start_time;
    packetQueue.push(packet);
}

void RtmpInit::startThread() {
    callbackStatusMsg("Rtmp startThread", 0);
    LOGE("murl:%s", mUrl);
    char *url = const_cast<char *>(mUrl);
    RTMP *rtmp = nullptr;
    do {
        rtmp = RTMP_Alloc();
        if (!rtmp) {
            callbackStatusMsg("Rtmp create fail", -1);
            break;
        }
        RTMP_Init(rtmp);
        rtmp->Link.timeout = 5;  // connection timeout in seconds
        int ret = RTMP_SetupURL(rtmp, url);
        if (!ret) {
            callbackStatusMsg("Rtmp SetupURL fail", ret);
            break;
        }
        // Enable publish (output) mode; must be called before RTMP_Connect()
        RTMP_EnableWrite(rtmp);
        ret = RTMP_Connect(rtmp, 0);
        if (!ret) {
            callbackStatusMsg("Rtmp Connect fail", ret);
            break;
        }
        ret = RTMP_ConnectStream(rtmp, 0);
        if (!ret) {
            callbackStatusMsg("Rtmp ConnectStream fail", ret);
            break;
        }
        // Start pushing
        isPushing = true;
        packetQueue.setRunning(true);
        // Get the audio header packet (the first audio frame) before sending data
        if (mContext != nullptr && mGetAudioTagCallback != nullptr) {
            mGetAudioTagCallback(mContext);
        }
        RTMPPacket *packet = nullptr;
        while (isPushing) {
            packetQueue.pop(packet);
            if (!isPushing) {
                break;
            }
            if (!packet) {
                continue;
            }
            packet->m_nInfoField2 = rtmp->m_stream_id;  // message stream ID
            ret = RTMP_SendPacket(rtmp, packet, 1);
            releasePackets(packet);
            packet = nullptr;  // prevents a double free after the loop
            if (!ret) {
                LOGE("RTMP_SendPacket fail...");
                callbackStatusMsg("RTMP_SendPacket fail...", -2);
                break;
            }
        }
        // Frees a packet that was popped just before isPushing became false
        releasePackets(packet);
    } while (0);
    isPushing = false;
    packetQueue.setRunning(false);
    packetQueue.clear();
    // Release the RTMP session
    if (rtmp) {
        RTMP_Close(rtmp);
        RTMP_Free(rtmp);
    }
    delete[] url;  // assumes mUrl was allocated with new char[]
}
```
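The PacketQueue class itself is not shown. Based only on how startThread() uses it (push(), a blocking pop(), setRunning() and clear()), a minimal stand-in could look like the following. `BlockingQueue` is a hypothetical name; this version drops items pushed while the queue is stopped and hands every discarded item to a release callback, which is one reasonable policy rather than the project's confirmed behavior.

```cpp
#include <condition_variable>
#include <cstddef>
#include <functional>
#include <mutex>
#include <queue>
#include <utility>

// Minimal thread-safe blocking queue (hypothetical stand-in for PacketQueue).
template <typename T>
class BlockingQueue {
public:
    explicit BlockingQueue(std::function<void(T&)> release = nullptr)
        : release_(std::move(release)) {}

    // Start or stop the queue; stopping wakes any consumer blocked in pop().
    void setRunning(bool running) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            running_ = running;
        }
        cond_.notify_all();
    }

    // Items pushed while the queue is stopped are released instead of queued.
    void push(T item) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (!running_) {
            if (release_) release_(item);
            return;
        }
        queue_.push(std::move(item));
        cond_.notify_one();
    }

    // Blocks until an item is available; returns false if stopped while empty.
    bool pop(T& out) {
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] { return !queue_.empty() || !running_; });
        if (queue_.empty()) return false;
        out = std::move(queue_.front());
        queue_.pop();
        return true;
    }

    // Releases and removes every queued item.
    void clear() {
        std::lock_guard<std::mutex> lock(mutex_);
        while (!queue_.empty()) {
            if (release_) release_(queue_.front());
            queue_.pop();
        }
    }

    size_t size() {
        std::lock_guard<std::mutex> lock(mutex_);
        return queue_.size();
    }

private:
    std::mutex mutex_;
    std::condition_variable cond_;
    std::queue<T> queue_;
    bool running_ = false;
    std::function<void(T&)> release_;
};
```

With `T = RTMPPacket *`, the release callback would call RTMPPacket_Free() and then delete the packet, and the consumer loop could be written as `while (packetQueue.pop(packet)) { ... }`, so that a call to setRunning(false) from another thread wakes it up for shutdown.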
