0,LoFTR

Open-source code for the CVPR 2021 paper "LoFTR: Detector-Free Local Feature Matching with Transformers".

1,Project page

LoFTR: Detector-Free Local Feature Matching with Transformers

2,GitHub page

GitHub - zju3dv/LoFTR: Code for "LoFTR: Detector-Free Local Feature Matching with Transformers", CVPR 2021, T-PAMI 2022

3,Set up the environment

Create the environment with a single command:

conda env create -f environment.yaml

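The contents of environment.yaml are shown below. It pulls in Python, cudatoolkit, PyTorch, and the pip packages listed in requirements.txt, so creating the environment takes a while: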

name: loftr
channels:
  # - https://dx-mirrors.sensetime.com/anaconda/cloud/pytorch
  - pytorch
  - conda-forge
  - defaults
dependencies:
  - python=3.8
  - cudatoolkit=10.2
  - pytorch=1.8.1
  - pip
  - pip:
    - -r requirements.txt
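Once the environment has been created and activated, a quick sanity check can confirm that the packages the demo imports are actually available. The sketch below is not part of the LoFTR repository: `check_environment` is a hypothetical helper that reports whether each module can be found and, if PyTorch is present, its version and whether CUDA is usable (the demo script calls `.cuda()`, so it needs a working GPU setup).

```python
import importlib.util
import sys


def check_environment(modules=("torch", "cv2", "numpy", "matplotlib")):
    """Report which of the given modules can be found in this interpreter.

    Hypothetical helper for this walkthrough, not a LoFTR API.
    """
    report = {"python": "{}.{}".format(*sys.version_info[:2])}
    for name in modules:
        # find_spec returns None when a top-level module is not installed.
        report[name] = importlib.util.find_spec(name) is not None
    if report.get("torch"):
        import torch  # imported lazily so the check also works without PyTorch

        report["torch_version"] = torch.__version__
        report["cuda_available"] = torch.cuda.is_available()
    return report
```

Calling `print(check_environment())` inside the activated loftr environment should show `True` for every module; a `False` entry or `cuda_available: False` suggests the environment needs attention before running the demo.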

4,Download the models

The authors provide a download link for the data; the weights folder there contains the model files:

https://drive.google.com/drive/folders/1DOcOPZb3-5cWxLqn256AhwUVjBPifhuf?usp=sharing

After downloading the model files, place the weights folder in the LoFTR directory.
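Before running the demo, it can help to confirm that the checkpoints ended up where the script expects them. This is a minimal sketch: the two file names are the ones loaded by the demo script in section 6, while `missing_checkpoints` is a hypothetical helper introduced here for illustration.

```python
from pathlib import Path

# Checkpoint names as loaded by the demo script in section 6.
EXPECTED_CHECKPOINTS = ("indoor_ds.ckpt", "outdoor_ds.ckpt")


def missing_checkpoints(repo_root="."):
    """Return the expected checkpoint paths that are absent from repo_root/weights."""
    weights_dir = Path(repo_root) / "weights"
    return [
        weights_dir / name
        for name in EXPECTED_CHECKPOINTS
        if not (weights_dir / name).is_file()
    ]
```

Run from the LoFTR directory, `missing_checkpoints()` returns an empty list when both files are in place. If you only plan to use one of the two models, the other one being listed as missing is harmless.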

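5,Set up the data

Inside the LoFTR/demo folder, create two folders named images and output.

Put the photos you want to match into images.

output holds the results.

Any two images that show overlapping views of the same scene will do.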
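The folder setup above can also be done from Python. The following is a rough sketch, assuming it is run from the LoFTR directory; `prepare_demo_dirs` and the list of image suffixes are illustrative choices, not something the repository provides.

```python
from pathlib import Path

# Assumption: the image formats used in this walkthrough.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def prepare_demo_dirs(repo_root="."):
    """Create demo/images and demo/output if needed; return the images found."""
    demo_dir = Path(repo_root) / "demo"
    images_dir = demo_dir / "images"
    output_dir = demo_dir / "output"
    images_dir.mkdir(parents=True, exist_ok=True)
    output_dir.mkdir(parents=True, exist_ok=True)
    # Sorted so the result is stable from run to run.
    return sorted(
        p for p in images_dir.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES
    )
```

If the returned list has fewer than two entries, there is nothing to match yet; copy a pair of overlapping photos into demo/images first.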

6,Test the demo

Because I tested on photos taken indoors, I used the indoor weights. Run the following program from the LoFTR directory:

import torch
import cv2
import numpy as np
import matplotlib.cm as cm
import os

from src.utils.plotting import make_matching_figure
from src.loftr import LoFTR, default_cfg

if __name__ == '__main__':
    # Choose according to the scene in your photos and the pretrained model
    # you downloaded. Options: 'indoor', 'outdoor'.
    image_type = 'indoor'

    # Change these to your own image paths.
    img0_pth = "demo/images/mouse (1).jpg"
    img1_pth = "demo/images/mouse (2).jpg"
    # img0_pth = "demo/images/1.png"
    # img1_pth = "demo/images/2.png"
    image_pair = [img0_pth, img1_pth]

    # The default config uses dual-softmax.
    # The outdoor and indoor models share the same config.
    # You can change the defaults, such as the threshold and the coarse match type.
    matcher = LoFTR(config=default_cfg)

    # Load the pretrained model.
    if image_type == 'indoor':
        matcher.load_state_dict(torch.load("weights/indoor_ds.ckpt")['state_dict'])
    elif image_type == 'outdoor':
        matcher.load_state_dict(torch.load("weights/outdoor_ds.ckpt")['state_dict'])
    else:
        raise ValueError("Invalid image_type.")
    matcher = matcher.eval().cuda()

    # Read the image pair as grayscale (rerun from here if you change the images).
    img0_raw = cv2.imread(image_pair[0], cv2.IMREAD_GRAYSCALE)
    img1_raw = cv2.imread(image_pair[1], cv2.IMREAD_GRAYSCALE)

    # Check that the images were read successfully.
    if img0_raw is None:
        raise FileNotFoundError(f"Could not find or read the image at {image_pair[0]}.")
    if img1_raw is None:
        raise FileNotFoundError(f"Could not find or read the image at {image_pair[1]}.")

    img0_raw = cv2.resize(img0_raw, (640, 480))
    img1_raw = cv2.resize(img1_raw, (640, 480))

    # Add batch and channel dimensions and scale pixel values to [0, 1].
    img0 = torch.from_numpy(img0_raw)[None][None].cuda() / 255.
    img1 = torch.from_numpy(img1_raw)[None][None].cuda() / 255.
    batch = {'image0': img0, 'image1': img1}

    # Run inference with LoFTR and collect the predictions.
    with torch.no_grad():
        matcher(batch)
        mkpts0 = batch['mkpts0_f'].cpu().numpy()
        mkpts1 = batch['mkpts1_f'].cpu().numpy()
        mconf = batch['mconf'].cpu().numpy()

    # Draw the matches, colored by confidence.
    color = cm.jet(mconf, alpha=0.7)
    text = [
        'LoFTR',
        'Matches: {}'.format(len(mkpts0)),
    ]
    fig = make_matching_figure(img0_raw, img1_raw, mkpts0, mkpts1, color, mkpts0, mkpts1, text)

    # Also save a high-resolution PDF to demo/output.
    make_matching_figure(img0_raw, img1_raw, mkpts0, mkpts1, color, mkpts0, mkpts1, text, path="demo/output/LoFTR-colab-demo.pdf")

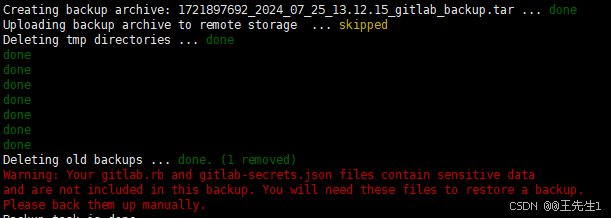
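The demo draws every match LoFTR returns, colored by confidence. If the figure looks cluttered, one option is to keep only the more confident matches before plotting. The following is a minimal NumPy sketch; `filter_matches` and the 0.5 threshold are illustrative assumptions, not part of LoFTR.

```python
import numpy as np


def filter_matches(mkpts0, mkpts1, mconf, min_conf=0.5):
    """Keep only the matches whose confidence is at least min_conf.

    mkpts0 and mkpts1 are (N, 2) arrays of matched keypoints and mconf is the
    (N,) array of confidences, as produced by the demo script.
    """
    mconf = np.asarray(mconf)
    keep = mconf >= min_conf  # boolean mask over the N matches
    return np.asarray(mkpts0)[keep], np.asarray(mkpts1)[keep], mconf[keep]
```

In the demo script this would be applied to `mkpts0`, `mkpts1`, and `mconf` right after they are converted to NumPy arrays and before `cm.jet(mconf, alpha=0.7)` is called, so that the colors and the match count reflect the filtered set.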

7,Results

After running the program above, the PDF file LoFTR-colab-demo.pdf appears in the demo/output folder.