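Project background

The goal of this project is to predict labels for a dataset of sentiment reviews. The data must be cleaned before training; that step is already done and is described in NLP_情感分类_数据清洗.

An earlier post solved the task with classical machine learning; see NLP_情感分类_机器学习方案.

Below, the cleaned dataset is handled with a pretrain-then-fine-tune approach.

Code

Imports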

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from tqdm import tqdm

import pickle

import gc

import os

from sklearn.metrics import accuracy_score,f1_score,recall_score,precision_score

from transformers import AutoTokenizer, AutoModelForMaskedLM, AutoModel

import torch

import torch.nn as nn

from torch.utils.data import Dataset

import torch.utils.data as D

from sklearn.model_selection import train_test_split

import warnings

warnings.filterwarnings('ignore')

Model and training hyperparameters

batch_size = 128

max_seq = 128

Epoch = 2

lr = 2e-5

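debug_mode = False  # if enabled, only a small slice of the data is loaded, for quickly debugging the whole pipeline

num_workers = 0  # number of DataLoader worker processes; set to 0 here because multi-process loading is unreliable on Windows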

seed = 4399

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

MODEL_PATH = 'pre_model/'  # 'juliensimon/reviews-sentiment-analysis'  # local directory of the pretrained weights, or a remote model id

tokenizer = AutoTokenizer.from_pretrained(MODEL_PATH)
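The `seed` value is defined above, but the code shown in this post never passes it to any library. A minimal sketch of how it could be applied, assuming reproducible runs are wanted; `set_seed` is a hypothetical helper name, not part of the original code:

```python
import random

import numpy as np
import torch


def set_seed(seed):
    """Seed Python, NumPy and PyTorch random number generators (illustrative helper)."""
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)
    if torch.cuda.is_available():
        # also seed every visible GPU
        torch.cuda.manual_seed_all(seed)


set_seed(4399)
first = torch.rand(3)
set_seed(4399)
second = torch.rand(3)
print(torch.equal(first, second))  # True: the same seed gives the same draws
```

Seeding alone does not guarantee bit-identical GPU training, since some CUDA kernels are non-deterministic, but it removes the most common sources of run-to-run variation.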

Define the dataset

class TextDataSet(Dataset):
    def __init__(self, df, tokenizer, max_seq=128, debug_mode=False):
        self.max_seq = max_seq
        self.df = df
        self.tokenizer = tokenizer
        self.debug_mode = debug_mode
        if self.debug_mode:
            # keep only the first 100 rows for quick debugging
            self.df = self.df[:100]

    def __len__(self):
        return len(self.df)

    def __getitem__(self, item):
        sent = self.df['text'].iloc[item]
        # pad or truncate every sentence to exactly max_seq tokens;
        # padding='max_length' replaces the deprecated pad_to_max_length=True
        enc_code = self.tokenizer.encode_plus(
            sent,
            max_length=self.max_seq,
            padding='max_length',
            truncation=True,
        )
        input_ids = enc_code['input_ids']
        input_mask = enc_code['attention_mask']
        label = self.df['label'].iloc[item]
        return (torch.LongTensor(input_ids), torch.LongTensor(input_mask), int(label))

Define the model

class Model(nn.Module):
    def __init__(self, MODEL_PATH=None):
        super(Model, self).__init__()
        self.model = AutoModel.from_pretrained(MODEL_PATH)
        self.fc = nn.Linear(768, 2)

    def forward(self, input_ids, input_mask):
        sentence_emb = self.model(input_ids, attention_mask=input_mask).last_hidden_state  # [batch, seq_len, emb_dim]
        sentence_emb = torch.mean(sentence_emb, dim=1)
        out = self.fc(sentence_emb)
        return out

Load the data
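The `forward` method averages `last_hidden_state` over every position in the sequence, so padding tokens also contribute to the sentence embedding. A common alternative, shown here only as a sketch and not as the author's method, weights the average by the attention mask. `masked_mean_pool` is a hypothetical helper name, and the tensors are toy values:

```python
import torch


def masked_mean_pool(last_hidden_state, attention_mask):
    """Average token embeddings over real tokens only (illustrative helper).

    last_hidden_state: [batch, seq_len, emb_dim]
    attention_mask:    [batch, seq_len], 1 for real tokens and 0 for padding
    """
    mask = attention_mask.unsqueeze(-1).to(last_hidden_state.dtype)  # [batch, seq_len, 1]
    summed = (last_hidden_state * mask).sum(dim=1)                   # [batch, emb_dim]
    counts = mask.sum(dim=1).clamp(min=1.0)                          # avoid division by zero
    return summed / counts


# One sentence with two real tokens and one padding token.
hidden = torch.tensor([[[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]])
mask = torch.tensor([[1, 1, 0]])

pooled = masked_mean_pool(hidden, mask)
plain = hidden.mean(dim=1)
print(pooled)  # average of the two real tokens only
print(plain)   # plain mean, pulled toward the padding vector
```

Whether this helps on this dataset is an empirical question; the sketch only shows how the two pooling choices differ.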

df = pd.read_csv('data/sentiment_analysis_clean.csv')

df = df.dropna()

Create the training and test datasets

train_df, test_df = train_test_split(df, test_size=0.2, random_state=2024)

# Create the datasets
train_dataset = TextDataSet(train_df, tokenizer, debug_mode=debug_mode)

test_dataset = TextDataSet(test_df, tokenizer, debug_mode=debug_mode)

# Note the num_workers argument: multi-process loading is unreliable on Windows, so it is set to 0 here; on a server it can be set to a larger value
train_loader = D.DataLoader(train_dataset, batch_size=batch_size, shuffle=True, num_workers=num_workers)

test_loader = D.DataLoader(test_dataset, batch_size=batch_size, shuffle=False, num_workers=num_workers)
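Before training, it can help to check what one batch looks like. The sketch below uses a hypothetical `ToyDataset` that returns the same `(input_ids, input_mask, label)` tuple layout as `TextDataSet`, but with made-up token ids so it runs without a tokenizer or a CSV file:

```python
import torch
from torch.utils.data import Dataset, DataLoader


class ToyDataset(Dataset):
    """Stand-in for TextDataSet with fake ids (illustrative only)."""

    def __init__(self, n_items=10, max_seq=8):
        self.n_items = n_items
        self.max_seq = max_seq

    def __len__(self):
        return self.n_items

    def __getitem__(self, item):
        real_len = 1 + item % self.max_seq  # pretend sentence length
        pad_len = self.max_seq - real_len
        input_ids = [1] * real_len + [0] * pad_len   # fake token ids, 0 = padding
        input_mask = [1] * real_len + [0] * pad_len  # 1 = real token, 0 = padding
        return torch.LongTensor(input_ids), torch.LongTensor(input_mask), int(item % 2)


toy_loader = DataLoader(ToyDataset(), batch_size=4, shuffle=False, num_workers=0)
input_ids, input_mask, label = next(iter(toy_loader))

# The default collate function stacks the tensors and turns the int labels into a LongTensor.
print(input_ids.shape, input_mask.shape, label.shape, label.dtype)
```

The same three-line check (`next(iter(...))` followed by printing shapes) can be run against the real `train_loader`.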

Instantiate the model

model = Model(MODEL_PATH)

Print the model structure

model

Train the model

criterion = nn.CrossEntropyLoss()

model = model.to(device)

optimizer = torch.optim.AdamW(model.parameters(), lr=lr, betas=(0.5, 0.999))

scaler = torch.cuda.amp.GradScaler()

#*****************************************************train*********************************************

for epoch in range(Epoch):
    model.train()
    correct = 0
    total = 0
    for i, batch_data in enumerate(train_loader):
        (input_ids, input_mask, label) = batch_data
        input_ids = input_ids.to(device)
        input_mask = input_mask.to(device)
        label = label.to(device)

        optimizer.zero_grad()
        with torch.cuda.amp.autocast():
            logit = model(input_ids, input_mask)
            loss = criterion(logit, label)
        scaler.scale(loss).backward()
        scaler.step(optimizer)
        scaler.update()

        _, predicted = torch.max(logit.data, 1)
        total += label.size(0)
        correct += (predicted == label).sum().item()
        if i % 10 == 0:
            acc = 100 * correct / total
            print(f'Epoch [{epoch+1}/{Epoch}], Step [{i+1}/{len(train_loader)}], Loss: {loss.item():.4f}, Accuracy: {acc:.2f}%')

Results on the test set
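The loop relies on `torch.cuda.amp` for mixed precision, which only has an effect on a GPU. A minimal sketch of a single such step on a toy `nn.Linear` model, assuming the same code path should also run on a CPU-only machine; `use_amp`, `toy_model` and the random data are illustrative and not part of the original code:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
use_amp = torch.cuda.is_available()  # switch mixed precision off when there is no GPU
device = torch.device('cuda' if use_amp else 'cpu')

toy_model = nn.Linear(4, 2).to(device)
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.AdamW(toy_model.parameters(), lr=1e-2)
scaler = torch.cuda.amp.GradScaler(enabled=use_amp)

x = torch.randn(8, 4, device=device)
y = torch.randint(0, 2, (8,), device=device)

before = toy_model.weight.detach().clone()

optimizer.zero_grad()
with torch.cuda.amp.autocast(enabled=use_amp):
    loss = criterion(toy_model(x), y)  # forward pass, in reduced precision when enabled
scaler.scale(loss).backward()          # scale the loss so small fp16 gradients do not underflow
scaler.step(optimizer)                 # unscale gradients, then run optimizer.step()
scaler.update()                        # adjust the scale factor for the next step

after = toy_model.weight.detach().clone()
print(f'loss={loss.item():.4f}, weights changed: {not torch.equal(before, after)}')
```

With `enabled=False` the scaler and the autocast context become pass-throughs, so the step behaves like an ordinary full-precision update.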

#*****************************************************Test*********************************************

correct = 0

total = 0

model.eval()
with torch.no_grad():
    for batch_data in test_loader:
        (input_ids, input_mask, label) = batch_data
        input_ids = input_ids.to(device)
        input_mask = input_mask.to(device)
        label = label.to(device)

        outputs = model(input_ids, input_mask)
        _, predicted = torch.max(outputs.data, 1)
        total += label.size(0)
        correct += (predicted == label).sum().item()

print('#'*30 + 'Test Accuracy:{:7.3f} '.format(100 * correct / total) + '#'*30)
print()

Similar projects

Alibaba Cloud: NLP from Scratch [Text Classification with Machine Learning]

Alibaba Cloud: NLP from Scratch [Text Classification with Deep Learning 3: BERT]

These are also worth studying for reference.
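The imports at the top bring in `accuracy_score`, `f1_score`, `recall_score` and `precision_score`, but the evaluation only reports accuracy. A minimal sketch of how the other metrics could be computed, assuming the predictions and labels have been collected into plain Python lists (for example by extending two lists with `predicted.cpu().tolist()` and `label.cpu().tolist()` inside the test loop). The lists below are made-up values, and label 1 is assumed to be the positive class:

```python
from sklearn.metrics import accuracy_score, f1_score, recall_score, precision_score

# Toy stand-ins for the labels and predictions gathered over the test set.
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1]

acc = accuracy_score(y_true, y_pred)
prec = precision_score(y_true, y_pred)  # of the samples predicted 1, how many are really 1
rec = recall_score(y_true, y_pred)      # of the samples that are really 1, how many were found
f1 = f1_score(y_true, y_pred)           # harmonic mean of precision and recall

print(f'accuracy={acc:.3f} precision={prec:.3f} recall={rec:.3f} f1={f1:.3f}')
# accuracy=0.667 precision=0.667 recall=0.667 f1=0.667
```

Precision and recall are more informative than accuracy alone when the two sentiment classes are imbalanced.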

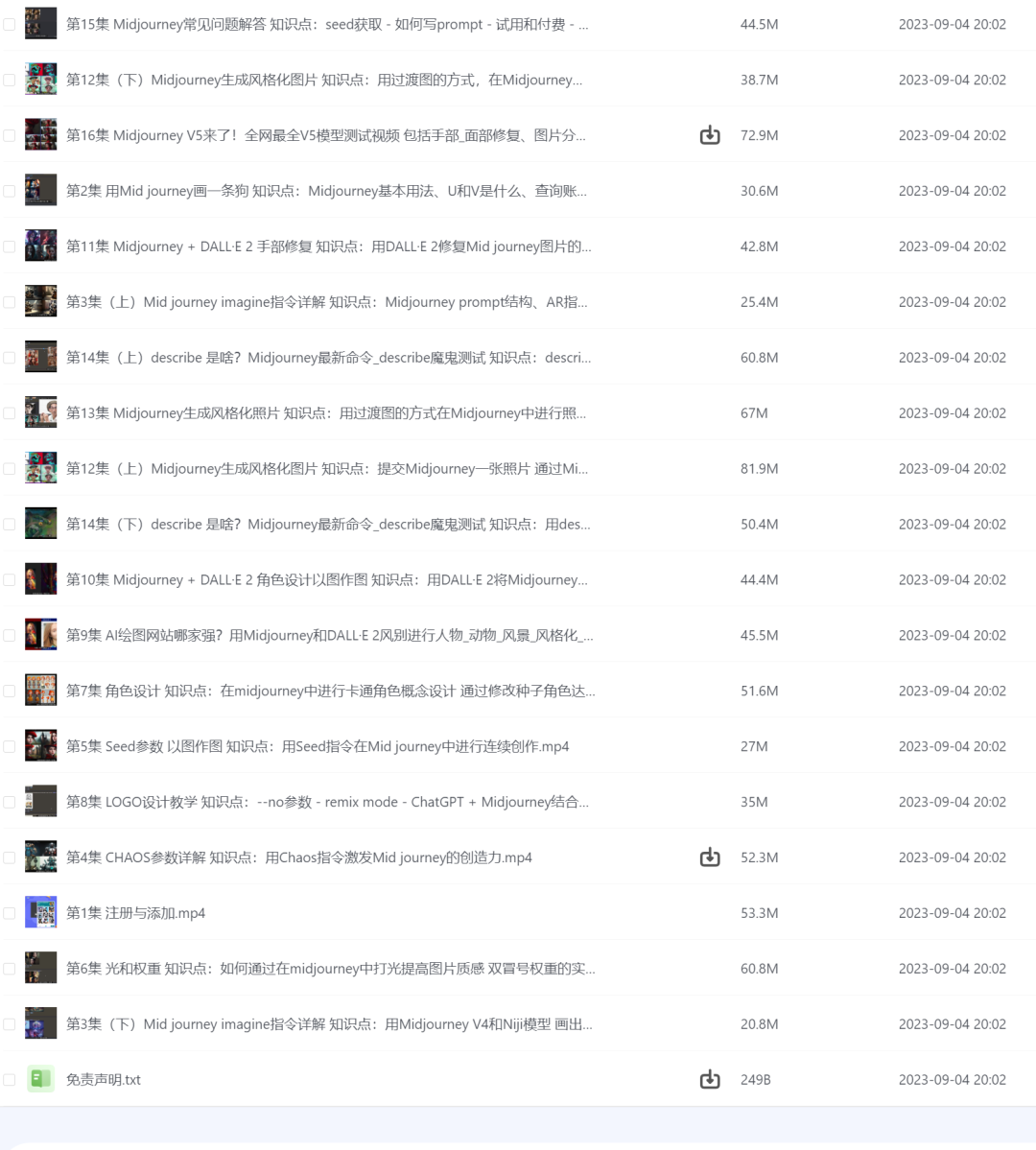

Study references:

深度之眼
