Table of Contents

- Recap of the TensorRT-LLM & Triton Server deployment

- Deployment overview

- Triton architecture

- Why use backends?

- The triton_model_repo directory structure

- Ensemble mode

- BLS mode

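The previous article walked through deploying TensorRT-LLM with Triton Server. This article briefly explains how Triton Inference Server is architected, so that configuring and extending it is easier to reason about.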

Recap of the TensorRT-LLM & Triton Server deployment

Deployment overview

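In the previous article we saw that deploying TRT-LLM with Triton takes a fairly involved sequence of commands. It boils down to four steps:

1. Download the model.
2. Convert and compile the model (TensorRT).
3. Configure the backends (Triton).
4. Start Triton Server.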

- Download the model

# Download the model

wget https://hf-mirror.com/hfd/hfd.sh

chmod a+x hfd.sh

export HF_ENDPOINT=https://hf-mirror.com

apt-get update

apt-get install -y aria2

aria2c --version

./hfd.sh meta-llama/Meta-Llama-3.1-8B-Instruct --hf_username Dong-Hua --hf_token "$HF_TOKEN" --tool aria2c -x 4

# Resume an interrupted download of a single shard

aria2c --header="Authorization: Bearer $HF_TOKEN" --console-log-level=error --file-allocation=none -x 4 -s 4 -k 1M -c 'https://hf-mirror.com/meta-llama/Meta-Llama-3.1-8B-Instruct/resolve/main/model-00002-of-00004.safetensors' -d '.' -o 'model-00002-of-00004.safetensors'

- Convert and compile the model (TensorRT)

# Convert the checkpoint format

python3 convert_checkpoint.py --model_dir /data/Meta-Llama-3.1-8B-Instruct \
    --output_dir ./trt_ckpts/llama3.1_checkpoint_gpu_tp \
    --dtype float16 \
    --tp_size 1 \
    --workers 8

# Build the engine

trtllm-build --checkpoint_dir ./trt_ckpts/llama3.1_checkpoint_gpu_tp \
    --output_dir ./trt_engines/llama3.1_8B_128K_fp16_1-gpu \
    --workers 8 \
    --gemm_plugin auto \
    --max_num_tokens 131072

This step produces two files: the compiled model engine (rank0.engine) and the model configuration file (config.json).
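Before copying the engine into the Triton repository, you may want to check that the build produced what you expect. The sketch below assumes, based on the rank0.engine file in the directory tree further down, that trtllm-build writes one config.json plus one rank<N>.engine per rank. `missing_engine_files` is a hypothetical helper and is not part of TensorRT-LLM.

```python
import os
import tempfile


def missing_engine_files(engine_dir: str, world_size: int = 1) -> list[str]:
    """Return the expected build outputs that are absent from engine_dir.

    Assumption: one config.json plus one rank<N>.engine per rank,
    where world_size = tp_size * pp_size.
    """
    expected = ["config.json"] + [f"rank{r}.engine" for r in range(world_size)]
    present = set(os.listdir(engine_dir)) if os.path.isdir(engine_dir) else set()
    return [name for name in expected if name not in present]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        # Simulate the output of a tp_size=1 build.
        for name in ("config.json", "rank0.engine"):
            open(os.path.join(d, name), "w").close()
        print(missing_engine_files(d))     # complete for a single rank
        print(missing_engine_files(d, 2))  # a 2-rank build would also need rank1.engine
```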

- Configure the backends (Triton)

# Before configuring, create triton_model_repo and copy the two files from the previous step into the corresponding model directory:

cd tensorrtllm_backend

mkdir triton_model_repo

cp -r all_models/inflight_batcher_llm/* triton_model_repo/

cp /data/TensorRT-LLM/examples/llama/trt_engines/llama3.1_8B_128K_fp16_1-gpu/* triton_model_repo/tensorrt_llm/1

# Configure the backends

python3 tools/fill_template.py -i triton_model_repo/postprocessing/config.pbtxt \
tokenizer_dir:/data/Meta-Llama-3.1-8B-Instruct,\
tokenizer_type:auto,\
triton_max_batch_size:64,\
postprocessing_instance_count:1

python3 tools/fill_template.py -i triton_model_repo/preprocessing/config.pbtxt \
tokenizer_dir:/data/Meta-Llama-3.1-8B-Instruct,\
tokenizer_type:auto,\
triton_max_batch_size:64,\
preprocessing_instance_count:1

python3 tools/fill_template.py -i triton_model_repo/tensorrt_llm_bls/config.pbtxt \
triton_max_batch_size:64,\
decoupled_mode:true,\
bls_instance_count:1

python3 tools/fill_template.py -i triton_model_repo/ensemble/config.pbtxt \
triton_max_batch_size:64

python3 tools/fill_template.py -i triton_model_repo/tensorrt_llm/config.pbtxt \
triton_backend:tensorrtllm,\
triton_max_batch_size:64,\
decoupled_mode:true,\
engine_dir:triton_model_repo/tensorrt_llm/1,\
max_queue_delay_microseconds:10000,\
batching_strategy:inflight_fused_batching

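Here a script fills in the configuration, but you can also edit the corresponding files by hand. Every change lands in files under the triton_model_repo directory. That is no coincidence. The core of a Triton deployment is the set of models in this repository and the backends that serve them, and that is the subject of this article.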
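To make the "script filling" concrete: the template config.pbtxt files contain `${name}` placeholders, and fill_template.py substitutes the `key:value` pairs you pass. The BLS model.py further down treats any parameter still starting with `${` as unset, which is consistent with this. What follows is a minimal sketch of the idea using Python's `string.Template`, written under that assumption. It is not the actual tools/fill_template.py, and the config fragment is made up.

```python
from string import Template


def parse_substitutions(spec: str) -> dict[str, str]:
    """Parse 'key:value,key:value' into a dict.

    Each pair is split on its first ':' only, so values such as
    '/data/Meta-Llama-3.1-8B-Instruct' stay intact. Values containing ','
    are not supported in this sketch.
    """
    result = {}
    for item in filter(None, spec.split(",")):
        key, sep, value = item.partition(":")
        if not sep:
            raise ValueError(f"expected key:value, got {item!r}")
        result[key.strip()] = value.strip()
    return result


def fill_template(pbtxt: str, spec: str) -> str:
    # safe_substitute leaves unknown ${...} placeholders untouched, so any
    # parameter you did not pass keeps its ${...} marker.
    return Template(pbtxt).safe_substitute(parse_substitutions(spec))


if __name__ == "__main__":
    # Made-up config.pbtxt fragment with ${...} placeholders.
    fragment = (
        "max_batch_size: ${triton_max_batch_size}\n"
        'parameters { key: "tokenizer_dir" value: { string_value: "${tokenizer_dir}" } }\n'
        "instance_group [ { count: ${preprocessing_instance_count} } ]\n"
    )
    print(fill_template(
        fragment,
        "tokenizer_dir:/data/Meta-Llama-3.1-8B-Instruct,triton_max_batch_size:64",
    ))
```

The `${preprocessing_instance_count}` placeholder survives in the output because it was not passed. That is why every placeholder a model needs has to appear in the command line.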

- Start Triton Server

# Start

python3 scripts/launch_triton_server.py \
    --world_size 1 \
    --model_repo=/data/tensorrtllm_backend/triton_model_repo/ \
    --log \
    --log-file=./triton_server.logs

# Stop

pkill tritonserver
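Loading a large engine can take a while, so wait for the server to report ready before sending traffic. Triton implements the KServe v2 readiness endpoint `GET /v2/health/ready` on its HTTP port (8000 by default, the port the curl examples in this article use). Below is a minimal polling sketch that uses only the standard library. `wait_until_ready` and its parameters are hypothetical names. The probe, sleep and clock hooks exist only so that the loop can be tested without a server.

```python
import time
import urllib.error
import urllib.request


def http_status(url: str) -> int:
    """Return the HTTP status of a GET request, or 0 if the server is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=2) as resp:
            return resp.status
    except urllib.error.HTTPError as err:  # server answered, but not with 2xx
        return err.code
    except OSError:  # connection refused, timeout, DNS failure (URLError is an OSError)
        return 0


def wait_until_ready(base_url: str = "http://localhost:8000", timeout_s: float = 600.0,
                     interval_s: float = 5.0, probe=http_status, sleep=time.sleep,
                     clock=time.monotonic) -> bool:
    """Poll /v2/health/ready until it returns 200 or timeout_s elapses."""
    deadline = clock() + timeout_s
    url = f"{base_url}/v2/health/ready"
    while True:
        if probe(url) == 200:
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)
```

For example, call `wait_until_ready()` right after launch_triton_server.py, and only start sending generate requests once it returns True.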

Triton architecture

The figure below shows the high-level architecture of Triton Inference Server.

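- The model repository is a file-system based repository of the models that Triton will make available for inference.

- Inference requests arrive at the server via HTTP/REST, GRPC or the C API and are then routed to the appropriate per-model scheduler.

- Triton implements multiple scheduling and batching algorithms that can be configured on a model-by-model basis.

- Each model's scheduler optionally batches inference requests and then passes them to the backend that corresponds to the model type. The backend performs inference using the inputs provided in the batched requests to produce the requested outputs, and the outputs are then returned.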
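The flow described in the list above can be modeled in a few lines of Python: route by model name, queue per model, form a batch, hand it to the backend. This is a toy for intuition only and is not Triton code. The real dynamic batcher can also wait up to a configurable delay (compare the max_queue_delay_microseconds setting filled in earlier) to collect larger batches, and it runs concurrently. The sketch models neither, and all names in it are hypothetical.

```python
from collections import defaultdict, deque
from typing import Callable


class ToyServer:
    """Toy request flow: route -> per-model queue -> batch -> backend."""

    def __init__(self):
        self.queues = defaultdict(deque)  # one request queue per model name
        self.backends = {}                # model name -> (batch function, max_batch_size)

    def load_model(self, name: str, backend: Callable[[list], list], max_batch_size: int):
        self.backends[name] = (backend, max_batch_size)

    def submit(self, model: str, payload):
        # Routing: each request goes to the queue of the model it names.
        if model not in self.backends:
            raise KeyError(f"unknown model {model!r}")
        self.queues[model].append(payload)

    def step(self, model: str) -> list:
        # Scheduling: take up to max_batch_size queued requests as one batch and
        # hand them to the backend, which returns one output per input.
        backend, max_batch_size = self.backends[model]
        queue = self.queues[model]
        batch = [queue.popleft() for _ in range(min(max_batch_size, len(queue)))]
        return backend(batch) if batch else []
```

With `max_batch_size=2` and three queued requests, the first `step` call processes two requests together and the second call processes the remaining one.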

Why use backends?

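As the architecture figure shows, every model deployed on Triton is served through some backend. A TensorRT model, for example, uses the tensorrt backend to start one or more model instances, and those instances run inference on the GPU or the CPU.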

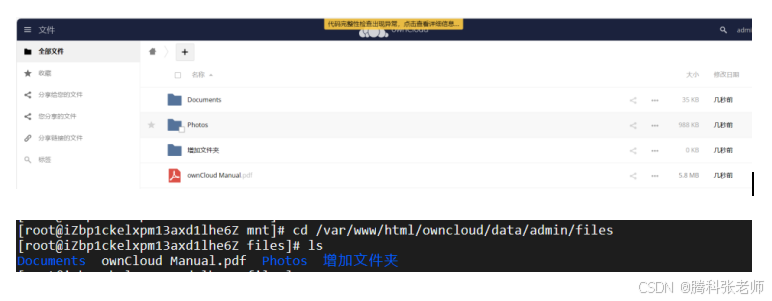
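The "one or more model instances" part can be sketched the same way. In the real server, the model's config.pbtxt selects the backend (for tensorrt_llm, the triton_backend:tensorrtllm substitution above fills this in). The instance count comes from the config's instance_group, which settings such as preprocessing_instance_count:1 appear to control. The registry, the class and the round-robin dispatch below are hypothetical simplifications: real Triton hands work to whichever instance is free.

```python
import itertools
from typing import Callable

# Hypothetical registry: backend name -> factory that builds one model instance.
BACKENDS: dict[str, Callable[[int], Callable[[list], list]]] = {
    # Stand-in "python" backend whose instances tag each input with their index.
    "python": lambda idx: (lambda batch: [f"instance{idx}:{x}" for x in batch]),
}


class LoadedModel:
    def __init__(self, backend: str, instance_count: int = 1):
        factory = BACKENDS[backend]  # KeyError if the backend is not installed
        self.instances = [factory(i) for i in range(instance_count)]
        self._next = itertools.cycle(self.instances)

    def infer(self, batch: list) -> list:
        # Round-robin over instances for simplicity.
        return next(self._next)(batch)
```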

The triton_model_repo directory structure

During deployment we edit a number of backend configuration files and then start the server. All of these files live under the triton_model_repo directory. Its layout is:

(base) [root@iv-ycl6gxrcwka8j6ujk4bc tensorrtllm_backend]# tree triton_model_repo/

triton_model_repo/
├── ensemble
│   ├── 1
│   └── config.pbtxt
├── postprocessing
│   ├── 1
│   │   ├── model.py
│   │   └── __pycache__
│   │       └── model.cpython-310.pyc
│   └── config.pbtxt
├── preprocessing
│   ├── 1
│   │   ├── model.py
│   │   └── __pycache__
│   │       └── model.cpython-310.pyc
│   └── config.pbtxt
├── tensorrt_llm
│   ├── 1
│   │   ├── config.json      # model configuration file
│   │   ├── model.py         # backend script
│   │   ├── __pycache__
│   │   │   └── model.cpython-310.pyc
│   │   └── rank0.engine     # model engine file
│   └── config.pbtxt         # backend configuration file; this is the file edited during deployment
└── tensorrt_llm_bls
    ├── 1
    │   ├── lib
    │   │   ├── decode.py
    │   │   ├── __pycache__
    │   │   │   ├── decode.cpython-310.pyc
    │   │   │   └── triton_decoder.cpython-310.pyc
    │   │   └── triton_decoder.py
    │   ├── model.py
    │   └── __pycache__
    │       └── model.cpython-310.pyc
    └── config.pbtxt

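The triton_model_repo repository contains five models, each served by a backend:

- ensemble model: chains several models into a pipeline; it can only run a static, predefined flow

- postprocessing model: post-processing (converts the generated token IDs back into text)

- preprocessing model: pre-processing (tokenizes the input text into token IDs)

- tensorrt_llm model: the model served from the compiled TensorRT engine

- tensorrt_llm_bls model: BLS (Business Logic Scripting) mode, which allows branching and therefore dynamic processing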

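Each model directory follows roughly the same layout (config.json and rank0.engine exist only in the tensorrt_llm model):

├── *****
│   ├── 1                    # model version directory
│   │   ├── config.json      # model configuration file
│   │   ├── model.py         # backend script
│   │   ├── __pycache__
│   │   │   └── model.cpython-310.pyc
│   │   └── rank0.engine     # model engine file
│   └── config.pbtxt         # backend configuration file; this is the file edited during deployment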
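Triton loads models from this directory convention. Each top-level directory is a model with a config.pbtxt, and numeric subdirectories such as `1` are its versions. The small sketch below walks a repository under that convention. It assumes every model has a config.pbtxt, and `list_models` is a hypothetical helper rather than a Triton API.

```python
import os


def list_models(repo: str) -> dict[str, list[int]]:
    """Map each model directory in a Triton-style repository to its version numbers.

    Assumes <repo>/<model>/config.pbtxt plus numeric version subdirectories
    such as <repo>/<model>/1/. Non-numeric entries (e.g. __pycache__) are ignored.
    """
    models = {}
    for name in sorted(os.listdir(repo)):
        model_dir = os.path.join(repo, name)
        if not os.path.isfile(os.path.join(model_dir, "config.pbtxt")):
            continue  # not treated as a model directory in this sketch
        models[name] = sorted(
            int(entry) for entry in os.listdir(model_dir)
            if entry.isdigit() and os.path.isdir(os.path.join(model_dir, entry))
        )
    return models


if __name__ == "__main__":
    repo = "triton_model_repo"
    if os.path.isdir(repo):
        for model, versions in list_models(repo).items():
            print(f"{model}: versions {versions}")
```

Run from the tensorrtllm_backend directory, this would be expected to list the five models above, each with version 1.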

Once deployment is finished, you can send requests to the LLM through either of two endpoints:

curl -X POST localhost:8000/v2/models/ensemble/generate -d '{"text_input": "What language model are you?", "max_tokens": 1000, "bad_words": "", "stop_words": "<|eot_id|>"}'

curl -X POST localhost:8000/v2/models/tensorrt_llm_bls/generate -d '{"text_input": "What language model are you?", "max_tokens": 1000, "bad_words": "", "stop_words": "<|eot_id|>"}'
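You can issue the same two requests from Python with only the standard library. This sketch builds exactly the JSON bodies used by the curl commands. The helper names are hypothetical. Reading the `text_output` field of the reply assumes the model's output tensor is named text_output, as it is in the ensemble config shown further down.

```python
import json
import urllib.request


def build_generate_request(model: str, prompt: str, max_tokens: int = 1000,
                           base_url: str = "http://localhost:8000") -> urllib.request.Request:
    """Build the same POST as the curl commands, for either entry point."""
    body = {
        "text_input": prompt,
        "max_tokens": max_tokens,
        "bad_words": "",
        "stop_words": "<|eot_id|>",  # Llama 3.1 end-of-turn token
    }
    return urllib.request.Request(
        f"{base_url}/v2/models/{model}/generate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, **kwargs) -> str:
    # Needs a running server; returns the "text_output" field of the JSON reply.
    with urllib.request.urlopen(build_generate_request(model, prompt, **kwargs)) as resp:
        return json.loads(resp.read())["text_output"]
```

`generate("ensemble", ...)` and `generate("tensorrt_llm_bls", ...)` send identical payloads. From the client's point of view, only the model name in the URL differs.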

The obvious question is: what is the difference between them?

Ensemble mode

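An ensemble is not really a model but a scheduler. It chains several models into a pipeline, and every request sent to it runs through that configured pipeline in the same fixed, static order.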

Contents of its config.pbtxt:

# Copyright 2024, NVIDIA CORPORATION & AFFILIATES. All rights reserved.

#

# Redistribution and use in source and binary forms, with or without

# modification, are permitted provided that the following conditions

# are met:

# * Redistributions of source code must retain the above copyright

# notice, this list of conditions and the following disclaimer.

# * Redistributions in binary form must reproduce the above copyright

# notice, this list of conditions and the following disclaimer in the

# documentation and/or other materials provided with the distribution.

# * Neither the name of NVIDIA CORPORATION nor the names of its

# contributors may be used to endorse or promote products derived

# from this software without specific prior written permission.

#

# THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS ``AS IS'' AND ANY

# EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

# IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR

# PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT OWNER OR

# CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL,

# EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO,

# PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR

# PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY

# OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

# (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

# OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

name: "ensemble"

platform: "ensemble"

max_batch_size: 64

input [
  {
    name: "text_input"
    data_type: TYPE_STRING
    dims: [ 1 ]
  },
  ...
  {
    name: "embedding_bias_weights"
    data_type: TYPE_FP32
    dims: [ -1 ]
    optional: true
  }
]
output [
  {
    name: "text_output"
    data_type: TYPE_STRING
    dims: [ -1 ]
  },
  ...
  {
    name: "batch_index"
    data_type: TYPE_INT32
    dims: [ 1 ]
  }
]
ensemble_scheduling {
  step [
    {
      model_name: "preprocessing"
      model_version: -1
      input_map {
        key: "QUERY"
        value: "text_input"
      }
      ...
    },
    {
      model_name: "tensorrt_llm"
      model_version: -1
      input_map {
        key: "input_ids"
        value: "_INPUT_ID"
      }
      ...
    },
    {
      model_name: "postprocessing"
      model_version: -1
      input_map {
        key: "TOKENS_BATCH"
        value: "_TOKENS_BATCH"
      }
      ...
    }
  ]
}

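The file defines an ensemble_scheduling block. When you call the ensemble model, its call chain follows exactly the steps configured there. The file declares the ensemble's inputs and outputs, and each step maps tensors between models: an output of one model is mapped to a named input of the next (input_map on the receiving step, together with a matching output_map on the producing step). The listing above is abridged; see the full configuration file for details.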
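The wiring described above can be simulated with plain dictionaries. In this sketch, following Triton's ensemble semantics, each step's input_map maps a model input name to an ensemble tensor name, and output_map maps a model output name back to one. The three model functions are toy stand-ins. The output names (INPUT_ID, output_ids, OUTPUT) are assumptions, because the config above elides the output_map entries.

```python
from typing import Callable

# A toy "model": takes named input tensors, returns named output tensors.
Model = Callable[[dict], dict]


def run_ensemble(steps: list[dict], models: dict[str, Model], request: dict) -> dict:
    """Run ensemble steps in order, wiring tensors through input_map/output_map.

    input_map:  {model input name: ensemble tensor name}
    output_map: {model output name: ensemble tensor name}
    """
    tensors = dict(request)  # ensemble-level tensors, seeded with the request inputs
    for step in steps:
        inputs = {name: tensors[src] for name, src in step["input_map"].items()}
        outputs = models[step["model_name"]](inputs)
        for name, dst in step["output_map"].items():
            tensors[dst] = outputs[name]
    return tensors


# Toy stand-ins for the three real models (hypothetical logic).
VOCAB = ["<unk>", "hello", "world"]
MODELS: dict[str, Model] = {
    # "Tokenize": map each word to its index in VOCAB (0 if unknown).
    "preprocessing": lambda x: {
        "INPUT_ID": [VOCAB.index(w) if w in VOCAB else 0 for w in x["QUERY"].split()]
    },
    # "Generate": just reverse the token IDs.
    "tensorrt_llm": lambda x: {"output_ids": list(reversed(x["input_ids"]))},
    # "Detokenize": map IDs back to words.
    "postprocessing": lambda x: {"OUTPUT": " ".join(VOCAB[i] for i in x["TOKENS_BATCH"])},
}

# Mirrors the ensemble_scheduling steps above; the output_map names are assumed.
STEPS = [
    {"model_name": "preprocessing",
     "input_map": {"QUERY": "text_input"}, "output_map": {"INPUT_ID": "_INPUT_ID"}},
    {"model_name": "tensorrt_llm",
     "input_map": {"input_ids": "_INPUT_ID"}, "output_map": {"output_ids": "_TOKENS_BATCH"}},
    {"model_name": "postprocessing",
     "input_map": {"TOKENS_BATCH": "_TOKENS_BATCH"}, "output_map": {"OUTPUT": "text_output"}},
]

if __name__ == "__main__":
    print(run_ensemble(STEPS, MODELS, {"text_input": "hello world"})["text_output"])
```

Because `STEPS` is a fixed list, every request takes the same path. There is nowhere to express "call this model only if ...".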

BLS mode

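Alongside the static Ensemble mode there is a dynamic one: BLS (Business Logic Scripting). Compared with the static mode, a BLS model adds a model.py script, and its dynamic behavior comes entirely from the conditional logic in that script.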

Structure of the model.py script:

# Copyright 2024, NVIDIA CORPORATION & AFFILIATES. All rights reserved.

#

# Redistribution and use in source and binary forms, with or without

# modification, are permitted provided that the following conditions

# are met:

# * Redistributions of source code must retain the above copyright

# notice, this list of conditions and the following disclaimer.

# * Redistributions in binary form must reproduce the above copyright

# notice, this list of conditions and the following disclaimer in the

# documentation and/or other materials provided with the distribution.

# * Neither the name of NVIDIA CORPORATION nor the names of its

# contributors may be used to endorse or promote products derived

# from this software without specific prior written permission.

#

# THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS ``AS IS'' AND ANY

# EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

# IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR

# PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT OWNER OR

# CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL,

# EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO,

# PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR

# PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY

# OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

# (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

# OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

import json
import traceback

import triton_python_backend_utils as pb_utils

from lib.triton_decoder import TritonDecoder


def get_valid_param_value(param, default_value=''):
    # Unfilled template placeholders ("${...}") and empty strings count as unset.
    value = param.get('string_value', '')
    return default_value if value.startswith('${') or value == '' else value


class TritonPythonModel:

    def initialize(self, args):

        # Parse model configs
        model_config = json.loads(args['model_config'])

        params = model_config['parameters']

        accumulate_tokens_str = get_valid_param_value(
            params.get('accumulate_tokens', {}))
        self.accumulate_tokens = accumulate_tokens_str.lower() in [
            'true', 'yes', '1', 't'
        ]

        self.decoupled = pb_utils.using_decoupled_model_transaction_policy(
            model_config)

        self.logger = pb_utils.Logger

        default_tensorrt_llm_model_name = 'tensorrt_llm'
        self.llm_model_name = get_valid_param_value(
            params.get('tensorrt_llm_model_name', {}),
            default_tensorrt_llm_model_name)

        self.draft_llm_model_name = get_valid_param_value(
            params.get('tensorrt_llm_draft_model_name', {}), None)

        self.multimodal_encoders_name = get_valid_param_value(
            params.get('multimodal_encoders_name', {}), None)

        self.decoder = TritonDecoder(
            streaming=self.decoupled,
            accumulate=self.accumulate_tokens,
            preproc_model_name="preprocessing",
            postproc_model_name="postprocessing",
            llm_model_name=self.llm_model_name,
            draft_llm_model_name=self.draft_llm_model_name,
            multimodal_encoders_name=self.multimodal_encoders_name)

    def execute(self, requests):

        responses = []

        for request in requests:
            # In decoupled (streaming) mode, responses go through a response
            # sender instead of being returned from execute().
            if self.decoupled:
                response_sender = request.get_response_sender()
            try:

                req = self.decoder.convert_triton_request(request)
                req.validate()
                speculative_decode = (req.num_draft_tokens is not None
                                      and req.num_draft_tokens[0][0] > 0)
                if speculative_decode and (self.draft_llm_model_name is None
                                           or self.draft_llm_model_name == ""):
                    raise Exception(
                        "cannot perform speculative decoding without draft model"
                    )
                is_multimodal = req.image_input is not None

                if speculative_decode and is_multimodal:
                    raise Exception(
                        "Multimodal and speculative decoding is not currently supported"
                    )
                # decode() runs preprocessing, the LLM (optionally with the draft
                # model) and postprocessing, yielding responses as they arrive.
                res_gen = self.decoder.decode(
                    req,
                    speculative_decoding=speculative_decode,
                    is_multimodal=is_multimodal)

                for res in res_gen:
                    triton_response = self.decoder.create_triton_response(res)
                    if self.decoupled:
                        response_sender.send(triton_response)
                    else:
                        responses.append(triton_response)

                if self.decoupled:
                    response_sender.send(
                        flags=pb_utils.TRITONSERVER_RESPONSE_COMPLETE_FINAL)

            except Exception:
                self.logger.log_error(traceback.format_exc())
                # If encountering an error, send a response with err msg
                error_response = pb_utils.InferenceResponse(
                    output_tensors=[],
                    error=pb_utils.TritonError(traceback.format_exc()))

                if self.decoupled:
                    response_sender.send(error_response)
                    response_sender.send(
                        flags=pb_utils.TRITONSERVER_RESPONSE_COMPLETE_FINAL)
                else:
                    responses.append(error_response)

            self.decoder.reset_decoder()
        if self.decoupled:
            return None
        else:
            assert len(responses) == len(requests)
            return responses

    def finalize(self):
        """`finalize` is called only once when the model is being unloaded.
        Implementing `finalize` function is optional. This function allows
        the model to perform any necessary clean ups before exit.
        """
        print('Tensorrt_llm_bls Cleaning up...')

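Every Python-backend model.py defines a TritonPythonModel class built around these three methods. initialize runs once when the model is loaded, execute runs for each batch of requests, and finalize runs once when the model is unloaded. Only execute is mandatory; the other two are optional. The dynamic behavior of BLS lives in execute. You write that code yourself, so you can perform different operations, or call different models, depending on the conditions you encounter. In the script above, for example, a request that asks for draft tokens takes the speculative-decoding path, and a request that carries image input is handled as multimodal.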
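Here is a toy class with the same initialize/execute/finalize shape, whose execute chooses a path per request, mirroring the speculative-decoding check in the script above. It is a hypothetical sketch, not the real backend. `call_model` stands in for a BLS call to another model (the real Python backend issues those with `pb_utils.InferenceRequest(...).exec()`), and it is passed into initialize only so that the sketch can be tested without Triton. The tensor names are illustrative, and real speculative decoding (the draft model proposes tokens, the target model verifies them) is far more involved than one extra call.

```python
from typing import Callable, Optional


class ToyBLSModel:
    """Mimics the initialize/execute/finalize shape of a Python-backend model."""

    def initialize(self, args: dict, call_model: Callable[[str, dict], dict]):
        self.call_model = call_model  # hypothetical stand-in for a BLS request
        self.llm_model_name = args.get("tensorrt_llm_model_name") or "tensorrt_llm"
        self.draft_llm_model_name: Optional[str] = args.get("tensorrt_llm_draft_model_name")

    def execute(self, requests: list[dict]) -> list[dict]:
        responses = []
        for req in requests:
            try:
                ids = self.call_model("preprocessing", {"QUERY": req["text_input"]})["INPUT_ID"]
                # The per-request branch that a static ensemble cannot express.
                if req.get("num_draft_tokens", 0) > 0:
                    if not self.draft_llm_model_name:
                        raise ValueError("cannot perform speculative decoding without draft model")
                    draft = self.call_model(self.draft_llm_model_name, {"input_ids": ids})["output_ids"]
                    tokens = self.call_model(
                        self.llm_model_name, {"input_ids": ids, "draft_input_ids": draft}
                    )["output_ids"]
                else:
                    tokens = self.call_model(self.llm_model_name, {"input_ids": ids})["output_ids"]
                text = self.call_model("postprocessing", {"TOKENS_BATCH": tokens})["OUTPUT"]
                responses.append({"text_output": text})
            except Exception as exc:
                # Like the original, a failing request gets an error response
                # instead of aborting the whole batch.
                responses.append({"error": str(exc)})
        assert len(responses) == len(requests)
        return responses

    def finalize(self):
        self.call_model = None
```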

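With this quick look at a config.pbtxt file and a model.py script, you can explore the corresponding files of the other models on your own. In short, deploying comes down to adjusting configuration and code to fit your needs. triton_model_repo is also not limited to the models listed above: you can add or remove models as your use case requires.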

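Articles in this series:

1. Survey for choosing an LLM inference framework

2. Notes on deploying TensorRT-LLM & Triton Server

3. Research notes on the vLLM inference engine

4. Benchmarking the performance of the vLLM inference engine

5. Notes on issues encountered when deploying LLMs with vLLM

6. Triton Inference Server architecture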