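Purpose

On a whim, I wondered whether a neural network would see the picture below as Marilyn Monroe or as Einstein. The main goal was to practice the examples from Deep Learning with Python (《Python深度学习》). I used only a very small dataset and did not dig into improving accuracy or fixing the overfitting. For details, see the first two sections of Chapter 5 of the book.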

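Data preparation

I searched Baidu Images for pictures of Einstein (and of Marilyn Monroe) in a variety of styles and collected them by saving entire web pages. I did not take many: 100 for training and 25 for validation. I had also set aside some test data but deleted it by accident, so the step of testing on new data is skipped. (The tiny dataset is itself a serious problem.)

I manually removed unsuitable images and sorted the rest into two subfolders, E and M, under both the train and validation folders.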

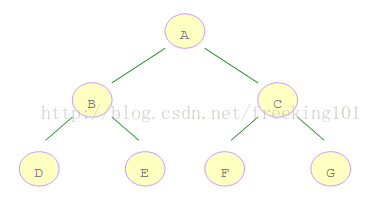
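The images were sorted by hand. As a hedged sketch of how the same `train/validation/{E,M}` layout could be built by script, the helpers below shuffle one class's files reproducibly and copy the first 100 to `train` and the next 25 to `validation`. The function names, the extension filter, and the source folder names in the usage comments are hypothetical; the filter matters because saving a whole web page also leaves HTML, CSS and script files next to the images.

```python
import os
import random
import shutil

IMAGE_EXTS = ('.jpg', '.jpeg', '.png', '.bmp')

def plan_split(filenames, n_train=100, n_val=25, seed=0):
    """Keep image files only, shuffle them reproducibly, return (train, validation) lists."""
    images = sorted(f for f in filenames if f.lower().endswith(IMAGE_EXTS))
    random.Random(seed).shuffle(images)
    return images[:n_train], images[n_train:n_train + n_val]

def copy_split(src_dir, base_dir, class_name, n_train=100, n_val=25, seed=0):
    """Copy one class into base_dir/train/<class_name> and base_dir/validation/<class_name>."""
    train, val = plan_split(os.listdir(src_dir), n_train, n_val, seed)
    for split, names in (('train', train), ('validation', val)):
        dst = os.path.join(base_dir, split, class_name)
        os.makedirs(dst, exist_ok=True)
        for name in names:
            shutil.copy2(os.path.join(src_dir, name), os.path.join(dst, name))

# Usage (hypothetical source folders):
# copy_split('raw/einstein', 'Einstein', 'E')
# copy_split('raw/monroe', 'Einstein', 'M')
```

Separating the pure `plan_split` from the file copying keeps the split logic easy to check without touching the disk.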

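Building the network

I used a Sequential model with this architecture because it had performed very well on an earlier SAR image recognition task, reaching over 96% prediction accuracy (although that does not show it will also do well at telling Einstein and Marilyn Monroe apart).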
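The output shapes and parameter counts that `model.summary()` reports for this network can be checked by hand. A minimal sketch, assuming Keras defaults (stride 1 and `'valid'` padding for `Conv2D`, pool stride equal to the pool size for `MaxPooling2D`); the helper names are hypothetical:

```python
def conv_out(size, kernel=3):
    # 'valid' padding, stride 1: the output shrinks by kernel - 1
    return size - kernel + 1

def pool_out(size, pool=2):
    # Pooling with stride == pool size and 'valid' padding: floor division
    return size // pool

def conv_params(in_ch, out_ch, kernel=3):
    return kernel * kernel * in_ch * out_ch + out_ch  # weights + biases

def dense_params(n_in, n_out):
    return n_in * n_out + n_out  # weights + biases

size, ch, total = 88, 3, 0
for filters in (64, 64, 128, 128):  # the four Conv2D + MaxPooling2D stages
    total += conv_params(ch, filters)
    size = pool_out(conv_out(size))
    ch = filters
flat = size * size * ch  # Flatten output; Dropout adds no parameters
total += dense_params(flat, 512) + dense_params(512, 512) + dense_params(512, 1)
print(size, flat, total)  # → 3 1152 1113665
```

Most of the roughly 1.1 million parameters sit in the first `Dense` layer (590,336), which is a lot to fit from such a small training set.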

```python
import os

from keras import layers
from keras import models

# Sequential CNN for 88x88 RGB input: four Conv2D + MaxPooling2D stages
model = models.Sequential()
model.add(layers.Conv2D(64, (3, 3), activation='relu', input_shape=(88, 88, 3)))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D((2, 2)))

# Classifier head: dropout against overfitting, two fully connected layers
model.add(layers.Flatten())
model.add(layers.Dropout(0.5))
model.add(layers.Dense(512, activation='relu'))
model.add(layers.Dropout(0.5))
model.add(layers.Dense(512, activation='relu'))

# A single sigmoid unit for binary classification
model.add(layers.Dense(1, activation='sigmoid'))

model.summary()
```

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 86, 86, 64)        1792
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 43, 43, 64)        0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 41, 41, 64)        36928
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 20, 20, 64)        0
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 18, 18, 128)       73856
_________________________________________________________________
max_pooling2d_3 (MaxPooling2 (None, 9, 9, 128)         0
_________________________________________________________________
conv2d_4 (Conv2D)            (None, 7, 7, 128)         147584
_________________________________________________________________
max_pooling2d_4 (MaxPooling2 (None, 3, 3, 128)         0
_________________________________________________________________
flatten_1 (Flatten)          (None, 1152)              0
_________________________________________________________________
dropout_1 (Dropout)          (None, 1152)              0
_________________________________________________________________
dense_1 (Dense)              (None, 512)               590336
_________________________________________________________________
dropout_2 (Dropout)          (None, 512)               0
_________________________________________________________________
dense_2 (Dense)              (None, 512)               262656
_________________________________________________________________
dense_3 (Dense)              (None, 1)                 513
=================================================================
Total params: 1,113,665
Trainable params: 1,113,665
Non-trainable params: 0
```

Loading images and training

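```python
from keras import optimizers
from keras.preprocessing.image import ImageDataGenerator

# Dataset root; each split folder contains the E and M subfolders
base_dir = r'dir\Einstein'
train_dir = os.path.join(base_dir, 'train')
validation_dir = os.path.join(base_dir, 'v')  # validation folder (named 'v' in this code)

# lr is the older Keras spelling; newer versions use learning_rate
model.compile(loss='binary_crossentropy', optimizer=optimizers.RMSprop(lr=1e-4), metrics=['acc'])

# Only rescale pixels to [0, 1]; no data augmentation
train_datagen = ImageDataGenerator(rescale=1. / 255)
test_datagen = ImageDataGenerator(rescale=1. / 255)
train_generator = train_datagen.flow_from_directory(
    train_dir, target_size=(88, 88), batch_size=20, class_mode='binary')
validation_generator = test_datagen.flow_from_directory(
    validation_dir, target_size=(88, 88), batch_size=20, class_mode='binary')

# The generators loop forever, so the step counts define one epoch
# (newer Keras accepts generators in fit(); fit_generator is deprecated there)
history = model.fit_generator(train_generator, steps_per_epoch=128, epochs=20,
                              validation_data=validation_generator, validation_steps=50)

# Save the model
model.save('EM.h5')
```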
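Because `flow_from_directory` generators loop indefinitely, `steps_per_epoch` and `validation_steps` decide how many batches count as one epoch. A small arithmetic sketch (hypothetical helper names) of how these values relate to dataset size; the sample counts are assumptions, since the text does not say whether the 100/25 split is per class or in total:

```python
import math

def steps_to_cover(n_samples, batch_size):
    """Batches needed to see every sample once; the last batch may be partial."""
    return math.ceil(n_samples / batch_size)

def passes_per_epoch(steps, batch_size, n_samples):
    """How many times an epoch of `steps` batches cycles through the data."""
    return steps * batch_size / n_samples

# Assumption: 100 training and 25 validation images per class -> 200 and 50 in total
n_train, n_val, batch_size = 200, 50, 20
print(steps_to_cover(n_train, batch_size))         # 10
print(passes_per_epoch(128, batch_size, n_train))  # 12.8
print(passes_per_epoch(50, batch_size, n_val))     # 20.0
```

Under that assumption, each training epoch shows every image about 13 times, and without augmentation these repeats are identical copies.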

Training results

Plotting the performance curves

```python
import matplotlib.pyplot as plt

# Per-epoch metrics recorded during training
acc = history.history['acc']
val_acc = history.history['val_acc']
loss = history.history['loss']
val_loss = history.history['val_loss']
epochs = range(1, len(acc) + 1)

# Accuracy: dots = training, line = validation
plt.plot(epochs, acc, 'bo', label='Training acc')
plt.plot(epochs, val_acc, 'b', label='Validation acc')
plt.title('Training and validation accuracy')
plt.legend()

# Loss in a separate figure
plt.figure()
plt.plot(epochs, loss, 'bo', label='Training loss')
plt.plot(epochs, val_loss, 'b', label='Validation loss')
plt.title('Training and validation loss')
plt.legend()

plt.show()
```

Final result:

```
loss: 0.0050 - acc: 0.9996 - val_loss: 2.4635 - val_acc: 0.6614
```

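The model is clearly overfitting: validation accuracy is only about 66%. That does not stop us from using it for a prediction, though.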
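One way to locate where overfitting sets in is to find the epoch with the lowest validation loss in `history.history`. A minimal sketch, assuming the same metric keys as the plotting code (`'acc'`, `'val_acc'`, `'loss'`, `'val_loss'`); the function name is hypothetical:

```python
def overfit_report(history):
    """Summarize a Keras-style history dict of per-epoch metric lists."""
    val_loss = history['val_loss']
    # Epoch (1-based) with the lowest validation loss
    best_epoch = min(range(len(val_loss)), key=val_loss.__getitem__) + 1
    # Final train/validation accuracy gap; a large gap indicates overfitting
    gap = history['acc'][-1] - history['val_acc'][-1]
    return {'best_val_loss_epoch': best_epoch, 'final_acc_gap': gap}

# Usage with the trained model: overfit_report(history.history)
```

With the final numbers reported above, the accuracy gap is 0.9996 - 0.6614 = 0.3382.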

Prediction

```python
import numpy as np
from keras import models
from keras.preprocessing import image

# Load the picture in question at the network's input size (height, width)
img = image.load_img(r'dir\Einstein\EM.jpg', target_size=(88, 88))
img = np.array(img) / 255        # same 1/255 rescaling as during training
img = img.reshape(1, 88, 88, 3)  # add the batch dimension

model = models.load_model(r'dir\Einstein\EM.h5')
pre = model.predict(img)
print('Prediction:', pre)
```

```
Prediction: [[0.00376787]]
```
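The prediction input must be preprocessed exactly like the training data: the generators rescaled pixels by 1/255 and fed batches of shape `(batch, 88, 88, 3)`. A minimal NumPy sketch of that step as a reusable function (the name `to_model_input` is hypothetical); `np.expand_dims` adds the batch axis, equivalent to the `reshape(1, 88, 88, 3)` above:

```python
import numpy as np

def to_model_input(img_array, size=(88, 88)):
    """Convert an HxWx3 uint8 image array into a (1, H, W, 3) float32 batch in [0, 1]."""
    # Same rescaling as ImageDataGenerator(rescale=1. / 255)
    arr = np.asarray(img_array, dtype=np.float32) / 255.0
    expected = (*size, 3)
    if arr.shape != expected:
        raise ValueError(f'expected shape {expected}, got {arr.shape}')
    return np.expand_dims(arr, axis=0)  # add the batch dimension

x = to_model_input(np.zeros((88, 88, 3), dtype=np.uint8))
print(x.shape, x.dtype)  # (1, 88, 88, 3) float32
```

Raising on a shape mismatch catches an image that was not resized to the training size before it reaches `model.predict`.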

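Keras assigns labels from the folder names: images in the E (Einstein) folder get label 0 and those in the M (Marilyn Monroe) folder get label 1. The final sigmoid unit outputs 0.00376787, very close to 0, so the network strongly leans towards Einstein.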
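The label mapping can be made explicit. `flow_from_directory` infers classes from the subfolder names in alphanumeric order (the actual mapping is available as `train_generator.class_indices`), and the sigmoid output can be read as the estimated probability of label 1. A minimal sketch, with hypothetical helper names and an assumed decision threshold of 0.5:

```python
def class_indices(subfolders):
    """Mimic flow_from_directory: sorted subfolder names map to 0, 1, ..."""
    return {name: i for i, name in enumerate(sorted(subfolders))}

def decode_binary(prob, indices, threshold=0.5):
    """Map a sigmoid output (estimated P(label == 1)) back to a folder name."""
    names = {i: name for name, i in indices.items()}
    return names[int(prob >= threshold)]

indices = class_indices(['M', 'E'])
print(indices)                             # {'E': 0, 'M': 1}
print(decode_binary(0.00376787, indices))  # E
```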

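I tried many different architectures (training is fast with so little data). Validation accuracy stayed at around 70% throughout, and only once did a model classify the picture as Marilyn Monroe; all the other runs said Einstein.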

Conclusion

With a simple network like this one, the model tends to see the person in this picture as Einstein.

Limitations

- Too few samples

- Overfitting: validation accuracy is low

References

Deep Learning with Python, François Chollet (Chinese edition: 《Python深度学习》)