Kubernetes Deployment Methods

Kubernetes officially supports three deployment methods:

minikube

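Minikube is a tool that quickly runs a single-node Kubernetes cluster locally. It is intended for users who want to try Kubernetes or use it for day-to-day development, and it must not be used in production.

Official documentation: Install Tools | Kubernetes

Binary packages

Download the release binaries from the official site and deploy each component by hand to assemble a Kubernetes cluster. This is currently the method most often used in enterprise production environments.

Download: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.11.md#v1113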

Kubeadm

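kubeadm is a tool from the Kubernetes project built specifically for deploying Kubernetes clusters quickly. During deployment, kubeadm init initializes the master node, and kubeadm join adds the remaining nodes to the cluster.

With a small amount of configuration, kubeadm brings up a minimum viable cluster quickly. It was designed to install and start a cluster fast, not to prepare each node's environment step by step. Likewise, add-ons used later in the cluster's life, such as the Kubernetes web Dashboard or Prometheus monitoring, are outside kubeadm's scope. kubeadm aims to be the foundation for all deployments and to make deploying a Kubernetes cluster easier.

kubeadm's quick, simple deployment is useful in three situations:

- New users can start with kubeadm to set up Kubernetes quickly and learn how it works.

- Users already familiar with Kubernetes can use kubeadm to build a cluster quickly and test their applications.

- Larger projects can combine kubeadm with other installation tools to build a more complex system.

Official documentation: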

Kubeadm | Kubernetes

Installing kubeadm | Kubernetes

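Deploying a K8s Cluster with kubeadm

1. Environment Preparation

| Operating system | IP address | Host name | Components |
| --- | --- | --- | --- |
| CentOS 7.5 | 192.168.50.53 | k8s-master | kubeadm, kubelet, kubectl, docker-ce |
| CentOS 7.5 | 192.168.50.51 | k8s-node01 | kubeadm, kubelet, kubectl, docker-ce |
| CentOS 7.5 | 192.168.50.50 | k8s-node02 | kubeadm, kubelet, kubectl, docker-ce |

Note: at least 2 CPU cores and 2 GB of memory are recommended for every host.

1.1 Host Initialization

Disable the firewall and SELinux on all hosts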

[root@localhost ~]# setenforce 0

[root@localhost ~]# iptables -F

[root@localhost ~]# systemctl stop firewalld

[root@localhost ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@localhost ~]# systemctl stop NetworkManager

[root@localhost ~]# systemctl disable NetworkManager

Removed symlink /etc/systemd/system/multi-user.target.wants/NetworkManager.service.

Removed symlink /etc/systemd/system/dbus-org.freedesktop.nm-dispatcher.service.

Removed symlink /etc/systemd/system/network-online.target.wants/NetworkManager-wait-online.service.

[root@localhost ~]# sed -i '/^SELINUX=/s/enforcing/disabled/' /etc/selinux/config

Set the host name and add the hosts entries; each host gets its own name

[root@localhost ~]# hostname k8s-master

[root@localhost ~]# bash

[root@k8s-master ~]# cat << EOF >> /etc/hosts

> 192.168.50.53 k8s-master

> 192.168.50.51 k8s-node01

> 192.168.50.50 k8s-node02

> EOF

Initialize the host settings

[root@k8s-master ~]# yum -y install vim wget net-tools lrzsz

[root@k8s-master ~]# swapoff -a

[root@k8s-master ~]# sed -i '/swap/s/^/#/' /etc/fstab

[root@k8s-master ~]# cat << EOF >> /etc/sysctl.conf

> net.bridge.bridge-nf-call-ip6tables = 1

> net.bridge.bridge-nf-call-iptables = 1

> EOF

[root@k8s-master ~]# modprobe br_netfilter

[root@k8s-master ~]# sysctl -p

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1
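The settings above are easy to miss on one of the three hosts. The following is a minimal sketch of a re-check that can be run on each host before continuing; the function names and the file-path parameters are illustrative assumptions, not part of any Kubernetes tooling.

```shell
#!/usr/bin/env bash
# Sketch: re-check the host settings from section 1.1.
# All function names here are hypothetical helpers.

# Succeeds when the /proc/swaps-style file lists no active swap device,
# i.e. it contains only the header line.
swap_is_off() {
    local swaps_file="${1:-/proc/swaps}"
    [ -r "$swaps_file" ] && [ "$(wc -l < "$swaps_file")" -le 1 ]
}

# Succeeds when the given sysctl file exists and holds the value 1.
sysctl_is_enabled() {
    local key_file="$1"
    [ -r "$key_file" ] && [ "$(cat "$key_file")" = "1" ]
}

# Print one line per check; never aborts, so it is safe to run anywhere.
report() {
    local label="$1"; shift
    if "$@"; then echo "ok    $label"; else echo "FAIL  $label"; fi
}

report "swap disabled"            swap_is_off /proc/swaps
report "bridge-nf-call-iptables"  sysctl_is_enabled /proc/sys/net/bridge/bridge-nf-call-iptables
report "bridge-nf-call-ip6tables" sysctl_is_enabled /proc/sys/net/bridge/bridge-nf-call-ip6tables
```

The two bridge entries only exist once br_netfilter is loaded, so a FAIL there usually means modprobe br_netfilter has not been run since the last reboot.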

1.2 Deploying Docker

Deploy Docker on each of the three hosts, because Kubernetes relies on Docker here to run the containers it orchestrates.

[root@k8s-master ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@k8s-master ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

When installing Docker with YUM, the Alibaba Cloud YUM mirror is recommended. The Alibaba open-source mirror site is https://developer.aliyun.com/mirror/, where the Docker repository address can be found.

[root@k8s-master ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@k8s-master ~]# yum clean all && yum makecache fast

[root@k8s-master ~]# yum -y install docker-ce

[root@k8s-master ~]# systemctl start docker

[root@k8s-master ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

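Registry mirror (configure on all hosts)

Many images are hosted on servers outside China, and network problems often cause image pulls to fail, so it is best to point image pulls at a mirror inside China. Many Chinese public cloud providers offer a registry mirror service. Configure the mirror as follows.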

[root@k8s-master ~]# cat << END > /etc/docker/daemon.json

> {

> "registry-mirrors":[ "https://nyakyfun.mirror.aliyuncs.com" ]

> }

> END

[root@k8s-master ~]# systemctl daemon-reload

[root@k8s-master ~]# systemctl restart docker

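Write the mirror address directly into /etc/docker/daemon.json; if the file does not exist, create it. As the extension indicates, the content of daemon.json must be valid JSON, so take care when editing it. Because a single mirror service can become unavailable, configuring two or more mirror addresses is recommended for redundancy.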
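As a sketch of the multi-mirror recommendation, the helper below generates a daemon.json with any number of mirror addresses. write_daemon_json is a hypothetical helper name, and https://mirror.example.com is a placeholder rather than a real mirror service.

```shell
#!/usr/bin/env bash
# Sketch: generate a daemon.json that lists several registry mirrors.
# write_daemon_json is a hypothetical helper; the second URL below is a placeholder.

# Usage: write_daemon_json OUTPUT_FILE MIRROR_URL [MIRROR_URL...]
write_daemon_json() {
    local out="$1"; shift
    local list="" url
    for url in "$@"; do
        # Join entries with a comma but never emit a trailing comma,
        # which would make the file invalid JSON.
        list="${list:+$list, }\"$url\""
    done
    printf '{\n  "registry-mirrors": [ %s ]\n}\n' "$list" > "$out"
}

# Write to a scratch file first so the result can be inspected
# before it replaces /etc/docker/daemon.json.
scratch="$(mktemp)"
write_daemon_json "$scratch" \
    "https://nyakyfun.mirror.aliyuncs.com" \
    "https://mirror.example.com"
cat "$scratch"
```

After copying the generated file to /etc/docker/daemon.json, restart Docker as shown above for the change to take effect.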

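2. Deploying the Kubernetes Cluster

2.1 Components

All three nodes need the following three components:

- kubeadm: the installation tool; it sets up the cluster so that the control-plane components run as containers

- kubectl: the command-line client for the Kubernetes API

- kubelet: the agent that runs on every node and starts the containers

2.2 Configuring the Alibaba Cloud YUM Repository

Installing from the Alibaba Cloud YUM repository is recommended: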

[root@k8s-master ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

> enabled=1

> gpgcheck=1

> repo_gpgcheck=1

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

2.3 Installing kubelet, kubeadm, and kubectl

Run on all hosts

[root@k8s-master ~]# yum install -y kubelet-1.20.0 kubeadm-1.20.0 kubectl-1.20.0

[root@k8s-master ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

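Right after installation, kubelet cannot be started successfully with systemctl start kubelet; it starts only after the host has been initialized as the master or joined to a cluster.

If the GPG check of the repository index fails during installation, install with yum install -y --nogpgcheck kubelet-1.20.0 kubeadm-1.20.0 kubectl-1.20.0 instead.

2.4 Configuring init-config.yaml

kubeadm offers many configuration options. In a Kubernetes cluster the kubeadm configuration is stored in a ConfigMap; it can also be written to a configuration file, which makes complex settings easier to manage. The kubeadm config command writes the configuration out to a file.

Run this on the master node, which is 192.168.50.53 in this setup. The following command creates a default init-config.yaml: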

[root@k8s-master ~]# kubeadm config print init-defaults > init-config.yaml

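Besides writing configuration to a file, kubeadm config provides several other commonly used subcommands:

- kubeadm config view: show the configuration values of the current cluster.

- kubeadm config print join-defaults: print the default parameters for kubeadm join.

- kubeadm config images list: list the required images.

- kubeadm config images pull: pull the images to the local host.

- kubeadm config upload from-flags: generate the ConfigMap from command-line flags.

Edit init-config.yaml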

[root@k8s-master ~]# vim init-config.yaml

[root@k8s-master ~]# cat init-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.50.53   # IP address of the master node
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master   # if a host name is used, make sure it resolves; otherwise use the IP address
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers   # changed to a registry inside China
kind: ClusterConfiguration
kubernetesVersion: v1.20.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16   # added: the Pod network CIDR
scheduler: {}
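The edits to the generated defaults (advertise address, image repository, Pod subnet) can also be applied without an editor. The sketch below assumes GNU sed; patch_init_config is a hypothetical helper name, and the demo input is an abridged excerpt written only for illustration.

```shell
#!/usr/bin/env bash
# Sketch: apply the init-config.yaml edits non-interactively (GNU sed assumed).
# patch_init_config is a hypothetical helper name.

# Usage: patch_init_config FILE MASTER_IP IMAGE_REPOSITORY POD_CIDR
patch_init_config() {
    local file="$1" ip="$2" repo="$3" pod_cidr="$4"
    sed -i \
        -e "s|^\( *advertiseAddress:\).*|\1 ${ip}|" \
        -e "s|^\(imageRepository:\).*|\1 ${repo}|" \
        -e "/^ *podSubnet:/d" \
        -e "s|^\( *\)serviceSubnet:.*|&\n\1podSubnet: ${pod_cidr}|" \
        "$file"
}

# Demo on an abridged, made-up excerpt instead of the real file.
demo="$(mktemp)"
cat > "$demo" <<'EOF'
localAPIEndpoint:
  advertiseAddress: 1.2.3.4
  bindPort: 6443
imageRepository: k8s.gcr.io
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
EOF
patch_init_config "$demo" 192.168.50.53 registry.aliyuncs.com/google_containers 10.244.0.0/16
cat "$demo"
```

Any existing podSubnet line is deleted before the new one is added after serviceSubnet, so running the helper twice does not duplicate the entry. The node name under nodeRegistration is left untouched.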

2.5 Installing the Master Node

Pull the required images

[root@k8s-master ~]# kubeadm config images list --config init-config.yaml

registry.aliyuncs.com/google_containers/kube-apiserver:v1.20.0

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.20.0

registry.aliyuncs.com/google_containers/kube-scheduler:v1.20.0

registry.aliyuncs.com/google_containers/kube-proxy:v1.20.0

registry.aliyuncs.com/google_containers/pause:3.2

registry.aliyuncs.com/google_containers/etcd:3.4.13-0

registry.aliyuncs.com/google_containers/coredns:1.7.0

[root@k8s-master ~]# kubeadm config images pull --config=init-config.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.20.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.20.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.20.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.20.0

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.2

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.4.13-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:1.7.0

Initialize the master node

[root@k8s-master ~]# kubeadm init --config=init-config.yaml

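Follow the instructions in the output

By default kubectl looks for a file named config in the .kube directory under the home directory of the user running it. The commands below copy admin.conf, generated in the [kubeconfig] step of initialization, to .kube/config.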

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.50.53:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:95593e80f78629b2cab6fff1cad359519d84de0813f4cf66ab70787aa3d5c7fa

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

2.6 Installing the Worker Nodes

Use the join command printed when the master was initialized

[root@k8s-node01 ~]# kubeadm join 192.168.50.53:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:95593e80f78629b2cab6fff1cad359519d84de0813f4cf66ab70787aa3d5c7fa

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 24.0.5. Latest validated version: 19.03

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@k8s-node02 ~]# kubeadm join 192.168.50.53:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:95593e80f78629b2cab6fff1cad359519d84de0813f4cf66ab70787aa3d5c7fa

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 24.0.5. Latest validated version: 19.03

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane,master 43s v1.20.0

k8s-node01 NotReady <none> 9s v1.20.0

k8s-node02 NotReady <none> 6s v1.20.0

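No network plugin was set up when k8s-master was initialized, so the master cannot yet communicate with the worker nodes and every node reports "NotReady". The nodes added with kubeadm join are nevertheless already visible on k8s-master.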
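The bootstrap token in init-config.yaml has a ttl of 24h0m0s, so the join command printed by kubeadm init stops working after a day. On the master, kubeadm token create --print-join-command prints a fresh one. The sketch below shows how the --discovery-token-ca-cert-hash value can be recomputed from the cluster CA certificate with openssl; ca_cert_hash is a hypothetical helper name, and an RSA CA key is assumed.

```shell
#!/usr/bin/env bash
# Sketch: recompute the value for --discovery-token-ca-cert-hash.
# ca_cert_hash is a hypothetical helper; it assumes an RSA CA key.

# Usage: ca_cert_hash CA_CERT_FILE
# Prints the SHA-256 digest (hex) of the certificate's public key.
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# certificatesDir in init-config.yaml is /etc/kubernetes/pki.
ca="/etc/kubernetes/pki/ca.crt"
if [ -r "$ca" ]; then
    echo "sha256:$(ca_cert_hash "$ca")"
else
    echo "run this on the master node: $ca not found" >&2
fi
```

The printed value goes after --discovery-token-ca-cert-hash in the kubeadm join command, together with a token that has not expired.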

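2.7 Installing flannel

The nodes are NotReady because no network plugin is in use, so the connection between the worker nodes and the master is not yet working properly. Popular Kubernetes network plugins include Flannel, Calico, Canal, and Weave; flannel is used here.

All hosts:

Upload kube-flannel.yml to the master, and upload flannel_v0.12.0-amd64.tar and cni-plugins-linux-amd64-v0.8.6.tgz to all hosts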

[root@k8s-master ~]# docker load < flannel_v0.12.0-amd64.tar

256a7af3acb1: Loading layer 5.844MB/5.844MB

d572e5d9d39b: Loading layer 10.37MB/10.37MB

57c10be5852f: Loading layer 2.249MB/2.249MB

7412f8eefb77: Loading layer 35.26MB/35.26MB

05116c9ff7bf: Loading layer 5.12kB/5.12kB

Loaded image: quay.io/coreos/flannel:v0.12.0-amd64

[root@k8s-master ~]# tar xf cni-plugins-linux-amd64-v0.8.6.tgz

[root@k8s-master ~]# cp flannel /opt/cni/bin

Master host:

[root@k8s-master ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

Warning: rbac.authorization.k8s.io/v1beta1 ClusterRole is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRole

clusterrole.rbac.authorization.k8s.io/flannel created

Warning: rbac.authorization.k8s.io/v1beta1 ClusterRoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRoleBinding

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 2m59s v1.20.0

k8s-node01 Ready <none> 2m25s v1.20.0

k8s-node02 Ready <none> 2m22s v1.20.0

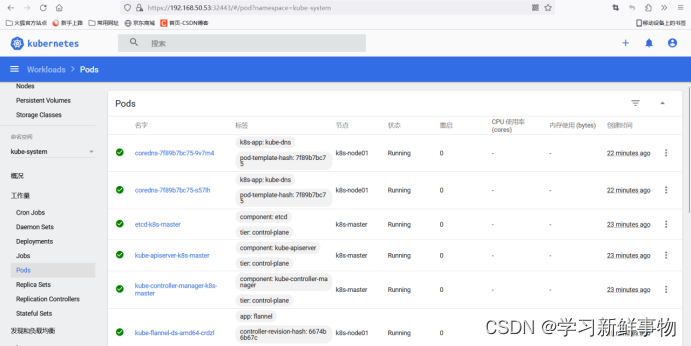

[root@k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7f89b7bc75-b652z 0/1 Running 0 2m50s

coredns-7f89b7bc75-qwcwp 0/1 Running 0 2m50s

etcd-k8s-master 1/1 Running 0 2m59s

kube-apiserver-k8s-master 1/1 Running 0 2m59s

kube-controller-manager-k8s-master 1/1 Running 0 2m59s

kube-flannel-ds-amd64-4rpxb 1/1 Running 0 21s

kube-flannel-ds-amd64-d8jh4 1/1 Running 0 21s

kube-flannel-ds-amd64-vngwj 1/1 Running 0 21s

kube-proxy-8pq9d 1/1 Running 0 2m51s

kube-proxy-gqq4t 1/1 Running 0 2m31s

kube-proxy-qgwr5 1/1 Running 0 2m34s

kube-scheduler-k8s-master 1/1 Running 0 2m59s

All nodes are now in the Ready state
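Checking the STATUS column by eye works for three nodes; for scripted use, the output of kubectl get nodes can be filtered instead. The following is a minimal sketch in which not_ready_nodes is a hypothetical helper that reads that output on standard input.

```shell
#!/usr/bin/env bash
# Sketch: list nodes whose STATUS column is not exactly "Ready".
# not_ready_nodes is a hypothetical helper that reads `kubectl get nodes`
# output on stdin and skips the header line.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Demonstration with the output captured before flannel was installed.
not_ready_nodes <<'EOF'
NAME         STATUS     ROLES                  AGE   VERSION
k8s-master   NotReady   control-plane,master   43s   v1.20.0
k8s-node01   NotReady   <none>                 9s    v1.20.0
k8s-node02   NotReady   <none>                 6s    v1.20.0
EOF
```

On the master it would be used as kubectl get nodes | not_ready_nodes; empty output means every node is Ready. A cordoned node shows "Ready,SchedulingDisabled" and is therefore listed as well. kubectl wait --for=condition=Ready nodes --all --timeout=300s is a built-in alternative that blocks until the nodes are ready.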

3. Installing the Dashboard UI

3.1 Deploying the Dashboard

Dashboard GitHub repository: https://github.com/kubernetes/dashboard

The repository provides sample deployment manifests, which can be downloaded and applied directly.

[root@k8s-master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml

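By default this manifest creates a separate namespace named kubernetes-dashboard and deploys kubernetes-dashboard into it. The Dashboard images come from Docker Hub, so the image addresses do not need to be changed and can be pulled directly.

3.2 Exposing the Port

By default the Dashboard is not exposed outside the cluster. To keep things simple, expose it with a NodePort: pull the images, then edit the Service definition in recommended.yaml: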

[root@k8s-master ~]# docker pull kubernetesui/dashboard:v2.0.0

v2.0.0: Pulling from kubernetesui/dashboard

2a43ce254c7f: Pull complete

Digest: sha256:06868692fb9a7f2ede1a06de1b7b32afabc40ec739c1181d83b5ed3eb147ec6e

Status: Downloaded newer image for kubernetesui/dashboard:v2.0.0

docker.io/kubernetesui/dashboard:v2.0.0

[root@k8s-master ~]# docker pull kubernetesui/metrics-scraper:v1.0.4

v1.0.4: Pulling from kubernetesui/metrics-scraper

07008dc53a3e: Pull complete

1f8ea7f93b39: Pull complete

04d0e0aeff30: Pull complete

Digest: sha256:555981a24f184420f3be0c79d4efb6c948a85cfce84034f85a563f4151a81cbf

Status: Downloaded newer image for kubernetesui/metrics-scraper:v1.0.4

docker.io/kubernetesui/metrics-scraper:v1.0.4

[root@k8s-master ~]# vim recommended.yaml

40 type: NodePort

44 nodePort: 32443

3.3 Configuring Permissions

Grant the Dashboard cluster-admin (superuser) privileges

[root@k8s-master ~]# vim recommended.yaml

164 name: cluster-admin

[root@k8s-master ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@k8s-master ~]# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-7b59f7d4df-4pt4n 1/1 Running 0 10s

kubernetes-dashboard-74d688b6bc-4wzs6 0/1 ContainerCreating 0 10s

[root@k8s-master ~]# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-7f89b7bc75-b652z 1/1 Running 0 5m54s 10.244.1.3 k8s-node01 <none> <none>

kube-system coredns-7f89b7bc75-qwcwp 1/1 Running 0 5m54s 10.244.1.2 k8s-node01 <none> <none>

kube-system etcd-k8s-master 1/1 Running 0 6m3s 192.168.50.53 k8s-master <none> <none>

kube-system kube-apiserver-k8s-master 1/1 Running 0 6m3s 192.168.50.53 k8s-master <none> <none>

kube-system kube-controller-manager-k8s-master 1/1 Running 0 6m3s 192.168.50.53 k8s-master <none> <none>

kube-system kube-flannel-ds-amd64-4rpxb 1/1 Running 0 3m25s 192.168.50.50 k8s-node02 <none> <none>

kube-system kube-flannel-ds-amd64-d8jh4 1/1 Running 0 3m25s 192.168.50.51 k8s-node01 <none> <none>

kube-system kube-flannel-ds-amd64-vngwj 1/1 Running 0 3m25s 192.168.50.53 k8s-master <none> <none>

kube-system kube-proxy-8pq9d 1/1 Running 0 5m55s 192.168.50.53 k8s-master <none> <none>

kube-system kube-proxy-gqq4t 1/1 Running 0 5m35s 192.168.50.50 k8s-node02 <none> <none>

kube-system kube-proxy-qgwr5 1/1 Running 0 5m38s 192.168.50.51 k8s-node01 <none> <none>

kube-system kube-scheduler-k8s-master 1/1 Running 0 6m3s 192.168.50.53 k8s-master <none> <none>

kubernetes-dashboard dashboard-metrics-scraper-7b59f7d4df-4pt4n 1/1 Running 0 22s 10.244.1.5 k8s-node01 <none> <none>

kubernetes-dashboard kubernetes-dashboard-74d688b6bc-4wzs6 1/1 Running 0 22s 10.244.1.4 k8s-node01 <none> <none>

3.4、访问Token配置

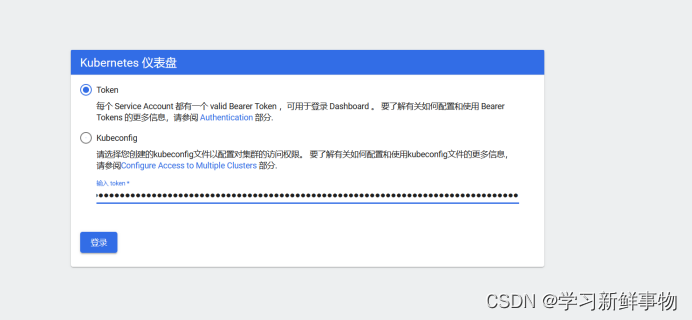

使用谷歌浏览器测试访问 https://192.168.50.53:32443

可以看到出现如上图画面,需要我们输入一个kubeconfig文件或者一个token。事实上在安装dashboard时,也为我们默认创建好了一个serviceaccount,为kubernetes-dashboard,并为其生成好了token,我们可以通过如下指令获取该sa的token:

[root@k8s-master ~]# kubectl describe secret -n kubernetes-dashboard $(kubectl get secret -n kubernetes-dashboard |grep kubernetes-dashboard-token | awk '{print $1}') |grep token | awk '{print $2}'

kubernetes-dashboard-token-95vpp

kubernetes.io/service-account-token

eyJhbGciOiJSUzI1NiIsImtpZCI6IlRvNTVCTjBUdE4ybmVjUUNuRWhQS1hCNzl6QnJyTVFCTUd0MXJCX053bkkifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi05NXZwcCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImQyODNjMTJlLTExODgtNDE2Mi1iY2M1LWE1OTU0Njc2OWU2MSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.pjL2Iw5YYsvTL_qOwoH65tfetbAViAjnl2nyXk17e6wlcg1C8uZx2-IoIsyOiUwMzj1lsS0MTTLvyLh4eauqaVtOqw5j-SxDIrLoVtIEe-zWhJ-hNGZ642uh2xicKYFu09H1_stD7d7NSzeVY64uHCOpTSj3vNelCh8_LkH5qfa5A7NT-DcFIcG0xZIjt9iK1VPIMoAw-2vVFAPhcdUTOsoUO4wTJgVVYX1ycMJCQFzeGPiPKfUyCPvTvaFQwFXZH5To-PibDKEWA3xDdRvrOLTU0RhM2aBtmlf3B2ktgM4brmpbR_N38psMBbJjQYgAOaIZvN2hryRvffOAZRyInA
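The pipeline above prints three lines because grep token also matches the secret name and the secret type. A minimal sketch that prints only the token follows; extract_token is a hypothetical helper, and the sample input is shortened, made-up text in the layout of kubectl describe secret.

```shell
#!/usr/bin/env bash
# Sketch: print only the token value from `kubectl describe secret` output.
# extract_token is a hypothetical helper that reads that output on stdin.
extract_token() {
    # Match the line whose first field is exactly "token:", so the Name:
    # and Type: lines (which merely contain the word "token") are skipped.
    awk '$1 == "token:" { print $2 }'
}

# Demonstration with shortened, made-up input.
extract_token <<'EOF'
Name:         kubernetes-dashboard-token-95vpp
Namespace:    kubernetes-dashboard
Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1066 bytes
namespace:  20 bytes
token:      eyJhbGciOi.example.signature
EOF
```

On the master, pipe the kubectl describe secret command from above into extract_token in place of the trailing grep and awk.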

4. Deploying metrics-server

4.1 Downloading the Image on the Worker Nodes

Heapster has been replaced by metrics-server, which is the resource-metrics collection tool in K8s

[root@k8s-node01 ~]# docker pull bluersw/metrics-server-amd64:v0.3.6

[root@k8s-node01 ~]# docker tag bluersw/metrics-server-amd64:v0.3.6 k8s.gcr.io/metrics-server-amd64:v0.3.6

4.2 Modifying the Kubernetes API Server Startup Flags

Add the following option to the kube-apiserver command; the API server restarts automatically after the file is saved

[root@k8s-master ~]# vim /etc/kubernetes/manifests/kube-apiserver.yaml

44 - --enable-aggregator-routing=true

4.3 Deploying from the Master

[root@k8s-master ~]# wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml

Edit the manifest:

[root@k8s-master ~]# vim components.yaml

89 - --cert-dir=/tmp

90 - --secure-port=4443

91 - --kubelet-preferred-address-types=InternalIP

92 - --kubelet-insecure-tls

[root@k8s-master ~]# kubectl create -f components.yaml

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

Warning: apiregistration.k8s.io/v1beta1 APIService is deprecated in v1.19+, unavailable in v1.22+; use apiregistration.k8s.io/v1 APIService

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

serviceaccount/metrics-server created

deployment.apps/metrics-server created

service/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

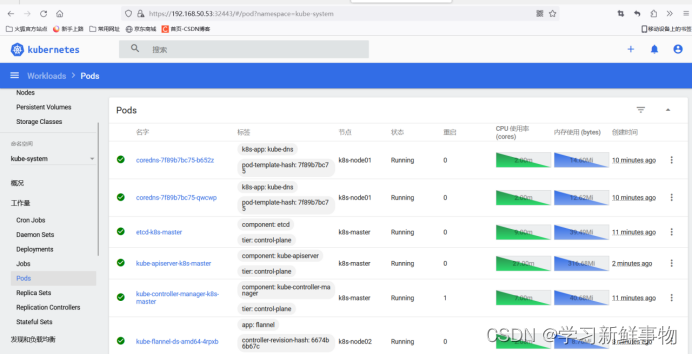

[root@k8s-master ~]# kubectl top nodes

error: metrics not available yet

Wait 1-2 minutes and check again

[root@k8s-master ~]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master 76m 3% 1441Mi 52%

k8s-node01 35m 1% 1051Mi 28%

k8s-node02 18m 1% 377Mi 43%

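Back in the Dashboard, CPU and memory usage is now displayed

5. Introduction to the Helm Package Manager

5.1 Why Helm?

Applications deployed on Kubernetes are made up of resource descriptions such as Deployments and Services. Each resource object is kept in its own file, or all of them are collected in one manifest, and the application is then deployed with kubectl apply -f demo.yaml.

If the system consists of only one or a few such services, this way of deploying and managing is sufficient.

A complex system has many such resource files. In a microservice architecture, for example, an application may consist of ten or even dozens of services. Updating or rolling back the application can mean editing and maintaining a large number of resource files, and this way of organizing and managing applications no longer scales.

Because there is also no version management or control over released applications, maintaining and updating applications on Kubernetes raises several problems:

- How to manage these services as a single unit

- How to reuse the resource files efficiently

- No application-level version management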

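5.2 About Helm

Helm is a package manager for Kubernetes, comparable to yum or apt-get on Linux. It makes it easy to deploy previously packaged YAML files to Kubernetes.

Helm has three key concepts:

- helm: a command-line client used to create, package, publish, and manage Kubernetes application charts.

- Chart: a directory or archive that describes an application; it is a collection of files describing the related K8s resource objects.

- Release: a deployed instance of a chart. Running a chart with Helm produces a release, which creates the actual running resource objects in K8s.

Helm features

- Helm is a package manager for Kubernetes. Each package is called a chart, and a chart is a directory (usually packed and compressed into a single name-version.tgz file for easier transfer and storage).

- Application publishers can use Helm to package an application, manage its dependencies and versions, and publish it to a chart repository.

- Application users do not need to understand Kubernetes YAML syntax or write deployment manifests; they can download an application with Helm and install it on Kubernetes.

- Helm supports deploying, removing, upgrading, and rolling back software on Kubernetes.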

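5.3 Changes in Helm v3

On November 13, 2019, the Helm team released the first stable version of Helm v3. The main changes are:

1) Architecture

The most visible change is the removal of Tiller.

2) Release names can be reused across namespaces

3) Charts can be pushed to a Docker image registry such as Harbor

4) Chart values are validated with JSON Schema

5) Other changes

- Some Helm CLI commands were renamed to align with the wording of other package managers:

`helm delete` was renamed to `helm uninstall`

`helm inspect` was renamed to `helm show`

`helm fetch` was renamed to `helm pull`

The old commands still work for now.

- The helm serve command, used to run a temporary local chart repository, was removed.

- Namespaces are no longer created automatically

When a release was created in a namespace that did not exist, Helm 2 created the namespace. Helm 3 follows the behavior of other Kubernetes objects and returns an error if the namespace does not exist.

- requirements.yaml is no longer needed; dependencies are defined directly in Chart.yaml.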
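When older scripts are updated for Helm v3, the three renames listed above can be captured in a small lookup. The sketch below is only an illustration; helm3_command is a hypothetical helper, not part of Helm.

```shell
#!/usr/bin/env bash
# Sketch: map a Helm v2 subcommand name to its Helm v3 name.
# helm3_command is a hypothetical helper, not part of Helm itself.
helm3_command() {
    case "$1" in
        delete)  echo uninstall ;;
        inspect) echo show ;;
        fetch)   echo pull ;;
        *)       echo "$1" ;;   # every other subcommand keeps its name
    esac
}

for old in delete inspect fetch list; do
    printf 'helm %-8s -> helm %s\n' "$old" "$(helm3_command "$old")"
done
```

For the namespace change, helm install accepts the --create-namespace flag in Helm 3.2 and later, which creates the target namespace when it is missing.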

6. Deploying the Helm Package Manager

6.1 Installing the Helm Client

Helm client downloads: Releases · helm/helm · GitHub

Extract the release archive and move the helm binary to /usr/bin/.

[root@k8s-master ~]# wget https://get.helm.sh/helm-v3.5.2-linux-amd64.tar.gz

[root@k8s-master ~]# tar xf helm-v3.5.2-linux-amd64.tar.gz

[root@k8s-master ~]# cd linux-amd64/

[root@k8s-master linux-amd64]# ls

helm LICENSE README.md

[root@k8s-master linux-amd64]# mv helm /usr/bin/

[root@k8s-master linux-amd64]# helm    # verify that the command works

The Kubernetes package manager

Common actions for Helm:

- helm search: search for charts

- helm pull: download a chart to your local directory to view

- helm install: upload the chart to Kubernetes

- helm list: list releases of charts

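6.2 Common Helm Commands

| Command | Description |
| --- | --- |
| create | Create a chart with the given name |
| dependency | Manage a chart's dependencies |
| get | Download extended information about a release. Subcommands: all, hooks, manifest, notes, values |
| history | Fetch the release history |
| install | Install a chart |
| list | List releases |
| package | Package a chart directory into a chart archive |
| pull | Download a chart from a remote repository; add --untar to unpack it locally, for example helm pull stable/mysql --untar |
| repo | Add, list, remove, update, and index chart repositories. Subcommands: add, index, list, remove, update |
| rollback | Roll back a release to a previous revision |
| search | Search for charts by keyword. Subcommands: hub, repo |
| show | Show information about a chart. Subcommands: all, chart, readme, values |
| status | Display the status of the named release |
| template | Render templates locally |
| uninstall | Uninstall a release |
| upgrade | Upgrade a release |
| version | Print the helm client version |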

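6.3 Configuring Chart Repositories Reachable from China

- Microsoft mirror (Index of /kubernetes/charts/): highly recommended; it carries nearly every chart found in the official repository.

- Alibaba Cloud repository (https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts)

- Official repository (Kubeapps | Home): the official chart repository, which is often hard to reach from within China.

Add chart repositories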

[root@k8s-master linux-amd64]# helm repo add stable http://mirror.azure.cn/kubernetes/charts

"stable" has been added to your repositories

[root@k8s-master linux-amd64]# helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

"aliyun" has been added to your repositories

[root@k8s-master linux-amd64]# helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "aliyun" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈Happy Helming!⎈

List the configured chart repositories

[root@k8s-master linux-amd64]# helm repo list

NAME URL

stable http://mirror.azure.cn/kubernetes/charts

aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

Remove a repository (for reference)

[root@k8s-master linux-amd64]# helm repo remove aliyun

"aliyun" has been removed from your repositories

[root@k8s-master linux-amd64]# helm repo list

NAME URL

stable http://mirror.azure.cn/kubernetes/charts

[root@k8s-master linux-amd64]# helm repo add stable http://mirror.azure.cn/kubernetes/charts

"stable" already exists with the same configuration, skipping

6.4 Deploying an Nginx Application with a Chart

Add the aliyun repository back

[root@k8s-master linux-amd64]# helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

"aliyun" has been added to your repositories

[root@k8s-master linux-amd64]# helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "aliyun" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈Happy Helming!⎈

Create the chart

[root@k8s-master linux-amd64]# helm create nginx

Creating nginx

[root@k8s-master ~]# tree nginx/

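nginx/
├── charts                      # charts that this chart depends on
├── Chart.yaml                  # chart metadata: name, version, and so on
├── templates                   # directory of Kubernetes manifest templates
│   ├── deployment.yaml         # template for the Kubernetes Deployment
│   ├── _helpers.tpl            # files starting with an underscore can be included by other templates
│   ├── hpa.yaml                # HorizontalPodAutoscaler template (scaling on CPU and memory)
│   ├── ingress.yaml            # Ingress template for domain-based access to the Service
│   ├── NOTES.txt               # notes shown to the user after helm install
│   ├── serviceaccount.yaml
│   ├── service.yaml            # template for the Kubernetes Service
│   └── tests
│       └── test-connection.yaml
└── values.yaml                 # variables consumed by the templates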

Change the service type in values.yaml to NodePort

[root@k8s-master linux-amd64]# cd nginx/

[root@k8s-master nginx]# vim values.yaml

39 service:

40 type: NodePort
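The same change can be scripted instead of made in vim. The sketch below assumes GNU sed and the two-space indentation that helm create generates; set_service_type is a hypothetical helper, and the demo file is a made-up excerpt of values.yaml.

```shell
#!/usr/bin/env bash
# Sketch: set service.type in a chart's values.yaml (GNU sed assumed).
# set_service_type is a hypothetical helper name.

# Usage: set_service_type VALUES_FILE TYPE
set_service_type() {
    local file="$1" type="$2"
    # Restrict the substitution to the block that starts at "service:" and
    # ends at the next top-level key, so other "type:" keys are untouched.
    sed -i "/^service:/,/^[^ ]/ s|^\(  type:\).*|\1 ${type}|" "$file"
}

# Demo on a made-up excerpt of values.yaml.
demo="$(mktemp)"
cat > "$demo" <<'EOF'
service:
  type: ClusterIP
  port: 80

ingress:
  enabled: false
EOF
set_service_type "$demo" NodePort
cat "$demo"
```

Helm can also override the value at install time without touching the file, for example helm install nginx . --set service.type=NodePort.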

Install the chart (note the trailing dot in the command)

[root@k8s-master nginx]# helm install -f values.yaml nginx .

NAME: nginx

LAST DEPLOYED: Fri Aug 18 16:19:59 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export NODE_PORT=$(kubectl get --namespace default -o jsonpath="{.spec.ports[0].nodePort}" services nginx)

export NODE_IP=$(kubectl get nodes --namespace default -o jsonpath="{.items[0].status.addresses[0].address}")

echo http://$NODE_IP:$NODE_PORT

List releases

[root@k8s-master nginx]# helm ls

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

nginx default 1 2023-08-18 16:19:59.976996747 +0800 CST deployed nginx-0.1.0 1.16.0

Check the Pod status

[root@k8s-master nginx]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-764c6bcc78-pslfv 1/1 Running 0 110s 10.244.1.7 k8s-node01 <none> <none>

Check the Service status

[root@k8s-master nginx]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 25m

nginx NodePort 10.98.125.95 <none> 80:31526/TCP 2m1s

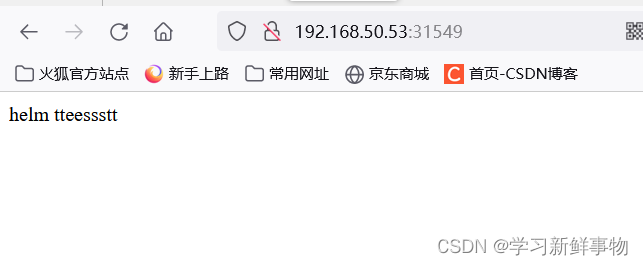

Open k8s_node_IP:31526 in a browser

6.5 Deploying a Tomcat Application with a Chart

[root@k8s-master nginx]# helm create tomcat

Creating tomcat

[root@k8s-master nginx]# cd tomcat/

Edit deployment.yaml and service.yaml

[root@k8s-master tomcat]# vim templates/deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: tomcat
  name: tomcat
spec:
  replicas: 2
  selector:
    matchLabels:
      app: tomcat
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: tomcat
    spec:
      containers:
      - image: tomcat:8
        name: tomcat
        resources: {}
status: {}

[root@k8s-master tomcat]# vim templates/service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: tomcat
  name: tomcat
spec:
  ports:
  - port: 8080
    protocol: TCP
    targetPort: 8080
  selector:
    app: tomcat
  type: NodePort
status:
  loadBalancer: {}

Create the release

[root@k8s-master tomcat]# helm install tomcat .

NAME: tomcat

LAST DEPLOYED: Fri Aug 18 16:25:16 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=tomcat,app.kubernetes.io/instance=tomcat" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace default $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace default port-forward $POD_NAME 8080:$CONTAINER_PORT

List releases

[root@k8s-master tomcat]# helm ls

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

nginx default 1 2023-08-18 16:19:59.976996747 +0800 CST deployed nginx-0.1.0 1.16.0

tomcat default 1 2023-08-18 16:25:16.588946528 +0800 CST deployed tomcat-0.1.0 1.16.0

Check the Pods and Services

[root@k8s-master tomcat]# kubectl get pod    # pulling the image can take a while

NAME READY STATUS RESTARTS AGE

nginx-764c6bcc78-pslfv 1/1 Running 0 5m44s

tomcat-67df6cd4d6-2lmm5 0/1 ContainerCreating 0 28s

tomcat-67df6cd4d6-88pw9 0/1 ContainerCreating 0 28s

[root@k8s-master tomcat]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-764c6bcc78-pslfv 1/1 Running 0 6m9s 10.244.1.7 k8s-node01 <none> <none>

tomcat-67df6cd4d6-2lmm5 0/1 ContainerCreating 0 53s <none> k8s-node01 <none> <none>

tomcat-67df6cd4d6-88pw9 0/1 ContainerCreating 0 53s <none> k8s-node01 <none> <none>

[root@k8s-master tomcat]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-764c6bcc78-pslfv 1/1 Running 0 8m19s

tomcat-67df6cd4d6-2lmm5 1/1 Running 0 3m3s

tomcat-67df6cd4d6-88pw9 1/1 Running 0 3m3s

[root@k8s-master tomcat]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-764c6bcc78-pslfv 1/1 Running 0 8m29s 10.244.1.7 k8s-node01 <none> <none>

tomcat-67df6cd4d6-2lmm5 1/1 Running 0 3m13s 10.244.1.8 k8s-node01 <none> <none>

tomcat-67df6cd4d6-88pw9 1/1 Running 0 3m13s 10.244.1.9 k8s-node01 <none> <none>

[root@k8s-master tomcat]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 32m

nginx NodePort 10.98.125.95 <none> 80:31526/TCP 8m37s

tomcat NodePort 10.103.148.4 <none> 8080:31549/TCP 3m21s

Prepare a test page

[root@k8s-master tomcat]# kubectl exec -it tomcat-67df6cd4d6-2lmm5 -- /bin/bash

root@tomcat-67df6cd4d6-2lmm5:/usr/local/tomcat# mkdir webapps/ROOT

root@tomcat-67df6cd4d6-2lmm5:/usr/local/tomcat# echo "helm test" > webapps/ROOT/index.jsp

root@tomcat-67df6cd4d6-2lmm5:/usr/local/tomcat# exit

exit

[root@k8s-master tomcat]# kubectl exec -it tomcat-67df6cd4d6-88pw9 -- /bin/bash

root@tomcat-67df6cd4d6-88pw9:/usr/local/tomcat# mkdir webapps/ROOT

root@tomcat-67df6cd4d6-88pw9:/usr/local/tomcat# echo "helm tteessstt" > webapps/ROOT/index.jsp

root@tomcat-67df6cd4d6-88pw9:/usr/local/tomcat# exit

exit
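The two interactive sessions above can also be scripted. The following is only a sketch: the function name `write_test_page` is hypothetical, and it assumes the pods carry the label given as the selector (the Deployment in this chart uses `app: tomcat`) and that the container's working directory is /usr/local/tomcat, as the prompts above show. It uses the `kubectl exec POD -- COMMAND` form recommended by the deprecation warning.

```shell
# Sketch: write a test page into every pod matched by a label selector.
# Assumption: the selector (e.g. app=tomcat) matches the chart's pods.
write_test_page() {
  selector="$1"
  text="$2"
  # `-o name` prints one pod/<name> entry per line.
  for pod in $(kubectl get pods -l "$selector" -o name); do
    # Everything after `--` runs inside the container; the page text is
    # passed as $1 to the inner shell, and includes the pod name so the
    # replicas can be told apart when accessed through the Service.
    kubectl exec "$pod" -- sh -c \
      'mkdir -p webapps/ROOT && printf "%s\n" "$1" > webapps/ROOT/index.jsp' \
      sh "$text from $pod"
  done
}
```

Example call: `write_test_page app=tomcat "helm test"`.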

Visit k8s_node_IP:31549
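The same check can be made from the command line. This is a minimal sketch: the function name `fetch_via_nodeport` is hypothetical, and it assumes the Service exposes a single port and that `curl` is installed on the host.

```shell
# Sketch: look up a Service's NodePort and fetch the page through a node IP.
# Assumption: the Service has exactly one port entry (index 0).
fetch_via_nodeport() {
  svc="$1"
  node_ip="$2"
  # Read the allocated NodePort instead of hard-coding it.
  node_port=$(kubectl get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}')
  curl -s "http://${node_ip}:${node_port}/"
}
```

Example call: `fetch_via_nodeport tomcat 192.168.50.51`. Repeating the call should show the two test pages alternating as requests reach different pods.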

Delete the release

[root@k8s-master tomcat]# helm delete tomcat

release "tomcat" uninstalled

[root@k8s-master tomcat]# helm ls

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

nginx default 1 2023-08-18 16:19:59.976996747 +0800 CST deployed nginx-0.1.0 1.16.0

Upgrade (re-apply after editing the YAML file)

[root@k8s-master tomcat]# helm install tomcat .

NAME: tomcat

LAST DEPLOYED: Fri Aug 18 16:35:43 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=tomcat,app.kubernetes.io/instance=tomcat" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace default $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace default port-forward $POD_NAME 8080:$CONTAINER_PORT

[root@k8s-master tomcat]# helm ls

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

nginx default 1 2023-08-18 16:19:59.976996747 +0800 CST deployed nginx-0.1.0 1.16.0

tomcat default 1 2023-08-18 16:35:43.123037795 +0800 CST deployed tomcat-0.1.0 1.16.0

[root@k8s-master tomcat]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-764c6bcc78-pslfv 1/1 Running 0 15m

tomcat-67df6cd4d6-9vsdk 1/1 Running 0 16s

tomcat-67df6cd4d6-l5m6w 1/1 Running 0 16s

[root@k8s-master tomcat]# vim templates/deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: tomcat
  name: tomcat
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tomcat
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: tomcat
    spec:
      containers:
      - image: tomcat:8
        name: tomcat
        resources: {}
status: {}

[root@k8s-master tomcat]# helm upgrade tomcat .

Check the result

[root@k8s-master tomcat]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-764c6bcc78-pslfv 1/1 Running 0 16m

tomcat-67df6cd4d6-9vsdk 1/1 Running 0 61s

tomcat-67df6cd4d6-l5m6w 1/1 Running 0 61s

tomcat-67df6cd4d6-zxxq5 1/1 Running 0 7s

[root@k8s-master tomcat]# helm ls

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

nginx default 1 2023-08-18 16:19:59.976996747 +0800 CST deployed nginx-0.1.0 1.16.0

tomcat default 2 2023-08-18 16:36:37.215658642 +0800 CST deployed tomcat-0.1.0 1.16.0

Roll back to an earlier revision

[root@k8s-master tomcat]# helm rollback tomcat 1

Rollback was a success! Happy Helming!

[root@k8s-master tomcat]# helm ls

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

nginx default 1 2023-08-18 16:19:59.976996747 +0800 CST deployed nginx-0.1.0 1.16.0

tomcat default 3 2023-08-18 16:37:06.005122073 +0800 CST deployed tomcat-0.1.0 1.16.0

[root@k8s-master tomcat]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-764c6bcc78-pslfv 1/1 Running 0 17m

tomcat-67df6cd4d6-9vsdk 1/1 Running 0 99s

tomcat-67df6cd4d6-l5m6w 1/1 Running 0 99s

tomcat-67df6cd4d6-zxxq5 0/1 Terminating 0 45s
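Before rolling back, it can help to list the recorded revisions with `helm history`. The sketch below wraps both steps; the function name `rollback_to` is hypothetical.

```shell
# Sketch: show a release's revision history, then roll back to a chosen revision.
rollback_to() {
  release="$1"
  revision="$2"
  # List every recorded revision so the target can be checked first.
  helm history "$release" || return 1
  # The rollback itself is recorded as a new revision
  # (revision 3 in the transcript above).
  helm rollback "$release" "$revision"
}
```

Example call: `rollback_to tomcat 1`.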

6.6、Rendering templates with variables

Test that the templates render correctly

[root@k8s-master tomcat]# helm install --dry-run tomcat .
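Besides `--dry-run`, a chart can be checked without contacting the cluster at all. The sketch below, with the hypothetical function name `check_chart`, runs `helm lint` and then prints the rendered manifests with `helm template`.

```shell
# Sketch: validate a chart locally, then print the manifests it would produce.
check_chart() {
  chart_dir="$1"
  release="$2"
  # Stop early if the chart has structural problems.
  helm lint "$chart_dir" || return 1
  # Render the templates locally; nothing is installed.
  helm template "$release" "$chart_dir"
}
```

Example call from inside the chart directory: `check_chart . tomcat`.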

Define the variables in values.yaml

[root@k8s-master tomcat]# vim values.yaml

replicas: 2
image: tomcat
tag: 8
label: tomcat
port: 8080

[root@k8s-master tomcat]# vim templates/deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: tomcat
  name: {{ .Release.Name }}-dp
spec:
  replicas: {{ .Values.replicas }}
  selector:
    matchLabels:
      app: {{ .Values.label }}
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: {{ .Values.label }}
    spec:
      containers:
      - image: {{ .Values.image }}:{{ .Values.tag }}
        name: tomcat
        resources: {}
status: {}

[root@k8s-master tomcat]# vim templates/service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: tomcat
  name: {{ .Release.Name }}-svc
spec:
  ports:
  - port: 8080
    protocol: TCP
    targetPort: {{ .Values.port }}
  selector:
    app: {{ .Values.label }}
  type: NodePort
status:
  loadBalancer: {}

The variables used in deployment.yaml and service.yaml all refer to values defined beforehand in values.yaml.

.Release.Name is the release name, that is, the name given after helm install.
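Values from values.yaml can also be overridden on the command line with `--set`, which takes precedence over the file. The sketch below previews the effect on the replica count without installing anything; the function name `preview_replicas` is hypothetical, and it assumes the chart reads a top-level `replicas` value as defined above.

```shell
# Sketch: render the chart with an overridden replica count and show the result.
# Assumption: the templates reference .Values.replicas, as in this chart.
preview_replicas() {
  release="$1"
  chart_dir="$2"
  count="$3"
  # --set overrides the value from values.yaml for this rendering only.
  helm template "$release" "$chart_dir" --set "replicas=$count" | grep 'replicas:'
}
```

Example call: `preview_replicas tomcat . 3`. The chart's own values.yaml is read by default, so it does not need to be passed with `-f` unless a different values file is wanted.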

[root@k8s-master tomcat]# helm delete tomcat

release "tomcat" uninstalled

Delete the unneeded files from the templates directory, keeping only the two test files

[root@k8s-master tomcat]# ls templates/

deployment.yaml hpa.yaml NOTES.txt service.yaml

_helpers.tpl ingress.yaml serviceaccount.yaml tests

[root@k8s-master tomcat]# cd templates/

[root@k8s-master templates]# rm -rf _helpers.tpl

[root@k8s-master templates]# rm -rf hpa.yaml

[root@k8s-master templates]# rm -rf ingress.yaml

[root@k8s-master templates]# rm -rf NOTES.txt

[root@k8s-master templates]# rm -rf serviceaccount.yaml

[root@k8s-master templates]# rm -rf tests

[root@k8s-master templates]# ll

total 8

-rw-r--r--. 1 root root 486 Aug 18 16:42 deployment.yaml

-rw-r--r--. 1 root root 269 Aug 18 16:42 service.yaml

[root@k8s-master tomcat]# helm install -f values.yaml tomcat .

NAME: tomcat

LAST DEPLOYED: Fri Aug 18 16:54:53 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

[root@k8s-master tomcat]# helm ls

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

nginx default 1 2023-08-18 16:19:59.976996747 +0800 CST deployed nginx-0.1.0 1.16.0

tomcat default 1 2023-08-18 16:54:53.595821157 +0800 CST deployed tomcat-0.1.0 1.16.0

Check the release status

[root@k8s-master tomcat]# helm status tomcat

NAME: tomcat

LAST DEPLOYED: Fri Aug 18 16:54:53 2023

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

[root@k8s-master tomcat]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-764c6bcc78-pslfv 1/1 Running 0 35m

tomcat-dp-67df6cd4d6-nv4km 1/1 Running 0 27s

tomcat-dp-67df6cd4d6-qjwns 1/1 Running 0 27s

tomcat-dp-788798c7dd-9cq65 0/1 Terminating 0 8m40s

tomcat-dp-788798c7dd-wzfdl 0/1 Terminating 0 8m40s

[root@k8s-master tomcat]# kubectl describe pod tomcat-dp-67df6cd4d6-nv4km

Name: tomcat-dp-67df6cd4d6-nv4km

Namespace: default

Priority: 0

Node: k8s-node01/192.168.50.51

Start Time: Fri, 18 Aug 2023 16:54:53 +0800

Labels: app=tomcat

pod-template-hash=67df6cd4d6

Annotations: <none>

Status: Running

IP: 10.244.1.15

IPs:

IP: 10.244.1.15

Controlled By: ReplicaSet/tomcat-dp-67df6cd4d6

Containers:

tomcat:

Container ID: docker://1049092320d8c7221284fc0b0e35c74afed6cdd2570673efb806dd0db6868247

Image: tomcat:8

Image ID: docker-pullable://tomcat@sha256:421c2a2c73f3e339c787beaacde0f7bbc30bba957ec653d41a77d08144c6a028

Port: <none>

Host Port: <none>

State: Running

Started: Fri, 18 Aug 2023 16:54:54 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-7l5jb (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-7l5jb:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-7l5jb

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 55s default-scheduler Successfully assigned default/tomcat-dp-67df6cd4d6-nv4km to k8s-node01

Normal Pulled 54s kubelet Container image "tomcat:8" already present on machine

Normal Created 54s kubelet Created container tomcat

Normal Started 54s kubelet Started container tomcat
