💡💡💡本文主要内容:详细介绍了暗光低光数据集检测整个过程,从数据集到训练模型到结果可视化分析,以及如何优化提升检测性能。

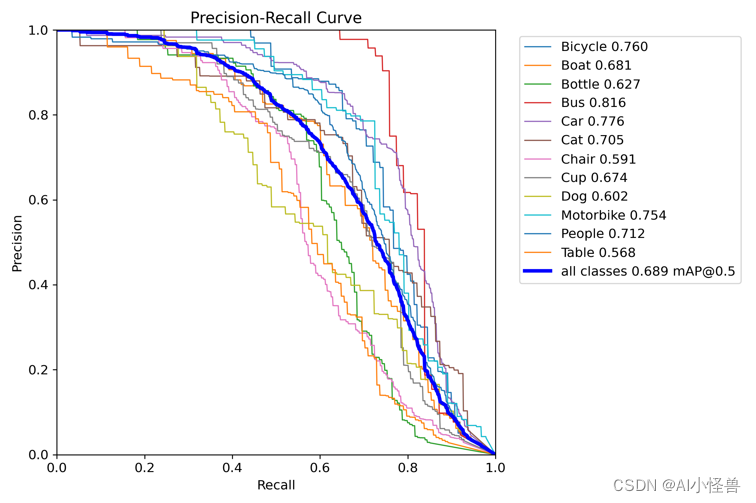

💡💡💡加入 自研CPMS注意力 mAP@0.5由原始的0.682提升至0.689

1.暗光低光数据集ExDark介绍

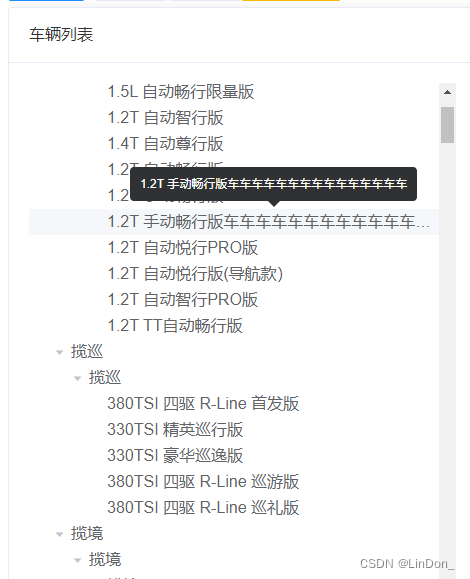

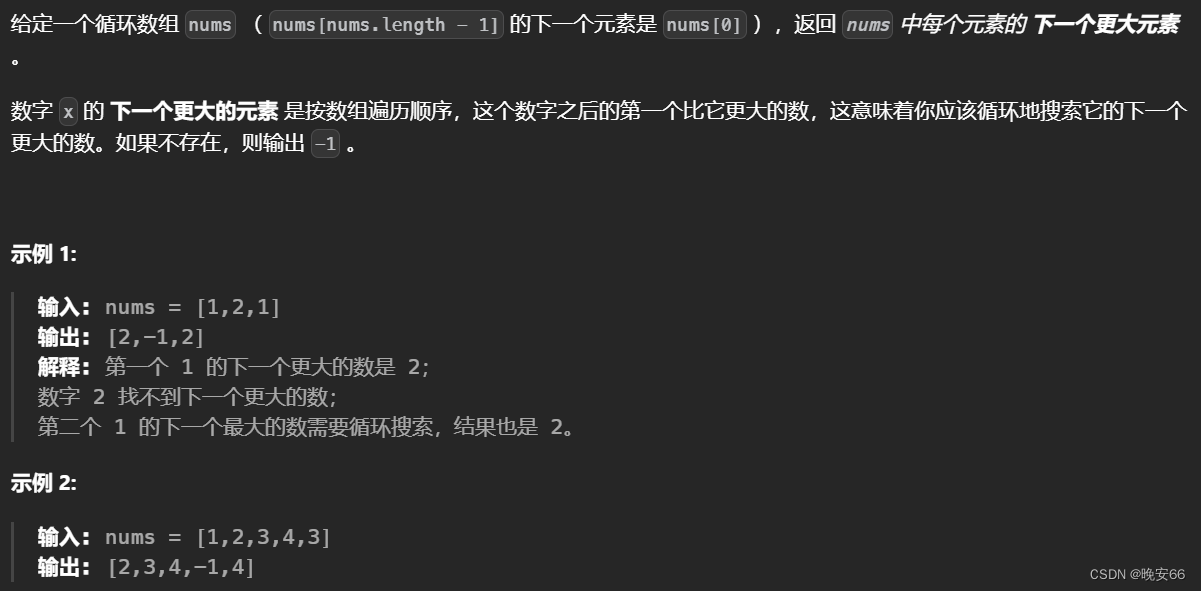

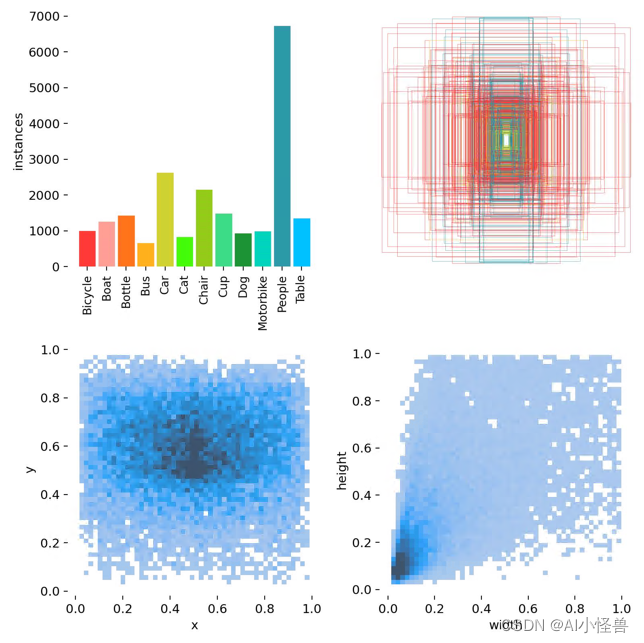

低光数据集使用ExDark,该数据集是一个专门在低光照环境下拍摄出针对低光目标检测的数据集,包括从极低光环境到暮光环境等10种不同光照条件下的图片,包含图片训练集5891张,测试集1472张,12个类别。

1.Bicycle 2.Boat 3.Bottle 4.Bus 5.Car 6.Cat 7.Chair 8.Cup 9.Dog 10.Motorbike 11.People 12.Table

细节图:

2.基于YOLOv8的暗光低光检测

2.1 修改ExDark_yolo.yaml

path: ./data/ExDark_yolo/ # dataset root dir

train: images/train # train images (relative to 'path') 1411 images

val: images/val # val images (relative to 'path') 458 images

#test: images/test # test images (optional) 937 imagesnames:0: Bicycle1: Boat2: Bottle3: Bus4: Car5: Cat6: Chair7: Cup8: Dog9: Motorbike10: People11: Table2.2 开启训练

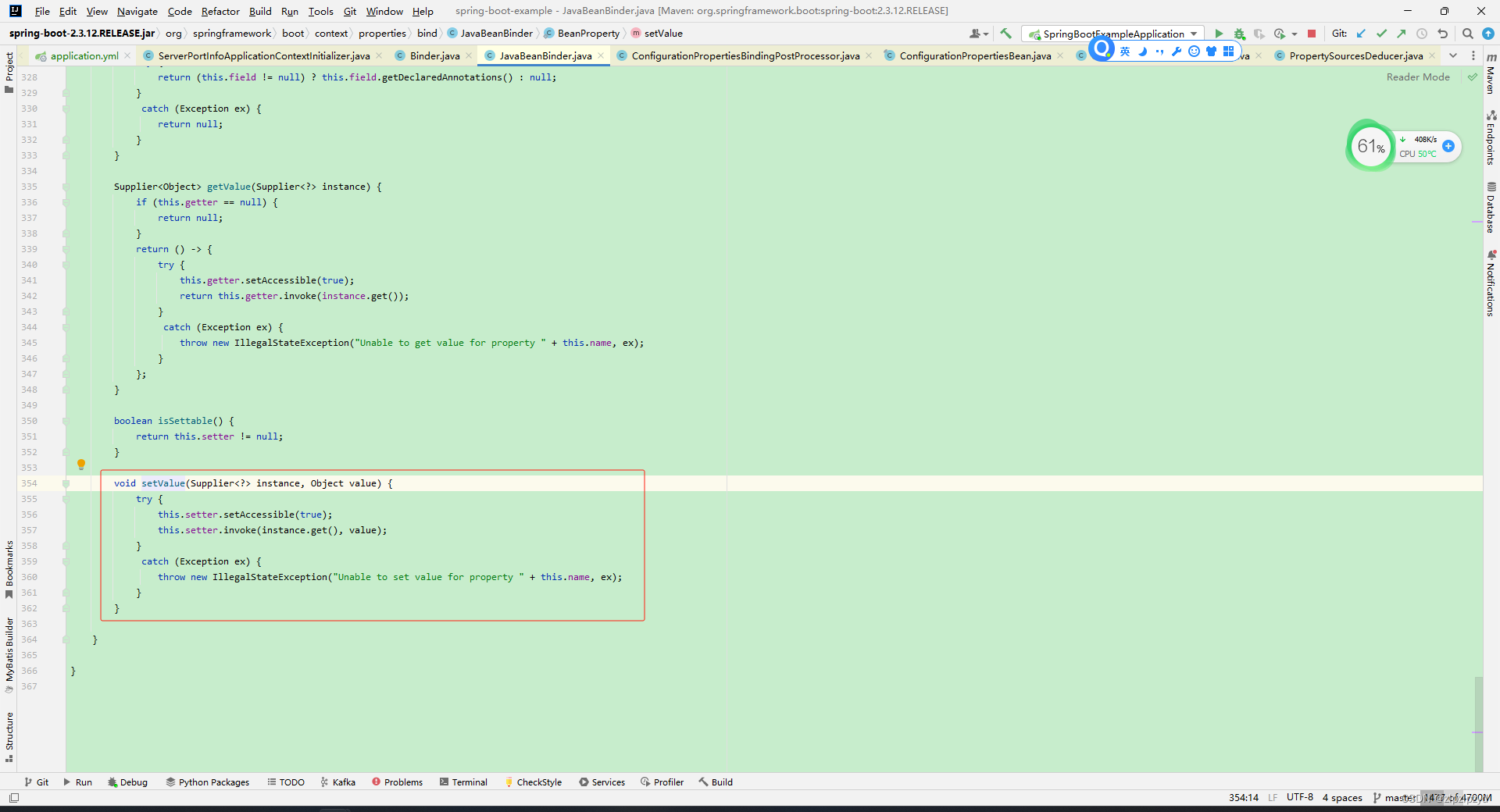

import warnings

warnings.filterwarnings('ignore')

from ultralytics import YOLOif __name__ == '__main__':model = YOLO('ultralytics/cfg/models/v8/yolov8.yaml')model.train(data='data/ExDark_yolo/ExDark_yolo.yaml',cache=False,imgsz=640,epochs=200,batch=16,close_mosaic=10,workers=0,device='0',optimizer='SGD', # using SGDproject='runs/train',name='exp',)3.结果可视化分析

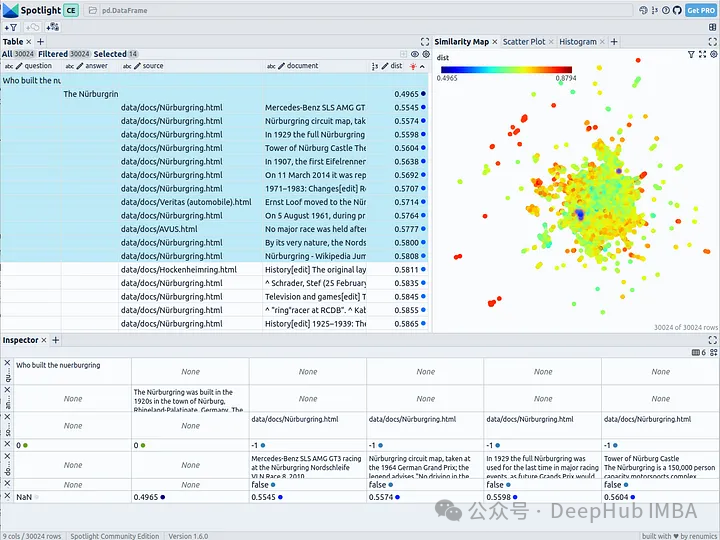

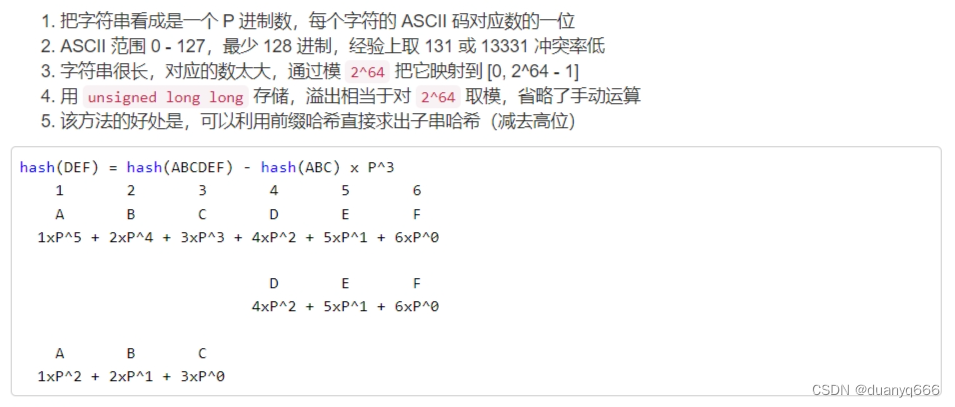

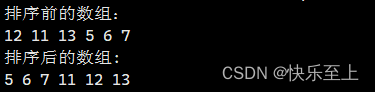

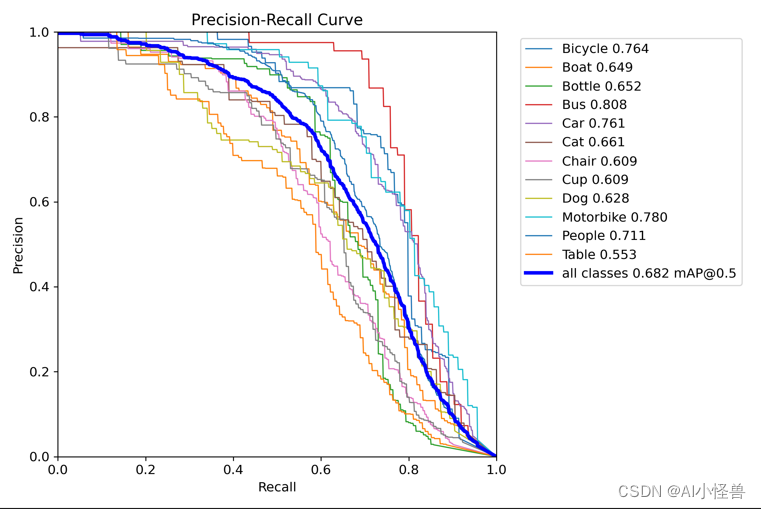

YOLOv8 summary: 225 layers, 3012500 parameters, 0 gradients, 8.2 GFLOPsClass Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 24/24 [00:25<00:00, 1.05s/it]all 737 2404 0.743 0.609 0.682 0.427Bicycle 737 129 0.769 0.697 0.764 0.498Boat 737 143 0.69 0.56 0.649 0.349Bottle 737 174 0.761 0.587 0.652 0.383Bus 737 62 0.854 0.742 0.808 0.64Car 737 311 0.789 0.672 0.761 0.5Cat 737 95 0.783 0.568 0.661 0.406Chair 737 232 0.725 0.513 0.609 0.363Cup 737 181 0.725 0.53 0.609 0.375Dog 737 94 0.634 0.617 0.628 0.421Motorbike 737 91 0.766 0.692 0.78 0.491People 737 744 0.789 0.603 0.711 0.398Table 737 148 0.637 0.52 0.553 0.296F1_curve.png:F1分数与置信度(x轴)之间的关系。F1分数是分类的一个衡量标准,是精确率和召回率的调和平均函数,介于0,1之间。越大越好。

TP:真实为真,预测为真;

FN:真实为真,预测为假;

FP:真实为假,预测为真;

TN:真实为假,预测为假;

精确率(precision)=TP/(TP+FP)

召回率(Recall)=TP/(TP+FN)

F1=2*(精确率*召回率)/(精确率+召回率)

PR_curve.png :PR曲线中的P代表的是precision(精准率),R代表的是recall(召回率),其代表的是精准率与召回率的关系。

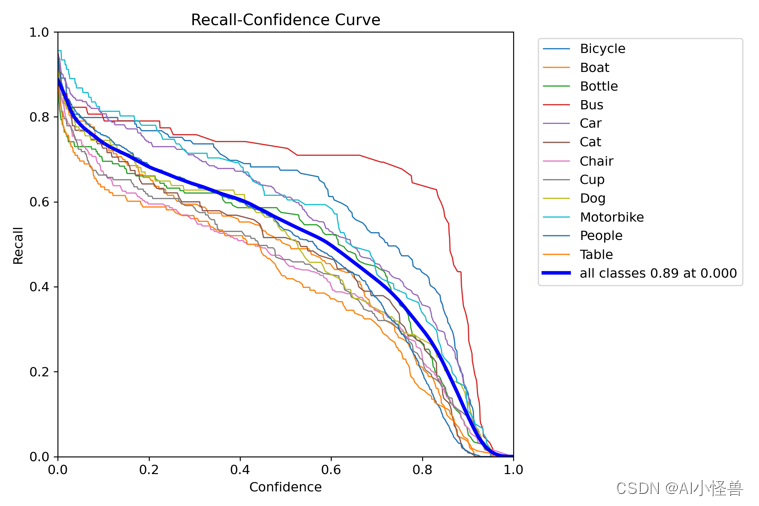

R_curve.png :召回率与置信度之间关系

results.png

mAP_0.5:0.95表示从0.5到0.95以0.05的步长上的平均mAP.

预测结果:

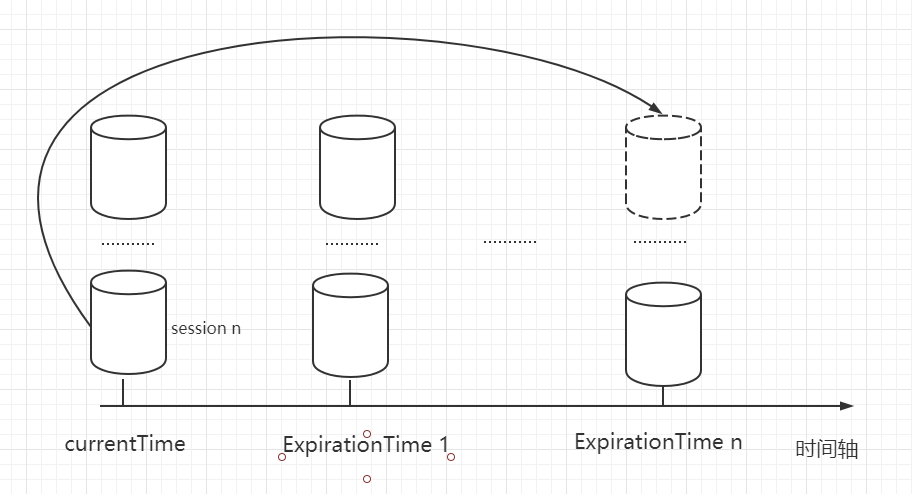

4.如何优化模型

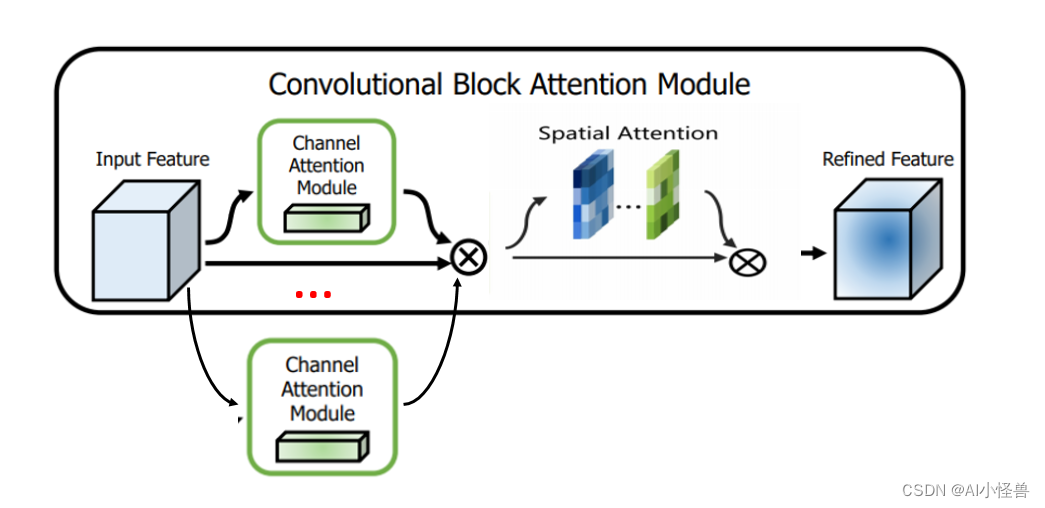

4.1 自研CPMS注意力

YOLOv8独家原创改进:原创自研 | 创新自研CPMS注意力,多尺度通道注意力具+多尺度深度可分离卷积空间注意力,全面升级CBAM-CSDN博客

自研CPMS, 多尺度通道注意力具+多尺度深度可分离卷积空间注意力,全面升级CBAM

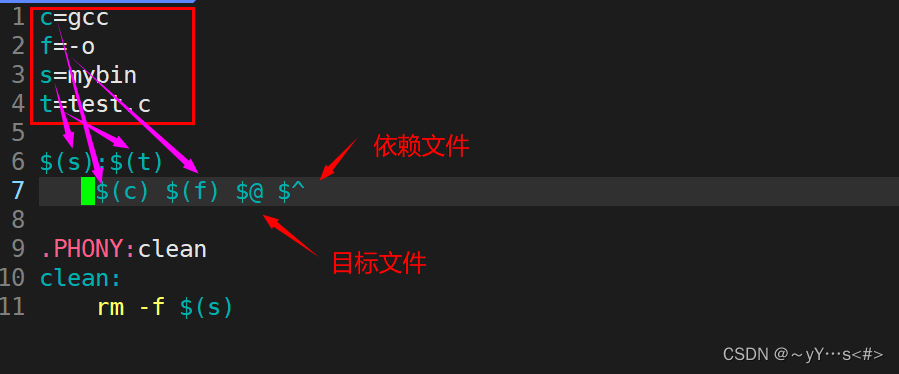

4.2 对应yaml

# Ultralytics YOLO 🚀, AGPL-3.0 license

# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect# Parameters

nc: 80 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'# [depth, width, max_channels]n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPss: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPsm: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPsl: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPsx: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs# YOLOv8.0n backbone

backbone:# [from, repeats, module, args]- [-1, 1, Conv, [64, 3, 2]] # 0-P1/2- [-1, 1, Conv, [128, 3, 2]] # 1-P2/4- [-1, 3, C2f, [128, True]]- [-1, 1, Conv, [256, 3, 2]] # 3-P3/8- [-1, 6, C2f, [256, True]]- [-1, 1, Conv, [512, 3, 2]] # 5-P4/16- [-1, 6, C2f, [512, True]]- [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32- [-1, 3, C2f, [1024, True]]- [-1, 1, SPPF, [1024, 5]] # 9- [-1, 1, CPMS, [1024]] # 10# YOLOv8.0n head

head:- [-1, 1, nn.Upsample, [None, 2, 'nearest']]- [[-1, 6], 1, Concat, [1]] # cat backbone P4- [-1, 3, C2f, [512]] # 13- [-1, 1, nn.Upsample, [None, 2, 'nearest']]- [[-1, 4], 1, Concat, [1]] # cat backbone P3- [-1, 3, C2f, [256]] # 16 (P3/8-small)- [-1, 1, Conv, [256, 3, 2]]- [[-1, 13], 1, Concat, [1]] # cat head P4- [-1, 3, C2f, [512]] # 19 (P4/16-medium)- [-1, 1, Conv, [512, 3, 2]]- [[-1, 10], 1, Concat, [1]] # cat head P5- [-1, 3, C2f, [1024]] # 22 (P5/32-large)- [[16, 19, 22], 1, Detect, [nc]] # Detect(P3, P4, P5)

4.3 实验结果分析

mAP@0.5由原始的0.682提升至0.689

YOLOv8_CPMS summary: 244 layers, 3200404 parameters, 0 gradients, 8.4 GFLOPsClass Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 12/12 [00:25<00:00, 2.09s/it]all 737 2404 0.723 0.622 0.689 0.434Bicycle 737 129 0.724 0.721 0.76 0.475Boat 737 143 0.702 0.609 0.681 0.372Bottle 737 174 0.729 0.587 0.627 0.383Bus 737 62 0.801 0.758 0.816 0.636Car 737 311 0.798 0.682 0.776 0.508Cat 737 95 0.744 0.653 0.705 0.456Chair 737 232 0.695 0.534 0.591 0.341Cup 737 181 0.732 0.559 0.674 0.437Dog 737 94 0.532 0.553 0.602 0.39Motorbike 737 91 0.795 0.67 0.754 0.497People 737 744 0.785 0.622 0.712 0.4Table 737 148 0.634 0.514 0.568 0.311

5.系列篇

系列篇1: DCNv4结合SPPF ,助力自动驾驶

系列篇2:自研CPMS注意力,效果优于CBAM