GraphicBuffer诞生以及跨进程传递重认识

引言

对于Android的Graphics图形堆栈这块,自我感觉看了蛮多的博客啊文档(不管是比较老的还是新一点的)。但是仅仅只是看了而已,都是蜻蜓点水,没有进行记录也没有总结。所以每次哪怕阅读过程中产业了很多疑问,也就是产生了疑问而已。这次我要说不,我要把我产生的疑问都统统的解决掉,或者说绝大部分的疑问都做到在能力范围之内去解决或者找到答案。那么这篇博客重点要记录的是GraphicBuffer诞生以及跨进程传递的本质是什么,特别是App端怎么能刚好importer到SF端产生的GraphicBuffer(Android 12以前的默认方式)。并且这块的博客也比较少,所以希望能用一篇博客阐述明白这个问题!

一 重新认识GraphicBuffer

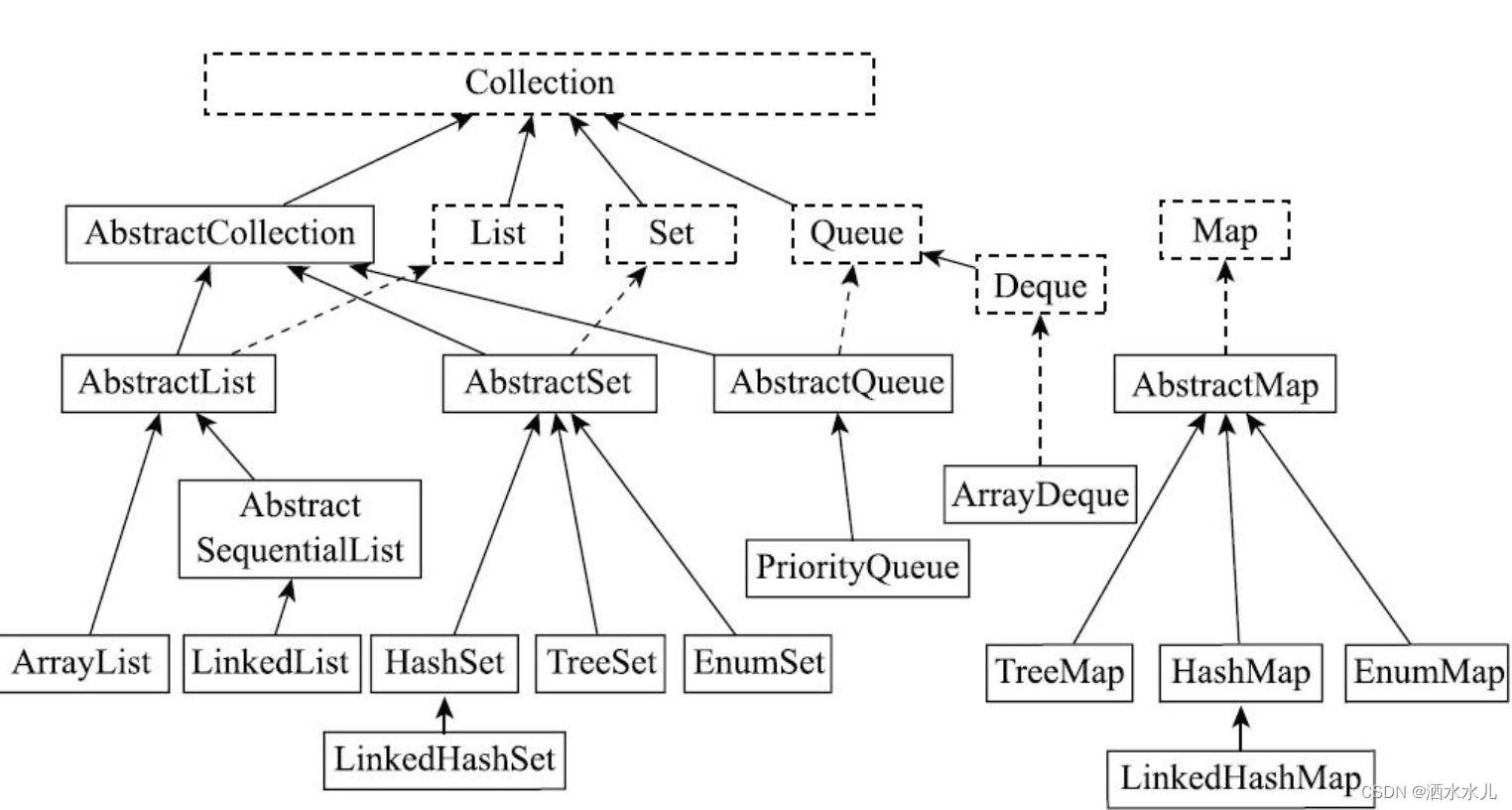

在学习一个知识点之前,我们需要做的是先认识,再了解,再理解。所以我们在阅读SurfaceFlinger HardwareComposer以及gralloc相关代码的过程中,我们经常会遇到native_handle private_handle_t ANativeWindowBuffer ANativeWindow GraphicBuffer Surface等等一系列和memory相关的struct和class,他们相互之间到底是什么区别,又有什么联系呢?

本文从struct/class的结构角度分析下上述类型之间的关联.概括来说,

-

native_handle private_handle_t ANativeWindowBuffer GraphicBuffer这四个struct/class所描述的是一块memory,

-

而ANativeWindow 和Surface所描述的是一系列上述memeory的组合和对buffer的操作方法.有的struct/class在比较低的level使用,和平台相关,而另外一些在比较高的level使用,和平台无关,还有一些介于低/高level之间,用以消除平台相关性,让Android可以方便的运行在不同的平台上.

下面让我们依次来看下上述struct/class的定义:

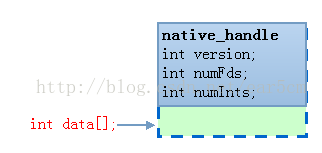

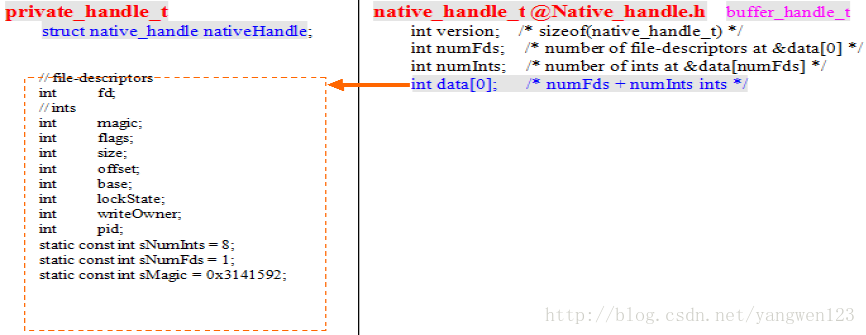

1.1 native_handle_t

system/core/libcutils/include/cutils/native_handle.h

typedef struct native_handle

{ int version; //设置为结构体native_handle的大小,用来标识结构体native_handle_t的版本int numFds; //标识结构体native_handle_t所包含的文件描述符的个数,这些文件描述符保存在成员变量data所指向的一块缓冲区中int numInts; //表示结构体native_handle_t所包含的整数值的个数,这些整数保存在成员变量data所指向的一块缓冲区中int data[0]; //指向的一块缓冲区

} native_handle_t;

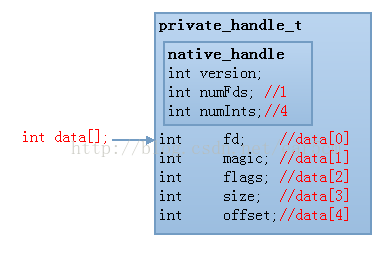

1.2 private_handle_t

private_handle_t用来描述一块缓冲区,Android对缓冲区的定义提供了C和C++两种方式,C语言编译器下的定义:

hardware/libhardware/modules/gralloc/gralloc_priv.hstruct private_handle_t {struct native_handle nativeHandle;enum {PRIV_FLAGS_FRAMEBUFFER = 0x00000001};int fd; //指向一个文件描述符,这个文件描述符要么指向帧缓冲区设备,要么指向一块匿名共享内存int magic;int flags;//用来描述一个缓冲区的标志,当一个缓冲区的标志值等于PRIV_FLAGS_FRAMEBUFFER的时候,就表示它是在帧缓冲区中分配的。int size;//用来描述一个缓冲区的大小int offset;//用来描述一个缓冲区的偏移地址int base;//用来描述一个缓冲区的实际地址int pid;//用来描述一个缓冲区的创建者的PID// 因为native_handle的data成员是一个大小为0的数组,所以data[0]其实就是指向了fd,data[1]指向magic,以此类推. // 上面提到我们可以把native_handle看成是一个纯虚的基类,那么在private_handle_t这个派生类中,numFds=1 numInts=6.

};C++编译器下的定义:

struct private_handle_t : public native_handle {enum {PRIV_FLAGS_FRAMEBUFFER = 0x00000001};int fd; //指向一个文件描述符,这个文件描述符要么指向帧缓冲区设备,要么指向一块匿名共享内存int magic;//指向一个魔数,它的值由静态成员变量sMagic来指定,用来标识一个private_handle_t结构体。int flags;//用来描述一个缓冲区的标志,它的值要么等于0,要么等于PRIV_FLAGS_FRAMEBUFFERint size;//用来描述一个缓冲区的大小。int offset;//用来描述一个缓冲区的偏移地址。int base;//用来描述一个缓冲区的实际地址,它是通过成员变量offset来计算得到的。int pid;//用来描述一个缓冲区的创建者的PID。static const int sNumInts = 6; //包含有6个整数static const int sNumFds = 1; //包含有1个文件描述符static const int sMagic = 0x3141592;

};

gralloc分配的buffer都可以用一个private_handle_t来描述,同时也可以用一个native_handle来描述.在不同的平台的实现上,private_handle_t可能会有不同的定义,所以private_handle_t在各个模块之间传递的时候很不方便,而如果用native_handle的身份来传递,就可以消除平台的差异性.在HardwareComposer中,由SurfaceFlinger传给hwc的handle即是native_handle类型,而hwc作为平台相关的模块,他需要知道native_handle中各个字段的具体含义,所以hwc往往会将native_handle指针转化为private_handle_t指针来使用。

二 native_handle_t和private_handle_t的相互转换在GraphicBuffer跨进程中的作用

地球人都知道,GraphicBuffer跨进程传输的杀手锏依肯的是Binder机制然后结合它自己实现的unflatten和flatten。这个一切的起源那就说来话长了,但是木得办法,吃这个饭的,再苦再累也得受着。哎!

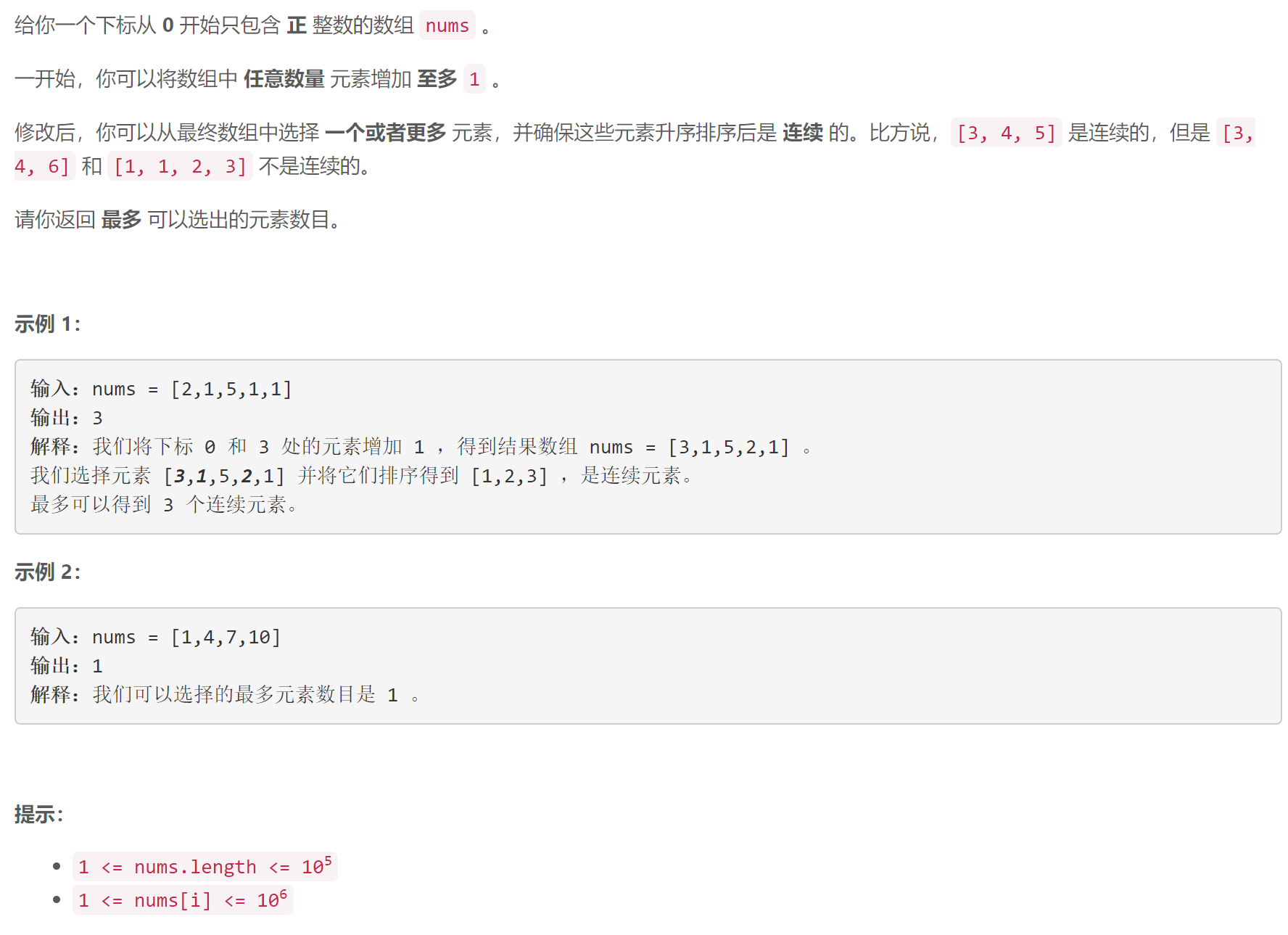

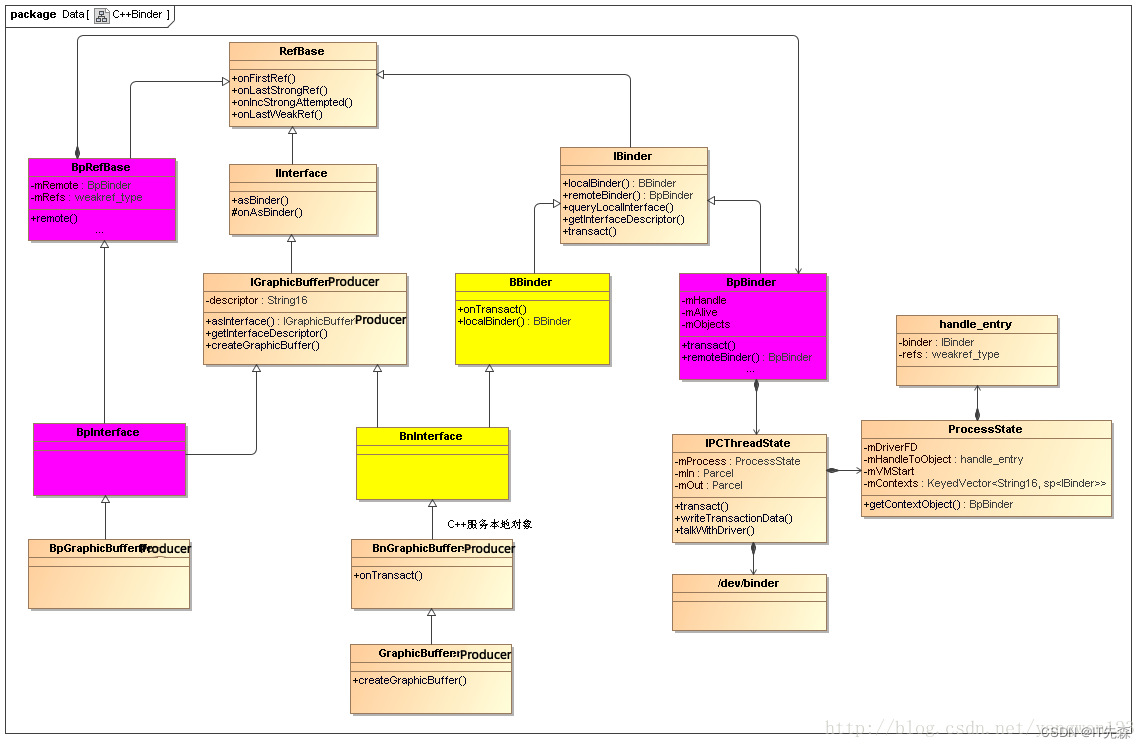

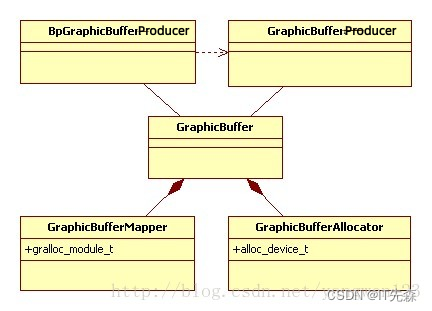

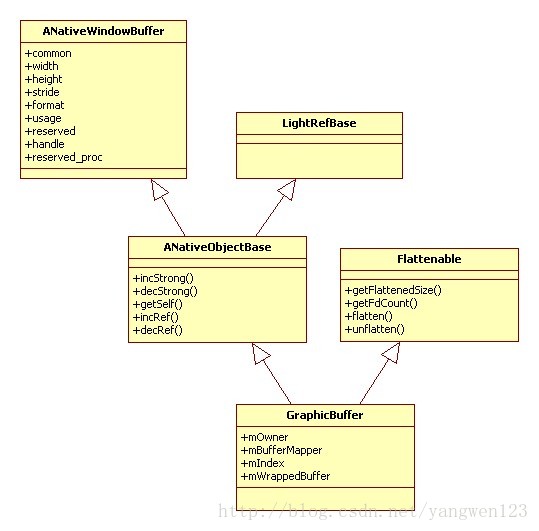

我们在分析以前,看几个重磅的类图!

上图是Android系统定的义GraphicBuffer数据类型来描述一块图形buffer,该对象可以跨进程传输!

由于IGraphicBufferProducer是基于Binder进程间通信框架设计的,因此该类的实现分客户端进程和服务端进程两方面。从上图可以知道,客户端进程通过BpGraphicBufferpRroducer对象请求服务端进程中的GraphicBufferProducer对象来创建GraphicBuffer对象。

那如何获取IGraphicBufferProducer的远程Binder代理对象呢?IGraphicBufferProducer被定义为无名Binder对象,并没有注册到ServiceManager进程中,但SurfaceFlinger是有名Binder对象,因此可以通过SurfaceFlinger创建IGraphicBufferProducer的本地对象GraphicBufferProducer,并返回其远程代理对象BpGraphicBuffer Producer给客户端进程。那客户端进程又是如何请求SurfaceFlinger创建IGraphicBufferProucer的本地对象的呢?客户端进程首先从ServiceManager进程中查询SurfaceFlinger的远程代理对象BpSurfaceComposer:

用一句话来概括总结IGraphicBufferProducer代理端在App端的获取是通过传递匿名Binder实现的,它是Activity在申请创建对应的Surface的时候被创建的。这个不是本篇博客的重点。

二.GraphicBuffer是如何跨进程传递的

在Android中GraphicBuffer跨进程传递,通常牵涉到如下几个进程之间的传递App到SurfaceFlinger,然后到grallc hal之间,下面我们分别来介绍GraphicBuffer如何在这个进程中传递的。

2.1 App端通过IGraphicBufferProducer申请GraphicBuffer

通过前面我们的吧唧吧唧应该知道了,在App端通过createSurface在SF端创建对应的Layer并且返回IGraphBufferProducer代理端以后,App端就可以申请对应的GraphicBuffer图元了。

图形内存的分配核心在于 Surface.dequeueBuffer流程,它大概可以概括为如下几个步骤:

-

Surface.dequeueBuffer会调用 BufferQueueProducer.dequeueBuffer 去 SurfaceFlinger 端获取BufferSlot数组中可用Slot的下标值

- 这个 BufferSlot 如果没有GraphicBuffer,就会去new一个,并在构造函数中申请图形缓存,并把图形缓存映射到当前进程

- 同时把 BufferQueueProducer::dequeueBuffer 返回值的标记位设置为 BUFFER_NEEDS_REALLOCATION

-

BufferQueueProducer.dequeueBuffer 的返回值如果带有 BUFFER_NEEDS_REALLOCATION标记,会调用 BufferQueueProducer.requestBuffer 获取 GraphicBuffer,同时把图形缓存映射到当前进程

其调用过程,我们就不过多的一行行分析,我们简易的看下伪代码:

Surface.dequeueBuffer(...)//App端进程

| BpGraphicBufferProducer.dequeueBuffer(...)//接口层

| BufferQueueProducer.dequeueBuffer(...)//SF进程端

| 1.dequeueBuffer 函数参数outSlot指针带回一个BufferSlot数组的下标 返回值返回标记位,但并未返回 GraphicBuffer

| 2.dequeueBuffer 函数中,在获取的 BufferSlot 没有GraphicBuffer时,会new一个GraphicBuffer,同时返回值的标记为 BUFFER_NEEDS_REALLOCATION

| new GraphicBuffer(...)//GraphicBuffer 构造函数中会调用initWithSize,内部调用分配图形缓存的代码

| initWithSize(...)

| GraphicBufferAllocator.allocate(...)

| allocateHelper(...)

| GrallocXAllocator.allocate(...)//hwbinder调用

| IAllocator::getService()->allocate(...)//此时还在SF进程

| 1.之后的代码需要看厂家的具体实现,最后无非是调用到内核驱动层分配内存,比如调用ion驱动层分配ion内存

| 2.SF 和 IAllocator 服务怎么传递 共享内存的,转“进程间图传递图形buffer详解

| 3.回调函数中调用 IMapper.importBuffer(...)//将申请的到的buffer通过mmap把ion内存映射到SF所在的进程

| 在 dequeueBuffer 返回值的标记为 BUFFER_NEEDS_REALLOCATION 时

| App端需要调用 requestBuffer,获取 GraphicBuffer 对象

| 同时,把 SurfaceFlinger 分配的图形缓存,映射到App进程

| BpGraphicBufferProducer->requestBuffer(buf, &gbuf)//此时在App端的接口层

| BufferQueueProducer.requestBuffer(...)//SF进程,请求返回 GraphicBuffer 对象

|

上面的传递过程涉及到两个很重要的跨进程传输,分别是SF和gralloc service,以及App和SF端。这里我们重点关注App和SF端的GraphicBuffer的跨进程传递逻辑:

-

SurfaceFlinger 进程端 requestBuffer 代码非常简单,仅仅是把 dequeueBuffer 过程中分配的对象赋值给参数 gbuf ,传递给 App

-

那么,图形缓存的 fd 是怎么传到App端的,App又是怎么映射的图形缓存呢?

核心在 BpGraphicBufferProducer.requestBuffer 函数中 GraphicBuffer 对象的构建过程:status_t result =remote()->transact(REQUEST_BUFFER, data, &reply);接下来GraphicBuffer 传输过程,见App进程和SF间图传递图形buffer详解*buf = new GraphicBuffer(); result = reply.read(**buf);read 过程会调用 GraphicBuffer.unflattenGraphicBuffer.unflatten 函数内部调用了 GraphicBufferMapper.importBuffer -

内部也是调用IMapper.importBuffer,最终使用 mmap 把内存映射到当前进程

调用 mmap 过程

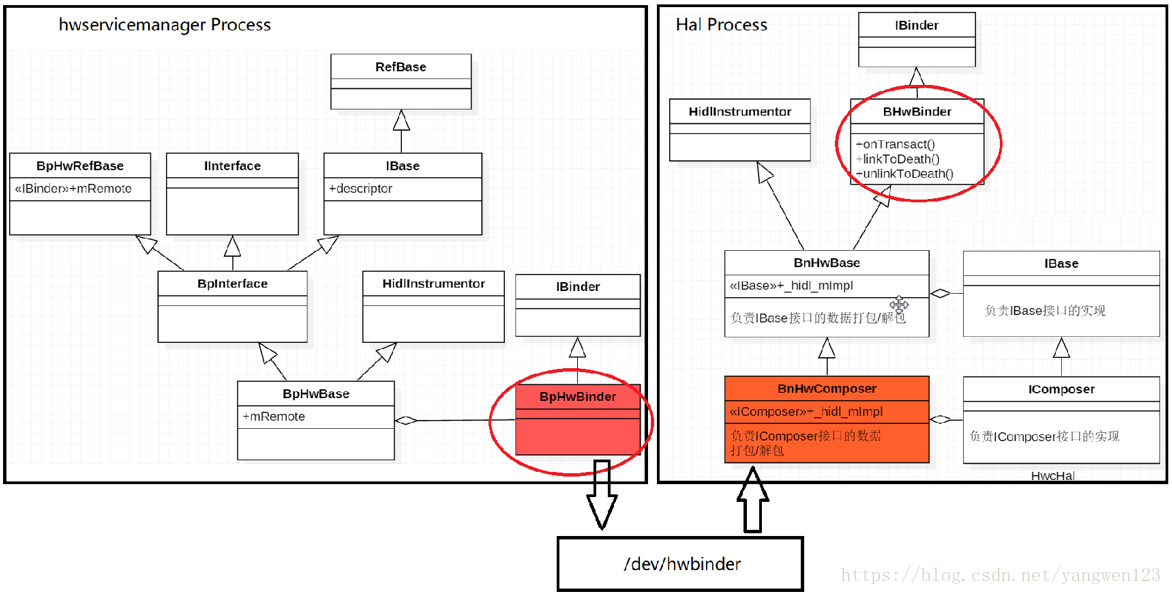

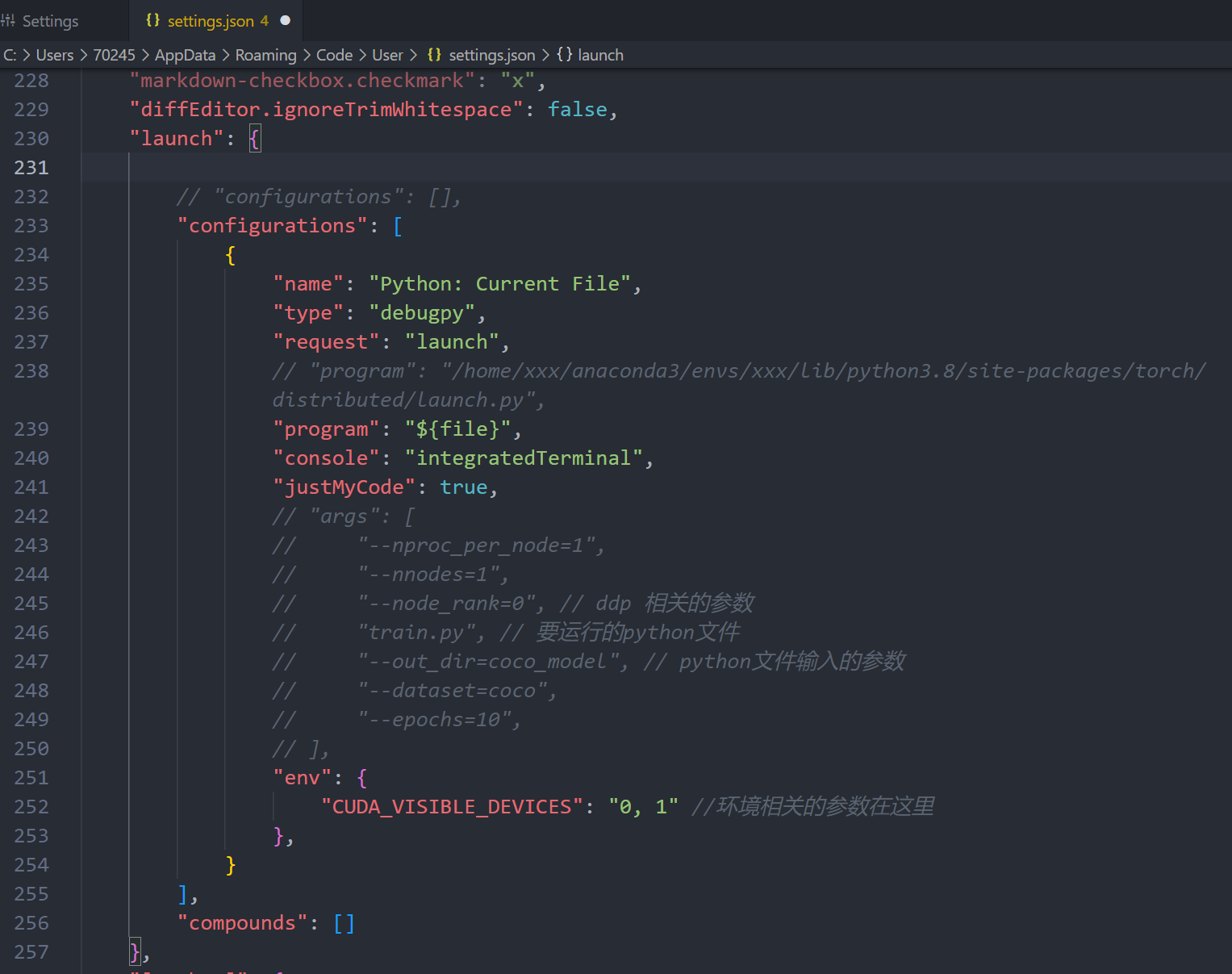

2.2 SF进程和IAllocator服务之间传递GraphicBuffer

IAllocator服务全称为android.hardware.graphics.allocator@4.0::IAllocator/default(这个也不一定,需要看具体版本或者ROM的策略),这个在高通平台上的进程名为:vendor.qti.hardware.display.allocator-service。接下来我们一步步分析GraphicBuffer是怎么在SF和IAllocator服务之间传递的。

2.2.1 SF向IAllocator通过Allocate申请构建GraphicBuffer

GraphicBuffer::GraphicBuffer(...)GraphicBuffer::initWithSize(...)GraphicBufferAllocator& allocator = GraphicBufferAllocator::get()allocator.allocate(...)GraphicBufferAllocator::allocate(...)//GraphicBufferAllocator.cppGraphicBufferAllocator::allocateHelper(...)Gralloc2Allocator::allocate(...)//Gralloc2.cpp// frameworks/native/libs/ui/Gralloc2.cpp

status_t Gralloc2Allocator::allocate(std::string requestorName, uint32_t width, uint32_t height,android::PixelFormat format, uint32_t layerCount,uint64_t usage, uint32_t bufferCount, uint32_t* outStride,buffer_handle_t* outBufferHandles, bool importBuffers) const {

//...//===================关键代码============auto ret = mAllocator->allocate(descriptor, bufferCount,[&](const auto& tmpError, const auto& tmpStride,const auto& tmpBuffers) {// const auto& tmpBuffers 是个 hidl_handle 类型error = static_cast<status_t>(tmpError);if (tmpError != Error::NONE) {return;}if (importBuffers) {for (uint32_t i = 0; i < bufferCount; i++) {error = mMapper.importBuffer(tmpBuffers[i],&outBufferHandles[i]);if (error != NO_ERROR) {for (uint32_t j = 0; j < i; j++) {mMapper.freeBuffer(outBufferHandles[j]);outBufferHandles[j] = nullptr;}return;}}} else {//....}*outStride = tmpStride;});//...return (ret.isOk()) ? error : static_cast<status_t>(kTransactionError);

}上述的代码核心有两点:

- SF向Allocate申请构建buffer_handle_t,此时的SF还不能使用这块buffer

- SF通过importBuffer将这块buffer映射到自己的进程,然后SF就阔以使用这块了

2.2.2 allocator服务端的hidl接口实现

关于hidl中binder的实现,可以类比Binder,HWBinder的实现也是类似的。

在看具体实现前,我们先看几个重要的定义:

//system/libhidl/base/include/hidl/HidlSupport.h

struct hidl_handle {hidl_handle();~hidl_handle();hidl_handle(const native_handle_t *handle);// copy constructor.hidl_handle(const hidl_handle &other);// move constructor.hidl_handle(hidl_handle &&other) noexcept;// assignment operatorshidl_handle &operator=(const hidl_handle &other);hidl_handle &operator=(const native_handle_t *native_handle);hidl_handle &operator=(hidl_handle &&other) noexcept;void setTo(native_handle_t* handle, bool shouldOwn = false);const native_handle_t* operator->() const;// implicit conversion to const native_handle_t*operator const native_handle_t *() const;// explicit conversionconst native_handle_t *getNativeHandle() const;// offsetof(hidl_handle, mHandle) exposed since mHandle is private.static const size_t kOffsetOfNativeHandle;private:void freeHandle();details::hidl_pointer<const native_handle_t> mHandle;bool mOwnsHandle;uint8_t mPad[7];

};//system/libhidl/base/HidlSupport.cpp

// explicit conversion

const native_handle_t *hidl_handle::getNativeHandle() const {return mHandle;

}这里我们不看具体的allocate函数实现,重点看数据传输过程:

//out/soong/.intermediates/hardware/interfaces/graphics/allocator/2.0/android.hardware.graphics.allocator@2.0_genc++_headers/gen/android/hardware/graphics/allocator/2.0/IAllocator.husing allocate_cb = std::function<void(::android::hardware::graphics::mapper::V2_0::Error error, uint32_t stride, const ::android::hardware::hidl_vec<::android::hardware::hidl_handle>& buffers)>;//out/soong/.intermediates/hardware/interfaces/graphics/allocator/4.0/android.hardware.graphics.allocator@4.0_genc++/gen/android/hardware/graphics/allocator/4.0/AllocatorAll.cpp

// 这部分代码是 hidl 接口编译完成后,out目录自动生成的代码,源码目录下没有

// Methods from ::android::hardware::graphics::allocator::V2_0::IAllocator follow.

::android::status_t BnHwAllocator::_hidl_allocate(::android::hidl::base::V1_0::BnHwBase* _hidl_this,const ::android::hardware::Parcel &_hidl_data,::android::hardware::Parcel *_hidl_reply,TransactCallback _hidl_cb) {//...

//========================调用服务端真正的实现=====================::android::hardware::Return<void> _hidl_ret = static_cast<IAllocator*>(_hidl_this->getImpl().get())->allocate(*descriptor, count, [&](const auto &_hidl_out_error, const auto &_hidl_out_stride, const auto &_hidl_out_buffers) {if (_hidl_callbackCalled) {LOG_ALWAYS_FATAL("allocate: _hidl_cb called a second time, but must be called once.");}_hidl_callbackCalled = true;

//===============函数调用完成后,开始写返回数据========================::android::hardware::writeToParcel(::android::hardware::Status::ok(), _hidl_reply);_hidl_err = _hidl_reply->writeInt32((int32_t)_hidl_out_error);if (_hidl_err != ::android::OK) { goto _hidl_error; }// 返回数据 tmpStride 的值_hidl_err = _hidl_reply->writeUint32(_hidl_out_stride);if (_hidl_err != ::android::OK) { goto _hidl_error; }size_t _hidl__hidl_out_buffers_parent;_hidl_err = _hidl_reply->writeBuffer(&_hidl_out_buffers, sizeof(_hidl_out_buffers), &_hidl__hidl_out_buffers_parent);if (_hidl_err != ::android::OK) { goto _hidl_error; }size_t _hidl__hidl_out_buffers_child;_hidl_err = ::android::hardware::writeEmbeddedToParcel(_hidl_out_buffers,_hidl_reply,_hidl__hidl_out_buffers_parent,0 /* parentOffset */, &_hidl__hidl_out_buffers_child);if (_hidl_err != ::android::OK) { goto _hidl_error; }//关键代码====传输上边的回调函数的参数 const auto& tmpBuffers 的每个元素, 数据类型是 hidl_handle 类型for (size_t _hidl_index_0 = 0; _hidl_index_0 < _hidl_out_buffers.size(); ++_hidl_index_0) {// 关键函数 android::hardware::writeEmbeddedToParcel_hidl_err = ::android::hardware::writeEmbeddedToParcel(_hidl_out_buffers[_hidl_index_0],_hidl_reply,_hidl__hidl_out_buffers_child,_hidl_index_0 * sizeof(::android::hardware::hidl_handle));if (_hidl_err != ::android::OK) { goto _hidl_error; }}

//...if (_hidl_err != ::android::OK) { return; }_hidl_cb(*_hidl_reply);});_hidl_ret.assertOk();if (!_hidl_callbackCalled) {LOG_ALWAYS_FATAL("allocate: _hidl_cb not called, but must be called once.");}return _hidl_err;

}::android::hardware::Return<void> BpHwAllocator::_hidl_allocate(::android::hardware::IInterface *_hidl_this, ::android::hardware::details::HidlInstrumentor *_hidl_this_instrumentor, const ::android::hardware::hidl_vec<uint32_t>& descriptor, uint32_t count, allocate_cb _hidl_cb) {#ifdef __ANDROID_DEBUGGABLE__bool mEnableInstrumentation = _hidl_this_instrumentor->isInstrumentationEnabled();const auto &mInstrumentationCallbacks = _hidl_this_instrumentor->getInstrumentationCallbacks();#else(void) _hidl_this_instrumentor;#endif // __ANDROID_DEBUGGABLE__::android::ScopedTrace PASTE(___tracer, __LINE__) (ATRACE_TAG_HAL, "HIDL::IAllocator::allocate::client");#ifdef __ANDROID_DEBUGGABLE__if (UNLIKELY(mEnableInstrumentation)) {std::vector<void *> _hidl_args;_hidl_args.push_back((void *)&descriptor);_hidl_args.push_back((void *)&count);for (const auto &callback: mInstrumentationCallbacks) {callback(InstrumentationEvent::CLIENT_API_ENTRY, "android.hardware.graphics.allocator", "2.0", "IAllocator", "allocate", &_hidl_args);}}#endif // __ANDROID_DEBUGGABLE__::android::hardware::Parcel _hidl_data;::android::hardware::Parcel _hidl_reply;::android::status_t _hidl_err;::android::status_t _hidl_transact_err;::android::hardware::Status _hidl_status;_hidl_err = _hidl_data.writeInterfaceToken(BpHwAllocator::descriptor);if (_hidl_err != ::android::OK) { goto _hidl_error; }size_t _hidl_descriptor_parent;_hidl_err = _hidl_data.writeBuffer(&descriptor, sizeof(descriptor), &_hidl_descriptor_parent);if (_hidl_err != ::android::OK) { goto _hidl_error; }size_t _hidl_descriptor_child;_hidl_err = ::android::hardware::writeEmbeddedToParcel(descriptor,&_hidl_data,_hidl_descriptor_parent,0 /* parentOffset */, &_hidl_descriptor_child);if (_hidl_err != ::android::OK) { goto _hidl_error; }_hidl_err = _hidl_data.writeUint32(count);if (_hidl_err != ::android::OK) { goto _hidl_error; }_hidl_transact_err = ::android::hardware::IInterface::asBinder(_hidl_this)->transact(2 /* allocate */, _hidl_data, &_hidl_reply, 0, [&] (::android::hardware::Parcel& _hidl_reply) {::android::hardware::graphics::mapper::V2_0::Error _hidl_out_error;uint32_t _hidl_out_stride;const ::android::hardware::hidl_vec<::android::hardware::hidl_handle>* _hidl_out_buffers;_hidl_err = ::android::hardware::readFromParcel(&_hidl_status, _hidl_reply);if (_hidl_err != ::android::OK) { return; }if (!_hidl_status.isOk()) { return; }_hidl_err = _hidl_reply.readInt32((int32_t *)&_hidl_out_error);if (_hidl_err != ::android::OK) { return; }_hidl_err = _hidl_reply.readUint32(&_hidl_out_stride);if (_hidl_err != ::android::OK) { return; }size_t _hidl__hidl_out_buffers_parent;_hidl_err = _hidl_reply.readBuffer(sizeof(*_hidl_out_buffers), &_hidl__hidl_out_buffers_parent, reinterpret_cast<const void **>(&_hidl_out_buffers));if (_hidl_err != ::android::OK) { return; }size_t _hidl__hidl_out_buffers_child;_hidl_err = ::android::hardware::readEmbeddedFromParcel(const_cast<::android::hardware::hidl_vec<::android::hardware::hidl_handle> &>(*_hidl_out_buffers),_hidl_reply,_hidl__hidl_out_buffers_parent,0 /* parentOffset */, &_hidl__hidl_out_buffers_child);if (_hidl_err != ::android::OK) { return; }for (size_t _hidl_index_0 = 0; _hidl_index_0 < _hidl_out_buffers->size(); ++_hidl_index_0) {_hidl_err = ::android::hardware::readEmbeddedFromParcel(const_cast<::android::hardware::hidl_handle &>((*_hidl_out_buffers)[_hidl_index_0]),_hidl_reply,_hidl__hidl_out_buffers_child,_hidl_index_0 * sizeof(::android::hardware::hidl_handle));if (_hidl_err != ::android::OK) { return; }}_hidl_cb(_hidl_out_error, _hidl_out_stride, *_hidl_out_buffers);#ifdef __ANDROID_DEBUGGABLE__if (UNLIKELY(mEnableInstrumentation)) {std::vector<void *> _hidl_args;_hidl_args.push_back((void *)&_hidl_out_error);_hidl_args.push_back((void *)&_hidl_out_stride);_hidl_args.push_back((void *)_hidl_out_buffers);for (const auto &callback: mInstrumentationCallbacks) {callback(InstrumentationEvent::CLIENT_API_EXIT, "android.hardware.graphics.allocator", "2.0", "IAllocator", "allocate", &_hidl_args);}}#endif // __ANDROID_DEBUGGABLE__});if (_hidl_transact_err != ::android::OK) {_hidl_err = _hidl_transact_err;goto _hidl_error;}if (!_hidl_status.isOk()) { return _hidl_status; }return ::android::hardware::Return<void>();_hidl_error:_hidl_status.setFromStatusT(_hidl_err);return ::android::hardware::Return<void>(_hidl_status);

}::android::hardware::Return<void> BpHwAllocator::allocate(const ::android::hardware::hidl_vec<uint32_t>& descriptor, uint32_t count, allocate_cb _hidl_cb){::android::hardware::Return<void> _hidl_out = ::android::hardware::graphics::allocator::V2_0::BpHwAllocator::_hidl_allocate(this, this, descriptor, count, _hidl_cb);return _hidl_out;

}android::hardware::writeEmbeddedToParcel

// system/libhidl/transport/HidlBinderSupport.cpp

status_t writeEmbeddedToParcel(const hidl_handle &handle,Parcel *parcel, size_t parentHandle, size_t parentOffset) {//此处调用了 hwbinder/Parcel.cpp 的writeEmbeddedNativeHandle 函数status_t _hidl_err = parcel->writeEmbeddedNativeHandle(handle.getNativeHandle(),parentHandle,parentOffset + hidl_handle::kOffsetOfNativeHandle);return _hidl_err;

}// system/libhwbinder/Parcel.cpp

status_t Parcel::writeEmbeddedNativeHandle(const native_handle_t *handle,size_t parent_buffer_handle,size_t parent_offset)

{return writeNativeHandleNoDup(handle, true /* embedded */, parent_buffer_handle, parent_offset);

}status_t Parcel::writeNativeHandleNoDup(const native_handle_t *handle,bool embedded,size_t parent_buffer_handle,size_t parent_offset)

{

//...struct binder_fd_array_object fd_array {.hdr = { .type = BINDER_TYPE_FDA }, // 关键代码: BINDER_TYPE_FDA 类型,binder内核驱动代码对这个类型有专门的处理.num_fds = static_cast<binder_size_t>(handle->numFds),.parent = buffer_handle,.parent_offset = offsetof(native_handle_t, data),};return writeObject(fd_array);

}之后的处理逻辑就牵涉到binder驱动对 BINDER_TYPE_FDA 、BINDER_TYPE_FD 类型的处理,这个建后面的具体分析!

2.3 App进程同SF进程之间传递GraphicBuffer对象

这里我们先看SF进程如何相应App端的请求处理逻辑:

// frameworks/native/libs/gui/IGraphicBufferProducer.cpp

status_t BnGraphicBufferProducer::onTransact(uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{switch(code) {case REQUEST_BUFFER: {CHECK_INTERFACE(IGraphicBufferProducer, data, reply);int bufferIdx = data.readInt32();sp<GraphicBuffer> buffer;//关于SF怎么申请构建GraphicBuffer,这里不是本篇博客的重点讨论范围int result = requestBuffer(bufferIdx, &buffer);reply->writeInt32(buffer != nullptr);if (buffer != nullptr) {reply->write(*buffer);// GraphicBuffer 对象回写========!!!!!!!!!!!!!!=====}reply->writeInt32(result);return NO_ERROR;}

//...}

//...

}2.3.1 Parcel::write写对象流程

由于GraphicBuffer继承于Flattenable类,因此GraphicBuffer对象可以跨进程传输,服务进程端回写GraphicBuffer对象过程如下:

// frameworks/native/libs/binder/include/binder/Parcel.h

template<typename T>

status_t Parcel::write(const Flattenable<T>& val) {// 对象需要继承 Flattenableconst FlattenableHelper<T> helper(val);return write(helper);

}// frameworks/native/libs/binder/Parcel.cpp

status_t Parcel::write(const FlattenableHelperInterface& val)

{status_t err;// size if needed//写入数据长度const size_t len = val.getFlattenedSize();// val.getFdCount(); 这个值为 GraphicBuffer.mTransportNumFds// 从这个接口获取// GrallocMapper::getTransportSize(buffer_handle_t bufferHandle, uint32_t* outNumFds, uint32_t* outNumInts)const size_t fd_count = val.getFdCount();// 这个值为 GraphicBuffer.mTransportNumFds

//...........// 调用对象的 flatten 写到缓存中err = val.flatten(buf, len, fds, fd_count);// fd_count 不为0,需要写 fdfor (size_t i=0 ; i<fd_count && err==NO_ERROR ; i++) {err = this->writeDupFileDescriptor( fds[i] );}if (fd_count) {delete [] fds;}return err;

}GraphicBuffer对象的数据长度获取过程:

size_t GraphicBuffer::getFlattenedSize() const {return static_cast<size_t>(13 + (handle ? mTransportNumInts : 0)) * sizeof(int);

}

GraphicBuffer对象中的句柄个数获取过程:

size_t GraphicBuffer::getFdCount() const {return static_cast<size_t>(handle ? mTransportNumFds : 0);

}

由于val指向的是GraphicBuffer对象,因此将调用GraphicBuffer对象的flatten函数来写入native_handle等内容,GraphicBuffer类继承于Flattenable类,并实现了该类的flatten函数:

status_t GraphicBuffer::flatten(void*& buffer, size_t& size, int*& fds, size_t& count) const {size_t sizeNeeded = GraphicBuffer::getFlattenedSize();if (size < sizeNeeded) return NO_MEMORY;size_t fdCountNeeded = GraphicBuffer::getFdCount();if (count < fdCountNeeded) return NO_MEMORY;int32_t* buf = static_cast<int32_t*>(buffer);buf[0] = 'GB01';buf[1] = width;buf[2] = height;buf[3] = stride;buf[4] = format;buf[5] = static_cast<int32_t>(layerCount);buf[6] = int(usage); // low 32-bitsbuf[7] = static_cast<int32_t>(mId >> 32);buf[8] = static_cast<int32_t>(mId & 0xFFFFFFFFull);buf[9] = static_cast<int32_t>(mGenerationNumber);buf[10] = 0;buf[11] = 0;buf[12] = int(usage >> 32); // high 32-bitsif (handle) {buf[10] = int32_t(mTransportNumFds);buf[11] = int32_t(mTransportNumInts);memcpy(fds, handle->data, static_cast<size_t>(mTransportNumFds) * sizeof(int));memcpy(buf + 13, handle->data + handle->numFds,static_cast<size_t>(mTransportNumInts) * sizeof(int));}buffer = static_cast<void*>(static_cast<uint8_t*>(buffer) + sizeNeeded);size -= sizeNeeded;if (handle) {fds += mTransportNumFds;count -= static_cast<size_t>(mTransportNumFds);}return NO_ERROR;

}native_handle_t是上层抽象的可以在进程间传递的数据结构,对private_handle_t的抽象包装。

numFds=1表示有一个文件句柄:fd

numInts= 8表示后面跟了8个INT型的数据:magic,flags,size,offset,base,lockState,writeOwner,pid;.

我们继续来看下Parcel::writeDupFileDescriptor 写fd流程:

// frameworks/native/libs/binder/Parcel.cpp

status_t Parcel::writeDupFileDescriptor(int fd)

{int dupFd;if (status_t err = dupFileDescriptor(fd, &dupFd); err != OK) {return err;}//=============!!!!!!!!!!!!!===========status_t err = writeFileDescriptor(dupFd, true /*takeOwnership*/);if (err != OK) {close(dupFd);}return err;

}status_t Parcel::writeFileDescriptor(int fd, bool takeOwnership) {

//........#ifdef BINDER_WITH_KERNEL_IPC // frameworks/native/libs/binder/Android.bp 中定义了此宏 "-DBINDER_WITH_KERNEL_IPC",flat_binder_object obj;obj.hdr.type = BINDER_TYPE_FD;// 类型为 fd ,内核会自动创建fdobj.flags = 0;obj.binder = 0; /* Don't pass uninitialized stack data to a remote process */obj.handle = fd;obj.cookie = takeOwnership ? 1 : 0;return writeObject(obj, true);

#else // BINDER_WITH_KERNEL_IPCLOG_ALWAYS_FATAL("Binder kernel driver disabled at build time");(void)fd;(void)takeOwnership;return INVALID_OPERATION;

#endif // BINDER_WITH_KERNEL_IPC

}之后的代码详见见binder驱动对 BINDER_TYPE_FDA 、BINDER_TYPE_FD类型的处理。

2.3.2 Aoo端对SF写回的GraphicBuffer的处理

服务进程将创建的GraphicBuffer对象的成员变量handle写回到请求创建图形缓冲区的客户进程,这时客户进程通过以下方式就可以读取服务进程返回的关于创建图形buffer的信息数据。

//IGraphicBufferProducer.cppvirtual status_t requestBuffer(int bufferIdx, sp<GraphicBuffer>* buf) {Parcel data, reply;data.writeInterfaceToken(IGraphicBufferProducer::getInterfaceDescriptor());data.writeInt32(bufferIdx);status_t result =remote()->transact(REQUEST_BUFFER, data, &reply);if (result != NO_ERROR) {return result;}bool nonNull = reply.readInt32();if (nonNull) {*buf = new GraphicBuffer();result = reply.read(**buf);if(result != NO_ERROR) {(*buf).clear();return result;}}result = reply.readInt32();return result;}

eply为Parcel类型变量,该对象的read函数实现如下:

status_t Parcel::read(Flattenable& val) const

{// sizeconst size_t len = this->readInt32();const size_t fd_count = this->readInt32();// payloadvoid const* buf = this->readInplace(PAD_SIZE(len));if (buf == NULL)return BAD_VALUE;int* fds = NULL;if (fd_count) {fds = new int[fd_count];}status_t err = NO_ERROR;for (size_t i=0 ; i<fd_count && err==NO_ERROR ; i++) {fds[i] = dup(this->readFileDescriptor());if (fds[i] < 0) err = BAD_VALUE;}if (err == NO_ERROR) {err = val.unflatten(buf, len, fds, fd_count);}if (fd_count) {delete [] fds;}return err;

}

val同样指向的是GraphicBuffer对象,因此将调用GraphicBuffer对象的unflatten函数来读取服务进程返回来的数据内容,GraphicBuffer类继承于Flattenable类,并实现了该类的flatten函数:

status_t GraphicBuffer::unflatten(void const*& buffer, size_t& size, int const*& fds,size_t& count) {// Check if size is not smaller than buf[0] is supposed to take.if (size < sizeof(int)) {return NO_MEMORY;}int const* buf = static_cast<int const*>(buffer);// NOTE: it turns out that some media code generates a flattened GraphicBuffer manually!!!!!// see H2BGraphicBufferProducer.cppuint32_t flattenWordCount = 0;if (buf[0] == 'GB01') {// new version with 64-bits usage bitsflattenWordCount = 13;} else if (buf[0] == 'GBFR') {// old version, when usage bits were 32-bitsflattenWordCount = 12;} else {return BAD_TYPE;}if (size < 12 * sizeof(int)) {android_errorWriteLog(0x534e4554, "114223584");return NO_MEMORY;}const size_t numFds = static_cast<size_t>(buf[10]);const size_t numInts = static_cast<size_t>(buf[11]);// Limit the maxNumber to be relatively small. The number of fds or ints// should not come close to this number, and the number itself was simply// chosen to be high enough to not cause issues and low enough to prevent// overflow problems.const size_t maxNumber = 4096;if (numFds >= maxNumber || numInts >= (maxNumber - flattenWordCount)) {width = height = stride = format = usage_deprecated = 0;layerCount = 0;usage = 0;handle = nullptr;ALOGE("unflatten: numFds or numInts is too large: %zd, %zd", numFds, numInts);return BAD_VALUE;}const size_t sizeNeeded = (flattenWordCount + numInts) * sizeof(int);if (size < sizeNeeded) return NO_MEMORY;size_t fdCountNeeded = numFds;if (count < fdCountNeeded) return NO_MEMORY;if (handle) {// free previous handle if anyfree_handle();}if (numFds || numInts) {width = buf[1];height = buf[2];stride = buf[3];format = buf[4];layerCount = static_cast<uintptr_t>(buf[5]);usage_deprecated = buf[6];if (flattenWordCount == 13) {usage = (uint64_t(buf[12]) << 32) | uint32_t(buf[6]);} else {usage = uint64_t(usage_deprecated);}native_handle* h =native_handle_create(static_cast<int>(numFds), static_cast<int>(numInts));if (!h) {width = height = stride = format = usage_deprecated = 0;layerCount = 0;usage = 0;handle = nullptr;ALOGE("unflatten: native_handle_create failed");return NO_MEMORY;}memcpy(h->data, fds, numFds * sizeof(int));memcpy(h->data + numFds, buf + flattenWordCount, numInts * sizeof(int));handle = h;} else {width = height = stride = format = usage_deprecated = 0;layerCount = 0;usage = 0;handle = nullptr;}mId = static_cast<uint64_t>(buf[7]) << 32;mId |= static_cast<uint32_t>(buf[8]);mGenerationNumber = static_cast<uint32_t>(buf[9]);mOwner = ownHandle;if (handle != nullptr) {buffer_handle_t importedHandle;//这个地方很重要,非常重要,very importstatus_t err = mBufferMapper.importBuffer(handle, uint32_t(width), uint32_t(height),uint32_t(layerCount), format, usage, uint32_t(stride), &importedHandle);if (err != NO_ERROR) {width = height = stride = format = usage_deprecated = 0;layerCount = 0;usage = 0;handle = nullptr;ALOGE("unflatten: registerBuffer failed: %s (%d)", strerror(-err), err);return err;}native_handle_close(handle);native_handle_delete(const_cast<native_handle_t*>(handle));handle = importedHandle;mBufferMapper.getTransportSize(handle, &mTransportNumFds, &mTransportNumInts);}buffer = static_cast<void const*>(static_cast<uint8_t const*>(buffer) + sizeNeeded);size -= sizeNeeded;fds += numFds;count -= numFds;return NO_ERROR;

}

数据读取过程正好和上面的数据写入过程相反,由于服务进程发送了一个native_handle对象的内容到客户端进程,因此需要在客户端进程构造一个native_handle对象:

数据读取过程正好和上面的数据写入过程相反,由于服务进程发送了一个native_handle对象的内容到客户端进程,因此需要在客户端进程构造一个native_handle对象:

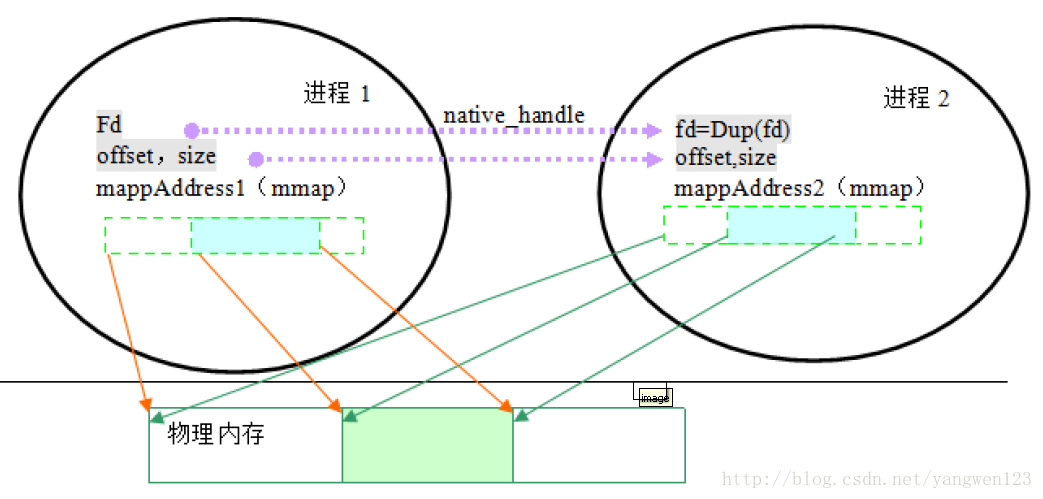

native_handle_t* native_handle_create(int numFds, int numInts)

{native_handle_t* h = malloc(sizeof(native_handle_t) + sizeof(int)*(numFds+numInts));h->version = sizeof(native_handle_t);h->numFds = numFds;h->numInts = numInts;return h;

}客户端进程读取到服务进程创建的图形buffer的描述信息native_handle后,通过GraphicBufferMapper对象mBufferMapper的registerBuffer函数将创建的图形buffer映射到客户端进程地址空间.这样就将Private_native_t中的数据:magic,flags,size,offset,base,lockState,writeOwner,pid复制到了客户端进程。服务端(SurfaceFlinger)分配了一段内存作为Surface的作图缓冲区,客户端怎样在这个作图缓冲区上绘图呢?两个进程间如何共享内存,这就需要GraphicBufferMapper将分配的图形缓冲区映射到客户端进程地址空间。对于共享缓冲区,他们操作同一物理地址的内存块。

2.4 App端和SF端对GraphicBuffer的两次importBuffer的实现

关于这个地方,原来我一直不能理解的是为啥App端能通过requestBuffer在SF端创建完成GraphicBuffer后,然后通过Binder回传过来的重新构建GraphicBuffer的buffer_handle_t能后续在vendor厂商实现的Iallocator服务里面能强换成private_handle_t,现在明白了,通过unflatten是把IAllactor的私有的private_handle_t强制构建了一个在APP端,虽然指针不是同一个,但是不同指针指向的数据是一模一样的,所以后续能在IAllacator中进行强制转换。

并且在importBuffer时候,会将此handle保存下来,供后续使用,如下:

//hardware/interfaces/graphics/mapper/2.0/utils/hal/include/mapper-hal/2.0/Mapper.hReturn<void> importBuffer(const hidl_handle& rawHandle,IMapper::importBuffer_cb hidl_cb) override {if (!rawHandle.getNativeHandle()) {hidl_cb(Error::BAD_BUFFER, nullptr);return Void();}native_handle_t* bufferHandle = nullptr;Error error = mHal->importBuffer(rawHandle.getNativeHandle(), &bufferHandle);if (error != Error::NONE) {hidl_cb(error, nullptr);return Void();}//将已经importBuffer的buffer存起来void* buffer = addImportedBuffer(bufferHandle);if (!buffer) {mHal->freeBuffer(bufferHandle);hidl_cb(Error::NO_RESOURCES, nullptr);return Void();}hidl_cb(error, buffer);return Void();}Return<Error> freeBuffer(void* buffer) override {//获取已经importBuffer的buffernative_handle_t* bufferHandle = getImportedBuffer(buffer);if (!bufferHandle) {return Error::BAD_BUFFER;}Error error = mHal->freeBuffer(bufferHandle);if (error == Error::NONE) {removeImportedBuffer(buffer);}return error;}//hardware/interfaces/graphics/mapper/2.0/utils/passthrough/include/mapper-passthrough/2.0/GrallocLoader.h

template <typename T>

class GrallocMapper : public T {protected:void* addImportedBuffer(native_handle_t* bufferHandle) override {return GrallocImportedBufferPool::getInstance().add(bufferHandle);}native_handle_t* removeImportedBuffer(void* buffer) override {return GrallocImportedBufferPool::getInstance().remove(buffer);}native_handle_t* getImportedBuffer(void* buffer) const override {return GrallocImportedBufferPool::getInstance().get(buffer);}const native_handle_t* getConstImportedBuffer(void* buffer) const override {return GrallocImportedBufferPool::getInstance().getConst(buffer);}

}; 关于这块,具体的调用流程,下面重学系列的博客分析的非常好,可以深入研究:

Android 重学系列 GraphicBuffer的诞生

2.5 linux内核部分,binder驱动对 BINDER_TYPE_FDA 、BINDER_TYPE_FD 类型的处理

我们看下Bidner驱动里面怎么对不同的FD进行不同的处理逻辑

//binder.c

static void binder_transaction(struct binder_proc *proc,struct binder_thread *thread,struct binder_transaction_data *tr, int reply,binder_size_t extra_buffers_size)

{//...case BINDER_TYPE_FD: {struct binder_fd_object *fp = to_binder_fd_object(hdr);//数据类型为 BINDER_TYPE_FD 时,调用了 binder_translate_fdint target_fd = binder_translate_fd(fp->fd, t, thread, in_reply_to);if (target_fd < 0) {return_error = BR_FAILED_REPLY;return_error_param = target_fd;return_error_line = __LINE__;goto err_translate_failed;}fp->pad_binder = 0;fp->fd = target_fd;binder_alloc_copy_to_buffer(&target_proc->alloc,t->buffer, object_offset,fp, sizeof(*fp));} break;case BINDER_TYPE_FDA: {struct binder_object ptr_object;binder_size_t parent_offset;struct binder_fd_array_object *fda =to_binder_fd_array_object(hdr);size_t num_valid = (buffer_offset - off_start_offset) /sizeof(binder_size_t);struct binder_buffer_object *parent =binder_validate_ptr(target_proc, t->buffer,&ptr_object, fda->parent,off_start_offset,&parent_offset,num_valid);if (!parent) {binder_user_error("%d:%d got transaction with invalid parent offset or type\n",proc->pid, thread->pid);return_error = BR_FAILED_REPLY;return_error_param = -EINVAL;return_error_line = __LINE__;goto err_bad_parent;}if (!binder_validate_fixup(target_proc, t->buffer,off_start_offset,parent_offset,fda->parent_offset,last_fixup_obj_off,last_fixup_min_off)) {binder_user_error("%d:%d got transaction with out-of-order buffer fixup\n",proc->pid, thread->pid);return_error = BR_FAILED_REPLY;return_error_param = -EINVAL;return_error_line = __LINE__;goto err_bad_parent;}//数据类型为 BINDER_TYPE_FDA 时,调用了 binder_translate_fdret = binder_translate_fd_array(fda, parent, t, thread, in_reply_to);if (ret < 0) {return_error = BR_FAILED_REPLY;return_error_param = ret;return_error_line = __LINE__;goto err_translate_failed;}last_fixup_obj_off = parent_offset;last_fixup_min_off =fda->parent_offset + sizeof(u32) * fda->num_fds;} break;//...

}binder_translate_fd_array 函数中对每一个fd都调用了 binder_translate_fd 函数,我们接着往下看

static int binder_translate_fd(int fd,struct binder_transaction *t,struct binder_thread *thread,struct binder_transaction *in_reply_to)

{struct binder_proc *proc = thread->proc;struct binder_proc *target_proc = t->to_proc;int target_fd;struct file *file;int ret;bool target_allows_fd;if (in_reply_to)target_allows_fd = !!(in_reply_to->flags & TF_ACCEPT_FDS);elsetarget_allows_fd = t->buffer->target_node->accept_fds;if (!target_allows_fd) {binder_user_error("%d:%d got %s with fd, %d, but target does not allow fds\n",proc->pid, thread->pid,in_reply_to ? "reply" : "transaction",fd);ret = -EPERM;goto err_fd_not_accepted;}file = fget(fd);//从fd获取 file 对象if (!file) {binder_user_error("%d:%d got transaction with invalid fd, %d\n",proc->pid, thread->pid, fd);ret = -EBADF;goto err_fget;}//se权限处理ret = security_binder_transfer_file(proc->tsk, target_proc->tsk, file);if (ret < 0) {ret = -EPERM;goto err_security;}//在目标进程中找到一个可用的fdtarget_fd = task_get_unused_fd_flags(target_proc, O_CLOEXEC);if (target_fd < 0) {ret = -ENOMEM;goto err_get_unused_fd;}// 调用task_fd_install将 file对象 关联到目标进程中的fdtask_fd_install(target_proc, target_fd, file);trace_binder_transaction_fd(t, fd, target_fd);binder_debug(BINDER_DEBUG_TRANSACTION, " fd %d -> %d\n",fd, target_fd);return target_fd;

err_get_unused_fd:

err_security:fput(file);

err_fget:

err_fd_not_accepted:return ret;

}总结

写到这里普法GraphicBuffer诞生以及跨进程传递的博文,就基本结束了,但是最后还是有必要对前面的博客进行一个收尾,总结一番。

-

SurfaceFlinger进程 和 IAllocator服务进程之间通过 hidl_handle 类型的数据传递 图形buffer共享内存的fd

- 数据传输中对 hidl_handle 类型数据特化处理,并把binder数据类型设置为 BINDER_TYPE_FDA

- binder内核对 BINDER_TYPE_FDA 类型数据特化处理

- 同时在 IAllocator.allocate 的回调函数中调用 IMapper.importBuffer 把内存映射到当前进程

-

App进程同SurfaceFlinger进程之间使用 GraphicBuffer 对象传递 图形buffer共享内存的fd

- 数据传输中对 GraphicBuffer 中的 native_handle_t 数据特化处理,并把binder数据类型设置为 BINDER_TYPE_FD

- binder内核对 BINDER_TYPE_FD 类型数据特化处理

-

同时在从binder读取数据创建GraphicBuffer对象时,调用 GraphicBuffer.unflatten,内部调用 IMapper.importBuffer 把内存映射到当前进程

补充后记

-

Android12 之后使用 BLASTBufferQueue ,虽然有些变化,但是理解了 GraphicBuffer 和 hidl_handle 传递 fd 的过程,这些都游刃有余

-

Android 的 aidl接口层、hidl接口层、binder bp 接口层 都隐藏了很多关键代码,导致看代码时,感觉总是云里雾里

- 像hidl接口,生成的大量代码,在out/soong目录下,仅仅看源码树目录下的代码根本找不到好吧。

各位,千山不改绿水长流,各位江湖见!

参阅博客:

- Android 重学系列 GraphicBuffer的诞生

- Android 进程间传递图形buffer原理

- Android中native_handle private_handle_t ANativeWindowBuffer ANativeWindow GraphicBuffer Surface的关系

- Android图形显示之硬件抽象层Gralloc

- GraphicBuffer、AHardwareBuffer、ANativeWindowBuffer关系

- Android 系统中AHardwareBuffer、ANativeWindowBuffer和GraphicBuffer的关系

![[力扣 Hot100]Day28 两数相加](https://img-blog.csdnimg.cn/direct/5bdba9fe66b34592a656017f053aa9cc.png)