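1. Kubernetes monitoring options

1.1 Heapster

Heapster is a monitoring and performance-analysis tool for container clusters, with native support for Kubernetes and CoreOS.

Kubernetes has a well-known monitoring agent, cAdvisor. It runs on every Kubernetes node and collects monitoring data for the host and its containers (CPU, memory, filesystem, network, uptime).

In newer versions, Kubernetes has integrated cAdvisor into the kubelet component, and each node can be queried directly over HTTP.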

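1.2 Weave Scope

Weave Scope monitors the state and resource usage of a range of resources in a Kubernetes cluster, shows the application topology, supports scaling, and lets you enter a container from the browser for debugging. Its features include:

- Interactive topology view

- Graph mode and table mode

- Filtering

- Search

- Real-time metrics

- Container troubleshooting

- Plugin extensions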

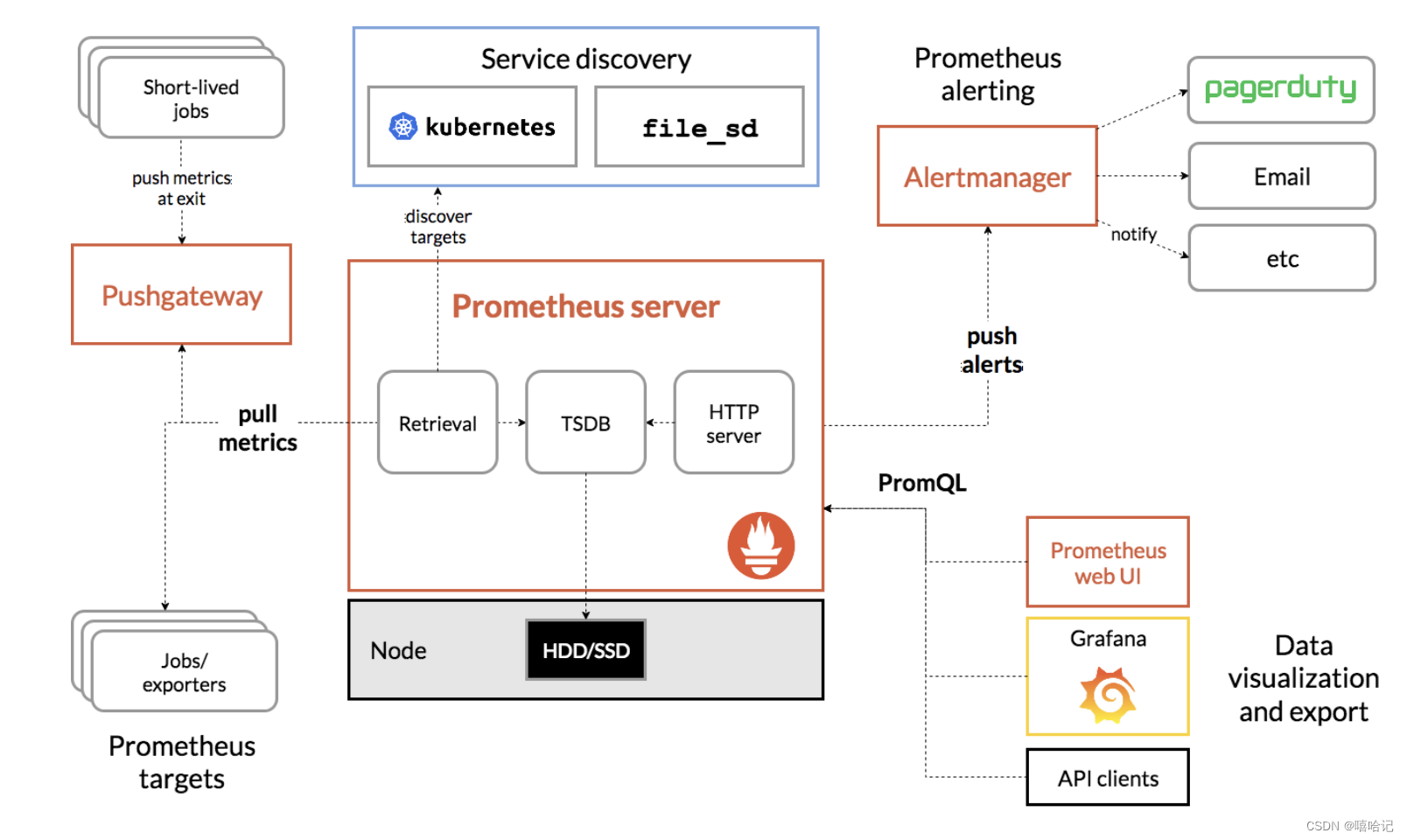

1.3 Prometheus

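Prometheus is an open-source system that combines monitoring, alerting, and a time-series database. It was originally developed at SoundCloud; as more and more companies adopted it, it became a standalone open-source project. Since then, many companies and organizations have adopted Prometheus as their monitoring and alerting tool.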

2. Installing Prometheus with custom configuration

2.1 Create the configuration files

2.1.1 Create the StorageClass for the provisioner

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs
parameters:
  archiveOnDelete: "false"

2.1.2 Create the RBAC resources for the provisioner

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: kube-system
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

2.1.3 Create the provisioner Deployment

kind: Deployment
apiVersion: apps/v1
metadata:
  namespace: kube-system
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccount: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          # image: quay.io/external_storage/nfs-client-provisioner:latest
          image: registry.cn-beijing.aliyuncs.com/pylixm/nfs-subdir-external-provisioner:v4.0.0
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 10.10.10.100
            - name: NFS_PATH
              value: /data/nfs/rw
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.10.10.100
            path: /data/nfs/rw

2.1.4 Create the ConfigMap (defines which resources Prometheus monitors)

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-monitoring
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    scrape_configs:
      - job_name: 'prometheus'
        static_configs:
          - targets: ['localhost:9090']
      - job_name: 'kubernetes-nodes'
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        kubernetes_sd_configs:
          - role: node
      - job_name: 'kubernetes-service'
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        kubernetes_sd_configs:
          - role: service
      - job_name: 'kubernetes-endpoints'
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        kubernetes_sd_configs:
          - role: endpoints
      - job_name: 'kubernetes-ingress'
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        kubernetes_sd_configs:
          - role: ingress
      - job_name: 'kubernetes-kubelet'
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        kubernetes_sd_configs:
          - role: node
        relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics
      - job_name: 'kubernetes-cadvisor'
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        kubernetes_sd_configs:
          - role: node
        relabel_configs:
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
      - job_name: 'kubernetes-pods'
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
            action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_pod_name]
            action: replace
            target_label: kubernetes_pod_name
      - job_name: 'kubernetes-apiservers'
        kubernetes_sd_configs:
          - role: endpoints
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https
          - target_label: __address__
            replacement: kubernetes.default.svc:443
      - job_name: 'kubernetes-services'
        metrics_path: /probe
        params:
          module: [http_2xx]
        kubernetes_sd_configs:
          - role: service
        relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
            action: keep
            regex: true
          - source_labels: [__address__]
            target_label: __param_target
          # blackbox-exporter is deployed in the kube-monitoring namespace (see 2.1.9)
          - target_label: __address__
            replacement: blackbox-exporter.kube-monitoring.svc.cluster.local:9115
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            target_label: kubernetes_name
      - job_name: 'kubernetes-ingresses'
        metrics_path: /probe
        params:
          module: [http_2xx]
        kubernetes_sd_configs:
          - role: ingress
        relabel_configs:
          - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
            regex: (.+);(.+);(.+)
            replacement: ${1}://${2}${3}
            target_label: __param_target
          # blackbox-exporter is deployed in the kube-monitoring namespace (see 2.1.9)
          - target_label: __address__
            replacement: blackbox-exporter.kube-monitoring.svc.cluster.local:9115
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_ingress_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_ingress_name]
            target_label: kubernetes_name
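The relabel rules in this ConfigMap are easier to follow with concrete values. The sketch below imitates two of them with sed and printf; it is an illustration only, since Prometheus applies fully anchored RE2 expressions internally. The function names, the pod IP, and the node name are made up for the example.

```shell
# Rule from the 'kubernetes-pods' job:
#   source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
#   regex: ([^:]+)(?::\d+)?;(\d+)      replacement: $1:$2
# Prometheus joins the source label values with ';' before matching.
rewrite_pod_address() {
  # $1 = __address__, $2 = value of the prometheus.io/port annotation
  printf '%s;%s\n' "$1" "$2" |
    sed -E 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/'
}

# Rule from the 'kubernetes-cadvisor' job:
#   replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
cadvisor_metrics_path() {
  # $1 = __meta_kubernetes_node_name
  printf '/api/v1/nodes/%s/proxy/metrics/cadvisor\n' "$1"
}

rewrite_pod_address "10.244.1.7:8080" "9100"   # prints 10.244.1.7:9100
rewrite_pod_address "10.244.1.7" "9100"        # prints 10.244.1.7:9100
cadvisor_metrics_path "k8s-node-01"            # prints /api/v1/nodes/k8s-node-01/proxy/metrics/cadvisor
```

On a machine with cluster access, the generated path can be fetched through the API server with kubectl get --raw "$(cadvisor_metrics_path k8s-node-01)", which is the same route the kubernetes-cadvisor job takes via kubernetes.default.svc:443.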

2.1.5 Create the Prometheus server Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: prometheus
  name: prometheus
  namespace: kube-monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      serviceAccountName: prometheus
      serviceAccount: prometheus
      containers:
        - name: prometheus
          image: prom/prometheus:v2.50.1
          command:
            - "/bin/prometheus"
          args:
            - "--config.file=/etc/prometheus/prometheus.yml"
          ports:
            - containerPort: 9090
              protocol: TCP
          volumeMounts:
            - mountPath: "/etc/prometheus"
              name: prometheus-config
            - mountPath: "/etc/localtime"
              name: timezone
      volumes:
        - name: prometheus-config
          configMap:
            name: prometheus-config
        - name: timezone
          hostPath:
            path: /usr/share/zoneinfo/Asia/Shanghai

2.1.6 Create the access-permission (RBAC) configuration

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
  - apiGroups: [""]
    resources:
      - nodes
      - nodes/proxy
      - services
      - endpoints
      - pods
    verbs: ["get", "list", "watch"]
  - apiGroups:
      - extensions
      - networking.k8s.io   # Ingress is served from this group on current Kubernetes versions
    resources:
      - ingresses
    verbs: ["get", "list", "watch"]
  - nonResourceURLs: ["/metrics"]
    verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: kube-monitoring
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
  - kind: ServiceAccount
    name: prometheus
    namespace: kube-monitoring

2.1.7 Create the Prometheus Service

apiVersion: v1
kind: Service
metadata:
  name: prometheus
  labels:
    name: prometheus
  namespace: kube-monitoring
spec:
  ports:
    - name: prometheus
      protocol: TCP
      port: 9090
      targetPort: 9090
  selector:
    app: prometheus
  type: NodePort

2.1.8 Create the node-exporter DaemonSet

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: kube-monitoring
spec:
  selector:
    matchLabels:
      app: node-exporter
  template:
    metadata:
      annotations:
        prometheus.io/scrape: 'true'
        prometheus.io/port: '9100'
        prometheus.io/path: 'metrics'
      labels:
        app: node-exporter
      name: node-exporter
    spec:
      containers:
        - image: prom/node-exporter
          imagePullPolicy: IfNotPresent
          name: node-exporter
          ports:
            - containerPort: 9100
              hostPort: 9100
              name: scrape
      hostNetwork: true
      hostPID: true

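2.1.9 Create the network-service monitoring configuration

blackbox-exporter is an official Prometheus exporter, distributed as a Docker image, that collects monitoring data by probing endpoints over HTTP, DNS, TCP, ICMP, and other protocols. With this image you can easily deploy a blackbox-exporter service that gathers the network-service status you need and exposes it to Prometheus for monitoring and alerting.

Specifically, blackbox-exporter emulates client behavior: it probes network services and checks their reachability and performance. For example, it can check the response time of an HTTP service, the correctness of a DNS resolution, or the stability of a TCP connection. Collecting these metrics lets you detect abnormal network services promptly and respond to them.

The blackbox-exporter image is therefore an important monitoring tool: it helps operators find and fix network-service problems promptly and keep services stable and reliable.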
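To make the probing model concrete: Prometheus does not scrape the target itself, it asks blackbox-exporter to probe it through the exporter's /probe endpoint with module and target query parameters. The helper below is a minimal sketch that only assembles such a URL; the function name and the example target are assumptions for illustration, and targets containing special characters would additionally need URL encoding.

```shell
# Builds the URL requested for one blackbox probe.
probe_url() {
  # $1 = exporter host:port, $2 = module name, $3 = target to probe
  printf 'http://%s/probe?module=%s&target=%s\n' "$1" "$2" "$3"
}

# Example: probe the Grafana Service through the exporter in kube-monitoring.
probe_url "blackbox-exporter.kube-monitoring.svc.cluster.local:9115" \
  "http_2xx" "grafana.kube-monitoring.svc:3000"
```

Running curl against the printed URL from inside the cluster should return the probe metrics, including probe_success, which is 1 when the check passed and 0 otherwise.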

apiVersion: v1
kind: Service
metadata:
  labels:
    app: blackbox-exporter
  name: blackbox-exporter
  namespace: kube-monitoring
spec:
  ports:
    - name: blackbox
      port: 9115
      protocol: TCP
  selector:
    app: blackbox-exporter
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: blackbox-exporter
  name: blackbox-exporter
  namespace: kube-monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blackbox-exporter
  template:
    metadata:
      labels:
        app: blackbox-exporter
    spec:
      containers:
        - image: prom/blackbox-exporter
          imagePullPolicy: IfNotPresent
          name: blackbox-exporter

2.1.10 Create the Grafana Service

apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: kube-monitoring
  labels:
    app: grafana
    component: core
spec:
  type: NodePort
  ports:
    - port: 3000
      nodePort: 30011
  selector:
    app: grafana
    component: core

2.1.11 Create the Grafana StatefulSet

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: grafana-core
  namespace: kube-monitoring
  labels:
    app: grafana
    component: core
spec:
  serviceName: "grafana"
  selector:
    matchLabels:
      app: grafana
  replicas: 1
  template:
    metadata:
      labels:
        app: grafana
        component: core
    spec:
      containers:
        - image: grafana/grafana:10.2.4
          name: grafana-core
          imagePullPolicy: IfNotPresent
          env:
            # The following env variables set up basic auth with the default admin user and admin password.
            - name: GF_AUTH_BASIC_ENABLED
              value: "true"
            - name: GF_AUTH_ANONYMOUS_ENABLED
              value: "false"
            # - name: GF_AUTH_ANONYMOUS_ORG_ROLE
            #   value: Admin
            # does not really work, because of template variables in exported dashboards:
            # - name: GF_DASHBOARDS_JSON_ENABLED
            #   value: "true"
          readinessProbe:
            httpGet:
              path: /login
              port: 3000
            # initialDelaySeconds: 30
            # timeoutSeconds: 1
          volumeMounts:
            - name: grafana-persistent-storage
              mountPath: /var/lib/grafana
              subPath: grafana
  volumeClaimTemplates:
    - metadata:
        name: grafana-persistent-storage
      spec:
        storageClassName: managed-nfs-storage
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: "1Gi"

2.2 Create the Prometheus and Grafana resources

[root@k8s-master promethues]# ll
total 40
-rw-rw-r--. 1 root root  683 Mar  5  2023 blackbox-exporter.yml
-rw-rw-r--. 1 root root  251 Mar  5  2023 grafana-service.yml
-rw-rw-r--. 1 root root 1467 Feb 29 23:38 grafana-statefulset.yml
-rw-rw-r--. 1 root root   65 Mar  5  2023 kube-monitoring.yml
-rw-rw-r--. 1 root root 6090 Mar  5  2023 prometheus-config.yml
-rw-rw-r--. 1 root root  644 Mar  5  2023 prometheus-daemonset.yml
-rw-rw-r--. 1 root root  957 Feb 29 23:37 prometheus-deployment.yml
-rw-rw-r--. 1 root root  724 Mar  5  2023 prometheus-rbac-setup.yml
-rw-rw-r--. 1 root root  257 Mar  5  2023 prometheus-service.yml

[root@k8s-master promethues]# cd ..

[root@k8s-master k8s]# kubectl apply -f ./promethues/

service/blackbox-exporter created

deployment.apps/blackbox-exporter created

service/grafana created

statefulset.apps/grafana-core created

namespace/kube-monitoring unchanged

configmap/prometheus-config unchanged

daemonset.apps/node-exporter unchanged

deployment.apps/prometheus unchanged

clusterrole.rbac.authorization.k8s.io/prometheus unchanged

serviceaccount/prometheus unchanged

clusterrolebinding.rbac.authorization.k8s.io/prometheus unchanged

service/prometheus unchanged

[root@k8s-master ~]# kubectl get all -n kube-monitoring

NAME READY STATUS RESTARTS AGE

pod/blackbox-exporter-7c76758b44-jx9px 1/1 Running 0 50m

pod/grafana-core-0 1/1 Running 0 50m

pod/node-exporter-cbrg8 1/1 Running 0 50m

pod/node-exporter-svchp 1/1 Running 0 50m

pod/prometheus-fd55b757d-6vpbk 1/1 Running 0 50m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/blackbox-exporter ClusterIP 10.1.231.125 <none> 9115/TCP 50m

service/grafana NodePort 10.1.33.54 <none> 3000:30011/TCP 50m

service/prometheus NodePort 10.1.170.5 <none> 9090:31856/TCP 50m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/node-exporter 2 2 2 2 2 <none> 50m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/blackbox-exporter 1/1 1 1 50m

deployment.apps/prometheus 1/1 1 1 50m

NAME DESIRED CURRENT READY AGE

replicaset.apps/blackbox-exporter-7c76758b44 1 1 1 50m

replicaset.apps/prometheus-fd55b757d 1 1 1 50m

NAME READY AGE

statefulset.apps/grafana-core 1/1 50m
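The Grafana Service pins nodePort 30011, but the Prometheus Service does not set one, so Kubernetes assigned 31856 in this run and another cluster will likely get a different value. As a small sketch, the helper below (a hypothetical name) pulls the node port out of the PORT(S) column shown above using plain string handling, so it needs no cluster to try out.

```shell
# Extracts the node port from a PORT(S) value such as "9090:31856/TCP".
node_port() {
  ports=${1#*:}                 # drop "<port>:"      -> "31856/TCP"
  printf '%s\n' "${ports%%/*}"  # drop "/<protocol>"  -> "31856"
}

node_port "9090:31856/TCP"   # prints 31856
node_port "3000:30011/TCP"   # prints 30011
```

With cluster access, the same value can be read directly with kubectl -n kube-monitoring get svc prometheus -o jsonpath='{.spec.ports[0].nodePort}'.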

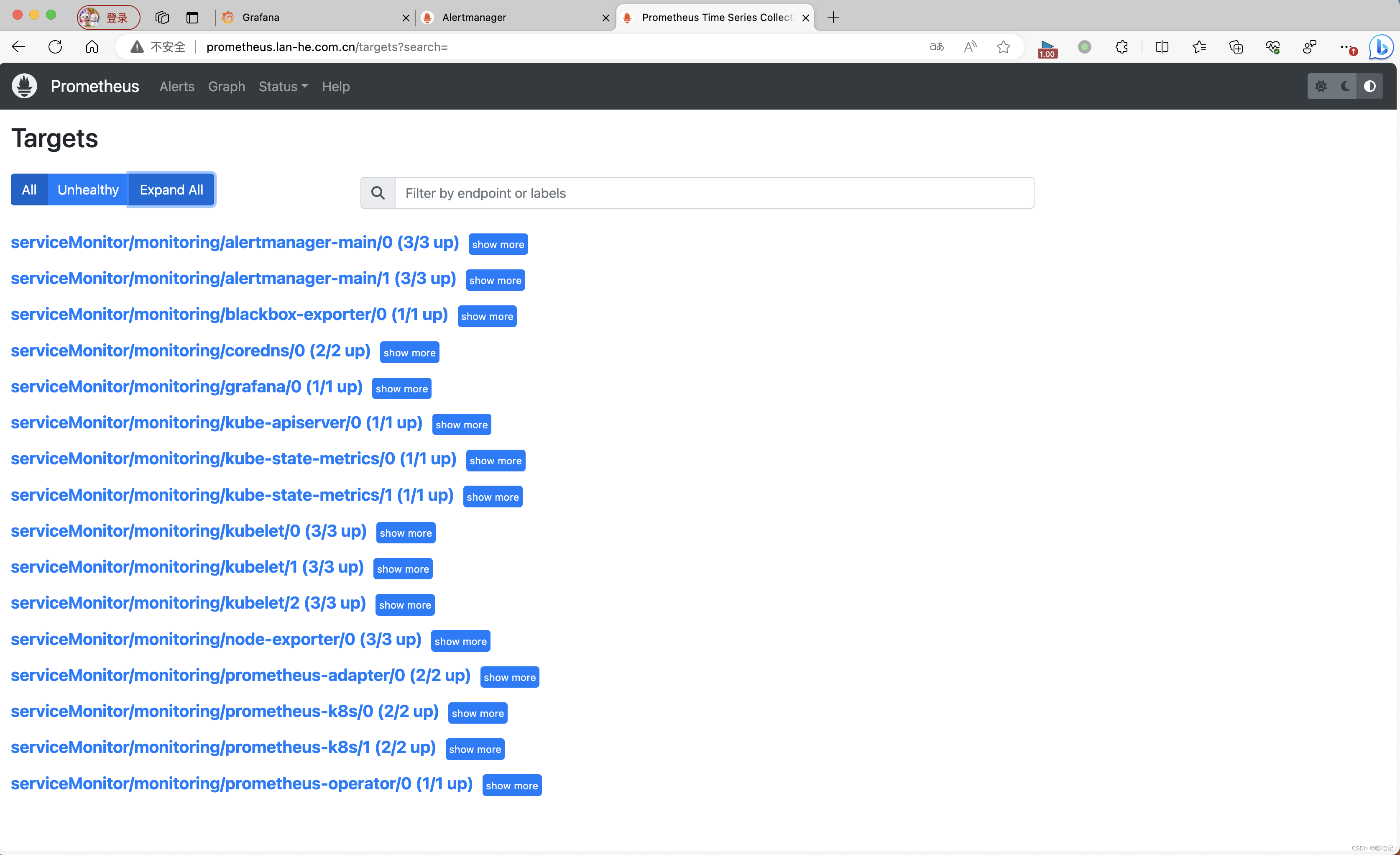

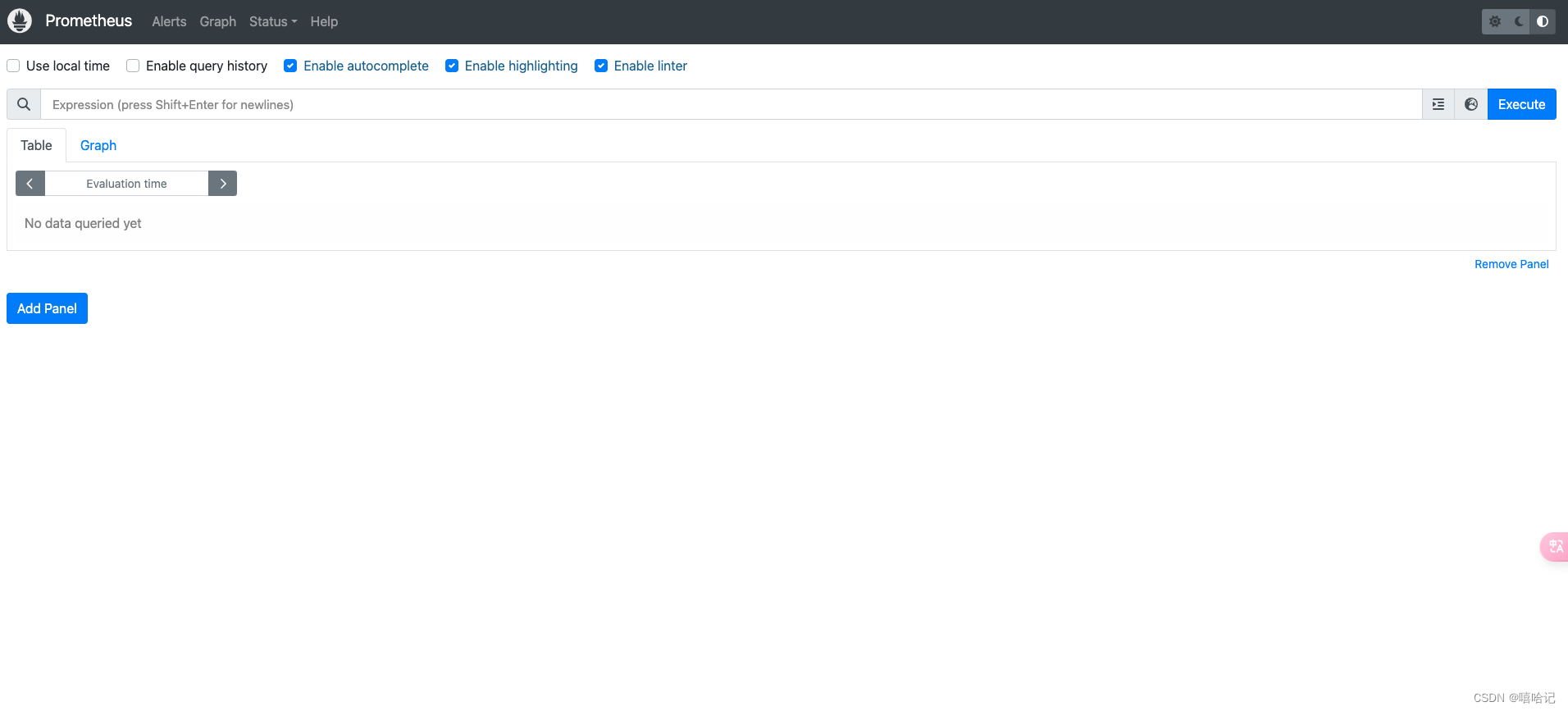

2.3 Access Prometheus at 10.10.10.100:31856

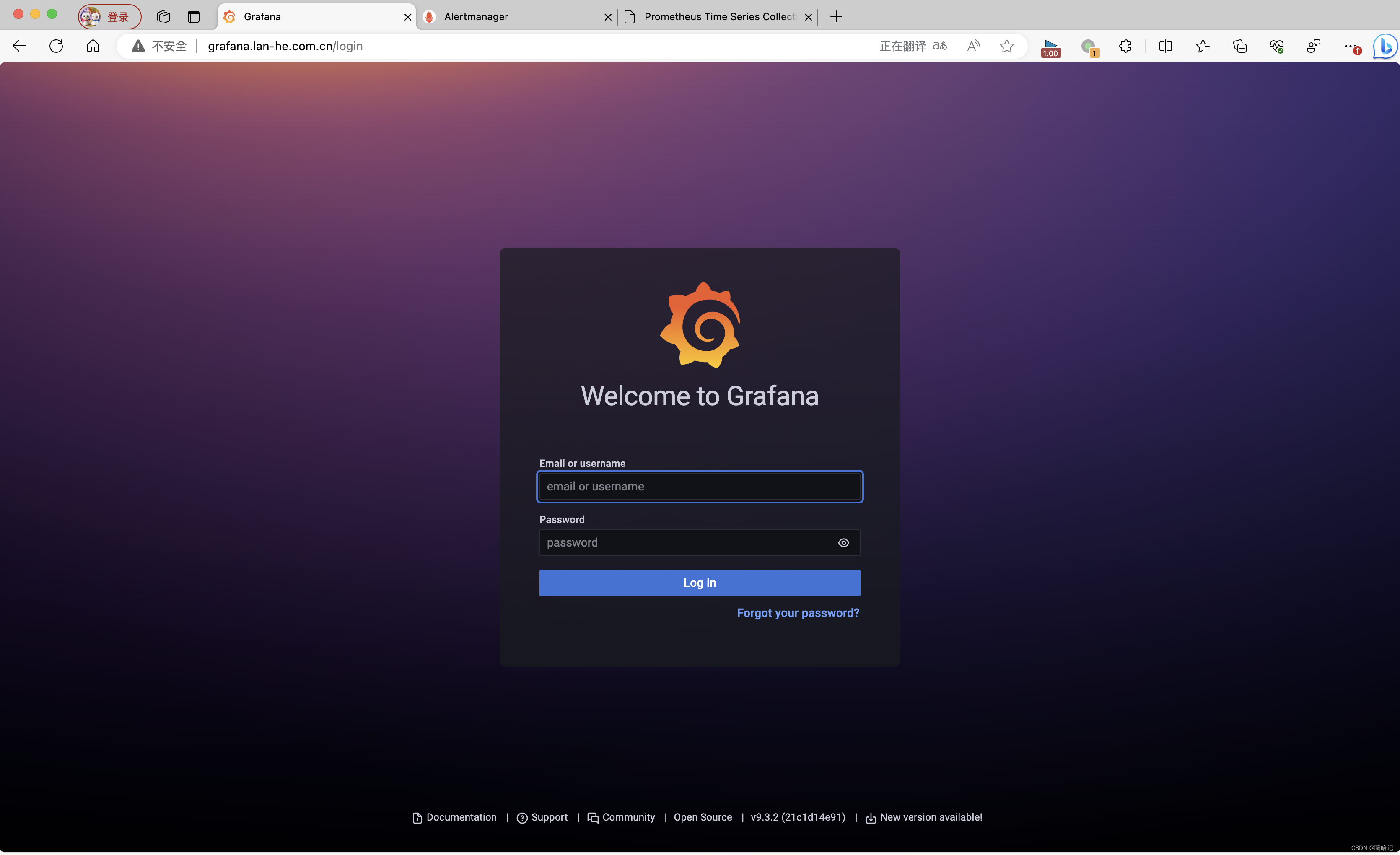

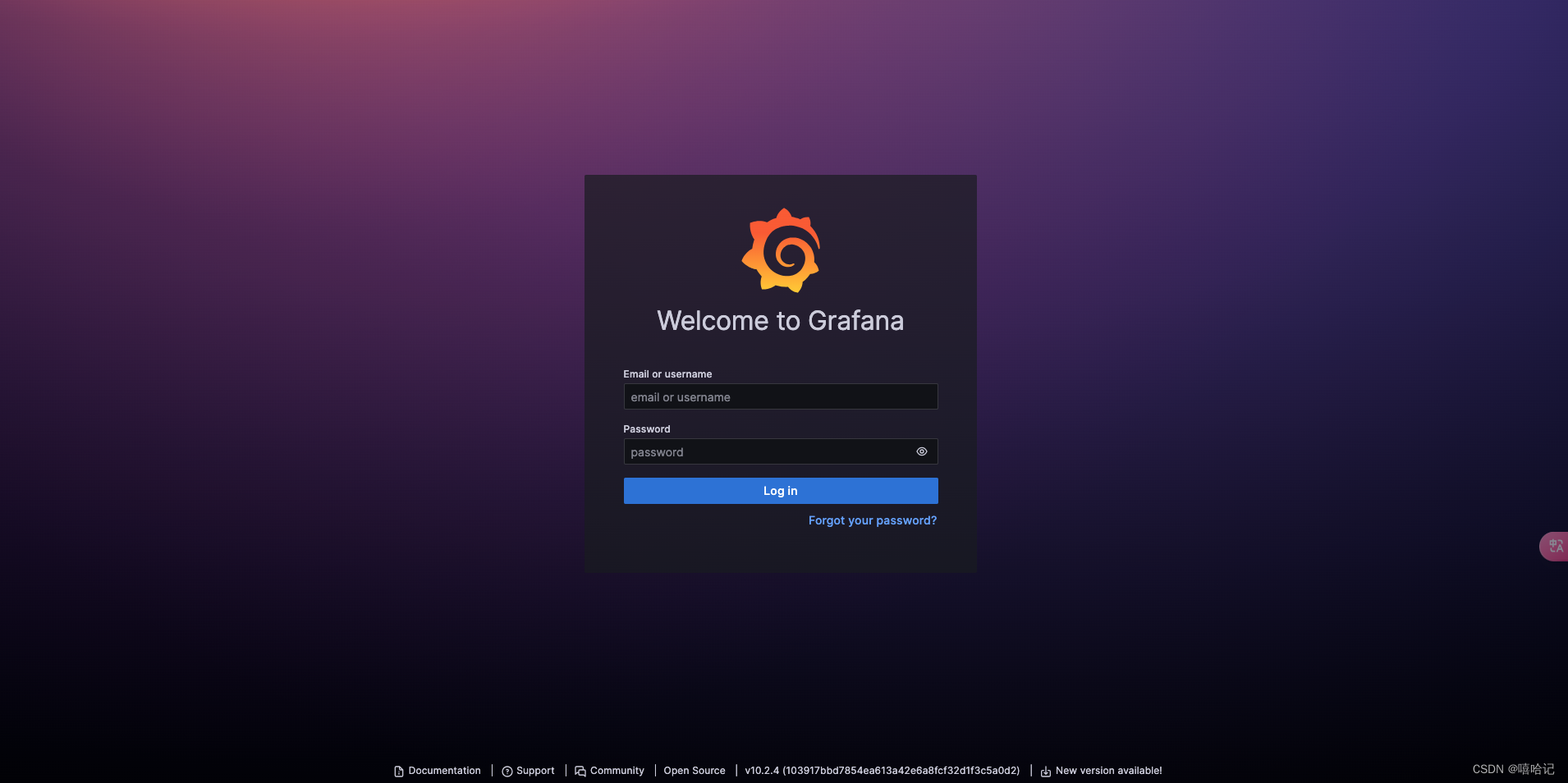

2.4 通过10.10.10.100:30011访问grafana

3、通过kube-prometheus安装

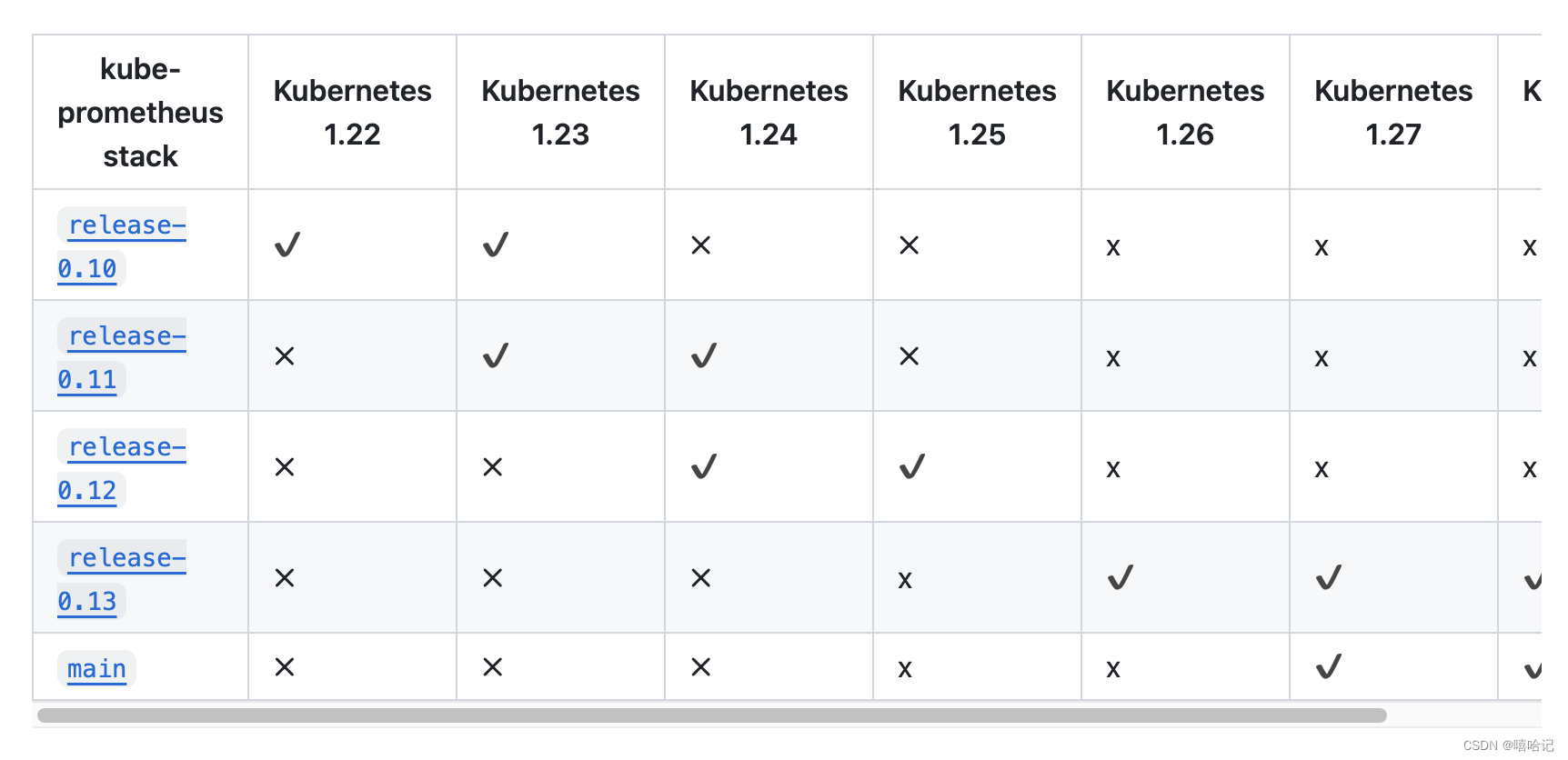

- 本次使用的k8s版本是v1.25,所以只能选择kube-prometheus:v0.12的版本。

3.1 注意kube-prometheus的版本和k8s的版本相对应

下载链接:https://github.com/prometheus-operator/kube-prometheus
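The commands that follow run from a directory named kube-prometheus-0.12.0, which is what the GitHub source archive for tag v0.12.0 unpacks to. The helper below is a sketch that builds the archive URL for a given release; the function name is made up, and the URL follows GitHub's standard archive/refs/tags layout.

```shell
# Prints the source-archive URL for a kube-prometheus release tag.
kube_prometheus_tarball_url() {
  # $1 = release version without the leading "v", e.g. 0.12.0
  printf 'https://github.com/prometheus-operator/kube-prometheus/archive/refs/tags/v%s.tar.gz\n' "$1"
}

kube_prometheus_tarball_url "0.12.0"
```

One way to use it: curl -L -o kube-prometheus-0.12.0.tar.gz "$(kube_prometheus_tarball_url 0.12.0)" followed by tar -xf kube-prometheus-0.12.0.tar.gz.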

[root@k8s-master ~]# kubectl create -f kube-prometheus-0.12.0/manifests/setup/

customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created

namespace/monitoring created

[root@k8s-master ~]# kubectl create -f kube-prometheus-0.12.0/manifests/

alertmanager.monitoring.coreos.com/main created

networkpolicy.networking.k8s.io/alertmanager-main created

poddisruptionbudget.policy/alertmanager-main created

prometheusrule.monitoring.coreos.com/alertmanager-main-rules created

secret/alertmanager-main created

service/alertmanager-main created

serviceaccount/alertmanager-main created

servicemonitor.monitoring.coreos.com/alertmanager-main created

clusterrole.rbac.authorization.k8s.io/blackbox-exporter created

clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter created

configmap/blackbox-exporter-configuration created

deployment.apps/blackbox-exporter created

networkpolicy.networking.k8s.io/blackbox-exporter created

service/blackbox-exporter created

serviceaccount/blackbox-exporter created

servicemonitor.monitoring.coreos.com/blackbox-exporter created

secret/grafana-config created

secret/grafana-datasources created

configmap/grafana-dashboard-alertmanager-overview created

configmap/grafana-dashboard-apiserver created

configmap/grafana-dashboard-cluster-total created

configmap/grafana-dashboard-controller-manager created

configmap/grafana-dashboard-grafana-overview created

configmap/grafana-dashboard-k8s-resources-cluster created

configmap/grafana-dashboard-k8s-resources-namespace created

configmap/grafana-dashboard-k8s-resources-node created

configmap/grafana-dashboard-k8s-resources-pod created

configmap/grafana-dashboard-k8s-resources-workload created

configmap/grafana-dashboard-k8s-resources-workloads-namespace created

configmap/grafana-dashboard-kubelet created

configmap/grafana-dashboard-namespace-by-pod created

configmap/grafana-dashboard-namespace-by-workload created

configmap/grafana-dashboard-node-cluster-rsrc-use created

configmap/grafana-dashboard-node-rsrc-use created

configmap/grafana-dashboard-nodes-darwin created

configmap/grafana-dashboard-nodes created

configmap/grafana-dashboard-persistentvolumesusage created

configmap/grafana-dashboard-pod-total created

configmap/grafana-dashboard-prometheus-remote-write created

configmap/grafana-dashboard-prometheus created

configmap/grafana-dashboard-proxy created

configmap/grafana-dashboard-scheduler created

configmap/grafana-dashboard-workload-total created

configmap/grafana-dashboards created

deployment.apps/grafana created

networkpolicy.networking.k8s.io/grafana created

prometheusrule.monitoring.coreos.com/grafana-rules created

service/grafana created

serviceaccount/grafana created

servicemonitor.monitoring.coreos.com/grafana created

prometheusrule.monitoring.coreos.com/kube-prometheus-rules created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

deployment.apps/kube-state-metrics created

networkpolicy.networking.k8s.io/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kube-state-metrics-rules created

service/kube-state-metrics created

serviceaccount/kube-state-metrics created

servicemonitor.monitoring.coreos.com/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kubernetes-monitoring-rules created

servicemonitor.monitoring.coreos.com/kube-apiserver created

servicemonitor.monitoring.coreos.com/coredns created

servicemonitor.monitoring.coreos.com/kube-controller-manager created

servicemonitor.monitoring.coreos.com/kube-scheduler created

servicemonitor.monitoring.coreos.com/kubelet created

clusterrole.rbac.authorization.k8s.io/node-exporter created

clusterrolebinding.rbac.authorization.k8s.io/node-exporter created

daemonset.apps/node-exporter created

networkpolicy.networking.k8s.io/node-exporter created

prometheusrule.monitoring.coreos.com/node-exporter-rules created

service/node-exporter created

serviceaccount/node-exporter created

servicemonitor.monitoring.coreos.com/node-exporter created

clusterrole.rbac.authorization.k8s.io/prometheus-k8s created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created

networkpolicy.networking.k8s.io/prometheus-k8s created

poddisruptionbudget.policy/prometheus-k8s created

prometheus.monitoring.coreos.com/k8s created

prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s-config created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

service/prometheus-k8s created

serviceaccount/prometheus-k8s created

servicemonitor.monitoring.coreos.com/prometheus-k8s created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

clusterrole.rbac.authorization.k8s.io/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created

clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created

clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created

configmap/adapter-config created

deployment.apps/prometheus-adapter created

networkpolicy.networking.k8s.io/prometheus-adapter created

poddisruptionbudget.policy/prometheus-adapter created

rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created

service/prometheus-adapter created

serviceaccount/prometheus-adapter created

servicemonitor.monitoring.coreos.com/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/prometheus-operator created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created

deployment.apps/prometheus-operator created

networkpolicy.networking.k8s.io/prometheus-operator created

prometheusrule.monitoring.coreos.com/prometheus-operator-rules created

service/prometheus-operator created

serviceaccount/prometheus-operator created

servicemonitor.monitoring.coreos.com/prometheus-operator created

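3.2 Inspect the Prometheus resources

- Two images are difficult to pull from their original registry, so pull them from mirrors:

- docker pull v5cn/prometheus-adapter:v0.10.0

- docker pull qiyue0421/kube-state-metrics:v2.7.0

- After pulling, retag the images with the names the manifests expect:

- docker tag v5cn/prometheus-adapter:v0.10.0 registry.k8s.io/prometheus-adapter/prometheus-adapter:v0.10.0

- docker tag qiyue0421/kube-state-metrics:v2.7.0 registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.7.0

- Once downloaded, an image can be saved to a local archive with docker save -o <image.tar> image_name:tag

- Then copy the archive to the other machines with scp and restore the image there with docker load -i <image.tar>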
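The pull, tag, and save steps above are the same for both images, so they can be generated from a single helper. The sketch below only prints the docker commands rather than running them; the function name and the archive naming scheme (slashes and colons replaced by underscores) are assumptions for illustration.

```shell
# Prints (does not run) the docker commands for one mirrored image.
mirror_commands() {
  # $1 = mirror image that can be pulled, $2 = image name the manifests expect
  tarball="$(printf '%s' "$2" | tr '/:' '__').tar"
  printf 'docker pull %s\n' "$1"
  printf 'docker tag %s %s\n' "$1" "$2"
  printf 'docker save -o %s %s\n' "$tarball" "$2"
}

mirror_commands "v5cn/prometheus-adapter:v0.10.0" \
  "registry.k8s.io/prometheus-adapter/prometheus-adapter:v0.10.0"
mirror_commands "qiyue0421/kube-state-metrics:v2.7.0" \
  "registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.7.0"
```

After reviewing the printed commands, they can be executed by piping the output to sh; the resulting .tar files are what gets copied with scp and restored with docker load -i on the other nodes.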

[root@k8s-master manifests]# kubectl get all -n monitoring

NAME READY STATUS RESTARTS AGE

pod/alertmanager-main-0 2/2 Running 1 (161m ago) 166m

pod/alertmanager-main-1 2/2 Running 1 (164m ago) 166m

pod/alertmanager-main-2 2/2 Running 1 (161m ago) 166m

pod/blackbox-exporter-6fd586b445-99ztl 3/3 Running 0 169m

pod/grafana-9f58f8675-jhb7v 1/1 Running 0 169m

pod/kube-state-metrics-66659c89c-gq5wl 3/3 Running 0 16m

pod/node-exporter-prrvz 2/2 Running 0 169m

pod/node-exporter-xnd8h 2/2 Running 0 169m

pod/node-exporter-z8dts 2/2 Running 0 169m

pod/prometheus-adapter-757f9b4cf9-c5vjx 1/1 Running 0 57s

pod/prometheus-adapter-757f9b4cf9-dqmd6 1/1 Running 0 57s

pod/prometheus-k8s-0 2/2 Running 0 166m

pod/prometheus-k8s-1 2/2 Running 0 166m

pod/prometheus-operator-776c6c6b87-z7k57 2/2 Running 0 169m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/alertmanager-main ClusterIP 10.1.148.54 <none> 9093/TCP,8080/TCP 169m

service/alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 166m

service/blackbox-exporter ClusterIP 10.1.126.236 <none> 9115/TCP,19115/TCP 169m

service/grafana ClusterIP 10.1.88.239 <none> 3000/TCP 169m

service/kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 169m

service/node-exporter ClusterIP None <none> 9100/TCP 169m

service/prometheus-adapter ClusterIP 10.1.245.178 <none> 443/TCP 169m

service/prometheus-k8s ClusterIP 10.1.56.132 <none> 9090/TCP,8080/TCP 169m

service/prometheus-operated ClusterIP None <none> 9090/TCP 166m

service/prometheus-operator ClusterIP None <none> 8443/TCP 169m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/node-exporter 3 3 3 3 3 kubernetes.io/os=linux 169m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/blackbox-exporter 1/1 1 1 169m

deployment.apps/grafana 1/1 1 1 169m

deployment.apps/kube-state-metrics 1/1 1 1 169m

deployment.apps/prometheus-adapter 2/2 2 2 169m

deployment.apps/prometheus-operator 1/1 1 1 169m

NAME DESIRED CURRENT READY AGE

replicaset.apps/blackbox-exporter-6fd586b445 1 1 1 169m

replicaset.apps/grafana-9f58f8675 1 1 1 169m

replicaset.apps/kube-state-metrics-66659c89c 1 1 1 169m

replicaset.apps/prometheus-adapter-757f9b4cf9 2 2 2 169m

replicaset.apps/prometheus-operator-776c6c6b87 1 1 1 169m

NAME READY AGE

statefulset.apps/alertmanager-main 3/3 166m

statefulset.apps/prometheus-k8s 2/2 166m

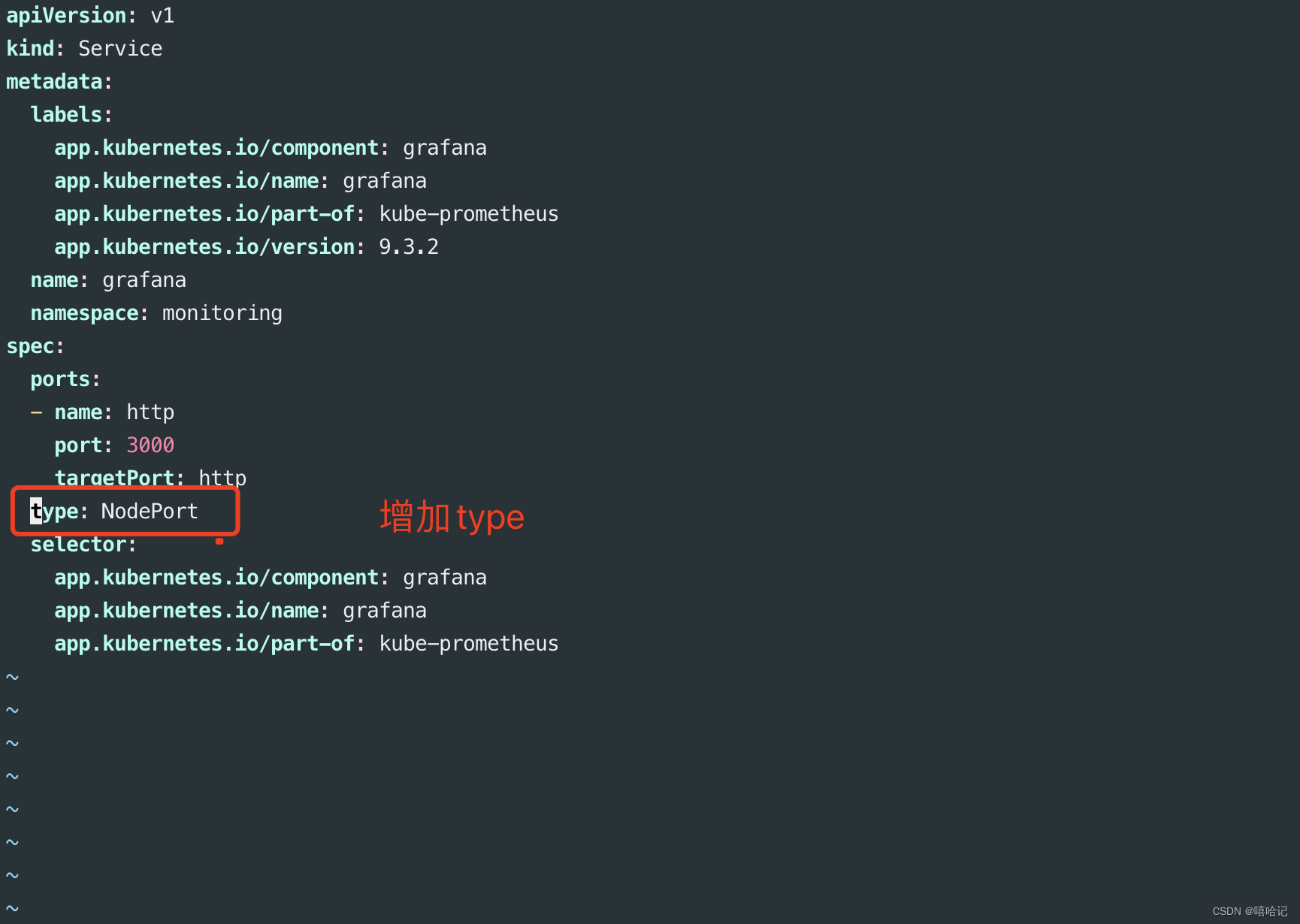

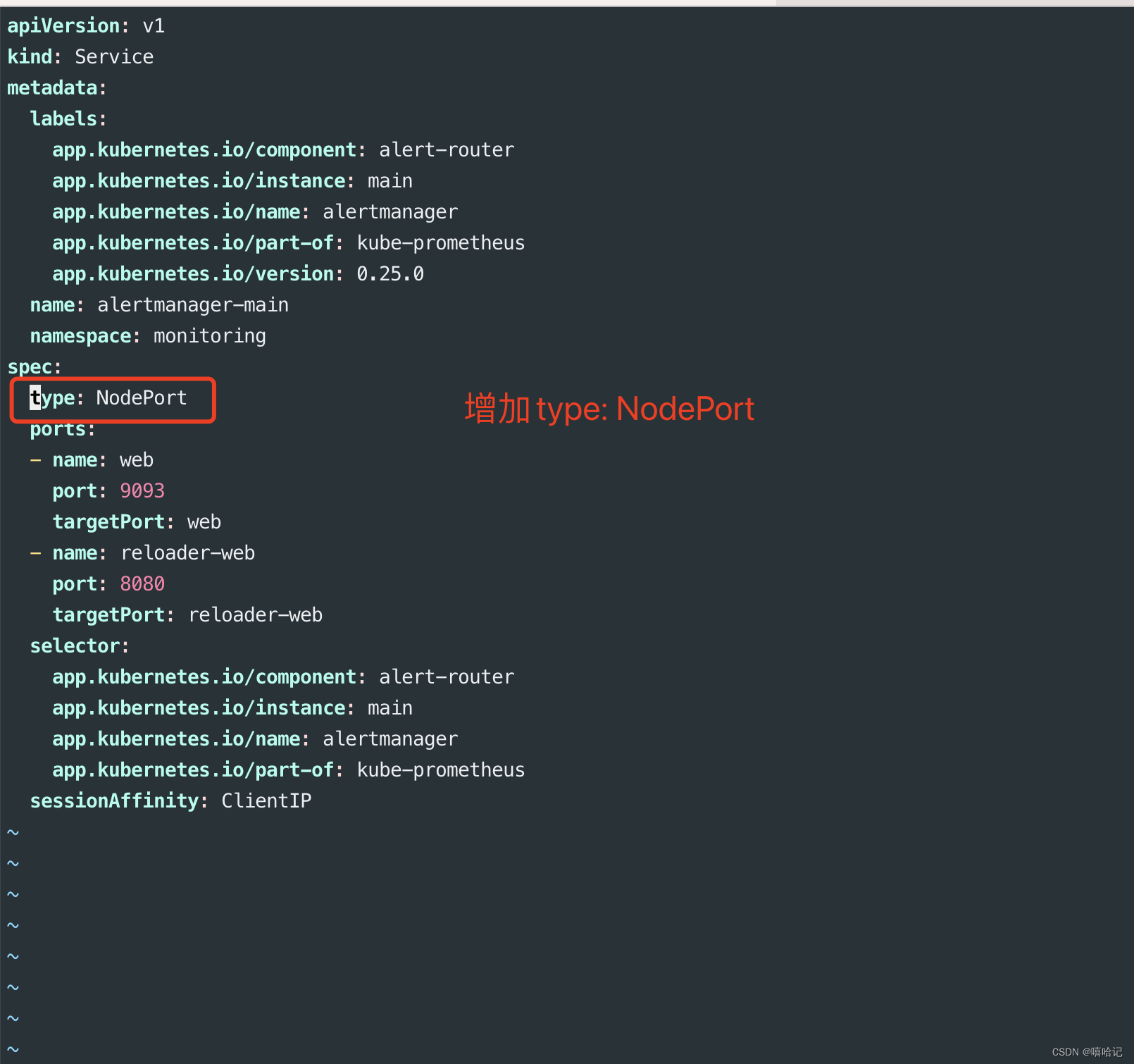

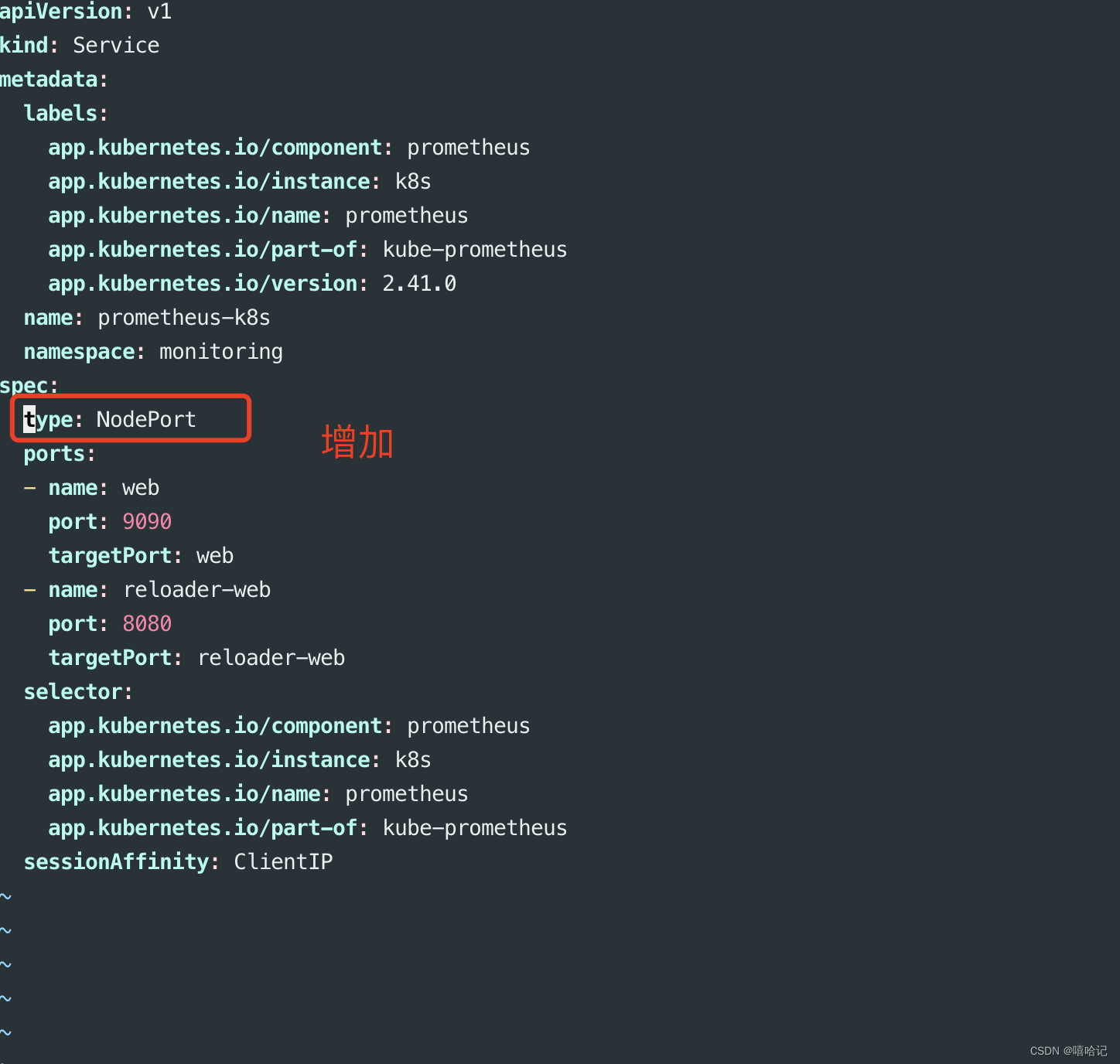

3.3 刚才的service信息里type都是ClusterIP,这个只能集群内访问,所以修改配置,增加一个prometheus的ingress的配置,通过外部访问

3.3.1 修改 grafana-service.yaml

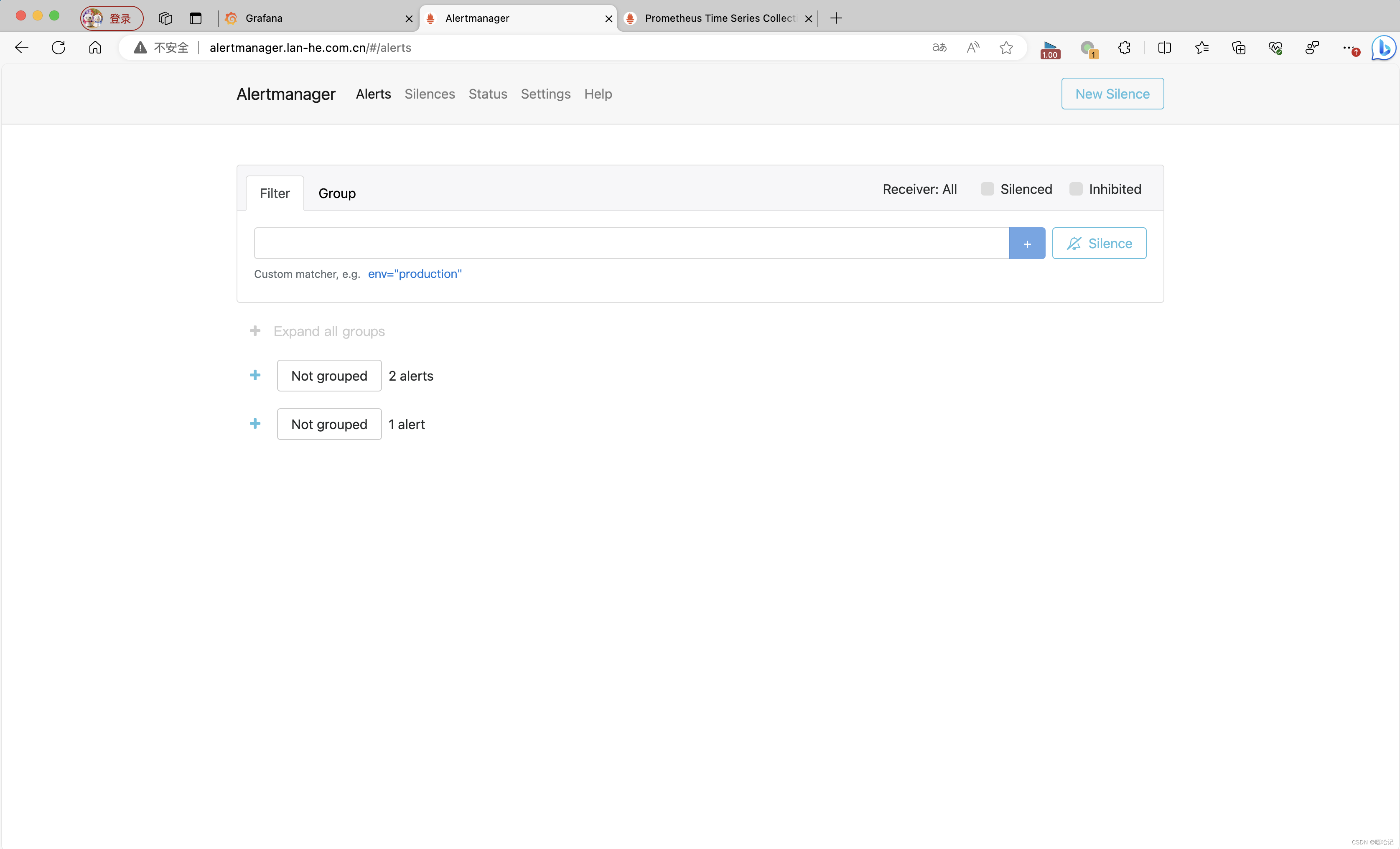

3.3.2 修改 alertmanager-service.yaml

3.3.3 修改 prometheus-service.yaml

3.3.4 增加prometheus-ingress.yaml 文件

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: monitoring
  name: prometheus-ingress
spec:
  ingressClassName: nginx
  rules:
    - host: grafana.lan-he.com.cn # domain for Grafana
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: grafana
                port:
                  number: 3000
    - host: prometheus.lan-he.com.cn # domain for Prometheus
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: prometheus-k8s
                port:
                  number: 9090
    - host: alertmanager.lan-he.com.cn # domain for Alertmanager
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: alertmanager-main
                port:
                  number: 9093

3.3.5 Apply the updated configuration

[root@k8s-master kube-prometheus-0.12.0]# kubectl apply -f manifests/

alertmanager.monitoring.coreos.com/main unchanged

networkpolicy.networking.k8s.io/alertmanager-main unchanged

poddisruptionbudget.policy/alertmanager-main configured

prometheusrule.monitoring.coreos.com/alertmanager-main-rules unchanged

secret/alertmanager-main configured

service/alertmanager-main unchanged

serviceaccount/alertmanager-main unchanged

servicemonitor.monitoring.coreos.com/alertmanager-main unchanged

clusterrole.rbac.authorization.k8s.io/blackbox-exporter unchanged

clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter unchanged

configmap/blackbox-exporter-configuration unchanged

deployment.apps/blackbox-exporter unchanged

networkpolicy.networking.k8s.io/blackbox-exporter unchanged

service/blackbox-exporter unchanged

serviceaccount/blackbox-exporter unchanged

servicemonitor.monitoring.coreos.com/blackbox-exporter unchanged

secret/grafana-config configured

secret/grafana-datasources configured

configmap/grafana-dashboard-alertmanager-overview unchanged

configmap/grafana-dashboard-apiserver unchanged

configmap/grafana-dashboard-cluster-total unchanged

configmap/grafana-dashboard-controller-manager unchanged

configmap/grafana-dashboard-grafana-overview unchanged

configmap/grafana-dashboard-k8s-resources-cluster unchanged

configmap/grafana-dashboard-k8s-resources-namespace unchanged

configmap/grafana-dashboard-k8s-resources-node unchanged

configmap/grafana-dashboard-k8s-resources-pod unchanged

configmap/grafana-dashboard-k8s-resources-workload unchanged

configmap/grafana-dashboard-k8s-resources-workloads-namespace unchanged

configmap/grafana-dashboard-kubelet unchanged

configmap/grafana-dashboard-namespace-by-pod unchanged

configmap/grafana-dashboard-namespace-by-workload unchanged

configmap/grafana-dashboard-node-cluster-rsrc-use unchanged

configmap/grafana-dashboard-node-rsrc-use unchanged

configmap/grafana-dashboard-nodes-darwin unchanged

configmap/grafana-dashboard-nodes unchanged

configmap/grafana-dashboard-persistentvolumesusage unchanged

configmap/grafana-dashboard-pod-total unchanged

configmap/grafana-dashboard-prometheus-remote-write unchanged

configmap/grafana-dashboard-prometheus unchanged

configmap/grafana-dashboard-proxy unchanged

configmap/grafana-dashboard-scheduler unchanged

configmap/grafana-dashboard-workload-total unchanged

configmap/grafana-dashboards unchanged

deployment.apps/grafana configured

networkpolicy.networking.k8s.io/grafana unchanged

prometheusrule.monitoring.coreos.com/grafana-rules unchanged

service/grafana unchanged

serviceaccount/grafana unchanged

servicemonitor.monitoring.coreos.com/grafana unchanged

prometheusrule.monitoring.coreos.com/kube-prometheus-rules unchanged

clusterrole.rbac.authorization.k8s.io/kube-state-metrics unchanged

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics unchanged

deployment.apps/kube-state-metrics unchanged

networkpolicy.networking.k8s.io/kube-state-metrics unchanged

prometheusrule.monitoring.coreos.com/kube-state-metrics-rules unchanged

service/kube-state-metrics unchanged

serviceaccount/kube-state-metrics unchanged

servicemonitor.monitoring.coreos.com/kube-state-metrics unchanged

prometheusrule.monitoring.coreos.com/kubernetes-monitoring-rules unchanged

servicemonitor.monitoring.coreos.com/kube-apiserver unchanged

servicemonitor.monitoring.coreos.com/coredns unchanged

servicemonitor.monitoring.coreos.com/kube-controller-manager unchanged

servicemonitor.monitoring.coreos.com/kube-scheduler unchanged

servicemonitor.monitoring.coreos.com/kubelet configured

clusterrole.rbac.authorization.k8s.io/node-exporter unchanged

clusterrolebinding.rbac.authorization.k8s.io/node-exporter unchanged

daemonset.apps/node-exporter unchanged

networkpolicy.networking.k8s.io/node-exporter unchanged

prometheusrule.monitoring.coreos.com/node-exporter-rules unchanged

service/node-exporter unchanged

serviceaccount/node-exporter unchanged

servicemonitor.monitoring.coreos.com/node-exporter unchanged

clusterrole.rbac.authorization.k8s.io/prometheus-k8s unchanged

clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s unchanged

ingress.networking.k8s.io/prometheus-ingress configured

networkpolicy.networking.k8s.io/prometheus-k8s unchanged

poddisruptionbudget.policy/prometheus-k8s configured

prometheus.monitoring.coreos.com/k8s unchanged

prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules unchanged

rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config unchanged

rolebinding.rbac.authorization.k8s.io/prometheus-k8s unchanged

rolebinding.rbac.authorization.k8s.io/prometheus-k8s unchanged

rolebinding.rbac.authorization.k8s.io/prometheus-k8s unchanged

role.rbac.authorization.k8s.io/prometheus-k8s-config unchanged

role.rbac.authorization.k8s.io/prometheus-k8s unchanged

role.rbac.authorization.k8s.io/prometheus-k8s unchanged

role.rbac.authorization.k8s.io/prometheus-k8s unchanged

service/prometheus-k8s unchanged

serviceaccount/prometheus-k8s unchanged

servicemonitor.monitoring.coreos.com/prometheus-k8s unchanged

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io unchanged

clusterrole.rbac.authorization.k8s.io/prometheus-adapter unchanged

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader unchanged

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter unchanged

clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator unchanged

clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources unchanged

configmap/adapter-config unchanged

deployment.apps/prometheus-adapter configured

networkpolicy.networking.k8s.io/prometheus-adapter unchanged

poddisruptionbudget.policy/prometheus-adapter configured

rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader unchanged

service/prometheus-adapter unchanged

serviceaccount/prometheus-adapter unchanged

servicemonitor.monitoring.coreos.com/prometheus-adapter unchanged

clusterrole.rbac.authorization.k8s.io/prometheus-operator unchanged

clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator unchanged

deployment.apps/prometheus-operator unchanged

networkpolicy.networking.k8s.io/prometheus-operator unchanged

prometheusrule.monitoring.coreos.com/prometheus-operator-rules unchanged

service/prometheus-operator unchanged

serviceaccount/prometheus-operator unchanged

servicemonitor.monitoring.coreos.com/prometheus-operator unchanged

3.4 Create the ingress-nginx controller

3.4.1 Download ingress-nginx with Helm

[root@k8s-master ~]# helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

[root@k8s-master ~]# helm pull ingress-nginx/ingress-nginx

[root@k8s-master ~]# tar -xf ingress-nginx-4.9.1.tgz

[root@k8s-master ~]# kubectl label node k8s-node-01 ingress=true

[root@k8s-master ~]# cd ingress-nginx/

[root@k8s-master ingress-nginx]#
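The `ingress=true` label applied to k8s-node-01 above is what the controller's `nodeSelector` in the values file of the next section matches on. As a reading aid, the sketch below condenses the settings that file appears to change from the upstream chart defaults. The list is an assumption drawn from reading the file, not an authoritative diff, and is worth checking against the output of `helm show values ingress-nginx/ingress-nginx`.

```yaml
# Condensed sketch only: the settings the full values file appears to customize.
# Assumption: every key not listed here keeps the chart's default value.
namespaceOverride: "monitoring"          # deploy next to the Prometheus stack
controller:
  image:
    registry: registry.cn-hangzhou.aliyuncs.com
    image: google_containers/nginx-ingress-controller
    tag: "v1.10.0"
    # The full file also comments out digest/digestChroot,
    # so the tag alone selects the mirrored image.
  dnsPolicy: ClusterFirstWithHostNet     # keep resolving cluster names while on the host network
  hostNetwork: true                      # listen on ports 80/443 of the node itself
  kind: DaemonSet                        # one controller pod per selected node
  nodeSelector:
    kubernetes.io/os: linux
    ingress: "true"                      # matches the label set on k8s-node-01
  service:
    type: ClusterIP                      # no cloud load balancer is assumed
  admissionWebhooks:
    enabled: false
```

With `hostNetwork: true` and a DaemonSet restricted by `nodeSelector`, only nodes carrying the `ingress=true` label run a controller pod, so traffic has to be sent to those nodes' addresses.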

3.4.2 ingress-nginx configuration file

## nginx configuration

## Ref: https://github.com/kubernetes/ingress-nginx/blob/main/docs/user-guide/nginx-configuration/index.md

#### Overrides for generated resource names

# See templates/_helpers.tpl

# nameOverride:

# fullnameOverride:

# -- Override the deployment namespace; defaults to .Release.Namespace

namespaceOverride: "monitoring"

## Labels to apply to all resources

##

commonLabels: {}

# scmhash: abc123

# myLabel: aakkmd

controller:
  name: controller
  enableAnnotationValidations: false
  image:
    ## Keep false as default for now!
    chroot: false
    registry: registry.cn-hangzhou.aliyuncs.com
    image: google_containers/nginx-ingress-controller
    ## for backwards compatibility consider setting the full image url via the repository value below
    ## use *either* current default registry/image or repository format or installing chart by providing the values.yaml will fail
    ## repository:
    tag: "v1.10.0"
    #digest: sha256:42b3f0e5d0846876b1791cd3afeb5f1cbbe4259d6f35651dcc1b5c980925379c
    #digestChroot: sha256:7eb46ff733429e0e46892903c7394aff149ac6d284d92b3946f3baf7ff26a096
    pullPolicy: IfNotPresent
    runAsNonRoot: true
    # www-data -> uid 101
    runAsUser: 101
    allowPrivilegeEscalation: false
    seccompProfile:
      type: RuntimeDefault
    readOnlyRootFilesystem: false
  # -- Use an existing PSP instead of creating one
  existingPsp: ""
  # -- Configures the controller container name
  containerName: controller
  # -- Configures the ports that the nginx-controller listens on
  containerPort:
    http: 80
    https: 443
  # -- Will add custom configuration options to Nginx https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/configmap/
  config: {}
  # -- Annotations to be added to the controller config configuration configmap.
  configAnnotations: {}
  # -- Will add custom headers before sending traffic to backends according to https://github.com/kubernetes/ingress-nginx/tree/main/docs/examples/customization/custom-headers
  proxySetHeaders: {}
  # -- Will add custom headers before sending response traffic to the client according to: https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/configmap/#add-headers
  addHeaders: {}
  # -- Optionally customize the pod dnsConfig.
  dnsConfig: {}
  # -- Optionally customize the pod hostAliases.
  hostAliases: []
  # - ip: 127.0.0.1
  #   hostnames:
  #   - foo.local
  #   - bar.local
  # - ip: 10.1.2.3
  #   hostnames:
  #   - foo.remote
  #   - bar.remote
  # -- Optionally customize the pod hostname.
  hostname: {}
  # -- Optionally change this to ClusterFirstWithHostNet in case you have 'hostNetwork: true'.
  # By default, while using host network, name resolution uses the host's DNS. If you wish nginx-controller
  # to keep resolving names inside the k8s network, use ClusterFirstWithHostNet.
  dnsPolicy: ClusterFirstWithHostNet
  # -- Bare-metal considerations via the host network https://kubernetes.github.io/ingress-nginx/deploy/baremetal/#via-the-host-network
  # Ingress status was blank because there is no Service exposing the Ingress-Nginx Controller in a configuration using the host network, the default --publish-service flag used in standard cloud setups does not apply
  reportNodeInternalIp: false
  # -- Process Ingress objects without ingressClass annotation/ingressClassName field
  # Overrides value for --watch-ingress-without-class flag of the controller binary
  # Defaults to false
  watchIngressWithoutClass: false
  # -- Process IngressClass per name (additionally as per spec.controller).
  ingressClassByName: false
  # -- This configuration enables Topology Aware Routing feature, used together with service annotation service.kubernetes.io/topology-mode="auto"
  # Defaults to false
  enableTopologyAwareRouting: false
  # -- This configuration defines if Ingress Controller should allow users to set
  # their own *-snippet annotations, otherwise this is forbidden / dropped
  # when users add those annotations.
  # Global snippets in ConfigMap are still respected
  allowSnippetAnnotations: false
  # -- Required for use with CNI based kubernetes installations (such as ones set up by kubeadm),
  # since CNI and hostport don't mix yet. Can be deprecated once https://github.com/kubernetes/kubernetes/issues/23920
  # is merged
  hostNetwork: true
  ## Use host ports 80 and 443
  ## Disabled by default
  hostPort:
    # -- Enable 'hostPort' or not
    enabled: false
    ports:
      # -- 'hostPort' http port
      http: 80
      # -- 'hostPort' https port
      https: 443
  # NetworkPolicy for controller component.
  networkPolicy:
    # -- Enable 'networkPolicy' or not
    enabled: false
  # -- Election ID to use for status update, by default it uses the controller name combined with a suffix of 'leader'
  electionID: ""
  ## This section refers to the creation of the IngressClass resource
  ## IngressClass resources are supported since k8s >= 1.18 and required since k8s >= 1.19
  ingressClassResource:
    # -- Name of the ingressClass
    name: nginx
    # -- Is this ingressClass enabled or not
    enabled: true
    # -- Is this the default ingressClass for the cluster
    default: false
    # -- Controller-value of the controller that is processing this ingressClass
    controllerValue: "k8s.io/ingress-nginx"
    # -- Parameters is a link to a custom resource containing additional
    # configuration for the controller. This is optional if the controller
    # does not require extra parameters.
    parameters: {}
  # -- For backwards compatibility with ingress.class annotation, use ingressClass.
  # Algorithm is as follows, first ingressClassName is considered, if not present, controller looks for ingress.class annotation
  ingressClass: nginx
  # -- Labels to add to the pod container metadata
  podLabels: {}
  #  key: value
  # -- Security context for controller pods
  podSecurityContext: {}
  # -- sysctls for controller pods
  ## Ref: https://kubernetes.io/docs/tasks/administer-cluster/sysctl-cluster/
  sysctls: {}
  # sysctls:
  #   "net.core.somaxconn": "8192"
  # -- Security context for controller containers
  containerSecurityContext: {}
  # -- Allows customization of the source of the IP address or FQDN to report
  # in the ingress status field. By default, it reads the information provided
  # by the service. If disable, the status field reports the IP address of the
  # node or nodes where an ingress controller pod is running.
  publishService:
    # -- Enable 'publishService' or not
    enabled: true
    # -- Allows overriding of the publish service to bind to
    # Must be <namespace>/<service_name>
    pathOverride: ""
  # Limit the scope of the controller to a specific namespace
  scope:
    # -- Enable 'scope' or not
    enabled: false
    # -- Namespace to limit the controller to; defaults to $(POD_NAMESPACE)
    namespace: ""
    # -- When scope.enabled == false, instead of watching all namespaces, we watching namespaces whose labels
    # only match with namespaceSelector. Format like foo=bar. Defaults to empty, means watching all namespaces.
    namespaceSelector: ""
  # -- Allows customization of the configmap / nginx-configmap namespace; defaults to $(POD_NAMESPACE)
  configMapNamespace: ""
  tcp:
    # -- Allows customization of the tcp-services-configmap; defaults to $(POD_NAMESPACE)
    configMapNamespace: ""
    # -- Annotations to be added to the tcp config configmap
    annotations: {}
  udp:
    # -- Allows customization of the udp-services-configmap; defaults to $(POD_NAMESPACE)
    configMapNamespace: ""
    # -- Annotations to be added to the udp config configmap
    annotations: {}
  # -- Maxmind license key to download GeoLite2 Databases.
  ## https://blog.maxmind.com/2019/12/18/significant-changes-to-accessing-and-using-geolite2-databases
  maxmindLicenseKey: ""
  # -- Additional command line arguments to pass to Ingress-Nginx Controller
  # E.g. to specify the default SSL certificate you can use
  extraArgs: {}
  ## extraArgs:
  ##   default-ssl-certificate: "<namespace>/<secret_name>"
  ##   time-buckets: "0.005,0.01,0.025,0.05,0.1,0.25,0.5,1,2.5,5,10"
  ##   length-buckets: "10,20,30,40,50,60,70,80,90,100"
  ##   size-buckets: "10,100,1000,10000,100000,1e+06,1e+07"
  # -- Additional environment variables to set
  extraEnvs: []
  # extraEnvs:
  #   - name: FOO
  #     valueFrom:
  #       secretKeyRef:
  #         key: FOO
  #         name: secret-resource
  # -- Use a `DaemonSet` or `Deployment`
  kind: DaemonSet
  # -- Annotations to be added to the controller Deployment or DaemonSet
  ##
  annotations: {}
  #  keel.sh/pollSchedule: "@every 60m"
  # -- Labels to be added to the controller Deployment or DaemonSet and other resources that do not have option to specify labels
  ##
  labels: {}
  #  keel.sh/policy: patch
  #  keel.sh/trigger: poll
  # -- The update strategy to apply to the Deployment or DaemonSet
  ##
  updateStrategy: {}
  #  rollingUpdate:
  #    maxUnavailable: 1
  #  type: RollingUpdate
  # -- `minReadySeconds` to avoid killing pods before we are ready
  ##
  minReadySeconds: 0
  # -- Node tolerations for server scheduling to nodes with taints
  ## Ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/
  ##
  tolerations: []
  #  - key: "key"
  #    operator: "Equal|Exists"
  #    value: "value"
  #    effect: "NoSchedule|PreferNoSchedule|NoExecute(1.6 only)"
  # -- Affinity and anti-affinity rules for server scheduling to nodes
  ## Ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/#affinity-and-anti-affinity
  ##
  affinity: {}
  # # An example of preferred pod anti-affinity, weight is in the range 1-100
  # podAntiAffinity:
  #   preferredDuringSchedulingIgnoredDuringExecution:
  #   - weight: 100
  #     podAffinityTerm:
  #       labelSelector:
  #         matchExpressions:
  #         - key: app.kubernetes.io/name
  #           operator: In
  #           values:
  #           - ingress-nginx
  #         - key: app.kubernetes.io/instance
  #           operator: In
  #           values:
  #           - ingress-nginx
  #         - key: app.kubernetes.io/component
  #           operator: In
  #           values:
  #           - controller
  #       topologyKey: kubernetes.io/hostname
  # # An example of required pod anti-affinity
  # podAntiAffinity:
  #   requiredDuringSchedulingIgnoredDuringExecution:
  #   - labelSelector:
  #       matchExpressions:
  #       - key: app.kubernetes.io/name
  #         operator: In
  #         values:
  #         - ingress-nginx
  #       - key: app.kubernetes.io/instance
  #         operator: In
  #         values:
  #         - ingress-nginx
  #       - key: app.kubernetes.io/component
  #         operator: In
  #         values:
  #         - controller
  #     topologyKey: "kubernetes.io/hostname"
  # -- Topology spread constraints rely on node labels to identify the topology domain(s) that each Node is in.
  ## Ref: https://kubernetes.io/docs/concepts/workloads/pods/pod-topology-spread-constraints/
  ##
  topologySpreadConstraints: []
  # - labelSelector:
  #     matchLabels:
  #       app.kubernetes.io/name: '{{ include "ingress-nginx.name" . }}'
  #       app.kubernetes.io/instance: '{{ .Release.Name }}'
  #       app.kubernetes.io/component: controller
  #   topologyKey: topology.kubernetes.io/zone
  #   maxSkew: 1
  #   whenUnsatisfiable: ScheduleAnyway
  # - labelSelector:
  #     matchLabels:
  #       app.kubernetes.io/name: '{{ include "ingress-nginx.name" . }}'
  #       app.kubernetes.io/instance: '{{ .Release.Name }}'
  #       app.kubernetes.io/component: controller
  #   topologyKey: kubernetes.io/hostname
  #   maxSkew: 1
  #   whenUnsatisfiable: ScheduleAnyway
  # -- `terminationGracePeriodSeconds` to avoid killing pods before we are ready
  ## wait up to five minutes for the drain of connections
  ##
  terminationGracePeriodSeconds: 300
  # -- Node labels for controller pod assignment
  ## Ref: https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/
  ##
  nodeSelector:
    kubernetes.io/os: linux
    ingress: "true"
  ## Liveness and readiness probe values
  ## Ref: https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#container-probes
  ##
  ## startupProbe:
  ##   httpGet:
  ##     # should match container.healthCheckPath
  ##     path: "/healthz"
  ##     port: 10254
  ##     scheme: HTTP
  ##   initialDelaySeconds: 5
  ##   periodSeconds: 5
  ##   timeoutSeconds: 2
  ##   successThreshold: 1
  ##   failureThreshold: 5
  livenessProbe:
    httpGet:
      # should match container.healthCheckPath
      path: "/healthz"
      port: 10254
      scheme: HTTP
    initialDelaySeconds: 10
    periodSeconds: 10
    timeoutSeconds: 1
    successThreshold: 1
    failureThreshold: 5
  readinessProbe:
    httpGet:
      # should match container.healthCheckPath
      path: "/healthz"
      port: 10254
      scheme: HTTP
    initialDelaySeconds: 10
    periodSeconds: 10
    timeoutSeconds: 1
    successThreshold: 1
    failureThreshold: 3
  # -- Path of the health check endpoint. All requests received on the port defined by
  # the healthz-port parameter are forwarded internally to this path.
  healthCheckPath: "/healthz"
  # -- Address to bind the health check endpoint.
  # It is better to set this option to the internal node address
  # if the Ingress-Nginx Controller is running in the `hostNetwork: true` mode.
  healthCheckHost: ""
  # -- Annotations to be added to controller pods
  ##
  podAnnotations: {}
  replicaCount: 1
  # -- Minimum available pods set in PodDisruptionBudget.
  # Define either 'minAvailable' or 'maxUnavailable', never both.
  minAvailable: 1
  # -- Maximum unavailable pods set in PodDisruptionBudget. If set, 'minAvailable' is ignored.
  # maxUnavailable: 1

  ## Define requests resources to avoid probe issues due to CPU utilization in busy nodes
  ## ref: https://github.com/kubernetes/ingress-nginx/issues/4735#issuecomment-551204903
  ## Ideally, there should be no limits.
  ## https://engineering.indeedblog.com/blog/2019/12/cpu-throttling-regression-fix/
  resources:
    ##  limits:
    ##    cpu: 100m
    ##    memory: 90Mi
    requests:
      cpu: 100m
      memory: 90Mi
  # Mutually exclusive with keda autoscaling
  autoscaling:
    enabled: false
    annotations: {}
    minReplicas: 1
    maxReplicas: 11
    targetCPUUtilizationPercentage: 50
    targetMemoryUtilizationPercentage: 50
    behavior: {}
    # scaleDown:
    #   stabilizationWindowSeconds: 300
    #   policies:
    #   - type: Pods
    #     value: 1
    #     periodSeconds: 180
    # scaleUp:
    #   stabilizationWindowSeconds: 300
    #   policies:
    #   - type: Pods
    #     value: 2
    #     periodSeconds: 60
  autoscalingTemplate: []
  # Custom or additional autoscaling metrics
  # ref: https://kubernetes.io/docs/tasks/run-application/horizontal-pod-autoscale/#support-for-custom-metrics
  # - type: Pods
  #   pods:
  #     metric:
  #       name: nginx_ingress_controller_nginx_process_requests_total
  #     target:
  #       type: AverageValue
  #       averageValue: 10000m

  # Mutually exclusive with hpa autoscaling
  keda:
    apiVersion: "keda.sh/v1alpha1"
    ## apiVersion changes with keda 1.x vs 2.x
    ## 2.x = keda.sh/v1alpha1
    ## 1.x = keda.k8s.io/v1alpha1
    enabled: false
    minReplicas: 1
    maxReplicas: 11
    pollingInterval: 30
    cooldownPeriod: 300
    # fallback:
    #   failureThreshold: 3
    #   replicas: 11
    restoreToOriginalReplicaCount: false
    scaledObject:
      annotations: {}
      # Custom annotations for ScaledObject resource
      #  annotations:
      # key: value
    triggers: []
    # - type: prometheus
    #   metadata:
    #     serverAddress: http://<prometheus-host>:9090
    #     metricName: http_requests_total
    #     threshold: '100'
    #     query: sum(rate(http_requests_total{deployment="my-deployment"}[2m]))
    behavior: {}
    # scaleDown:
    #   stabilizationWindowSeconds: 300
    #   policies:
    #   - type: Pods
    #     value: 1
    #     periodSeconds: 180
    # scaleUp:
    #   stabilizationWindowSeconds: 300
    #   policies:
    #   - type: Pods
    #     value: 2
    #     periodSeconds: 60
  # -- Enable mimalloc as a drop-in replacement for malloc.
  ## ref: https://github.com/microsoft/mimalloc
  ##
  enableMimalloc: true
  ## Override NGINX template
  customTemplate:
    configMapName: ""
    configMapKey: ""
  service:
    # -- Enable controller services or not. This does not influence the creation of either the admission webhook or the metrics service.
    enabled: true
    external:
      # -- Enable the external controller service or not. Useful for internal-only deployments.
      enabled: true
    # -- Annotations to be added to the external controller service. See `controller.service.internal.annotations` for annotations to be added to the internal controller service.
    annotations: {}
    # -- Labels to be added to both controller services.
    labels: {}
    # -- Type of the external controller service.
    # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#publishing-services-service-types
    type: ClusterIP
    # -- Pre-defined cluster internal IP address of the external controller service. Take care of collisions with existing services.
    # This value is immutable. Set once, it can not be changed without deleting and re-creating the service.
    # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#choosing-your-own-ip-address
    clusterIP: ""
    # -- List of node IP addresses at which the external controller service is available.
    # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#external-ips
    externalIPs: []
    # -- Deprecated: Pre-defined IP address of the external controller service. Used by cloud providers to connect the resulting load balancer service to a pre-existing static IP.
    # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#loadbalancer
    loadBalancerIP: ""
    # -- Restrict access to the external controller service. Values must be CIDRs. Allows any source address by default.
    loadBalancerSourceRanges: []
    # -- Load balancer class of the external controller service. Used by cloud providers to select a load balancer implementation other than the cloud provider default.
    # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#load-balancer-class
    loadBalancerClass: ""
    # -- Enable node port allocation for the external controller service or not. Applies to type `LoadBalancer` only.
    # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#load-balancer-nodeport-allocation
    # allocateLoadBalancerNodePorts: true

    # -- External traffic policy of the external controller service. Set to "Local" to preserve source IP on providers supporting it.
    # Ref: https://kubernetes.io/docs/tasks/access-application-cluster/create-external-load-balancer/#preserving-the-client-source-ip
    externalTrafficPolicy: ""
    # -- Session affinity of the external controller service. Must be either "None" or "ClientIP" if set. Defaults to "None".
    # Ref: https://kubernetes.io/docs/reference/networking/virtual-ips/#session-affinity
    sessionAffinity: ""
    # -- Specifies the health check node port (numeric port number) for the external controller service.
    # If not specified, the service controller allocates a port from your cluster's node port range.
    # Ref: https://kubernetes.io/docs/tasks/access-application-cluster/create-external-load-balancer/#preserving-the-client-source-ip
    # healthCheckNodePort: 0

    # -- Represents the dual-stack capabilities of the external controller service. Possible values are SingleStack, PreferDualStack or RequireDualStack.
    # Fields `ipFamilies` and `clusterIP` depend on the value of this field.
    # Ref: https://kubernetes.io/docs/concepts/services-networking/dual-stack/#services
    ipFamilyPolicy: SingleStack
    # -- List of IP families (e.g. IPv4, IPv6) assigned to the external controller service. This field is usually assigned automatically based on cluster configuration and the `ipFamilyPolicy` field.
    # Ref: https://kubernetes.io/docs/concepts/services-networking/dual-stack/#services
    ipFamilies:
      - IPv4
    # -- Enable the HTTP listener on both controller services or not.
    enableHttp: true
    # -- Enable the HTTPS listener on both controller services or not.
    enableHttps: true
    ports:
      # -- Port the external HTTP listener is published with.
      http: 80
      # -- Port the external HTTPS listener is published with.
      https: 443
    targetPorts:
      # -- Port of the ingress controller the external HTTP listener is mapped to.
      http: http
      # -- Port of the ingress controller the external HTTPS listener is mapped to.
      https: https
    # -- Declare the app protocol of the external HTTP and HTTPS listeners or not. Supersedes provider-specific annotations for declaring the backend protocol.
    # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#application-protocol
    appProtocol: true
    nodePorts:
      # -- Node port allocated for the external HTTP listener. If left empty, the service controller allocates one from the configured node port range.
      http: ""
      # -- Node port allocated for the external HTTPS listener. If left empty, the service controller allocates one from the configured node port range.
      https: ""
      # -- Node port mapping for external TCP listeners. If left empty, the service controller allocates them from the configured node port range.
      # Example:
      # tcp:
      #   8080: 30080
      tcp: {}
      # -- Node port mapping for external UDP listeners. If left empty, the service controller allocates them from the configured node port range.
      # Example:
      # udp:
      #   53: 30053
      udp: {}
    internal:
      # -- Enable the internal controller service or not. Remember to configure `controller.service.internal.annotations` when enabling this.
      enabled: false
      # -- Annotations to be added to the internal controller service. Mandatory for the internal controller service to be created. Varies with the cloud service.
      # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#internal-load-balancer
      annotations: {}
      # -- Type of the internal controller service.
      # Defaults to the value of `controller.service.type`.
      # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#publishing-services-service-types
      type: ""
      # -- Pre-defined cluster internal IP address of the internal controller service. Take care of collisions with existing services.
      # This value is immutable. Set once, it can not be changed without deleting and re-creating the service.
      # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#choosing-your-own-ip-address
      clusterIP: ""
      # -- List of node IP addresses at which the internal controller service is available.
      # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#external-ips
      externalIPs: []
      # -- Deprecated: Pre-defined IP address of the internal controller service. Used by cloud providers to connect the resulting load balancer service to a pre-existing static IP.
      # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#loadbalancer
      loadBalancerIP: ""
      # -- Restrict access to the internal controller service. Values must be CIDRs. Allows any source address by default.
      loadBalancerSourceRanges: []
      # -- Load balancer class of the internal controller service. Used by cloud providers to select a load balancer implementation other than the cloud provider default.
      # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#load-balancer-class
      loadBalancerClass: ""
      # -- Enable node port allocation for the internal controller service or not. Applies to type `LoadBalancer` only.
      # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#load-balancer-nodeport-allocation
      # allocateLoadBalancerNodePorts: true

      # -- External traffic policy of the internal controller service. Set to "Local" to preserve source IP on providers supporting it.
      # Ref: https://kubernetes.io/docs/tasks/access-application-cluster/create-external-load-balancer/#preserving-the-client-source-ip
      externalTrafficPolicy: ""
      # -- Session affinity of the internal controller service. Must be either "None" or "ClientIP" if set. Defaults to "None".
      # Ref: https://kubernetes.io/docs/reference/networking/virtual-ips/#session-affinity
      sessionAffinity: ""
      # -- Specifies the health check node port (numeric port number) for the internal controller service.
      # If not specified, the service controller allocates a port from your cluster's node port range.
      # Ref: https://kubernetes.io/docs/tasks/access-application-cluster/create-external-load-balancer/#preserving-the-client-source-ip
      # healthCheckNodePort: 0

      # -- Represents the dual-stack capabilities of the internal controller service. Possible values are SingleStack, PreferDualStack or RequireDualStack.
      # Fields `ipFamilies` and `clusterIP` depend on the value of this field.
      # Ref: https://kubernetes.io/docs/concepts/services-networking/dual-stack/#services
      ipFamilyPolicy: SingleStack
      # -- List of IP families (e.g. IPv4, IPv6) assigned to the internal controller service. This field is usually assigned automatically based on cluster configuration and the `ipFamilyPolicy` field.
      # Ref: https://kubernetes.io/docs/concepts/services-networking/dual-stack/#services
      ipFamilies:
        - IPv4
      ports: {}
      # -- Port the internal HTTP listener is published with.
      # Defaults to the value of `controller.service.ports.http`.
      # http: 80
      # -- Port the internal HTTPS listener is published with.
      # Defaults to the value of `controller.service.ports.https`.
      # https: 443
      targetPorts: {}
      # -- Port of the ingress controller the internal HTTP listener is mapped to.
      # Defaults to the value of `controller.service.targetPorts.http`.
      # http: http
      # -- Port of the ingress controller the internal HTTPS listener is mapped to.
      # Defaults to the value of `controller.service.targetPorts.https`.
      # https: https
      # -- Declare the app protocol of the internal HTTP and HTTPS listeners or not. Supersedes provider-specific annotations for declaring the backend protocol.
      # Ref: https://kubernetes.io/docs/concepts/services-networking/service/#application-protocol
      appProtocol: true
      nodePorts:
        # -- Node port allocated for the internal HTTP listener. If left empty, the service controller allocates one from the configured node port range.
        http: ""
        # -- Node port allocated for the internal HTTPS listener. If left empty, the service controller allocates one from the configured node port range.
        https: ""
        # -- Node port mapping for internal TCP listeners. If left empty, the service controller allocates them from the configured node port range.
        # Example:
        # tcp:
        #   8080: 30080
        tcp: {}
        # -- Node port mapping for internal UDP listeners. If left empty, the service controller allocates them from the configured node port range.
        # Example:
        # udp:
        #   53: 30053
        udp: {}
  # shareProcessNamespace enables process namespace sharing within the pod.
  # This can be used for example to signal log rotation using `kill -USR1` from a sidecar.
  shareProcessNamespace: false
  # -- Additional containers to be added to the controller pod.
  # See https://github.com/lemonldap-ng-controller/lemonldap-ng-controller as example.
  extraContainers: []
  #  - name: my-sidecar
  #    image: nginx:latest
  #  - name: lemonldap-ng-controller
  #    image: lemonldapng/lemonldap-ng-controller:0.2.0
  #    args:
  #      - /lemonldap-ng-controller
  #      - --alsologtostderr
  #      - --configmap=$(POD_NAMESPACE)/lemonldap-ng-configuration
  #    env:
  #      - name: POD_NAME
  #        valueFrom:
  #          fieldRef:
  #            fieldPath: metadata.name
  #      - name: POD_NAMESPACE
  #        valueFrom:
  #          fieldRef:
  #            fieldPath: metadata.namespace
  #    volumeMounts:
  #    - name: copy-portal-skins
  #      mountPath: /srv/var/lib/lemonldap-ng/portal/skins
  # -- Additional volumeMounts to the controller main container.
  extraVolumeMounts: []
  #  - name: copy-portal-skins
  #    mountPath: /var/lib/lemonldap-ng/portal/skins
  # -- Additional volumes to the controller pod.
  extraVolumes: []
  #  - name: copy-portal-skins
  #    emptyDir: {}
  # -- Containers, which are run before the app containers are started.
  extraInitContainers: []
  # - name: init-myservice
  #   image: busybox
  #   command: ['sh', '-c', 'until nslookup myservice; do echo waiting for myservice; sleep 2; done;']
  # -- Modules, which are mounted into the core nginx image. See values.yaml for a sample to add opentelemetry module
  extraModules: []
  # - name: mytestmodule
  #   image:
  #     registry: registry.k8s.io
  #     image: ingress-nginx/mytestmodule
  #     ## for backwards compatibility consider setting the full image url via the repository value below
  #     ## use *either* current default registry/image or repository format or installing chart by providing the values.yaml will fail
  #     ## repository:
  #     tag: "v1.0.0"
  #     digest: ""
  #     distroless: false
  #   containerSecurityContext:
  #     runAsNonRoot: true
  #     runAsUser: <user-id>
  #     allowPrivilegeEscalation: false
  #     seccompProfile:
  #       type: RuntimeDefault
  #     capabilities:
  #       drop:
  #       - ALL
  #     readOnlyRootFilesystem: true
  #   resources: {}
  #
  # The image must contain a `/usr/local/bin/init_module.sh` executable, which
  # will be executed as initContainers, to move its config files within the
  # mounted volume.

  opentelemetry:
    enabled: false
    name: opentelemetry
    image:
      registry: registry.k8s.io
      image: ingress-nginx/opentelemetry
      ## for backwards compatibility consider setting the full image url via the repository value below
      ## use *either* current default registry/image or repository format or installing chart by providing the values.yaml will fail
      ## repository:
      tag: "v20230721-3e2062ee5"
      digest: sha256:13bee3f5223883d3ca62fee7309ad02d22ec00ff0d7033e3e9aca7a9f60fd472
      distroless: true
    containerSecurityContext:
      runAsNonRoot: true
      # -- The image's default user, inherited from its base image `cgr.dev/chainguard/static`.
      runAsUser: 65532
      allowPrivilegeEscalation: false
      seccompProfile:
        type: RuntimeDefault
      capabilities:
        drop:
          - ALL
      readOnlyRootFilesystem: true
    resources: {}
  admissionWebhooks:
    name: admission
    annotations: {}
    # ignore-check.kube-linter.io/no-read-only-rootfs: "This deployment needs write access to root filesystem".

    ## Additional annotations to the admission webhooks.
    ## These annotations will be added to the ValidatingWebhookConfiguration and
    ## the Jobs Spec of the admission webhooks.
    enabled: false
    # -- Additional environment variables to set
    extraEnvs: []
    # extraEnvs:
    #   - name: FOO
    #     valueFrom:
    #       secretKeyRef:
    #         key: FOO
    #         name: secret-resource
    # -- Admission Webhook failure policy to use
    failurePolicy: Fail
    # timeoutSeconds: 10
    port: 8443
    certificate: "/usr/local/certificates/cert"
    key: "/usr/local/certificates/key"
    namespaceSelector: {}
    objectSelector: {}
    # -- Labels to be added to admission webhooks
    labels: {}
    # -- Use an existing PSP instead of creating one
    existingPsp: ""
    service:
      annotations: {}
      # clusterIP: ""
      externalIPs: []
      # loadBalancerIP: ""
      loadBalancerSourceRanges: []
      servicePort: 443
      type: ClusterIP
    createSecretJob:
      name: create
      # -- Security context for secret creation containers
      securityContext:
        runAsNonRoot: true
        runAsUser: 65532
        allowPrivilegeEscalation: false
        seccompProfile:
          type: RuntimeDefault
        capabilities:
          drop:
            - ALL
        readOnlyRootFilesystem: true
      resources: {}
      # limits:
      #   cpu: 10m
      #   memory: 20Mi
      # requests:
      #   cpu: 10m
      #   memory: 20Mi
    patchWebhookJob:
      name: patch
      # -- Security context for webhook patch containers
      securityContext:
        runAsNonRoot: true
        runAsUser: 65532
        allowPrivilegeEscalation: false
        seccompProfile:
          type: RuntimeDefault
        capabilities:
          drop:
            - ALL
        readOnlyRootFilesystem: true
      resources: {}
    patch:
      enabled: true
      image:
        registry: registry.cn-hangzhou.aliyuncs.com
        image: google_containers/kube-webhook-certgen
        ## for backwards compatibility consider setting the full image url via the repository value below
        ## use *either* current default registry/image or repository format or installing chart by providing the values.yaml will fail
        ## repository:
        tag: v1.4.0
        # digest: sha256:44d1d0e9f19c63f58b380c5fddaca7cf22c7cee564adeff365225a5df5ef3334
        pullPolicy: IfNotPresent
      # -- Provide a priority class name to the webhook patching job
      ##
      priorityClassName: ""
      podAnnotations: {}
      # NetworkPolicy for webhook patch
      networkPolicy:
        # -- Enable 'networkPolicy' or not
        enabled: false
      nodeSelector:
        kubernetes.io/os: linux
      tolerations: []
      # -- Labels to be added to patch job resources
      labels: {}
      # -- Security context for secret creation & webhook patch pods
      securityContext: {}
    # Use certmanager to generate webhook certs
    certManager:
      enabled: false
      # self-signed root certificate
      rootCert:
        # default to be 5y
        duration: ""
      admissionCert:
        # default to be 1y
        duration: ""
        # issuerRef:
        #   name: "issuer"
        #   kind: "ClusterIssuer"
  metrics:
    port: 10254
    portName: metrics
    # if this port is changed, change healthz-port: in extraArgs: accordingly
    enabled: false
    service:
      annotations: {}
      # prometheus.io/scrape: "true"
      # prometheus.io/port: "10254"
      # -- Labels to be added to the metrics service resource
      labels: {}
      # clusterIP: ""

      # -- List of IP addresses at which the stats-exporter service is available
      ## Ref: https://kubernetes.io/docs/concepts/services-networking/service/#external-ips
      ##
      externalIPs: []
      # loadBalancerIP: ""
      loadBalancerSourceRanges: []
      servicePort: 10254
      type: ClusterIP
      # externalTrafficPolicy: ""
      # nodePort: ""
    serviceMonitor:
      enabled: false
      additionalLabels: {}
      annotations: {}
      ## The label to use to retrieve the job name from.
      ## jobLabel: "app.kubernetes.io/name"
      namespace: ""
      namespaceSelector: {}
      ## Default: scrape .Release.Namespace or namespaceOverride only
      ## To scrape all, use the following:
      ## namespaceSelector:
      ##   any: true
      scrapeInterval: 30s
      # honorLabels: true
      targetLabels: []
      relabelings: []
      metricRelabelings: []
    prometheusRule:
      enabled: false
      additionalLabels: {}
      # namespace: ""
      rules: []
      # # These are just examples rules, please adapt them to your needs
      # - alert: NGINXConfigFailed
      #   expr: count(nginx_ingress_controller_config_last_reload_successful == 0) > 0
      #   for: 1s
      #   labels:
      #     severity: critical
      #   annotations:
      #     description: bad ingress config - nginx config test failed
      #     summary: uninstall the latest ingress changes to allow config reloads to resume
      # # By default a fake self-signed certificate is generated as default and
      # # it is fine if it expires. If `--default-ssl-certificate` flag is used
      # # and a valid certificate passed please do not filter for `host` label!
      # # (i.e. delete `{host!="_"}` so also the default SSL certificate is
      # # checked for expiration)
      # - alert: NGINXCertificateExpiry
      #   expr: (avg(nginx_ingress_controller_ssl_expire_time_seconds{host!="_"}) by (host) - time()) < 604800
      #   for: 1s
      #   labels:
      #     severity: critical
      #   annotations:
      #     description: ssl certificate(s) will expire in less then a week
      #     summary: renew expiring certificates to avoid downtime
      # - alert: NGINXTooMany500s
      #   expr: 100 * ( sum( nginx_ingress_controller_requests{status=~"5.+"} ) / sum(nginx_ingress_controller_requests) ) > 5
      #   for: 1m
      #   labels:
      #     severity: warning
      #   annotations:
      #     description: Too many 5XXs
      #     summary: More than 5% of all requests returned 5XX, this requires your attention
      # - alert: NGINXTooMany400s
      #   expr: 100 * ( sum( nginx_ingress_controller_requests{status=~"4.+"} ) / sum(nginx_ingress_controller_requests) ) > 5
      #   for: 1m
      #   labels:
      #     severity: warning
      #   annotations:
      #     description: Too many 4XXs
      #     summary: More than 5% of all requests returned 4XX, this requires your attention
  # -- Improve connection draining when ingress controller pod is deleted using a lifecycle hook:
  # With this new hook, we increased the default terminationGracePeriodSeconds from 30 seconds
  # to 300, allowing the draining of connections up to five minutes.
  # If the active connections end before that, the pod will terminate gracefully at that time.
  # To effectively take advantage of this feature, the Configmap feature
  # worker-shutdown-timeout new value is 240s instead of 10s.
  ##
  lifecycle:
    preStop:
      exec:
        command:
          - /wait-shutdown
  priorityClassName: ""

# -- Rollback limit

##

revisionHistoryLimit: 10

## Default 404 backend

##

defaultBackend:
  ##
  enabled: false
  name: defaultbackend
  image:
    registry: registry.k8s.io
    image: defaultbackend-amd64
    ## for backwards compatibility consider setting the full image url via the repository value below
    ## use *either* current default registry/image or repository format or installing chart by providing the values.yaml will fail
    ## repository:
    tag: "1.5"
    pullPolicy: IfNotPresent
    runAsNonRoot: true
    # nobody user -> uid 65534
    runAsUser: 65534
    allowPrivilegeEscalation: false
    seccompProfile:
      type: RuntimeDefault
    readOnlyRootFilesystem: true
  # -- Use an existing PSP instead of creating one
  existingPsp: ""
  extraArgs: {}
  serviceAccount:
    create: true
    name: ""
    automountServiceAccountToken: true
  # -- Additional environment variables to set for defaultBackend pods
  extraEnvs: []
  port: 8080
  ## Readiness and liveness probes for default backend
  ## Ref: https://kubernetes.io/docs/tasks/configure-pod-container/configure-liveness-readiness-probes/
  ##
  livenessProbe:
    failureThreshold: 3
    initialDelaySeconds: 30
    periodSeconds: 10
    successThreshold: 1
    timeoutSeconds: 5
  readinessProbe:
    failureThreshold: 6
    initialDelaySeconds: 0
    periodSeconds: 5
    successThreshold: 1
    timeoutSeconds: 5
  # -- The update strategy to apply to the Deployment or DaemonSet
  ##
  updateStrategy: {}
  # rollingUpdate:
  #   maxUnavailable: 1
  # type: RollingUpdate
  # -- `minReadySeconds` to avoid killing pods before we are ready
  ##
  minReadySeconds: 0
  # -- Node tolerations for server scheduling to nodes with taints
  ## Ref: https://kubernetes.io/docs/concepts/configuration/assign-pod-node/
  ##
  tolerations: []
  # - key: "key"
  #   operator: "Equal|Exists"
  #   value: "value"
  #   effect: "NoSchedule|PreferNoSchedule|NoExecute(1.6 only)"
  affinity: {}
  # -- Security context for default backend pods
  podSecurityContext: {}
  # -- Security context for default backend containers
  containerSecurityContext: {}
  # -- Labels to add to the pod container metadata
  podLabels: {}
  # key: value
  # -- Node labels for default backend pod assignment
  ## Ref: https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/
  ##
  nodeSelector:
    kubernetes.io/os: linux
  # -- Annotations to be added to default backend pods
  ##
  podAnnotations: {}
  replicaCount: 1
  minAvailable: 1
  resources: {}
  # limits:
  #   cpu: 10m
  #   memory: 20Mi
  # requests:
  #   cpu: 10m
  #   memory: 20Mi
  extraVolumeMounts: []
  ## Additional volumeMounts to the default backend container.
  # - name: copy-portal-skins
  #   mountPath: /var/lib/lemonldap-ng/portal/skins
  extraVolumes: []
  ## Additional volumes to the default backend pod.
  # - name: copy-portal-skins
  #   emptyDir: {}
  extraConfigMaps: []
  ## Additional configmaps to the default backend pod.
  # - name: my-extra-configmap-1
  #   labels:
  #     type: config-1
  #   data:
  #     extra_file_1.html: |
  #       <!-- Extra HTML content for ConfigMap 1 -->
  # - name: my-extra-configmap-2
  #   labels:
  #     type: config-2
  #   data:
  #     extra_file_2.html: |
  #       <!-- Extra HTML content for ConfigMap 2 -->
  autoscaling:
    annotations: {}
    enabled: false
    minReplicas: 1
    maxReplicas: 2
    targetCPUUtilizationPercentage: 50
    targetMemoryUtilizationPercentage: 50
  # NetworkPolicy for default backend component.
  networkPolicy:
    # -- Enable 'networkPolicy' or not
    enabled: false
  service:
    annotations: {}
    # clusterIP: ""
    # -- List of IP addresses at which the default backend service is available
    ## Ref: https://kubernetes.io/docs/concepts/services-networking/service/#external-ips
    ##
    externalIPs: []
    # loadBalancerIP: ""
    loadBalancerSourceRanges: []
    servicePort: 80
    type: ClusterIP
  priorityClassName: ""
  # -- Labels to be added to the default backend resources
  labels: {}

## Enable RBAC as per https://github.com/kubernetes/ingress-nginx/blob/main/docs/deploy/rbac.md and https://github.com/kubernetes/ingress-nginx/issues/266

rbac:
  create: true
  scope: false

## If true, create & use Pod Security Policy resources

## https://kubernetes.io/docs/concepts/policy/pod-security-policy/

podSecurityPolicy:
  enabled: false

serviceAccount:
  create: true
  name: ""
  automountServiceAccountToken: true
  # -- Annotations for the controller service account
  annotations: {}

# -- Optional array of imagePullSecrets containing private registry credentials

## Ref: https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/

imagePullSecrets: []

# - name: secretName

# -- TCP service key-value pairs

## Ref: https://github.com/kubernetes/ingress-nginx/blob/main/docs/user-guide/exposing-tcp-udp-services.md

##

tcp: {}

# 8080: "default/example-tcp-svc:9000"

# -- UDP service key-value pairs

## Ref: https://github.com/kubernetes/ingress-nginx/blob/main/docs/user-guide/exposing-tcp-udp-services.md

##

udp: {}

# 53: "kube-system/kube-dns:53"

# -- Prefix for TCP and UDP ports names in ingress controller service

## Some cloud providers, like Yandex Cloud may have a requirements for a port name regex to support cloud load balancer integration

portNamePrefix: ""

# -- (string) A base64-encoded Diffie-Hellman parameter.

# This can be generated with: `openssl dhparam 4096 2> /dev/null | base64`

## Ref: https://github.com/kubernetes/ingress-nginx/tree/main/docs/examples/customization/ssl-dh-param

dhParam: ""

3.4.3 Install ingress-nginx with Helm

[root@k8s-master ingress-nginx]# helm install ingress-nginx -n monitoring .

Release "ingress-nginx" has been upgraded. Happy Helming!

NAME: ingress-nginx

LAST DEPLOYED: Sat Mar 2 03:54:37 2024

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

Get the application URL by running these commands:
  export POD_NAME="$(kubectl get pods --namespace monitoring --selector app.kubernetes.io/name=ingress-nginx,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/component=controller --output jsonpath="{.items[0].metadata.name}")"
  kubectl port-forward --namespace monitoring "${POD_NAME}" 8080:80
  echo "Visit http://127.0.0.1:8080 to access your application."

An example Ingress that makes use of the controller:
  apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    name: example
    namespace: foo
  spec:
    ingressClassName: nginx
    rules:
      - host: www.example.com
        http:
          paths:
            - pathType: Prefix
              backend:
                service:
                  name: exampleService
                  port:
                    number: 80
              path: /
    # This section is only required if TLS is to be enabled for the Ingress
    tls:
      - hosts:
        - www.example.com
        secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

  apiVersion: v1
  kind: Secret
  metadata:
    name: example-tls
    namespace: foo
  data:
    tls.crt: <base64 encoded cert>
    tls.key: <base64 encoded key>
  type: kubernetes.io/tls

3.4.4 Check the ingress IP

[root@k8s-master ingress-nginx]# kubectl get po ingress-nginx-controller-6gz5k -n monitoring -o wide

NAME                             READY   STATUS    RESTARTS   AGE   IP             NODE          NOMINATED NODE   READINESS GATES

ingress-nginx-controller-6gz5k   1/1     Running   0          32m   10.10.10.177   k8s-node-01   <none>           <none>
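
The pod name used above (ingress-nginx-controller-6gz5k) changes whenever the pod is recreated, so it may be more convenient to look the controller up by label. The following is a minimal sketch, assuming the release name ingress-nginx and the namespace monitoring from the install step. It reuses the label selector printed in the chart NOTES and reads the IP from the pod's .status.podIP field. The helper names controller_selector and controller_ip and the RUN_LOOKUP switch are hypothetical and exist only for illustration.

```shell
#!/bin/sh
# Hypothetical helpers; release name and namespace are assumptions taken from the install step.

# Build the label selector that the chart NOTES use to find the controller pod.
# $1 = Helm release name
controller_selector() {
  printf 'app.kubernetes.io/name=ingress-nginx,app.kubernetes.io/instance=%s,app.kubernetes.io/component=controller' "$1"
}

# Print the IP of the first matching controller pod.
# $1 = Helm release name, $2 = namespace (requires kubectl and cluster access)
controller_ip() {
  kubectl get pods --namespace "$2" \
    --selector "$(controller_selector "$1")" \
    --output jsonpath='{.items[0].status.podIP}'
}

# Always show the selector; query the cluster only when explicitly requested.
controller_selector ingress-nginx
echo
if [ "${RUN_LOOKUP:-0}" = "1" ]; then
  controller_ip ingress-nginx monitoring
  echo
fi
```

Running the script with RUN_LOOKUP=1 on a machine that has cluster access should print the same IP that appears in the IP column of the kubectl output above.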

3.4.5 Add an entry to the hosts file, then access the service directly from a browser
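
As a rough sketch of this step, the snippet below appends a mapping from the controller IP (10.10.10.177, taken from the kubectl output above) to a host name, and skips the write if the same mapping already exists. The host name prometheus.example.com and the helper add_hosts_entry are assumptions for illustration only; use the host configured in your own Ingress rule. By default it writes to a temporary file so it can be tried safely. Point HOSTS_FILE at /etc/hosts (as root) to apply it for real.

```shell
#!/bin/sh
# Hypothetical helper: add "IP HOST" to a hosts-style file unless it is already present.
# $1 = hosts file, $2 = IP address, $3 = host name
add_hosts_entry() {
  hosts_file="$1"; ip="$2"; host="$3"
  # awk exits 0 only if some line starts with this IP and lists this host name.
  if awk -v ip="$ip" -v host="$host" \
      '$1 == ip { for (i = 2; i <= NF; i++) if ($i == host) found = 1 } END { exit !found }' \
      "$hosts_file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$host" >> "$hosts_file"
}

# Assumption: prometheus.example.com stands in for the host of your Ingress rule.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"
add_hosts_entry "$HOSTS_FILE" 10.10.10.177 prometheus.example.com
add_hosts_entry "$HOSTS_FILE" 10.10.10.177 prometheus.example.com  # second call is a no-op
cat "$HOSTS_FILE"
```

The entry has to be added on the machine that runs the browser, not on the cluster nodes, because name resolution happens on the client side.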