Contents

- The LAS end-to-end speech recognition model

- Introduction

- Model

- Model code snippets

The LAS end-to-end speech recognition model

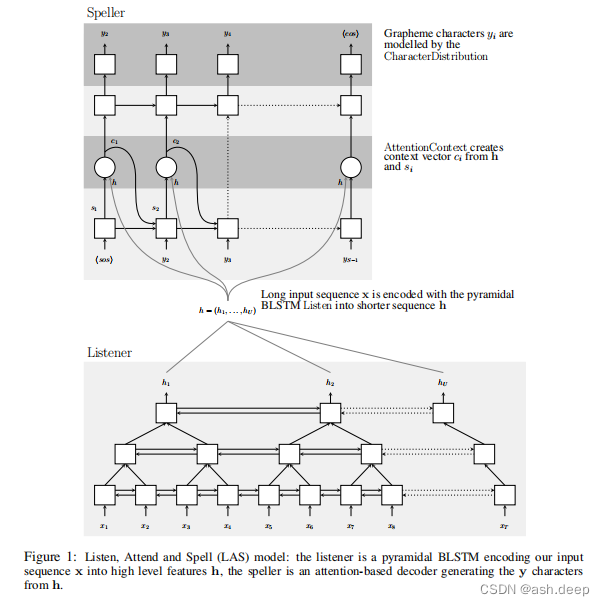

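Listen, Attend and Spell (LAS) is a neural network made up of two parts: a listener and a speller. The listener is a pyramidal RNN encoder that takes filterbank (fbank) features as input. The speller is an attention-based RNN decoder that emits characters. All components of the model are trained jointly. Unlike traditional CTC models, LAS makes no independence assumption between the output characters.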
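In the LAS paper's notation, x is the input feature sequence and y = (y_1, ..., y_S) is the output character sequence. The listener and speller then combine as shown below. This is reproduced for reference; the repository linked at the end may not follow this notation.

```latex
\begin{aligned}
\mathbf{h} &= \operatorname{Listen}(\mathbf{x}) \\
P(\mathbf{y} \mid \mathbf{x}) &= \operatorname{AttendAndSpell}(\mathbf{h}, \mathbf{y})
  = \prod_{i=1}^{S} P\left(y_i \mid \mathbf{x}, y_{<i}\right)
\end{aligned}
```

Each factor conditions on all previously emitted characters y_{<i}, so no independence assumption between outputs is needed.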

Introduction:

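Problems with existing end-to-end ASR systems (CTC and sequence-to-sequence):

1. CTC assumes that the output characters are conditionally independent of one another given the input.

2. Sequence-to-sequence methods had only been applied to phoneme sequences, so the ASR system as a whole was still not trained end to end.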
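Point 1 can be made concrete with the standard CTC objective. CTC scores a label sequence y by summing over all frame-level alignments π of length T, with blank symbols allowed, that collapse to y under the mapping 𝓑. The mapping merges repeated symbols and removes blanks. Each alignment's probability is a product of per-frame terms, and these terms do not condition on earlier outputs:

```latex
P_{\text{CTC}}(\mathbf{y} \mid \mathbf{x})
  = \sum_{\pi \in \mathcal{B}^{-1}(\mathbf{y})} \prod_{t=1}^{T} P\left(\pi_t \mid \mathbf{x}\right)
```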

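Main motivations behind the authors' design: a pyramidal RNN (pRNN) encoder combined with a character-level speller.

1. The pRNN reduces the number of time steps in the encoder output. This cuts redundancy between neighbouring frames and helps the attention model focus on the most relevant information.

2. Out-of-vocabulary (OOV) and rare words are handled automatically, because the speller emits one character at a time.

3. Spelling variants, such as "triple a" and "aaa", are handled naturally.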
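For point 1, the LAS paper defines each pyramidal BLSTM (pBLSTM) layer j to read the concatenation of two consecutive outputs of the layer below. Every such layer therefore halves the sequence length. The paper stacks three pBLSTM layers on top of a bottom BLSTM, which shortens a sequence of T frames by a factor of 2^3 = 8, assuming T is divisible by 8. For example, 800 input frames become 100 encoder steps.

```latex
h_i^{j} = \operatorname{pBLSTM}\left(h_{i-1}^{j},\ \left[h_{2i}^{j-1},\ h_{2i+1}^{j-1}\right]\right),
\qquad
T_{\text{out}} = \frac{T}{2^{3}} = \frac{T}{8}
```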

Model:

Schematic diagrams:

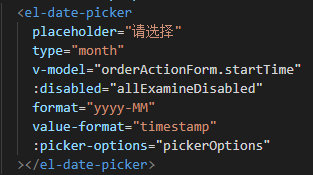

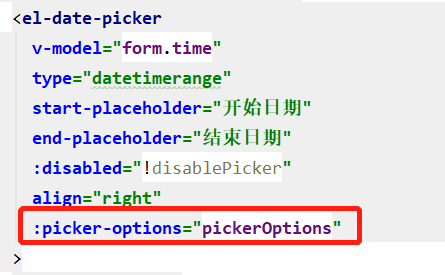

Model code snippets

1. Encoder code snippet.

```python
import torch.nn as nn


class Encoder(nn.Module):
    r"""Applies a multi-layer LSTM to a variable-length input sequence."""

    def __init__(self, input_size=320, hidden_size=256, num_layers=3,
                 dropout=0.0, bidirectional=True, rnn_type='lstm'):
        super(Encoder, self).__init__()
        self.input_size = input_size
        self.hidden_size = hidden_size
        self.num_layers = num_layers
        self.bidirectional = bidirectional
        self.rnn_type = rnn_type  # stored but unused: an LSTM is always built
        self.dropout = dropout
        # A plain stacked (Bi)LSTM. Unlike a pyramidal RNN, it keeps the
        # full time resolution of the input. With bidirectional=True each
        # output frame has 2 * hidden_size = 512 features.
        self.lstm = nn.LSTM(input_size=input_size, hidden_size=hidden_size,
                            num_layers=num_layers, batch_first=True,
                            dropout=dropout, bidirectional=bidirectional)
```
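The snippet above does not reduce the time axis, even though the listener is described as a pyramidal RNN. The code below is a minimal sketch of a pyramidal encoder in the spirit of the LAS paper: a bottom BLSTM followed by three pBLSTM layers, each of which concatenates pairs of consecutive frames. The class names `PyramidalBLSTMLayer` and `PyramidalEncoder` are hypothetical and do not come from the original repository. Dropping the last frame when the length is odd is one possible choice; padding is another.

```python
import torch
import torch.nn as nn


class PyramidalBLSTMLayer(nn.Module):
    """Hypothetical pBLSTM layer: merge each pair of consecutive frames
    (halving the time axis), then run a bidirectional LSTM."""

    def __init__(self, input_size, hidden_size):
        super().__init__()
        self.lstm = nn.LSTM(input_size * 2, hidden_size,
                            batch_first=True, bidirectional=True)

    def forward(self, x):
        n, t, d = x.shape                  # x: (N, T, D)
        t = t - (t % 2)                    # drop a trailing frame if T is odd
        x = x[:, :t, :].reshape(n, t // 2, d * 2)  # (N, T/2, 2D)
        out, _ = self.lstm(x)              # (N, T/2, 2H)
        return out


class PyramidalEncoder(nn.Module):
    """Hypothetical listener: bottom BLSTM + `num_pyramid_layers` pBLSTMs
    (3 in the LAS paper, i.e. time reduced 2**3 = 8 times)."""

    def __init__(self, input_size=320, hidden_size=256, num_pyramid_layers=3):
        super().__init__()
        self.bottom = nn.LSTM(input_size, hidden_size,
                              batch_first=True, bidirectional=True)
        self.pyramid = nn.ModuleList(
            [PyramidalBLSTMLayer(2 * hidden_size, hidden_size)
             for _ in range(num_pyramid_layers)])

    def forward(self, x):
        h, _ = self.bottom(x)              # (N, T, 2H)
        for layer in self.pyramid:
            h = layer(h)                   # time axis halves at each layer
        return h


encoder = PyramidalEncoder()
features = torch.randn(2, 800, 320)        # (batch, frames, feature dim)
encoded = encoder(features)
print(encoded.shape)                       # torch.Size([2, 100, 512])
```

The output size of 2 × 256 = 512 matches the decoder's default `hidden_size=512`, which the dot-product attention below requires. When batching utterances of different lengths, the per-utterance lengths would also need to be halved at each layer. The sketch does not track this.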

2. Decoder code snippet.

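```python
import torch.nn as nn


class Decoder(nn.Module):
    """Attention-based LSTM decoder (the speller)."""

    # vocab_size, sos_id and eos_id previously defaulted to undefined globals,
    # so they are now required arguments placed first.
    def __init__(self, vocab_size, sos_id, eos_id, embedding_dim=512,
                 hidden_size=512, num_layers=1, bidirectional_encoder=True):
        super(Decoder, self).__init__()
        # Hyperparameters: embedding + output
        self.vocab_size = vocab_size
        self.embedding_dim = embedding_dim
        self.sos_id = sos_id  # start-of-sentence token id
        self.eos_id = eos_id  # end-of-sentence token id
        # RNN
        self.hidden_size = hidden_size
        self.num_layers = num_layers
        self.bidirectional_encoder = bidirectional_encoder  # currently unused
        # Dot-product attention needs encoder and decoder vectors of the same
        # size, so the encoder output size must equal hidden_size.
        self.encoder_hidden_size = hidden_size
        # Components
        self.embedding = nn.Embedding(self.vocab_size, self.embedding_dim)  # token id -> d-dim vector
        # First cell input: token embedding concatenated with an encoder-sized
        # vector (presumably the previous attention context).
        self.rnn = nn.ModuleList()
        self.rnn += [nn.LSTMCell(self.embedding_dim + self.encoder_hidden_size,
                                 self.hidden_size)]
        for l in range(1, self.num_layers):
            self.rnn += [nn.LSTMCell(self.hidden_size, self.hidden_size)]
        self.attention = DotProductAttention()  # dot-product attention (snippet 3)
        # Output MLP: [decoder state; attention context] -> vocabulary logits
        self.mlp = nn.Sequential(
            nn.Linear(self.encoder_hidden_size + self.hidden_size, self.hidden_size),
            nn.Tanh(),
            nn.Linear(self.hidden_size, self.vocab_size))
```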
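The snippet shows only `__init__`. The code below sketches how these components might be wired together for one decoding step, following the LAS paper's speller equations. It is an assumption, not the repository's `forward()`. The step proceeds as follows:

- The previous character's embedding is concatenated with the previous attention context and fed to the LSTM cell.
- The new state is used as the attention query over the listener outputs.
- The state and the context go through the MLP to produce character logits.

The toy sizes, `sos_id = 1`, the function name `decoder_step` and the [state; context] concatenation order are all hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
vocab_size, emb_dim, hidden = 30, 512, 512   # toy sizes (hypothetical)

embedding = nn.Embedding(vocab_size, emb_dim)
cell = nn.LSTMCell(emb_dim + hidden, hidden)  # single decoder layer
mlp = nn.Sequential(nn.Linear(2 * hidden, hidden), nn.Tanh(),
                    nn.Linear(hidden, vocab_size))


def decoder_step(prev_token, prev_context, state, encoder_outputs):
    """One speller step (sketch).

    prev_token:      (N,) previous character ids
    prev_context:    (N, H) attention context from the previous step
    state:           (h, c) of the LSTMCell, each (N, H)
    encoder_outputs: (N, Ti, H) listener outputs
    """
    x = torch.cat([embedding(prev_token), prev_context], dim=1)  # (N, E + H)
    h, c = cell(x, state)                                          # each (N, H)
    # Dot-product attention: score every encoder step against the new state.
    scores = torch.bmm(encoder_outputs, h.unsqueeze(2)).squeeze(2)  # (N, Ti)
    weights = F.softmax(scores, dim=1)
    context = torch.bmm(weights.unsqueeze(1), encoder_outputs).squeeze(1)  # (N, H)
    logits = mlp(torch.cat([h, context], dim=1))                   # (N, V)
    return logits, context, (h, c), weights


N, Ti = 2, 100
encoder_outputs = torch.randn(N, Ti, hidden)
sos_id = 1                                    # hypothetical start-of-sentence id
token = torch.full((N,), sos_id, dtype=torch.long)
context = torch.zeros(N, hidden)
state = (torch.zeros(N, hidden), torch.zeros(N, hidden))
logits, context, state, weights = decoder_step(token, context, state, encoder_outputs)
next_token = logits.argmax(dim=1)             # greedy choice of the next character
```

During training, the previous token is usually the ground-truth character (teacher forcing). At inference, it is the model's own previous prediction, as in the greedy `argmax` above. The step is repeated until `eos_id` is produced.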

3. Attention code snippet.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class DotProductAttention(nn.Module):
    r"""Dot-product attention.

    Given a set of vector values and a vector query, attention is a technique
    to compute a weighted sum of the values, dependent on the query.
    NOTE: Here we use the terminology of Stanford CS224n (2018), lecture 11.
    """

    def __init__(self):
        super(DotProductAttention, self).__init__()
        # TODO: move this out of this class?
        # self.linear_out = nn.Linear(dim*2, dim)

    def forward(self, queries, values):
        """
        Args:
            queries: N x To x H
            values : N x Ti x H
        Returns:
            output: N x To x H
            attention_distribution: N x To x Ti
        """
        batch_size = queries.size(0)
        hidden_size = queries.size(2)
        input_lengths = values.size(1)
        # (N, To, H) * (N, H, Ti) -> (N, To, Ti)
        attention_scores = torch.bmm(queries, values.transpose(1, 2))
        # Softmax over the Ti encoder steps for each query (padding is not masked).
        attention_distribution = F.softmax(
            attention_scores.view(-1, input_lengths), dim=1).view(batch_size, -1, input_lengths)
        # (N, To, Ti) * (N, Ti, H) -> (N, To, H)
        attention_output = torch.bmm(attention_distribution, values)
        # # concat -> (N, To, 2*H)
        # concated = torch.cat((attention_output, queries), dim=2)
        # # TODO: Move this out of this class?
        # # output -> (N, To, H)
        # output = torch.tanh(self.linear_out(
        #     concated.view(-1, 2*hidden_size))).view(batch_size, -1, hidden_size)
        return attention_output, attention_distribution
```
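The attention above applies softmax over all Ti encoder steps. If utterances of different lengths are zero-padded to a common length in a batch, the padded frames also receive attention weight. A common remedy, sketched here as an assumption rather than the repository's code, is to set the scores at padded positions to -inf before the softmax, which gives them exactly zero weight. The function name `masked_dot_product_attention` and its `value_lengths` argument are hypothetical.

```python
import torch
import torch.nn.functional as F


def masked_dot_product_attention(queries, values, value_lengths):
    """Dot-product attention that ignores padded encoder frames.

    queries: (N, To, H), values: (N, Ti, H), value_lengths: (N,) true lengths.
    Returns (N, To, H) context vectors and (N, To, Ti) attention weights.
    """
    scores = torch.bmm(queries, values.transpose(1, 2))          # (N, To, Ti)
    steps = torch.arange(values.size(1), device=values.device)   # (Ti,)
    pad = steps[None, :] >= value_lengths[:, None]               # (N, Ti), True = padding
    scores = scores.masked_fill(pad[:, None, :], float('-inf'))  # broadcast over To
    weights = F.softmax(scores, dim=-1)
    return torch.bmm(weights, values), weights


torch.manual_seed(0)
queries = torch.randn(2, 3, 4)   # N=2 utterances, To=3 decoder steps, H=4
values = torch.randn(2, 5, 4)    # Ti=5 encoder steps
lengths = torch.tensor([5, 3])   # second utterance has 2 padded frames
context, weights = masked_dot_product_attention(queries, values, lengths)
print(weights[1, :, 3:])         # all zeros: padded frames get no attention
```

Every length must be at least 1. Otherwise a row would contain only -inf scores, and the softmax would return NaN.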

GitCode link: https://gitcode.net/weixin_47276710/end-to-end-asr/-/tree/master