Contents

- ZooKeeper cluster deployment
- Create the zookeeper directory
- namespace.yaml
- scripts-configmap.yaml
- serviceaccount.yaml
- statefulset.yaml
- svc-headless.yaml
- svc.yaml
- metrics-svc.yaml
- Deploy
- Integrate with Prometheus
- Check the scrape targets in Prometheus
- Import the ZooKeeper dashboard
- Dashboard view

ZooKeeper cluster deployment

All manifests below are ready to copy, paste, and apply.

Create the zookeeper directory

# Create the directory

mkdir -p /opt/zookeeper

cd /opt/zookeeper

namespace.yaml

apiVersion: v1

kind: Namespace

metadata:
  labels:
    kubernetes.io/metadata.name: zookeeper
  name: zookeeper

scripts-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:
  name: zookeeper-scripts
  namespace: zookeeper
  labels:
    app.kubernetes.io/name: zookeeper
    app.kubernetes.io/version: 3.9.2
    app.kubernetes.io/component: zookeeper

data:
  init-certs.sh: |-
    #!/bin/bash
  setup.sh: |-
    #!/bin/bash

    # Execute entrypoint as usual after obtaining ZOO_SERVER_ID
    # check ZOO_SERVER_ID in persistent volume via myid
    # if not present, set based on POD hostname
    if [[ -f "/bitnami/zookeeper/data/myid" ]]; then
      export ZOO_SERVER_ID="$(cat /bitnami/zookeeper/data/myid)"
    else
      HOSTNAME="$(hostname -s)"
      if [[ $HOSTNAME =~ (.*)-([0-9]+)$ ]]; then
        ORD=${BASH_REMATCH[2]}
        export ZOO_SERVER_ID="$((ORD + 1 ))"
      else
        echo "Failed to get index from hostname $HOSTNAME"
        exit 1
      fi
    fi
    exec /entrypoint.sh /run.sh
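The setup.sh above takes ZOO_SERVER_ID from a myid file on the persistent volume if one exists, and otherwise derives it from the StatefulSet ordinal in the pod hostname (zookeeper-0 becomes 1, and so on). The minimal sketch below wraps that same logic in a function so it can be tried outside the pod; `zk_server_id` and its optional myid-path argument are hypothetical, introduced only for illustration.

```bash
#!/usr/bin/env bash
# Minimal sketch of the ID logic in setup.sh, wrapped as a function so it can be
# run outside the pod. zk_server_id is a hypothetical name, not part of the image.

# Usage: zk_server_id <hostname> [myid_file]
zk_server_id() {
  local host="$1"
  local myid_file="${2:-/bitnami/zookeeper/data/myid}"
  local ord

  # An existing myid on the persistent volume wins, so a rescheduled pod keeps its ID.
  if [[ -f "$myid_file" ]]; then
    cat "$myid_file"
    return 0
  fi

  # Otherwise map the StatefulSet ordinal to an ID: zookeeper-0 -> 1, zookeeper-1 -> 2, ...
  if [[ $host =~ (.*)-([0-9]+)$ ]]; then
    ord=${BASH_REMATCH[2]}
    echo "$(( ord + 1 ))"
  else
    echo "Failed to get index from hostname $host" >&2
    return 1
  fi
}
```

Because the ID is persisted in myid, a pod that is rescheduled onto another node keeps the same server ID as long as its PVC follows it.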

serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:
  name: zookeeper
  namespace: zookeeper
  labels:
    app.kubernetes.io/name: zookeeper
    app.kubernetes.io/version: 3.9.2
    app.kubernetes.io/component: zookeeper
    role: zookeeper

automountServiceAccountToken: false

statefulset.yaml

---

# Source: zookeeper/templates/statefulset.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:
  name: zookeeper
  namespace: zookeeper
  labels:
    app.kubernetes.io/name: zookeeper
    app.kubernetes.io/version: 3.9.2
    app.kubernetes.io/component: zookeeper
    role: zookeeper

spec:
  replicas: 3
  revisionHistoryLimit: 10
  podManagementPolicy: Parallel
  selector:
    matchLabels:
      app.kubernetes.io/name: zookeeper
      app.kubernetes.io/component: zookeeper
  serviceName: zookeeper-headless
  updateStrategy:
    rollingUpdate: {}
    type: RollingUpdate
  template:
    metadata:
      annotations:
      labels:
        app.kubernetes.io/name: zookeeper
        app.kubernetes.io/version: 3.9.2
        app.kubernetes.io/component: zookeeper
    spec:
      enableServiceLinks: true
      serviceAccountName: zookeeper
      # Do not automatically mount the service account's API credentials
      automountServiceAccountToken: false
      affinity:
        podAffinity:
        # Pod anti-affinity: spread the pods across different nodes for high availability.
        # It is "preferred", not "required", so pods can still share a node if fewer than 3 nodes are available.
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - podAffinityTerm:
                labelSelector:
                  matchLabels:
                    app.kubernetes.io/name: zookeeper
                    app.kubernetes.io/component: zookeeper
                topologyKey: kubernetes.io/hostname
              weight: 1
        nodeAffinity:
      # Pod-level security context
      securityContext:
        fsGroup: 1001
        fsGroupChangePolicy: Always
        supplementalGroups: []
        sysctls: []
      initContainers:
      containers:
        - name: zookeeper
          image: docker.io/bitnami/zookeeper:3.9.2-debian-12-r2
          imagePullPolicy: "IfNotPresent"
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              drop:
                - ALL
            privileged: false
            readOnlyRootFilesystem: true
            runAsGroup: 1001
            runAsNonRoot: true
            runAsUser: 1001
            seLinuxOptions: {}
            seccompProfile:
              type: RuntimeDefault
          command:
            - /scripts/setup.sh
          resources:
            limits:
              cpu: 375m
              ephemeral-storage: 1024Mi
              memory: 384Mi
            requests:
              cpu: 250m
              ephemeral-storage: 50Mi
              memory: 256Mi
          env:
            - name: BITNAMI_DEBUG
              value: "false"
            - name: ZOO_DATA_LOG_DIR
              value: ""
            - name: ZOO_PORT_NUMBER
              value: "2181"
            - name: ZOO_TICK_TIME
              value: "2000"
            - name: ZOO_INIT_LIMIT
              value: "10"
            - name: ZOO_SYNC_LIMIT
              value: "5"
            - name: ZOO_PRE_ALLOC_SIZE
              value: "65536"
            - name: ZOO_SNAPCOUNT
              value: "100000"
            - name: ZOO_MAX_CLIENT_CNXNS
              value: "60"
            - name: ZOO_4LW_COMMANDS_WHITELIST
              value: "srvr, mntr, ruok"
            - name: ZOO_LISTEN_ALLIPS_ENABLED
              value: "no"
            - name: ZOO_AUTOPURGE_INTERVAL
              value: "1"
            - name: ZOO_AUTOPURGE_RETAIN_COUNT
              value: "10"
            - name: ZOO_MAX_SESSION_TIMEOUT
              value: "40000"
            - name: ZOO_SERVERS
              value: "zookeeper-0.zookeeper-headless.zookeeper.svc.cluster.local:2888:3888::1 zookeeper-1.zookeeper-headless.zookeeper.svc.cluster.local:2888:3888::2 zookeeper-2.zookeeper-headless.zookeeper.svc.cluster.local:2888:3888::3"
            - name: ZOO_ENABLE_AUTH
              value: "no"
            - name: ZOO_ENABLE_QUORUM_AUTH
              value: "no"
            # Heap size in MB. Note: 1024 MB is larger than the 384Mi memory limit above;
            # raise limits.memory or lower this value, otherwise the container can be OOM-killed as the heap grows.
            - name: ZOO_HEAP_SIZE
              value: "1024"
            - name: ZOO_LOG_LEVEL
              value: "ERROR"
            - name: ALLOW_ANONYMOUS_LOGIN
              value: "yes"
            - name: ZOO_ENABLE_PROMETHEUS_METRICS
              value: "yes"
            - name: ZOO_PROMETHEUS_METRICS_PORT_NUMBER
              value: "9141"
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: metadata.name
            - name: ZOO_ADMIN_SERVER_PORT_NUMBER
              value: "8080"
          ports:
            - name: client
              containerPort: 2181
            - name: follower
              containerPort: 2888
            - name: election
              containerPort: 3888
            # Metrics port, scraped by Prometheus
            - name: metrics
              containerPort: 9141
            - name: http-admin
              containerPort: 8080
          livenessProbe:
            failureThreshold: 6
            initialDelaySeconds: 30
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
            exec:
              command:
                - /bin/bash
                - -ec
                - ZOO_HC_TIMEOUT=2 /opt/bitnami/scripts/zookeeper/healthcheck.sh
          readinessProbe:
            failureThreshold: 6
            initialDelaySeconds: 5
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
            exec:
              command:
                - /bin/bash
                - -ec
                - ZOO_HC_TIMEOUT=2 /opt/bitnami/scripts/zookeeper/healthcheck.sh
          volumeMounts:
            - name: empty-dir
              mountPath: /tmp
              subPath: tmp-dir
            - name: empty-dir
              mountPath: /opt/bitnami/zookeeper/conf
              subPath: app-conf-dir
            - name: empty-dir
              mountPath: /opt/bitnami/zookeeper/logs
              subPath: app-logs-dir
            - name: scripts
              mountPath: /scripts/setup.sh
              subPath: setup.sh
            - name: data
              mountPath: /bitnami/zookeeper
      volumes:
        - name: empty-dir
          emptyDir: {}
        - name: scripts
          configMap:
            name: zookeeper-scripts
            # 493 decimal = 0755 octal, so setup.sh is executable
            defaultMode: 493
  volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        accessModes:
          - "ReadWriteOnce"
        resources:
          requests:
            storage: "10Gi"
        # Set this to a StorageClass that exists in your cluster
        storageClassName: nfs-client
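ZOO_SERVERS has to list every ensemble member, so it must be edited by hand whenever `replicas` changes. The minimal sketch below regenerates the value, assuming the naming used in this post (StatefulSet `zookeeper`, headless Service `zookeeper-headless`, namespace `zookeeper`) and the default cluster domain `cluster.local`; `zk_servers` is a hypothetical helper, and the `::<id>` suffix follows the same ordinal + 1 rule as setup.sh.

```bash
#!/usr/bin/env bash
# Hypothetical helper: rebuild the ZOO_SERVERS value from the replica count,
# following the pattern used in statefulset.yaml:
#   <pod>.<headless-service>.<namespace>.svc.<cluster-domain>:2888:3888::<id>
# Defaults match the manifests in this post; cluster.local is an assumption.
zk_servers() {
  local replicas="$1"
  local sts="${2:-zookeeper}"
  local headless="${3:-zookeeper-headless}"
  local ns="${4:-zookeeper}"
  local domain="${5:-cluster.local}"
  local entries=() i

  for (( i = 0; i < replicas; i++ )); do
    # Server ID is ordinal + 1, the same mapping setup.sh uses.
    entries+=("${sts}-${i}.${headless}.${ns}.svc.${domain}:2888:3888::$(( i + 1 ))")
  done

  # Join the entries with single spaces.
  local IFS=' '
  echo "${entries[*]}"
}
```

For example, `zk_servers 5` prints the five-member list to paste into the manifest. Keep the replica count odd: an ensemble needs a majority of members up, so 3 members tolerate one failure and 5 tolerate two.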

svc-headless.yaml

apiVersion: v1

kind: Service

metadata:
  name: zookeeper-headless
  namespace: zookeeper
  labels:
    app.kubernetes.io/name: zookeeper
    app.kubernetes.io/version: 3.9.2
    app.kubernetes.io/component: zookeeper

spec:
  type: ClusterIP
  clusterIP: None
  publishNotReadyAddresses: true
  ports:
    - name: tcp-client
      port: 2181
      targetPort: client
    - name: tcp-follower
      port: 2888
      targetPort: follower
    - name: tcp-election
      port: 3888
      targetPort: election
  selector:
    app.kubernetes.io/name: zookeeper
    app.kubernetes.io/component: zookeeper

svc.yaml

apiVersion: v1

kind: Service

metadata:
  name: zookeeper
  namespace: zookeeper
  labels:
    app.kubernetes.io/name: zookeeper
    app.kubernetes.io/version: 3.9.2
    app.kubernetes.io/component: zookeeper

spec:
  type: ClusterIP
  sessionAffinity: None
  ports:
    - name: tcp-client
      port: 2181
      targetPort: client
      nodePort: null
    - name: tcp-follower
      port: 2888
      targetPort: follower
    - name: tcp-election
      port: 3888
      targetPort: election
  selector:
    app.kubernetes.io/name: zookeeper
    app.kubernetes.io/component: zookeeper

metrics-svc.yaml

apiVersion: v1

kind: Service

metadata:
  name: zookeeper-metrics
  namespace: zookeeper
  labels:
    app.kubernetes.io/name: zookeeper
    app.kubernetes.io/version: 3.9.2
    app.kubernetes.io/component: metrics
  annotations:
    prometheus.io/path: /metrics
    prometheus.io/port: "9141"
    # Enable Prometheus scraping
    prometheus.io/scrape: "true"

spec:
  type: ClusterIP
  ports:
    - name: tcp-metrics
      port: 9141
      targetPort: metrics
  selector:
    app.kubernetes.io/name: zookeeper
    app.kubernetes.io/component: zookeeper
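Before wiring up Prometheus, it can help to confirm that the endpoint behind zookeeper-metrics actually serves data. The minimal sketch below defines `metric_value`, a hypothetical helper that reads Prometheus text-format output on stdin and prints the value of the first sample with the given name. It assumes label values contain no `}` and it ignores optional timestamps.

```bash
#!/usr/bin/env bash
# Minimal sketch: read Prometheus text-format metrics on stdin and print the
# value of the first sample whose name equals $1 (labels allowed).
# metric_value is a hypothetical helper name. Exit status is 1 if not found.
metric_value() {
  local name="$1"
  awk -v n="$name" '
    /^#/ { next }                      # skip HELP and TYPE comment lines
    {
      line = $0
      sub(/\{[^}]*\}/, "", line)       # drop the label set (assumes no } inside label values)
      split(line, f, " ")
      if (f[1] == n) { print f[2]; found = 1; exit }
    }
    END { exit !found }
  '
}
```

For example, run `kubectl -n zookeeper port-forward svc/zookeeper-metrics 9141:9141` in one terminal, then `curl -s http://127.0.0.1:9141/metrics | metric_value znode_count` in another. The name `znode_count` is only an assumed example; check the raw `curl` output for the metric names your ZooKeeper version actually exports.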

Deploy

# Apply every manifest in the current directory

kubectl apply -f .

# Check the pods, services, and PVCs

kubectl get pod,svc,pvc -n zookeeper

NAME              READY   STATUS    RESTARTS   AGE
pod/zookeeper-0   1/1     Running   0          16h
pod/zookeeper-1   1/1     Running   0          16h
pod/zookeeper-2   1/1     Running   0          16h

NAME                         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)                      AGE
service/zookeeper            ClusterIP   10.0.6.237   <none>        2181/TCP,2888/TCP,3888/TCP   19h
service/zookeeper-headless   ClusterIP   None         <none>        2181/TCP,2888/TCP,3888/TCP   19h
service/zookeeper-metrics    ClusterIP   10.0.8.208   <none>        9141/TCP                     19h

NAME                                     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/data-zookeeper-0   Bound    pvc-a6ac5f14-bd56-4d20-8e8b-64b8efe4ab52   10Gi       RWO            nfs-client     19h
persistentvolumeclaim/data-zookeeper-1   Bound    pvc-7beccb60-202f-4be4-90e0-2178385055fb   10Gi       RWO            nfs-client     19h
persistentvolumeclaim/data-zookeeper-2   Bound    pvc-7c5ed75d-0467-4007-b5f1-7a7701b91b92   10Gi       RWO            nfs-client     19h
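`Running` only means each pod passed its probes. To check that the three members actually formed a single ensemble, each one can be queried with the `srvr` command (whitelisted through ZOO_4LW_COMMANDS_WHITELIST) and the reported roles compared: exactly one leader, the rest followers. The sketch below covers the parsing and the check; `zk_mode` and `zk_check_modes` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check ensemble roles from the output of ZooKeeper's `srvr`
# four-letter-word command. zk_mode and zk_check_modes are hypothetical names.

# Print the role reported in srvr output on stdin, e.g. "leader" or "follower".
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# Succeed only if exactly one argument is "leader" and every other one is "follower".
zk_check_modes() {
  local leaders=0 m
  for m in "$@"; do
    case "$m" in
      leader)   leaders=$(( leaders + 1 )) ;;
      follower) ;;
      *) echo "unexpected mode: ${m:-<empty>}" >&2; return 1 ;;
    esac
  done
  if (( leaders != 1 )); then
    echo "expected 1 leader, found $leaders" >&2
    return 1
  fi
}
```

Against the cluster, assuming the image's bash supports `/dev/tcp` redirection: `modes=(); for i in 0 1 2; do modes+=("$(kubectl -n zookeeper exec zookeeper-$i -- bash -c 'exec 3<>/dev/tcp/127.0.0.1/2181; echo srvr >&3; cat <&3' | zk_mode)"); done; zk_check_modes "${modes[@]}" && echo "ensemble OK"`. A member that reports `standalone`, or no mode at all because it is not serving requests, is rejected by the check.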

Integrate with Prometheus

For deploying Prometheus itself, see my earlier post: k8s-prometheus+grafana+alertmanager monitoring with email alerts.

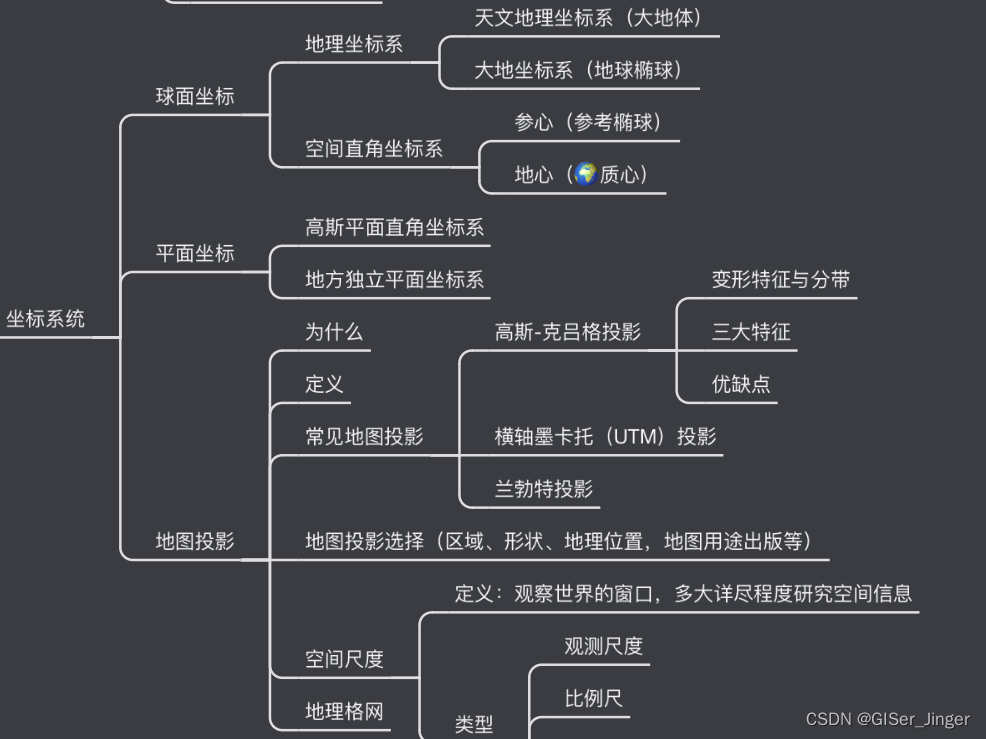

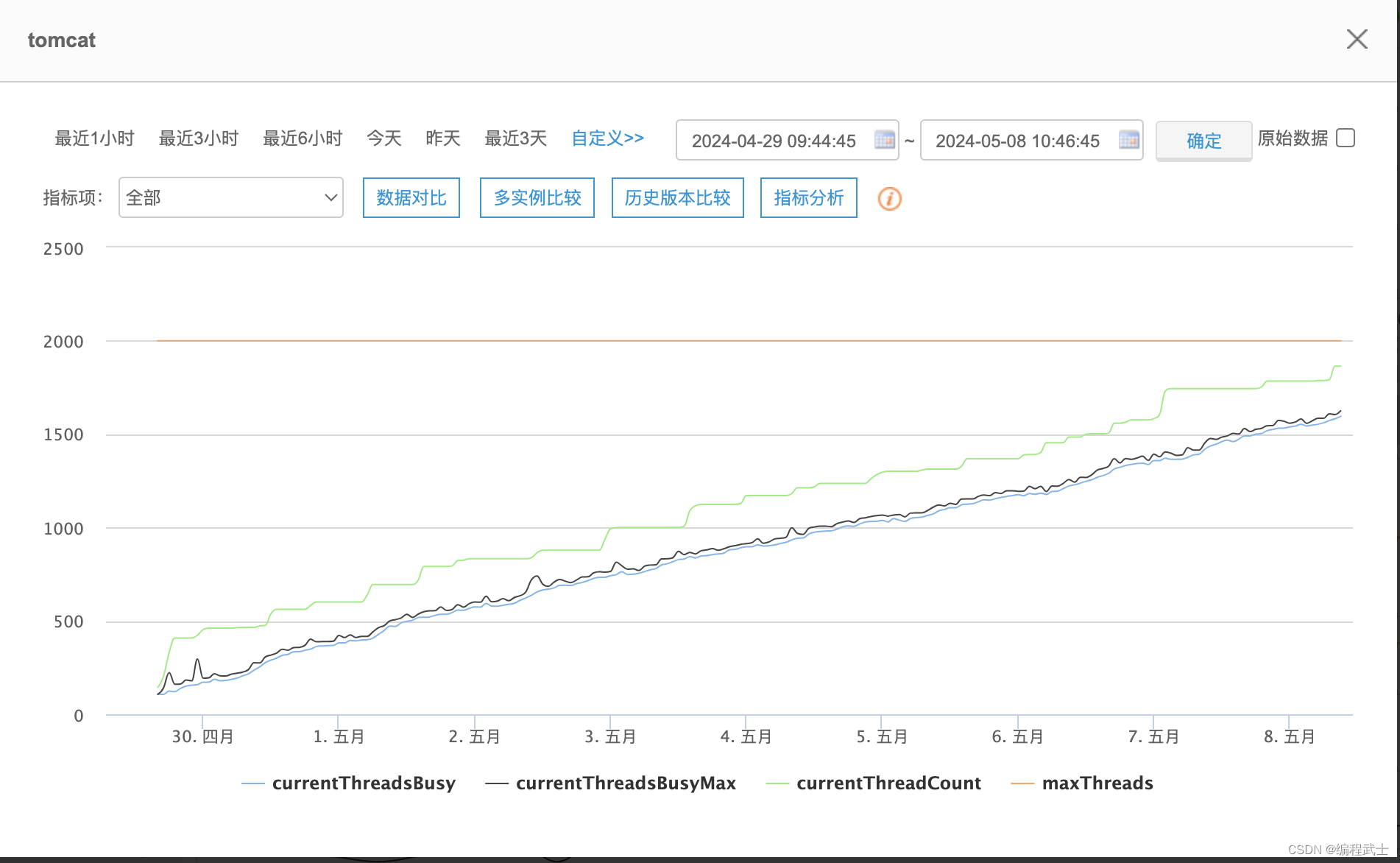

Check the scrape targets in Prometheus
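Besides the Targets page in the Prometheus UI, the same check can be scripted against the Prometheus HTTP API by querying the `up` series for the ZooKeeper targets. In the minimal sketch below, `prom_up_summary` is a hypothetical helper that counts how many returned series have the value 1. It uses only grep, so it assumes the compact single-line JSON that the `/api/v1/query` endpoint returns.

```bash
#!/usr/bin/env bash
# Sketch: summarize an instant-query response for `up` read from stdin.
# Prints "<series with value 1>/<total series>".
# prom_up_summary is a hypothetical helper; it relies on compact JSON.
prom_up_summary() {
  local values up total
  # Each vector sample looks like "value":[<timestamp>,"<value>"].
  values=$(grep -o '"value":\[[^]]*]')
  total=$(printf '%s\n' "$values" | grep -c '"value"')
  up=$(printf '%s\n' "$values" | grep -c ',"1"]$')
  echo "$up/$total"
}
```

Usage: `curl -sG http://<prometheus>:9090/api/v1/query --data-urlencode 'query=up{kubernetes_namespace="zookeeper"}' | prom_up_summary`. The address is a placeholder, and the `kubernetes_namespace` label assumes a scrape job whose relabel rules copy the namespace into that label; adjust both to your Prometheus configuration. With an endpoints-based scrape job, all three pods being scraped should print `3/3`.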

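Import the ZooKeeper dashboard

Dashboard ID: 10465

Dashboard view

Note: right after deployment every panel reads 0; the data shown appears only once applications are actually using ZooKeeper.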