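1. Introduction

Gold-YOLO is an efficient object detection model. It strengthens multi-scale feature fusion through a new Gather-and-Distribute (GD) mechanism, which raises detection accuracy while keeping real-time performance. The main features and contributions of Gold-YOLO are summarized below.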

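Key features

- Gather-and-Distribute (GD) mechanism: This is the core contribution of Gold-YOLO. It combines convolution and self-attention operations to fuse multi-scale features effectively, which improves detection across object sizes.

- Balance of speed and accuracy: Gold-YOLO reaches high detection accuracy while remaining real-time, and it keeps this balance across model scales.

- Pretraining: According to the authors, Gold-YOLO is the first model in the YOLO series to adopt MAE-style pretraining, which lets the model benefit from unsupervised pretraining and further improves performance.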

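Innovations

- GD mechanism design: The GD mechanism consists of three main modules: the Feature Alignment Module (FAM), the Information Fusion Module (IFM), and the Information Injection Module (Inject). FAM gathers and aligns features from different levels, and IFM fuses the aligned features into global information. The Inject module then distributes this global information to each level through a simple attention operation, which strengthens the detection capability of every branch.

  - Low-stage Gather-and-Distribute branch (Low-GD): targets small and medium objects. It fuses the multi-level backbone features, including the shallow high-resolution ones, so that high-resolution information is preserved.

  - High-stage Gather-and-Distribute branch (High-GD): targets large objects. It fuses the features already produced by the Low-GD stage with transformer-style blocks to obtain a larger receptive field.

  - Lightweight Adjacent Layer Fusion module (LAF): fuses features from adjacent layers to improve information exchange, which further improves model performance.

- Efficient information fusion: With the GD mechanism, Gold-YOLO addresses the information-fusion problem of the traditional FPN and PANet, where information is passed level by level, and achieves better multi-scale feature fusion. As a result it performs well on objects of different sizes.

- MAE-style pretraining: Gold-YOLO applies masked-autoencoder (MAE) style pretraining to the backbone. The backbone therefore already has a good feature representation before supervised training starts, which speeds up convergence and improves final performance.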
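The "gather" step can be made concrete with a small shape calculation. The sketch below is not the model itself; it only mirrors what the SimFusion_4in code later in this post does with four feature levels: it takes the third level as the reference resolution, average-pools the larger maps, upsamples the smaller one, and concatenates along channels. The 640-pixel input size and the helper name `low_gd_aligned_shapes` are assumptions for illustration; the strides and channel counts are those of the YOLOv7-tiny configuration used in Section 3.

```python
def low_gd_aligned_shapes(input_size, strides, channels, ref_index=2):
    """Describe how four feature levels are aligned before fusion.

    Hypothetical helper: it only does shape arithmetic, no tensors involved.
    """
    sizes = [input_size // s for s in strides]   # spatial size of each level
    aligned = sizes[ref_index]                   # reference level (third input)
    ops = []
    for size in sizes:
        if size > aligned:
            ops.append("avg_pool")               # larger maps are pooled down
        elif size < aligned:
            ops.append("upsample")               # smaller maps are interpolated up
        else:
            ops.append("identity")
    return sizes, aligned, ops, sum(channels)    # concat adds the channels


sizes, aligned, ops, total = low_gd_aligned_shapes(
    input_size=640,                  # assumed input resolution
    strides=[4, 8, 16, 32],          # levels fed to SimFusion_4in
    channels=[64, 128, 256, 256],    # YOLOv7-tiny layers 7, 14, 21, 37
)
print(sizes)    # [160, 80, 40, 20]
print(aligned)  # 40
print(ops)      # ['avg_pool', 'avg_pool', 'identity', 'upsample']
print(total)    # 704
```

The concatenated channel count of 704 is the input width that the IFM layer receives in the YOLOv7-tiny model summary shown in Section 3.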

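Performance evaluation

Gold-YOLO shows strong performance in several respects:

- Balance of speed and accuracy: The different Gold-YOLO variants (Gold-YOLO-N, Gold-YOLO-S, Gold-YOLO-M, and so on) deliver higher average precision (AP) at speeds that match or exceed those of competing models.

- Consistency across model scales: The small, medium, and large variants all maintain high performance, which supports the effectiveness and generality of the mechanism.

- Extension to other tasks: Beyond object detection, the GD mechanism has also been applied to instance segmentation and semantic segmentation, where it likewise brings clear improvements.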

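Experimental results

- Object detection: On the COCO dataset, the Gold-YOLO variants match or outperform comparable models such as YOLOv6-3.0, YOLOX, and PPYOLOE in both speed and accuracy.

- Instance segmentation: Applying the GD mechanism to Mask R-CNN also yields a clear improvement on the COCO instance segmentation dataset.

- Semantic segmentation: Replacing the neck of the PointRend model with the GD mechanism likewise improves semantic segmentation on the Cityscapes dataset.

In summary, through the GD mechanism and its other optimizations, Gold-YOLO clearly improves object detection accuracy while remaining efficient, and the results on other tasks show that the mechanism is broadly applicable.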

2. Preparation

First, create a new file goldyolo.py in the models folder of the YOLOv5/v7 project and paste in the following code.

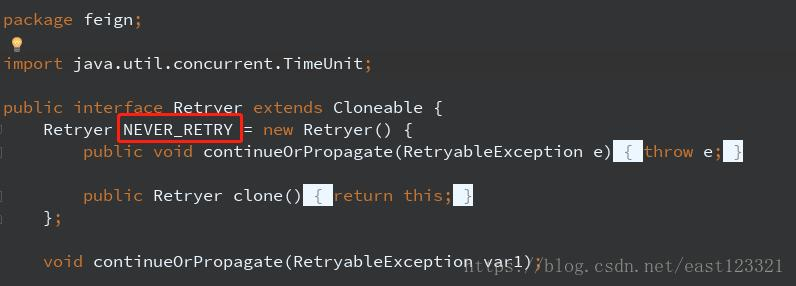

```python
from models.common import *  # provides Conv and the other YOLOv5/v7 building blocks

# Explicit imports, so this file does not rely on names leaking through the star import
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F


# Gold-YOLO paper: https://arxiv.org/pdf/2309.11331v4

def conv_bn(in_channels, out_channels, kernel_size, stride, padding, groups=1, bias=False):
    '''Basic cell for rep-style block, including conv and bn'''
    result = nn.Sequential()
    result.add_module('conv', nn.Conv2d(in_channels=in_channels, out_channels=out_channels,
                                        kernel_size=kernel_size, stride=stride, padding=padding, groups=groups,
                                        bias=bias))
    result.add_module('bn', nn.BatchNorm2d(num_features=out_channels))
    return result


class RepVGGBlock(nn.Module):
    '''RepVGGBlock is a basic rep-style block, including training and deploy status
    This code is based on https://github.com/DingXiaoH/RepVGG/blob/main/repvgg.py
    '''

    def __init__(self, in_channels, out_channels, kernel_size=3,
                 stride=1, padding=1, dilation=1, groups=1, padding_mode='zeros', deploy=False, use_se=False):
        super(RepVGGBlock, self).__init__()
        """ Initialization of the class.
        Args:
            in_channels (int): Number of channels in the input image
            out_channels (int): Number of channels produced by the convolution
            kernel_size (int or tuple): Size of the convolving kernel
            stride (int or tuple, optional): Stride of the convolution. Default: 1
            padding (int or tuple, optional): Zero-padding added to both sides of
                the input. Default: 1
            dilation (int or tuple, optional): Spacing between kernel elements. Default: 1
            groups (int, optional): Number of blocked connections from input
                channels to output channels. Default: 1
            padding_mode (string, optional): Default: 'zeros'
            deploy: Whether to be deploy status or training status. Default: False
            use_se: Whether to use se. Default: False
        """
        self.deploy = deploy
        self.groups = groups
        self.in_channels = in_channels
        self.out_channels = out_channels

        assert kernel_size == 3
        assert padding == 1

        padding_11 = padding - kernel_size // 2

        self.nonlinearity = nn.ReLU()

        if use_se:
            raise NotImplementedError("se block not supported yet")
        else:
            self.se = nn.Identity()

        if deploy:
            # Deploy mode: a single re-parameterized 3x3 convolution
            self.rbr_reparam = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                         stride=stride,
                                         padding=padding, dilation=dilation, groups=groups, bias=True,
                                         padding_mode=padding_mode)
        else:
            # Training mode: identity (BN only), 3x3 and 1x1 branches
            self.rbr_identity = nn.BatchNorm2d(
                num_features=in_channels) if out_channels == in_channels and stride == 1 else None
            self.rbr_dense = conv_bn(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size,
                                     stride=stride, padding=padding, groups=groups)
            self.rbr_1x1 = conv_bn(in_channels=in_channels, out_channels=out_channels, kernel_size=1, stride=stride,
                                   padding=padding_11, groups=groups)

    def forward(self, inputs):
        '''Forward process'''
        if hasattr(self, 'rbr_reparam'):
            return self.nonlinearity(self.se(self.rbr_reparam(inputs)))

        if self.rbr_identity is None:
            id_out = 0
        else:
            id_out = self.rbr_identity(inputs)

        return self.nonlinearity(self.se(self.rbr_dense(inputs) + self.rbr_1x1(inputs) + id_out))

    def get_equivalent_kernel_bias(self):
        kernel3x3, bias3x3 = self._fuse_bn_tensor(self.rbr_dense)
        kernel1x1, bias1x1 = self._fuse_bn_tensor(self.rbr_1x1)
        kernelid, biasid = self._fuse_bn_tensor(self.rbr_identity)
        return kernel3x3 + self._pad_1x1_to_3x3_tensor(kernel1x1) + kernelid, bias3x3 + bias1x1 + biasid

    def _pad_1x1_to_3x3_tensor(self, kernel1x1):
        if kernel1x1 is None:
            return 0
        else:
            return torch.nn.functional.pad(kernel1x1, [1, 1, 1, 1])

    def _fuse_bn_tensor(self, branch):
        # Fold the BatchNorm statistics of one branch into an equivalent kernel and bias
        if branch is None:
            return 0, 0
        if isinstance(branch, nn.Sequential):
            kernel = branch.conv.weight
            running_mean = branch.bn.running_mean
            running_var = branch.bn.running_var
            gamma = branch.bn.weight
            beta = branch.bn.bias
            eps = branch.bn.eps
        else:
            assert isinstance(branch, nn.BatchNorm2d)
            if not hasattr(self, 'id_tensor'):
                input_dim = self.in_channels // self.groups
                kernel_value = np.zeros((self.in_channels, input_dim, 3, 3), dtype=np.float32)
                for i in range(self.in_channels):
                    kernel_value[i, i % input_dim, 1, 1] = 1
                self.id_tensor = torch.from_numpy(kernel_value).to(branch.weight.device)
            kernel = self.id_tensor
            running_mean = branch.running_mean
            running_var = branch.running_var
            gamma = branch.weight
            beta = branch.bias
            eps = branch.eps
        std = (running_var + eps).sqrt()
        t = (gamma / std).reshape(-1, 1, 1, 1)
        return kernel * t, beta - running_mean * gamma / std

    def switch_to_deploy(self):
        if hasattr(self, 'rbr_reparam'):
            return
        kernel, bias = self.get_equivalent_kernel_bias()
        self.rbr_reparam = nn.Conv2d(in_channels=self.rbr_dense.conv.in_channels,
                                     out_channels=self.rbr_dense.conv.out_channels,
                                     kernel_size=self.rbr_dense.conv.kernel_size, stride=self.rbr_dense.conv.stride,
                                     padding=self.rbr_dense.conv.padding, dilation=self.rbr_dense.conv.dilation,
                                     groups=self.rbr_dense.conv.groups, bias=True)
        self.rbr_reparam.weight.data = kernel
        self.rbr_reparam.bias.data = bias
        for para in self.parameters():
            para.detach_()
        self.__delattr__('rbr_dense')
        self.__delattr__('rbr_1x1')
        if hasattr(self, 'rbr_identity'):
            self.__delattr__('rbr_identity')
        if hasattr(self, 'id_tensor'):
            self.__delattr__('id_tensor')
        self.deploy = True


def onnx_AdaptiveAvgPool2d(x, output_size):
    # ONNX-friendly replacement for adaptive average pooling
    stride_size = np.floor(np.array(x.shape[-2:]) / output_size).astype(np.int32)
    kernel_size = np.array(x.shape[-2:]) - (output_size - 1) * stride_size
    avg = nn.AvgPool2d(kernel_size=list(kernel_size), stride=list(stride_size))
    x = avg(x)
    return x


def get_avg_pool():
    if torch.onnx.is_in_onnx_export():
        avg_pool = onnx_AdaptiveAvgPool2d
    else:
        avg_pool = nn.functional.adaptive_avg_pool2d
    return avg_pool


class SimFusion_3in(nn.Module):
    def __init__(self, in_channel_list, out_channels):
        super().__init__()
        self.cv1 = Conv(in_channel_list[0], out_channels, act=nn.ReLU()) if in_channel_list[0] != out_channels else nn.Identity()
        self.cv2 = Conv(in_channel_list[1], out_channels, act=nn.ReLU()) if in_channel_list[1] != out_channels else nn.Identity()
        self.cv3 = Conv(in_channel_list[2], out_channels, act=nn.ReLU()) if in_channel_list[2] != out_channels else nn.Identity()
        self.cv_fuse = Conv(out_channels * 3, out_channels, act=nn.ReLU())
        self.downsample = nn.functional.adaptive_avg_pool2d

    def forward(self, x):
        # Align all three inputs to the resolution of the middle one
        N, C, H, W = x[1].shape
        output_size = (H, W)

        if torch.onnx.is_in_onnx_export():
            self.downsample = onnx_AdaptiveAvgPool2d
            output_size = np.array([H, W])

        x0 = self.cv1(self.downsample(x[0], output_size))
        x1 = self.cv2(x[1])
        x2 = self.cv3(F.interpolate(x[2], size=(H, W), mode='bilinear', align_corners=False))
        return self.cv_fuse(torch.cat((x0, x1, x2), dim=1))


class SimFusion_4in(nn.Module):
    def __init__(self):
        super().__init__()
        self.avg_pool = nn.functional.adaptive_avg_pool2d

    def forward(self, x):
        # Align all four inputs to the resolution of the third one (x_s)
        x_l, x_m, x_s, x_n = x
        B, C, H, W = x_s.shape
        output_size = np.array([H, W])

        if torch.onnx.is_in_onnx_export():
            self.avg_pool = onnx_AdaptiveAvgPool2d

        x_l = self.avg_pool(x_l, output_size)
        x_m = self.avg_pool(x_m, output_size)
        x_n = F.interpolate(x_n, size=(H, W), mode='bilinear', align_corners=False)

        out = torch.cat([x_l, x_m, x_s, x_n], 1)
        return out


class IFM(nn.Module):
    def __init__(self, inc, ouc, embed_dim_p=96, fuse_block_num=3) -> None:
        super().__init__()

        self.conv = nn.Sequential(
            Conv(inc, embed_dim_p),
            *[RepVGGBlock(embed_dim_p, embed_dim_p) for _ in range(fuse_block_num)],
            Conv(embed_dim_p, sum(ouc))
        )

    def forward(self, x):
        return self.conv(x)


class h_sigmoid(nn.Module):
    def __init__(self, inplace=True):
        super(h_sigmoid, self).__init__()
        self.relu = nn.ReLU6(inplace=inplace)

    def forward(self, x):
        return self.relu(x + 3) / 6


class InjectionMultiSum_Auto_pool(nn.Module):
    def __init__(
            self,
            inp: int,
            oup: int,
            global_inp: list,
            flag: int
    ) -> None:
        super().__init__()
        self.global_inp = global_inp
        self.flag = flag
        self.local_embedding = Conv(inp, oup, 1, act=False)
        self.global_embedding = Conv(global_inp[self.flag], oup, 1, act=False)
        self.global_act = Conv(global_inp[self.flag], oup, 1, act=False)
        self.act = h_sigmoid()

    def forward(self, x):
        '''
        x_g: global features
        x_l: local features
        '''
        x_l, x_g = x
        B, C, H, W = x_l.shape
        g_B, g_C, g_H, g_W = x_g.shape
        use_pool = H < g_H

        # Pick this branch's slice of the global feature
        global_info = x_g.split(self.global_inp, dim=1)[self.flag]

        local_feat = self.local_embedding(x_l)

        global_act = self.global_act(global_info)
        global_feat = self.global_embedding(global_info)

        if use_pool:
            avg_pool = get_avg_pool()
            output_size = np.array([H, W])

            sig_act = avg_pool(global_act, output_size)
            global_feat = avg_pool(global_feat, output_size)

        else:
            sig_act = F.interpolate(self.act(global_act), size=(H, W), mode='bilinear', align_corners=False)
            global_feat = F.interpolate(global_feat, size=(H, W), mode='bilinear', align_corners=False)

        out = local_feat * sig_act + global_feat
        return out


def get_shape(tensor):
    shape = tensor.shape
    if torch.onnx.is_in_onnx_export():
        shape = [i.cpu().numpy() for i in shape]
    return shape


class PyramidPoolAgg(nn.Module):
    def __init__(self, inc, ouc, stride, pool_mode='torch'):
        super().__init__()
        self.stride = stride
        if pool_mode == 'torch':
            self.pool = nn.functional.adaptive_avg_pool2d
        elif pool_mode == 'onnx':
            self.pool = onnx_AdaptiveAvgPool2d
        self.conv = Conv(inc, ouc)

    def forward(self, inputs):
        # Pool every input to the size of the last one divided by the stride
        B, C, H, W = get_shape(inputs[-1])
        H = (H - 1) // self.stride + 1
        W = (W - 1) // self.stride + 1

        output_size = np.array([H, W])

        if not hasattr(self, 'pool'):
            self.pool = nn.functional.adaptive_avg_pool2d

        if torch.onnx.is_in_onnx_export():
            self.pool = onnx_AdaptiveAvgPool2d

        out = [self.pool(inp, output_size) for inp in inputs]

        return self.conv(torch.cat(out, dim=1))


def drop_path(x, drop_prob: float = 0., training: bool = False):
    """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).
    This is the same as the DropConnect impl I created for EfficientNet, etc networks, however,
    the original name is misleading as 'Drop Connect' is a different form of dropout in a separate paper...
    See discussion: https://github.com/tensorflow/tpu/issues/494#issuecomment-532968956 ... I've opted for
    changing the layer and argument names to 'drop path' rather than mix DropConnect as a layer name and use
    'survival rate' as the argument.
    """
    if drop_prob == 0. or not training:
        return x
    keep_prob = 1 - drop_prob
    shape = (x.shape[0],) + (1,) * (x.ndim - 1)  # work with diff dim tensors, not just 2D ConvNets
    random_tensor = keep_prob + torch.rand(shape, dtype=x.dtype, device=x.device)
    random_tensor.floor_()  # binarize
    output = x.div(keep_prob) * random_tensor
    return output


class Mlp(nn.Module):
    def __init__(self, in_features, hidden_features=None, out_features=None, drop=0.):
        super().__init__()
        out_features = out_features or in_features
        hidden_features = hidden_features or in_features
        self.fc1 = Conv(in_features, hidden_features, act=False)
        self.dwconv = nn.Conv2d(hidden_features, hidden_features, 3, 1, 1, bias=True, groups=hidden_features)
        self.act = nn.ReLU6()
        self.fc2 = Conv(hidden_features, out_features, act=False)
        self.drop = nn.Dropout(drop)

    def forward(self, x):
        x = self.fc1(x)
        x = self.dwconv(x)
        x = self.act(x)
        x = self.drop(x)
        x = self.fc2(x)
        x = self.drop(x)
        return x


class DropPath(nn.Module):
    """Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).
    """

    def __init__(self, drop_prob=None):
        super(DropPath, self).__init__()
        self.drop_prob = drop_prob

    def forward(self, x):
        return drop_path(x, self.drop_prob, self.training)


class Attention(torch.nn.Module):
    def __init__(self, dim, key_dim, num_heads, attn_ratio=4):
        super().__init__()
        self.num_heads = num_heads
        self.scale = key_dim ** -0.5
        self.key_dim = key_dim
        self.nh_kd = nh_kd = key_dim * num_heads  # num_head key_dim
        self.d = int(attn_ratio * key_dim)
        self.dh = int(attn_ratio * key_dim) * num_heads
        self.attn_ratio = attn_ratio

        self.to_q = Conv(dim, nh_kd, 1, act=False)
        self.to_k = Conv(dim, nh_kd, 1, act=False)
        self.to_v = Conv(dim, self.dh, 1, act=False)

        self.proj = torch.nn.Sequential(nn.ReLU6(), Conv(self.dh, dim, act=False))

    def forward(self, x):  # x: (B, C, H, W)
        B, C, H, W = get_shape(x)

        qq = self.to_q(x).reshape(B, self.num_heads, self.key_dim, H * W).permute(0, 1, 3, 2)
        kk = self.to_k(x).reshape(B, self.num_heads, self.key_dim, H * W)
        vv = self.to_v(x).reshape(B, self.num_heads, self.d, H * W).permute(0, 1, 3, 2)

        attn = torch.matmul(qq, kk)
        attn = attn.softmax(dim=-1)  # dim = k

        xx = torch.matmul(attn, vv)

        xx = xx.permute(0, 1, 3, 2).reshape(B, self.dh, H, W)
        xx = self.proj(xx)
        return xx


class top_Block(nn.Module):

    def __init__(self, dim, key_dim, num_heads, mlp_ratio=4., attn_ratio=2., drop=0.,
                 drop_path=0.):
        super().__init__()
        self.dim = dim
        self.num_heads = num_heads
        self.mlp_ratio = mlp_ratio

        self.attn = Attention(dim, key_dim=key_dim, num_heads=num_heads, attn_ratio=attn_ratio)

        # NOTE: drop path for stochastic depth, we shall see if this is better than dropout here
        self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()
        mlp_hidden_dim = int(dim * mlp_ratio)
        self.mlp = Mlp(in_features=dim, hidden_features=mlp_hidden_dim, drop=drop)

    def forward(self, x1):
        x1 = x1 + self.drop_path(self.attn(x1))
        x1 = x1 + self.drop_path(self.mlp(x1))
        return x1


class TopBasicLayer(nn.Module):
    def __init__(self, embedding_dim, ouc_list, block_num=2, key_dim=8, num_heads=4,
                 mlp_ratio=4., attn_ratio=2., drop=0., attn_drop=0., drop_path=0.):
        super().__init__()
        self.block_num = block_num

        self.transformer_blocks = nn.ModuleList()
        for i in range(self.block_num):
            self.transformer_blocks.append(top_Block(
                embedding_dim, key_dim=key_dim, num_heads=num_heads,
                mlp_ratio=mlp_ratio, attn_ratio=attn_ratio,
                drop=drop, drop_path=drop_path[i] if isinstance(drop_path, list) else drop_path))
        self.conv = nn.Conv2d(embedding_dim, sum(ouc_list), 1)

    def forward(self, x):
        # token * N
        for i in range(self.block_num):
            x = self.transformer_blocks[i](x)
        return self.conv(x)


class AdvPoolFusion(nn.Module):
    def forward(self, x):
        x1, x2 = x
        if torch.onnx.is_in_onnx_export():
            self.pool = onnx_AdaptiveAvgPool2d
        else:
            self.pool = nn.functional.adaptive_avg_pool2d

        # Pool x1 down to the resolution of x2, then concatenate along channels
        N, C, H, W = x2.shape
        output_size = np.array([H, W])
        x1 = self.pool(x1, output_size)

        return torch.cat([x1, x2], 1)
```
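The re-parameterization in `_fuse_bn_tensor` rests on one identity: a convolution followed by BatchNorm (in inference mode) equals a single convolution with a rescaled kernel and a shifted bias. The sketch below checks that identity on scalars with the standard library only. It is a simplified illustration under stated assumptions, not the block above: the function names `fuse_conv_bn_scalar` and `conv_then_bn` and the numeric values are made up for the example.

```python
import math


def fuse_conv_bn_scalar(w, gamma, beta, running_mean, running_var, eps=1e-5):
    """Fold BN statistics into a weight and bias, as _fuse_bn_tensor does per channel."""
    std = math.sqrt(running_var + eps)
    fused_w = w * gamma / std                       # kernel * t
    fused_b = beta - running_mean * gamma / std     # beta - mean * gamma / std
    return fused_w, fused_b


def conv_then_bn(x, w, gamma, beta, running_mean, running_var, eps=1e-5):
    """Reference path: a bias-free 'convolution' (scalar multiply) followed by BN."""
    std = math.sqrt(running_var + eps)
    return gamma * (w * x - running_mean) / std + beta


# Arbitrary illustrative values
w, gamma, beta, mean, var = 0.8, 1.5, -0.2, 0.3, 4.0
fused_w, fused_b = fuse_conv_bn_scalar(w, gamma, beta, mean, var)

for x in (-1.0, 0.0, 2.0):
    reference = conv_then_bn(x, w, gamma, beta, mean, var)
    fused = fused_w * x + fused_b
    assert math.isclose(reference, fused, rel_tol=1e-9, abs_tol=1e-12)
print("fused weight and bias reproduce conv + BN")
```

Because the identity holds per output channel, the three training branches of RepVGGBlock can each be folded this way and then summed into the single 3x3 convolution that `switch_to_deploy` creates.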

Next, open models/yolo.py in the YOLOv5/v7 project and add the following import at the top of the file:

```python
from models.goldyolo import *
```

Then search for def parse_model(d, ch). Inside that function, add the following branches to the chain of module-type checks:

```python
        elif m is SimFusion_4in:  # goldyolo
            c2 = sum(ch[x] for x in f)
        elif m is SimFusion_3in:
            c2 = args[0]
            if c2 != no:  # if not output
                c2 = make_divisible(c2 * gw, 8)
            args = [[ch[f_] for f_ in f], c2]
        elif m is IFM:
            c1 = ch[f]
            c2 = sum(args[0])
            args = [c1, *args]
        elif m is InjectionMultiSum_Auto_pool:
            c1 = ch[f[0]]
            c2 = args[0]
            args = [c1, *args]
        elif m is PyramidPoolAgg:
            c2 = args[0]
            args = [sum([ch[f_] for f_ in f]), *args]
        elif m is AdvPoolFusion:
            c2 = sum(ch[x] for x in f)
        elif m is TopBasicLayer:
            c2 = sum(args[1])  # goldyolo
```
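These branches are channel bookkeeping: for each Gold-YOLO module, parse_model has to work out the output channel count c2 from the channels of the layers it reads. The sketch below replays that arithmetic in plain Python for the YOLOv7-tiny configuration of Section 3. The helper names `concat_channels` and `split_ranges` are hypothetical, and a dict stands in for the `ch` list that parse_model maintains.

```python
def concat_channels(ch, f):
    """Output channels of a concatenating module (SimFusion_4in, AdvPoolFusion)."""
    return sum(ch[i] for i in f)


def split_ranges(global_inp):
    """Channel ranges that x_g.split(global_inp, dim=1) hands to each Inject flag."""
    ranges, start = [], 0
    for width in global_inp:
        ranges.append((start, start + width))
        start += width
    return ranges


# Output channels of the layers read by the Low-GD branch (YOLOv7-tiny, Section 3)
ch = {7: 64, 14: 128, 21: 256, 37: 256}

sim4_out = concat_channels(ch, [7, 14, 21, 37])
print(sim4_out)                 # 704, the input width of the IFM layer

ifm_out = sum([64, 32])         # IFM: c2 = sum(args[0])
print(ifm_out)                  # 96

print(split_ranges([64, 32]))   # [(0, 64), (64, 96)]
```

The two ranges correspond to the `flag` argument of InjectionMultiSum_Auto_pool: flag 0 reads the first 64 global channels and flag 1 reads the remaining 32.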

3. Modifying YOLOv7-tiny

After completing Section 2, create a new file yolov7-tiny-goldyolo.yaml in the models folder of the YOLOv7 project and paste in the following configuration.

```yaml
# parameters
nc: 80  # number of classes
depth_multiple: 1.0  # model depth multiple
width_multiple: 1.0  # layer channel multiple

# anchors
anchors:
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

# yolov7-tiny backbone
backbone:
  # [from, number, module, args] c2, k=1, s=1, p=None, g=1, act=True
  [[-1, 1, Conv, [32, 3, 2, None, 1, nn.LeakyReLU(0.1)]],  # 0-P1/2

   [-1, 1, Conv, [64, 3, 2, None, 1, nn.LeakyReLU(0.1)]],  # 1-P2/4

   [-1, 1, Conv, [32, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-2, 1, Conv, [32, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [32, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [32, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [[-1, -2, -3, -4], 1, Concat, [1]],
   [-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],  # 7

   [-1, 1, MP, []],  # 8-P3/8
   [-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-2, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [[-1, -2, -3, -4], 1, Concat, [1]],
   [-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],  # 14

   [-1, 1, MP, []],  # 15-P4/16
   [-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-2, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [[-1, -2, -3, -4], 1, Concat, [1]],
   [-1, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],  # 21

   [-1, 1, MP, []],  # 22-P5/32
   [-1, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-2, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [256, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [256, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [[-1, -2, -3, -4], 1, Concat, [1]],
   [-1, 1, Conv, [512, 1, 1, None, 1, nn.LeakyReLU(0.1)]],  # 28
  ]

# yolov7-tiny head
head:
  [[-1, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-2, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, SP, [5]],
   [-2, 1, SP, [9]],
   [-3, 1, SP, [13]],
   [[-1, -2, -3, -4], 1, Concat, [1]],
   [-1, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [[-1, -7], 1, Concat, [1]],
   [-1, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],  # 37

   [[7, 14, 21, 37], 1, SimFusion_4in, []],  # 38
   [-1, 1, IFM, [[64, 32]]],  # 39

   [37, 1, Conv, [256, 1, 1]],  # 40
   [[14, 21, -1], 1, SimFusion_3in, [256]],  # 41
   [[-1, 39], 1, InjectionMultiSum_Auto_pool, [256, [64, 32], 0]],  # 42
   [-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-2, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [[-1, -2, -3, -4], 1, Concat, [1]],
   [-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],  # 48

   [21, 1, Conv, [128, 1, 1]],  # 49
   [[7, 21, -1], 1, SimFusion_3in, [128]],  # 50
   [[-1, 39], 1, InjectionMultiSum_Auto_pool, [128, [64, 32], 1]],  # 51
   [-1, 1, Conv, [32, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-2, 1, Conv, [32, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [32, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [32, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [[-1, -2, -3, -4], 1, Concat, [1]],
   [-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],  # 57

   [[57, 48, 37], 1, PyramidPoolAgg, [352, 2]],  # 58
   [-1, 1, TopBasicLayer, [352, [64, 128]]],  # 59

   [[57, 49], 1, AdvPoolFusion, []],  # 60
   [[-1, 59], 1, InjectionMultiSum_Auto_pool, [128, [64, 128], 0]],  # 61
   [-1, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-2, 1, Conv, [64, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [64, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [[-1, -2, -3, -4], 1, Concat, [1]],
   [-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],  # 67

   [[-1, 40], 1, AdvPoolFusion, []],  # 68
   [[-1, 59], 1, InjectionMultiSum_Auto_pool, [256, [64, 128], 1]],  # 69
   [-1, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-2, 1, Conv, [128, 1, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [-1, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [[-1, -2, -3, -4], 1, Concat, [1]],
   [-1, 1, Conv, [256, 1, 1, None, 1, nn.LeakyReLU(0.1)]],  # 75

   [57, 1, Conv, [128, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [67, 1, Conv, [256, 3, 1, None, 1, nn.LeakyReLU(0.1)]],
   [75, 1, Conv, [512, 3, 1, None, 1, nn.LeakyReLU(0.1)]],

   [[76,77,78], 1, IDetect, [nc, anchors]],  # Detect(P3, P4, P5)
  ]
```

```text
                from  n    params  module                                       arguments
 0                -1  1       928  models.common.Conv                           [3, 32, 3, 2, None, 1, LeakyReLU(negative_slope=0.1)]
 1                -1  1     18560  models.common.Conv                           [32, 64, 3, 2, None, 1, LeakyReLU(negative_slope=0.1)]
 2                -1  1      2112  models.common.Conv                           [64, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
 3                -2  1      2112  models.common.Conv                           [64, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
 4                -1  1      9280  models.common.Conv                           [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
 5                -1  1      9280  models.common.Conv                           [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
 6  [-1, -2, -3, -4]  1         0  models.common.Concat                         [1]
 7                -1  1      8320  models.common.Conv                           [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
 8                -1  1         0  models.common.MP                             []
 9                -1  1      4224  models.common.Conv                           [64, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
10                -2  1      4224  models.common.Conv                           [64, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
11                -1  1     36992  models.common.Conv                           [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
12                -1  1     36992  models.common.Conv                           [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
13  [-1, -2, -3, -4]  1         0  models.common.Concat                         [1]
14                -1  1     33024  models.common.Conv                           [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
15                -1  1         0  models.common.MP                             []
16                -1  1     16640  models.common.Conv                           [128, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
17                -2  1     16640  models.common.Conv                           [128, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
18                -1  1    147712  models.common.Conv                           [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
19                -1  1    147712  models.common.Conv                           [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
20  [-1, -2, -3, -4]  1         0  models.common.Concat                         [1]
21                -1  1    131584  models.common.Conv                           [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
22                -1  1         0  models.common.MP                             []
23                -1  1     66048  models.common.Conv                           [256, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
24                -2  1     66048  models.common.Conv                           [256, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
25                -1  1    590336  models.common.Conv                           [256, 256, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
26                -1  1    590336  models.common.Conv                           [256, 256, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
27  [-1, -2, -3, -4]  1         0  models.common.Concat                         [1]
28                -1  1    525312  models.common.Conv                           [1024, 512, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
29                -1  1    131584  models.common.Conv                           [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
30                -2  1    131584  models.common.Conv                           [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
31                -1  1         0  models.common.SP                             [5]
32                -2  1         0  models.common.SP                             [9]
33                -3  1         0  models.common.SP                             [13]
34  [-1, -2, -3, -4]  1         0  models.common.Concat                         [1]
35                -1  1    262656  models.common.Conv                           [1024, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
36          [-1, -7]  1         0  models.common.Concat                         [1]
37                -1  1    131584  models.common.Conv                           [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
38   [7, 14, 21, 37]  1         0  models.goldyolo.SimFusion_4in                []
39                -1  1    355392  models.goldyolo.IFM                          [704, [64, 32]]
40                37  1     66048  models.common.Conv                           [256, 256, 1, 1]
41      [14, 21, -1]  1    230400  models.goldyolo.SimFusion_3in                [[128, 256, 256], 256]
42          [-1, 39]  1     99840  models.goldyolo.InjectionMultiSum_Auto_pool  [256, 256, [64, 32], 0]
43                -1  1     16512  models.common.Conv                           [256, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
44                -2  1     16512  models.common.Conv                           [256, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
45                -1  1     36992  models.common.Conv                           [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
46                -1  1     36992  models.common.Conv                           [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
47  [-1, -2, -3, -4]  1         0  models.common.Concat                         [1]
48                -1  1     33024  models.common.Conv                           [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
49                21  1     33024  models.common.Conv                           [256, 128, 1, 1]
50       [7, 21, -1]  1     90880  models.goldyolo.SimFusion_3in                [[64, 256, 128], 128]
51          [-1, 39]  1     25344  models.goldyolo.InjectionMultiSum_Auto_pool  [128, 128, [64, 32], 1]
52                -1  1      4160  models.common.Conv                           [128, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
53                -2  1      4160  models.common.Conv                           [128, 32, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
54                -1  1      9280  models.common.Conv                           [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
55                -1  1      9280  models.common.Conv                           [32, 32, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
56  [-1, -2, -3, -4]  1         0  models.common.Concat                         [1]
57                -1  1      8320  models.common.Conv                           [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
58      [57, 48, 37]  1    158400  models.goldyolo.PyramidPoolAgg               [448, 352, 2]
59                -1  1   2222528  models.goldyolo.TopBasicLayer                [352, [64, 128]]
60          [57, 49]  1         0  models.goldyolo.AdvPoolFusion                []
61          [-1, 59]  1     41728  models.goldyolo.InjectionMultiSum_Auto_pool  [192, 128, [64, 128], 0]
62                -1  1      8320  models.common.Conv                           [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
63                -2  1      8320  models.common.Conv                           [128, 64, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
64                -1  1     36992  models.common.Conv                           [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
65                -1  1     36992  models.common.Conv                           [64, 64, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
66  [-1, -2, -3, -4]  1         0  models.common.Concat                         [1]
67                -1  1     33024  models.common.Conv                           [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
68          [-1, 40]  1         0  models.goldyolo.AdvPoolFusion                []
69          [-1, 59]  1    165376  models.goldyolo.InjectionMultiSum_Auto_pool  [384, 256, [64, 128], 1]
70                -1  1     33024  models.common.Conv                           [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
71                -2  1     33024  models.common.Conv                           [256, 128, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
72                -1  1    147712  models.common.Conv                           [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
73                -1  1    147712  models.common.Conv                           [128, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
74  [-1, -2, -3, -4]  1         0  models.common.Concat                         [1]
75                -1  1    131584  models.common.Conv                           [512, 256, 1, 1, None, 1, LeakyReLU(negative_slope=0.1)]
76                57  1     73984  models.common.Conv                           [64, 128, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
77                67  1    295424  models.common.Conv                           [128, 256, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
78                75  1   1180672  models.common.Conv                           [256, 512, 3, 1, None, 1, LeakyReLU(negative_slope=0.1)]
79      [76, 77, 78]  1     17132  models.yolo.IDetect                          [1, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [128, 256, 512]]
Model Summary: 443 layers, 8969932 parameters, 8969932 gradients, 16.1 GFLOPS
```

If running the model prints the summary above, the modification succeeded.
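When reading the configuration against the summary, it helps to translate the relative `from` entries into absolute layer numbers. The helper below is a minimal sketch of that convention (a negative value counts back from the current layer, a non-negative value is an absolute index). The name `resolve_from` is hypothetical and is not part of YOLOv5 or YOLOv7.

```python
def resolve_from(layer_index, from_spec):
    """Turn a YAML 'from' entry into a list of absolute layer indices."""
    refs = [from_spec] if isinstance(from_spec, int) else list(from_spec)
    # Negative entries are relative to the current layer; others are absolute
    return [r if r >= 0 else layer_index + r for r in refs]


print(resolve_from(3, -2))             # [1]
print(resolve_from(36, [-1, -7]))      # [35, 29]
print(resolve_from(41, [14, 21, -1]))  # [14, 21, 40]
```

For example, layer 41 (SimFusion_3in) reads layers 14, 21, and 40, whose output widths 128, 256, and 256 are exactly the `[[128, 256, 256], 256]` arguments listed for it in the summary.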

4. Modifying YOLOv5s

After completing Section 2, create a new file yolov5s-goldyolo.yaml in the models folder of the YOLOv5 project and paste in the following configuration.

```yaml
# YOLOv5 🚀 by Ultralytics, AGPL-3.0 license

# Parameters
nc: 80  # number of classes
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.50  # layer channel multiple
anchors:
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

# YOLOv5 v6.0 backbone
backbone:
  # [from, number, module, args]
  [[-1, 1, Conv, [64, 6, 2, 2]],  # 0-P1/2
   [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
   [-1, 3, C3, [128]],
   [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
   [-1, 6, C3, [256]],
   [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
   [-1, 9, C3, [512]],
   [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
   [-1, 3, C3, [1024]],
   [-1, 1, SPPF, [1024, 5]],  # 9
  ]

# YOLOv5 v6.0 head
head:
  [[[2, 4, 6, 9], 1, SimFusion_4in, []],  # 10
   [-1, 1, IFM, [[64, 32]]],  # 11

   [9, 1, Conv, [512, 1, 1]],  # 12
   [[4, 6, -1], 1, SimFusion_3in, [512]],  # 13
   [[-1, 11], 1, InjectionMultiSum_Auto_pool, [512, [64, 32], 0]],  # 14
   [-1, 3, C3, [512, False]],  # 15

   [6, 1, Conv, [256, 1, 1]],  # 16
   [[2, 4, -1], 1, SimFusion_3in, [256]],  # 17
   [[-1, 11], 1, InjectionMultiSum_Auto_pool, [256, [64, 32], 1]],  # 18
   [-1, 3, C3, [256, False]],  # 19

   [[19, 15, 9], 1, PyramidPoolAgg, [352, 2]],  # 20
   [-1, 1, TopBasicLayer, [352, [64, 128]]],  # 21

   [[19, 16], 1, AdvPoolFusion, []],  # 22
   [[-1, 21], 1, InjectionMultiSum_Auto_pool, [256, [64, 128], 0]],  # 23
   [-1, 3, C3, [256, False]],  # 24

   [[-1, 12], 1, AdvPoolFusion, []],  # 25
   [[-1, 21], 1, InjectionMultiSum_Auto_pool, [512, [64, 128], 1]],  # 26
   [-1, 3, C3, [512, False]],  # 27

   [[19, 24, 27], 1, Detect, [nc, anchors]]  # 28
  ]
```

```text
            from  n    params  module                                     arguments
 0            -1  1      3520  models.common.Conv                         [3, 32, 6, 2, 2]
 1            -1  1     18560  models.common.Conv                         [32, 64, 3, 2]
 2            -1  1     18816  models.common.C3                           [64, 64, 1]
 3            -1  1     73984  models.common.Conv                         [64, 128, 3, 2]
 4            -1  2    115712  models.common.C3                           [128, 128, 2]
 5            -1  1    295424  models.common.Conv                         [128, 256, 3, 2]
 6            -1  3    625152  models.common.C3                           [256, 256, 3]
 7            -1  1   1180672  models.common.Conv                         [256, 512, 3, 2]
 8            -1  1   1182720  models.common.C3                           [512, 512, 1]
 9            -1  1    656896  models.common.SPPF                         [512, 512, 5]
10  [2, 4, 6, 9]  1         0  models.common.SimFusion_4in                []
11            -1  1    379968  models.common.IFM                          [960, [64, 32]]
12             9  1    131584  models.common.Conv                         [512, 256, 1, 1]
13    [4, 6, -1]  1    230400  models.common.SimFusion_3in                [[128, 256, 256], 256]
14      [-1, 11]  1    199680  models.common.InjectionMultiSum_Auto_pool  [256, 512, [64, 32], 0]
15            -1  1    361984  models.common.C3                           [512, 256, 1, False]
16             6  1     33024  models.common.Conv                         [256, 128, 1, 1]
17    [2, 4, -1]  1     57856  models.common.SimFusion_3in                [[64, 128, 128], 128]
18      [-1, 11]  1     50688  models.common.InjectionMultiSum_Auto_pool  [128, 256, [64, 32], 1]
19            -1  1     90880  models.common.C3                           [256, 128, 1, False]
20   [19, 15, 9]  1    316096  models.common.PyramidPoolAgg               [896, 352, 2]
21            -1  1   2222528  models.common.TopBasicLayer                [352, [64, 128]]
22      [19, 16]  1         0  models.common.AdvPoolFusion                []
23      [-1, 21]  1     99840  models.common.InjectionMultiSum_Auto_pool  [256, 256, [64, 128], 0]
24            -1  1     90880  models.common.C3                           [256, 128, 1, False]
25      [-1, 12]  1         0  models.common.AdvPoolFusion                []
26      [-1, 21]  1    330752  models.common.InjectionMultiSum_Auto_pool  [384, 512, [64, 128], 1]
27            -1  1    361984  models.common.C3                           [512, 256, 1, False]
28  [19, 24, 27]  1      9270  models.yolo.Detect                         [1, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [128, 128, 256]]
Model Summary: 455 layers, 9138870 parameters, 9138870 gradients, 20.1 GFLOPs
```

If running the model prints the summary above, the modification succeeded.
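The channel and repeat counts in the summary differ from those written in the YAML because YOLOv5 scales them by width_multiple and depth_multiple. The sketch below reproduces that scaling in plain Python. The helper `make_divisible` is a local re-implementation under the assumption that it rounds up to a multiple of the divisor, and `scaled_width` and `scaled_depth` are hypothetical names for the two rules.

```python
import math


def make_divisible(x, divisor):
    """Round x up to the nearest multiple of divisor (local re-implementation)."""
    return math.ceil(x / divisor) * divisor


def scaled_width(c2, width_multiple):
    """Output channels after width scaling, rounded to a multiple of 8."""
    return make_divisible(c2 * width_multiple, 8)


def scaled_depth(n, depth_multiple):
    """Number of repeats after depth scaling (only applied when n > 1)."""
    return max(round(n * depth_multiple), 1) if n > 1 else n


# YOLOv5s: width_multiple = 0.50, depth_multiple = 0.33
print(scaled_width(64, 0.50))    # 32, layer 0 in the summary
print(scaled_width(1024, 0.50))  # 512, layer 9 (SPPF)
print(scaled_width(512, 0.50))   # 256, SimFusion_3in at layer 13
print([scaled_depth(n, 0.33) for n in (3, 6, 9)])  # [1, 2, 3]
```

Of the Gold-YOLO branches added to parse_model in Section 2, only the SimFusion_3in branch calls make_divisible. That is why InjectionMultiSum_Auto_pool keeps its unscaled output widths (for example 512 at layer 14) in the summary above.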

5. Modifying YOLOv5n

After completing Section 2, create a new file yolov5n-goldyolo.yaml in the models folder of the YOLOv5 project and paste in the following configuration.

```yaml
# YOLOv5 🚀 by Ultralytics, AGPL-3.0 license

# Parameters
nc: 80  # number of classes
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.25  # layer channel multiple
anchors:
  - [10,13, 16,30, 33,23]  # P3/8
  - [30,61, 62,45, 59,119]  # P4/16
  - [116,90, 156,198, 373,326]  # P5/32

# YOLOv5 v6.0 backbone
backbone:
  # [from, number, module, args]
  [[-1, 1, Conv, [64, 6, 2, 2]],  # 0-P1/2
   [-1, 1, Conv, [128, 3, 2]],  # 1-P2/4
   [-1, 3, C3, [128]],
   [-1, 1, Conv, [256, 3, 2]],  # 3-P3/8
   [-1, 6, C3, [256]],
   [-1, 1, Conv, [512, 3, 2]],  # 5-P4/16
   [-1, 9, C3, [512]],
   [-1, 1, Conv, [1024, 3, 2]],  # 7-P5/32
   [-1, 3, C3, [1024]],
   [-1, 1, SPPF, [1024, 5]],  # 9
  ]

# YOLOv5 v6.0 head
head:
  [[[2, 4, 6, 9], 1, SimFusion_4in, []],  # 10
   [-1, 1, IFM, [[64, 32]]],  # 11

   [9, 1, Conv, [512, 1, 1]],  # 12
   [[4, 6, -1], 1, SimFusion_3in, [512]],  # 13
   [[-1, 11], 1, InjectionMultiSum_Auto_pool, [512, [64, 32], 0]],  # 14
   [-1, 3, C3, [512, False]],  # 15

   [6, 1, Conv, [256, 1, 1]],  # 16
   [[2, 4, -1], 1, SimFusion_3in, [256]],  # 17
   [[-1, 11], 1, InjectionMultiSum_Auto_pool, [256, [64, 32], 1]],  # 18
   [-1, 3, C3, [256, False]],  # 19

   [[19, 15, 9], 1, PyramidPoolAgg, [352, 2]],  # 20
   [-1, 1, TopBasicLayer, [352, [64, 128]]],  # 21

   [[19, 16], 1, AdvPoolFusion, []],  # 22
   [[-1, 21], 1, InjectionMultiSum_Auto_pool, [256, [64, 128], 0]],  # 23
   [-1, 3, C3, [256, False]],  # 24

   [[-1, 12], 1, AdvPoolFusion, []],  # 25
   [[-1, 21], 1, InjectionMultiSum_Auto_pool, [512, [64, 128], 1]],  # 26
   [-1, 3, C3, [512, False]],  # 27

   [[19, 24, 27], 1, Detect, [nc, anchors]]  # 28
  ]
```

```text
            from  n    params  module                                     arguments
 0            -1  1      1760  models.common.Conv                         [3, 16, 6, 2, 2]
 1            -1  1      4672  models.common.Conv                         [16, 32, 3, 2]
 2            -1  1      4800  models.common.C3                           [32, 32, 1]
 3            -1  1     18560  models.common.Conv                         [32, 64, 3, 2]
 4            -1  2     29184  models.common.C3                           [64, 64, 2]
 5            -1  1     73984  models.common.Conv                         [64, 128, 3, 2]
 6            -1  3    156928  models.common.C3                           [128, 128, 3]
 7            -1  1    295424  models.common.Conv                         [128, 256, 3, 2]
 8            -1  1    296448  models.common.C3                           [256, 256, 1]
 9            -1  1    164608  models.common.SPPF                         [256, 256, 5]
10  [2, 4, 6, 9]  1         0  models.common.SimFusion_4in                []
11            -1  1    333888  models.common.IFM                          [480, [64, 32]]
12             9  1     33024  models.common.Conv                         [256, 128, 1, 1]
13    [4, 6, -1]  1     57856  models.common.SimFusion_3in                [[64, 128, 128], 128]
14      [-1, 11]  1    134144  models.common.InjectionMultiSum_Auto_pool  [128, 512, [64, 32], 0]
15            -1  1    123648  models.common.C3                           [512, 128, 1, False]
16             6  1      8320  models.common.Conv                         [128, 64, 1, 1]
17    [2, 4, -1]  1     14592  models.common.SimFusion_3in                [[32, 64, 64], 64]
18      [-1, 11]  1     34304  models.common.InjectionMultiSum_Auto_pool  [64, 256, [64, 32], 1]
19            -1  1     31104  models.common.C3                           [256, 64, 1, False]
20   [19, 15, 9]  1    158400  models.common.PyramidPoolAgg               [448, 352, 2]
21            -1  1   2222528  models.common.TopBasicLayer                [352, [64, 128]]
22      [19, 16]  1         0  models.common.AdvPoolFusion                []
23      [-1, 21]  1     67072  models.common.InjectionMultiSum_Auto_pool  [128, 256, [64, 128], 0]
24            -1  1     31104  models.common.C3                           [256, 64, 1, False]
25      [-1, 12]  1         0  models.common.AdvPoolFusion                []
26      [-1, 21]  1    232448  models.common.InjectionMultiSum_Auto_pool  [192, 512, [64, 128], 1]
27            -1  1    123648  models.common.C3                           [512, 128, 1, False]
28  [19, 24, 27]  1      4662  models.yolo.Detect                         [1, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [64, 64, 128]]
Model Summary: 455 layers, 4657110 parameters, 4657110 gradients, 8.3 GFLOPs
```

If running the model prints the summary above, the modification succeeded.
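The params column can be spot-checked by hand for the plain Conv layers. The sketch below assumes that a Conv layer is a bias-free 2D convolution followed by BatchNorm with a learnable weight and bias per channel; the function name `conv_bn_params` is hypothetical.

```python
def conv_bn_params(c_in, c_out, kernel_size, groups=1):
    """Trainable parameters of a bias-free k x k convolution followed by BatchNorm."""
    conv_weights = c_in * c_out * kernel_size * kernel_size // groups
    bn_params = 2 * c_out   # BatchNorm scale (weight) and shift (bias)
    return conv_weights + bn_params


# YOLOv5n, layer 0: Conv [3, 16, 6, 2, 2]
print(conv_bn_params(3, 16, 6))     # 1760
# YOLOv5n, layer 1: Conv [16, 32, 3, 2]
print(conv_bn_params(16, 32, 3))    # 4672
# YOLOv5n, layer 12: Conv [256, 128, 1, 1]
print(conv_bn_params(256, 128, 1))  # 33024
```

These values match the corresponding rows of the YOLOv5n summary. A mismatch on a row of your own run would suggest that the layer received different input channels than the configuration intended.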
