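Paper: [2206.09474] 3D Object Detection for Autonomous Driving: A Comprehensive Survey (arxiv.org)

This survey is easy to follow and well suited to beginners who are interested in autonomous driving and 3D object detection and want a first understanding of the relevant algorithms.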

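Contents

I. Abstract

(1) Original text

(2) Translation

(3) Keywords

II. Introduction

(1) Original text

(2) Translation

III. Background

(1) Original text

(2) Translation

(3) Understanding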

I. Abstract

(1) Original text

Autonomous driving, in recent years, has been receiving increasing attention for its potential to relieve drivers' burdens and improve the safety of driving. In modern autonomous driving pipelines, the perception system is an indispensable component, aiming to accurately estimate the status of surrounding environments and provide reliable observations for prediction and planning. 3D object detection, which aims to predict the locations, sizes, and categories of the 3D objects near an autonomous vehicle, is an important part of a perception system. This paper reviews the advances in 3D object detection for autonomous driving. First, we introduce the background of 3D object detection and discuss the challenges in this task. Second, we conduct a comprehensive survey of the progress in 3D object detection from the aspects of models and sensory inputs, including LiDAR-based, camera-based, and multi-modal detection approaches. We also provide an in-depth analysis of the potentials and challenges in each category of methods. Additionally, we systematically investigate the applications of 3D object detection in driving systems. Finally, we conduct a performance analysis of the 3D object detection approaches, and we further summarize the research trends over the years and prospect the future directions of this area.

(2) Translation

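In recent years, autonomous driving has attracted growing attention for its potential to reduce drivers' burden and improve driving safety. In modern autonomous driving pipelines, the perception system is an indispensable component: it aims to accurately estimate the state of the surrounding environment and to provide reliable observations for prediction and planning. 3D object detection, which predicts the locations, sizes, and categories of 3D objects near an autonomous vehicle, is an important part of the perception system. This paper reviews the progress of 3D object detection for autonomous driving. First, we introduce the background of 3D object detection and discuss the challenges of the task. Second, we comprehensively survey the progress of 3D object detection in terms of models and sensory inputs, covering LiDAR-based, camera-based, and multi-modal detection methods. We also analyze in depth the potential and challenges of each category of methods. In addition, we systematically study the applications of 3D object detection in driving systems. Finally, we analyze the performance of 3D object detection methods, summarize the research trends over the years, and look ahead to the future directions of the field.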

(3) Keywords

3D object detection, perception, autonomous driving, deep learning, computer vision, robotics

II. Introduction

(1) Original text

Autonomous driving, which aims to enable vehicles to perceive the surrounding environments intelligently and move safely with little or no human effort, has attained rapid progress in recent years. Autonomous driving techniques have been broadly applied in many scenarios, including self-driving trucks, robotaxis, delivery robots, etc., and are capable of reducing human error and enhancing road safety. As a core component of autonomous driving systems, automotive perception helps autonomous vehicles understand the surrounding environments with sensory input. Perception systems generally take multi-modality data (images from cameras, point clouds from LiDAR scanners, high-definition maps etc.) as input, and predict the geometric and semantic information of critical elements on a road. High-quality perception results serve as reliable observations for the following steps such as object tracking, trajectory prediction, and path planning.

To obtain a comprehensive understanding of driving environments, many vision tasks can be involved in a perception system, e.g. object detection and tracking, lane detection, and semantic and instance segmentation. Among these perception tasks, 3D object detection is one of the most indispensable tasks in an automotive perception system. 3D object detection aims to predict the locations, sizes, and classes of critical objects, e.g. cars, pedestrians, cyclists, in the 3D space. In contrast to 2D object detection which only generates 2D bounding boxes on images and ignores the actual distance information of objects from the ego-vehicle, 3D object detection focuses on the localization and recognition of objects in the real-world 3D coordinate system. The geometric information predicted by 3D object detection in real-world coordinates can be directly used to measure the distances between the ego-vehicle and critical objects, and further helps plan driving routes and avoid collisions.

3D object detection methods have evolved rapidly with the advances of deep learning techniques in computer vision and robotics. These methods have been trying to address the 3D object detection problem from a particular aspect, e.g. detection from a particular sensory type or data representation, and lack a systematic comparison with the methods of other categories. Hence a comprehensive analysis of the strengths and weaknesses of all types of 3D object detection methods is desirable and can provide some valuable findings to the research community.

To this end, we propose to comprehensively review the 3D object detection methods for autonomous driving applications and provide in-depth analysis and a systematic comparison on different categories of approaches. Compared to the existing surveys (Arnold et al., 2019; Liang et al., 2021b; Qian et al., 2021b), our paper broadly covers the recent advances in this area, e.g. 3D object detection from range images, self-/semi-/weakly-supervised 3D object detection, 3D detection in end-to-end driving systems. In contrast to the previous surveys that only focus on detection from point clouds (Guo et al., 2020; Fernandes et al., 2021; Zamanakos et al., 2021), from monocular images (Wu et al., 2020a; Ma et al., 2022), and from multi-modal inputs (Wang et al., 2021h), our paper systematically investigates the 3D object detection methods from all sensory types and in most application scenarios. The major contributions of this work can be summarized as follows:

- We provide a comprehensive review of the 3D object detection methods from different perspectives, including detection from different sensory inputs (LiDAR-based, camera-based, and multi-modal detection), detection from temporal sequences, label-efficient detection, as well as the applications of 3D object detection in driving systems.

- We summarize 3D object detection approaches structurally and hierarchically, conduct a systematic analysis of these methods, and provide valuable insights for the potentials and challenges of different categories of methods.

- We conduct a comprehensive performance and speed analysis on the 3D object detection approaches, identify the research trends over years, and provide insightful views on the future directions of 3D object detection.

The structure of this paper is organized as follows. First, we introduce the problem definition, datasets, and evaluation metrics of 3D object detection in Sect. 2. Then, we review and analyze the 3D object detection methods based on LiDAR sensors (Sect. 3), cameras (Sect. 4), multisensor fusion (Sect. 5), and Transformer-based architectures (Sect. 6). Next, we introduce the detection methods that leverage temporal data in Sect. 7 and utilize fewer labels in Sect. 8. We subsequently discuss some critical problems of 3D object detection in driving systems in Sect. 9. Finally, we conduct a speed and performance analysis, investigate the research trends, and prospect the future directions of 3D object detection in Sect. 10. A hierarchically-structured taxonomy is shown in Fig. 1. We also provide a constantly updated project page here.

(2) Translation

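In recent years, autonomous driving, which aims to let vehicles perceive their surroundings intelligently and move safely with little or no human effort, has made rapid progress. Autonomous driving techniques have been widely applied in many scenarios, such as self-driving trucks, robotaxis, and delivery robots, where they can reduce human error and improve road safety. As a core component of an autonomous driving system, automotive perception helps autonomous vehicles understand their surroundings through sensory input. Perception systems usually take multi-modal data (camera images, point clouds from LiDAR scanners, high-definition maps, etc.) as input and predict the geometric and semantic information of critical elements on the road. High-quality perception results serve as reliable observations for subsequent steps such as object tracking, trajectory prediction, and path planning.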

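To understand the driving environment fully, a perception system can involve many vision tasks, such as object detection and tracking, lane detection, and semantic and instance segmentation. Among these perception tasks, 3D object detection is one of the most important in an automotive perception system. 3D object detection aims to predict the locations, sizes, and categories of critical objects (e.g. cars, pedestrians, cyclists) in 3D space. Compared with 2D object detection, which only generates 2D bounding boxes on images and ignores the actual distance between objects and the ego vehicle, 3D object detection focuses on localizing and recognizing objects in the real-world 3D coordinate system. The geometric information that 3D object detection predicts in real-world coordinates can be used directly to measure the distance between the ego vehicle and critical objects, and further helps plan driving routes and avoid collisions.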

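With the progress of deep learning in computer vision and robotics, 3D object detection methods have developed rapidly. These methods try to solve the 3D object detection problem from one particular aspect, e.g. detection from a particular sensor type or data representation, and lack a systematic comparison with methods of other categories. A comprehensive analysis of the strengths and weaknesses of all types of 3D object detection methods is therefore needed, and it can provide valuable findings for the research community.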

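To this end, we comprehensively review 3D object detection methods for autonomous driving applications and provide an in-depth analysis and a systematic comparison of different categories of methods. Compared with existing surveys (Arnold et al., 2019; Liang et al., 2021b; Qian et al., 2021b), our paper broadly covers recent advances in this field, such as 3D object detection from range images, self-/semi-/weakly-supervised 3D object detection, and 3D detection in end-to-end driving systems. Unlike previous surveys that focus only on detection from point clouds (Guo et al., 2020; Fernandes et al., 2021; Zamanakos et al., 2021), from monocular images (Wu et al., 2020a; Ma et al., 2022), or from multi-modal inputs (Wang et al., 2021h), our paper systematically studies 3D object detection methods for all sensor types and most application scenarios. The main contributions of this work can be summarized as follows: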

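- We comprehensively review 3D object detection methods from different perspectives, including detection from different sensory inputs (LiDAR-based, camera-based, and multi-modal detection), detection from temporal sequences, label-efficient detection, and the applications of 3D object detection in driving systems.

- We summarize 3D object detection methods structurally and hierarchically, analyze them systematically, and provide valuable insights into the potential and challenges of different categories of methods.

- We conduct a comprehensive performance and speed analysis of 3D object detection methods, identify the research trends over the years, and offer insightful views on the future directions of 3D object detection.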

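The paper is organized as follows. First, Sect. 2 introduces the problem definition, datasets, and evaluation metrics of 3D object detection. Then, we review and analyze 3D object detection methods based on LiDAR sensors (Sect. 3), cameras (Sect. 4), multi-sensor fusion (Sect. 5), and Transformer-based architectures (Sect. 6). Next, we introduce detection methods that leverage temporal data (Sect. 7) and methods that use fewer labels (Sect. 8). We then discuss some critical problems of 3D object detection in driving systems in Sect. 9. Finally, in Sect. 10 we analyze speed and performance, study the research trends, and look ahead to the future directions of 3D object detection. A hierarchically structured taxonomy is shown in Fig. 1. We also provide a continuously updated project page here.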

III. Background

(1) Original text

2.1 What is 3D Object Detection?

Problem definition. 3D object detection aims to predict bounding boxes of 3D objects in driving scenarios from sensory inputs. A general formula of 3D object detection can be represented as

$$\mathcal{B} = f_{det}(\mathcal{I}_{sensor})$$

where $\mathcal{B} = \{B_1, \cdots, B_N\}$ is a set of $N$ 3D objects in a scene, $f_{det}$ is a 3D object detection model, and $\mathcal{I}_{sensor}$ is one or more sensory inputs. How to represent a 3D object $B_i$ is a crucial problem in this task, since it determines what 3D information should be provided for the following prediction and planning steps. In most cases, a 3D object is represented as a 3D cuboid that includes this object, that is

$$B_i = [x_c, y_c, z_c, l, w, h, \theta, class]$$

where $(x_c, y_c, z_c)$ is the 3D center coordinate of a cuboid, $l, w, h$ are the length, width, and height of a cuboid respectively, $\theta$ is the heading angle, i.e. the yaw angle, of a cuboid on the ground plane, and $class$ denotes the category of a 3D object, e.g. cars, trucks, pedestrians, cyclists. In Caesar et al. (2020), additional parameters $v_x$ and $v_y$ that describe the speed of a 3D object along the x and y axes on the ground are employed.
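To make the cuboid parameterization concrete, here is a minimal sketch of a data structure holding $[x_c, y_c, z_c, l, w, h, \theta, class]$ (plus the optional nuScenes-style $v_x, v_y$) that recovers the eight box corners. It assumes a right-handed frame with z pointing up, $(x_c, y_c, z_c)$ at the geometric center of the box, and $l$ measured along the heading direction. These are assumptions: datasets differ (KITTI, for example, labels boxes in the camera frame with the center on the bottom face). `Box3D` and `corners` are hypothetical names, not part of any library.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Box3D:
    """Hypothetical container for B_i = [x_c, y_c, z_c, l, w, h, theta, class]."""
    x: float
    y: float
    z: float
    l: float         # length, measured along the heading direction (assumed)
    w: float         # width
    h: float         # height
    theta: float     # heading (yaw) angle in radians, rotation about the z axis
    cls: str         # object category, e.g. "car"
    vx: float = 0.0  # optional nuScenes-style velocity along x
    vy: float = 0.0  # optional nuScenes-style velocity along y

    def corners(self) -> np.ndarray:
        """Return the 8 corners as an (8, 3) array, assuming (x, y, z) is the box center."""
        # Corner offsets in the box's local frame, centered at the origin.
        dx = np.array([1, 1, -1, -1, 1, 1, -1, -1]) * self.l / 2
        dy = np.array([1, -1, -1, 1, 1, -1, -1, 1]) * self.w / 2
        dz = np.array([-1, -1, -1, -1, 1, 1, 1, 1]) * self.h / 2
        c, s = np.cos(self.theta), np.sin(self.theta)
        # Rotate about z by theta, then translate to the box center.
        xs = c * dx - s * dy + self.x
        ys = s * dx + c * dy + self.y
        zs = dz + self.z
        return np.stack([xs, ys, zs], axis=1)


car = Box3D(x=10.0, y=2.0, z=0.8, l=4.0, w=1.8, h=1.6, theta=np.pi / 2, cls="car")
print(car.corners().round(2))
```

With $\theta = \pi/2$ the car's length ends up along the y axis, which is exactly the information a 2D box cannot express.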

Sensory inputs. There are many types of sensors that can provide raw data for 3D object detection. Among the sensors, radars, cameras, and LiDAR (Light Detection And Ranging) sensors are the three most widely adopted sensory types. Radars have long detection range and are robust to different weather conditions. Due to the Doppler effect, radars could provide additional velocity measurements. Cameras are cheap and easily accessible, and can be crucial for understanding semantics, e.g. the type of traffic sign. Cameras produce images $I_{cam} \in \mathbb{R}^{W \times H \times 3}$ for 3D object detection, where $W$, $H$ are the width and height of an image, and each pixel has 3 RGB channels. Albeit cheap, cameras have intrinsic limitations to be utilized for 3D object detection. First, cameras only capture appearance information, and are not capable of directly obtaining 3D structural information about a scene. On the other hand, 3D object detection normally requires accurate localization in the 3D space, while the 3D information, e.g. depth, estimated from images normally has large errors. In addition, detection from images is generally vulnerable to extreme weather and time conditions. Detecting objects from images at night or on foggy days is much harder than detection on sunny days, which leads to the challenge of attaining sufficient robustness for autonomous driving.
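Why a camera cannot observe depth directly can be seen from the pinhole projection model: the division by depth maps every point on a viewing ray to the same pixel. The sketch assumes a camera frame with x to the right, y down, and z forward, and uses made-up intrinsics `K`; it is an illustration, not the calibration of any real dataset.

```python
import numpy as np

# Hypothetical pinhole intrinsics: focal lengths and principal point, in pixels.
K = np.array([[720.0,   0.0, 640.0],
              [  0.0, 720.0, 360.0],
              [  0.0,   0.0,   1.0]])


def project(points_cam: np.ndarray) -> np.ndarray:
    """Project (N, 3) camera-frame points (z forward) to (N, 2) pixel coordinates."""
    uvw = points_cam @ K.T           # homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3]  # dividing by depth z discards the depth


# Two points on the same viewing ray, 10 m and 40 m away.
p_near = np.array([1.0, 0.5, 10.0])
p_far = 4.0 * p_near
print(project(np.stack([p_near, p_far])))  # both land on pixel (712, 396)
```

Recovering depth therefore has to come from learned priors (object size, context) or extra views, which is why image-based depth estimates tend to carry the large errors mentioned above.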

As an alternative solution, LiDAR sensors can obtain fine-grained 3D structures of a scene by emitting laser beams and then measuring their reflective information. A LiDAR sensor that emits $m$ beams and conducts measurements for $n$ times in one scan cycle can produce a range image $I_{range} \in \mathbb{R}^{m \times n \times 3}$, where each pixel of a range image contains range $r$, azimuth $\alpha$, and inclination $\phi$ in the spherical coordinate system as well as the reflective intensity. Range images are the raw data format obtained by LiDAR sensors, and can be further converted into point clouds by transforming spherical coordinates into Cartesian coordinates. A point cloud can be represented as $I_{point} \in \mathbb{R}^{N \times 3}$, where $N$ denotes the number of points in a scene, and each point has 3 channels of xyz coordinates. Both range images and point clouds contain accurate 3D information directly acquired by LiDAR sensors. Hence in contrast to cameras, LiDAR sensors are more suitable for detecting objects in the 3D space, and LiDAR sensors are also less vulnerable to time and weather changes. However, LiDAR sensors are much more expensive than cameras, which may limit the applications in driving scenarios. An illustration of 3D object detection is shown in Fig. 2.
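The spherical-to-Cartesian conversion from a range image to a point cloud can be sketched with NumPy. This assumes the inclination $\phi$ is measured from the horizontal plane (conventions vary between sensors and datasets), that a non-positive range marks a pixel with no laser return, and it drops the intensity channel; `range_image_to_points` is a hypothetical name.

```python
import numpy as np


def range_image_to_points(range_img, azimuth, inclination):
    """Convert an (m, n) range image to a (K, 3) xyz point cloud.

    range_img:   (m, n) ranges r in metres; r <= 0 marks "no return" (assumed)
    azimuth:     (n,) azimuth alpha of each column, in radians
    inclination: (m,) inclination phi of each beam (row), in radians,
                 measured from the horizontal plane (assumed convention)
    """
    phi = inclination[:, None]   # (m, 1), broadcast across columns
    alpha = azimuth[None, :]     # (1, n), broadcast across rows
    r = range_img
    x = r * np.cos(phi) * np.cos(alpha)
    y = r * np.cos(phi) * np.sin(alpha)
    z = r * np.sin(phi)
    pts = np.stack([x, y, z], axis=-1)  # (m, n, 3)
    return pts[r > 0]                   # keep only pixels with a return


# Toy sensor with m = 2 beams and n = 4 azimuth steps (real sensors have far more).
inc = np.radians([0.0, -10.0])
azi = np.radians([0.0, 90.0, 180.0, 270.0])
rng = np.array([[5.0, 5.0, 0.0, 5.0],
                [8.0, 8.0, 8.0, 8.0]])
cloud = range_image_to_points(rng, azi, inc)
print(cloud.shape)  # (7, 3): one pixel had no return
```

Appending the intensity as a fourth column would give the common N x 4 point format; only xyz is returned here to match $I_{point} \in \mathbb{R}^{N \times 3}$.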

Analysis: comparisons with 2D object detection. 2D object detection, which aims to generate 2D bounding boxes on images, is a fundamental problem in computer vision. 3D object detection methods have borrowed many design paradigms from the 2D counterparts: proposals generation and refinement, anchors, non-maximum suppression, etc. However, from many aspects, 3D object detection is not a naive adaptation of 2D object detection methods to the 3D space. (1) 3D object detection methods have to deal with heterogeneous data representations. Detection from point clouds requires novel operators and networks to handle irregular point data, and detection from both point clouds and images needs special fusion mechanisms. (2) 3D object detection methods normally leverage distinct projected views to generate object predictions. As opposed to 2D object detection methods that detect objects from the perspective view, 3D methods have to consider different views to detect 3D objects, e.g. from the bird's-eye view, point view, and cylindrical view. (3) 3D object detection has a high demand for accurate localization of objects in the 3D space. A decimeter-level localization error can lead to a detection failure of small objects such as pedestrians and cyclists, while in 2D object detection, a localization error of several pixels may still maintain a high Intersection over Union (IoU) between predicted and ground truth bounding boxes. Hence accurate 3D geometric information is indispensable for 3D object detection from either point clouds or images.
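Point (3) can be checked with a little arithmetic. The sketch compares the IoU left after the same 0.3 m offset on a pedestrian-sized and a car-sized bird's-eye-view footprint, and after a 3-pixel offset on a 2D image box. The boxes are treated as axis-aligned for simplicity and the sizes are hypothetical round numbers; for reference, KITTI's commonly used thresholds are 0.7 IoU for cars and 0.5 for pedestrians and cyclists.

```python
def iou_axis_aligned(a, b):
    """IoU of two axis-aligned rectangles given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap along x
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap along y
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def shifted(box, dx):
    """Translate a box by dx along its first axis (a pure localization error)."""
    return (box[0] + dx, box[1], box[2] + dx, box[3])


pedestrian_bev = (0.0, 0.0, 0.8, 0.6)  # metres, hypothetical BEV footprint
car_bev = (0.0, 0.0, 4.0, 1.8)         # metres, hypothetical BEV footprint
car_image = (0.0, 0.0, 60.0, 150.0)    # pixels, hypothetical 2D image box

print(iou_axis_aligned(pedestrian_bev, shifted(pedestrian_bev, 0.3)))
print(iou_axis_aligned(car_bev, shifted(car_bev, 0.3)))
print(iou_axis_aligned(car_image, shifted(car_image, 3.0)))
```

With these numbers the pedestrian IoU drops to about 0.45, below 0.5, so a 3-decimeter error turns the prediction into a miss, while the car footprint keeps about 0.86 and the image box about 0.90.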

Analysis: comparisons with indoor 3D object detection. There is also a branch of works (Qi et al., 2018, 2019, 2020; Liu et al., 2021d) on 3D object detection in indoor scenarios. Indoor datasets, e.g. ScanNet (Dai et al., 2017), SUN RGB-D (Song et al., 2015), provide 3D structures of rooms reconstructed from RGB-D sensors and 3D annotations including doors, windows, beds, chairs, etc. 3D object detection in indoor scenes is also based on point clouds or images. However, compared to indoor 3D object detection, there are unique challenges of detection in driving scenarios. (1) Point cloud distributions from LiDAR and RGB-D sensors are different. In indoor scenes, points are relatively uniformly distributed on the scanned surfaces and most 3D objects receive a sufficient number of points on their surfaces. However, in driving scenes most points fall in a near neighborhood of the LiDAR sensor, and those 3D objects that are far away from the sensor will receive only a few points. Thus methods in driving scenarios are specially required to handle various point cloud densities of 3D objects and accurately detect those faraway and sparse objects. (2) Detection in driving scenarios has a special demand for inference latency. Perception in driving scenes has to be real-time to avoid accidents. Hence those methods are required to be computationally efficient, otherwise they will not be applied in real-world applications.
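The density argument in point (1) follows from simple geometry: a target of fixed size subtends an angle that shrinks with distance, so the number of beams and columns that hit it falls roughly as $1/d^2$. The sketch assumes hypothetical angular resolutions (0.2 degrees horizontal, 0.4 degrees vertical), uniformly spaced beams, and a flat target facing the sensor; real sensors differ, but the scaling is the point. `approx_num_points` is a hypothetical helper.

```python
import math


def approx_num_points(width_m, height_m, dist_m, h_res_deg=0.2, v_res_deg=0.4):
    """Rough number of LiDAR returns on a flat target facing the sensor.

    The target subtends about width/dist and height/dist radians (small-angle
    approximation). Dividing by the angular resolutions gives how many columns
    and beams hit it. The default resolutions are hypothetical.
    """
    cols = (width_m / dist_m) / math.radians(h_res_deg)
    rows = (height_m / dist_m) / math.radians(v_res_deg)
    return int(cols * rows)


# A car-sized side face (4.0 m x 1.5 m) at increasing distances.
for d in (10, 20, 40, 80):
    print(d, approx_num_points(4.0, 1.5, d))
```

Each doubling of distance cuts the estimate by about a factor of four, from roughly 2,400 returns at 10 m to a few dozen at 80 m, which is the "faraway and sparse" regime driving-scene detectors must handle.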

2.2 Datasets

A large number of driving datasets have been built to provide multi-modal sensory data and 3D annotations for 3D object detection. Table 1 lists the datasets that collect data in driving scenarios and provide 3D cuboid annotations. KITTI (Geiger et al., 2012) is a pioneering work that proposes a standard data collection and annotation paradigm: equipping a vehicle with cameras and LiDAR sensors, driving the vehicle on roads for data collection, and annotating 3D objects from the collected data. The following works made improvements mainly from 4 aspects. (1) Increasing the scale of data. Compared to Geiger et al. (2012), the recent large-scale datasets (Sun et al., 2020c; Caesar et al., 2020; Mao et al., 2021b) have more than 10x point clouds, images and annotations. (2) Improving the diversity of data. Geiger et al. (2012) only contains driving data obtained in the daytime and in good weather, while recent datasets (Choi et al., 2018; Chang et al., 2019; Pham et al., 2020; Caesar et al., 2020; Sun et al., 2020c; Xiao et al., 2021; Mao et al., 2021b; Wilson et al., 2021) provide data captured at night or on rainy days. (3) Providing more annotated categories. Some datasets (Liao et al., 2021; Xiao et al., 2021; Geyer et al., 2020; Wilson et al., 2021; Caesar et al., 2020) can provide more fine-grained object classes, including animals, barriers, traffic cones, etc. They also provide fine-grained sub-categories of existing classes, e.g. the adult and child category of the existing pedestrian class in Caesar et al. (2020). (4) Providing data of more modalities. In addition to images and point clouds, recent datasets provide more data types, including high-definition maps (Kesten et al., 2019; Chang et al., 2019; Sun et al., 2020c; Wilson et al., 2021), radar data (Caesar et al., 2020), long-range LiDAR data (Weng et al., 2020; Wang et al., 2021j), thermal images (Choi et al., 2018).

Analysis: future prospects of driving datasets. The research community has witnessed an explosion of datasets for 3D object detection in autonomous driving scenarios. A subsequent question may be asked: what will the next-generation autonomous driving datasets look like? Considering the fact that 3D object detection is not an independent task but a component in driving systems, we propose that future datasets will include all important tasks in autonomous driving: perception, prediction, planning, and mapping, as a whole and in an end-to-end manner, so that the development and evaluation of 3D object detection methods will be considered from an overall and systematic view. There are some datasets (Sun et al., 2020c; Caesar et al., 2020; Yogamani et al., 2019) working towards this goal.

2.3 Evaluation Metrics

Various evaluation metrics have been proposed to measure the performance of 3D object detection methods. Those evaluation metrics can be divided into two categories. The first category tries to extend the Average Precision (AP) metric (Lin et al., 2014) in 2D object detection to the 3D space:

$$AP = \int_0^1 \max\{p(\tilde{r}) \mid \tilde{r} \ge r\} \, dr$$

where $p(r)$ is the precision-recall curve, the same as in Lin et al. (2014). The major difference with the 2D AP metric lies in the matching criterion between ground truths and predictions when calculating precision and recall. KITTI (Geiger et al., 2012) proposes two widely-used AP metrics, $AP_{3D}$ and $AP_{BEV}$, where $AP_{3D}$ matches the predicted objects to the respective ground truths if the 3D Intersection over Union (3D IoU) of two cuboids is above a certain threshold, and $AP_{BEV}$ is based on the IoU of two cuboids from the bird's-eye view (BEV IoU). NuScenes (Caesar et al., 2020) proposes $AP_{center}$, where a predicted object is matched to a ground truth object if the distance of their center locations is below a certain threshold, and NuScenes Detection Score (NDS) is further proposed to take both $AP_{center}$ and the error of other parameters, i.e. size, heading, velocity, into consideration. Waymo (Sun et al., 2020c) proposes $AP_{hungarian}$ that applies the Hungarian algorithm to match the ground truths and predictions, and AP weighted by Heading (APH) is proposed to incorporate heading errors as a coefficient into the AP calculation.
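Below is a simplified sketch of the AP computation, using nuScenes-style center-distance matching (greedy, in descending confidence) and the interpolated precision from the formula above. It is not the official KITTI, nuScenes, or Waymo evaluation code: it handles one class and one threshold, and all names are hypothetical. Two related pieces are included. `nds` follows the definition in the nuScenes paper, $NDS = \frac{1}{10}\left[5\,mAP + \sum_{mTP}(1 - \min(1, mTP))\right]$ over the five mean true-positive errors (translation, scale, orientation, velocity, attribute). `heading_weight` computes $1 - \Delta\theta/\pi$ with wrap-around, which is our reading of how Waymo's APH down-weights each true positive by its heading error.

```python
import numpy as np


def average_precision(pred_centers, scores, gt_centers, dist_thresh=2.0):
    """Single-class AP with greedy center-distance matching (simplified).

    pred_centers: (P, 2) predicted BEV centers, scores: (P,) confidences,
    gt_centers: (G, 2) ground-truth BEV centers, all in metres.
    """
    order = np.argsort(-scores)                 # highest confidence first
    matched = np.zeros(len(gt_centers), dtype=bool)
    tp = np.zeros(len(order))
    for rank, i in enumerate(order):
        if len(gt_centers) == 0:
            break
        d = np.linalg.norm(gt_centers - pred_centers[i], axis=1)
        d[matched] = np.inf                     # each ground truth matches once
        j = int(np.argmin(d))
        if d[j] < dist_thresh:
            matched[j] = True
            tp[rank] = 1.0
    cum_tp = np.cumsum(tp)
    recall = cum_tp / max(len(gt_centers), 1)
    precision = cum_tp / np.arange(1, len(order) + 1)
    # Interpolated precision: max precision at any recall >= r (running max from the right).
    interp = np.maximum.accumulate(precision[::-1])[::-1]
    # Integrate the step curve: recall increments times interpolated precision.
    prev_recall = np.concatenate([[0.0], recall[:-1]])
    return float(np.sum((recall - prev_recall) * interp))


def nds(mean_ap, tp_errors):
    """nuScenes Detection Score from mAP and the five mean TP errors."""
    return (5.0 * mean_ap + sum(1.0 - min(1.0, e) for e in tp_errors)) / 10.0


def heading_weight(pred_theta, gt_theta):
    """1 for a perfect heading, 0 for a heading that is off by pi."""
    diff = abs(pred_theta - gt_theta) % (2 * np.pi)
    return 1.0 - min(diff, 2 * np.pi - diff) / np.pi


gt = np.array([[0.0, 0.0], [10.0, 0.0]])
preds = np.array([[0.5, 0.0], [30.0, 0.0], [10.0, 1.0]])
conf = np.array([0.9, 0.8, 0.7])
print(average_precision(preds, conf, gt))
print(nds(0.5, [0.3, 0.25, 0.4, 1.2, 0.2]))
```

Replacing the distance check with a 3D or BEV IoU test gives a KITTI-style criterion, and replacing the greedy loop with an optimal assignment (for instance `scipy.optimize.linear_sum_assignment`) gives Hungarian matching in the spirit of $AP_{hungarian}$.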

The other category of approaches tries to resolve the evaluation problem from a more practical perspective. The idea is that the quality of 3D object detection should be relevant to the downstream task, i.e. motion planning, so that the best detection methods should be most helpful to planners to ensure the safety of driving in practical applications. Toward this goal, PKL (Philion et al., 2020) measures the detection quality using the KL-divergence of the ego vehicle's future planned states based on the predicted and ground truth detections respectively. SDE Deng et al. (2021a) leverages the minimal distance from the object boundary to the ego vehicle as the support distance and measures the support distance error.
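The support-distance idea behind SDE can be sketched as follows. As a simplification, the ego vehicle is treated as a single point at the origin and the object boundary is taken to be its BEV box rather than a reconstructed contour; the box tuple format and function names are hypothetical.

```python
import math


def bev_box_distance(cx, cy, l, w, theta, px=0.0, py=0.0):
    """Minimal distance from point (px, py) to a rotated BEV rectangle (0 if inside)."""
    # Express the point in the box's local frame (rotate by -theta).
    dx, dy = px - cx, py - cy
    c, s = math.cos(theta), math.sin(theta)
    lx = c * dx + s * dy
    ly = -s * dx + c * dy
    # How far the point lies outside the half-extents along each local axis.
    ox = max(abs(lx) - l / 2, 0.0)
    oy = max(abs(ly) - w / 2, 0.0)
    return math.hypot(ox, oy)


def support_distance_error(pred_box, gt_box):
    """|SD(pred) - SD(gt)| with the ego vehicle simplified to the origin."""
    return abs(bev_box_distance(*pred_box) - bev_box_distance(*gt_box))


gt = (10.0, 0.0, 4.0, 1.8, 0.0)    # (cx, cy, l, w, theta): a car 10 m ahead
pred = (10.5, 0.0, 4.0, 1.8, 0.0)  # the same car predicted 0.5 m further away
print(support_distance_error(pred, gt))
```

Unlike IoU, this error depends only on the nearest boundary, so a prediction that is badly wrong on the far side of an object but correct on the near side is not penalized, which matches the safety motivation described above.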

Analysis: pros and cons of different evaluation metrics. AP-based evaluation metrics (Geiger et al., 2012; Caesar et al., 2020; Sun et al., 2020c) can naturally inherit the advantages from 2D detection. However, those metrics overlook the influence of detection on safety issues, which are also critical in real-world applications. For instance, a misdetection of an object near the ego vehicle and far away from the ego vehicle may receive a similar level of punishment in AP calculation, but a misdetection of nearby objects is substantially more dangerous than a misdetection of faraway objects in practical applications. Thus AP-based metrics may not be the optimal solution from the perspective of safe driving. PKL Philion et al. (2020) and SDE Deng et al. (2021a) partly resolve the problem by considering the effects of detection in downstream tasks, but additional challenges will be introduced when modeling those effects. PKL Philion et al. (2020) requires a pre-trained motion planner for evaluating the detection performance, but a pre-trained planner also has innate errors that could make the evaluation process inaccurate. SDE Deng et al. (2021a) requires reconstructing object boundaries which is generally complicated and challenging.

(2) Translation

2.1 What is 3D Object Detection?

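Problem definition. 3D object detection aims to predict the bounding boxes of 3D objects in driving scenes from sensory inputs. The general formulation of 3D object detection can be written as

$$\mathcal{B} = f_{det}(\mathcal{I}_{sensor})$$

where $\mathcal{B} = \{B_1, \cdots, B_N\}$ is the set of $N$ 3D objects in a scene, $f_{det}$ is the 3D object detection model, and $\mathcal{I}_{sensor}$ is one or more sensory inputs. How to represent a 3D object $B_i$ is a key problem in this task, because it determines what 3D information is provided to the subsequent prediction and planning steps. In most cases, a 3D object is represented as a 3D cuboid enclosing it, i.e.

$$B_i = [x_c, y_c, z_c, l, w, h, \theta, class]$$

where $(x_c, y_c, z_c)$ is the 3D center coordinate of the cuboid, $l, w, h$ are its length, width, and height, $\theta$ is the heading angle (i.e. the yaw angle) of the cuboid on the ground plane, and $class$ is the category of the 3D object, e.g. car, truck, pedestrian, cyclist. Caesar et al. (2020) additionally use the parameters $v_x$ and $v_y$, which describe the velocity of a 3D object along the x and y axes on the ground.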

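Sensory inputs. Many types of sensors can provide raw data for 3D object detection. Among them, radars, cameras, and LiDAR (Light Detection And Ranging) sensors are the three most widely used. Radars have a long detection range and are robust to different weather conditions, and thanks to the Doppler effect they can provide additional velocity measurements. Cameras are cheap and easy to obtain, and are crucial for understanding semantics, e.g. the type of a traffic sign. Cameras produce images $I_{cam} \in \mathbb{R}^{W \times H \times 3}$ for 3D object detection, where $W$ and $H$ are the width and height of the image and each pixel has 3 RGB channels. Although cheap, cameras have intrinsic limitations for 3D object detection. First, cameras only capture appearance information and cannot directly obtain the 3D structure of a scene. Second, 3D object detection usually requires accurate localization in 3D space, while 3D information estimated from images, such as depth, usually has large errors. In addition, image-based detection is generally vulnerable to extreme weather and time-of-day conditions. Detecting objects in images taken at night or in fog is much harder than on sunny days, which makes it challenging to achieve the robustness autonomous driving requires.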

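As an alternative, LiDAR sensors can obtain the fine-grained 3D structure of a scene by emitting laser beams and measuring their reflections. A LiDAR sensor that emits $m$ beams and takes $n$ measurements in one scan cycle produces a range image $I_{range} \in \mathbb{R}^{m \times n \times 3}$, where each pixel contains the range $r$, azimuth $\alpha$, and inclination $\phi$ in the spherical coordinate system, as well as the reflective intensity. Range images are the raw data format produced by LiDAR sensors, and they can be converted into point clouds by transforming spherical coordinates into Cartesian coordinates. A point cloud can be represented as $I_{point} \in \mathbb{R}^{N \times 3}$, where $N$ is the number of points in the scene and each point has 3 channels of xyz coordinates. Both range images and point clouds contain accurate 3D information acquired directly by the LiDAR sensor. Hence, compared with cameras, LiDAR sensors are better suited to detecting objects in 3D space, and they are also less affected by changes in time of day and weather. However, LiDAR sensors are much more expensive than cameras, which may limit their use in driving scenarios. An illustration of 3D object detection is shown in Fig. 2.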

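Analysis: comparison with 2D object detection. 2D object detection, which aims to generate 2D bounding boxes on images, is a fundamental problem in computer vision. 3D object detection methods borrow many design paradigms from their 2D counterparts: proposal generation and refinement, anchors, non-maximum suppression, etc. However, in many respects 3D object detection is not a naive adaptation of 2D detection methods to 3D space. (1) 3D object detection methods must handle heterogeneous data representations. Detection from point clouds requires novel operators and networks to handle irregular point data, and detection from both point clouds and images requires special fusion mechanisms. (2) 3D object detection methods usually use different projected views to generate object predictions. Unlike 2D methods, which detect objects from the perspective view, 3D methods have to consider different views, e.g. the bird's-eye view, point view, and cylindrical view. (3) 3D object detection places high demands on accurate localization in 3D space. A decimeter-level localization error can cause the detection of small objects such as pedestrians and cyclists to fail, whereas in 2D object detection a localization error of several pixels may still keep a high Intersection over Union (IoU) between the predicted and ground-truth boxes. Accurate 3D geometric information is therefore indispensable for 3D object detection from either point clouds or images.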

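Analysis: comparison with indoor 3D object detection. There is also a line of work on 3D object detection in indoor scenes (Qi et al., 2018, 2019, 2020; Liu et al., 2021d). Indoor datasets, e.g. ScanNet (Dai et al., 2017) and SUN RGB-D (Song et al., 2015), provide 3D room structures reconstructed from RGB-D sensors together with 3D annotations of objects such as doors, windows, beds, and chairs. Indoor 3D object detection is also based on point clouds or images. Compared with indoor detection, however, detection in driving scenes faces unique challenges. (1) Point clouds from LiDAR and RGB-D sensors are distributed differently. In indoor scenes, points are distributed relatively uniformly over the scanned surfaces, and most 3D objects receive enough points on their surfaces. In driving scenes, most points fall in the near neighborhood of the LiDAR sensor, and 3D objects far from the sensor receive only a few points. Methods for driving scenes must therefore handle the varying point densities of 3D objects and accurately detect distant, sparse objects. (2) Detection in driving scenes has special requirements on inference latency. Perception in driving scenes must run in real time to avoid accidents, so these methods must be computationally efficient; otherwise they cannot be deployed in real-world applications.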

2.2 Datasets

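Many driving datasets have been built to provide multi-modal sensory data and 3D annotations for 3D object detection. Table 1 lists datasets that collect data in driving scenes and provide 3D cuboid annotations. KITTI (Geiger et al., 2012) is a pioneering work that established a standard paradigm for data collection and annotation: equip a vehicle with cameras and LiDAR sensors, drive it on roads to collect data, and annotate 3D objects in the collected data. Later works improved on it mainly in 4 respects. (1) Larger data scale. Compared with Geiger et al. (2012), recent large-scale datasets (Sun et al., 2020c; Caesar et al., 2020; Mao et al., 2021b) have more than 10x as many point clouds, images, and annotations. (2) Greater data diversity. Geiger et al. (2012) only contains driving data collected in the daytime and in good weather, while recent datasets (Choi et al., 2018; Chang et al., 2019; Pham et al., 2020; Caesar et al., 2020; Sun et al., 2020c; Xiao et al., 2021; Mao et al., 2021b; Wilson et al., 2021) provide data captured at night or on rainy days. (3) More annotated categories. Some datasets (Liao et al., 2021; Xiao et al., 2021; Geyer et al., 2020; Wilson et al., 2021; Caesar et al., 2020) provide more fine-grained object classes, including animals, barriers, and traffic cones. They also provide fine-grained sub-categories of existing classes, e.g. the adult and child sub-categories of the pedestrian class in Caesar et al. (2020). (4) More data modalities. Besides images and point clouds, recent datasets provide more data types, including high-definition maps (Kesten et al., 2019; Chang et al., 2019; Sun et al., 2020c; Wilson et al., 2021), radar data (Caesar et al., 2020), long-range LiDAR data (Weng et al., 2020; Wang et al., 2021j), and thermal images (Choi et al., 2018).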

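Analysis: future prospects of driving datasets. The research community has witnessed an explosion of 3D object detection datasets for autonomous driving. A natural follow-up question is: what will next-generation autonomous driving datasets look like? Since 3D object detection is not an independent task but a component of a driving system, we expect future datasets to cover all the important tasks in autonomous driving (perception, prediction, planning, and mapping) as a whole and in an end-to-end manner, so that 3D object detection methods are developed and evaluated from an overall, systematic perspective. Some datasets (Sun et al., 2020c; Caesar et al., 2020; Yogamani et al., 2019) are already working toward this goal.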

2.3 Evaluation Metrics

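Various evaluation metrics have been proposed to measure the performance of 3D object detection methods, and they fall into two categories. The first category extends the Average Precision (AP) metric of 2D object detection (Lin et al., 2014) to 3D space:

$$AP = \int_0^1 \max\{p(\tilde{r}) \mid \tilde{r} \ge r\} \, dr$$

where $p(r)$ is the precision-recall curve, as in Lin et al. (2014). The main difference from the 2D AP metric lies in the criterion for matching ground truths to predictions when computing precision and recall. KITTI (Geiger et al., 2012) proposes two widely used AP metrics, $AP_{3D}$ and $AP_{BEV}$: $AP_{3D}$ matches a predicted object to a ground truth if the 3D IoU of their cuboids exceeds a threshold, and $AP_{BEV}$ uses the IoU of the two cuboids in the bird's-eye view (BEV IoU). nuScenes (Caesar et al., 2020) proposes $AP_{center}$, which matches a prediction to a ground truth if the distance between their centers is below a threshold, and further proposes the nuScenes Detection Score (NDS), which accounts for both $AP_{center}$ and the errors of other parameters, i.e. size, heading, and velocity. Waymo (Sun et al., 2020c) proposes $AP_{hungarian}$, which uses the Hungarian algorithm to match ground truths and predictions, and AP weighted by Heading (APH), which incorporates heading errors as a coefficient in the AP calculation.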

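The other category approaches evaluation from a more practical perspective. The idea is that the quality of 3D object detection should be tied to the downstream task, i.e. motion planning, so the best detection method is the one that most helps the planner keep driving safe in practice. To this end, PKL (Philion et al., 2020) measures detection quality by the KL divergence between the ego vehicle's future planned states based on the predicted detections and those based on the ground-truth detections. SDE (Deng et al., 2021a) takes the minimum distance from the object boundary to the ego vehicle as the support distance and measures the support distance error.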

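Analysis: pros and cons of different evaluation metrics. AP-based metrics (Geiger et al., 2012; Caesar et al., 2020; Sun et al., 2020c) naturally inherit the advantages of 2D detection. However, they ignore the influence of detection on safety, which is also critical in real-world applications. For example, missing an object near the ego vehicle and missing one far from it may receive a similar penalty in AP, yet in practice missing a nearby object is much more dangerous than missing a distant one. From the perspective of safe driving, AP-based metrics may therefore not be optimal. PKL (Philion et al., 2020) and SDE (Deng et al., 2021a) partly address this by considering the effect of detection on downstream tasks, but modeling these effects introduces new challenges. PKL requires a pre-trained motion planner to evaluate detection performance, but the pre-trained planner has its own inherent errors that can make the evaluation inaccurate. SDE requires reconstructing object boundaries, which is generally complicated and challenging.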

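(3) Understanding

3D object detection can be viewed as the output of a function: the function is the detection model, its input is data from various sensors such as LiDAR and cameras, and its output is a set of N 3D objects. Each 3D object has eight parameters: the center coordinates (x, y, z), the length, width, and height l, w, h, the heading (yaw) angle θ on the ground plane, and the object category class.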

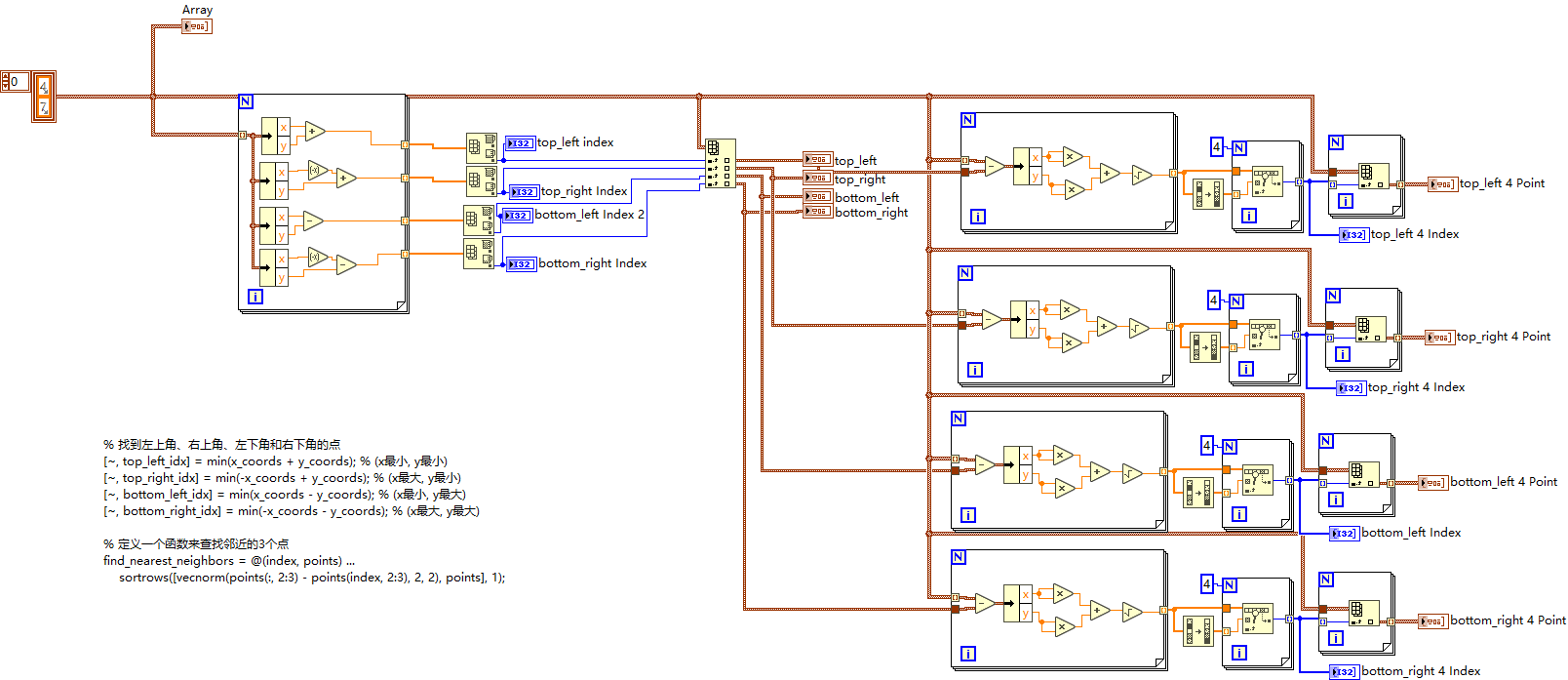

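This figure clearly shows the different sensors and the form of the data each one produces. A camera, the sensor we use most often, produces flat 2D images. A LiDAR scans periodically to produce a range image; converting the range image's spherical coordinates into Cartesian coordinates yields the point cloud shown, which usually consists of a large number of points.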

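2D object detection usually only needs to mark where an object is in the image and ignores its real-world orientation and height, whereas 3D object detection has to account for both.

As the figure shows, a 3D bounding box has more parameters than a 2D one, including the object's height and heading angle.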

To be continued...