通常成功的大门,其实都是虚掩着的

现在大多在爬取微博时,都是采用selenium框架,爬取pc端微博页面,模拟鼠标下拉来解决动态加载的问题,虽然笨拙,但是也能解决问题。今天我给大家推荐个更加好的方法。首先清看下面两个url对应的页面有什么不同?

https://weibo.cn/1195354434 (手机触屏版新浪微博林俊杰个人主页的url)

https://m.weibo.cn/u/1195354434 (手机端新浪微博林俊杰个人主页的url)

https://weibo.com/jjlin (PC端新浪微博林俊杰个人主页的url)https://weibo.com/1195354434这两个url一样

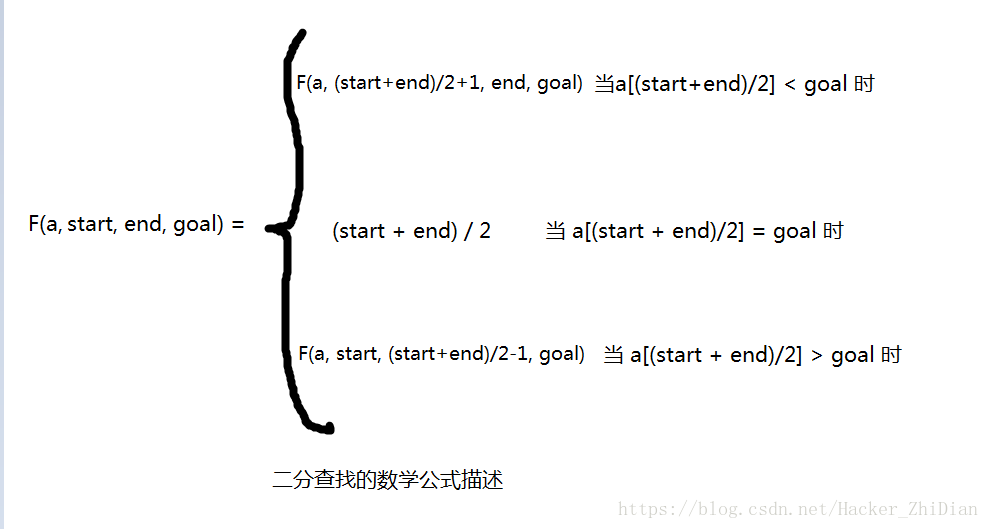

相信看到这里,应该可以想到我们使用第一类的url去爬取数据应该更好,看下图,翻看微博所有内容只需要更改url里面的参数即可,相信到这里大家都会了吧,爬取微博的url也是虚掩着的,只是你没发现

代码大部分拷贝而来(做了优化改进),请看原文[Python3爬虫]爬取新浪微博用户信息及微博内容

"""

@author: cht

@time: 2019/12/7 17:33

"""# -*- coding: utf-8 -*-import time

import csv

from bs4 import BeautifulSoup

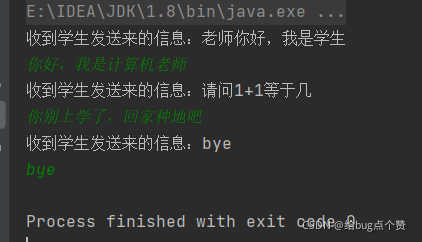

from selenium import webdriverclass NEW_weibo():def Login(self,id,username,password):try:print(u'登陆新浪微博手机端...')browser = webdriver.Chrome()url = 'https://passport.weibo.cn/signin/login'browser.get(url)time.sleep(3)usernameFlag = browser.find_element_by_css_selector('#loginName')time.sleep(2)usernameFlag.clear()usernameFlag.send_keys(username)passwordFlag = browser.find_element_by_css_selector('#loginPassword')time.sleep(2)passwordFlag.send_keys(password)print('# 点击登录')browser.find_element_by_css_selector('#loginAction').click()##这里给个15秒非常重要,因为在点击登录之后,新浪微博会有个九宫格验证码,下图有,通过程序执行的话会有点麻烦(可以参考崔庆才的Python书里面有解决方法),这里就手动,但是我还没遇到验证码问题time.sleep(15)except Exception as e:print(e)print('---------------登录Error---------------------')print('完成登陆!')try:print("爬取指定id微博用户信息")# id = '1195354434'# 用户的url结构为 url = 'http://weibo.cn/' + idurl = 'http://weibo.cn/' + idbrowser.get(url)time.sleep(3)# 使用BeautifulSoup解析网页的HTMLsoup = BeautifulSoup(browser.page_source, 'lxml')# 提取商户的uid信息uid = soup.find('td', attrs={'valign': 'top'})uid = uid.a['href']uid = uid.split('/')[1]# 提取最大页码数目pageSize = soup.find('div', attrs={'id': 'pagelist'})pageSize = pageSize.find('div').getText()Max_pageSize = (pageSize.split('/')[1]).split('页')[0]# 提取微博数量divMessage = soup.find('div', attrs={'class': 'tip2'})weiBoCount = divMessage.find('span').getText()weiBoCount = (weiBoCount.split('[')[1]).replace(']', '')# 提取关注数量和粉丝数量a = divMessage.find_all('a')[:2]FolloweCount = (a[0].getText().split('[')[1]).replace(']', '')FollowersCount = (a[1].getText().split('[')[1]).replace(']', '')print("微博页数:%s"%Max_pageSize)print("微博数目:%s"%weiBoCount)print("关注数目:%s"%FolloweCount)print("粉丝数目:%s"%FollowersCount)except Exception as e:print(e)# 通过循环来抓取每一页数据try:csv_file = open('./linjunjie.csv', "w", encoding='utf-8')csv_writer = csv.writer(csv_file)for i in range(1, 31): # Max_pageSize+1# 每一页数据的url结构为 url = 'http://weibo.cn/' + id + ‘?page=’ + inew_url = url + '?page=' + str(i)browser.get(new_url)time.sleep(1)# 使用BeautifulSoup解析网页的HTMLsoup = BeautifulSoup(browser.page_source, 'lxml')body = soup.find('body')divss = body.find_all('div', attrs={'class': 'c'})[1:-2]for divs in divss:# yuanChuang : 0表示转发,1表示原创yuanChuang = '1' # 初始值为原创,当非原创时,更改此值div = divs.find_all('div')# 这里有三种情况,两种为原创,一种为转发if (len(div) == 2): # 原创,有图# 爬取微博内容content = div[0].find('span', attrs={'class': 'ctt'}).getText()aa = div[1].find_all('a')for a in aa:text = a.getText()try:if (('赞' in text) or ('转发' in text) or ('评论' in text)):# 爬取点赞数if ('赞' in text):likes = (text.split('[')[1]).replace(']', '')# 爬取转发数elif ('转发' in text):forward = (text.split('[')[1]).replace(']', '')# 爬取评论数目elif ('评论' in text):comments = (text.split('[')[1]).replace(']', '')# 爬取微博来源和时间span = divs.find('span', attrs={'class': 'ct'}).getText()releaseTime = str(span.split('来自')[0])tool = span.split('来自')[1]except Exception as e:print("第%s页微博出错了:%s" % (i, e))continue# 和上面一样elif (len(div) == 1): # 原创,无图content = div[0].find('span', attrs={'class': 'ctt'}).getText()aa = div[0].find_all('a')try:for a in aa:text = a.getText()if (('赞' in text) or ('转发' in text) or ('评论' in text)):if ('赞' in text):likes = (text.split('[')[1]).replace(']', '')elif ('转发' in text):forward = (text.split('[')[1]).replace(']', '')elif ('评论' in text):comments = (text.split('[')[1]).replace(']', '')span = divs.find('span', attrs={'class': 'ct'}).getText()releaseTime = str(span.split('来自')[0])tool = span.split('来自')[1]except Exception as e:print("第%s页微博出错了:%s" % (i, e))continue# 这里为转发,其他和上面一样elif (len(div) == 3): # 转发的微博yuanChuang = '0'content = div[0].find('span', attrs={'class': 'ctt'}).getText()aa = div[2].find_all('a')try:for a in aa:text = a.getText()if (('赞' in text) or ('转发' in text) or ('评论' in text)):if ('赞' in text):likes = (text.split('[')[1]).replace(']', '')elif ('转发' in text):forward = (text.split('[')[1]).replace(']', '')elif ('评论' in text):comments = (text.split('[')[1]).replace(']', '')span = divs.find('span', attrs={'class': 'ct'}).getText()releaseTime = str(span.split('来自')[0])tool = span.split('来自')[1]except Exception as e:print("第%s页微博出错了:%s" % (i, e))continueprint("发布时间:%s"%releaseTime)print("内容:%s"%content)weibocontent = [releaseTime,content,likes,forward,comments,tool]csv_writer.writerow(weibocontent)time.sleep(2)print("第%s页内容爬取完成"%i)finally:csv_file.close()if __name__ == '__main__':wb = NEW_weibo()username = "" #微博账号password = "" #微博密码id = '1195354434'#每个微博用户都有一个固定的id,这个是林俊杰id,如果不知道id怎么找,只要打开F12,对应的个人微博主页的url就会变化带有id了wb.Login(id,username,password)爬取的内容保存为csv文件

接下来还用一种爬取微博评论内容的爬虫,具体实现方式参考《利用Python分析《庆余年》人物图谱和微博传播路径》