Meaning Space and Semantic Laws of Motion


We discussed above that inside ChatGPT any piece of text is effectively represented by an array of numbers that we can think of as coordinates of a point in some kind of “linguistic feature space”. So when ChatGPT continues a piece of text this corresponds to tracing out a trajectory in linguistic feature space. But now we can ask what makes this trajectory correspond to text we consider meaningful. And might there perhaps be some kind of “semantic laws of motion” that define—or at least constrain—how points in linguistic feature space can move around while preserving “meaningfulness”?


So what is this linguistic feature space like? Here’s an example of how single words (here, common nouns) might get laid out if we project such a feature space down to 2D:


We saw another example above based on words representing plants and animals. But the point in both cases is that “semantically similar words” are placed nearby.


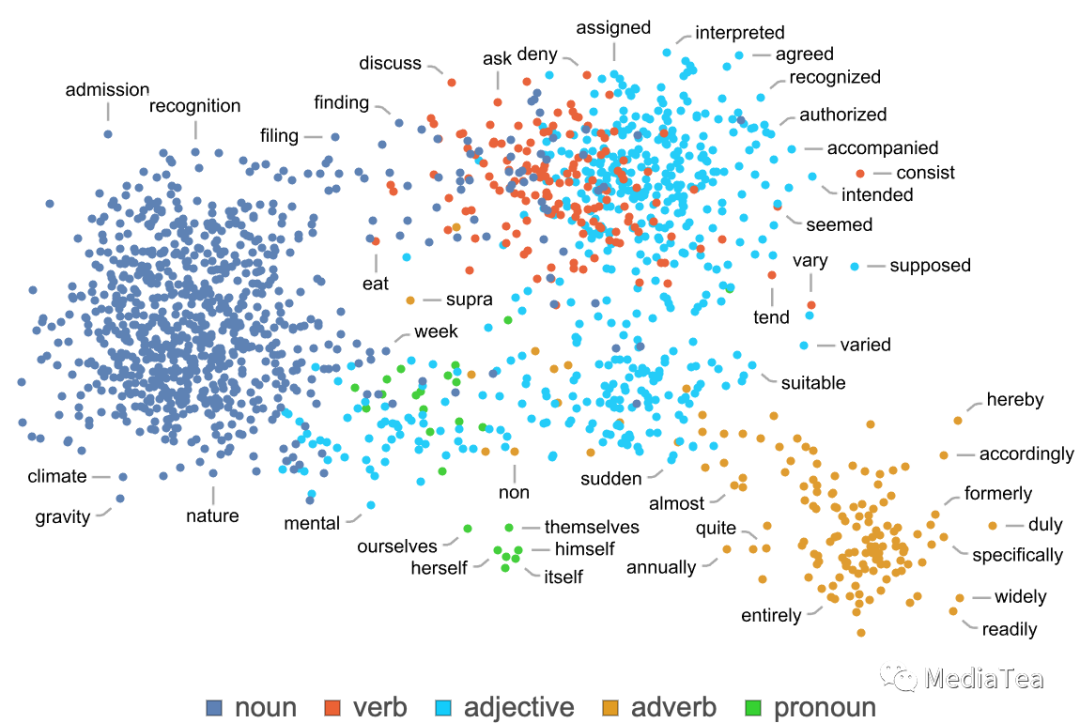
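To make the idea of projecting a feature space down to 2D concrete, here is a minimal sketch. It assumes principal component analysis (PCA) as the projection method, which the text does not specify, and it uses made-up 4-dimensional vectors in place of real embeddings. The names `toy_embeddings` and `pca_2d` are illustrative only.

```python
import math
import random

# Hypothetical 4-dimensional "embeddings", invented for illustration.
# Real embeddings have hundreds or thousands of dimensions.
toy_embeddings = {
    "cat":    [1.0, 0.9, 0.0, 0.1],
    "dog":    [0.9, 1.0, 0.1, 0.0],
    "apple":  [0.0, 0.1, 1.0, 0.9],
    "banana": [0.1, 0.0, 0.9, 1.0],
}


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def pca_2d(vectors, iters=100, seed=0):
    """Project vectors onto their top two principal components.

    Uses power iteration with deflation (an assumed, simple method).
    """
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    centered = [[v[j] - mean[j] for j in range(d)] for v in vectors]
    # Covariance matrix of the centered data.
    cov = [[sum(r[i] * r[j] for r in centered) / n for j in range(d)]
           for i in range(d)]
    rng = random.Random(seed)
    axes = []
    for _ in range(2):
        w = [rng.uniform(-1.0, 1.0) for _ in range(d)]
        for _ in range(iters):
            # Remove any part of w that lies along axes already found.
            for axis in axes:
                overlap = dot(w, axis)
                w = [a - overlap * b for a, b in zip(w, axis)]
            w = [dot(row, w) for row in cov]
            norm = math.sqrt(dot(w, w))
            w = [a / norm for a in w]
        axes.append(w)
    return [[dot(r, axis) for axis in axes] for r in centered]


words = list(toy_embeddings)
points_2d = dict(zip(words, pca_2d([toy_embeddings[w] for w in words])))

for word, (x, y) in points_2d.items():
    print(f"{word:>6}: ({x:+.2f}, {y:+.2f})")

# Distances in the 2D picture: a similar pair versus a dissimilar pair.
print(math.dist(points_2d["cat"], points_2d["dog"]))
print(math.dist(points_2d["cat"], points_2d["apple"]))
```

In this toy setup, `cat` and `dog` land close together and far from `apple` and `banana`, which is the sense in which “semantically similar words” are placed nearby. The sign of each axis depends on the random starting vector, so only relative positions are meaningful.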

As another example, here’s how words corresponding to different parts of speech get laid out:


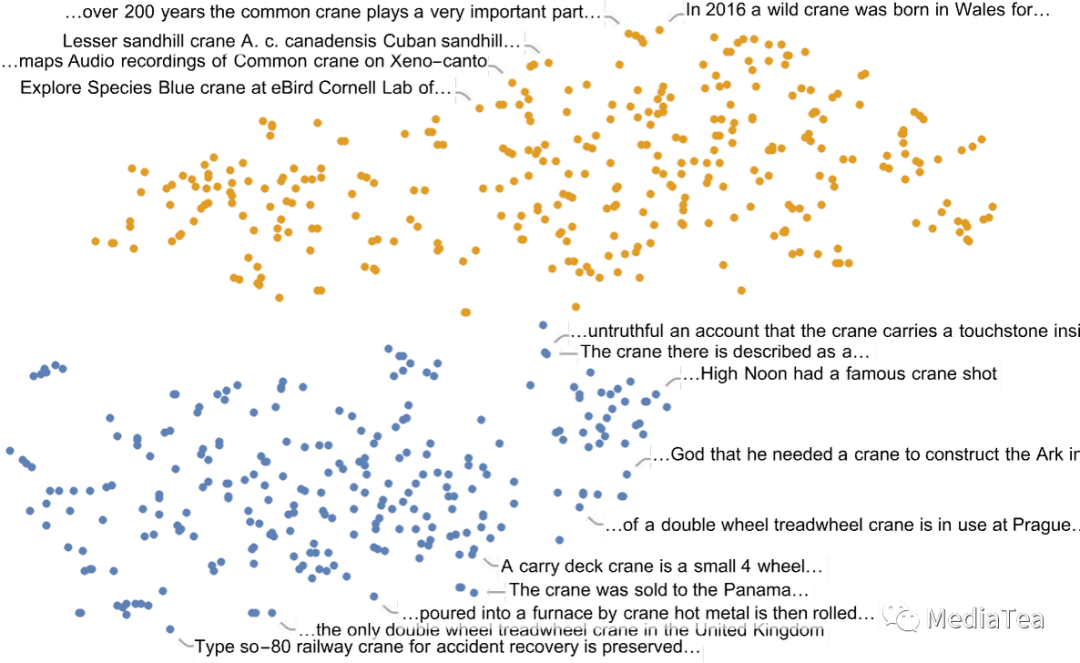

Of course, a given word doesn’t in general just have “one meaning” (or necessarily correspond to just one part of speech). And by looking at how sentences containing a word lay out in feature space, one can often “tease apart” different meanings—as in the example here for the word “crane” (bird or machine?):


OK, so it’s at least plausible that we can think of this feature space as placing words that are “nearby in meaning” close together. But what kind of additional structure can we identify in this space? Is there for example some kind of notion of “parallel transport” that would reflect “flatness” in the space? One way to get a handle on that is to look at analogies:

And, yes, even when we project down to 2D, there’s often at least a “hint of flatness”, though it’s certainly not universally seen.


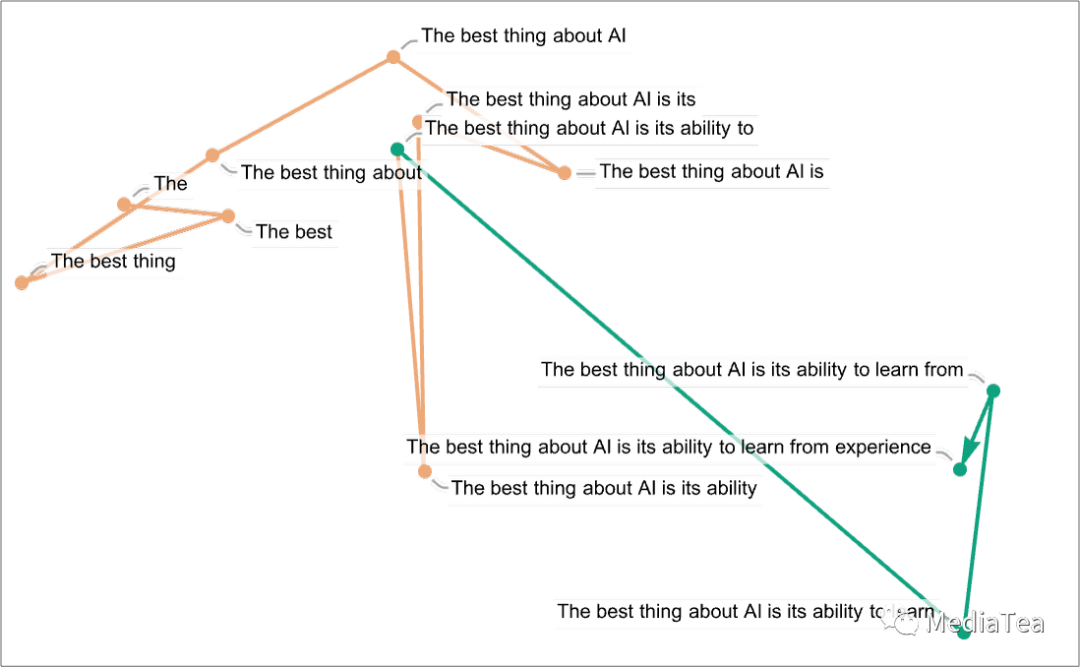
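The “flatness” test via analogies can be sketched as a parallelogram check: if the space is roughly flat, the displacement from one word to its analogue should be nearly the same for each pair. The vectors below are invented for illustration, and measuring agreement with cosine similarity is an assumption rather than something the text prescribes.

```python
import math

# Hypothetical 3-dimensional embeddings, invented for illustration.
toy = {
    "man":   [1.0, 0.0, 0.2],
    "woman": [1.0, 1.0, 0.2],
    "king":  [3.0, 0.1, 1.0],
    "queen": [3.0, 1.0, 1.1],
    "apple": [0.0, 0.5, 3.0],
}


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def add(u, v):
    return [a + b for a, b in zip(u, v)]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


# Displacements for the two halves of the analogy man:woman :: king:queen.
offset_1 = sub(toy["woman"], toy["man"])
offset_2 = sub(toy["queen"], toy["king"])

# Close to 1.0 means the two displacements are nearly parallel,
# i.e. the four points form something close to a parallelogram.
flatness = cosine(offset_1, offset_2)
print(f"cosine between offsets: {flatness:.3f}")

# Completing the analogy: carry the man->woman offset over to "king"
# and look for the nearest remaining word.
target = add(toy["king"], offset_1)
candidates = {w: v for w, v in toy.items() if w not in ("man", "woman", "king")}
best = min(candidates, key=lambda w: math.dist(candidates[w], target))
print(f"king + (woman - man) is nearest to: {best}")
```

With real embeddings the offsets rarely line up this cleanly, which matches the observation that a “hint of flatness” is common but not universal.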

So what about trajectories? We can look at the trajectory that a prompt for ChatGPT follows in feature space—and then we can see how ChatGPT continues that:


There’s certainly no “geometrically obvious” law of motion here. And that’s not at all surprising; we fully expect this to be a considerably more complicated story. For example, even if there is a “semantic law of motion” to be found, it’s far from obvious what kind of embedding (or, in effect, what “variables”) it would most naturally be stated in.

In the picture above, we’re showing several steps in the “trajectory”—where at each step we’re picking the word that ChatGPT considers the most probable (the “zero temperature” case). But we can also ask what words can “come next” with what probabilities at a given point:


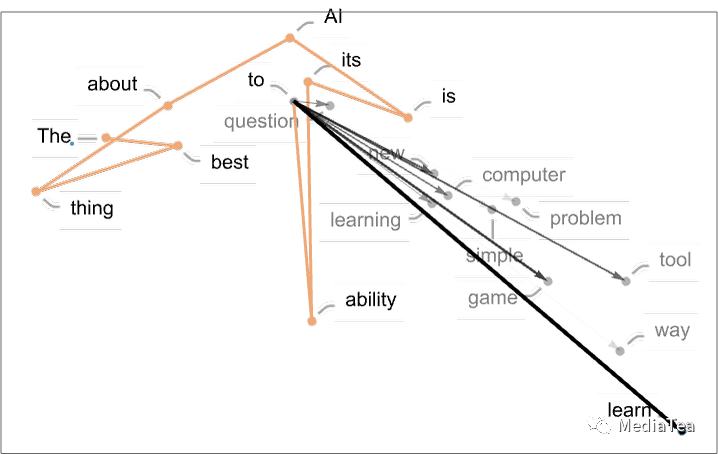

And what we see in this case is that there’s a “fan” of high-probability words that seems to go in a more or less definite direction in feature space. What happens if we go further? Here are the successive “fans” that appear as we “move along” the trajectory:


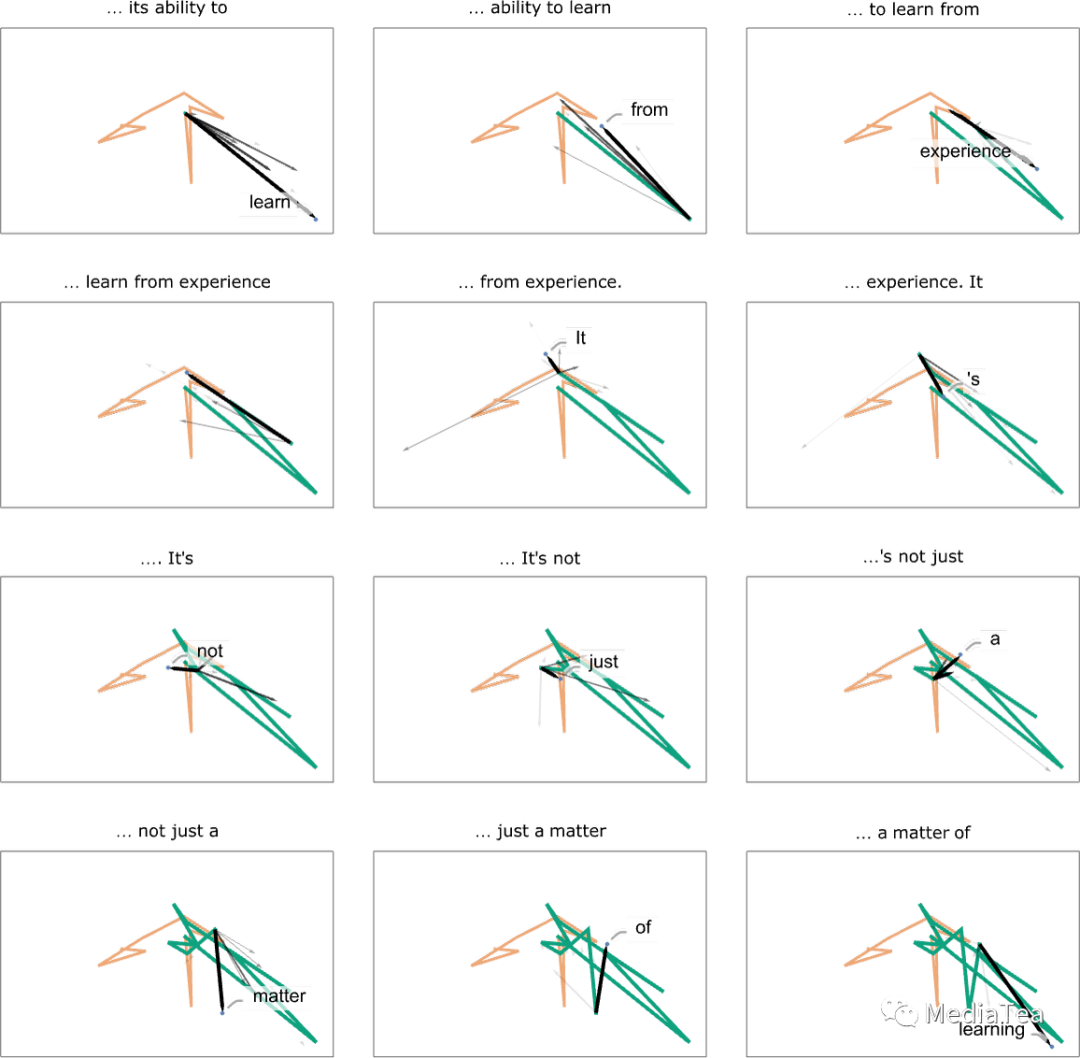

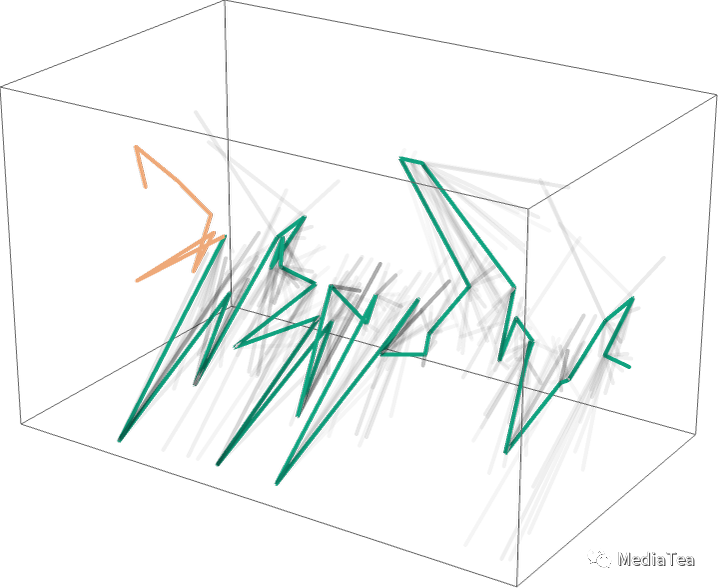
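The difference between always taking the most probable word (the “zero temperature” case) and asking which words can come next with what probabilities can be sketched as follows. The scores and candidate words are made up for illustration, a real model would produce scores over its whole vocabulary, and the function names here are hypothetical.

```python
import math
import random

# Hypothetical raw scores (logits) for a handful of candidate next words.
scores = {"learn": 2.0, "predict": 1.6, "make": 1.1, "understand": 0.9, "do": 0.2}


def next_word_probabilities(scores, temperature=1.0):
    """Softmax over scores; a lower temperature concentrates
    probability on the top-scoring word."""
    scaled = {w: s / temperature for w, s in scores.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    weights = {w: math.exp(s - peak) for w, s in scaled.items()}
    total = sum(weights.values())
    return {w: x / total for w, x in weights.items()}


def pick_next_word(scores, temperature=0.0, rng=random):
    """Zero temperature means a greedy choice; otherwise sample."""
    if temperature == 0.0:
        return max(scores, key=scores.get)
    probs = next_word_probabilities(scores, temperature)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


def fan(scores, k=3, temperature=1.0):
    """The k most probable next words with their probabilities."""
    probs = next_word_probabilities(scores, temperature)
    return sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]


print(pick_next_word(scores))  # greedy, zero-temperature choice
for word, p in fan(scores, k=3):
    print(f"{word:>10}: {p:.3f}")
```

Repeating the greedy choice step by step gives a single trajectory, while calling `fan` at each step gives the set of high-probability alternatives around it.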

Here’s a 3D representation, going for a total of 40 steps:


And, yes, this seems like a mess, and it does little to encourage the idea that one can expect to identify “mathematical-physics-like” “semantic laws of motion” by empirically studying “what ChatGPT is doing inside”. But perhaps we’re just looking at the “wrong variables” (or wrong coordinate system), and if only we looked at the right ones, we’d immediately see that ChatGPT is doing something “mathematical-physics-simple” like following geodesics. But as of now, we’re not ready to “empirically decode” from its “internal behavior” what ChatGPT has “discovered” about how human language is “put together”.
