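WebRTC Audio/Video Calls: Pulling a Live Stream from ossrs on iOS

An earlier post covered calling the ossrs service from iOS and walked through publishing (pushing) a stream, but it left out the playback (pull) flow. This post fills that gap and reuses the WebRTCClient class from that post. For details, see: https://blog.csdn.net/gloryFlow/article/details/132257196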

1. Pull-stream result on the iOS player

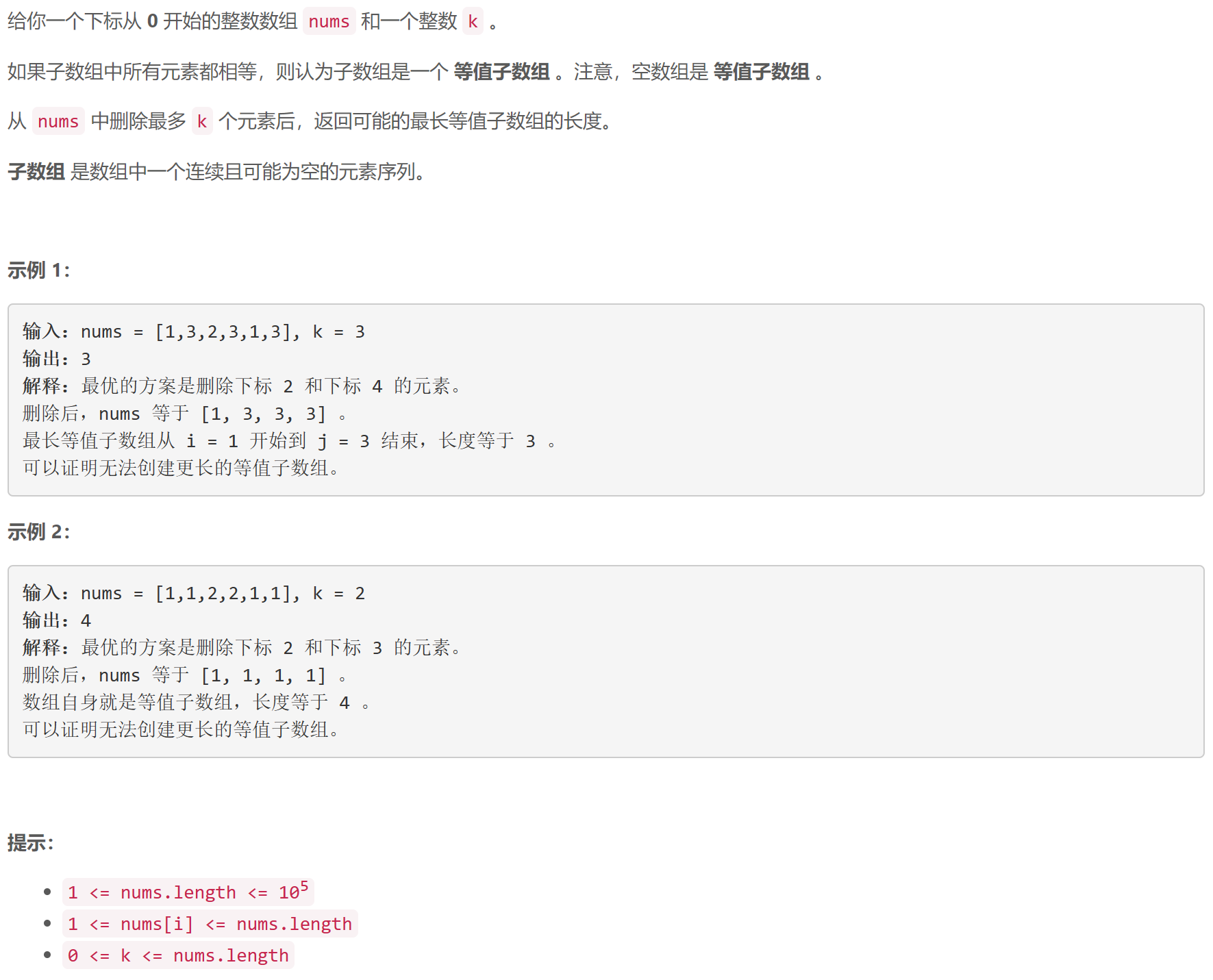

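2. Implementing ossrs stream pulling on iOS

A reader asked about this recently, and I realized the earlier post was missing the pull flow, so it is added here.

2.1 Configuring WebRTCClient for pulling

When pulling a stream, initialize WebRTCClient with isPublish set to NO: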

#pragma mark - Lazy

- (WebRTCClient *)webRTCClient {
    if (!_webRTCClient) {
        // NO selects the play (pull) role rather than the publish role.
        _webRTCClient = [[WebRTCClient alloc] initWithPublish:NO];
    }
    return _webRTCClient;
}

2.2 Setting the view that displays the pulled stream

In the earlier post the camera preview was shown with startCaptureLocalVideo. For pulling, set remoteRenderView instead.

WebRTCClient declares:

/**RTCVideoRenderer*/

@property (nonatomic, weak) id<RTCVideoRenderer> remoteRenderView;

Set up the view that displays the pulled stream:

#import "RTCPlayView.h"

@interface RTCPlayView ()

@property (nonatomic, strong) WebRTCClient *webRTCClient;
@property (nonatomic, strong) RTCEAGLVideoView *remoteRenderer;

@end

@implementation RTCPlayView

- (instancetype)initWithFrame:(CGRect)frame webRTCClient:(WebRTCClient *)webRTCClient {
    self = [super initWithFrame:frame];
    if (self) {
        self.webRTCClient = webRTCClient;

        // Renderer that draws the remote (pulled) video frames.
        self.remoteRenderer = [[RTCEAGLVideoView alloc] initWithFrame:CGRectZero];
        self.remoteRenderer.contentMode = UIViewContentModeScaleAspectFit;
        [self addSubview:self.remoteRenderer];

        // Hand the renderer to the client so the remote track can render into it.
        self.webRTCClient.remoteRenderView = self.remoteRenderer;
    }
    return self;
}

- (void)layoutSubviews {
    [super layoutSubviews];
    // Keep the renderer the same size as this view.
    self.remoteRenderer.frame = self.bounds;
    NSLog(@"self.remoteRenderer frame:%@", NSStringFromCGRect(self.remoteRenderer.frame));
}

@end

This creates an RTCEAGLVideoView and assigns it to self.webRTCClient.remoteRenderView.

2.3 Calling the ossrs play API, rtc/v1/play/

The pull flow mirrors the push flow, so it is not repeated here; see https://blog.csdn.net/gloryFlow/article/details/132257196

The method is as follows:

- (void)playBtnClick {
    __weak typeof(self) weakSelf = self;
    // Create the local offer, then exchange it with the server's play API.
    [self.webRTCClient offer:^(RTCSessionDescription *sdp) {
        [weakSelf.webRTCClient changeSDP2Server:sdp
                                         urlStr:@"https://192.168.10.102:1990/rtc/v1/play/"
                                      streamUrl:@"webrtc://192.168.10.102:1990/live/livestream"
                                        closure:^(BOOL isServerRetSuc) {
            NSLog(@"isServerRetSuc:%@", (isServerRetSuc ? @"YES" : @"NO"));
        }];
    }];
}
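To make the exchange behind changeSDP2Server a little more concrete, here is a minimal sketch of the JSON that might travel to and from rtc/v1/play/. It is not the WebRTCClient implementation from the earlier post. The helper names GRBuildPlayRequestBody and GRAnswerSDPFromPlayResponse are hypothetical, and the field names (api, streamurl, sdp in the request; code and sdp in the reply) are my assumption about the ossrs API, so check them against your server version.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: builds the JSON body for a POST to rtc/v1/play/.
// The keys "api", "streamurl" and "sdp" are assumed, not taken from the post.
static NSData *GRBuildPlayRequestBody(NSString *apiURL,
                                      NSString *streamURL,
                                      NSString *offerSDP,
                                      NSError **error) {
    NSDictionary *payload = @{
        @"api": apiURL,
        @"streamurl": streamURL,
        @"sdp": offerSDP
    };
    return [NSJSONSerialization dataWithJSONObject:payload options:0 error:error];
}

// Hypothetical helper: extracts the answer SDP from the server reply.
// Assumes a success reply looks like {"code": 0, "sdp": "..."}.
// Returns nil for empty data, malformed JSON, a non-zero code or a missing SDP.
static NSString *GRAnswerSDPFromPlayResponse(NSData *responseData) {
    if (responseData.length == 0) {
        return nil;
    }
    id json = [NSJSONSerialization JSONObjectWithData:responseData options:0 error:NULL];
    if (![json isKindOfClass:[NSDictionary class]]) {
        return nil;
    }
    NSDictionary *reply = (NSDictionary *)json;

    id code = reply[@"code"];
    if (![code isKindOfClass:[NSNumber class]] || [(NSNumber *)code integerValue] != 0) {
        return nil;
    }

    id sdp = reply[@"sdp"];
    if (![sdp isKindOfClass:[NSString class]] || [(NSString *)sdp length] == 0) {
        return nil;
    }
    return (NSString *)sdp;
}
```

Under these assumptions, the returned string would be wrapped in an answer-type RTCSessionDescription and set as the remote description; a nil result would be reported as isServerRetSuc == NO.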

The complete controller code is as follows:

#import "RTCPlayViewController.h"

@interface RTCPlayViewController () <WebRTCClientDelegate>

@property (nonatomic, strong) WebRTCClient *webRTCClient;
@property (nonatomic, strong) RTCPlayView *rtcPlayView;
@property (nonatomic, strong) UIButton *playBtn;

@end

@implementation RTCPlayViewController

- (void)viewDidLoad {
    [super viewDidLoad];
    // Do any additional setup after loading the view.
    self.view.backgroundColor = [UIColor whiteColor];

    // Full-screen view that renders the pulled stream.
    self.rtcPlayView = [[RTCPlayView alloc] initWithFrame:CGRectZero webRTCClient:self.webRTCClient];
    [self.view addSubview:self.rtcPlayView];
    self.rtcPlayView.backgroundColor = [UIColor lightGrayColor];
    self.rtcPlayView.frame = self.view.bounds;

    CGFloat screenWidth = CGRectGetWidth(self.view.bounds);
    CGFloat screenHeight = CGRectGetHeight(self.view.bounds);

    // Button that starts playback.
    self.playBtn = [UIButton buttonWithType:UIButtonTypeCustom];
    self.playBtn.frame = CGRectMake(50, screenHeight - 160, screenWidth - 2 * 50, 46);
    self.playBtn.layer.cornerRadius = 4;
    self.playBtn.backgroundColor = [UIColor grayColor];
    [self.playBtn setTitle:@"play" forState:UIControlStateNormal];
    [self.playBtn addTarget:self action:@selector(playBtnClick) forControlEvents:UIControlEventTouchUpInside];
    [self.view addSubview:self.playBtn];

    self.webRTCClient.delegate = self;
}

- (void)playBtnClick {
    __weak typeof(self) weakSelf = self;
    // Create the local offer, then exchange it with the server's play API.
    [self.webRTCClient offer:^(RTCSessionDescription *sdp) {
        [weakSelf.webRTCClient changeSDP2Server:sdp
                                         urlStr:@"https://192.168.10.102:1990/rtc/v1/play/"
                                      streamUrl:@"webrtc://192.168.10.102:1990/live/livestream"
                                        closure:^(BOOL isServerRetSuc) {
            NSLog(@"isServerRetSuc:%@", (isServerRetSuc ? @"YES" : @"NO"));
        }];
    }];
}

#pragma mark - WebRTCClientDelegate

- (void)webRTCClient:(WebRTCClient *)client didDiscoverLocalCandidate:(RTCIceCandidate *)candidate {
    NSLog(@"webRTCClient didDiscoverLocalCandidate");
}

- (void)webRTCClient:(WebRTCClient *)client didChangeConnectionState:(RTCIceConnectionState)state {
    NSLog(@"webRTCClient didChangeConnectionState");
    /**
     RTCIceConnectionStateNew,
     RTCIceConnectionStateChecking,
     RTCIceConnectionStateConnected,
     RTCIceConnectionStateCompleted,
     RTCIceConnectionStateFailed,
     RTCIceConnectionStateDisconnected,
     RTCIceConnectionStateClosed,
     RTCIceConnectionStateCount,
     */
    UIColor *textColor = [UIColor blackColor];
    BOOL openSpeak = NO;
    switch (state) {
        case RTCIceConnectionStateCompleted:
        case RTCIceConnectionStateConnected:
            textColor = [UIColor greenColor];
            openSpeak = YES;
            break;
        case RTCIceConnectionStateDisconnected:
            textColor = [UIColor orangeColor];
            break;
        case RTCIceConnectionStateFailed:
        case RTCIceConnectionStateClosed:
            textColor = [UIColor redColor];
            break;
        case RTCIceConnectionStateNew:
        case RTCIceConnectionStateChecking:
        case RTCIceConnectionStateCount:
            textColor = [UIColor blackColor];
            break;
        default:
            break;
    }

    // UI updates must happen on the main queue.
    dispatch_async(dispatch_get_main_queue(), ^{
        // Show the raw state value on the button, colored by connection health.
        NSString *text = [NSString stringWithFormat:@"%ld", (long)state];
        [self.playBtn setTitle:text forState:UIControlStateNormal];
        [self.playBtn setTitleColor:textColor forState:UIControlStateNormal];
        // Route audio to the speaker once the connection is up.
        if (openSpeak) {
            [self.webRTCClient speakOn];
        }
    });
}

- (void)webRTCClient:(WebRTCClient *)client didReceiveData:(NSData *)data {
    NSLog(@"webRTCClient didReceiveData");
}

#pragma mark - Lazy

- (WebRTCClient *)webRTCClient {
    if (!_webRTCClient) {
        _webRTCClient = [[WebRTCClient alloc] initWithPublish:NO];
    }
    return _webRTCClient;
}

@end
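The controller shows the connection state on the button as a raw number, which is hard to read while debugging. A small helper that maps the state to a name could look like the sketch below. To keep it self-contained, it uses a stand-in enum, GRIceState, that mirrors the order of RTCIceConnectionState as listed in the comment in the controller; GRIceState and GRNameForIceState are hypothetical names, and in a real project you would switch over RTCIceConnectionState from the WebRTC framework directly.

```objc
#import <Foundation/Foundation.h>

// Stand-in for RTCIceConnectionState, in the same order as the controller's comment.
// Hypothetical: replace with the real enum when the WebRTC framework is linked.
typedef NS_ENUM(NSInteger, GRIceState) {
    GRIceStateNew,
    GRIceStateChecking,
    GRIceStateConnected,
    GRIceStateCompleted,
    GRIceStateFailed,
    GRIceStateDisconnected,
    GRIceStateClosed,
    GRIceStateCount
};

// Returns a short, human-readable name for a connection state.
// Values outside the known range map to "unknown".
static NSString *GRNameForIceState(GRIceState state) {
    switch (state) {
        case GRIceStateNew:          return @"new";
        case GRIceStateChecking:     return @"checking";
        case GRIceStateConnected:    return @"connected";
        case GRIceStateCompleted:    return @"completed";
        case GRIceStateFailed:       return @"failed";
        case GRIceStateDisconnected: return @"disconnected";
        case GRIceStateClosed:       return @"closed";
        case GRIceStateCount:        return @"count";
    }
    return @"unknown";
}
```

With such a helper, the button title could be set from the returned name instead of the "%ld" format string.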

With that, the iOS client can pull an ossrs stream during a video call.

Related posts

Setting up the ossrs service: https://blog.csdn.net/gloryFlow/article/details/132257196

Calling ossrs from iOS for audio/video calls: https://blog.csdn.net/gloryFlow/article/details/132262724

Fixing WebRTC calls that show no picture at high resolutions: https://blog.csdn.net/gloryFlow/article/details/132240952

Changing the bitrate in the SDP: https://blog.csdn.net/gloryFlow/article/details/132263021

GPUImage beauty filters for video calls: https://blog.csdn.net/gloryFlow/article/details/132265842

Streaming a local or photo-album video over RTC: https://blog.csdn.net/gloryFlow/article/details/132267068

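3. Summary

This post covered pulling a live stream from ossrs on iOS for WebRTC audio/video calls. Together with the earlier post, it uses WebRTC with ossrs for both pushing and pulling streams. There is a lot of material here and some descriptions may be imprecise; corrections are welcome.

https://blog.csdn.net/gloryFlow/article/details/132417602

A learning log; a little progress every day.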