Hotel Booking Analysis

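Objective: create meaningful predictors (features) from the dataset. Then choose the model with the best predictive performance by comparing several ML models on their accuracy scores and ROC curves.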

1- EDA

2- Preprocessing

3- Models and ROC Curve Comparison

- Logistic Regression

- Gaussian Naive Bayes

- Support Vector Classification

- Decision Tree Model

- Random Forest

- Model Tuning for Random Forest

- XGBoost

- Neural Network

- Model Tuning for Neural Network

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

from sklearn.metrics import accuracy_score, roc_auc_score, roc_curve, confusion_matrix, auc

from sklearn.model_selection import train_test_split, cross_val_score, GridSearchCV

from sklearn.preprocessing import LabelEncoder, StandardScaler

from sklearn.tree import DecisionTreeClassifier

from sklearn.linear_model import LogisticRegression

from sklearn.svm import SVC

from sklearn.naive_bayes import GaussianNB

from sklearn.ensemble import RandomForestClassifier

from xgboost import XGBClassifier

from sklearn.neural_network import MLPClassifier

from warnings import filterwarnings

filterwarnings('ignore')

df = pd.read_csv("../kaggle/hotel_bookings.csv")

df.head()

| | hotel | is_canceled | lead_time | arrival_date_year | arrival_date_month | arrival_date_week_number | arrival_date_day_of_month | stays_in_weekend_nights | stays_in_week_nights | adults | ... | deposit_type | agent | company | days_in_waiting_list | customer_type | adr | required_car_parking_spaces | total_of_special_requests | reservation_status | reservation_status_date |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | Resort Hotel | 0 | 342 | 2015 | July | 27 | 1 | 0 | 0 | 2 | ... | No Deposit | NaN | NaN | 0 | Transient | 0.0 | 0 | 0 | Check-Out | 2015-07-01 |
| 1 | Resort Hotel | 0 | 737 | 2015 | July | 27 | 1 | 0 | 0 | 2 | ... | No Deposit | NaN | NaN | 0 | Transient | 0.0 | 0 | 0 | Check-Out | 2015-07-01 |
| 2 | Resort Hotel | 0 | 7 | 2015 | July | 27 | 1 | 0 | 1 | 1 | ... | No Deposit | NaN | NaN | 0 | Transient | 75.0 | 0 | 0 | Check-Out | 2015-07-02 |
| 3 | Resort Hotel | 0 | 13 | 2015 | July | 27 | 1 | 0 | 1 | 1 | ... | No Deposit | 304.0 | NaN | 0 | Transient | 75.0 | 0 | 0 | Check-Out | 2015-07-02 |
| 4 | Resort Hotel | 0 | 14 | 2015 | July | 27 | 1 | 0 | 2 | 2 | ... | No Deposit | 240.0 | NaN | 0 | Transient | 98.0 | 0 | 1 | Check-Out | 2015-07-03 |

5 rows × 32 columns

df.shape

(119390, 32)

print("# of NaN in each columns:", df.isnull().sum(), sep='\n')

# of NaN in each columns:

hotel 0

is_canceled 0

lead_time 0

arrival_date_year 0

arrival_date_month 0

arrival_date_week_number 0

arrival_date_day_of_month 0

stays_in_weekend_nights 0

stays_in_week_nights 0

adults 0

children 4

babies 0

meal 0

country 488

market_segment 0

distribution_channel 0

is_repeated_guest 0

previous_cancellations 0

previous_bookings_not_canceled 0

reserved_room_type 0

assigned_room_type 0

booking_changes 0

deposit_type 0

agent 16340

company 112593

days_in_waiting_list 0

customer_type 0

adr 0

required_car_parking_spaces 0

total_of_special_requests 0

reservation_status 0

reservation_status_date 0

dtype: int64

# It is better to work on a copy of the original dataset, because the original may be needed later.

data = df.copy()

1. EDA

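Conditional distribution: the plot below shows the number of canceled and non-canceled bookings for new and repeat guests. Repeat guests rarely cancel, while a noticeable share of new guests' bookings are canceled.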

sns.set(style = "darkgrid")

ax = sns.countplot(x = "is_canceled", hue = 'is_repeated_guest', data = data)

plt.title("Canceled or not", fontdict = {'fontsize': 20})

plt.show()

![Countplot: is_canceled by is_repeated_guest](https://img-blog.csdnimg.cn/20200529113015255.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

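It is not surprising that repeat guests rarely cancel their bookings, although there are some exceptions. The plot also shows that most customers are not repeat guests.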
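A countplot shows raw counts. To compare cancellation *rates* between new and repeat guests, one option is a row-normalized crosstab. The sketch below is minimal and uses a made-up toy DataFrame whose values are illustrative, not taken from the dataset. On the real data the same call would be pd.crosstab(data['is_repeated_guest'], data['is_canceled'], normalize='index').

```python
import pandas as pd

# Toy stand-in for the hotel data (illustrative values only)
toy = pd.DataFrame({
    "is_repeated_guest": [0, 0, 0, 0, 1, 1],
    "is_canceled":       [1, 0, 1, 0, 0, 0],
})

# normalize="index" divides each row by its total, giving the cancellation rate per guest type
rates = pd.crosstab(toy["is_repeated_guest"], toy["is_canceled"], normalize="index")
print(rates)
```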

Box plots of nights stayed, by market segment and hotel type

plt.figure(figsize = (15,10))

sns.boxplot(x = "market_segment", y = "stays_in_week_nights", data = data, hue = "hotel", palette = 'Set1');

![Boxplot: stays_in_week_nights by market_segment and hotel](https://img-blog.csdnimg.cn/20200529113059157.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

plt.figure(figsize=(15,10))

sns.boxplot(x = "market_segment", y = "stays_in_weekend_nights", data = data, hue = "hotel", palette = 'Set1')

plt.show()

![Boxplot: stays_in_weekend_nights by market_segment and hotel](https://img-blog.csdnimg.cn/20200529113132331.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

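Customers in the Aviation segment do not seem to stay at the Resort Hotel, and their average number of nights is relatively low. Apart from that, the weekend and weekday averages are roughly equal. Aviation customers may be arriving at short notice for business reasons. It is also possible that most airports are some distance from the sea and closer to the City Hotel.

The plots suggest that guests stay longer when they go to the Resort Hotel.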

Count plots of market segments

sns.set(style = "darkgrid")

plt.figure(figsize = (13,10))

ax = sns.countplot(x = "market_segment", hue = 'deposit_type', data = data)

plt.title("Countplot Distribution of Segment by Deposit Type", fontdict = {'fontsize':20})

plt.show()

![Countplot: market_segment by deposit_type](https://img-blog.csdnimg.cn/2020052911323878.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

plt.figure(figsize = (13,10))

sns.set(style = "darkgrid")

plt.title("Countplot Distribution of Segments by Cancellation", fontdict = {'fontsize':20})

ax = sns.countplot(x = "market_segment", hue = 'is_canceled', data = data)

plt.show()

![Countplot: market_segment by is_canceled](https://img-blog.csdnimg.cn/20200529113445349.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

Density curves of lead time by cancellation status

(sns.FacetGrid(data, hue = 'is_canceled',height = 6,xlim = (0,500)).map(sns.kdeplot, 'lead_time', shade = True).add_legend());

plt.show()

![KDE of lead_time by is_canceled](https://img-blog.csdnimg.cn/20200529113427749.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

Monthly customers by hotel type, and monthly cancellations

plt.figure(figsize =(13,10))

sns.set(style="darkgrid")

plt.title("Total Customers - Monthly ", fontdict={'fontsize': 20})

ax = sns.countplot(x = "arrival_date_month", hue = 'hotel', data = data)

![Countplot: arrival_date_month by hotel](https://img-blog.csdnimg.cn/2020052911334544.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

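How to read the bar plots: Seaborn groups the rows by the x variable (arrival_date_month) and applies the estimator (the mean by default) to the y variable (is_canceled). The result is the bar height. Because is_canceled is 0/1, each bar is the cancellation rate for that month. The error bars show a confidence interval around that estimate, a bootstrapped 95% interval by default.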

plt.figure(figsize =(13,10))

sns.barplot(x = 'arrival_date_month', y = 'is_canceled', data = data)

plt.show()

![Barplot: mean is_canceled by arrival_date_month](https://img-blog.csdnimg.cn/20200529113504721.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

plt.figure(figsize = (20,10))

sns.barplot(x = 'arrival_date_month', y = 'is_canceled', hue = 'hotel', data = data)

plt.show()

![Barplot: mean is_canceled by arrival_date_month and hotel](https://img-blog.csdnimg.cn/20200529113535540.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)
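The bar heights in the two bar plots above are per-month means of is_canceled, that is, monthly cancellation rates. A groupby computes the same numbers directly. The sketch below uses toy data for illustration only. On the real data, data.groupby(['arrival_date_month', 'hotel'])['is_canceled'].mean() would give the heights of the second plot.

```python
import pandas as pd

# Illustrative toy rows, not the hotel dataset
toy = pd.DataFrame({
    "arrival_date_month": ["July", "July", "July", "August", "August"],
    "is_canceled":        [1, 0, 0, 1, 1],
})

# Mean of a 0/1 column per group = share of canceled bookings in that month
cancel_rate = toy.groupby("arrival_date_month")["is_canceled"].mean()
print(cancel_rate)
```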

2. Preprocessing

Missing values, feature engineering and standardization


Percentage of missing values

def perc_mv(x, y):
    # percentage of missing values in column y, relative to the number of rows in x
    perc = y.isnull().sum() / len(x) * 100
    return perc

print('Missing value ratios:\nCompany: {}\nAgent: {}\nCountry: {}'.format(perc_mv(df, df['company']),perc_mv(df, df['agent']),perc_mv(df, df['country'])))

Missing value ratios:

Company: 94.30689337465449

Agent: 13.686238378423655

Country: 0.40874445095904177
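perc_mv handles one column at a time. isnull().mean() gives the same percentages for every column in a single call. Here is a minimal sketch on a toy frame with made-up values:

```python
import numpy as np
import pandas as pd

# Illustrative frame with some missing entries (not the real data)
toy = pd.DataFrame({
    "company": [np.nan, np.nan, np.nan, 40.0],
    "agent":   [9.0, np.nan, 240.0, 9.0],
    "country": ["PRT", "GBR", None, "PRT"],
})

# isnull().mean() is the fraction of missing values per column; * 100 turns it into a percentage
missing_pct = toy.isnull().mean() * 100
print(missing_pct.sort_values(ascending=False))
```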

data["agent"].value_counts().count()

333

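We can see that 94.3% of the company values are missing, so the company column is dropped.

13.68% of the agent values are missing, which is not enough to justify dropping the agent column. We should not drop those rows either, because 13.68% of the data is a substantial amount and those rows may carry important information. There are 333 unique agents, and with that many agents the missing values probably cannot be predicted.

The NA values might also stand for agents that are not among the 333 listed ones. We cannot predict the agent, and the missing values make up about 13% of all data, so we cannot simply drop them either. I will decide how to handle agent after the correlation section.

Dropping the rows with missing values in the country column would not be a problem, since they are only 0.41% of the data. However, I will wait for the correlation analysis first.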

# company is dropped

data = data.drop(['company'], axis = 1)

# There are also 4 missing values in the children column. With no information about children, I assume these customers have no children.

data['children'] = data['children'].fillna(0)

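Feature engineering

We should examine the features to create some more meaningful variables and to reduce the number of features where possible.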

data.dtypes

hotel object

is_canceled int64

lead_time int64

arrival_date_year int64

arrival_date_month object

arrival_date_week_number int64

arrival_date_day_of_month int64

stays_in_weekend_nights int64

stays_in_week_nights int64

adults int64

children float64

babies int64

meal object

country object

market_segment object

distribution_channel object

is_repeated_guest int64

previous_cancellations int64

previous_bookings_not_canceled int64

reserved_room_type object

assigned_room_type object

booking_changes int64

deposit_type object

agent float64

days_in_waiting_list int64

customer_type object

adr float64

required_car_parking_spaces int64

total_of_special_requests int64

reservation_status object

reservation_status_date object

dtype: object

# I map these two columns manually. The remaining categorical columns are handled later with get_dummies or LabelEncoder.

data['hotel'] = data['hotel'].map({'Resort Hotel':0, 'City Hotel':1})

data['arrival_date_month'] = data['arrival_date_month'].map({'January':1, 'February': 2, 'March':3, 'April':4, 'May':5, 'June':6, 'July':7,'August':8, 'September':9, 'October':10, 'November':11, 'December':12})

The code above maps the string values to numbers.

def family(data):
    # 1 if at least one adult travels with children or babies, else 0
    if ((data['adults'] > 0) & (data['children'] > 0)):
        val = 1
    elif ((data['adults'] > 0) & (data['babies'] > 0)):
        val = 1
    else:
        val = 0
    return val

def deposit(data):
    # 0 for 'No Deposit' or 'Refundable', 1 for any other deposit type
    if ((data['deposit_type'] == 'No Deposit') | (data['deposit_type'] == 'Refundable')):
        return 0
    else:
        return 1

def feature(data):
    data["is_family"] = data.apply(family, axis = 1)
    data["total_customer"] = data["adults"] + data["children"] + data["babies"]
    data["deposit_given"] = data.apply(deposit, axis=1)
    data["total_nights"] = data["stays_in_weekend_nights"]+ data["stays_in_week_nights"]
    return data

data = feature(data)

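What the code above does:
- data["is_family"] combines three columns into one 0/1 variable. It is 1 when adults travel with children or babies, and 0 otherwise.
- data["total_customer"] is the total number of guests (adults + children + babies).
- data["deposit_given"] turns data['deposit_type'] into a 0/1 variable: 0 for No Deposit or Refundable, 1 otherwise.
- data["total_nights"] is the total number of nights stayed.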
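data.apply(..., axis=1) calls a Python function once per row, which is slow on roughly 119k rows. The same four features can be computed with vectorized column operations. This is a sketch that assumes the input has the columns used above. add_features is a hypothetical name, and unlike feature() it returns a new DataFrame instead of modifying data in place.

```python
import pandas as pd


def add_features(df: pd.DataFrame) -> pd.DataFrame:
    """Vectorized version of feature(): same four columns, computed column-wise."""
    out = df.copy()
    has_kids = (out["children"] > 0) | (out["babies"] > 0)
    # 1 if at least one adult travels with children or babies (same rule as family())
    out["is_family"] = ((out["adults"] > 0) & has_kids).astype(int)
    out["total_customer"] = out["adults"] + out["children"] + out["babies"]
    # 0 for 'No Deposit' or 'Refundable', 1 for any other type (same rule as deposit())
    out["deposit_given"] = (~out["deposit_type"].isin(["No Deposit", "Refundable"])).astype(int)
    out["total_nights"] = out["stays_in_weekend_nights"] + out["stays_in_week_nights"]
    return out


# Illustrative rows only
toy = pd.DataFrame({
    "adults": [2, 2, 0, 1],
    "children": [0.0, 1.0, 2.0, 0.0],
    "babies": [0, 0, 0, 1],
    "deposit_type": ["No Deposit", "Non Refund", "Refundable", "No Deposit"],
    "stays_in_weekend_nights": [1, 0, 2, 1],
    "stays_in_week_nights": [2, 3, 5, 0],
})
result = add_features(toy)
print(result[["is_family", "total_customer", "deposit_given", "total_nights"]])
```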

After creating these variables, drop the columns they were derived from:

data = data.drop(columns = ['adults', 'babies', 'children', 'deposit_type', 'reservation_status_date'])

Correlation: examining the relationships between variables

data.columns

Index(['hotel', 'is_canceled', 'lead_time', 'arrival_date_year','arrival_date_month', 'arrival_date_week_number','arrival_date_day_of_month', 'stays_in_weekend_nights','stays_in_week_nights', 'meal', 'country', 'market_segment','distribution_channel', 'is_repeated_guest', 'previous_cancellations','previous_bookings_not_canceled', 'reserved_room_type','assigned_room_type', 'booking_changes', 'agent','days_in_waiting_list', 'customer_type', 'adr','required_car_parking_spaces', 'total_of_special_requests','reservation_status', 'is_family', 'total_customer', 'deposit_given','total_nights'],dtype='object')

cor_data = data.copy()

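The data is copied before computing the correlation coefficients, so that data, which is used for modelling later, is not changed.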

le = LabelEncoder()

cor_data['meal'] = le.fit_transform(cor_data['meal'])

cor_data['distribution_channel'] = le.fit_transform(cor_data['distribution_channel'])

cor_data['reserved_room_type'] = le.fit_transform(cor_data['reserved_room_type'])

cor_data['assigned_room_type'] = le.fit_transform(cor_data['assigned_room_type'])

cor_data['agent'] = le.fit_transform(cor_data['agent'])

cor_data['customer_type'] = le.fit_transform(cor_data['customer_type'])

cor_data['reservation_status'] = le.fit_transform(cor_data['reservation_status'])

cor_data['market_segment'] = le.fit_transform(cor_data['market_segment'])

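# select_dtypes('number') excludes the string column 'country'; recent pandas versions raise an error instead of skipping it silently
cor_data.select_dtypes('number').corr()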

| | hotel | is_canceled | lead_time | arrival_date_year | arrival_date_month | arrival_date_week_number | arrival_date_day_of_month | stays_in_weekend_nights | stays_in_week_nights | meal | ... | days_in_waiting_list | customer_type | adr | required_car_parking_spaces | total_of_special_requests | reservation_status | is_family | total_customer | deposit_given | total_nights |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| hotel | 1.000000 | 0.136531 | 0.075381 | 0.035267 | 0.001817 | 0.001270 | -0.001862 | -0.186596 | -0.234020 | 0.008018 | ... | 0.072432 | 0.047531 | 0.096719 | -0.218873 | -0.043390 | -0.124331 | -0.058306 | -0.040821 | 0.172003 | -0.247479 |
| is_canceled | 0.136531 | 1.000000 | 0.293123 | 0.016660 | 0.011022 | 0.008148 | -0.006130 | -0.001791 | 0.024765 | -0.017678 | ... | 0.054186 | -0.068140 | 0.047557 | -0.195498 | -0.234658 | -0.917196 | -0.013010 | 0.046522 | 0.481457 | 0.017779 |
| lead_time | 0.075381 | 0.293123 | 1.000000 | 0.040142 | 0.131424 | 0.126871 | 0.002268 | 0.085671 | 0.165799 | 0.000349 | ... | 0.170084 | 0.073403 | -0.063077 | -0.116451 | -0.095712 | -0.302175 | -0.043972 | 0.072265 | 0.380179 | 0.157167 |
| arrival_date_year | 0.035267 | 0.016660 | 0.040142 | 1.000000 | -0.527739 | -0.540561 | -0.000221 | 0.021497 | 0.030883 | 0.065840 | ... | -0.056497 | -0.006149 | 0.197580 | -0.013684 | 0.108531 | -0.017683 | 0.052711 | 0.052127 | -0.065963 | 0.031438 |
| arrival_date_month | 0.001817 | 0.011022 | 0.131424 | -0.527739 | 1.000000 | 0.995105 | -0.026063 | 0.018440 | 0.019212 | -0.015205 | ... | 0.019045 | -0.029753 | 0.079315 | 0.000257 | 0.028026 | -0.021090 | 0.010427 | 0.027252 | 0.008746 | 0.021536 |
| arrival_date_week_number | 0.001270 | 0.008148 | 0.126871 | -0.540561 | 0.995105 | 1.000000 | 0.066809 | 0.018208 | 0.015558 | -0.017381 | ... | 0.022933 | -0.028432 | 0.075791 | 0.001920 | 0.026149 | -0.017387 | 0.010611 | 0.025220 | 0.007773 | 0.018719 |
| arrival_date_day_of_month | -0.001862 | -0.006130 | 0.002268 | -0.000221 | -0.026063 | 0.066809 | 1.000000 | -0.016354 | -0.028174 | -0.007086 | ... | 0.022728 | 0.012188 | 0.030245 | 0.008683 | 0.003062 | 0.011460 | 0.014710 | 0.006742 | -0.008616 | -0.027408 |
| stays_in_weekend_nights | -0.186596 | -0.001791 | 0.085671 | 0.021497 | 0.018440 | 0.018208 | -0.016354 | 1.000000 | 0.498969 | 0.045744 | ... | -0.054151 | -0.109220 | 0.049342 | -0.018554 | 0.072671 | 0.008558 | 0.052306 | 0.101426 | -0.114275 | 0.762790 |
| stays_in_week_nights | -0.234020 | 0.024765 | 0.165799 | 0.030883 | 0.019212 | 0.015558 | -0.028174 | 0.498969 | 1.000000 | 0.036742 | ... | -0.002020 | -0.127223 | 0.065237 | -0.024859 | 0.068192 | -0.021607 | 0.050424 | 0.101665 | -0.079999 | 0.941005 |
| meal | 0.008018 | -0.017678 | 0.000349 | 0.065840 | -0.015205 | -0.017381 | -0.007086 | 0.045744 | 0.036742 | 1.000000 | ... | -0.007132 | 0.044658 | 0.059098 | -0.038923 | 0.023136 | 0.015393 | -0.041727 | -0.005975 | -0.090725 | 0.045277 |
| market_segment | 0.083795 | 0.059338 | 0.013797 | 0.107697 | 0.001293 | -0.000510 | -0.004088 | 0.115350 | 0.108569 | 0.145132 | ... | -0.041503 | -0.165814 | 0.232763 | -0.062226 | 0.274373 | -0.061584 | 0.080450 | 0.213221 | -0.183880 | 0.126052 |
| distribution_channel | 0.174419 | 0.167600 | 0.220414 | 0.022644 | 0.007381 | 0.005699 | 0.001578 | 0.093097 | 0.087185 | 0.116957 | ... | 0.048642 | -0.069640 | 0.092396 | -0.132280 | 0.098815 | -0.171330 | 0.000464 | 0.144357 | 0.102548 | 0.101407 |
| is_repeated_guest | -0.050421 | -0.084793 | -0.124410 | 0.010341 | -0.030729 | -0.030131 | -0.006145 | -0.087239 | -0.097245 | -0.057009 | ... | -0.022235 | -0.017111 | -0.134314 | 0.077090 | 0.013050 | 0.083504 | -0.035127 | -0.136748 | -0.058423 | -0.106626 |
| previous_cancellations | -0.012292 | 0.110133 | 0.086042 | -0.119822 | 0.037479 | 0.035501 | -0.027011 | -0.012775 | -0.013992 | -0.003772 | ... | 0.005929 | -0.008188 | -0.065646 | -0.018492 | -0.048384 | -0.110758 | -0.027262 | -0.020058 | 0.143314 | -0.015429 |
| previous_bookings_not_canceled | -0.004441 | -0.057358 | -0.073548 | 0.029218 | -0.021640 | -0.020904 | -0.000300 | -0.042715 | -0.048743 | -0.040417 | ... | -0.009397 | -0.012259 | -0.072144 | 0.047653 | 0.037824 | 0.055051 | -0.022815 | -0.099097 | -0.031509 | -0.053049 |
| reserved_room_type | -0.249677 | -0.061282 | -0.106089 | 0.092809 | -0.007923 | -0.007997 | 0.016929 | 0.142083 | 0.168616 | -0.120749 | ... | -0.068821 | -0.120978 | 0.392060 | 0.131583 | 0.137466 | 0.058693 | 0.323910 | 0.383357 | -0.201348 | 0.181296 |
| assigned_room_type | -0.307834 | -0.176028 | -0.172219 | 0.036141 | -0.006378 | -0.005684 | 0.011646 | 0.086643 | 0.100795 | -0.120792 | ... | -0.068676 | -0.084427 | 0.258134 | 0.160131 | 0.124683 | 0.172537 | 0.292940 | 0.302422 | -0.246602 | 0.109042 |
| booking_changes | -0.072820 | -0.144381 | 0.000149 | 0.030872 | 0.004809 | 0.005508 | 0.010613 | 0.063281 | 0.096209 | 0.024650 | ... | -0.011634 | 0.092029 | 0.019618 | 0.065620 | 0.052833 | 0.140799 | 0.079121 | -0.003173 | -0.119333 | 0.096498 |
| agent | -0.158500 | -0.127883 | -0.171430 | -0.017723 | -0.000799 | 0.001638 | -0.002271 | -0.110284 | -0.110354 | -0.095428 | ... | -0.039667 | 0.066095 | -0.126407 | 0.113648 | -0.085429 | 0.123264 | -0.032656 | -0.155423 | -0.013898 | -0.125406 |
| days_in_waiting_list | 0.072432 | 0.054186 | 0.170084 | -0.056497 | 0.019045 | 0.022933 | 0.022728 | -0.054151 | -0.002020 | -0.007132 | ... | 1.000000 | 0.099121 | -0.040756 | -0.030600 | -0.082730 | -0.057927 | -0.036312 | -0.026431 | 0.120249 | -0.022652 |
| customer_type | 0.047531 | -0.068140 | 0.073403 | -0.006149 | -0.029753 | -0.028432 | 0.012188 | -0.109220 | -0.127223 | 0.044658 | ... | 0.099121 | 1.000000 | -0.077155 | -0.030060 | -0.135624 | 0.066004 | -0.060139 | -0.113232 | -0.086745 | -0.137577 |
| adr | 0.096719 | 0.047557 | -0.063077 | 0.197580 | 0.079315 | 0.075791 | 0.030245 | 0.049342 | 0.065237 | 0.059098 | ... | -0.040756 | -0.077155 | 1.000000 | 0.056628 | 0.172185 | -0.050520 | 0.309360 | 0.368105 | -0.087608 | 0.067945 |
| required_car_parking_spaces | -0.218873 | -0.195498 | -0.116451 | -0.013684 | 0.000257 | 0.001920 | 0.008683 | -0.018554 | -0.024859 | -0.038923 | ... | -0.030600 | -0.030060 | 0.056628 | 1.000000 | 0.082626 | 0.179310 | 0.069141 | 0.047934 | -0.094982 | -0.025794 |
| total_of_special_requests | -0.043390 | -0.234658 | -0.095712 | 0.108531 | 0.028026 | 0.026149 | 0.003062 | 0.072671 | 0.068192 | 0.023136 | ... | -0.082730 | -0.135624 | 0.172185 | 0.082626 | 1.000000 | 0.225674 | 0.128205 | 0.156834 | -0.268034 | 0.079259 |
| reservation_status | -0.124331 | -0.917196 | -0.302175 | -0.017683 | -0.021090 | -0.017387 | 0.011460 | 0.008558 | -0.021607 | 0.015393 | ... | -0.057927 | 0.066004 | -0.050520 | 0.179310 | 0.225674 | 1.000000 | 0.013117 | -0.055273 | -0.478747 | -0.012781 |
| is_family | -0.058306 | -0.013010 | -0.043972 | 0.052711 | 0.010427 | 0.010611 | 0.014710 | 0.052306 | 0.050424 | -0.041727 | ... | -0.036312 | -0.060139 | 0.309360 | 0.069141 | 0.128205 | 0.013117 | 1.000000 | 0.579899 | -0.106643 | 0.058049 |
| total_customer | -0.040821 | 0.046522 | 0.072265 | 0.052127 | 0.027252 | 0.025220 | 0.006742 | 0.101426 | 0.101665 | -0.005975 | ... | -0.026431 | -0.113232 | 0.368105 | 0.047934 | 0.156834 | -0.055273 | 0.579899 | 1.000000 | -0.080676 | 0.115463 |
| deposit_given | 0.172003 | 0.481457 | 0.380179 | -0.065963 | 0.008746 | 0.007773 | -0.008616 | -0.114275 | -0.079999 | -0.090725 | ... | 0.120249 | -0.086745 | -0.087608 | -0.094982 | -0.268034 | -0.478747 | -0.106643 | -0.080676 | 1.000000 | -0.104314 |
| total_nights | -0.247479 | 0.017779 | 0.157167 | 0.031438 | 0.021536 | 0.018719 | -0.027408 | 0.762790 | 0.941005 | 0.045277 | ... | -0.022652 | -0.137577 | 0.067945 | -0.025794 | 0.079259 | -0.012781 | 0.058049 | 0.115463 | -0.104314 | 1.000000 |

29 rows × 29 columns

cor_data.select_dtypes('number').corr()['stays_in_week_nights']

hotel -0.234020

is_canceled 0.024765

lead_time 0.165799

arrival_date_year 0.030883

arrival_date_month 0.019212

arrival_date_week_number 0.015558

arrival_date_day_of_month -0.028174

stays_in_weekend_nights 0.498969

stays_in_week_nights 1.000000

meal 0.036742

market_segment 0.108569

distribution_channel 0.087185

is_repeated_guest -0.097245

previous_cancellations -0.013992

previous_bookings_not_canceled -0.048743

reserved_room_type 0.168616

assigned_room_type 0.100795

booking_changes 0.096209

agent -0.110354

days_in_waiting_list -0.002020

customer_type -0.127223

adr 0.065237

required_car_parking_spaces -0.024859

total_of_special_requests 0.068192

reservation_status -0.021607

is_family 0.050424

total_customer 0.101665

deposit_given -0.079999

total_nights 0.941005

Name: stays_in_week_nights, dtype: float64
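The correlation output above shows, for example, a correlation of 0.941 between stays_in_week_nights and total_nights. A small helper can list all such strongly correlated pairs at once. The function name high_corr_pairs and the 0.8 threshold are illustrative choices, not part of the original analysis, and the demo below runs on synthetic columns:

```python
import numpy as np
import pandas as pd


def high_corr_pairs(df: pd.DataFrame, threshold: float = 0.8):
    """List (col_a, col_b, |r|) for numeric column pairs with |Pearson r| > threshold."""
    corr = df.select_dtypes("number").corr().abs()
    cols = corr.columns
    pairs = []
    for i in range(len(cols)):
        for j in range(i + 1, len(cols)):  # upper triangle only: each pair once, no diagonal
            r = corr.iloc[i, j]
            if r > threshold:
                pairs.append((cols[i], cols[j], round(float(r), 3)))
    return sorted(pairs, key=lambda p: p[2], reverse=True)


# Synthetic columns: total_nights is derived from the other two, as in the post
rng = np.random.default_rng(0)
week = rng.integers(0, 6, size=200)
weekend = rng.integers(0, 3, size=200)
toy = pd.DataFrame({
    "stays_in_week_nights": week,
    "stays_in_weekend_nights": weekend,
    "total_nights": week + weekend,
    "lead_time": rng.integers(0, 300, size=200),
})
pairs = high_corr_pairs(toy)
print(pairs)
```

On the post's data, high_corr_pairs(cor_data) would be the corresponding call.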

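Drop some columns. total_nights is strongly correlated with stays_in_week_nights (0.941), and arrival_date_week_number is strongly correlated with arrival_date_month (0.995), so these pairs carry largely redundant information: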

cor_data = cor_data.drop(columns = ['total_nights', 'arrival_date_week_number', 'stays_in_weekend_nights', 'arrival_date_month', 'agent'], axis = 1)

Drop the rows where country is missing:

cor_data = cor_data.dropna(subset = ["country"])

cor_data.isnull().sum()

hotel 0

is_canceled 0

lead_time 0

arrival_date_year 0

arrival_date_day_of_month 0

stays_in_week_nights 0

meal 0

country 0

market_segment 0

distribution_channel 0

is_repeated_guest 0

previous_cancellations 0

previous_bookings_not_canceled 0

reserved_room_type 0

assigned_room_type 0

booking_changes 0

days_in_waiting_list 0

customer_type 0

adr 0

required_car_parking_spaces 0

total_of_special_requests 0

reservation_status 0

is_family 0

total_customer 0

deposit_given 0

dtype: int64

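Apply the same steps to data: drop the rows with a missing country, then drop some columns (total_nights is kept here):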

data = data.dropna(subset = ["country"])

data = data.drop(columns = ['arrival_date_week_number', 'stays_in_weekend_nights', 'arrival_date_month', 'agent'], axis = 1)

data.columns

Index(['hotel', 'is_canceled', 'lead_time', 'arrival_date_year','arrival_date_day_of_month', 'stays_in_week_nights', 'meal', 'country','market_segment', 'distribution_channel', 'is_repeated_guest','previous_cancellations', 'previous_bookings_not_canceled','reserved_room_type', 'assigned_room_type', 'booking_changes','days_in_waiting_list', 'customer_type', 'adr','required_car_parking_spaces', 'total_of_special_requests','reservation_status', 'is_family', 'total_customer', 'deposit_given','total_nights'],dtype='object')

df1 = data.copy()

Convert the categorical variables into dummy variables:

#one-hot-encoding

df1 = pd.get_dummies(data = df1, columns = ['meal',

'market_segment', 'distribution_channel',

'reserved_room_type', 'assigned_room_type','customer_type', 'reservation_status'])

df1['country'] = le.fit_transform(df1['country'])

le.fit_transform (the LabelEncoder created above) likewise converts the string column country into integer codes.
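LabelEncoder and get_dummies encode categories differently. LabelEncoder maps each category to a single integer, with the classes sorted alphabetically. get_dummies creates one 0/1 column per category. Here is a small illustration on made-up country codes. The encoder is named enc so that it does not overwrite le.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

countries = pd.Series(["PRT", "GBR", "PRT", "USA"])  # illustrative values

# LabelEncoder: one integer per category, in sorted order of the class names
enc = LabelEncoder()
codes = enc.fit_transform(countries)
print(list(enc.classes_), codes)    # ['GBR', 'PRT', 'USA'] [1 0 1 2]

# get_dummies: one 0/1 column per category
dummies = pd.get_dummies(countries, prefix="country")
print(dummies.columns.tolist())     # ['country_GBR', 'country_PRT', 'country_USA']
```

Label encoding keeps country as a single column, at the cost of imposing an arbitrary numeric order on the countries.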

Decision Tree Model (reservation_status included)

y = df1["is_canceled"]

X = df1.drop(["is_canceled"], axis=1)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.30, random_state = 42)

cart = DecisionTreeClassifier(max_depth = 12)

cart_model = cart.fit(X_train, y_train)

y_pred = cart_model.predict(X_test)

print('Decision Tree Model')

print('Accuracy Score: {}\n\nConfusion Matrix:\n {}\n\nAUC Score: {}'.format(accuracy_score(y_test,y_pred), confusion_matrix(y_test,y_pred), roc_auc_score(y_test,y_pred)))

Decision Tree Model

Accuracy Score: 1.0

Confusion Matrix:
 [[22353 0]
 [ 0 13318]]

AUC Score: 1.0

The accuracy is 100%.

pd.DataFrame(data = cart_model.feature_importances_*100,
             columns = ["Importances"],
             index = X_train.columns).sort_values("Importances", ascending = False)[:20].plot(kind = "barh", color = "r")

plt.xlabel("Feature Importances (%)")

plt.show()

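The correlation analysis already showed that reservation_status is very strongly related to the dependent variable: its correlation with is_canceled is -0.917. Its values (Canceled, Check-Out, No-Show) describe the final outcome of the booking. If it stays in the model, it completely dominates the other variables. This is target leakage, and it is why 100% accuracy is reachable with reservation_status in the data. For the sake of the analysis, reservation_status is dropped and the analysis continues without it.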

Final preparation before comparing the models

df2 = df1.drop(columns = ['reservation_status_Canceled', 'reservation_status_Check-Out', 'reservation_status_No-Show'], axis = 1)

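These three columns are the dummy variables generated from reservation_status. Dropping only one of them, such as reservation_status_Check-Out, is not enough: all three must be removed.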
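Selecting the dummy columns by their prefix avoids typing every category name. It also keeps working if a category happens to be absent from a particular dataset. Here is a sketch on a toy frame with made-up values:

```python
import pandas as pd

toy = pd.DataFrame({
    "is_canceled": [0, 1, 1],
    "lead_time": [10, 200, 35],
    "reservation_status": ["Check-Out", "Canceled", "No-Show"],
})
dummies = pd.get_dummies(toy, columns=["reservation_status"])

# Drop every column generated from reservation_status, whatever its categories are
leaky = [c for c in dummies.columns if c.startswith("reservation_status_")]
clean = dummies.drop(columns=leaky)
print(clean.columns.tolist())  # ['is_canceled', 'lead_time']
```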

y = df2["is_canceled"]

X = df2.drop(["is_canceled"], axis=1)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.30, random_state = 42)

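Define a function that fits a model and prints its accuracy and confusion matrix, and a function that plots the ROC curve: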

def model(algorithm, X_train, X_test, y_train, y_test):
    alg = algorithm
    alg_model = alg.fit(X_train, y_train)
    # keep the predictions in globals so that ROC(y_test, y_prob) can be called afterwards
    global y_prob, y_pred
    y_prob = alg.predict_proba(X_test)[:,1]   # probability of class 1 (is_canceled = 1)
    y_pred = alg_model.predict(X_test)        # hard 0/1 predictions
    print('Accuracy Score: {}\n\nConfusion Matrix:\n {}'.format(accuracy_score(y_test,y_pred), confusion_matrix(y_test,y_pred)))

def ROC(y_test, y_prob):
    false_positive_rate, true_positive_rate, threshold = roc_curve(y_test, y_prob)
    roc_auc = auc(false_positive_rate, true_positive_rate)
    plt.figure(figsize = (10,10))
    plt.title('Receiver Operating Characteristic')
    plt.plot(false_positive_rate, true_positive_rate, color = 'red', label = 'AUC = %0.2f' % roc_auc)
    plt.legend(loc = 'lower right')
    plt.plot([0, 1], [0, 1], linestyle = '--')
    plt.axis('tight')
    plt.ylabel('True Positive Rate')
    plt.xlabel('False Positive Rate')
    plt.show()

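predict_proba in sklearn (note the difference from predict): predict_proba returns one probability per class for each sample. Column 1 ([:,1]) is the probability that is_canceled = 1. predict returns the hard 0/1 label. ROC() needs the probabilities, which model() stores in the global y_prob.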
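Here is a small check of the difference between the two methods, run on synthetic data. The names X_demo, y_demo and clf are illustrative and chosen so they do not overwrite X and y.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

X_demo, y_demo = make_classification(n_samples=200, n_features=5, random_state=0)
clf = LogisticRegression().fit(X_demo, y_demo)

proba = clf.predict_proba(X_demo)   # shape (n_samples, 2): P(class 0), P(class 1)
labels = clf.predict(X_demo)        # hard 0/1 labels

# Each row of predict_proba sums to 1
assert np.allclose(proba.sum(axis=1), 1.0)
# For binary logistic regression, predict() amounts to thresholding P(class 1) at 0.5
print(np.array_equal(labels, (proba[:, 1] > 0.5).astype(int)))  # True
```

roc_curve and roc_auc_score rank samples by their score, so they should receive y_prob rather than y_pred. Passing hard labels, as the earlier decision-tree cell does with roc_auc_score(y_test, y_pred), collapses the curve to a single threshold.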

Model and ROC Curve Comparison

Logistic Regression Model

print('Model: Logistic Regression\n')

model(LogisticRegression(solver = "liblinear"), X_train, X_test, y_train, y_test)

Model: Logistic Regression

Accuracy Score: 0.8038742956463233

Confusion Matrix:
 [[20486 1867]
 [ 5129 8189]]

cross_val_score: k-fold cross-validation (here 8 folds, scored by accuracy) on the full X and y.

LogR = LogisticRegression(solver = "liblinear")

cv_scores = cross_val_score(LogR, X, y, cv = 8, scoring = 'accuracy')

print('Mean Score of CV: ', cv_scores.mean())

Mean Score of CV: 0.7701217519101682
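The 8-fold CV mean (0.770) is lower than the hold-out accuracy (0.804), and the gap is larger for some of the models below. One likely reason, not verified here, is fold construction. With an integer cv, cross_val_score uses StratifiedKFold without shuffling, so each fold is a contiguous block of rows. The file appears to be ordered: the first rows are all Resort Hotel, July 2015. train_test_split, by contrast, shuffles by default. Here is a sketch of shuffled folds on synthetic data (X_demo and y_demo are illustrative names):

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score

X_demo, y_demo = make_classification(n_samples=400, n_features=8, random_state=0)

# shuffle=True randomizes which rows land in each fold; random_state makes the split reproducible
cv = StratifiedKFold(n_splits=8, shuffle=True, random_state=42)
scores = cross_val_score(LogisticRegression(solver="liblinear"), X_demo, y_demo, cv=cv, scoring="accuracy")
print(scores.mean())
```

On the post's data this would be cross_val_score(LogR, X, y, cv=cv, scoring='accuracy').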

ROC(y_test, y_prob)

![ROC curve: Logistic Regression](https://img-blog.csdnimg.cn/20200529113722242.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

Gaussian Naive Bayes Model

print('Model: Gaussian Naive Bayes\n')

model(GaussianNB(), X_train, X_test, y_train, y_test)

Model: Gaussian Naive Bayes

Accuracy Score: 0.586246530795324

Confusion Matrix:
 [[ 9604 12749]
 [ 2010 11308]]

NB = GaussianNB()

cv_scores = cross_val_score(NB, X, y, cv = 8, scoring = 'accuracy')

print('Mean Score of CV: ', cv_scores.mean())

Mean Score of CV: 0.5624280984012298

ROC(y_test, y_prob)

![ROC curve: Gaussian Naive Bayes](https://img-blog.csdnimg.cn/20200529113739647.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

Support Vector Classification Model

print('Model: SVC\n')

# SVC only provides predict_proba when created with probability=True, so this variant of model() stores only the hard predictions
def model1(algorithm, X_train, X_test, y_train, y_test):
    alg = algorithm
    alg_model = alg.fit(X_train, y_train)
    global y_pred
    y_pred = alg_model.predict(X_test)
    print('Accuracy Score: {}\n\nConfusion Matrix:\n {}'.format(accuracy_score(y_test,y_pred), confusion_matrix(y_test,y_pred)))

model1(SVC(kernel = 'linear'), X_train, X_test, y_train, y_test)
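An ROC curve does not strictly need probabilities. roc_curve and roc_auc_score accept any score that ranks the samples, such as SVC.decision_function, the signed distance to the separating hyperplane. Here is a minimal sketch on synthetic data. The variable names are illustrative and chosen so they do not overwrite the post's X_train and X_test.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

X_demo, y_demo = make_classification(n_samples=300, n_features=6, random_state=1)
Xd_train, Xd_test, yd_train, yd_test = train_test_split(X_demo, y_demo, test_size=0.3, random_state=42)

svc = SVC(kernel="linear").fit(Xd_train, yd_train)
# decision_function returns a continuous score per sample; AUC only depends on how these scores rank the samples
svc_scores = svc.decision_function(Xd_test)
svc_auc = roc_auc_score(yd_test, svc_scores)
print(svc_auc)
```

With the post's helper, the same idea would be ROC(y_test, svc.decision_function(X_test)) after fitting an SVC on X_train.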

Decision Tree Model

print('Model: Decision Tree\n')

model(DecisionTreeClassifier(max_depth = 12), X_train, X_test, y_train, y_test)

DTC = DecisionTreeClassifier(max_depth = 12)

cv_scores = cross_val_score(DTC, X, y, cv = 8, scoring = 'accuracy')

print('Mean Score of CV: ', cv_scores.mean())

Mean Score of CV: 0.6725617115938002

ROC(y_test, y_prob)

![ROC curve: Decision Tree](https://img-blog.csdnimg.cn/20200529113812518.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

Random Forest

print('Model: Random Forest\n')

model(RandomForestClassifier(), X_train, X_test, y_train, y_test)

Model: Random Forest

Accuracy Score: 0.8835748927700373

Confusion Matrix:
 [[20946 1407]
 [ 2746 10572]]

RFC = RandomForestClassifier()

cv_scores = cross_val_score(RFC, X, y, cv = 8, scoring = 'accuracy')

print('Mean Score of CV: ', cv_scores.mean())

Mean Score of CV: 0.6697106885103477

ROC(y_test, y_prob)

![ROC curve: Random Forest](https://img-blog.csdnimg.cn/20200529113835974.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

Random Forest Model Tuning

rf_parameters = {"max_depth": [10,13],"n_estimators": [10,100,500],"min_samples_split": [2,5]}

rf_model = RandomForestClassifier()

rf_cv_model = GridSearchCV(rf_model,rf_parameters,cv = 10,n_jobs = -1,verbose = 2)

rf_cv_model.fit(X_train, y_train)

Fitting 10 folds for each of 12 candidates, totalling 120 fits

[Parallel(n_jobs=-1)]: Using backend LokyBackend with 4 concurrent workers.

[Parallel(n_jobs=-1)]: Done 33 tasks | elapsed: 2.5min

[Parallel(n_jobs=-1)]: Done 120 out of 120 | elapsed: 12.5min finished

GridSearchCV(cv=10, error_score=nan,estimator=RandomForestClassifier(bootstrap=True, ccp_alpha=0.0,class_weight=None,criterion='gini', max_depth=None,max_features='auto',max_leaf_nodes=None,max_samples=None,min_impurity_decrease=0.0,min_impurity_split=None,min_samples_leaf=1,min_samples_split=2,min_weight_fraction_leaf=0.0,n_estimators=100, n_jobs=None,oob_score=False,random_state=None, verbose=0,warm_start=False),iid='deprecated', n_jobs=-1,param_grid={'max_depth': [10, 13], 'min_samples_split': [2, 5],'n_estimators': [10, 100, 500]},pre_dispatch='2*n_jobs', refit=True, return_train_score=False,scoring=None, verbose=2)
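The line "Fitting 10 folds for each of 12 candidates, totalling 120 fits" is the number of parameter combinations multiplied by the number of folds. sklearn's ParameterGrid can confirm the count before a long search is launched. The grid below copies rf_parameters into a separately named dict:

```python
from sklearn.model_selection import ParameterGrid

grid = {"max_depth": [10, 13], "n_estimators": [10, 100, 500], "min_samples_split": [2, 5]}

n_candidates = len(ParameterGrid(grid))  # 2 * 3 * 2 combinations
n_fits = n_candidates * 10               # cv = 10 folds per candidate
print(n_candidates, n_fits)  # 12 120
```

The same arithmetic gives 4 × 2 × 2 × 2 = 32 candidates (320 fits) for the neural-network grid later in the post, which helps explain its much longer run time. With refit=True, the default, GridSearchCV also performs one extra fit of the best candidate on the whole training set.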

print('Best parameters: ' + str(rf_cv_model.best_params_))

Best parameters: {'max_depth': 13, 'min_samples_split': 2, 'n_estimators': 500}

rf_tuned = RandomForestClassifier(max_depth = 13,min_samples_split = 2,n_estimators = 500)

print('Model: Random Forest Tuned\n')

model(rf_tuned, X_train, X_test, y_train, y_test)

Model: Random Forest Tuned

Accuracy Score: 0.8515320568529057

Confusion Matrix:
 [[21151 1202]
 [ 4094 9224]]

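The tuned model's accuracy score is worse than the default model's. In the default model the maximum depth is unlimited (max_depth=None). Allowing deeper trees gave a better accuracy score here, but it may reduce generalization.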
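The grid above never tried max_depth=None, the default, so GridSearchCV could only pick the deepest value it was offered, 13. Adding None to rf_parameters['max_depth'] would let the search compare the unrestricted trees directly. To see how depth trades training fit against test accuracy, compare both scores for a few depths. The sketch below is minimal and runs on synthetic data from make_classification, so the numbers it prints are not comparable with the results in this post. The variable names are illustrative.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

X_demo, y_demo = make_classification(n_samples=1000, n_features=10, random_state=0)
Xd_train, Xd_test, yd_train, yd_test = train_test_split(X_demo, y_demo, test_size=0.3, random_state=42)

results = {}
for depth in [5, 13, None]:  # None = grow each tree until its leaves are pure (the default)
    rf = RandomForestClassifier(max_depth=depth, n_estimators=100, random_state=0).fit(Xd_train, yd_train)
    results[depth] = (rf.score(Xd_train, yd_train), rf.score(Xd_test, yd_test))
    # a large gap between train and test accuracy points to overfitting
    print(depth, "train=%.3f test=%.3f" % results[depth])
```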

XGBoost Model

print('Model: XGBoost\n')

model(XGBClassifier(), X_train, X_test, y_train, y_test)

Model: XGBoost

Accuracy Score: 0.8696980740657677

Confusion Matrix:
 [[20570 1783]
 [ 2865 10453]]

XGB = XGBClassifier()

cv_scores = cross_val_score(XGB, X, y, cv = 8, scoring = 'accuracy')

print('Mean Score of CV: ', cv_scores.mean())

Mean Score of CV: 0.651031688035794

ROC(y_test, y_prob)

![ROC curve: XGBoost](https://img-blog.csdnimg.cn/20200529113941814.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

Neural Network Model

scaler = StandardScaler()

scaler.fit(X_train)

X_train_scaled = scaler.transform(X_train)

X_test_scaled = scaler.transform(X_test)
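The scaler above is fitted on X_train only, which is correct for the hold-out test. The later grid search, however, splits the already-scaled X_train_scaled into CV folds, so every validation fold has contributed to the scaling statistics. A Pipeline avoids this by refitting the scaler inside each fold. Below is a minimal sketch on synthetic data, with a small network (hidden_layer_sizes=(20,)) chosen only to keep it fast:

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X_demo, y_demo = make_classification(n_samples=300, n_features=8, random_state=0)

# The scaler is refitted on the training part of every fold, so the validation fold never influences it
pipe = make_pipeline(StandardScaler(), MLPClassifier(hidden_layer_sizes=(20,), max_iter=500, random_state=0))
pipe_scores = cross_val_score(pipe, X_demo, y_demo, cv=5)
print(pipe_scores.mean())
```

To tune such a pipeline with GridSearchCV, prefix each parameter with its step name, for example {'mlpclassifier__alpha': [0.1, 0.01]}. make_pipeline names each step after its lowercased class name.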

print('Model: Neural Network\n')

model(MLPClassifier(), X_train_scaled, X_test_scaled, y_train, y_test)

Model: Neural Network

Accuracy Score: 0.8486445572033304

Confusion Matrix:
 [[20212 2141]
 [ 3258 10060]]

ROC(y_test, y_prob)

![ROC curve: Neural Network](https://img-blog.csdnimg.cn/20200529114010999.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

Neural Network Model Tuning

mlpc_parameters = {"alpha": [1, 0.1, 0.01, 0.001],"hidden_layer_sizes": [(50,50,50),(100,100)],"solver": ["adam", "sgd"],"activation": ["logistic", "relu"]}

mlpc = MLPClassifier()

mlpc_cv_model = GridSearchCV(mlpc, mlpc_parameters, cv = 10, n_jobs = -1, verbose = 2)

mlpc_cv_model.fit(X_train_scaled, y_train)

Fitting 10 folds for each of 32 candidates, totalling 320 fits

[Parallel(n_jobs=-1)]: Using backend LokyBackend with 4 concurrent workers.

[Parallel(n_jobs=-1)]: Done 33 tasks | elapsed: 13.5min

[Parallel(n_jobs=-1)]: Done 154 tasks | elapsed: 123.4min

[Parallel(n_jobs=-1)]: Done 320 out of 320 | elapsed: 290.8min finished

GridSearchCV(cv=10, error_score=nan, estimator=MLPClassifier(activation='relu', alpha=0.0001, batch_size='auto', beta_1=0.9, beta_2=0.999, early_stopping=False, epsilon=1e-08, hidden_layer_sizes=(100,), learning_rate='constant', learning_rate_init=0.001, max_fun=15000, max_iter=200, momentum=0.9, n_iter_no_change=10, nesterovs_momentum=True, power_t=0.5, random_state=None, shuffle=True, solver='adam', tol=0.0001, validation_fraction=0.1, verbose=False, warm_start=False), iid='deprecated', n_jobs=-1, param_grid={'activation': ['logistic', 'relu'], 'alpha': [1, 0.1, 0.01, 0.001], 'hidden_layer_sizes': [(50, 50, 50), (100, 100)], 'solver': ['adam', 'sgd']}, pre_dispatch='2*n_jobs', refit=True, return_train_score=False, scoring=None, verbose=2)

print('Best parameters: ' + str(mlpc_cv_model.best_params_))

Best parameters: {'activation': 'relu', 'alpha': 0.1, 'hidden_layer_sizes': (100, 100), 'solver': 'adam'}
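In the search above, X_train_scaled came from a scaler fit on all of X_train. As a result, in each of the 10 folds the validation rows have already influenced the scaling statistics. The effect is usually small. Wrapping the scaler and the network in a Pipeline avoids it, because the scaler is then refit on each training fold. The sketch below uses synthetic data and a deliberately small grid. The step names "scaler" and "mlp" are our own choice, and grid keys follow the `<step name>__<parameter>` form.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data (assumption), put on a large scale so that scaling matters
X_demo, y_demo = make_classification(n_samples=600, n_features=10, random_state=0)
X_demo = X_demo * 100 + 50
X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, test_size=0.25, random_state=0)

pipe = Pipeline([
    ("scaler", StandardScaler()),                         # refit on each training fold
    ("mlp", MLPClassifier(max_iter=500, random_state=0)),
])

# Parameters of a pipeline step are addressed as <step name>__<parameter>
param_grid = {
    "mlp__alpha": [0.1, 0.001],
    "mlp__hidden_layer_sizes": [(20,), (20, 20)],
}

search = GridSearchCV(pipe, param_grid, cv=3, n_jobs=1)
search.fit(X_tr, y_tr)  # pass raw features; the pipeline scales them inside each fold

print(search.best_params_)
print("held-out accuracy:", search.score(X_te, y_te))
```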

mlpc_tuned = MLPClassifier(activation = 'relu',alpha = 0.1,hidden_layer_sizes = (100,100),solver = 'adam')

print('Model: Neural Network Tuned\n')

model(mlpc_tuned, X_train_scaled, X_test_scaled, y_train, y_test)

Model: Neural Network Tuned

Accuracy Score: 0.859409604440582

Confusion Matrix:
[[20464  1889]
 [ 3126 10192]]

ROC(y_test, y_prob)

![Tuned Neural Network ROC curve](https://img-blog.csdnimg.cn/20200529114028200.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

Conclusion

Feature Importances

randomf = RandomForestClassifier()

rf_model1 = randomf.fit(X_train, y_train)

pd.DataFrame(data = rf_model1.feature_importances_ * 100, columns = ["Importances"], index = X_train.columns).sort_values("Importances", ascending = False)[:15].plot(kind = "barh", color = "r")

plt.xlabel("Feature Importances (%)")

Text(0.5, 0, 'Feature Importances (%)')

![Random Forest feature importances (top 15)](https://img-blog.csdnimg.cn/20200529114043150.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3FxXzQxMDgxNzE2,size_16,color_FFFFFF,t_70)

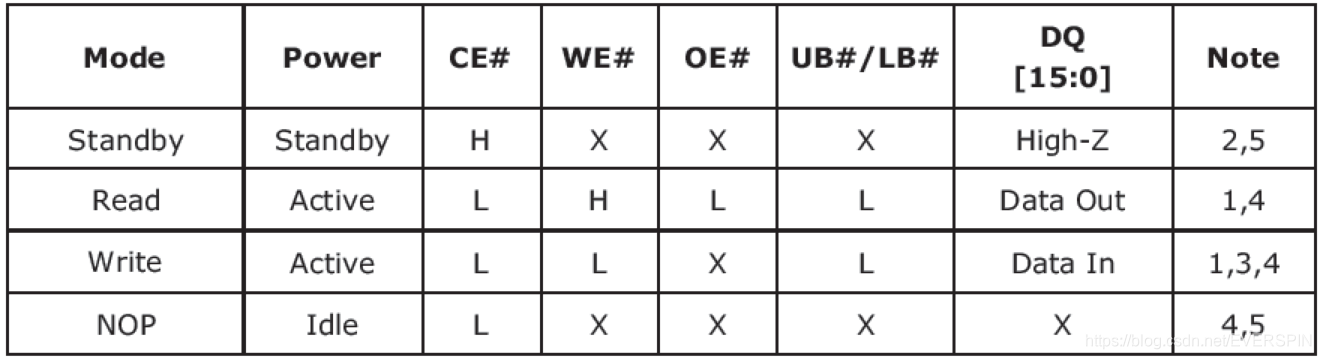
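The plot shows the forest's impurity-based feature_importances_, which are computed from the training data. The scikit-learn documentation notes that they can be biased toward high-cardinality features (features with many unique values). A common cross-check is permutation importance on held-out data: shuffle one column at a time and measure how much the score drops. Below is a sketch with sklearn.inspection.permutation_importance on synthetic data. The column names are made up.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

# Synthetic data: with shuffle=False the first 3 columns are informative, the rest pure noise
X_arr, y_demo = make_classification(n_samples=1500, n_features=8, n_informative=3,
                                    n_redundant=0, n_repeated=0, shuffle=False, random_state=0)
columns = [f"informative_{i}" for i in range(3)] + [f"noise_{i}" for i in range(5)]
X_demo = pd.DataFrame(X_arr, columns=columns)
X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, test_size=0.3, random_state=0)

rf = RandomForestClassifier(n_estimators=100, random_state=0).fit(X_tr, y_tr)

# Mean drop in held-out accuracy when each column is shuffled, over 10 repeats
result = permutation_importance(rf, X_te, y_te, n_repeats=10, random_state=0)
perm = pd.Series(result.importances_mean * 100, index=X_demo.columns).sort_values(ascending=False)
print(perm)  # in percentage points of accuracy, comparable to the "%" axis above
```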

Summary Table of the Models

# np.nan marks models for which no ROC AUC was computed

table = pd.DataFrame({"Model": ["Decision Tree (reservation status included)", "Logistic Regression", "Naive Bayes", "Support Vector", "Decision Tree", "Random Forest", "Random Forest Tuned", "XGBoost", "Neural Network", "Neural Network Tuned"], "Accuracy Scores": [1.0, 0.804, 0.582, 0.794, 0.846, 0.883, 0.851, 0.869, 0.848, 0.859], "ROC AUC": [1.0, 0.88, 0.78, np.nan, 0.92, 0.95, np.nan, 0.94, 0.93, 0.94]})

pd.pivot_table(table, index = ["Model"]).sort_values(by = "Accuracy Scores", ascending = False)

pandas pivot table:

| Model | Accuracy Scores | ROC AUC |
|---|---|---|
| Decision Tree (reservation status included) | 1.000 | 1.00 |
| Random Forest | 0.883 | 0.95 |
| XGBoost | 0.869 | 0.94 |
| Neural Network Tuned | 0.859 | 0.94 |
| Random Forest Tuned | 0.851 | NaN |
| Neural Network | 0.848 | 0.93 |
| Decision Tree | 0.846 | 0.92 |
| Logistic Regression | 0.804 | 0.88 |
| Support Vector | 0.794 | NaN |
| Naive Bayes | 0.582 | 0.78 |
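The Support Vector and Random Forest Tuned rows have no real AUC value in the summary. For the tuned forest, the AUC only requires the positive-class column of predict_proba. For SVC, if the earlier ROC helper relies on predicted probabilities (an assumption, since its definition is not shown in this section), a default SVC() cannot provide them, because predict_proba is only available when the model is constructed with probability=True. roc_auc_score also accepts the continuous scores from decision_function, so an AUC can be computed without refitting. Below is a sketch on synthetic data; the variable names are hypothetical.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic stand-in data (assumption)
X_demo, y_demo = make_classification(n_samples=1000, n_features=10, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, test_size=0.3, random_state=0)

# SVC without probability=True: rank samples by their signed decision_function scores
svc = SVC().fit(X_tr, y_tr)
svc_auc = roc_auc_score(y_te, svc.decision_function(X_te))

# Random forest with the tuned parameters: probability of the positive class (column 1)
rf_tuned_demo = RandomForestClassifier(max_depth=13, min_samples_split=2, n_estimators=500, random_state=0)
rf_tuned_demo.fit(X_tr, y_tr)
rf_auc = roc_auc_score(y_te, rf_tuned_demo.predict_proba(X_te)[:, 1])

print(f"SVC AUC: {svc_auc:.3f}, tuned RF AUC: {rf_auc:.3f}")
```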