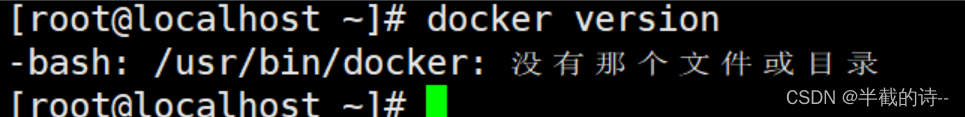

1. Extract the archive

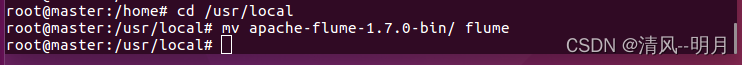

2. Rename the extracted directory

3. Change the permissions


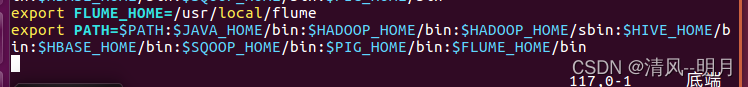
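Steps 1 to 3 can be scripted roughly as below. This is a minimal sketch, not the exact commands from the screenshots. Several details are assumptions: that the archive unpacks into a directory named after the tarball, that step 3 means changing ownership with `chown`, and the archive and user names in the example call. `install_flume` is a hypothetical helper.

```bash
# install_flume TARBALL PARENT_DIR NEW_NAME [OWNER]
# Hypothetical helper covering steps 1-3.
install_flume() {
  local tarball="$1" parent="$2" new_name="$3" owner="${4:-}"
  local top
  # ASSUMPTION: the archive unpacks into a directory named like the
  # tarball minus ".tar.gz" (e.g. apache-flume-1.9.0-bin)
  top=$(basename "$tarball" .tar.gz)

  # Step 1: extract into PARENT_DIR
  tar -xzf "$tarball" -C "$parent" || return 1
  # Step 2: rename to a version-independent name (FLUME_HOME points here)
  mv "$parent/$top" "$parent/$new_name" || return 1
  # Step 3 (ASSUMPTION: "permissions" means ownership): give the tree to OWNER
  if [ -n "$owner" ]; then
    chown -R "$owner" "$parent/$new_name" || return 1
  fi
}
```

Example call, run as root, with a placeholder archive name and user: `install_flume apache-flume-1.9.0-bin.tar.gz /usr/local flume hadoop`.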

4. Edit the environment variables and `source` the file

```bash
export FLUME_HOME=/usr/local/flume
export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin:$HBASE_HOME/bin:$SQOOP_HOME/bin:$PIG_HOME/bin:$FLUME_HOME/bin
```

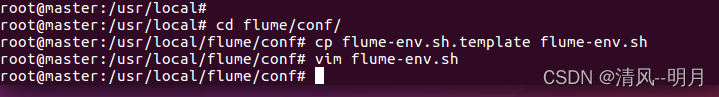
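If you prefer not to edit the profile by hand, the sketch below appends the two settings once and skips them on later runs. The tutorial does not show which file it edits, so the profile path is a parameter (`~/.bashrc` and `/etc/profile` are common choices). `add_flume_env` is a hypothetical helper. It adds a separate `PATH` line instead of rewriting the combined one above; because each line extends the existing `PATH`, Flume ends up on the path either way.

```bash
# add_flume_env PROFILE [FLUME_HOME]
# Hypothetical helper: appends FLUME_HOME and a PATH entry to PROFILE once.
add_flume_env() {
  local profile="$1" home="${2:-/usr/local/flume}"
  # Skip if the profile already exports FLUME_HOME, so re-running is harmless
  if grep -q '^export FLUME_HOME=' "$profile" 2>/dev/null; then
    return 0
  fi
  {
    echo "export FLUME_HOME=$home"
    # Single quotes keep $PATH and $FLUME_HOME unexpanded in the file
    echo 'export PATH=$PATH:$FLUME_HOME/bin'
  } >> "$profile"
}
```

Usage: `add_flume_env ~/.bashrc && . ~/.bashrc`, after which `echo $FLUME_HOME` should print `/usr/local/flume`.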

5. Configure


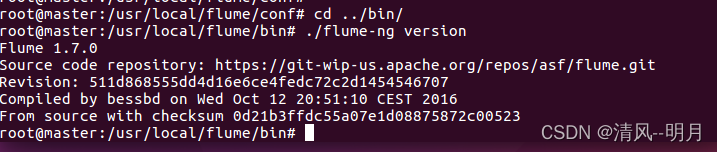
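The screenshot for this step is not reproduced here. A configuration step that Flume installs commonly need is to create `conf/flume-env.sh` from the bundled `flume-env.sh.template` and set `JAVA_HOME` in it. The sketch below assumes that this is what step 5 does. `configure_flume_env` is a hypothetical helper, and the JDK path is whatever your system uses.

```bash
# configure_flume_env FLUME_HOME JAVA_HOME
# ASSUMPTION: step 5 creates conf/flume-env.sh from the template and sets JAVA_HOME.
configure_flume_env() {
  local home="$1" java_home="$2"
  local env_file="$home/conf/flume-env.sh"
  # Copy the template only once, so later manual edits are not overwritten
  if [ ! -f "$env_file" ]; then
    cp "$home/conf/flume-env.sh.template" "$env_file" || return 1
  fi
  # Append JAVA_HOME unless an uncommented export is already present
  if ! grep -q '^export JAVA_HOME=' "$env_file"; then
    echo "export JAVA_HOME=$java_home" >> "$env_file"
  fi
}
```

With the variables from step 4 loaded, a call could look like `configure_flume_env "$FLUME_HOME" "$JAVA_HOME"`.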

6. Check the version

7. Start Hadoop
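Steps 6 and 7 depend on the commands from step 4 actually being on `PATH`. `flume-ng version` prints the installed Flume version. The HDFS sink also needs a running HDFS, which is typically started with `start-dfs.sh`, and `jps` then lists Java processes such as NameNode and DataNode. A minimal preflight sketch follows; `require_cmds` is a hypothetical helper.

```bash
# require_cmds NAME...
# Prints each command that is not on PATH and returns 1 if any is missing.
require_cmds() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example: `require_cmds flume-ng hdfs start-dfs.sh jps && flume-ng version`.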

8. Create the `/simple` directory and create `a2.conf` inside it

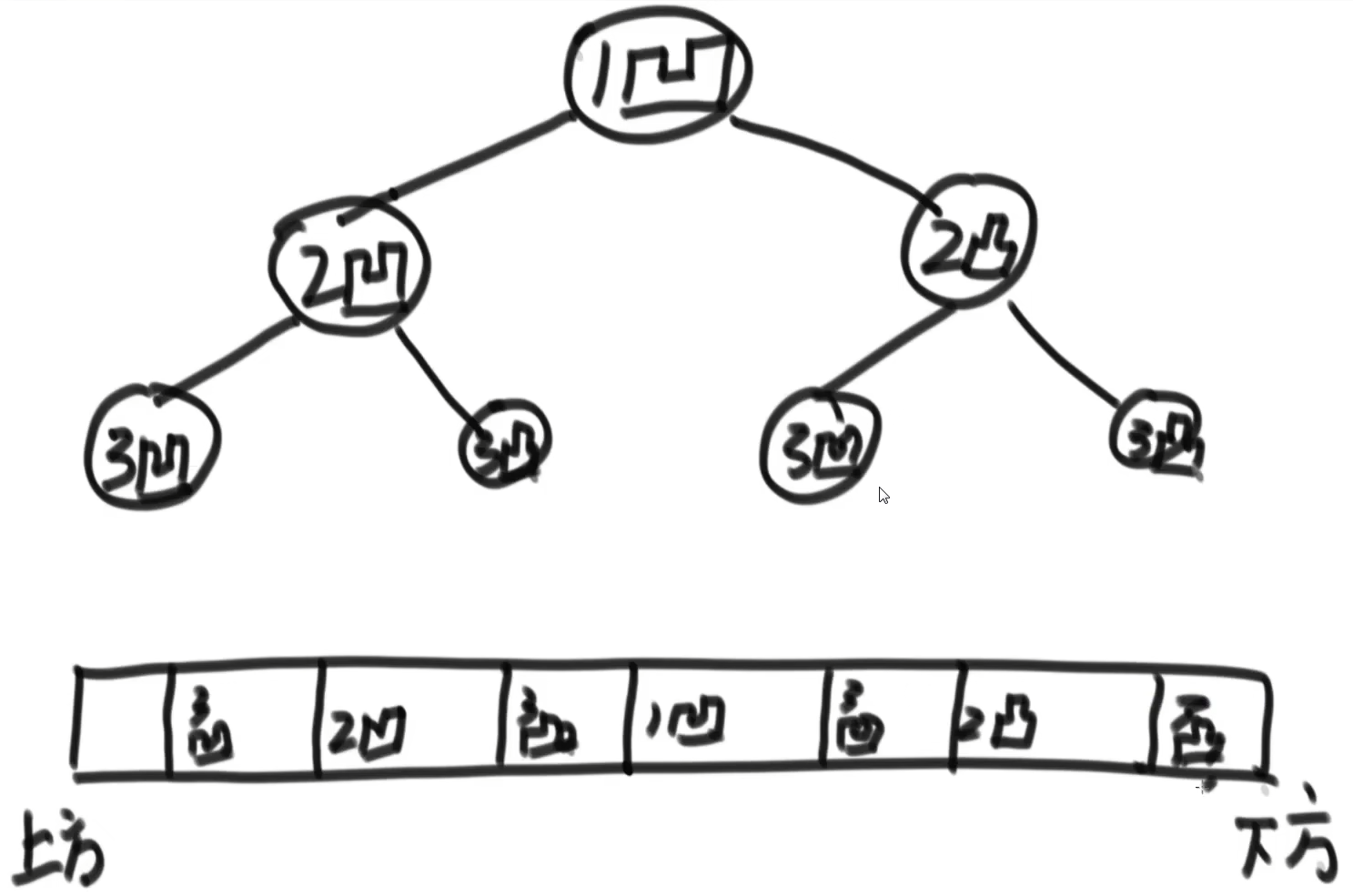

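```properties
# Name the components of agent a2
a2.sources = r1
a2.channels = c1
a2.sinks = k1

# Source r1: run tail -F and turn each new line of data.txt into an event
a2.sources.r1.type = exec
a2.sources.r1.command = tail -F /simple/data.txt

# Channel c1: buffer up to 1000 events in memory, at most 100 per transaction
a2.channels.c1.type = memory
a2.channels.c1.capacity = 1000
a2.channels.c1.transactionCapacity = 100

# Sink k1: write events to HDFS as an uncompressed stream (DataStream)
a2.sinks.k1.type = hdfs
a2.sinks.k1.hdfs.path = hdfs://master:9000/flume/date_hdfs.txt
a2.sinks.k1.hdfs.filePrefix = events-
a2.sinks.k1.hdfs.fileType = DataStream

# Connect the source and the sink to the channel
a2.sources.r1.channels = c1
a2.sinks.k1.channel = c1
```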

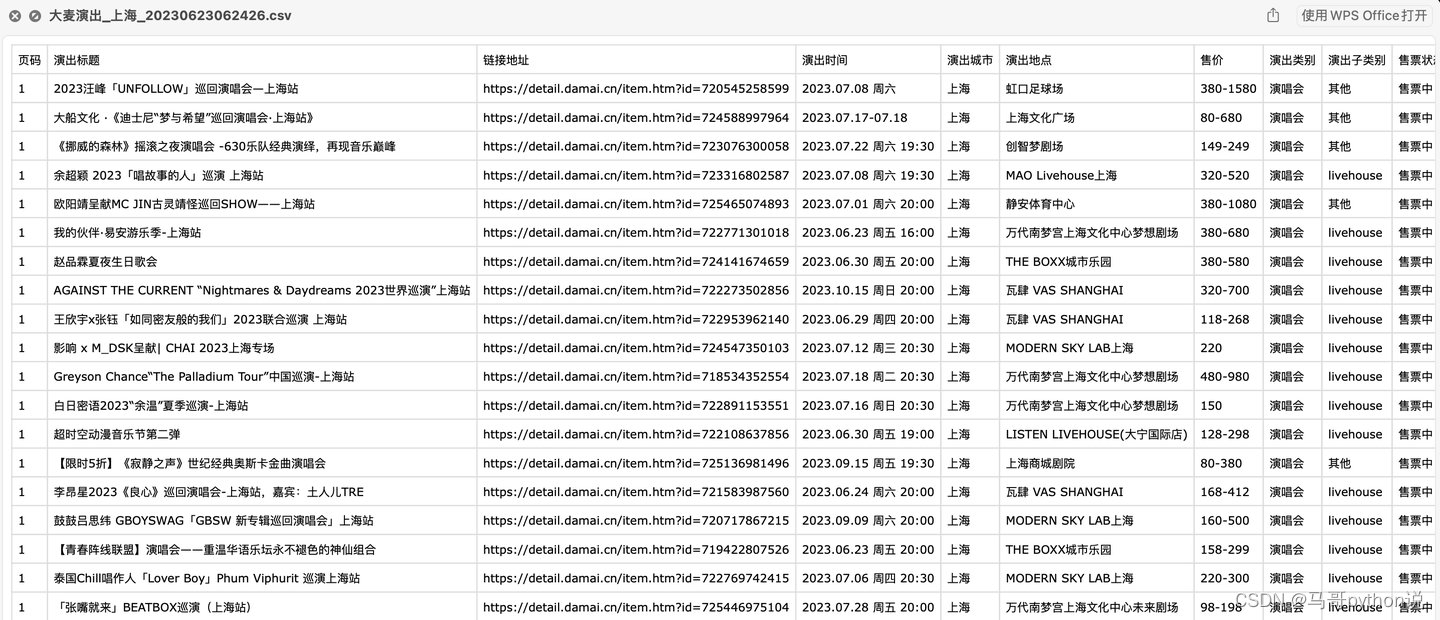
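One easy mistake in a file like this is referencing a channel name that was never declared. The hypothetical `check_flume_wiring` helper below is not part of Flume. It is a small awk sketch that checks only one thing: every channel named in a source's `.channels` property or a sink's `.channel` property must be listed in `<agent>.channels`.

```bash
# check_flume_wiring CONF AGENT
# Hypothetical helper: prints undeclared channel references, returns 1 if any.
check_flume_wiring() {
  local conf="$1" agent="$2"
  awk -v a="$agent" '
    # Skip comments and lines without "="
    /^[ \t]*#/ || index($0, "=") == 0 { next }
    # Split "key = value" at the first "=" and trim both sides
    {
      eq = index($0, "=")
      key = substr($0, 1, eq - 1)
      val = substr($0, eq + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", key)
      gsub(/^[ \t]+|[ \t]+$/, "", val)
    }
    # "a2.channels = c1" declares channel names
    key == (a ".channels") {
      n = split(val, names, /[ \t]+/)
      for (i = 1; i <= n; i++) declared[names[i]] = 1
    }
    # "a2.sources.r1.channels = c1" and "a2.sinks.k1.channel = c1" use them
    (index(key, a ".sources.") == 1 && key ~ /\.channels$/) ||
    (index(key, a ".sinks.") == 1 && key ~ /\.channel$/) {
      n = split(val, names, /[ \t]+/)
      for (i = 1; i <= n; i++) used[names[i]] = key
    }
    END {
      bad = 0
      for (c in used)
        if (!(c in declared)) { print "undeclared channel " c " in " used[c]; bad = 1 }
      exit bad
    }
  ' "$conf"
}
```

For the configuration above, `check_flume_wiring /simple/a2.conf a2` should print nothing and return 0.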

Contents of `/simple/data.txt`:

```text
(a,1,2,3,4.2,9.8)
(a,3,0,5,3.5,2.1)
(b,7,9,9,-,-)
(a,7,9,9,2.6,6.2)
(a,1,2,5,7.7,5.9)
(a,1,2,3,1.4,0.2)
```

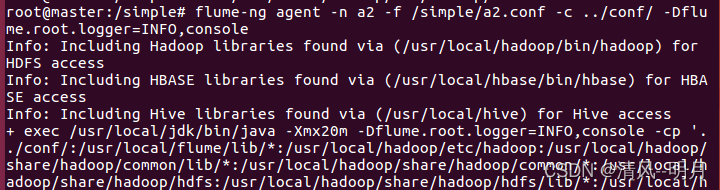
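The step 8 directory and the sample data can also be created from the shell. The sketch writes exactly the six records listed above. `make_sample_data` is a hypothetical helper, and creating `/simple` at the filesystem root usually needs root. Note that `tail -F` first prints the last 10 lines of the file, so all six existing records are sent when the agent starts. Lines appended later are picked up as they are written.

```bash
# make_sample_data [DIR]
# Hypothetical helper: creates DIR (default /simple) and writes DIR/data.txt.
make_sample_data() {
  local dir="${1:-/simple}"
  mkdir -p "$dir" || return 1
  cat > "$dir/data.txt" <<'EOF'
(a,1,2,3,4.2,9.8)
(a,3,0,5,3.5,2.1)
(b,7,9,9,-,-)
(a,7,9,9,2.6,6.2)
(a,1,2,5,7.7,5.9)
(a,1,2,3,1.4,0.2)
EOF
}
```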

9. Start Flume

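```bash
# -c ../conf/ is resolved against the current directory; from $FLUME_HOME/bin it points to $FLUME_HOME/conf
flume-ng agent -n a2 -f /simple/a2.conf -c ../conf/ -Dflume.root.logger=INFO,console
```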

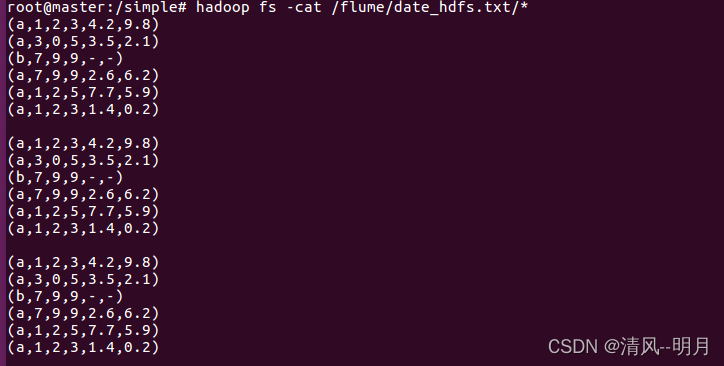

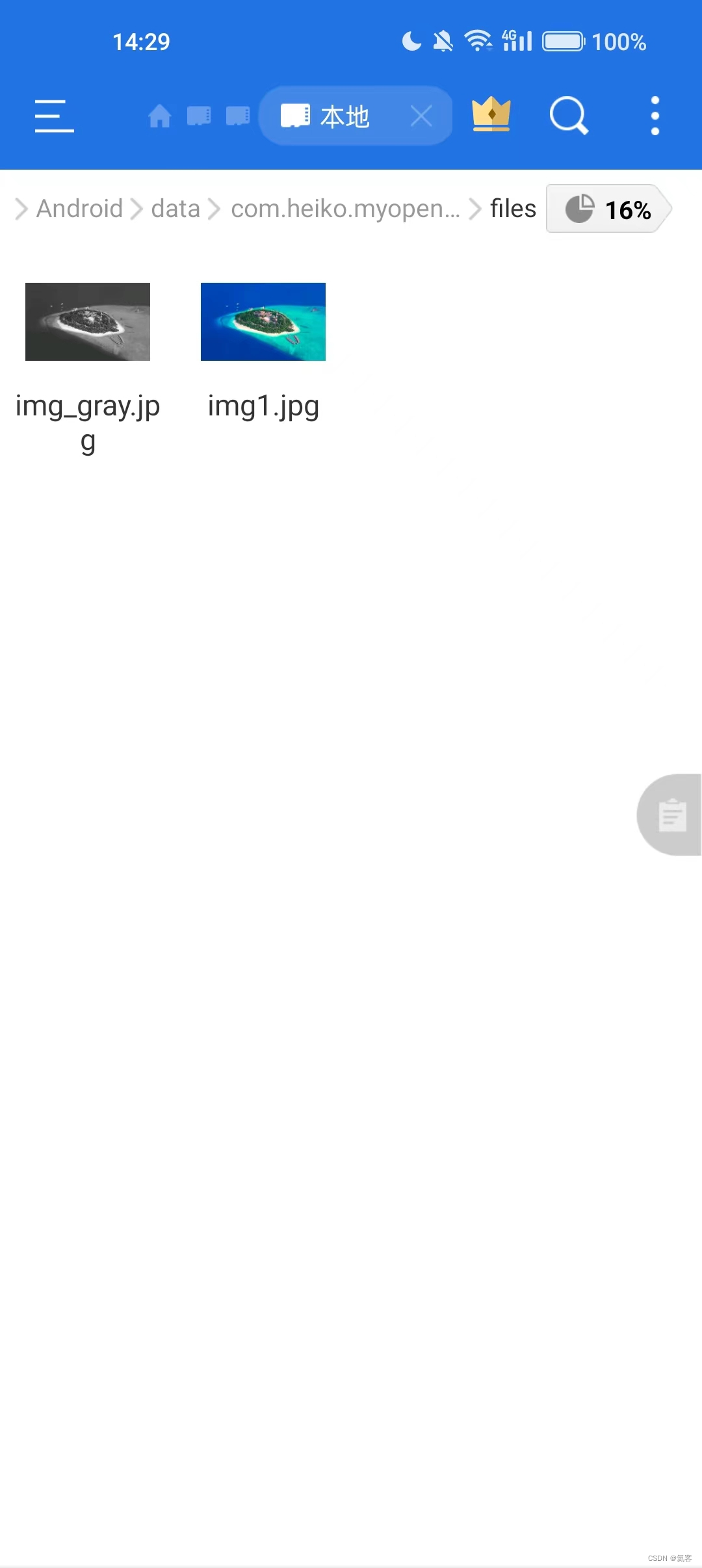
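The sketch below wraps the step 9 command with one sanity check. The `-n` value must match the property prefix in the file (`a2` in `a2.conf`); otherwise the agent starts with no sources, channels or sinks. The wrapper also passes an absolute `-c` directory (defaulting to `$FLUME_HOME/conf`) instead of `../conf/`, so it does not depend on the current directory. `start_agent` and its `DRY_RUN` switch are hypothetical.

```bash
# start_agent NAME CONF [FLUME_CONF_DIR]
# Hypothetical wrapper around the step 9 command; DRY_RUN=1 only prints it.
start_agent() {
  local name="$1" conf="$2" confdir="${3:-${FLUME_HOME:-/usr/local/flume}/conf}"
  local run=
  # -n must match the property prefix, e.g. "a2.sources = r1" for agent a2
  if ! grep -q "^${name}\.sources[[:space:]]*=" "$conf"; then
    echo "no ${name}.sources line in $conf" >&2
    return 1
  fi
  # With DRY_RUN=1, prefix the command with echo instead of executing it
  if [ "${DRY_RUN:-0}" = 1 ]; then
    run=echo
  fi
  $run flume-ng agent -n "$name" -f "$conf" -c "$confdir" \
    -Dflume.root.logger=INFO,console
}
```

Usage: `start_agent a2 /simple/a2.conf` runs the agent in the foreground, and `DRY_RUN=1 start_agent a2 /simple/a2.conf` only shows the command it would run.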

10. Check the result
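Checking the result most likely means looking at what arrived in HDFS. The HDFS sink treats `hdfs.path` as a directory, so `/flume/date_hdfs.txt` is created as a directory holding files whose names start with the `events-` prefix. A file that is still open usually carries a `.tmp` suffix. This is a minimal sketch, assuming `fs.defaultFS` points at `hdfs://master:9000`. `show_flume_output` and the `HDFS` override are hypothetical.

```bash
# show_flume_output [HDFS_DIR]
# Hypothetical helper: lists the sink's output directory and prints its files.
# Set HDFS to another command (e.g. a stub) to override "hdfs".
show_flume_output() {
  local dir="${1:-/flume/date_hdfs.txt}" hdfs="${HDFS:-hdfs}"
  "$hdfs" dfs -ls "$dir" || return 1
  # Quoted so that HDFS, not the local shell, expands the wildcard
  "$hdfs" dfs -cat "$dir/events-*"
}
```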