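Table of Contents

- I Project Overview
- II Installation and Demo
  - 1 Installation
  - 2 Demo
- III Source Code Analysis
  - 1 Project Structure
  - 2 Main Program Source Code Analysis
- IV Adding a Custom Site
- Conclusion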

I Project Overview

II Installation and Demo

1 Installation

# clone the repo

$ git clone https://github.com/sherlock-project/sherlock.git

# change the working directory to sherlock

$ cd sherlock

# install the requirements

$ python3 -m pip install -r requirements.txt

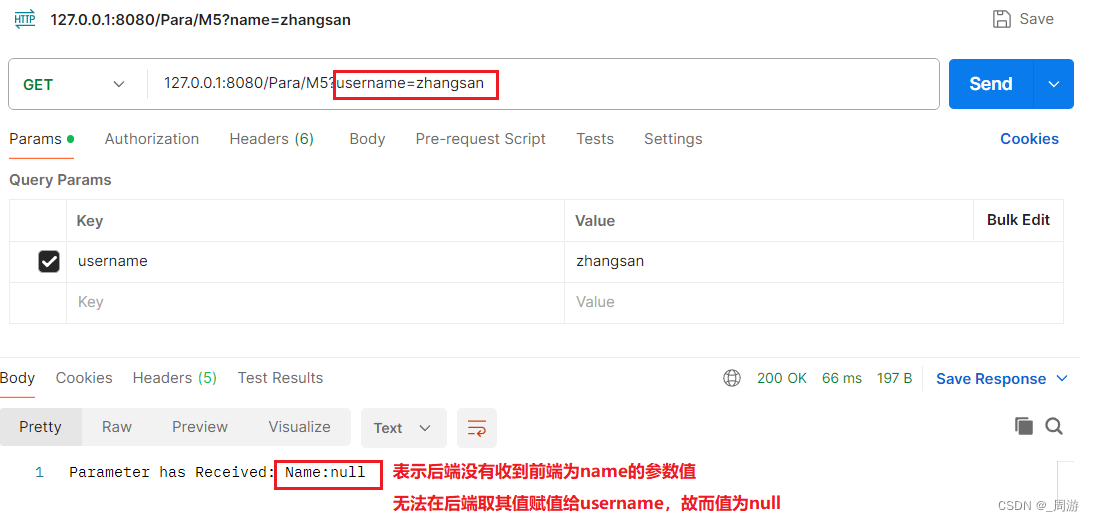

2 Demo

python3 sherlock user123

- Result:

[*] Checking username user123 on:

[+] 7Cups: https://www.7cups.com/@user123

[+] 8tracks: https://8tracks.com/user123

[+] Air Pilot Life: https://airlinepilot.life/u/user123

[+] Airliners: https://www.airliners.net/user/user123/profile/photos

[+] Anilist: https://anilist.co/user/user123/

[+] Apple Developer: https://developer.apple.com/forums/profile/user123

[+] Apple Discussions: https://discussions.apple.com/profile/user123

[+] BLIP.fm: https://blip.fm/user123

[+] Bikemap: https://www.bikemap.net/en/u/user123/routes/created/

[+] Bitwarden Forum: https://community.bitwarden.com/u/user123/summary

[+] BodyBuilding: https://bodyspace.bodybuilding.com/user123

[+] Bookcrossing: https://www.bookcrossing.com/mybookshelf/user123/

[+] BuyMeACoffee: https://buymeacoff.ee/user123

[+] CGTrader: https://www.cgtrader.com/user123

[+] Career.habr: https://career.habr.com/user123

[+] Championat: https://www.championat.com/user/user123

[+] Chess: https://www.chess.com/member/user123

[+] Code Snippet Wiki: https://codesnippets.fandom.com/wiki/User:user123

[+] Codecademy: https://www.codecademy.com/profiles/user123

[+] Codeforces: https://codeforces.com/profile/user123

[+] Coders Rank: https://profile.codersrank.io/user/user123/

[+] Codewars: https://www.codewars.com/users/user123

Process finished with exit code 0

III Source Code Analysis

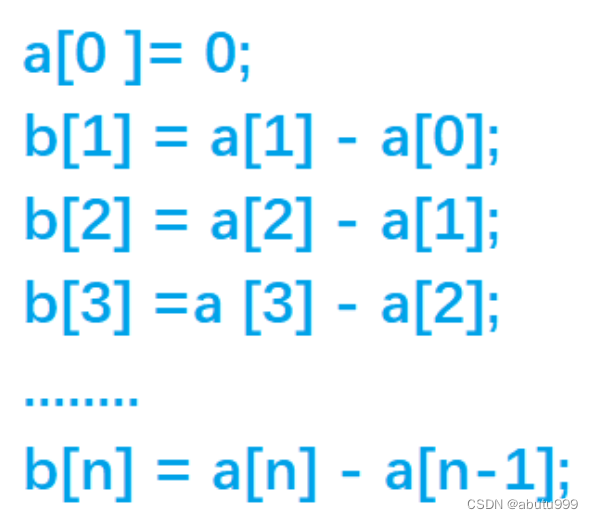

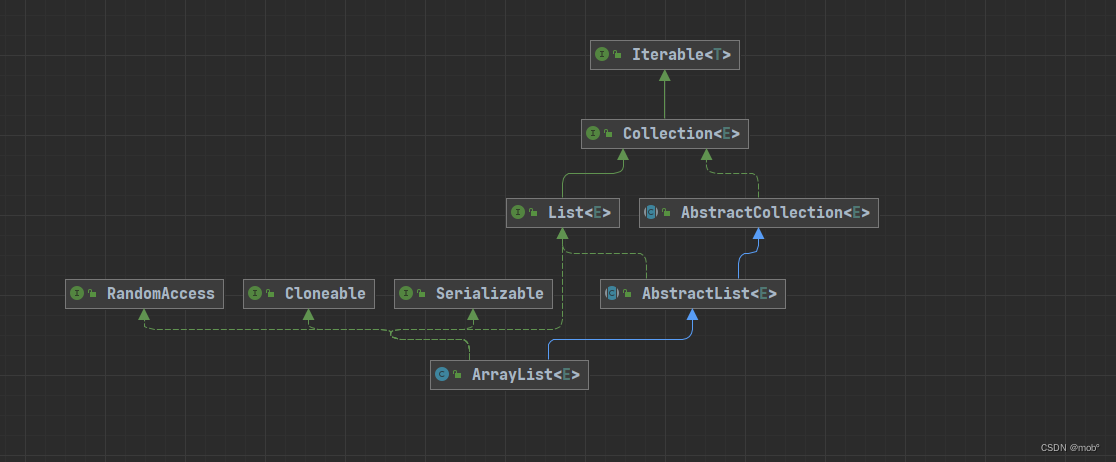

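1 Project Structure

- sherlock
  - resources
    - data.json: social media site information
      - key: site name (short name)
      - value
        - errorMsg: error message
        - errorType: error type
        - url: profile URL
        - urlMain: main URL (domain) of the site
        - username_claimed: a username known to be registered on the site
        - regexCheck: regular expression used to validate the username
        - request_method: request method
        - request_payload: request parameters
        - urlProbe: probe URL, requested instead of url when present
        - isNSFW: whether the site is NSFW (such sites are skipped unless --nsfw is given)
  - tests: tests
  - notify.py: notification object; prints messages at stages such as program start and request completion
  - result.py: result object; represents the outcome of a query
  - sherlock.py: main program; resolves URLs, assembles parameters, sends requests, parses responses
  - sites.py: site information object; parses the site data in data.json and wraps it as site information objects
- removed_sites.json: sites that have been removed
- requirements.txt: dependency list
- site_list.py: updates the sites.md document from data.json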
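To make the role of these fields more concrete, here is a minimal sketch of how one entry could be read and used before any request is sent. The `ExampleSite` entry and the helper names `username_allowed` and `default_method` are invented for illustration; the two rules themselves (skip the site when `regexCheck` does not match, and default to HEAD for `status_code` detection when no `request_method` is set) follow the sherlock.py source analysed in this article.

```python
import json
import re

# Hypothetical data.json fragment; the site name and values are invented.
SAMPLE = """
{
  "ExampleSite": {
    "errorType": "status_code",
    "regexCheck": "^[a-zA-Z0-9_]{3,20}$",
    "url": "https://example.com/u/{}",
    "urlMain": "https://example.com/",
    "username_claimed": "blue"
  }
}
"""


def username_allowed(site, username):
    """Return False when regexCheck exists and the username does not match it."""
    pattern = site.get("regexCheck")
    return not (pattern and re.search(pattern, username) is None)


def default_method(site):
    """HEAD is enough for status_code detection; other types need the body."""
    return "HEAD" if site["errorType"] == "status_code" else "GET"


sites = json.loads(SAMPLE)
site = sites["ExampleSite"]
print(username_allowed(site, "user123"))
print(username_allowed(site, "a b"))
print(default_method(site))
```

A username that fails `regexCheck` is marked as illegal for that site and no request is made at all, which saves a network round trip.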

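Notes:

- In the url field, {} is a placeholder that is later replaced by the username entered by the user.

- request_method and request_payload are generally used for non-GET requests.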
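The placeholder rule can be tried out in isolation. The function below mirrors the `interpolate_string` helper from sherlock.py; the `entry` dictionary is a made-up example and does not come from data.json.

```python
def interpolate_string(input_object, username):
    """Recursively replace every "{}" placeholder with the username."""
    if isinstance(input_object, str):
        return input_object.replace("{}", username)
    if isinstance(input_object, dict):
        return {k: interpolate_string(v, username) for k, v in input_object.items()}
    if isinstance(input_object, list):
        return [interpolate_string(i, username) for i in input_object]
    # Numbers, booleans and None are returned unchanged.
    return input_object


# Hypothetical site entry, for illustration only.
entry = {
    "url": "https://example.com/users/{}",
    "request_method": "POST",
    "request_payload": {"query": "{}", "fields": ["{}", "id"], "limit": 1},
}

profile_url = interpolate_string(entry["url"], "user123")
payload = interpolate_string(entry["request_payload"], "user123")
print(profile_url)
print(payload)
```

Because the replacement is recursive, the same placeholder works in nested payload dictionaries and lists, not only in the URL string.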

2 Main Program Source Code Analysis

The source code of sherlock.py is shown below:

#! /usr/bin/env python3

"""
Sherlock: Find Usernames Across Social Networks Module

This module contains the main logic to search for usernames at social
networks.
"""

import csv
import signal
import pandas as pd
import os
import platform
import re
import sys
from argparse import ArgumentParser, RawDescriptionHelpFormatter
from time import monotonic

import requests

from requests_futures.sessions import FuturesSession
from torrequest import TorRequest
from result import QueryStatus
from result import QueryResult
from notify import QueryNotifyPrint
from sites import SitesInformation
from colorama import init
from argparse import ArgumentTypeError

module_name = "Sherlock: Find Usernames Across Social Networks"
__version__ = "0.14.3"


class SherlockFuturesSession(FuturesSession):
    def request(self, method, url, hooks=None, *args, **kwargs):
        """Request URL.

        This extends the FuturesSession request method to calculate a response
        time metric to each request.

        It is taken (almost) directly from the following Stack Overflow answer:
        https://github.com/ross/requests-futures#working-in-the-background

        Keyword Arguments:
        self                   -- This object.
        method                 -- String containing method desired for request.
        url                    -- String containing URL for request.
        hooks                  -- Dictionary containing hooks to execute after
                                  request finishes.
        args                   -- Arguments.
        kwargs                 -- Keyword arguments.

        Return Value:
        Request object.
        """
        # Record the start time for the request.
        if hooks is None:
            hooks = {}
        start = monotonic()

        def response_time(resp, *args, **kwargs):
            """Response Time Hook.

            Keyword Arguments:
            resp                   -- Response object.
            args                   -- Arguments.
            kwargs                 -- Keyword arguments.

            Return Value:
            Nothing.
            """
            resp.elapsed = monotonic() - start

            return

        # Install hook to execute when response completes.
        # Make sure that the time measurement hook is first, so we will not
        # track any later hook's execution time.
        try:
            if isinstance(hooks["response"], list):
                hooks["response"].insert(0, response_time)
            elif isinstance(hooks["response"], tuple):
                # Convert tuple to list and insert time measurement hook first.
                hooks["response"] = list(hooks["response"])
                hooks["response"].insert(0, response_time)
            else:
                # Must have previously contained a single hook function,
                # so convert to list.
                hooks["response"] = [response_time, hooks["response"]]
        except KeyError:
            # No response hook was already defined, so install it ourselves.
            hooks["response"] = [response_time]

        return super(SherlockFuturesSession, self).request(
            method, url, hooks=hooks, *args, **kwargs
        )


def get_response(request_future, error_type, social_network):
    # Default for Response object if some failure occurs.
    response = None

    error_context = "General Unknown Error"
    exception_text = None
    try:
        response = request_future.result()
        if response.status_code:
            # Status code exists in response object
            error_context = None
    except requests.exceptions.HTTPError as errh:
        error_context = "HTTP Error"
        exception_text = str(errh)
    except requests.exceptions.ProxyError as errp:
        error_context = "Proxy Error"
        exception_text = str(errp)
    except requests.exceptions.ConnectionError as errc:
        error_context = "Error Connecting"
        exception_text = str(errc)
    except requests.exceptions.Timeout as errt:
        error_context = "Timeout Error"
        exception_text = str(errt)
    except requests.exceptions.RequestException as err:
        error_context = "Unknown Error"
        exception_text = str(err)

    return response, error_context, exception_text


def interpolate_string(input_object, username):
    if isinstance(input_object, str):
        return input_object.replace("{}", username)
    elif isinstance(input_object, dict):
        return {k: interpolate_string(v, username) for k, v in input_object.items()}
    elif isinstance(input_object, list):
        return [interpolate_string(i, username) for i in input_object]
    return input_object


def check_for_parameter(username):
    """checks if {?} exists in the username
    if exist it means that sherlock is looking for more multiple username"""
    return "{?}" in username


checksymbols = []
checksymbols = ["_", "-", "."]


def multiple_usernames(username):
    """replace the parameter with with symbols and return a list of usernames"""
    allUsernames = []
    for i in checksymbols:
        allUsernames.append(username.replace("{?}", i))
    return allUsernames


def sherlock(
    username,
    site_data,
    query_notify,
    tor=False,
    unique_tor=False,
    proxy=None,
    timeout=60,
):
    """Run Sherlock Analysis.

    Checks for existence of username on various social media sites.

    Keyword Arguments:
    username               -- String indicating username that report
                              should be created against.
    site_data              -- Dictionary containing all of the site data.
    query_notify           -- Object with base type of QueryNotify().
                              This will be used to notify the caller about
                              query results.
    tor                    -- Boolean indicating whether to use a tor circuit for the requests.
    unique_tor             -- Boolean indicating whether to use a new tor circuit for each request.
    proxy                  -- String indicating the proxy URL
    timeout                -- Time in seconds to wait before timing out request.
                              Default is 60 seconds.

    Return Value:
    Dictionary containing results from report. Key of dictionary is the name
    of the social network site, and the value is another dictionary with
    the following keys:
        url_main:      URL of main site.
        url_user:      URL of user on site (if account exists).
        status:        QueryResult() object indicating results of test for
                       account existence.
        http_status:   HTTP status code of query which checked for existence on
                       site.
        response_text: Text that came back from request. May be None if
                       there was an HTTP error when checking for existence.
    """

    # Notify caller that we are starting the query.
    query_notify.start(username)
    # Create session based on request methodology
    if tor or unique_tor:
        # Requests using Tor obfuscation
        underlying_request = TorRequest()
        underlying_session = underlying_request.session
    else:
        # Normal requests
        underlying_session = requests.session()
        underlying_request = requests.Request()

    # Limit number of workers to 20.
    # This is probably vastly overkill.
    if len(site_data) >= 20:
        max_workers = 20
    else:
        max_workers = len(site_data)

    # Create multi-threaded session for all requests.
    session = SherlockFuturesSession(
        max_workers=max_workers, session=underlying_session
    )

    # Results from analysis of all sites
    results_total = {}

    # First create futures for all requests. This allows for the requests to run in parallel
    for social_network, net_info in site_data.items():
        # Results from analysis of this specific site
        results_site = {"url_main": net_info.get("urlMain")}

        # Record URL of main site

        # A user agent is needed because some sites don't return the correct
        # information since they think that we are bots (Which we actually are...)
        headers = {
            "User-Agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.12; rv:55.0) Gecko/20100101 Firefox/55.0",
        }

        if "headers" in net_info:
            # Override/append any extra headers required by a given site.
            headers.update(net_info["headers"])

        # URL of user on site (if it exists)
        url = interpolate_string(net_info["url"], username)

        # Don't make request if username is invalid for the site
        regex_check = net_info.get("regexCheck")
        if regex_check and re.search(regex_check, username) is None:
            # No need to do the check at the site: this username is not allowed.
            results_site["status"] = QueryResult(
                username, social_network, url, QueryStatus.ILLEGAL
            )
            results_site["url_user"] = ""
            results_site["http_status"] = ""
            results_site["response_text"] = ""
            query_notify.update(results_site["status"])
        else:
            # URL of user on site (if it exists)
            results_site["url_user"] = url
            url_probe = net_info.get("urlProbe")
            request_method = net_info.get("request_method")
            request_payload = net_info.get("request_payload")
            request = None

            if request_method is not None:
                if request_method == "GET":
                    request = session.get
                elif request_method == "HEAD":
                    request = session.head
                elif request_method == "POST":
                    request = session.post
                elif request_method == "PUT":
                    request = session.put
                else:
                    raise RuntimeError(f"Unsupported request_method for {url}")

            if request_payload is not None:
                request_payload = interpolate_string(request_payload, username)

            if url_probe is None:
                # Probe URL is normal one seen by people out on the web.
                url_probe = url
            else:
                # There is a special URL for probing existence separate
                # from where the user profile normally can be found.
                url_probe = interpolate_string(url_probe, username)

            if request is None:
                if net_info["errorType"] == "status_code":
                    # In most cases when we are detecting by status code,
                    # it is not necessary to get the entire body: we can
                    # detect fine with just the HEAD response.
                    request = session.head
                else:
                    # Either this detect method needs the content associated
                    # with the GET response, or this specific website will
                    # not respond properly unless we request the whole page.
                    request = session.get

            if net_info["errorType"] == "response_url":
                # Site forwards request to a different URL if username not
                # found. Disallow the redirect so we can capture the
                # http status from the original URL request.
                allow_redirects = False
            else:
                # Allow whatever redirect that the site wants to do.
                # The final result of the request will be what is available.
                allow_redirects = True

            # This future starts running the request in a new thread, doesn't block the main thread
            if proxy is not None:
                proxies = {"http": proxy, "https": proxy}
                future = request(
                    url=url_probe,
                    headers=headers,
                    proxies=proxies,
                    allow_redirects=allow_redirects,
                    timeout=timeout,
                    json=request_payload,
                )
            else:
                future = request(
                    url=url_probe,
                    headers=headers,
                    allow_redirects=allow_redirects,
                    timeout=timeout,
                    json=request_payload,
                )

            # Store future in data for access later
            net_info["request_future"] = future

            # Reset identify for tor (if needed)
            if unique_tor:
                underlying_request.reset_identity()

        # Add this site's results into final dictionary with all the other results.
        results_total[social_network] = results_site

    # Open the file containing account links
    # Core logic: If tor requests, make them here. If multi-threaded requests, wait for responses
    for social_network, net_info in site_data.items():
        # Retrieve results again
        results_site = results_total.get(social_network)

        # Retrieve other site information again
        url = results_site.get("url_user")
        status = results_site.get("status")
        if status is not None:
            # We have already determined the user doesn't exist here
            continue

        # Get the expected error type
        error_type = net_info["errorType"]
        error_code = net_info.get("errorCode")

        # Retrieve future and ensure it has finished
        future = net_info["request_future"]
        r, error_text, exception_text = get_response(
            request_future=future, error_type=error_type, social_network=social_network
        )

        # Get response time for response of our request.
        try:
            response_time = r.elapsed
        except AttributeError:
            response_time = None

        # Attempt to get request information
        try:
            http_status = r.status_code
        except Exception:
            http_status = "?"
        try:
            response_text = r.text.encode(r.encoding or "UTF-8")
        except Exception:
            response_text = ""

        query_status = QueryStatus.UNKNOWN
        error_context = None

        if error_text is not None:
            error_context = error_text

        elif error_type == "message":
            # error_flag True denotes no error found in the HTML
            # error_flag False denotes error found in the HTML
            error_flag = True
            errors = net_info.get("errorMsg")
            # errors will hold the error message
            # it can be string or list
            # by isinstance method we can detect that
            # and handle the case for strings as normal procedure
            # and if its list we can iterate the errors
            if isinstance(errors, str):
                # Checks if the error message is in the HTML
                # if error is present we will set flag to False
                if errors in r.text:
                    error_flag = False
            else:
                # If it's list, it will iterate all the error message
                for error in errors:
                    if error in r.text:
                        error_flag = False
                        break
            if error_flag:
                query_status = QueryStatus.CLAIMED
            else:
                query_status = QueryStatus.AVAILABLE
        elif error_type == "status_code":
            # Checks if the Status Code is equal to the optional "errorCode" given in 'data.json'
            if error_code == r.status_code:
                query_status = QueryStatus.AVAILABLE
            # Checks if the status code of the response is 2XX
            elif not r.status_code >= 300 or r.status_code < 200:
                query_status = QueryStatus.CLAIMED
            else:
                query_status = QueryStatus.AVAILABLE
        elif error_type == "response_url":
            # For this detection method, we have turned off the redirect.
            # So, there is no need to check the response URL: it will always
            # match the request. Instead, we will ensure that the response
            # code indicates that the request was successful (i.e. no 404, or
            # forward to some odd redirect).
            if 200 <= r.status_code < 300:
                query_status = QueryStatus.CLAIMED
            else:
                query_status = QueryStatus.AVAILABLE
        else:
            # It should be impossible to ever get here...
            raise ValueError(
                f"Unknown Error Type '{error_type}' for " f"site '{social_network}'"
            )

        # Notify caller about results of query.
        result = QueryResult(
            username=username,
            site_name=social_network,
            site_url_user=url,
            status=query_status,
            query_time=response_time,
            context=error_context,
        )
        query_notify.update(result)

        # Save status of request
        results_site["status"] = result

        # Save results from request
        results_site["http_status"] = http_status
        results_site["response_text"] = response_text

        # Add this site's results into final dictionary with all of the other results.
        results_total[social_network] = results_site

    return results_total


def timeout_check(value):
    """Check Timeout Argument.

    Checks timeout for validity.

    Keyword Arguments:
    value                  -- Time in seconds to wait before timing out request.

    Return Value:
    Floating point number representing the time (in seconds) that should be
    used for the timeout.

    NOTE:  Will raise an exception if the timeout in invalid.
    """

    float_value = float(value)

    if float_value <= 0:
        raise ArgumentTypeError(
            f"Invalid timeout value: {value}. Timeout must be a positive number."
        )

    return float_value


def handler(signal_received, frame):
    """Exit gracefully without throwing errors

    Source: https://www.devdungeon.com/content/python-catch-sigint-ctrl-c
    """
    sys.exit(0)


def main():
    version_string = (
        f"%(prog)s {__version__}\n"
        + f"{requests.__description__}: {requests.__version__}\n"
        + f"Python: {platform.python_version()}"
    )

    parser = ArgumentParser(
        formatter_class=RawDescriptionHelpFormatter,
        description=f"{module_name} (Version {__version__})",
    )
    parser.add_argument(
        "--version",
        action="version",
        version=version_string,
        help="Display version information and dependencies.",
    )
    parser.add_argument(
        "--verbose",
        "-v",
        "-d",
        "--debug",
        action="store_true",
        dest="verbose",
        default=False,
        help="Display extra debugging information and metrics.",
    )
    parser.add_argument(
        "--folderoutput",
        "-fo",
        dest="folderoutput",
        help="If using multiple usernames, the output of the results will be saved to this folder.",
    )
    parser.add_argument(
        "--output",
        "-o",
        dest="output",
        help="If using single username, the output of the result will be saved to this file.",
    )
    parser.add_argument(
        "--tor",
        "-t",
        action="store_true",
        dest="tor",
        default=False,
        help="Make requests over Tor; increases runtime; requires Tor to be installed and in system path.",
    )
    parser.add_argument(
        "--unique-tor",
        "-u",
        action="store_true",
        dest="unique_tor",
        default=False,
        help="Make requests over Tor with new Tor circuit after each request; increases runtime; requires Tor to be installed and in system path.",
    )
    parser.add_argument(
        "--csv",
        action="store_true",
        dest="csv",
        default=False,
        help="Create Comma-Separated Values (CSV) File.",
    )
    parser.add_argument(
        "--xlsx",
        action="store_true",
        dest="xlsx",
        default=False,
        help="Create the standard file for the modern Microsoft Excel spreadsheet (xslx).",
    )
    parser.add_argument(
        "--site",
        action="append",
        metavar="SITE_NAME",
        dest="site_list",
        default=None,
        help="Limit analysis to just the listed sites. Add multiple options to specify more than one site.",
    )
    parser.add_argument(
        "--proxy",
        "-p",
        metavar="PROXY_URL",
        action="store",
        dest="proxy",
        default=None,
        help="Make requests over a proxy. e.g. socks5://127.0.0.1:1080",
    )
    parser.add_argument(
        "--json",
        "-j",
        metavar="JSON_FILE",
        dest="json_file",
        default=None,
        help="Load data from a JSON file or an online, valid, JSON file.",
    )
    parser.add_argument(
        "--timeout",
        action="store",
        metavar="TIMEOUT",
        dest="timeout",
        type=timeout_check,
        default=60,
        help="Time (in seconds) to wait for response to requests (Default: 60)",
    )
    parser.add_argument(
        "--print-all",
        action="store_true",
        dest="print_all",
        default=False,
        help="Output sites where the username was not found.",
    )
    parser.add_argument(
        "--print-found",
        action="store_true",
        dest="print_found",
        default=True,
        help="Output sites where the username was found (also if exported as file).",
    )
    parser.add_argument(
        "--no-color",
        action="store_true",
        dest="no_color",
        default=False,
        help="Don't color terminal output",
    )
    parser.add_argument(
        "username",
        nargs="+",
        metavar="USERNAMES",
        action="store",
        help="One or more usernames to check with social networks. Check similar usernames using {?} (replace to '_', '-', '.').",
    )
    parser.add_argument(
        "--browse",
        "-b",
        action="store_true",
        dest="browse",
        default=False,
        help="Browse to all results on default browser.",
    )
    parser.add_argument(
        "--local",
        "-l",
        action="store_true",
        default=False,
        help="Force the use of the local data.json file.",
    )
    parser.add_argument(
        "--nsfw",
        action="store_true",
        default=False,
        help="Include checking of NSFW sites from default list.",
    )

    args = parser.parse_args()

    # If the user presses CTRL-C, exit gracefully without throwing errors
    signal.signal(signal.SIGINT, handler)

    # Check for newer version of Sherlock. If it exists, let the user know about it
    try:
        r = requests.get(
            "https://raw.githubusercontent.com/sherlock-project/sherlock/master/sherlock/sherlock.py"
        )

        remote_version = str(re.findall('__version__ = "(.*)"', r.text)[0])
        local_version = __version__

        if remote_version != local_version:
            print(
                "Update Available!\n"
                + f"You are running version {local_version}. Version {remote_version} is available at https://github.com/sherlock-project/sherlock"
            )

    except Exception as error:
        print(f"A problem occurred while checking for an update: {error}")

    # Argument check
    # TODO regex check on args.proxy
    if args.tor and (args.proxy is not None):
        raise Exception("Tor and Proxy cannot be set at the same time.")

    # Make prompts
    if args.proxy is not None:
        print("Using the proxy: " + args.proxy)

    if args.tor or args.unique_tor:
        print("Using Tor to make requests")

        print(
            "Warning: some websites might refuse connecting over Tor, so note that using this option might increase connection errors."
        )

    if args.no_color:
        # Disable color output.
        init(strip=True, convert=False)
    else:
        # Enable color output.
        init(autoreset=True)

    # Check if both output methods are entered as input.
    if args.output is not None and args.folderoutput is not None:
        print("You can only use one of the output methods.")
        sys.exit(1)

    # Check validity for single username output.
    if args.output is not None and len(args.username) != 1:
        print("You can only use --output with a single username")
        sys.exit(1)

    # Create object with all information about sites we are aware of.
    try:
        if args.local:
            sites = SitesInformation(
                os.path.join(os.path.dirname(__file__), "resources/data.json")
            )
        else:
            sites = SitesInformation(args.json_file)
    except Exception as error:
        print(f"ERROR:  {error}")
        sys.exit(1)

    if not args.nsfw:
        sites.remove_nsfw_sites()

    # Create original dictionary from SitesInformation() object.
    # Eventually, the rest of the code will be updated to use the new object
    # directly, but this will glue the two pieces together.
    site_data_all = {site.name: site.information for site in sites}
    if args.site_list is None:
        # Not desired to look at a sub-set of sites
        site_data = site_data_all
    else:
        # User desires to selectively run queries on a sub-set of the site list.
        # Make sure that the sites are supported & build up pruned site database.
        site_data = {}
        site_missing = []
        for site in args.site_list:
            counter = 0
            for existing_site in site_data_all:
                if site.lower() == existing_site.lower():
                    site_data[existing_site] = site_data_all[existing_site]
                    counter += 1
            if counter == 0:
                # Build up list of sites not supported for future error message.
                site_missing.append(f"'{site}'")

        if site_missing:
            print(f"Error: Desired sites not found: {', '.join(site_missing)}.")

        if not site_data:
            sys.exit(1)

    # Create notify object for query results.
    query_notify = QueryNotifyPrint(
        result=None, verbose=args.verbose, print_all=args.print_all, browse=args.browse
    )

    # Run report on all specified users.
    all_usernames = []
    for username in args.username:
        if check_for_parameter(username):
            for name in multiple_usernames(username):
                all_usernames.append(name)
        else:
            all_usernames.append(username)
    for username in all_usernames:
        results = sherlock(
            username,
            site_data,
            query_notify,
            tor=args.tor,
            unique_tor=args.unique_tor,
            proxy=args.proxy,
            timeout=args.timeout,
        )

        if args.output:
            result_file = args.output
        elif args.folderoutput:
            # The usernames results should be stored in a targeted folder.
            # If the folder doesn't exist, create it first
            os.makedirs(args.folderoutput, exist_ok=True)
            result_file = os.path.join(args.folderoutput, f"{username}.txt")
        else:
            result_file = f"{username}.txt"

        with open(result_file, "w", encoding="utf-8") as file:
            exists_counter = 0
            for website_name in results:
                dictionary = results[website_name]
                if dictionary.get("status").status == QueryStatus.CLAIMED:
                    exists_counter += 1
                    file.write(dictionary["url_user"] + "\n")
            file.write(f"Total Websites Username Detected On : {exists_counter}\n")

        if args.csv:
            result_file = f"{username}.csv"
            if args.folderoutput:
                # The usernames results should be stored in a targeted folder.
                # If the folder doesn't exist, create it first
                os.makedirs(args.folderoutput, exist_ok=True)
                result_file = os.path.join(args.folderoutput, result_file)

            with open(result_file, "w", newline="", encoding="utf-8") as csv_report:
                writer = csv.writer(csv_report)
                writer.writerow(
                    [
                        "username",
                        "name",
                        "url_main",
                        "url_user",
                        "exists",
                        "http_status",
                        "response_time_s",
                    ]
                )
                for site in results:
                    if (
                        args.print_found
                        and not args.print_all
                        and results[site]["status"].status != QueryStatus.CLAIMED
                    ):
                        continue

                    response_time_s = results[site]["status"].query_time
                    if response_time_s is None:
                        response_time_s = ""
                    writer.writerow(
                        [
                            username,
                            site,
                            results[site]["url_main"],
                            results[site]["url_user"],
                            str(results[site]["status"].status),
                            results[site]["http_status"],
                            response_time_s,
                        ]
                    )
        if args.xlsx:
            usernames = []
            names = []
            url_main = []
            url_user = []
            exists = []
            http_status = []
            response_time_s = []

            for site in results:
                if (
                    args.print_found
                    and not args.print_all
                    and results[site]["status"].status != QueryStatus.CLAIMED
                ):
                    continue

                if response_time_s is None:
                    response_time_s.append("")
                else:
                    response_time_s.append(results[site]["status"].query_time)
                usernames.append(username)
                names.append(site)
                url_main.append(results[site]["url_main"])
                url_user.append(results[site]["url_user"])
                exists.append(str(results[site]["status"].status))
                http_status.append(results[site]["http_status"])

            DataFrame = pd.DataFrame(
                {
                    "username": usernames,
                    "name": names,
                    "url_main": url_main,
                    "url_user": url_user,
                    "exists": exists,
                    "http_status": http_status,
                    "response_time_s": response_time_s,
                }
            )
            DataFrame.to_excel(f"{username}.xlsx", sheet_name="sheet1", index=False)

        print()
    query_notify.finish()


if __name__ == "__main__":
    main()

Main flow:

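- Program start
- Create the argument parser, add the arguments, and produce the parsed argument object
- Signal handling: exit the program when CTRL+C is pressed
- Fetch the current program version from GitHub and compare it with the local version; if they differ, print an update notice
- Process according to the arguments
  - args.no_color: initialize color output
  - args.local: load the site data and wrap it as a collection of site objects
- Create the query notification object
- Iterate over the input usernames
  - Get the response results
    - Query notification start
    - Obtain the request session and request object
    - Set the maximum number of workers
    - Iterate over the sites
      - Resolve the request URL, parameters, request method, request headers, and so on
      - Send the request and obtain the response (asynchronously)
      - Parse the response result and return it
  - Determine the output location
  - Determine the output file format
- Notification finish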
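The "send everything first, collect afterwards" part of this flow can be sketched without any network access. Sherlock itself uses `FuturesSession` from requests-futures; the sketch below swaps in the standard library's `ThreadPoolExecutor` and a stub `fake_fetch` function, and the two-site `site_data` table is invented, so it illustrates the pattern only and is not the project's implementation.

```python
from concurrent.futures import ThreadPoolExecutor


def fake_fetch(url):
    """Stand-in for an HTTP request: pretends profiles ending in 'user123' exist."""
    return 200 if url.endswith("user123") else 404


# Hypothetical site table; Sherlock loads the real one from data.json.
site_data = {
    "SiteA": {"url": "https://a.example/{}"},
    "SiteB": {"url": "https://b.example/u/{}"},
}


def check(username):
    # Cap the worker count at 20, as the main program does.
    max_workers = min(20, len(site_data))
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Phase 1: submit every request without waiting for any of them.
        futures = {
            name: pool.submit(fake_fetch, info["url"].replace("{}", username))
            for name, info in site_data.items()
        }
        # Phase 2: wait for each response and turn it into a result.
        for name, future in futures.items():
            results[name] = "claimed" if future.result() == 200 else "available"
    return results


print(check("user123"))
print(check("nobody"))
```

Splitting the work into two loops means the slowest site no longer delays the others: all requests are already in flight before the first `result()` call blocks.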

Note:

- The notification mechanism provides start, update, and finish methods; it writes to standard output and colors the text.
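As a rough illustration of that start/update/finish life cycle, here is a minimal notifier. The class name `PrintNotify` and its method signatures are hypothetical and simpler than the `QueryNotifyPrint` class in notify.py; it also uses raw ANSI escape codes for color, whereas the project relies on colorama.

```python
class PrintNotify:
    """Hypothetical notifier with the start/update/finish life cycle."""

    GREEN = "\033[32m"
    RESET = "\033[0m"

    def __init__(self):
        self.found = 0

    def start(self, username):
        # Called once before any site is queried.
        print(f"[*] Checking username {username} on:")

    def update(self, site_name, url, claimed):
        # Called once per site; only claimed accounts are printed, in green.
        if claimed:
            self.found += 1
            print(f"{self.GREEN}[+] {site_name}: {url}{self.RESET}")

    def finish(self):
        # Called once after all usernames have been processed.
        print(f"[*] Search completed with {self.found} results")


notify = PrintNotify()
notify.start("user123")
notify.update("SiteA", "https://a.example/user123", True)
notify.update("SiteB", "https://b.example/user123", False)
notify.finish()
```

Keeping the printing behind a small object like this lets the search logic stay free of output concerns; a different notifier could write to a file or a GUI instead.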

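IV Adding a Custom Site

Most of the sites currently checked are hosted outside mainland China, which is inconvenient for us, so let's add one of our own and test it. Taking the CSDN blog as an example, the JSON data is as follows: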

{
  "csdn": {
    "errorMsg": "Oops! That page doesn\u2019t exist or is private",
    "errorType": "message",
    "url": "https://blog.csdn.net/{}",
    "urlMain": "https://blog.csdn.net/",
    "username_claimed": "blue"
  }
}

Test result:

[*] Checking username gaogzhen on:

[+] [4430ms] csdn: https://blog.csdn.net/gaogzhen

[*] Search completed with 1 results

Process finished with exit code 0
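The custom entry uses errorType "message", so it is worth seeing how that rule decides the outcome. The sketch below condenses the "message" branch of sherlock.py into one function; the function name `classify_by_message` and the two response bodies are invented for illustration.

```python
def classify_by_message(error_msg, response_text):
    """Return "claimed" unless one of the error messages appears in the page."""
    # errorMsg may be a single string or a list of strings.
    messages = [error_msg] if isinstance(error_msg, str) else error_msg
    for message in messages:
        if message in response_text:
            return "available"
    return "claimed"


error_msg = "Oops! That page doesn\u2019t exist or is private"

# Invented response bodies, for illustration only.
missing_page = "<h1>Oops! That page doesn\u2019t exist or is private</h1>"
profile_page = "<h1>gaogzhen's blog</h1>"

print(classify_by_message(error_msg, missing_page))
print(classify_by_message(error_msg, profile_page))
```

One consequence of this rule: if the site never returns the configured errorMsg text, every username is reported as claimed. The errorMsg should therefore be copied from what the site really shows for a user that does not exist, and it is worth testing the entry with a username that is known to be unregistered.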

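Conclusion

Everyone is welcome to learn and exchange ideas together. If you need a tool or have any questions, feel free to contact me.

❓QQ: 806797785

⭐️Source code: https://gitee.com/gaogzhen/smart-utilities.git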
