Integrating the Open vSwitch Virtual Switch with KVM

KVM (Kernel-based Virtual Machine) is a virtualization technology built into the Linux kernel. It lets a single host run multiple virtual machines.

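By default, KVM virtual machines managed through libvirt are networked with Linux bridge. Linux bridge is a kernel module that bridges several network interfaces together, which lets virtual machines communicate with the physical network. However, its feature set is relatively limited, and it struggles to meet the needs of complex network virtualization scenarios.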

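Open vSwitch, by contrast, is a production-grade software switch that is widely used in cloud computing and software-defined networking. It offers richer and more flexible network virtualization features, such as VLAN switching, QoS and port mirroring. These features make advanced setups such as network isolation and load balancing straightforward to build.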

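Switching KVM from the default Linux bridge to Open vSwitch can therefore greatly extend what your virtual network can do. In the rest of this post I walk through the switch in detail: installing Open vSwitch, configuring a virtual switch and integrating it with KVM.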

Operating system: Ubuntu 22.04 LTS

Install KVM

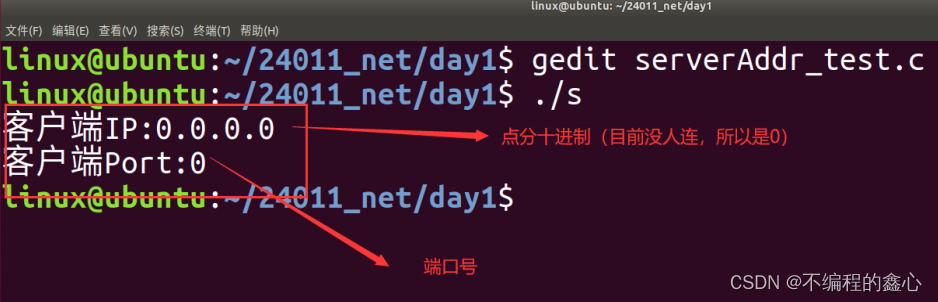

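First check whether your CPU supports hardware virtualization. Run the following command. If the output is greater than 0, the CPU advertises Intel VT-x (vmx) or AMD-V (svm), and you can install KVM.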

egrep -c 'vmx|svm' /proc/cpuinfo
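If you want to run the same check from a script, the sketch below wraps it in two hypothetical helper functions, count_virt_flags and check_kvm_support. These names are illustrative only and do not come from any package. The sketch assumes GNU grep. It counts only the per-CPU "flags" lines, so the result is roughly the number of logical CPUs that advertise vmx or svm.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, not part of the original post.

# count_virt_flags [FILE]: count the "flags" lines (one per logical CPU) that
# contain vmx (Intel VT-x) or svm (AMD-V). FILE defaults to /proc/cpuinfo.
# Matching only lines that start with "flags" avoids also counting the separate
# "vmx flags" line that some newer kernels print on Intel CPUs.
count_virt_flags() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    # grep -c prints 0 when nothing matches but exits 1; "|| true" keeps that 0.
    grep -Ec '^flags[[:space:]]*:.*\b(vmx|svm)\b' "$cpuinfo" || true
}

# check_kvm_support [FILE]: print a verdict; return non-zero if no CPU
# advertises hardware virtualization.
check_kvm_support() {
    local n
    n=$(count_virt_flags "${1:-/proc/cpuinfo}")
    if [ "${n:-0}" -gt 0 ]; then
        echo "OK: ${n} logical CPU(s) advertise vmx/svm"
    else
        echo "NO: vmx/svm not found; enable VT-x/AMD-V in firmware (or nested virtualization in the outer hypervisor)" >&2
        return 1
    fi
}
```

Usage: check_kvm_support && echo "safe to install KVM". On Ubuntu, the kvm-ok command from the cpu-checker package performs a similar check.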

Install qemu-kvm and the related tools with apt:

apt update -y

apt install -y qemu-kvm libvirt-daemon-system virtinst libvirt-clients bridge-utils

Install Open vSwitch

apt update -y

apt install -y openvswitch-switch

Create the KVM network

Out of the box, libvirt has already created a NAT network named default for KVM. This network is backed by the Linux bridge virbr0.

root@node1:~# brctl show
bridge name     bridge id               STP enabled     interfaces
virbr0          8000.525400f7e041       yes

root@node1:~# virsh net-list
 Name      State    Autostart   Persistent
--------------------------------------------
 default   active   yes         yes

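You choose which network a VM uses when you create it, so we only need to create a new network for KVM here. You can leave the existing default network unchanged, or delete it once the new network is configured.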

Create the OVS bridge

Before you define an OVS-based libvirt network, you need to create the virtual switch (bridge) in OVS:

root@node1:~# ovs-vsctl add-br ovsbr0

Then verify that the bridge was created correctly:

root@node1:~# ovs-vsctl show

654f0d05-ff5e-4979-a3ac-5b82b5f237a2
    Bridge ovsbr0
        Port ovsbr0
            Interface ovsbr0
                type: internal
    ovs_version: "2.17.0"
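Running add-br a second time fails because the bridge already exists. The following is a minimal idempotent sketch. It relies on ovs-vsctl's --may-exist option and its br-exists command, both of which are documented in the ovs-vsctl manual. The function ensure_ovs_bridge, the run wrapper and the DRY_RUN switch are hypothetical names used only here.

```shell
#!/usr/bin/env bash
# run CMD...: execute CMD, or just print it when DRY_RUN=1 (hypothetical helper).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# ensure_ovs_bridge [BRIDGE]: create BRIDGE (default ovsbr0) unless it already exists.
ensure_ovs_bridge() {
    local br="${1:-ovsbr0}"
    # --may-exist turns "bridge already exists" into a no-op instead of an error.
    run ovs-vsctl --may-exist add-br "$br" || return
    # br-exists exits 0 if the bridge exists and 2 if not; skipped in dry-run mode.
    if [ "${DRY_RUN:-0}" != 1 ]; then
        ovs-vsctl br-exists "$br" || { echo "bridge ${br} missing" >&2; return 1; }
    fi
}
```

Usage: run ensure_ovs_bridge ovsbr0 as root, or run DRY_RUN=1 ensure_ovs_bridge ovsbr0 to preview the command it would execute.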

You can now move on to libvirt.

Define the libvirt network

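libvirt uses Linux bridge by default, so any virsh subcommand that creates a new network builds that network on a Linux bridge. You therefore need to write an XML file that contains an OVS-based network definition and then import it into libvirt.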

Generate a random UUID:

root@node1:~# uuidgen

c654bba4-224b-46a6-b9fb-99c71087cd05

Create the OVS network definition file:

cat > ovs-network.xml <<EOF
<network>
  <name>ovs</name>
  <uuid>c654bba4-224b-46a6-b9fb-99c71087cd05</uuid>
  <forward mode='bridge'/>
  <bridge name='ovsbr0'/>
  <virtualport type='openvswitch'/>
</network>
EOF

Note: uuidgen generates a new universally unique identifier (UUID) for the network. Replace the UUID above with the one you generated.
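If you need more than one such network, you can generate the XML above from parameters. The sketch below uses a hypothetical function, make_ovs_network_xml, that emits the same elements as the hand-written file. If you do not pass a UUID, it falls back to uuidgen and then to the kernel's /proc/sys/kernel/random/uuid. That fallback assumes a Linux host.

```shell
#!/usr/bin/env bash
# make_ovs_network_xml NAME BRIDGE [UUID]: print a libvirt network definition
# that attaches guests to an existing OVS bridge (hypothetical helper).
make_ovs_network_xml() {
    local name="${1:?network name required}"
    local bridge="${2:?bridge name required}"
    # The default is only evaluated when no UUID argument is given.
    local uuid="${3:-$(uuidgen 2>/dev/null || cat /proc/sys/kernel/random/uuid)}"
    cat <<EOF
<network>
  <name>${name}</name>
  <uuid>${uuid}</uuid>
  <forward mode='bridge'/>
  <bridge name='${bridge}'/>
  <virtualport type='openvswitch'/>
</network>
EOF
}
```

Usage: make_ovs_network_xml ovs ovsbr0 > ovs-network.xml produces a file that is equivalent to the one above, apart from the UUID.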

Then import the network into libvirt:

virsh net-define ovs-network.xml

Defining the network in libvirt is not enough to make it usable. You must also start the network and enable autostart at boot:

virsh net-start ovs

virsh net-autostart ovs
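The define, start and autostart steps always come as a group, so they can be wrapped in one function. The sketch below uses the hypothetical name setup_libvirt_network and calls only the virsh subcommands shown above. virsh net-start fails if the network is already active, so the function stops at the first failing step rather than continuing. Set DRY_RUN=1 to print the commands without running them.

```shell
#!/usr/bin/env bash
# run CMD...: execute CMD, or just print it when DRY_RUN=1 (hypothetical helper).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# setup_libvirt_network XML_FILE NAME: define, start and autostart a libvirt network.
setup_libvirt_network() {
    local xml="${1:?xml file required}"
    local name="${2:?network name required}"
    run virsh net-define "$xml" || return    # register the persistent definition
    run virsh net-start "$name" || return    # bring the network up now
    run virsh net-autostart "$name"          # start it with libvirtd at boot
}
```

Usage: setup_libvirt_network ovs-network.xml ovs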

Check the newly created network:

# virsh net-list
 Name      State    Autostart   Persistent
--------------------------------------------
 default   active   yes         yes
 ovs       active   yes         yes

Create the virtual machines

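The ovs network is now ready. We use the minimal CirrOS image to create two VMs attached to the OVS bridge. The image is only about 20 MB, which makes it ideal for testing.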

Download the image:

wget https://download.cirros-cloud.net/0.6.2/cirros-0.6.2-x86_64-disk.img

Copy the image into a template directory:

mkdir -p /var/lib/libvirt/images/templates/

cp cirros-0.6.2-x86_64-disk.img /var/lib/libvirt/images/templates/

Create a disk directory for each VM and copy the image into it:

mkdir -p /var/lib/libvirt/images/{cirros01,cirros02}

cp /var/lib/libvirt/images/templates/cirros-0.6.2-x86_64-disk.img /var/lib/libvirt/images/cirros01/

cp /var/lib/libvirt/images/templates/cirros-0.6.2-x86_64-disk.img /var/lib/libvirt/images/cirros02/
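Copying the full image for every VM works. An alternative, which is not what this post does, is to give each VM a thin qcow2 overlay backed by the template. The sketch below assumes that the CirrOS image is in qcow2 format (you can verify this with qemu-img info) and that the template file is never modified while overlays depend on it. make_overlay is a hypothetical name. If you adopt this approach, point virt-install's --disk path= at the overlay file instead of the copied image.

```shell
#!/usr/bin/env bash
# run CMD...: execute CMD, or just print it when DRY_RUN=1 (hypothetical helper).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# make_overlay TEMPLATE VM: create IMAGES_DIR/VM/VM.qcow2 backed by TEMPLATE.
# IMAGES_DIR defaults to /var/lib/libvirt/images; TEMPLATE should be an absolute path.
make_overlay() {
    local template="${1:?template image required}"
    local vm="${2:?vm name required}"
    local dir="${IMAGES_DIR:-/var/lib/libvirt/images}/${vm}"
    run mkdir -p "$dir" || return
    # -f: format of the new overlay; -b/-F: backing file and its format.
    # Reads fall through to the template; the VM writes only to its overlay.
    run qemu-img create -f qcow2 -F qcow2 -b "$template" "${dir}/${vm}.qcow2"
}
```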

Create the cloud-init metadata and user-data files:

cat > cirros.meta <<EOF
{
  "instance-id": "10",
  "local-hostname": "cirros"
}
EOF

cat > cirros.user <<EOF
#!/bin/sh
echo DATASOURCE_LIST="nocloud" > /etc/cirros-init/config
EOF

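Note: DATASOURCE_LIST="nocloud" tells the CirrOS image that it is not running in a cloud environment. This prevents the slow boot caused by repeated attempts to reach a metadata server that does not exist: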

checking http://169.254.169.254/2009-04-04/instance-id

failed 1/20: up 5.14. request failed

failed 2/20: up 7.18. request failed

failed 3/20: up 9.19. request failed
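The meta-data file above gives both VMs the same instance-id and hostname. If you want them to differ, the sketch below writes a separate pair of files for each VM. write_cloud_init is a hypothetical helper. The instance IDs you pass in are your own choice, and the user-data script is unchanged from the one above.

```shell
#!/usr/bin/env bash
# write_cloud_init VM INSTANCE_ID [OUTDIR]: write OUTDIR/VM.meta and OUTDIR/VM.user
# (hypothetical helper; OUTDIR defaults to the current directory).
write_cloud_init() {
    local vm="${1:?vm name required}"
    local id="${2:?instance id required}"
    local outdir="${3:-.}"
    mkdir -p "$outdir" || return
    # meta-data: a per-VM instance-id, with the VM name as its hostname.
    cat > "${outdir}/${vm}.meta" <<EOF || return
{
  "instance-id": "${id}",
  "local-hostname": "${vm}"
}
EOF
    # user-data: same script as above; the quoted 'EOF' keeps the text literal.
    cat > "${outdir}/${vm}.user" <<'EOF'
#!/bin/sh
echo DATASOURCE_LIST="nocloud" > /etc/cirros-init/config
EOF
}
```

Usage: write_cloud_init cirros01 cirros01 /root, then pass --cloud-init user-data="/root/cirros01.user",meta-data="/root/cirros01.meta" to virt-install.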

Start the first VM:

virt-install \
  --name cirros01 \
  --vcpus 1 \
  --memory 256 \
  --disk path=/var/lib/libvirt/images/cirros01/cirros-0.6.2-x86_64-disk.img \
  --os-variant cirros0.5.2 \
  --import \
  --autostart \
  --noautoconsole \
  --network network:ovs \
  --cloud-init user-data="/root/cirros.user",meta-data="/root/cirros.meta"

Start the second VM:

virt-install \
  --name cirros02 \
  --vcpus 1 \
  --memory 256 \
  --disk path=/var/lib/libvirt/images/cirros02/cirros-0.6.2-x86_64-disk.img \
  --os-variant cirros0.5.2 \
  --import \
  --autostart \
  --noautoconsole \
  --network network:ovs \
  --cloud-init user-data="/root/cirros.user",meta-data="/root/cirros.meta"

Notes:

--network: required; attaches the new VM to the ovs network.

--cloud-init: optional; keeps the CirrOS VM from booting slowly.
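The two virt-install commands differ only in the VM name. The sketch below builds the same option list once and loops over the names. create_cirros_vm and create_cirros_vms are hypothetical names. The disk path assumes the directory layout created above.

```shell
#!/usr/bin/env bash
# run CMD...: execute CMD, or just print it when DRY_RUN=1 (hypothetical helper).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# create_cirros_vm VM: same options as the commands above, with VM substituted
# into --name and the disk path.
create_cirros_vm() {
    local vm="${1:?vm name required}"
    local images="${IMAGES_DIR:-/var/lib/libvirt/images}"
    local args=(
        --name "$vm"
        --vcpus 1
        --memory 256
        --disk "path=${images}/${vm}/cirros-0.6.2-x86_64-disk.img"
        --os-variant cirros0.5.2
        --import --autostart --noautoconsole
        --network network:ovs
        --cloud-init "user-data=/root/cirros.user,meta-data=/root/cirros.meta"
    )
    run virt-install "${args[@]}"
}

# create_cirros_vms VM...: create each VM in turn, stopping at the first failure.
create_cirros_vms() {
    local vm
    for vm in "$@"; do
        create_cirros_vm "$vm" || return
    done
}
```

Usage: create_cirros_vms cirros01 cirros02. Keeping the options in a bash array preserves each argument exactly, even if a path contains spaces.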

List the VMs you created:

root@node1:~# virsh list
 Id   Name       State
--------------------------
 5    cirros01   running
 6    cirros02   running

Configure an IP address on cirros01:

root@node1:~# virsh console cirros01

Connected to domain 'cirros01'

Escape character is ^] (Ctrl + ])

login as 'cirros' user. default password: 'gocubsgo'. use 'sudo' for root.

cirros login: cirros

Password:

$

$ sudo ip a add 10.0.0.10/24 dev eth0

Configure an IP address on cirros02:

root@node1:~# virsh console cirros02

Connected to domain 'cirros02'

Escape character is ^] (Ctrl + ])

login as 'cirros' user. default password: 'gocubsgo'. use 'sudo' for root.

cirros login: cirros

Password:

$

$ sudo ip a add 10.0.0.11/24 dev eth0
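The two guests can talk to each other directly at layer 2 only if each one considers the other's address on-link. ip addr add treats an address given without a prefix length as /32, which leaves no connected route to the peer. The sketch below makes that arithmetic concrete. ip_to_int and on_link are hypothetical helpers written in plain bash arithmetic.

```shell
#!/usr/bin/env bash
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
    local IFS=.
    local -a o
    read -r -a o <<< "$1"
    echo "$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))"
}

# on_link ADDR[/PREFIX] PEER: succeed if PEER lies inside ADDR's prefix, i.e. the
# host would have a connected route to it. A missing prefix is treated as /32.
on_link() {
    local cidr="$1" peer="$2"
    local addr="${cidr%/*}" len=32
    if [[ "$cidr" == */* ]]; then
        len="${cidr#*/}"
    fi
    # Netmask with the top LEN bits set, kept within 32 bits.
    local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    (( ( $(ip_to_int "$addr") & mask ) == ( $(ip_to_int "$peer") & mask ) ))
}
```

For example, on_link 10.0.0.10/24 10.0.0.11 succeeds, but on_link 10.0.0.10 10.0.0.11 fails, because the implicit /32 mask covers only 10.0.0.10 itself.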

From cirros01, test connectivity to cirros02:

root@node1:~# virsh console cirros01

Connected to domain 'cirros01'

Escape character is ^] (Ctrl + ])

login as 'cirros' user. default password: 'gocubsgo'. use 'sudo' for root.

cirros login: cirros

Password:

$


$ ping 10.0.0.11 -c 4

PING 10.0.0.11 (10.0.0.11) 56(84) bytes of data.

64 bytes from 10.0.0.11: icmp_seq=1 ttl=64 time=0.904 ms

64 bytes from 10.0.0.11: icmp_seq=2 ttl=64 time=0.729 ms

64 bytes from 10.0.0.11: icmp_seq=3 ttl=64 time=1.12 ms

64 bytes from 10.0.0.11: icmp_seq=4 ttl=64 time=0.847 ms

--- 10.0.0.11 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3005ms

rtt min/avg/max/mdev = 0.729/0.900/1.122/0.142 ms

$

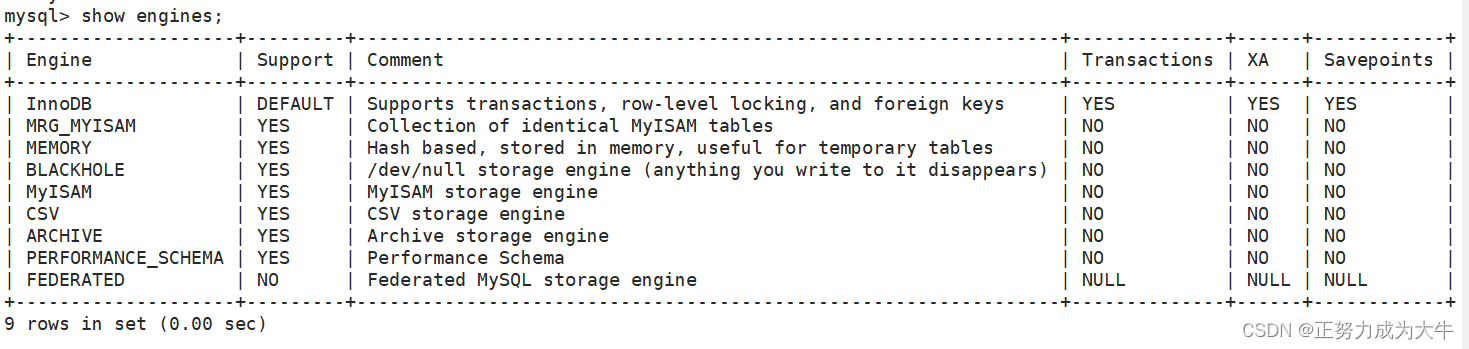

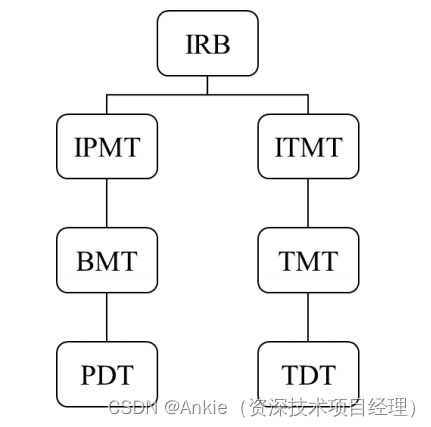

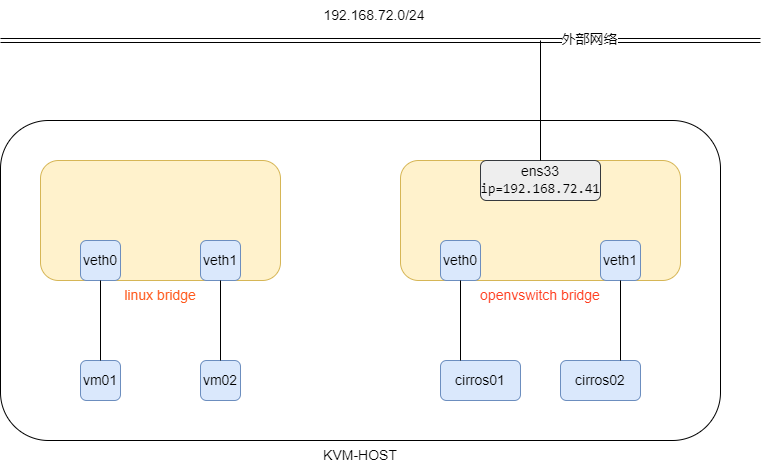

Connecting OVS to the external network

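Above, we attached two VMs to the same Open vSwitch switch and confirmed that they can communicate with each other directly at layer 2. Next, we add the host's physical NIC to the OVS bridge so that VMs on the bridge can reach the external network.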

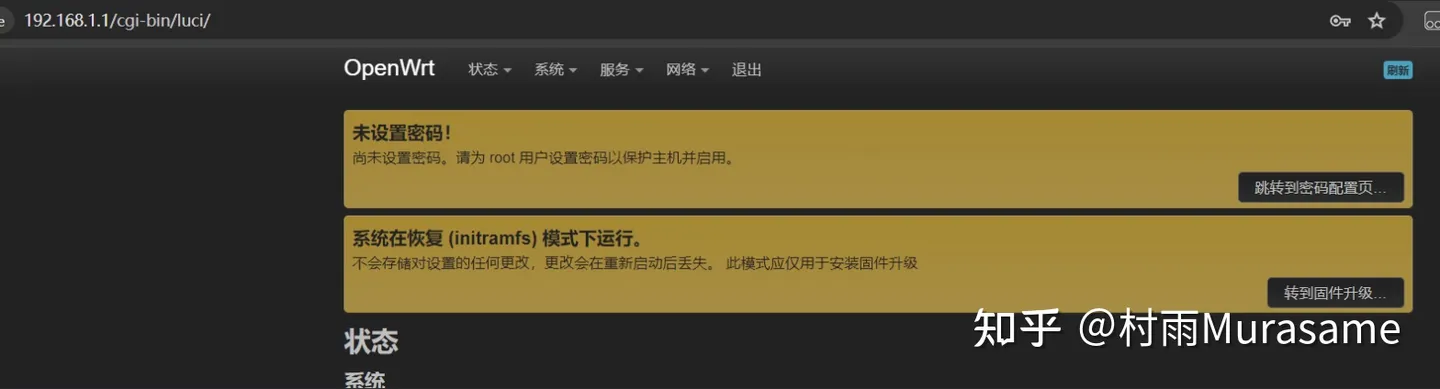

最终网络如下图所示:

Check the host's default NIC configuration:

root@node1:~# cat /etc/netplan/00-installer-config.yaml

# This is the network config written by 'subiquity'

network:
  version: 2
  ethernets:
    ens33:
      dhcp4: false
      addresses:
        - 192.168.72.41/24
      nameservers:
        addresses:
          - 223.5.5.5
          - 223.6.6.6
      routes:
        - to: default
          via: 192.168.72.8

root@node1:~#

Edit the host NIC configuration. Move the IP address of the primary NIC ens33 to the bridge interface, and add ens33 to the OVS bridge:

root@node1:~# cat /etc/netplan/00-installer-config.yaml

# This is the network config written by 'subiquity'

network:
  ethernets:
    ens33:
      dhcp4: false
      dhcp6: false
  bridges:
    ovsbr0:
      openvswitch: {}
      interfaces: [ ens33 ]
      dhcp4: false
      dhcp6: false
      addresses: [192.168.72.41/24]
      routes:
        - to: default
          via: 192.168.72.8
      nameservers:
        addresses: ["223.5.5.5","223.6.6.6"]
      parameters:
        stp: false
        forward-delay: 4
  version: 2

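Validate the configuration, then apply it. Proceed with care: this step can drop your SSH session. netplan try applies the new configuration and rolls it back automatically unless you confirm it within the timeout (120 seconds by default), so it is a safer first step than netplan apply.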

root@node1:~# netplan try

root@node1:~# netplan apply
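If you want to try the topology before you commit it to netplan, the same migration can be done at runtime with ovs-vsctl and ip. Unlike the netplan change, this is not persistent: it is lost on reboot, and it does not touch DNS settings. Connectivity drops between attaching the NIC and moving the address, so run it from a local console rather than over SSH. migrate_ip_to_ovs is a hypothetical function. The example arguments in the usage note are this host's values.

```shell
#!/usr/bin/env bash
# run CMD...: execute CMD, or just print it when DRY_RUN=1 (hypothetical helper).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# migrate_ip_to_ovs NIC BRIDGE ADDR/PREFIX GATEWAY: non-persistent equivalent of
# the netplan change above.
migrate_ip_to_ovs() {
    local nic="${1:?nic required}" br="${2:?bridge required}"
    local cidr="${3:?address/prefix required}" gw="${4:?gateway required}"
    run ovs-vsctl --may-exist add-port "$br" "$nic" || return  # uplink joins the bridge
    run ip addr flush dev "$nic"                   || return  # NIC no longer carries the IP
    run ip addr add "$cidr" dev "$br"              || return  # host IP moves to the bridge port
    run ip link set "$br" up                       || return
    run ip route replace default via "$gw" dev "$br"          # default route via the bridge
}
```

Usage: DRY_RUN=1 migrate_ip_to_ovs ens33 ovsbr0 192.168.72.41/24 192.168.72.8 prints the five commands without running them.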

Inspect the OVS bridge. ens33 has been added to it as a port:

root@node1:~# ovs-vsctl show

e2084c87-149e-428e-a7bd-89c644a0a9ce
    Bridge ovsbr0
        fail_mode: standalone
        Port ens33
            Interface ens33
        Port ovsbr0
            Interface ovsbr0
                type: internal
        Port vnet1
            Interface vnet1
        Port vnet0
            Interface vnet0
    ovs_version: "2.17.9"
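For a scripted check, the output of ovs-vsctl list-ports is easier to parse than the output of ovs-vsctl show. list-ports prints one port per line and omits the bridge's own internal port. The sketch below uses two hypothetical helpers: has_port, which reads that list on stdin and matches whole lines only (so ens330 does not count as ens33), and check_uplink, which wraps it.

```shell
#!/usr/bin/env bash
# has_port PORT: succeed if PORT appears as a whole line on stdin, where stdin
# is the output of "ovs-vsctl list-ports BRIDGE".
has_port() {
    grep -qxF -- "${1:?port name required}"
}

# check_uplink [BRIDGE] [NIC]: report whether NIC is attached to BRIDGE
# (defaults: ovsbr0 and ens33, as in this post).
check_uplink() {
    local br="${1:-ovsbr0}" nic="${2:-ens33}"
    if ovs-vsctl list-ports "$br" | has_port "$nic"; then
        echo "${nic} is attached to ${br}"
    else
        echo "${nic} is NOT attached to ${br}" >&2
        return 1
    fi
}
```

Usage: check_uplink ovsbr0 ens33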

Check the host's IP configuration. The address is now assigned to the OVS bridge interface ovsbr0:

root@node1:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000
    link/ether 00:50:56:aa:61:b5 brd ff:ff:ff:ff:ff:ff
    altname enp2s1
3: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether da:1e:a6:0a:ff:8e brd ff:ff:ff:ff:ff:ff
4: ovsbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
    link/ether 00:50:56:aa:61:b5 brd ff:ff:ff:ff:ff:ff
    inet 192.168.72.41/24 brd 192.168.72.255 scope global ovsbr0
       valid_lft forever preferred_lft forever
    inet6 fe80::250:56ff:feaa:61b5/64 scope link
       valid_lft forever preferred_lft forever
5: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether 52:54:00:f7:e0:41 brd ff:ff:ff:ff:ff:ff
    inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0
       valid_lft forever preferred_lft forever
6: vnet0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel master ovs-system state UNKNOWN group default qlen 1000
    link/ether fe:54:00:81:1d:ee brd ff:ff:ff:ff:ff:ff
    inet6 fe80::fc54:ff:fe81:1dee/64 scope link
       valid_lft forever preferred_lft forever
7: vnet1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel master ovs-system state UNKNOWN group default qlen 1000
    link/ether 0e:a2:61:58:2c:1a brd ff:ff:ff:ff:ff:ff
    inet6 fe80::ca2:61ff:fe58:2c1a/64 scope link
       valid_lft forever preferred_lft forever

root@node1:~#

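Connect to a VM. It has automatically obtained an address on the external network and can communicate with the outside world. This relies on a DHCP service on the physical network; otherwise, configure the IP address manually.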

root@node1:~# virsh console cirros01

Connected to domain 'cirros01'

Escape character is ^] (Ctrl + ])

$

$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000
    link/ether 52:54:00:c7:81:b6 brd ff:ff:ff:ff:ff:ff
    inet 192.168.72.149/24 brd 192.168.72.255 scope global dynamic noprefixroute eth0
       valid_lft 42878sec preferred_lft 37478sec
    inet6 fe80::5054:ff:fec7:81b6/64 scope link
       valid_lft forever preferred_lft forever

$

$ ping www.baidu.com -c 4

PING 183.2.172.185 (183.2.172.185) 56(84) bytes of data.

64 bytes from 183.2.172.185 (183.2.172.185): icmp_seq=1 ttl=53 time=7.58 ms

64 bytes from 183.2.172.185 (183.2.172.185): icmp_seq=2 ttl=53 time=8.47 ms

64 bytes from 183.2.172.185 (183.2.172.185): icmp_seq=3 ttl=53 time=8.24 ms

64 bytes from 183.2.172.185 (183.2.172.185): icmp_seq=4 ttl=53 time=8.13 ms

--- 183.2.172.185 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3005ms

rtt min/avg/max/mdev = 7.576/8.104/8.472/0.329 ms

$

Reference: https://www.redhat.com/sysadmin/libvirt-open-vswitch