DaemonSet Controller

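The DaemonSet controller is a Kubernetes controller that ensures every node in the cluster (or every node the DaemonSet selects) runs one copy of a Pod. It is typically used to deploy system-level services across the whole cluster, for example: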

- Run a storage daemon on every node, such as Gluster or Ceph.

- Run a log collection daemon on every node, such as fluentd or logstash.

- Run a monitoring daemon on every node, such as Prometheus Node Exporter or the Zabbix agent.

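The controller watches the nodes in the cluster and makes sure one instance of the Pod is running on each of them. When nodes are added, it creates Pods on them; when nodes are removed, their Pods are garbage-collected. Each node therefore keeps exactly one copy of the Pod running.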
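The reconciliation idea can be sketched in a few lines of Python. This is a simplified model, not the real controller: `reconcile` and `Plan` are hypothetical names, every listed node is assumed to be eligible, and the actual DaemonSet controller also handles node selectors, taints, and surplus Pods on a single node.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    create_on: frozenset[str]    # nodes that need a daemon Pod
    delete_from: frozenset[str]  # nodes whose daemon Pod must be removed


def reconcile(nodes: set[str], pods_by_node: dict[str, int]) -> Plan:
    """Compare the desired nodes with the nodes that already run a daemon Pod.

    nodes:        names of the nodes that should each run one copy.
    pods_by_node: node name -> number of daemon Pods currently on that node.
    """
    # A node without a Pod gets one created.
    create = {n for n in nodes if pods_by_node.get(n, 0) == 0}
    # A Pod on a node that left the cluster (or no longer qualifies) is deleted.
    delete = {n for n, count in pods_by_node.items() if count > 0 and n not in nodes}
    return Plan(frozenset(create), frozenset(delete))


if __name__ == "__main__":
    # node3 joined the cluster, old-node was removed.
    plan = reconcile({"node1", "node2", "node3"}, {"node1": 1, "node2": 1, "old-node": 1})
    print(sorted(plan.create_on))    # ['node3']
    print(sorted(plan.delete_from))  # ['old-node']
```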

Log collection with a DaemonSet: EFK (Elasticsearch, Fluentd, Kibana)

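Elasticsearch is deployed as a three-node cluster with a StatefulSet. A headless Service handles communication inside the cluster, and dynamically provisioned storage holds the Elasticsearch data.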

Deploy the StorageClass

See https://blog.csdn.net/qq42004/article/details/137113713?spm=1001.2014.3001.5502

Deploy Elasticsearch

Official documentation: https://www.elastic.co/guide/en/elasticsearch/reference/6.0/getting-started.html

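- Deploy the Services

The manifest below creates the namespace, the headless Service used for communication inside the cluster, and a NodePort Service so that cluster information can be queried from outside later.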

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: efk
  labels:
    app: efk
---
apiVersion: v1
kind: Service
metadata:
  name: efk
  labels:
    app: efk
  namespace: efk
spec:
  clusterIP: None          # headless: one DNS record per Elasticsearch Pod
  selector:
    app: efk
  ports:
  - name: efk-port-http
    port: 9200
  - name: efk-port-inside
    port: 9300
---
apiVersion: v1
kind: Service
metadata:
  name: efk-http
  labels:
    app: efk-http
  namespace: efk
spec:
  selector:
    app: efk
  ports:
  - name: efk-port-http
    port: 9200
    protocol: TCP
    targetPort: 9200
    nodePort: 32005
  type: NodePort
```

Elasticsearch uses port 9200 for every API call made over HTTP, including search, aggregations, monitoring, and any other HTTP request; all client libraries talk to Elasticsearch through this port. Port 9300 carries a custom binary protocol used for communication between the nodes of the cluster, for example cluster changes, master election, nodes joining or leaving, and shard allocation.

- Deploy Elasticsearch

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: efk
  labels:
    app: efk
  namespace: efk
spec:
  replicas: 3
  serviceName: efk
  selector:
    matchLabels:
      app: efk
  template:
    metadata:
      name: efk-con
      labels:
        app: efk
    spec:
      hostname: efk
      initContainers:
      - name: chmod-data
        image: docker.io/library/busybox:latest
        imagePullPolicy: IfNotPresent
        command:
        - sh
        - "-c"
        - |
          chown -R 1000:1000 /usr/share/elasticsearch/data
        securityContext:
          privileged: true
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
      - name: vm-max-count
        image: docker.io/library/busybox:latest
        imagePullPolicy: IfNotPresent
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
      - name: ulimit
        image: docker.io/library/busybox:latest
        imagePullPolicy: IfNotPresent
        # Note: ulimit only changes the limits of this init container's shell;
        # the values do not carry over to the elasticsearch container.
        command: ["sh", "-c", "ulimit -Hl unlimited && ulimit -Sl unlimited && ulimit -n 65536 && id"]
        securityContext:
          privileged: true
      containers:
      - name: efk
        image: docker.elastic.co/elasticsearch/elasticsearch:7.17.19
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            cpu: "1"
            # memory: "512Mi"
          requests:
            cpu: "0.5"
            # memory: "256Mi"
        env:
        - name: cluster.name
          value: efk-log
        - name: node.name
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: discovery.seed_hosts
          value: "efk-0.efk,efk-1.efk,efk-2.efk"
        - name: cluster.initial_master_nodes
          value: "efk-0,efk-1,efk-2"
        - name: bootstrap.memory_lock
          value: "false"
        - name: ES_JAVA_OPTS
          value: "-Xms512m -Xmx512m"
        ports:
        - containerPort: 9200
          name: port-http
          protocol: TCP
        - containerPort: 9300
          name: port-inside
          protocol: TCP
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
        - name: time
          mountPath: /etc/localtime
      volumes:
      - name: time
        hostPath:
          path: /etc/localtime
          type: File
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      storageClassName: "nfs-stgc-delete"
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 1Gi
```

The initContainers adjust a few system parameters. Before deploying, you can run the same Elasticsearch version in Docker to find out which parameters need to be changed.

```yaml
securityContext:
  privileged: true
```

Runs the container in privileged mode.

```yaml
env:
- name: cluster.name
  value: efk-log
- name: node.name
  valueFrom:
    fieldRef:
      fieldPath: metadata.name
- name: discovery.seed_hosts
  value: "efk-0.efk,efk-1.efk,efk-2.efk"
- name: cluster.initial_master_nodes
  value: "efk-0,efk-1,efk-2"
- name: bootstrap.memory_lock
  value: "false"
- name: ES_JAVA_OPTS
  value: "-Xms512m -Xmx512m"
```

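- node.name: sets each Elasticsearch node name to its Pod name (efk-0, efk-1, efk-2) through the Downward API field metadata.name, so the names match the entries in cluster.initial_master_nodes. It also resolves an error Elasticsearch reported about missing metadata.

- discovery.seed_hosts: configures node discovery, that is, the addresses a node contacts to find the other master-eligible nodes. Here they are the stable per-Pod DNS names provided by the headless Service efk.

- cluster.initial_master_nodes: when a brand-new Elasticsearch cluster starts for the first time, a cluster bootstrapping step determines the set of master-eligible nodes whose votes are counted in the first election. In development mode, with no discovery settings configured, the nodes perform this step automatically. Because this automatic bootstrapping is inherently unsafe, when you start a brand-new cluster in production mode you must explicitly list the master-eligible nodes whose votes should be counted in the first election; cluster.initial_master_nodes holds this list. Do not use this setting when restarting a cluster or when adding a new node to an existing cluster.

- ES_JAVA_OPTS: passes JVM (Java virtual machine) options to the Elasticsearch process. -Xms512m -Xmx512m sets both the initial and the maximum heap size to 512 MB.

- bootstrap.memory_lock: locks the process memory so it cannot be swapped out. Kubernetes nodes normally run with swap disabled, so it is set to false here (false is also the default).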
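Because the StatefulSet names its Pods `<name>-<ordinal>` and the headless Service gives each Pod the DNS name `<pod>.<service>`, both discovery values can be derived instead of typed by hand, which avoids typos such as writing `efk-2.elk` for `efk-2.efk`. A minimal sketch, assuming the names used in this article (StatefulSet `efk`, Service `efk`, 3 replicas); the helper functions are hypothetical:

```python
from __future__ import annotations


def pod_names(statefulset: str, replicas: int) -> list[str]:
    # StatefulSet Pods are named <statefulset>-<ordinal>, starting at 0.
    return [f"{statefulset}-{i}" for i in range(replicas)]


def seed_hosts(statefulset: str, service: str, replicas: int) -> str:
    # Within the same namespace, a Pod behind a headless Service resolves as
    # <pod-name>.<service-name> (short for <pod>.<service>.<ns>.svc.cluster.local).
    return ",".join(f"{pod}.{service}" for pod in pod_names(statefulset, replicas))


def initial_master_nodes(statefulset: str, replicas: int) -> str:
    # Entries must match node.name, which the manifest sets to the Pod name.
    return ",".join(pod_names(statefulset, replicas))


if __name__ == "__main__":
    print(seed_hosts("efk", "efk", 3))     # efk-0.efk,efk-1.efk,efk-2.efk
    print(initial_master_nodes("efk", 3))  # efk-0,efk-1,efk-2
```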

Checking the cluster:

- Check node status: http://192.168.0.100:32005/_cat/nodes?v&pretty

```
ip           heap.percent ram.percent cpu load_1m load_5m load_15m node.role   master name
10.244.1.245           28          79   4    3.63    2.97     1.78 cdfhilmrstw -      efk-2
10.244.1.244           30          79   3    3.63    2.97     1.78 cdfhilmrstw -      efk-0
10.244.2.235           51          67   5    0.70    0.73     0.67 cdfhilmrstw *      efk-1
```

- Check cluster health: http://192.168.0.100:32005/_cluster/health?pretty. The status is green:

```json
{
  "cluster_name" : "efk-log",
  "status" : "green",
  "timed_out" : false,
  "number_of_nodes" : 3,
  "number_of_data_nodes" : 3,
  "active_primary_shards" : 3,
  "active_shards" : 6,
  "relocating_shards" : 0,
  "initializing_shards" : 0,
  "unassigned_shards" : 0,
  "delayed_unassigned_shards" : 0,
  "number_of_pending_tasks" : 0,
  "number_of_in_flight_fetch" : 0,
  "task_max_waiting_in_queue_millis" : 0,
  "active_shards_percent_as_number" : 100.0
}
```

- Check cluster state: http://192.168.0.100:32005/_cluster/state?pretty

```json
{
  "cluster_name" : "efk-log",
  "cluster_uuid" : "STY6wpxzS0qHBy5XrjQRuw",
  "version" : 93,
  "state_uuid" : "bV0lB3d6TzOYIZc9WE92dg",
  "master_node" : "-KIR5smtTvCZE3RJSEPh1w",
  "blocks" : { },
  "nodes" : {
    "HEpcL5aSTdaEXdE_75319g" : {
      "name" : "efk-2",
      "ephemeral_id" : "ToczCgVFSCuloVO2gitEaA",
      "transport_address" : "10.244.1.245:9300",
      "attributes" : {
        "ml.machine_memory" : "3956289536",
        "ml.max_open_jobs" : "512",
        "xpack.installed" : "true",
        "ml.max_jvm_size" : "536870912",
        "transform.node" : "true"
      },
      "roles" : ["data", "data_cold", "data_content", "data_frozen", "data_hot", "data_warm", "ingest", "master", "ml", "remote_cluster_client", "transform"]
    },
    "Qs7bNaTvS8CSdMmQuu9Jog" : {
      "name" : "efk-0",
      "ephemeral_id" : "Gdyw-MhAQhmeLl7k2Sm5HQ",
      "transport_address" : "10.244.1.244:9300",
      "attributes" : {
        "ml.machine_memory" : "3956289536",
        "ml.max_open_jobs" : "512",
        "xpack.installed" : "true",
        "ml.max_jvm_size" : "536870912",
        "transform.node" : "true"
      },
      "roles" : ["data", "data_cold", "data_content", "data_frozen", "data_hot", "data_warm", "ingest", "master", "ml", "remote_cluster_client", "transform"]
    },
    "-KIR5smtTvCZE3RJSEPh1w" : {
      "name" : "efk-1",
      "ephemeral_id" : "J7o-nw8LQxaxw7O9kjvEIg",
      "transport_address" : "10.244.2.235:9300",
      "attributes" : {
        "ml.machine_memory" : "3956289536",
        "xpack.installed" : "true",
        "transform.node" : "true",
        "ml.max_open_jobs" : "512",
        "ml.max_jvm_size" : "536870912"
      },
      "roles" : ["data", "data_cold", "data_content", "data_frozen", "data_hot", "data_warm", "ingest", "master", "ml", "remote_cluster_client", "transform"]
    }
  },
  .......
```
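The three checks above can also be scripted. The sketch below is an illustration, assuming the NodePort address `192.168.0.100:32005` from this article; `get`, `get_json`, `elected_master`, `is_healthy`, and `heap_bytes` are hypothetical helpers. `heap_bytes` shows the arithmetic behind `ml.max_jvm_size`: `-Xmx512m` is 512 × 1024 × 1024 = 536870912 bytes, the value every node reports.

```python
import json
import urllib.request

BASE_URL = "http://192.168.0.100:32005"  # NodePort address used in this article


def get(path: str) -> str:
    # Plain HTTP GET against the efk-http NodePort Service.
    with urllib.request.urlopen(BASE_URL + path, timeout=5) as resp:
        return resp.read().decode("utf-8")


def get_json(path: str) -> dict:
    return json.loads(get(path))


def elected_master(cat_nodes: str) -> str:
    """Return the node marked '*' in the text output of _cat/nodes?v."""
    lines = cat_nodes.strip().splitlines()
    header = lines[0].split()
    master_col, name_col = header.index("master"), header.index("name")
    for line in lines[1:]:
        cols = line.split()
        if cols[master_col] == "*":
            return cols[name_col]
    raise ValueError("no elected master in the output")


def is_healthy(health: dict, expected_nodes: int) -> bool:
    # green means every primary and replica shard is assigned.
    return (
        health["status"] == "green"
        and health["number_of_nodes"] == expected_nodes
        and health["unassigned_shards"] == 0
    )


def heap_bytes(size: str) -> int:
    # JVM sizes such as "512m": k/m/g suffixes are powers of 1024; no suffix means bytes.
    units = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}
    suffix = size[-1].lower()
    if suffix in units:
        return int(size[:-1]) * units[suffix]
    return int(size)
```

Against the live cluster, `elected_master(get("/_cat/nodes?v"))` and `is_healthy(get_json("/_cluster/health"), expected_nodes=3)` would perform the first two checks; with the outputs shown above they should give `efk-1` and `True`.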

Deploy Kibana

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: efk
  labels:
    app: kibana
spec:
  selector:
    app: kibana
  ports:
  - name: port-kibana
    port: 5601
    protocol: TCP
    nodePort: 30006
    targetPort: 5601
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: efk
  labels:
    app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      name: kibana
      labels:
        app: kibana
    spec:
      nodeSelector:
        app: ng              # only schedule on nodes labelled app=ng
      containers:
      - name: kibana
        image: docker.elastic.co/kibana/kibana:7.17.19
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            cpu: "1"
          requests:
            cpu: "0.5"
        env:
        - name: ELASTICSEARCH_HOSTS
          value: http://efk:9200
        volumeMounts:
        - name: time
          mountPath: /etc/localtime
        - name: data-kibana
          mountPath: /etc/elk/kibana/data
          subPath: data
        ports:
        - name: kibana-port
          containerPort: 5601
      volumes:
      - name: time
        hostPath:
          path: /etc/localtime
          type: File
      - name: data-kibana
        hostPath:
          path: /mnt/kibana
```

```yaml
env:
- name: ELASTICSEARCH_HOSTS
  value: http://efk:9200
```

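Sets the address Kibana uses to reach Elasticsearch. Because Kibana runs in the same efk namespace, the short name efk resolves to the headless Service efk.efk.svc.cluster.local.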

Deploying fluentd with a DaemonSet

- Create the configuration file

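This Kubernetes cluster uses containerd as its container runtime, so the logs to collect are under /var/log/containers. These files are written in the CRI log format and must be parsed as such; otherwise fluentd reports malformed JSON errors. A minimal configuration file: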

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluent
  labels:
    app: fluent
  namespace: efk
data:
  system.conf: |
    <source>
      @type tail
      path /var/log/containers/*.log
      tag kube.*
      read_from_head true
      <parse>
        # containerd writes CRI lines: <time> <stream> <logtag> <message>
        @type cri
        # Regex alternative with the same fields:
        # @type regexp
        # expression /^(?<time>[^ ]+) (?<stream>stdout|stderr) (?<logtag>[^ ]*) (?<message>.*)$/
      </parse>
    </source>
    <match kube.**>
      @type elasticsearch
      host efk.efk.svc.cluster.local
      port 9200
      logstash_format true
      logstash_prefix kube
      logstash_dateformat %Y.%m.%d
      include_tag_key true
      tag_key @log_name
    </match>
```
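To see why CRI lines need their own parser, here is a Python sketch that splits one line with the same field layout as the regular expression in the ConfigMap (Python spells named groups `(?P<name>...)` instead of Ruby's `(?<name>...)`). The sample line is made up, and `parse_cri` is a hypothetical helper. Every line starts with a timestamp, a stream name, and a log tag before the actual message, which is why treating the whole line as JSON fails.

```python
import re

# Fields: RFC 3339 timestamp, stream, log tag (F = full line, P = partial), message.
CRI_LINE = re.compile(
    r"^(?P<time>[^ ]+) (?P<stream>stdout|stderr) (?P<logtag>[^ ]*) (?P<message>.*)$"
)


def parse_cri(line: str) -> dict:
    m = CRI_LINE.match(line)
    if m is None:
        raise ValueError(f"not a CRI log line: {line!r}")
    record = m.groupdict()
    # "P" marks a partial line that continues in the next record
    # (the runtime splits very long lines); "F" marks a full line.
    record["partial"] = record["logtag"].startswith("P")
    return record


if __name__ == "__main__":
    sample = '2024-03-28T10:15:30.123456789+08:00 stdout F {"level":"info"}'
    print(parse_cri(sample)["message"])  # {"level":"info"}
```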

- Deploy fluentd

fluentd official documentation: https://github.com/fluent/fluentd-kubernetes-daemonset/tree/master

DaemonSet official documentation: https://kubernetes.io/zh-cn/docs/concepts/workloads/controllers/daemonset/

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd
  namespace: efk
  labels:
    app: fluentd
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentd
  labels:
    app: fluentd
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - namespaces
  verbs:
  - get
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd
roleRef:
  kind: ClusterRole
  name: fluentd
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: fluentd
  namespace: efk
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: efk
  labels:
    app: fluentd
spec:
  selector:
    matchLabels:
      app: fluentd
  template:
    metadata:
      labels:
        app: fluentd
    spec:
      serviceAccount: fluentd
      serviceAccountName: fluentd
      tolerations:
      # Also run on control-plane nodes, which carry this NoSchedule taint.
      - key: "node-role.kubernetes.io/control-plane"
        effect: "NoSchedule"
      initContainers:
      # Copy the ConfigMap file into a writable emptyDir mounted as conf.d.
      - name: init-fluentd
        image: docker.io/fluent/fluentd-kubernetes-daemonset:v1.16.5-debian-elasticsearch7-amd64-1.0
        imagePullPolicy: IfNotPresent
        command:
        - bash
        - "-c"
        - |
          cp /mnt/config-map/system.conf /mnt/conf.d
        volumeMounts:
        - name: conf
          mountPath: /mnt/conf.d
        - name: config-map
          mountPath: /mnt/config-map
      containers:
      - name: fluentd
        image: docker.io/fluent/fluentd-kubernetes-daemonset:v1.16.5-debian-elasticsearch7-amd64-1.0
        imagePullPolicy: IfNotPresent
        env:
        - name: K8S_NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: FLUENT_ELASTICSEARCH_HOST
          value: "efk.efk.svc.cluster.local"
        - name: FLUENT_ELASTICSEARCH_PORT
          value: "9200"
        - name: FLUENT_ELASTICSEARCH_SCHEME
          value: "http"
        - name: FLUENTD_SYSTEMD_CONF
          value: disable
        - name: FLUENT_CONTAINER_TAIL_EXCLUDE_PATH
          value: /var/log/containers/fluent*
        - name: FLUENT_CONTAINER_TAIL_PARSER_TYPE
          value: '/^(?<time>.+) (?<stream>stdout|stderr)( (?<logtag>.))? (?<log>.*)$/'
        resources:
          limits:
            memory: 512Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: containerslogs
          mountPath: /var/log/containers
          readOnly: true
        - name: conf
          mountPath: /fluentd/etc/conf.d
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: containerslogs
        hostPath:
          path: /var/log/containers
      - name: conf
        emptyDir: {}
      - name: config-map
        configMap:
          name: fluent
```

- Deployments and DaemonSets: differences and similarities

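DaemonSets are very similar to Deployments: both create Pods, and the processes in those Pods are not expected to terminate (for example, web servers or storage servers). Use a Deployment for stateless services such as a frontend, where scaling the number of replicas up and down and rolling out updates smoothly matter far more than controlling exactly which host a Pod runs on. Use a DaemonSet when it is important that a copy of a Pod always runs on all hosts or on specific hosts, and that it starts before other Pods.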

- Updating a DaemonSet

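If node labels are changed, the DaemonSet immediately adds Pods to newly matching nodes and deletes Pods from nodes that no longer match. You can modify the Pods a DaemonSet creates, but not every Pod field can be updated, and the DaemonSet controller uses the original template the next time a node (even one with the same name) is created. You can also delete a DaemonSet: with kubectl, --cascade=orphan (--cascade=false in older kubectl versions) leaves its Pods running on the nodes. You can then create a new DaemonSet with a different template but the same selector; it recognizes all existing Pods through label matching. If any Pods need to be replaced, the DaemonSet replaces them according to its updateStrategy.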
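The label-driven behaviour can be modelled as a set difference. A minimal sketch, assuming an equality-only `nodeSelector` and a hypothetical node label `logging=on`; `eligible` and `after_relabel` are illustrative names, not Kubernetes APIs:

```python
from __future__ import annotations


def eligible(node_labels: dict[str, dict[str, str]], selector: dict[str, str]) -> set[str]:
    """Nodes whose labels contain every key/value pair of the nodeSelector."""
    return {
        node
        for node, labels in node_labels.items()
        if all(labels.get(key) == value for key, value in selector.items())
    }


def after_relabel(
    before: dict[str, dict[str, str]],
    after: dict[str, dict[str, str]],
    selector: dict[str, str],
) -> tuple[set[str], set[str]]:
    """Return (nodes that gain a daemon Pod, nodes that lose it) after a label change."""
    old, new = eligible(before, selector), eligible(after, selector)
    return new - old, old - new


if __name__ == "__main__":
    before = {"node1": {"logging": "on"}, "node2": {}, "node3": {"logging": "on"}}
    after = {"node1": {"logging": "on"}, "node2": {"logging": "on"}, "node3": {}}
    gain, lose = after_relabel(before, after, {"logging": "on"})
    print(sorted(gain), sorted(lose))  # ['node2'] ['node3']
```

An empty selector matches every node, which corresponds to a DaemonSet without a `nodeSelector`.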

- Communicating with Pods in a DaemonSet

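- Push: Pods in the DaemonSet are configured to send updates to another service, such as a statistics database.

- NodeIP and known port: Pods in the DaemonSet can use a hostPort, which makes them reachable through the node IPs. Clients obtain the list of node IPs by some means and reach the known port on each of them.

- DNS: create a headless Service with the same Pod selector, then discover the DaemonSet Pods through the endpoints resource or by retrieving multiple A records from DNS.

- Service: create a Service with the same Pod selector and use it to reach a DaemonSet Pod on a random node (there is no way to reach a specific node this way).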
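For the DNS pattern, a client resolves the headless Service name and gets one address per Pod. A sketch using Python's standard `socket.getaddrinfo`; `daemon_pod_ips` is a hypothetical helper, and the resolver is injectable so the function can be exercised without a cluster:

```python
import socket
from typing import Callable, List


def daemon_pod_ips(host: str, port: int, resolve: Callable = socket.getaddrinfo) -> List[str]:
    """Collect the distinct IPs returned for a headless Service name.

    A headless Service (clusterIP: None) answers with one A record per ready
    Pod, so each address belongs to a DaemonSet Pod on some node.
    """
    infos = resolve(host, port, type=socket.SOCK_STREAM)
    # getaddrinfo yields (family, type, proto, canonname, sockaddr) tuples;
    # sockaddr[0] is the IP address.
    return sorted({info[4][0] for info in infos})
```

Inside the cluster you might call, for example, `daemon_pod_ips("fluentd-headless.efk.svc.cluster.local", 24224)`; both the Service name and the port are assumptions, since this article does not define such a Service.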

- Taints and tolerations

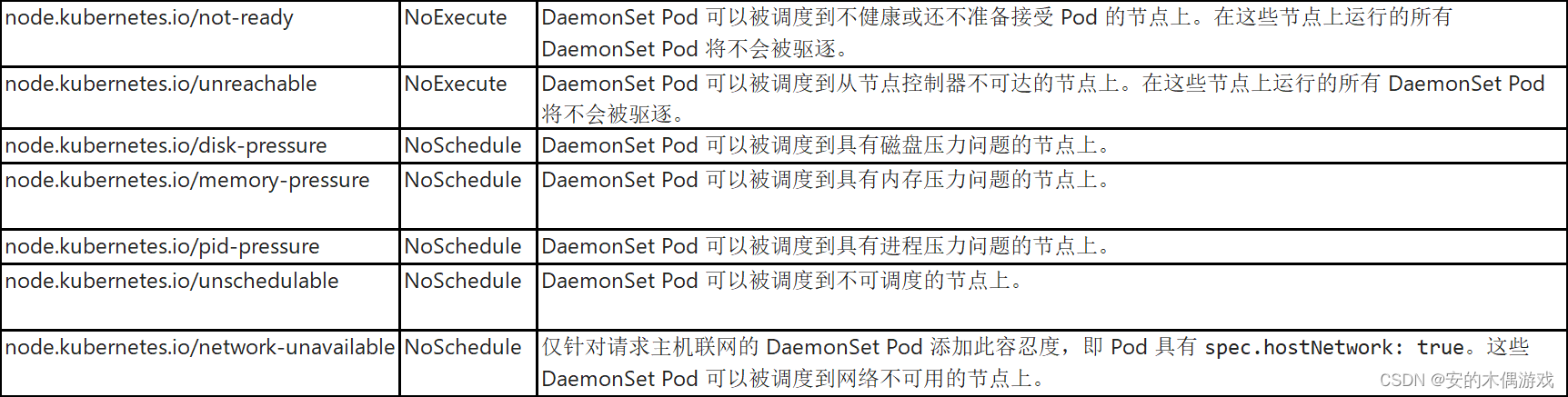

DaemonSet控制器会自动将一组容忍度添加到DaemonSet Pod:

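You can also define your own tolerations in the DaemonSet's Pod template, and they are added to the DaemonSet Pods as well. The fluentd DaemonSet above does this to run on control-plane nodes.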
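The matching rules behind tolerations can be sketched as follows. This is a simplified model of the rules described in the Kubernetes documentation (it ignores `tolerationSeconds` and `NoExecute` eviction timing), and the classes and functions are hypothetical. The usage checks the toleration the fluentd DaemonSet declares against the control-plane taint.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Taint:
    key: str
    value: str = ""
    effect: str = "NoSchedule"


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    operator: str = "Equal"  # Kubernetes default when operator is omitted
    value: str = ""
    effect: str = ""         # empty effect tolerates every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        # Exists ignores the value; an empty key tolerates every taint.
        return tol.key in ("", taint.key)
    return tol.key == taint.key and tol.value == taint.value


def schedulable(tolerations: list[Toleration], taints: list[Taint]) -> bool:
    # Only NoSchedule/NoExecute taints block scheduling; each must be tolerated.
    blocking = [t for t in taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in blocking)


if __name__ == "__main__":
    control_plane = [Taint("node-role.kubernetes.io/control-plane")]
    fluentd = [Toleration(key="node-role.kubernetes.io/control-plane", effect="NoSchedule")]
    print(schedulable(fluentd, control_plane))  # True
    print(schedulable([], control_plane))       # False
```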

Viewing logs in Kibana

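Open Kibana (NodePort 30006), click Discover, and create an index pattern by following the prompts (for example kube-*, matching logstash_prefix kube in the ConfigMap). Then click Discover again to view the logs.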
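The index name to match follows from the ConfigMap settings (`logstash_format true`, `logstash_prefix kube`, `logstash_dateformat %Y.%m.%d`). A minimal sketch, assuming the Elasticsearch output plugin's default `-` separator between prefix and date; `logstash_index` is a hypothetical helper and the date is arbitrary:

```python
from datetime import date
from fnmatch import fnmatch


def logstash_index(prefix: str, day: date, dateformat: str = "%Y.%m.%d", separator: str = "-") -> str:
    # With logstash_format enabled, each day's events go to
    # <logstash_prefix><separator><date formatted with logstash_dateformat>.
    return f"{prefix}{separator}{day.strftime(dateformat)}"


if __name__ == "__main__":
    index = logstash_index("kube", date(2024, 3, 28))
    print(index)                     # kube-2024.03.28
    print(fnmatch(index, "kube-*"))  # True
```

A pattern such as `kube-*` therefore covers one index per day.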