The CIFAR-10 dataset contains 60,000 three-channel color images, each 32x32 pixels, in 10 classes with 6,000 images per class, as shown in Figure 4.27.

The residual structure introduced earlier handles the CIFAR-10 dataset well and can reach fairly high accuracy.

First comes image augmentation, using the augmentation methods introduced earlier.

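    from torchvision import transforms

    # Training set: resize to 40x40, random horizontal flip,
    # random 32x32 crop, then convert to a tensor and normalize.
    train_transform = transforms.Compose([
        transforms.Resize(40),
        transforms.RandomHorizontalFlip(),
        transforms.RandomCrop(32),
        transforms.ToTensor(),
        transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5]),
    ])

    # Test set: tensor conversion and normalization only.
    test_transform = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5]),
    ])

Note that augmentation is applied only to the training images, to improve the model's ability to generalize. The test set is only normalized (centered), with no other augmentation.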
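To make the effect of these transforms concrete, here is a small pure-Python sketch. It assumes the usual behavior of `ToTensor` (scaling 8-bit pixel values into [0, 1]) and of `Normalize` (computing `(x - mean) / std` per channel). The helper names `normalize` and `crop_offsets` are hypothetical and exist only for illustration.

```python
def normalize(pixel, mean=0.5, std=0.5):
    """Mimic ToTensor followed by Normalize for one 8-bit channel value."""
    x = pixel / 255.0          # ToTensor: 0..255 -> 0.0..1.0
    return (x - mean) / std    # Normalize: (x - mean) / std


def crop_offsets(image_size=40, crop_size=32):
    """Count the distinct top-left positions a random crop can take."""
    per_axis = image_size - crop_size + 1
    return per_axis * per_axis


print(normalize(0), normalize(255))  # -1.0 1.0
print(crop_offsets())                # 81
```

With mean 0.5 and standard deviation 0.5, pixel values end up in the range [-1, 1], and enlarging the image to 40x40 before taking a 32x32 crop gives each training image 81 possible crop positions.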

Next, define the basic building blocks of ResNet.

    import torch.nn as nn

    # 3x3 convolution with padding 1; the spatial size is preserved when stride=1.
    def conv3x3(in_channels, out_channels, stride=1):
        return nn.Conv2d(in_channels, out_channels, kernel_size=3,
                         stride=stride, padding=1, bias=False)

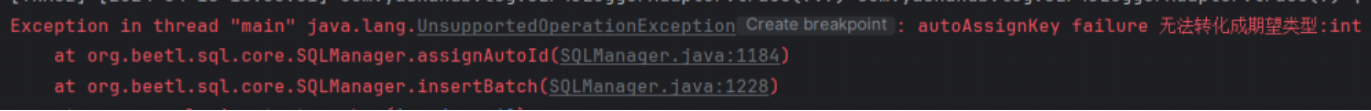

    # Residual Block
    class ResidualBlock(nn.Module):
        def __init__(self, in_channels, out_channels, stride=1, downsample=None):
            super(ResidualBlock, self).__init__()
            self.conv1 = conv3x3(in_channels, out_channels, stride)
            self.bn1 = nn.BatchNorm2d(out_channels)
            self.relu = nn.ReLU(inplace=True)
            self.conv2 = conv3x3(out_channels, out_channels)
            self.bn2 = nn.BatchNorm2d(out_channels)
            self.downsample = downsample

        def forward(self, x):
            residual = x
            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)
            out = self.conv2(out)  # second convolution, applied before bn2
            out = self.bn2(out)
            # Project the shortcut when the shape changes so the addition is valid.
            if self.downsample is not None:
                residual = self.downsample(x)
            out += residual
            out = self.relu(out)
            return out

As described earlier, define the residual block first, then stack residual blocks to build the network. Pay attention to how the dimensions change along the way.

    class ResNet(nn.Module):
        def __init__(self, block, layers, num_classes=10):
            super(ResNet, self).__init__()
            self.in_channels = 16
            self.conv = conv3x3(3, 16)
            self.bn = nn.BatchNorm2d(16)
            self.relu = nn.ReLU(inplace=True)
            # layers[i] is the number of residual blocks in stage i.
            self.layer1 = self.make_layer(block, 16, layers[0])
            self.layer2 = self.make_layer(block, 32, layers[1], 2)
            self.layer3 = self.make_layer(block, 64, layers[2], 2)
            self.avg_pool = nn.AvgPool2d(8)
            self.fc = nn.Linear(64, num_classes)

        def make_layer(self, block, out_channels, blocks, stride=1):
            downsample = None
            # The shortcut needs a projection when the spatial size or channel count changes.
            if (stride != 1) or (self.in_channels != out_channels):
                downsample = nn.Sequential(
                    conv3x3(self.in_channels, out_channels, stride=stride),
                    nn.BatchNorm2d(out_channels))
            layers = [block(self.in_channels, out_channels, stride, downsample)]
            self.in_channels = out_channels
            for i in range(1, blocks):
                layers.append(block(out_channels, out_channels))
            return nn.Sequential(*layers)

        def forward(self, x):
            out = self.conv(x)
            out = self.bn(out)
            out = self.relu(out)
            out = self.layer1(out)
            out = self.layer2(out)
            out = self.layer3(out)
            out = self.avg_pool(out)
            out = out.view(out.size(0), -1)  # flatten to (batch, 64)
            out = self.fc(out)
            return out
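The dimension changes in this network can be traced by hand. The following pure-Python sketch assumes the standard convolution output-size formula, floor((n + 2p - k) / s) + 1, and mirrors the channel and stride settings of the `ResNet` class. The function names `conv_out` and `trace_shapes` are hypothetical helpers, not part of PyTorch.

```python
def conv_out(n, kernel=3, stride=1, padding=1):
    """Spatial output size of a convolution: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


def trace_shapes(size=32):
    """Follow (name, channels, height/width, needs_downsample) for a square input."""
    shapes = []
    # Stem convolution: 3 -> 16 channels, stride 1 keeps the spatial size.
    size, channels = conv_out(size), 16
    shapes.append(("conv", channels, size, None))
    # Three stages: (name, output channels, stride of the first block).
    stages = [("layer1", 16, 1), ("layer2", 32, 2), ("layer3", 64, 2)]
    for name, out_channels, stride in stages:
        # Same condition make_layer uses to decide whether to project the shortcut.
        needs_downsample = stride != 1 or channels != out_channels
        size, channels = conv_out(size, stride=stride), out_channels
        shapes.append((name, channels, size, needs_downsample))
    # An 8x8 average pool over an 8x8 feature map leaves a 1x1 map.
    size = size // 8
    shapes.append(("avg_pool", channels, size, None))
    return shapes


for row in trace_shapes():
    print(row)
```

For a 32x32 input the feature maps go 32 → 32 → 16 → 8, and the pooled output has 64 channels at 1x1, which is why the classifier is `nn.Linear(64, num_classes)`.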

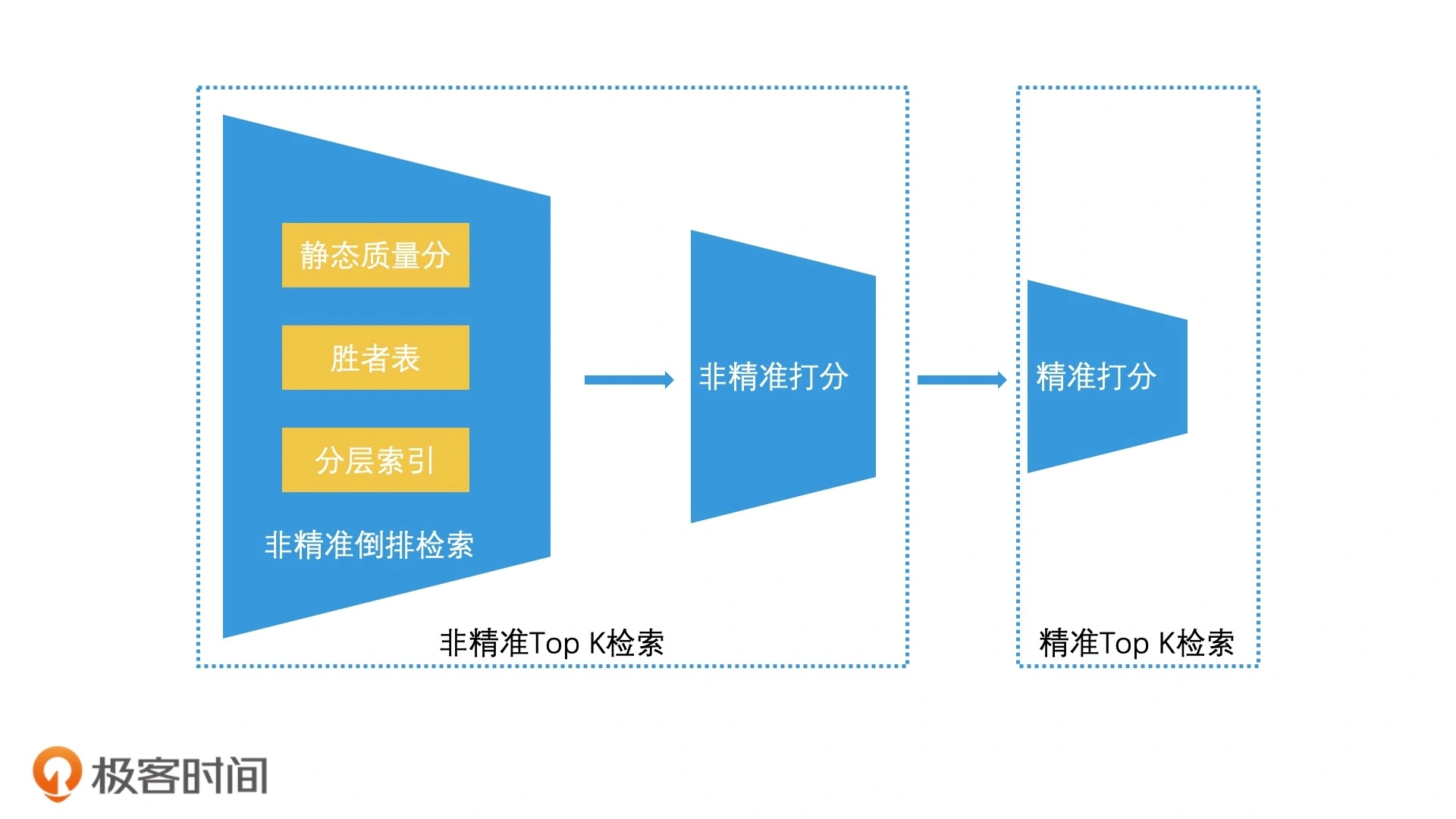

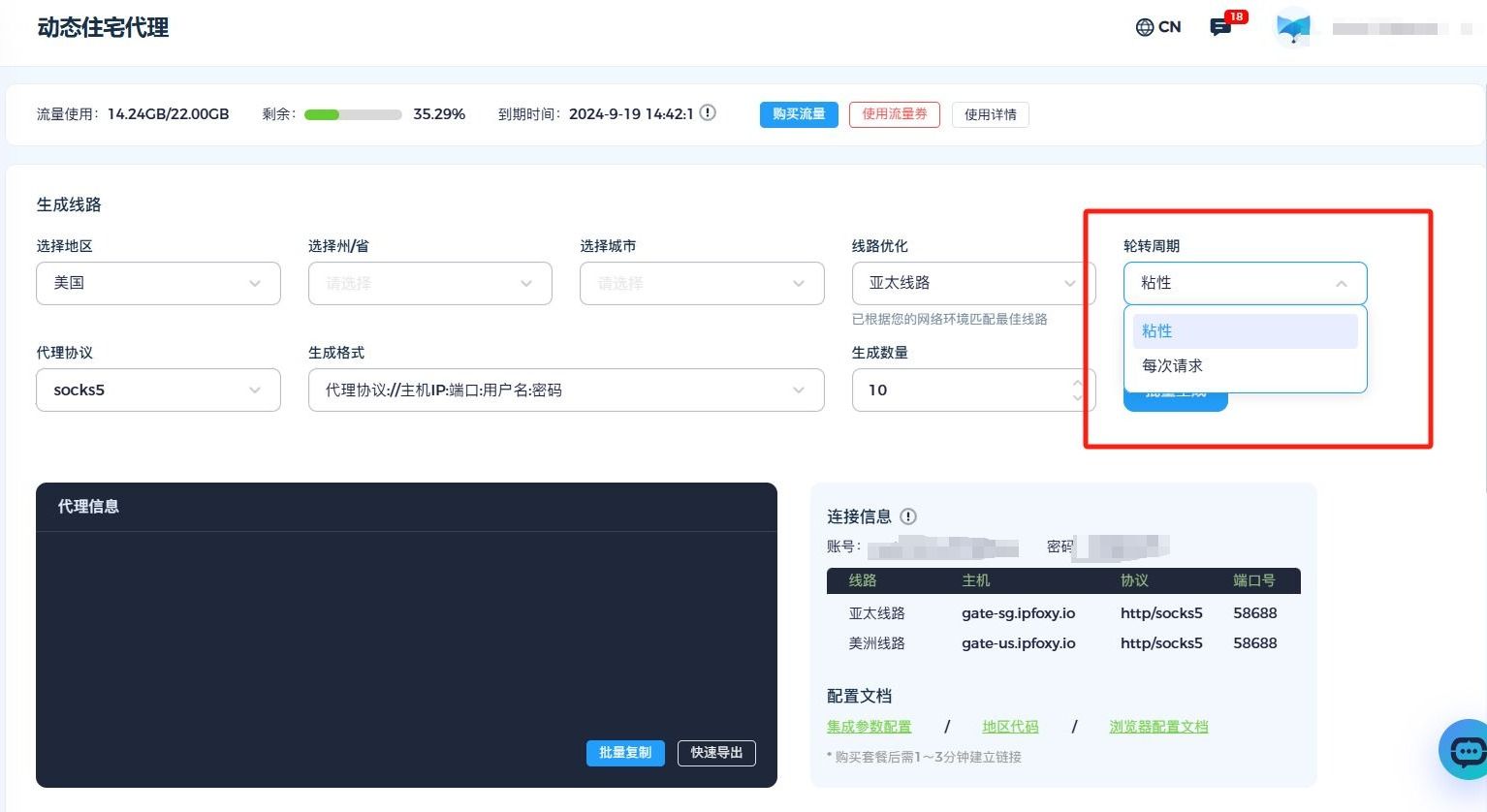

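Finally, training on the CIFAR-10 dataset for 100 epochs gives 66.61% accuracy on the training set and 68% on the validation set. Because training ran for only 100 epochs, there is still room for improvement. A deeper residual network and more training techniques can also produce better results, as shown in Figure 4.28.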
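For reference, accuracy figures of this kind are typically computed as the share of samples whose highest-scoring class matches the label. The sketch below uses plain Python lists rather than tensors. The function name `top1_accuracy` and the toy scores are made up for illustration; they are not the author's evaluation code or results.

```python
def top1_accuracy(scores, labels):
    """Percentage of samples whose highest-scoring class equals the label."""
    correct = 0
    for row, label in zip(scores, labels):
        predicted = max(range(len(row)), key=lambda i: row[i])  # argmax over classes
        correct += int(predicted == label)
    return 100.0 * correct / len(labels)


# Toy example: 4 samples, 3 classes (illustrative numbers only).
scores = [[0.1, 0.7, 0.2], [0.8, 0.1, 0.1], [0.3, 0.3, 0.4], [0.2, 0.5, 0.3]]
labels = [1, 0, 1, 1]
print(top1_accuracy(scores, labels))  # 75.0
```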

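This is a plain version of CIFAR-10 classification that I wrote following my own ideas, so the final accuracy is not very high. Readers who have understood the method above are welcome to try improving it.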
