-

- 代码实战

- 1 数据

- 2 特征工程

- 2.1 数据清洗

- 2.1.1 数据格式处理

- 2.1.2 缺失值

- 2.1.3 标签处理和选择数据

- 2.2 特征衍生

- 2.3 分箱

- 2.1 数据清洗

- 参考资料

- 代码实战

代码实战

1 数据

来自于lending club

print (data.shape) #(39785, 25)

data.info()

'''

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 39785 entries, 0 to 39784

Data columns (total 25 columns):

member_id 39785 non-null int64

loan_amnt #贷款额度 39785 non-null int64

term 39785 non-null object

loan_status 39785 non-null object

int_rate 39785 non-null object

emp_length 38707 non-null object

home_ownership 39785 non-null object

annual_inc #年收入 39785 non-null float64

verification_status 39785 non-null object

desc 26818 non-null object

purpose 39785 non-null object

title 39774 non-null object

zip_code 39785 non-null object

addr_state 39785 non-null object

dti 39785 non-null float64

delinq_2yrs 39785 non-null int64

inq_last_6mths 39785 non-null int64

mths_since_last_delinq 14058 non-null float64

mths_since_last_record 2791 non-null float64

open_acc 39785 non-null int64

pub_rec 39785 non-null int64

total_acc 39785 non-null int64

pub_rec_bankruptcies 39088 non-null float64

issue_d #放款日期 39785 non-null object

earliest_cr_line #信用报告最早日期 39785 non-null object

dtypes: float64(5), int64(7), object(13)

'''2 特征工程

特征工程包括:

- 数据清洗

- 特征衍生

- 特征编码

- 特征筛选

2.1 数据清洗

主要的处理包括:

- 数据格式处理,包括日期以及字符型转为整型

- 缺失值处理

- 处理标签和选择数据

2.1.1 数据格式处理

- int_rate由str转为float

data['int_rate']=data.int_rate.apply(lambda x:float(x.replace("%",""))/100)- 日期处理

Python time strftime() Method

import datetime

import datetime

def ConvertDateStr(x):mth_dict={'Jan':1,'Feb':2,'Mar':3,'Apr':4,'May':5,'Jun':6,'Jul':7,'Aug':8,'Sep':9,'Oct':10,'Nov':11,'Dec':12}yr= int(x[4:6])if yr>17:yr=yr+1900else:yr=yr+2000mth=mth_dict[x[:3]]return datetime.datetime(yr,mth,1)data['app_date_clean']=data['issue_d'].map(lambda d:ConvertDateStr(d))

data['earliest_cr_line_clean']=data['earliest_cr_line_clean'].map(lambda d:ConvertDateStr(d))- emp_length

data.emp_length=data.emp_length.fillna("n/a")

data.emp_length.value_counts()

'''

10+ years 8899

< 1 year 4590

2 years 4394

3 years 4098

4 years 3444

5 years 3286

1 year 3247

6 years 2231

7 years 1775

8 years 1485

9 years 1258

n/a 1078

Name: emp_length, dtype: int64

'''import re

def CareerYear(x):if x.find('n/a')>-1:return -1elif x.find('10+')>-1:return 11elif x.find('< 1')>-1:return 0else:return int(re.sub("\D","",x)) # \D matches any non-digit characterdata.emp_length=data.emp_length.apply(CareerYear)

data.emp_length.value_counts()

'''

11 88990 45902 43943 40984 34445 32861 32476 22317 17758 14859 1258

-1 1078

Name: emp_length, dtype: int64

'''2.1.2 缺失值

total=data.isnull().sum().sort_values(ascending=False)

percent=(data.isnull().sum()/data.isnull().count()).sort_values(ascending=False)

missing_data=pd.concat([total,percent],axis=1,keys=['Total','Percent'])

missing_data.head(10)- 缺失值填补

def MakeupMissing(x):if np.isnan(x):return -1else:return x# 处理mths_since_last_delinq。注意原始值中有0,所以用-1代替缺失

data['mths_since_last_delinq_clean'] = data['mths_since_last_delinq'].map(lambda x:MakeupMissing(x))

data['mths_since_last_record_clean'] = data['mths_since_last_record'].map(lambda x:MakeupMissing(x))

data['pub_rec_bankruptcies_clean'] = data['pub_rec_bankruptcies'].map(lambda x:MakeupMissing(x))- desc:将缺失作为一种状态,非缺失作为另外一种状态

def DescExisting(x):x=str(x)if x=='nan':return 'no desc'else:return 'desc'data['desc_clean']=data['desc'].apply(DescExisting)

data.desc_clean.value_counts()

'''

desc 26818

no desc 12967

Name: desc_clean, dtype: int64

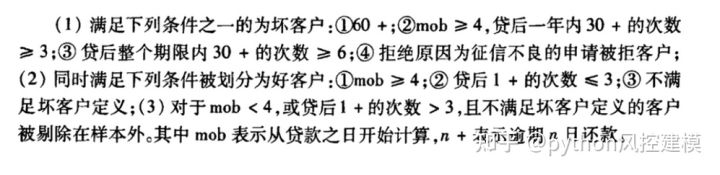

'''2.1.3 标签处理和选择数据

'''

由于存在不同的贷款期限(term),申请评分卡模型评估的违约概率必须要在统一的期限中,且不宜太长,所以选取term=36months的行本

'''

data.term=data.term.apply(lambda x:int(x.replace(" months","")))

selectData=data.loc[data.term==36]

print (selectData.shape) #(29095, 32)data.loan_status.value_counts()

'''

Fully Paid 34115

Charged Off 5670

Name: loan_status, dtype: int64

'''

data['y']=data['loan_status'].map(lambda x:int(x=='Charged Off'))2.2 特征衍生

#贷款额度与收入之比

selectData['amt_ratio']=selectData.apply(lambda x:x['loan_amnt']/x['annual_inc'],axis=1)#考虑earliest_cr_line到申请日期的跨度,以月份记

from dateutil.relativedelta import relativedelta

def MonthGap(earlyDate, lateDate):if lateDate > earlyDate:gap = relativedelta(lateDate,earlyDate)yr = gap.yearsmth = gap.monthsreturn yr*12+mthelse:return 0

selectData['earliest_cr_to_app'] = selectData.apply(lambda x: MonthGap(x.earliest_cr_line_clean,x.app_date_clean), axis = 1)2.3 分箱

采用卡方分箱,要求分完后:

- 不超过5箱

- Bad Rate单调

- 每箱同时包含好坏样本

- 特殊值如-1,单独成一箱

首先我们把变量分为类别型和数值型:

#数值型特征

num_features = ['int_rate_clean','emp_length_clean','annual_inc', 'dti', 'delinq_2yrs', 'earliest_cr_to_app','inq_last_6mths', \'mths_since_last_record_clean', 'mths_since_last_delinq_clean','open_acc','pub_rec','total_acc','amt_ratio']

#类别型特征

cat_features = ['home_ownership', 'verification_status','desc_clean', 'purpose', 'zip_code','addr_state','pub_rec_bankruptcies_clean']对于类别型变量:

1. 当取值较多时(>5),先用bad rate编码,再用连续型分箱的方式进行分箱

2. 当取值较少时:

(1) 如果每种类别同时包含好坏样本,无需分箱

(2) 如果有类别只包含好坏样本的一种,需要合并

more_value_features = []

less_value_features = []

# 第一步,检查类别型变量中,哪些变量取值超过5

for var in cat_features:valueCounts = len(set(trainData[var]))print ("{}:{}".format(var,valueCounts))if valueCounts > 5:more_value_features.append(var) #取值超过5的变量,需要bad rate编码,再用卡方分箱法进行分箱else:less_value_features.append(var)print ("\nunique values for #{} >5, need chiMerge".format(" #".join(more_value_features)))

print ("unique values for #{} <=5".format(" #".join(less_value_features)))'''

home_ownership:5

verification_status:3

desc_clean:2

purpose:14

zip_code:776

addr_state:50

pub_rec_bankruptcies_clean:4unique values for #purpose #zip_code #addr_state >5, need chiMerge

unique values for #home_ownership #verification_status #desc_clean #pub_rec_bankruptcies_clean <=5

'''当取值<5时:如果每种类别同时包含好坏样本,无需分箱;如果有类别只包含好坏样本的一种,需要合并(后续的WOE编码要求每组中包括好坏样本)。

下面定义一个计算样本在某一属性上的坏样本率的函数:

def BinBadRate(df, col, target, grantRateIndicator=0):''':param df: 需要计算好坏比率的数据集:param col: 需要计算好坏比率的特征:param target: 好坏标签:param grantRateIndicator: 1返回总体的坏样本率,0不返回:return: 每箱的坏样本率,以及总体的坏样本率(当grantRateIndicator==1时)'''total = df.groupby([col])[target].count()total = pd.DataFrame({'total': total})bad = df.groupby([col])[target].sum()bad = pd.DataFrame({'bad': bad})regroup = total.merge(bad, left_index=True, right_index=True, how='left')regroup.reset_index(level=0, inplace=True)regroup['bad_rate'] = regroup.apply(lambda x: x.bad * 1.0 / x.total, axis=1)dicts = dict(zip(regroup[col],regroup['bad_rate']))if grantRateIndicator==0:return (dicts, regroup)N = sum(regroup['total'])B = sum(regroup['bad'])overallRate = B * 1.0 / Nreturn (dicts, regroup, overallRate)对于坏样本率为0或者1的组,需要进行合并:

def MergeBad0(df,col,target,direction='bad'):''':param df: 包含检验0%或者100%坏样本率:param col: 分箱后的变量或者类别型变量。检验其中是否有一组或者多组没有坏样本或者没有好样本。如果是,则需要进行合并:param target: 目标变量,0、1表示好、坏:return: 合并方案,使得每个组里同时包含好坏样本'''regroup=BinBadRate(df,col,target)[1]if direction=='bad':# 如果是合并0坏样本率的组,则跟最小的非0坏样本率的组进行合并regroup=regroup.sort_values(by='bad_rate')else:# 如果是合并0好样本样本率的组,则跟最小的非0好样本率的组进行合并regroup=regroup.sort_values(by='bad_rate',ascending=False)col_values=[[name] for name in regroup[col]] #属性的可能取值regroup.index=range(regroup.shape[0])for i in range(regroup.shape[0]-1):col_values[i+1]=col_values[i]+col_values[i+1]del_index.append(i)if direction=='bad':if regroup['bad_rate'][i+1]>0:breakelse: #direction=='good':if regroup['bad_rate'][i+1]<1:breaknew_col_values=[[name] for i, name in enumerate(col_values) if i not in del_index] #合并之后的取值集合newGroup={}for i in range(len(new_col_values)): for val in new_col_values[i]:newGroup[val]='Bin '+str(i)return newGroup # dict, key为属性的可能取值,value为对应的组# (i)当取值<5时:如果每种类别同时包含好坏样本,无需分箱;如果有类别只包含好坏样本的一种,需要合并

merge_bin_dict = {} #存放需要合并的变量,以及合并方法

var_bin_list = [] #由于某个取值没有好或者坏样本而需要合并的变量

for col in less_value_features:binBadRate = BinBadRate(trainData, col, 'y')[0]if min(binBadRate.values()) == 0 : #由于某个取值没有坏样本而进行合并print ('{} need to be combined due to 0 bad rate'.format(col))combine_bin = MergeBad0(trainData, col, 'y')merge_bin_dict[col] = combine_binnewVar = col + '_Bin'trainData[newVar] = trainData[col].map(combine_bin)var_bin_list.append(newVar)if max(binBadRate.values()) == 1: #由于某个取值没有好样本而进行合并print ('{} need to be combined due to 0 good rate'.format(col))combine_bin = MergeBad0(trainData, col, 'y',direction = 'good')merge_bin_dict[col] = combine_binnewVar = col + '_Bin'trainData[newVar] = trainData[col].map(combine_bin)var_bin_list.append(newVar)

# merge_bin_dict={'home_ownership': {'NONE': 'Bin 0', 'MORTGAGE': 'Bin 0', 'OWN': 'Bin 1', 'RENT': 'Bin 2', 'OTHER': 'Bin 3'}}

# var_bin_list=['home_ownership_bin']#less_value_features里剩下不需要合并的变量

less_value_features = [i for i in less_value_features if i + '_Bin' not in var_bin_list]

#less_value_features=['verification_status', 'desc_clean', 'pub_rec_bankruptcies_clean']当类别型变量的取值大于5时,按bad rate进行编码

def BadRateEncoding(df, col, target):''':param df: :param col: 需要按照bad rate进行编码的特征,通常为类别型特征:param target: 类标:return: 属性的取值对应的bad rate'''regroup = BinBadRate(df, col, target, grantRateIndicator=0)[1]br_dict=regroup[[col,'bad_rate']].set_index([col]).to_dict()['bad_rate']badRateEnconding = df[col].map(lambda x: br_dict[x])return {'encoding':badRateEnconding, 'bad_rate':br_dict}# (ii)当取值>5时:用bad rate进行编码,放入连续型变量里

br_encoding_dict = {} #记录按照bad rate进行编码的变量,及编码方式

for col in more_value_features:br_encoding = BadRateEncoding(trainData, col, 'y')trainData[col+'_br_encoding'] = br_encoding['encoding']br_encoding_dict[col] = br_encoding['bad_rate']num_features.append(col+'_br_encoding')#br_encoding_dict:

# {'purpose': {'car': 0.08866995073891626, 'credit_card': 0.07595450852965069, 'debt_consolidation': 0.11088348271446863, 'educational': 0.16062176165803108, 'home_improvement': 0.08807045636509207, 'house': 0.10526315789473684, 'major_purchase': 0.08317399617590822, 'medical': 0.14426229508196722, 'moving': 0.14935064935064934, 'other': 0.13350923482849605, 'renewable_energy': 0.16279069767441862, 'small_business': 0.19866666666666666, 'vacation': 0.12560386473429952, 'wedding': 0.0794392523364486}, 'zip_code':{}}参考资料

评分卡系列(二):特征工程

风险狗的数据分析之路