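Original paper: [1810.00826] How Powerful are Graph Neural Networks? (arxiv.org)

The English here is typed entirely by hand! It is a summary and paraphrase of the original paper. Unavoidable spelling and grammar mistakes may appear; if you spot any, corrections in the comments are welcome! This post leans toward personal notes, so read with caution!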

1. TL;DR

1.1. Takeaways

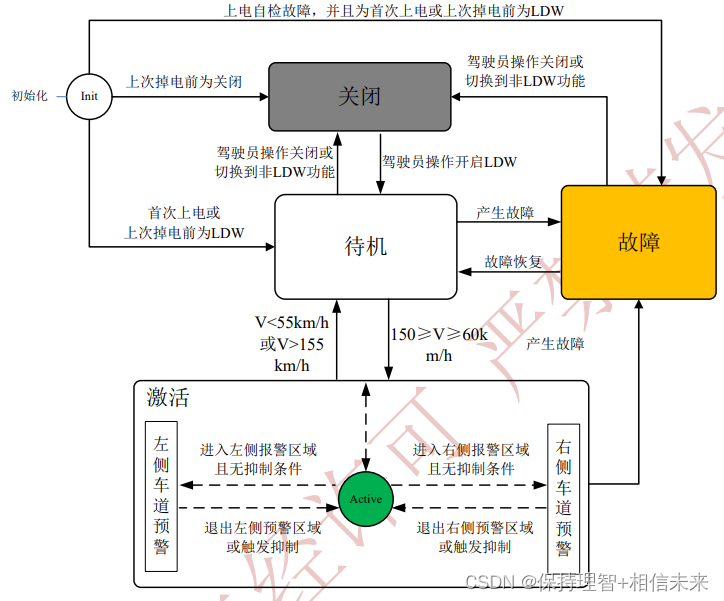

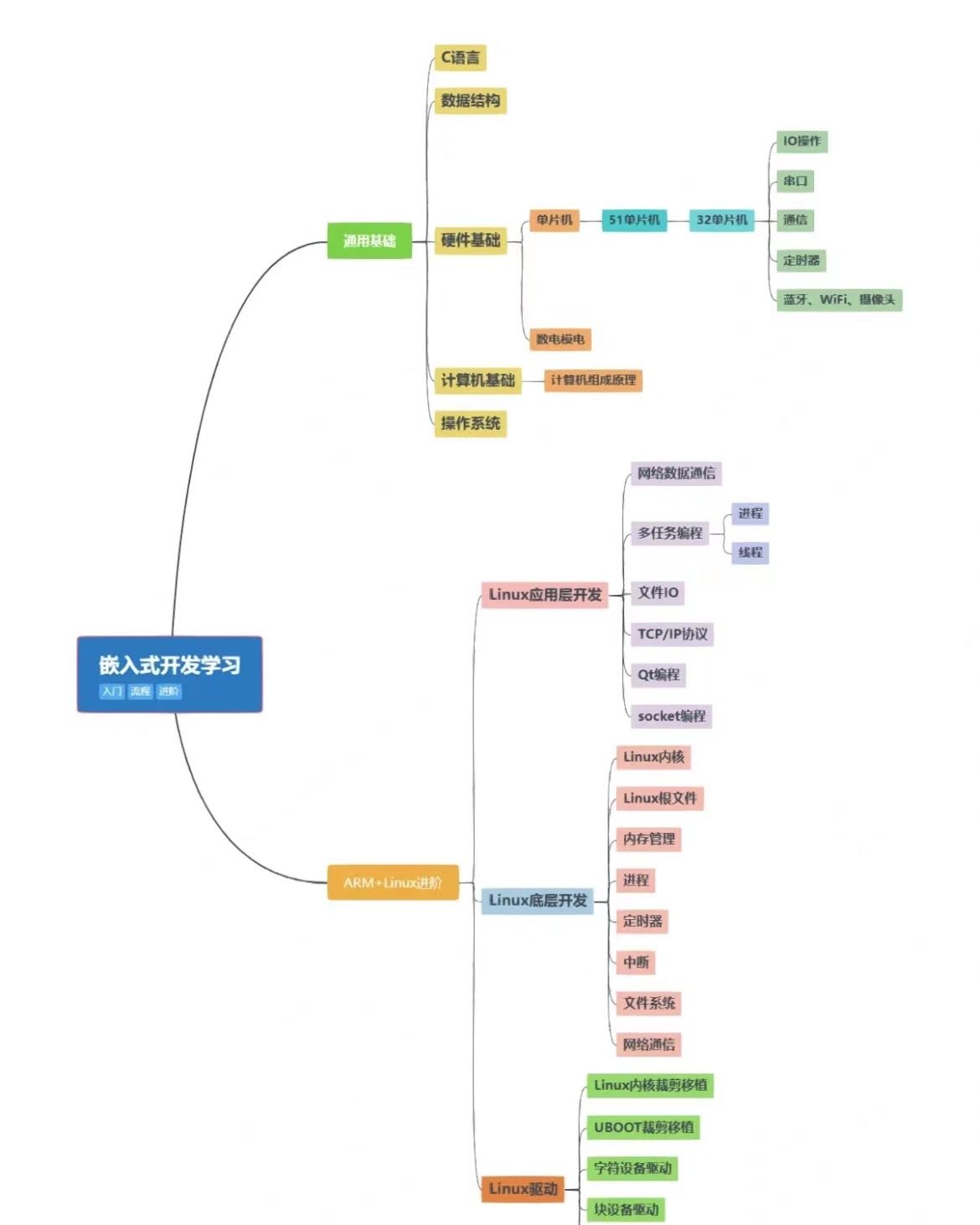

1.2. Paper framework diagram

2. Close reading of the paper, section by section

2.1. Abstract

①Although the emergence of Graph Neural Networks (GNNs) has changed graph representation learning to a large extent, GNNs and their variants are all limited in representational power.

2.2. Introduction

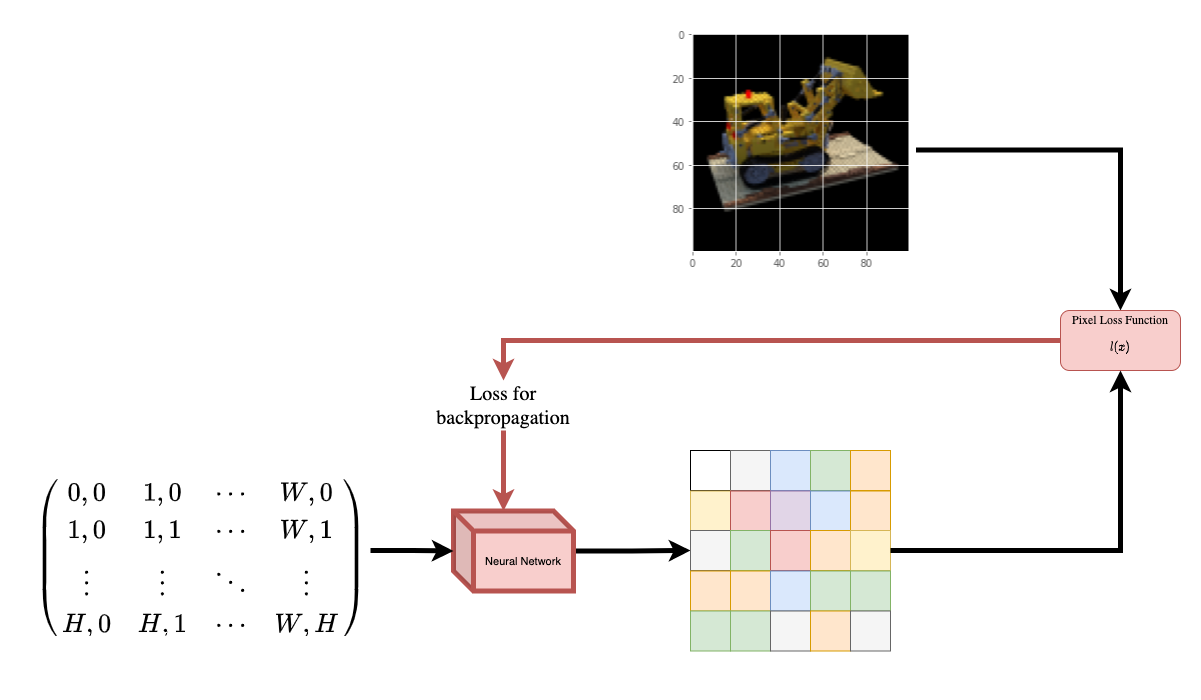

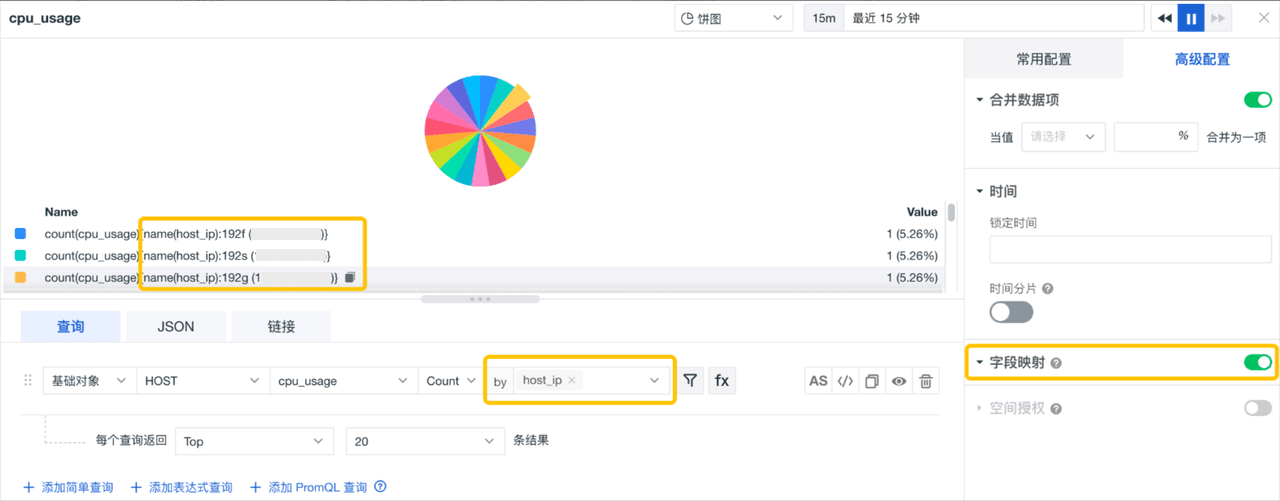

①The authors briefly introduce how a GNN works (aggregating node information from k-hop neighbors, then pooling)

②The authors hold the view that ⭐ other graph models are mostly designed through empirical intuition, heuristics, and experimental trial and error rather than theoretical understanding

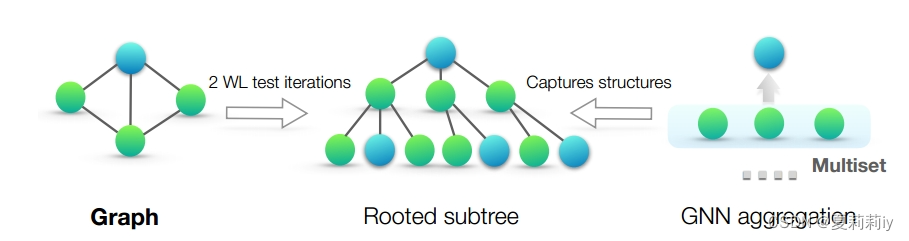

③They combine GNNs and the Weisfeiler-Lehman (WL) graph isomorphism test to build a new framework, which relies on multisets

④GIN (Graph Isomorphism Network) is excellent at distinguishing, capturing, and representing graph structures
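To make points ① and ③ more concrete, here is a minimal sketch of neighborhood aggregation followed by a graph-level readout. It is only an illustration and not the paper's implementation: the function names (`aggregate`, `message_passing`, `readout`), the scalar node features, and the two star graphs are all assumptions made up for this note, and the update is a plain "own feature + aggregated neighbors" rule with no learned weights. It shows why treating the neighbors as a multiset matters: summing keeps the multiplicity of repeated features, while mean and max throw it away.

```python
def aggregate(values, how):
    """Combine a multiset of neighbor features into a single number."""
    if not values:
        return 0.0
    if how == "sum":
        return sum(values)
    if how == "mean":
        return sum(values) / len(values)
    if how == "max":
        return max(values)
    raise ValueError(f"unknown aggregator: {how}")


def message_passing(adj, feats, k, how="sum"):
    """Run k rounds; after round k a node has seen its k-hop neighborhood."""
    h = dict(feats)
    for _ in range(k):
        # Every node adds the aggregate of its neighbors' previous features.
        h = {v: h[v] + aggregate([h[u] for u in adj[v]], how) for v in adj}
    return h


def readout(h):
    """Pool all node features into one graph-level value (sum pooling)."""
    return sum(h.values())


# Hypothetical toy graphs: a star with 2 leaves and a star with 3 leaves.
star2 = {"c": ["a", "b"], "a": ["c"], "b": ["c"]}
star3 = {"c": ["a", "b", "d"], "a": ["c"], "b": ["c"], "d": ["c"]}
ones2 = {v: 1.0 for v in star2}
ones3 = {v: 1.0 for v in star3}

for how in ("sum", "mean", "max"):
    c2 = message_passing(star2, ones2, k=1, how=how)["c"]
    c3 = message_passing(star3, ones3, k=1, how=how)["c"]
    print(how, c2, c3)
# sum 3.0 4.0
# mean 2.0 2.0
# max 2.0 2.0
```

With identical node features, only the sum aggregator gives the two center nodes different values; mean and max cannot tell a neighborhood {1, 1} from {1, 1, 1}.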

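heuristics n. [U] (formal) exploratory methods of problem solving; heuristic methods

heuristic adj. (of teaching or education) heuristic, encouraging learners to discover things for themselves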
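The Weisfeiler-Lehman (WL) test mentioned in point ③ of this section can be sketched as iterative color refinement. The code below is a rough sketch under my own assumptions, not code from the paper: the function name `wl_possibly_isomorphic`, the adjacency-dict graph format, the uniform initial color, and the default of 3 rounds are all made up for illustration. In each round a node's new color is determined by its own color together with the sorted multiset of its neighbors' colors, and two graphs are declared different as soon as their color histograms differ.

```python
from collections import Counter


def wl_possibly_isomorphic(adj_a, adj_b, rounds=3):
    """1-WL color refinement run on two graphs side by side.

    Returns False if the graphs are certainly non-isomorphic, and True if
    the test cannot tell them apart (they may or may not be isomorphic).
    """
    table = {}  # signature -> compact color id, shared by both graphs

    def refine(adj, colors):
        new = {}
        for v in adj:
            # Signature: own color plus the multiset of neighbor colors.
            sig = (colors[v], tuple(sorted(colors[u] for u in adj[v])))
            new[v] = table.setdefault(sig, len(table))
        return new

    colors_a = {v: 0 for v in adj_a}  # every node starts with the same color
    colors_b = {v: 0 for v in adj_b}
    for _ in range(rounds):
        colors_a = refine(adj_a, colors_a)
        colors_b = refine(adj_b, colors_b)
        if Counter(colors_a.values()) != Counter(colors_b.values()):
            return False
    return True


# Hypothetical toy graphs as adjacency dicts.
triangle = {0: [1, 2], 1: [0, 2], 2: [0, 1]}
path3 = {0: [1], 1: [0, 2], 2: [1]}
hexagon = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
two_triangles = {0: [1, 2], 1: [0, 2], 2: [0, 1],
                 3: [4, 5], 4: [3, 5], 5: [3, 4]}

print(wl_possibly_isomorphic(triangle, path3))         # False
print(wl_possibly_isomorphic(hexagon, two_triangles))  # True
```

The second pair is a known blind spot: a 6-cycle and two disjoint triangles are not isomorphic, but every node has degree 2, so the refinement never separates them. This is why passing the WL test only means "possibly isomorphic".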

2.3. Preliminaries

2.4. Theoretical framework: overview

2.5. Building powerful graph neural networks

2.5.1. Graph isomorphism network (GIN)

2.5.2. Graph-level readout of GIN

2.6. Less powerful but still interesting GNNs

2.6.1. 1-layer perceptrons are not sufficient

2.6.2. Structures that confuse mean and max-pooling

2.6.3. Mean learns distributions

2.6.4. Max-pooling learns sets with distinct elements

2.6.5. Remarks on other aggregators

2.7. Other related work

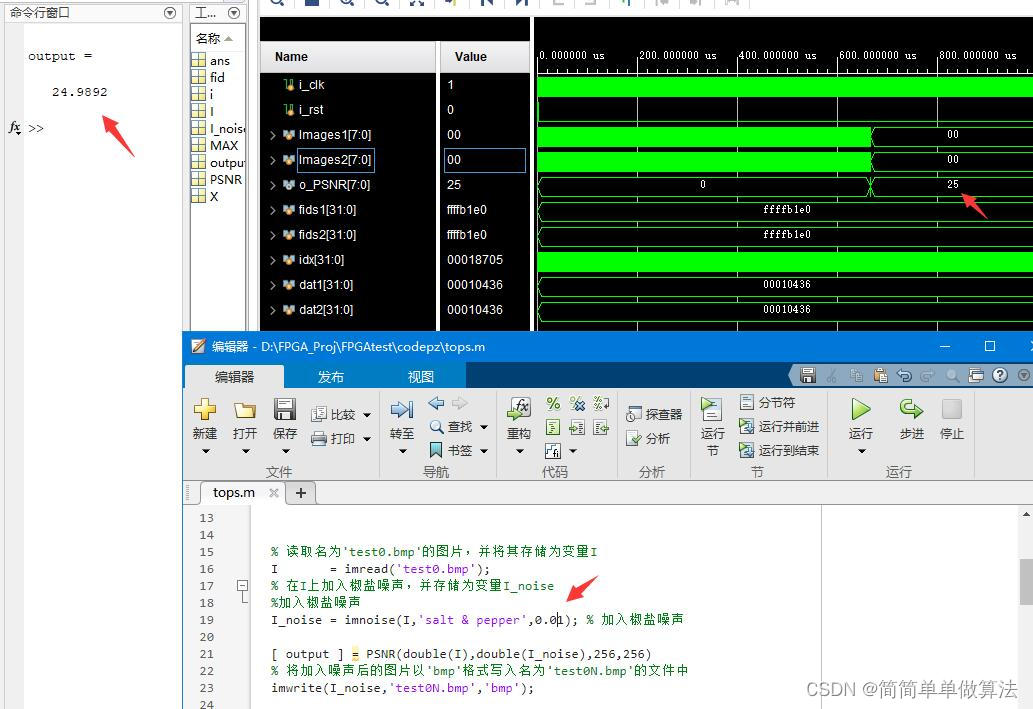

2.8. Experiments

2.8.1. Results

2.9. Conclusion

3. Supplementary knowledge

4. Reference List

Xu, K. et al. (2019) 'How Powerful are Graph Neural Networks?', ICLR 2019. doi: https://doi.org/10.48550/arXiv.1810.00826